
step -1. Data download

```bash
# data
datadir=./downloads

if [ ${stage} -le -1 ] && [ ${stop_stage} -ge -1 ]; then
    echo "stage -1: Data Download"
    mkdir -p ${datadir}
    local/download_and_untar.sh ${datadir} ${data_url}
fi
```

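The an4 dataset is downloaded by running the local/download_and_untar.sh script. You can also download it from the official website and extract it yourself. Either way, the dataset must end up in the downloads folder (`${datadir}`), because stage 0 looks for it under `${datadir}/an4`.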
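Every stage in run.sh is wrapped in the same condition, so a block numbered N runs only when `stage <= N <= stop_stage`. The loop below is a minimal sketch of that rule. The `stage` and `stop_stage` values are illustrative assumptions. In the recipe they are normally passed on the command line (e.g. `./run.sh --stage 1 --stop_stage 2`) and parsed by utils/parse_options.sh.

```shell
#!/usr/bin/env bash
# Minimal sketch of the stage/stop_stage gating used by every block in run.sh.
# The two values below are illustrative, not the recipe defaults.
stage=1
stop_stage=2
ran=""
for n in -1 0 1 2 3 4 5; do
    # A block numbered n runs only when stage <= n <= stop_stage.
    if [ "${stage}" -le "${n}" ] && [ "${stop_stage}" -ge "${n}" ]; then
        ran="${ran} ${n}"
    fi
done
echo "stages run:${ran}"   # prints "stages run: 1 2"
```

This makes it easy to resume a recipe partway through, for example rerunning only decoding with `stage=5`.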

step 0~2. Kaldi-format data preparation

step 0. Data preparation

```bash
# general configuration
dumpdir=dump # directory to dump full features

# feature configuration
do_delta=false

# data
datadir=./downloads
an4_root=${datadir}/an4
train_set="train_nodev"
train_dev="train_dev"

if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then
    ### Task dependent. You have to make data the following preparation part by yourself.
    ### But you can utilize Kaldi recipes in most cases
    echo "stage 0: Data preparation"
    mkdir -p data/{train,test} exp   # create data/train, data/test and exp (including parents)

    if [ ! -f ${an4_root}/README ]; then   # the an4 dataset cannot be found
        echo Cannot find an4 root! Exiting...
        exit 1
    fi

    # run local/data_prep.py, which writes wav.scp, text and utt2spk for train and test
    python3 local/data_prep.py ${an4_root} sph2pipe

    # sort each file, then derive spk2utt from utt2spk
    for x in test train; do
        for f in text wav.scp utt2spk; do
            sort data/${x}/${f} -o data/${x}/${f}
        done
        utils/utt2spk_to_spk2utt.pl data/${x}/utt2spk > data/${x}/spk2utt
    done
fi

# create dump/train_nodev/deltafalse and dump/train_dev/deltafalse (including parents)
feat_tr_dir=${dumpdir}/${train_set}/delta${do_delta}; mkdir -p ${feat_tr_dir}
feat_dt_dir=${dumpdir}/${train_dev}/delta${do_delta}; mkdir -p ${feat_dt_dir}
```

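Stage 0 generates the four most important files: wav.scp, text, utt2spk and spk2utt.

wav.scp maps each utterance ID to the location of that audio on the system. With sph2pipe, as here, this is a command that outputs the audio.

text maps each utterance ID to its transcription.

utt2spk maps each utterance ID to its speaker ID.

spk2utt maps each speaker ID to all of that speaker's utterance IDs.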
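To make the relationship between utt2spk and spk2utt concrete, here is a toy data directory. The IDs are hypothetical, and the awk command is only a rough stand-in for utils/utt2spk_to_spk2utt.pl, not the Kaldi script itself. It groups utterances by speaker in order of first appearance. The IDs use the Kaldi convention of prefixing each utterance ID with its speaker ID, so both files sort consistently.

```shell
#!/usr/bin/env bash
# Toy illustration of utt2spk -> spk2utt (hypothetical IDs).
set -euo pipefail
d=$(mktemp -d)
cat > "${d}/utt2spk" <<'EOF'
spkB-utt2 spkB
spkA-utt1 spkA
spkA-utt2 spkA
EOF
# Kaldi expects these files sorted in C-locale order.
LC_ALL=C sort "${d}/utt2spk" -o "${d}/utt2spk"
# Rough awk stand-in for utils/utt2spk_to_spk2utt.pl: one line per speaker,
# listing that speaker's utterances.
awk '{ if ($2 in u) u[$2] = u[$2] " " $1; else { u[$2] = $1; order[++n] = $2 } }
     END { for (i = 1; i <= n; i++) print order[i], u[order[i]] }' \
    "${d}/utt2spk" > "${d}/spk2utt"
cat "${d}/spk2utt"
# spkA spkA-utt1 spkA-utt2
# spkB spkB-utt2
```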

step 1. Feature extraction

```bash
if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then
    ### Task dependent. You have to design training and dev sets by yourself.
    ### But you can utilize Kaldi recipes in most cases
    echo "stage 1: Feature Generation"
    fbankdir=fbank
    # Generate the fbank features; by default 80-dimensional fbanks with pitch on each frame
    for x in test train; do
        steps/make_fbank_pitch.sh --cmd "$train_cmd" --nj 8 --write_utt2num_frames true \
            data/${x} exp/make_fbank/${x} ${fbankdir}
        utils/fix_data_dir.sh data/${x}   # sort the files and drop utterances missing from any of them
    done

    # make a dev set
    utils/subset_data_dir.sh --first data/train 100 data/${train_dev}
    n=$(($(wc -l < data/train/text) - 100))
    utils/subset_data_dir.sh --last data/train ${n} data/${train_set}

    # compute global CMVN (cepstral mean and variance normalization) statistics
    compute-cmvn-stats scp:data/${train_set}/feats.scp data/${train_set}/cmvn.ark

    # dump features, normalized with the training-set CMVN statistics
    dump.sh --cmd "$train_cmd" --nj 8 --do_delta ${do_delta} \
        data/${train_set}/feats.scp data/${train_set}/cmvn.ark exp/dump_feats/train ${feat_tr_dir}
    dump.sh --cmd "$train_cmd" --nj 8 --do_delta ${do_delta} \
        data/${train_dev}/feats.scp data/${train_set}/cmvn.ark exp/dump_feats/dev ${feat_dt_dir}
    for rtask in ${recog_set}; do
        feat_recog_dir=${dumpdir}/${rtask}/delta${do_delta}; mkdir -p ${feat_recog_dir}
        dump.sh --cmd "$train_cmd" --nj 8 --do_delta ${do_delta} \
            data/${rtask}/feats.scp data/${train_set}/cmvn.ark exp/dump_feats/recog/${rtask} \
            ${feat_recog_dir}
    done
fi

dict=data/lang_1char/${train_set}_units.txt
echo "dictionary: ${dict}"
```

The make_fbank_pitch.sh script extracts 80-dimensional fbank features plus pitch features for every frame.
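Global CMVN statistics are computed only on the training set (`data/${train_set}/cmvn.ark`), and the same file is reused to normalize the dev and recognition sets. The toy sketch below uses made-up 2-dimensional frames instead of real Kaldi archives. It accumulates per-dimension sums and sums of squares over the training frames, converts them to a mean and standard deviation, and normalizes the dev frames as `(x - mean) / std`. The variance normalization is an assumption about how the statistics are applied; it is not read from dump.sh.

```shell
#!/usr/bin/env bash
# Toy global CMVN with made-up feature values.
# Statistics come from "train" frames only and are applied to "dev" frames.
set -euo pipefail
train=$'1 10\n2 20\n3 30'   # 3 frames x 2 dims
dev=$'2 20\n4 40'           # 2 frames x 2 dims
# Per-dimension sums, sums of squares and frame count -> mean and std.
stats=$(printf '%s\n' "${train}" | awk '
    { d = NF; n++; for (i = 1; i <= NF; i++) { s[i] += $i; q[i] += $i * $i } }
    END { for (i = 1; i <= d; i++) { m = s[i] / n; printf "%.6f %.6f ", m, sqrt(q[i] / n - m * m) }; print "" }')
echo "mean std per dim: ${stats}"
# Mean and variance normalization of the dev frames: (x - mean) / std.
normed=$(printf '%s\n' "${dev}" | awk -v st="${stats}" '
    BEGIN { split(st, a, " ") }
    { for (i = 1; i <= NF; i++) printf "%s%.3f", (i > 1 ? " " : ""), ($i - a[2*i-1]) / a[2*i]; printf "\n" }')
echo "${normed}"
# 0.000 0.000
# 2.449 2.449
```

Reusing the training statistics keeps dev and test features on the same scale the network saw during training.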

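The subset_data_dir.sh script selects a subset of a data directory. Here, `--first` takes the first 100 utterances of data/train as the dev set (train_dev), and `--last` takes the remaining n utterances as the training set (train_nodev). Kaldi recipes also commonly use it to build small subsets for training initial models before scaling up step by step.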
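The split arithmetic can be checked with plain `head`/`tail` on a made-up, sorted `text` file of 130 utterances. This is only a sketch of the idea. The real subset_data_dir.sh subsets every file in the data directory (utt2spk, feats.scp, text, ...) consistently, not just one file.

```shell
#!/usr/bin/env bash
# Sketch of the dev/train split from stage 1 on a made-up "text" file.
set -euo pipefail
d=$(mktemp -d)
for i in $(seq -w 1 130); do echo "utt${i} HELLO"; done > "${d}/text"
# Same arithmetic as the recipe: all utterances except the first 100.
n=$(($(wc -l < "${d}/text") - 100))
head -n 100 "${d}/text" > "${d}/train_dev_text"        # like --first data/train 100
tail -n "${n}" "${d}/text" > "${d}/train_nodev_text"   # like --last data/train ${n}
echo "n=${n}; train starts at: $(head -n 1 "${d}/train_nodev_text")"
```

Because the two subsets are taken from opposite ends of the same sorted list, they never overlap and together cover the whole training data.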

step 2. Dictionary and JSON data preparation

```bash
if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then
    ### Task dependent. You have to check non-linguistic symbols used in the corpus.
    echo "stage 2: Dictionary and Json Data Preparation"
    mkdir -p data/lang_1char/   # create the dictionary directory
    echo "<unk> 1" > ${dict} # <unk> must be 1, 0 will be used for "blank" in CTC
    # ESPnet uses text2token.py to split the transcripts in data/${train_set}/text
    # into characters; the unique characters are then numbered from 2
    text2token.py -s 1 -n 1 data/${train_set}/text | cut -f 2- -d" " | tr " " "\n" \
        | sort | uniq | grep -v -e '^\s*$' | awk '{print $0 " " NR+1}' >> ${dict}
    wc -l ${dict}

    # make json labels: pack features and token IDs into data.json with data2json.sh
    data2json.sh --feat ${feat_tr_dir}/feats.scp \
        data/${train_set} ${dict} > ${feat_tr_dir}/data.json
    data2json.sh --feat ${feat_dt_dir}/feats.scp \
        data/${train_dev} ${dict} > ${feat_dt_dir}/data.json
    for rtask in ${recog_set}; do
        feat_recog_dir=${dumpdir}/${rtask}/delta${do_delta}
        data2json.sh --feat ${feat_recog_dir}/feats.scp \
            data/${rtask} ${dict} > ${feat_recog_dir}/data.json
    done
fi

# It takes about one day. If you just want to do end-to-end ASR without LM,
# you can skip this and remove --rnnlm option in the recognition (stage 5)
if [ -z ${lmtag} ]; then
    lmtag=$(basename ${lm_config%.*})
    if [ ${use_wordlm} = true ]; then
        lmtag=${lmtag}_word${lm_vocabsize}
    fi
fi
lmexpname=train_rnnlm_${backend}_${lmtag}
lmexpdir=exp/${lmexpname}   # LM experiment directory (logs and models)
mkdir -p ${lmexpdir}
```

The generated dictionary and the packed JSON files are shown below.
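The stage 2 dictionary pipeline can be tried without ESPnet on two made-up transcripts. The awk step is a rough stand-in for `text2token.py -s 1 -n 1` and is not the real script. It keeps the utterance ID, splits the rest into single characters, and writes word boundaries as `<space>`. The real script also supports options such as a non-linguistic symbol list. The rest of the pipeline is copied from the recipe. Index 1 is `<unk>`, and the remaining units are numbered from 2 because 0 is reserved for the CTC blank.

```shell
#!/usr/bin/env bash
# Rough stand-in for stage 2's character dictionary pipeline.
set -euo pipefail
d=$(mktemp -d)
printf '%s\n' "utt1 AB C" "utt2 CAB" > "${d}/text"   # made-up transcripts
dict="${d}/units.txt"
echo "<unk> 1" > "${dict}"
# Imitation of text2token.py -s 1 -n 1: keep column 1, split the rest into characters.
awk '{ out = $1; rest = substr($0, length($1) + 2)
       for (i = 1; i <= length(rest); i++) {
           c = substr(rest, i, 1); if (c == " ") c = "<space>"
           out = out " " c
       }
       print out }' "${d}/text" \
    | cut -f 2- -d" " | tr " " "\n" \
    | LC_ALL=C sort | uniq | grep -v -e '^\s*$' | awk '{print $0 " " NR+1}' >> "${dict}"
cat "${dict}"
```

With these two transcripts, the dictionary comes out as `<unk> 1`, `<space> 2`, `A 3`, `B 4`, `C 5`.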

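Directory layout

conf/: configuration files, e.g. for speech feature extraction

data/: almost all of the raw data directories, prepared in Kaldi format

exp/: experiment outputs, such as log files and trained model parameters

fbank/: binary speech feature files, such as ark and scp

dump/: metadata for ESPnet training, such as json and hdf5

local/: corpus-specific data preparation scripts

steps/, utils/: Kaldi helper scripts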

step 3~4. Neural network training

step 3. Language model training

```bash
if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then
    echo "stage 3: LM Preparation"
    # Two ways to prepare the LM training text: word-level or character-level
    if [ ${use_wordlm} = true ]; then
        lmdatadir=data/local/wordlm_train
        lmdict=${lmdatadir}/wordlist_${lm_vocabsize}.txt
        mkdir -p ${lmdatadir}
        cut -f 2- -d" " data/${train_set}/text > ${lmdatadir}/train.txt
        cut -f 2- -d" " data/${train_dev}/text > ${lmdatadir}/valid.txt
        cut -f 2- -d" " data/${lm_test}/text > ${lmdatadir}/test.txt
        text2vocabulary.py -s ${lm_vocabsize} -o ${lmdict} ${lmdatadir}/train.txt
    else
        lmdatadir=data/local/lm_train
        lmdict=${dict}
        mkdir -p ${lmdatadir}
        text2token.py -s 1 -n 1 data/${train_set}/text \
            | cut -f 2- -d" " > ${lmdatadir}/train.txt
        text2token.py -s 1 -n 1 data/${train_dev}/text \
            | cut -f 2- -d" " > ${lmdatadir}/valid.txt
        text2token.py -s 1 -n 1 data/${lm_test}/text \
            | cut -f 2- -d" " > ${lmdatadir}/test.txt
    fi

    # train the language model with lm_train.py
    ${cuda_cmd} --gpu ${ngpu} ${lmexpdir}/train.log \
        lm_train.py \
        --config ${lm_config} \
        --ngpu ${ngpu} \
        --backend ${backend} \
        --verbose 1 \
        --outdir ${lmexpdir} \
        --tensorboard-dir tensorboard/${lmexpname} \
        --train-label ${lmdatadir}/train.txt \
        --valid-label ${lmdatadir}/valid.txt \
        --test-label ${lmdatadir}/test.txt \
        --resume ${lm_resume} \
        --dict ${lmdict}
fi

if [ -z ${tag} ]; then
    expname=${train_set}_${backend}_$(basename ${train_config%.*})
    if ${do_delta}; then
        expname=${expname}_delta
    fi
else
    expname=${train_set}_${backend}_${tag}
fi
expdir=exp/${expname}
mkdir -p ${expdir}
```

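Stage 3 trains the language model with the lm_train.py script. With use_wordlm=true, the LM is word-level and uses a vocabulary of lm_vocabsize words built by text2vocabulary.py. Otherwise it is character-level and reuses the ASR dictionary `${dict}`.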
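The experiment directory names (`lmexpname`, `expname`) are derived from the config file paths with shell parameter expansion. The sketch below reproduces that logic with hypothetical values, which are not the recipe defaults. `${var%.*}` strips the shortest trailing `.suffix`, and `basename` then strips the directory. The sketch quotes `"${lmtag}"` and `"${tag}"`. The recipe's unquoted `[ -z ${lmtag} ]` only works because `[ -z ]` with an empty variable happens to evaluate as true.

```shell
#!/usr/bin/env bash
# Sketch of how run.sh builds experiment names (hypothetical values).
set -euo pipefail
lm_config=conf/lm.yaml
train_config=conf/train.yaml
backend=pytorch
train_set=train_nodev
use_wordlm=false
lm_vocabsize=100
do_delta=false
lmtag=""
tag=""

if [ -z "${lmtag}" ]; then
    # conf/lm.yaml -> conf/lm -> lm
    lmtag=$(basename "${lm_config%.*}")
    if [ "${use_wordlm}" = true ]; then
        lmtag=${lmtag}_word${lm_vocabsize}
    fi
fi
lmexpname=train_rnnlm_${backend}_${lmtag}

if [ -z "${tag}" ]; then
    expname=${train_set}_${backend}_$(basename "${train_config%.*}")
    if ${do_delta}; then   # runs the command "true" or "false"
        expname=${expname}_delta
    fi
else
    expname=${train_set}_${backend}_${tag}
fi
echo "${lmexpname}"   # train_rnnlm_pytorch_lm
echo "${expname}"     # train_nodev_pytorch_train
```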

step 4. Acoustic model (end-to-end network) training

```bash
if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then
    echo "stage 4: Network Training"
    ${cuda_cmd} --gpu ${ngpu} ${expdir}/train.log \
        asr_train.py \
        --config ${train_config} \
        --ngpu ${ngpu} \
        --backend ${backend} \
        --outdir ${expdir}/results \
        --tensorboard-dir tensorboard/${expname} \
        --debugmode ${debugmode} \
        --dict ${dict} \
        --debugdir ${expdir} \
        --minibatches ${N} \
        --verbose ${verbose} \
        --resume ${resume} \
        --train-json ${feat_tr_dir}/data.json \
        --valid-json ${feat_dt_dir}/data.json
fi
```

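Stage 4 trains the acoustic model with the asr_train.py script. It reads the data.json files built in stage 2 for the training and dev sets.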

step 5. Decoding

```bash
if [ ${stage} -le 5 ] && [ ${stop_stage} -ge 5 ]; then
    echo "stage 5: Decoding"
    nj=8

    pids=() # initialize pids
    for rtask in ${recog_set}; do
    (
        decode_dir=decode_${rtask}_$(basename ${decode_config%.*})_${lmtag}
        if [ ${use_wordlm} = true ]; then
            recog_opts="--word-rnnlm ${lmexpdir}/rnnlm.model.best"
        else
            recog_opts="--rnnlm ${lmexpdir}/rnnlm.model.best"
        fi
        feat_recog_dir=${dumpdir}/${rtask}/delta${do_delta}

        # split data
        splitjson.py --parts ${nj} ${feat_recog_dir}/data.json

        #### use CPU for decoding
        ngpu=0

        ${decode_cmd} JOB=1:${nj} ${expdir}/${decode_dir}/log/decode.JOB.log \
            asr_recog.py \
            --config ${decode_config} \
            --ngpu ${ngpu} \
            --backend ${backend} \
            --debugmode ${debugmode} \
            --verbose ${verbose} \
            --recog-json ${feat_recog_dir}/split${nj}utt/data.JOB.json \
            --result-label ${expdir}/${decode_dir}/data.JOB.json \
            --model ${expdir}/results/${recog_model} \
            ${recog_opts}

        score_sclite.sh ${expdir}/${decode_dir} ${dict}
    ) &
    pids+=($!) # store background pids
    done
    i=0; for pid in "${pids[@]}"; do wait ${pid} || ((++i)); done
    [ ${i} -gt 0 ] && echo "$0: ${i} background jobs are failed." && false
fi
```
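Stage 5 decodes each recognition set in its own background subshell. It records every PID and then waits on all of them, counting failures. The sketch below isolates that pattern. The task names and the failure of `test_b` are made up, and the subshell body is a placeholder for the splitjson.py / asr_recog.py / score_sclite.sh steps.

```shell
#!/usr/bin/env bash
# Sketch of stage 5's parallel pattern with made-up task names.
recog_set="test_a test_b test_c"
pids=()   # initialize pids
for rtask in ${recog_set}; do
    (
        # placeholder for decoding and scoring; pretend test_b fails
        [ "${rtask}" != "test_b" ]
    ) &
    pids+=($!)   # $! is the PID of the job just put in the background
done
i=0
for pid in "${pids[@]}"; do wait "${pid}" || ((++i)); done
echo "${i} background job(s) failed"   # prints "1 background job(s) failed"
```

Waiting on each PID individually (rather than a bare `wait`) is what lets the script see each job's exit status and report how many failed.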

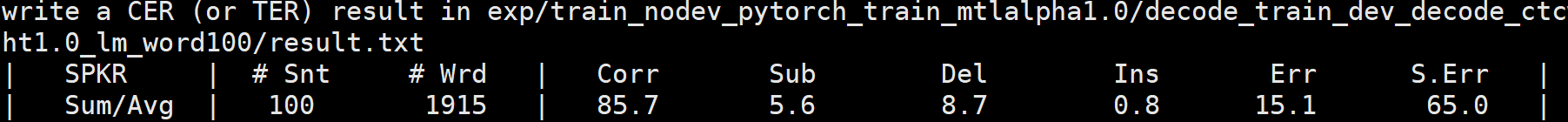

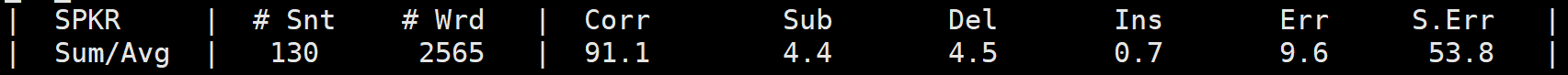

Final results:

