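Redis: single-threaded command processing and I/O threads

Redis command processing is single-threaded

Redis as a whole is not single-threaded. When people say Redis is single-threaded, they mean that command processing (the logic that reads and modifies data) runs on a single thread: no matter how many connections operate on Redis data, every command is executed by the same thread.

redis-server is the main thread. The "single thread" in "single-threaded Redis" refers mainly to this redis-server thread, which processes the commands.

A single thread cannot afford time-consuming operations (blocking I/O, or CPU-heavy computation that takes a long time). In Redis, any such delay directly hurts response latency.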

Time-consuming operations in Redis

So which operations in Redis are time-consuming, and how does Redis deal with them?

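(1) I/O-bound: disk I/O. Redis is a database that supports persistence, so it has to write to disk, and that takes time. For persistence (RDB snapshots and AOF rewrites) Redis forks a child process and does the work there, so it does not occupy the main process or interfere with command processing. In addition, with the AOF appendfsync everysec policy, flushing to disk (fsync) is done asynchronously in the bio_aof_fsync thread, which also keeps it off the redis-server main thread.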
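Why a forked child can persist data without blocking the main process, and without seeing writes made after the fork, can be shown with a small POSIX sketch. It is not Redis code: the array dataset is a stand-in for the keyspace, and instead of writing an RDB file the child just sums the data and returns the result through its exit status. Because fork() gives the child a copy-on-write view of memory as of the fork, the parent's later writes do not affect what the child sees.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Stand-in for the keyspace owned by the server process. */
static int dataset[4] = {1, 2, 3, 4};

/* Fork a child that "persists" (here: sums) the dataset while the parent
 * keeps mutating it. Returns the sum the child saw (via its exit status),
 * or -1 on error. */
int snapshot_sum_after_fork(void) {
    pid_t pid = fork();
    if (pid < 0) return -1;
    if (pid == 0) {
        /* Child: sees memory as it was at fork() time (copy-on-write). */
        int sum = 0;
        for (int i = 0; i < 4; i++) sum += dataset[i];
        _exit(sum & 0xff);            /* an exit status carries only 8 bits */
    }
    /* Parent: keeps "serving commands" that modify the data. */
    for (int i = 0; i < 4; i++) dataset[i] = 100;
    int status = 0;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status)) return -1;
    return WEXITSTATUS(status);
}
```

Calling snapshot_sum_after_fork() once returns 10 (the contents at fork time), while the parent's own dataset now holds 100 in every slot. Redis relies on the same copy-on-write property; the price is extra memory for the pages the parent modifies while the child is still running.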

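(2) I/O-bound: network I/O. When Redis serves many clients and the requests or replies are large (for example reading the top 100 entries of a leaderboard, or writing log data), network I/O becomes time-consuming. Redis addresses this by enabling I/O threads (the io_thd_* threads) to handle network I/O.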

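(3) CPU-bound. Redis supports rich data structures; if an operation on a data structure has high time complexity (for example O(n)), it can keep the CPU busy for a long time and thus become slow.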
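A toy model makes the cost of one slow command concrete: on a single thread, commands finish strictly one after another, so every command queued behind an expensive one inherits its delay. The function name and the microsecond costs are made up for illustration; they are not measurements.

```c
#include <assert.h>
#include <stddef.h>

/* Toy model of a single-threaded command loop: commands execute strictly
 * in arrival order, so command i finishes at the sum of costs 0..i.
 * cost_us[i] are made-up execution costs in microseconds. */
void completion_times(const long *cost_us, long *done_us, size_t n) {
    long now = 0;
    for (size_t i = 0; i < n; i++) {
        now += cost_us[i];      /* the thread is busy until this one ends */
        done_us[i] = now;
    }
}
```

With costs {1, 5000, 1, 1} the completion times are {1, 5001, 5002, 5003}: the three cheap commands each wait about 5 ms because of the single O(n) command in front of them.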

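Why Redis does not use multiple threads for command processing

- Redis supports rich data types (string, list, hash, set, zset, etc.), and a single object type can be implemented by several underlying data structures, so locking would be complex and lock granularity hard to control. Memcached, by contrast, supports only one data structure, so locking there is simple.

- Frequent context switches would cancel out the advantage of multiple threads. A database sees periods of heavy access alternating with quiet periods; with multiple threads, some threads would have to be put to sleep and others woken up, which means thread scheduling, and thread scheduling causes context switches.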

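Why Redis is fast despite using a single thread

By design

- Redis is an in-memory database: data is stored in memory and can be accessed quickly.

- Redis organizes data in a hash table, so looking up a key takes O(1) time.

- Redis supports rich data structures and switches the underlying data structure depending on the data, balancing execution speed against memory usage.

- Redis uses an efficient reactor network model.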
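The O(1) hash-table lookup depends on the table growing as keys are added, and moving every key into a bigger table in one go would stall the single thread; the rehash item under the optimizations below describes how Redis spreads that work out. The sketch is a simplified, hypothetical dict (integer keys, power-of-two sizes, no deletes, entries never freed), not Redis's dict.c, but it follows the same ideas: two tables, a rehashidx cursor, a cap of n*10 empty-bucket visits per step, lookups that check both tables during a rehash, and a time-boxed loop that rehashes 100 buckets at a time.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdlib.h>
#include <time.h>

/* Toy dict: integer keys, chained buckets, power-of-two table sizes. */
typedef struct entry { long key; struct entry *next; } entry;
typedef struct { entry **buckets; size_t size; size_t used; } table;
typedef struct { table ht[2]; long rehashidx; } dict;  /* -1: not rehashing */

static size_t slot(long key, size_t size) { return (size_t)key & (size - 1); }

static void table_init(table *t, size_t size) {
    t->buckets = calloc(size, sizeof(entry *));
    assert(t->buckets != NULL);
    t->size = size;
    t->used = 0;
}

void dict_init(dict *d, size_t size) {
    table_init(&d->ht[0], size);
    d->ht[1] = (table){NULL, 0, 0};
    d->rehashidx = -1;
}

/* Toy insert: only called before a rehash starts, so it targets ht[0]. */
void dict_add(dict *d, long key) {
    entry *e = malloc(sizeof *e);
    assert(e != NULL);
    size_t h = slot(key, d->ht[0].size);
    e->key = key;
    e->next = d->ht[0].buckets[h];
    d->ht[0].buckets[h] = e;
    d->ht[0].used++;
}

void dict_start_rehash(dict *d, size_t new_size) {
    table_init(&d->ht[1], new_size);
    d->rehashidx = 0;
}

/* While rehashing, a key may live in either table, so check both. */
int dict_find(const dict *d, long key) {
    for (int t = 0; t < 2; t++) {
        const table *tb = &d->ht[t];
        if (tb->size == 0) continue;
        for (entry *e = tb->buckets[slot(key, tb->size)]; e; e = e->next)
            if (e->key == key) return 1;
    }
    return 0;
}

/* Move up to n non-empty buckets from ht[0] to ht[1], giving up after
 * n*10 empty buckets so one call stays bounded. Returns 1 while work
 * remains, 0 once the rehash is complete. */
int dict_rehash(dict *d, int n) {
    size_t empty_visits = (size_t)n * 10;
    if (d->rehashidx < 0) return 0;
    while (n-- && d->ht[0].used != 0) {
        while (d->ht[0].buckets[d->rehashidx] == NULL) {
            d->rehashidx++;
            if (--empty_visits == 0) return 1;
        }
        entry *e = d->ht[0].buckets[d->rehashidx];
        while (e) {                          /* move the whole chain */
            entry *next = e->next;
            size_t h = slot(e->key, d->ht[1].size);
            e->next = d->ht[1].buckets[h];
            d->ht[1].buckets[h] = e;
            d->ht[0].used--;
            d->ht[1].used++;
            e = next;
        }
        d->ht[0].buckets[d->rehashidx++] = NULL;
    }
    if (d->ht[0].used == 0) {                /* done: the new table takes over */
        free(d->ht[0].buckets);
        d->ht[0] = d->ht[1];
        d->ht[1] = (table){NULL, 0, 0};
        d->rehashidx = -1;
        return 0;
    }
    return 1;
}

static long long now_ms(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (long long)ts.tv_sec * 1000 + ts.tv_nsec / 1000000;
}

/* Rehash 100 buckets at a time until done or `ms` milliseconds passed. */
int dict_rehash_ms(dict *d, int ms) {
    long long start = now_ms();
    int rehashes = 0;
    while (dict_rehash(d, 100)) {
        rehashes += 100;
        if (now_ms() - start > ms) break;
    }
    return rehashes;
}
```

In Redis, every normal dictionary operation also performs a single step (the equivalent of dict_rehash(d, 1)) while a rehash is in progress, and the server cron calls the time-boxed variant with a 1 ms budget.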

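Optimizations

- Redis applies divide and conquer to rehashing: the rehash work is spread over individual operations, and a timer additionally rehashes in steps of 100 buckets for at most 1 ms at a time, which keeps each pause short.

- Redis moves time-consuming, blocking operations to other threads.

- Redis implements each object type with different underlying encodings. For example, a string object can use the int, raw, or embstr encoding: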

127.0.0.1:6379> get fly
"100"
127.0.0.1:6379> OBJECT encoding fly
"int"
127.0.0.1:6379> set fly 1024a
OK
127.0.0.1:6379> get fly
"1024a"
127.0.0.1:6379> OBJECT encoding fly
"embstr"
127.0.0.1:6379> set fly 123456789012345678901234567890123456789012345678901234567890
OK
127.0.0.1:6379> get fly
"123456789012345678901234567890123456789012345678901234567890"
127.0.0.1:6379> OBJECT encoding fly
"raw"

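The encodings in the session above follow a simple rule, sketched here in simplified form. The real logic lives in tryObjectEncoding() in object.c and has more cases (shared integer objects, for example); the helper names below are hypothetical. A value of at most 20 characters that parses as a long integer becomes int; otherwise strings of up to 44 bytes (the embstr limit in Redis 3.2 and later) become embstr, and longer ones raw.

```c
#include <assert.h>
#include <errno.h>
#include <stdlib.h>
#include <string.h>

#define EMBSTR_LIMIT 44   /* embstr size limit used by Redis 3.2+ */

/* Simplified integer check: optional '-', no leading zeros (except "0"),
 * no spaces or '+', at most 20 characters, and no overflow. */
static int looks_like_long(const char *s, size_t len) {
    if (len == 0 || len > 20) return 0;
    if (len == 1 && s[0] == '0') return 1;
    const char *p = (s[0] == '-') ? s + 1 : s;
    if (*p < '1' || *p > '9') return 0;
    char *end;
    errno = 0;
    (void)strtoll(s, &end, 10);
    return errno == 0 && end == s + len;
}

/* Return the encoding name a string value would get under this rule. */
const char *pick_encoding(const char *s) {
    size_t len = strlen(s);
    if (looks_like_long(s, len)) return "int";
    return len <= EMBSTR_LIMIT ? "embstr" : "raw";
}
```

For the three values in the session this yields int, embstr and raw. Note that the 60-digit value consists only of digits but still ends up raw: it is too long to be an integer and longer than 44 bytes.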
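Redis I/O threads

Network I/O in Redis can become intensive, and intensive I/O is time-consuming, which hurts single-threaded performance. For this reason Redis provides I/O threads, configured through the io-threads parameter in redis.conf: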

################################ THREADED I/O #################################

# Redis is mostly single threaded, however there are certain threaded
# operations such as UNLINK, slow I/O accesses and other things that are
# performed on side threads.
#
# Now it is also possible to handle Redis clients socket reads and writes
# in different I/O threads. Since especially writing is so slow, normally
# Redis users use pipelining in order to speed up the Redis performances per
# core, and spawn multiple instances in order to scale more. Using I/O
# threads it is possible to easily speedup two times Redis without resorting
# to pipelining nor sharding of the instance.
#
# By default threading is disabled, we suggest enabling it only in machines
# that have at least 4 or more cores, leaving at least one spare core.
# Using more than 8 threads is unlikely to help much. We also recommend using
# threaded I/O only if you actually have performance problems, with Redis
# instances being able to use a quite big percentage of CPU time, otherwise
# there is no point in using this feature.
#
# So for instance if you have a four cores boxes, try to use 2 or 3 I/O
# threads, if you have a 8 cores, try to use 6 threads. In order to
# enable I/O threads use the following configuration directive:
#
io-threads 4
#
# Setting io-threads to 1 will just use the main thread as usual.
# When I/O threads are enabled, we only use threads for writes, that is
# to thread the write(2) syscall and transfer the client buffers to the
# socket. However it is also possible to enable threading of reads and
# protocol parsing using the following configuration directive, by setting
# it to yes:
#
io-threads-do-reads yes
#
# Usually threading reads doesn't help much.
#
# NOTE 1: This configuration directive cannot be changed at runtime via
# CONFIG SET. Aso this feature currently does not work when SSL is
# enabled.
#
# NOTE 2: If you want to test the Redis speedup using redis-benchmark, make
# sure you also run the benchmark itself in threaded mode, using the
# --threads option to match the number of Redis threads, otherwise you'll not
# be able to notice the improvements.

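Note that the configured number of I/O threads includes the main thread: io-threads 4 means the main thread plus three io_thd_* threads.

Also, io-threads-do-reads decides whether the I/O threads take part in reading and parsing requests (read + decode). It defaults to no, in which case the I/O threads only send replies: they perform the write(2) calls that move the client output buffers to the sockets, while the replies themselves are encoded into those buffers by the main thread as commands execute. If set to yes, the I/O threads handle both read + decode and sending.

When to use io-threads-do-reads

(1) When Redis receives a lot of data. For example, when logs are written to Redis, there is a large volume of incoming data that Redis must read and parse; in this case io-threads-do-reads should be enabled.

(2) When Redis sends a lot of data. For example, when a client fetches leaderboard data, a large reply has to be sent back, so it is the sending side that needs offloading; the I/O threads already do this by default.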

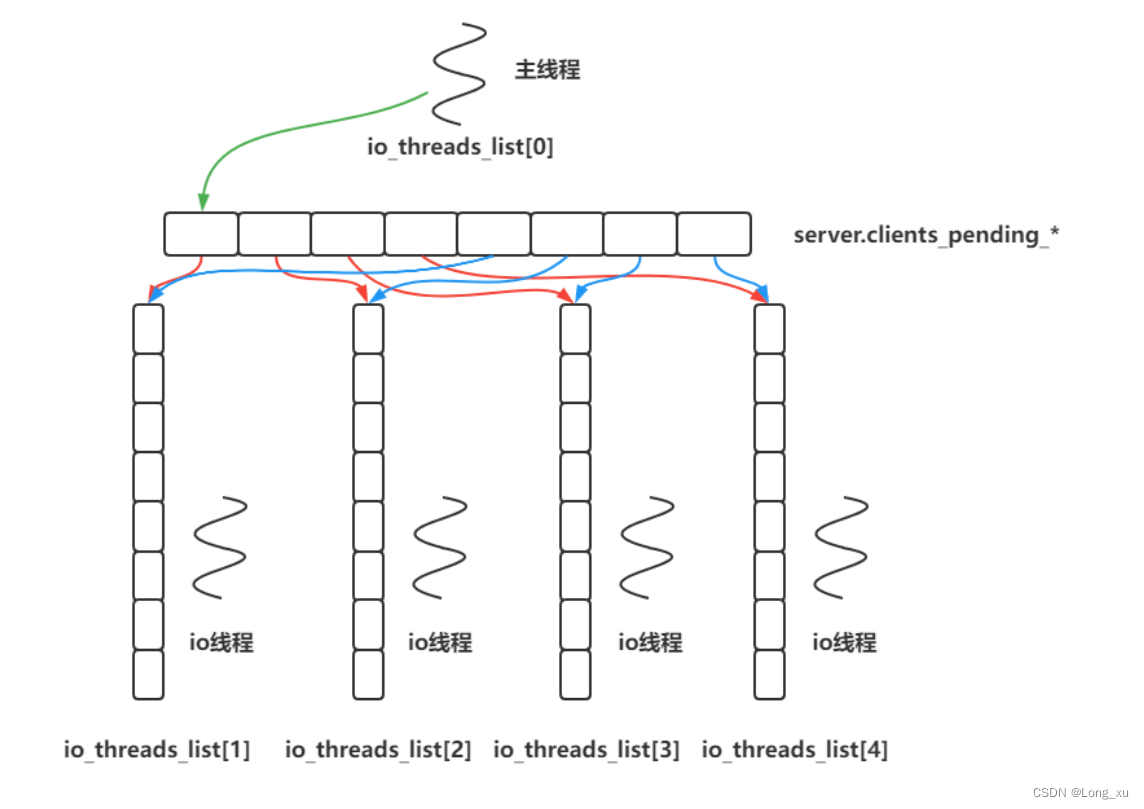

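Diagram of the I/O threading model

When I/O threading is enabled

I/O threads are only used with many concurrent connections; a single connection never triggers them. Specifically, threaded I/O stays active only while the number of clients with pending writes is at least twice the number of I/O threads (io_threads_num * 2); below that, the threads are stopped and the main thread does all the I/O itself. The check is made in stopThreadedIOIfNeeded() in networking.c: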

/* This function checks if there are not enough pending clients to justify
 * taking the I/O threads active: in that case I/O threads are stopped if
 * currently active. We track the pending writes as a measure of clients
 * we need to handle in parallel, however the I/O threading is disabled
 * globally for reads as well if we have too little pending clients.
 *
 * The function returns 0 if the I/O threading should be used because there
 * are enough active threads, otherwise 1 is returned and the I/O threads
 * could be possibly stopped (if already active) as a side effect. */
int stopThreadedIOIfNeeded(void) {
    int pending = listLength(server.clients_pending_write);

    /* Return ASAP if IO threads are disabled (single threaded mode). */
    if (server.io_threads_num == 1) return 1;

    if (pending < (server.io_threads_num*2)) {
        if (server.io_threads_active) stopThreadedIO();
        return 1;
    } else {
        return 0;
    }
}
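The threshold is easy to tabulate. The helper below is a hypothetical restatement of the same check with the opposite polarity (it returns 1 when threaded I/O should be used), written only to make the numbers explicit.

```c
#include <assert.h>

/* Hypothetical, inverted restatement of stopThreadedIOIfNeeded():
 * returns 1 when threaded I/O should be used for this event-loop pass. */
int should_use_io_threads(int pending_writes, int io_threads_num) {
    if (io_threads_num == 1) return 0;      /* io-threads 1: main thread only */
    return pending_writes >= io_threads_num * 2;
}
```

With io-threads 4, threaded I/O kicks in only once at least 8 clients have pending writes; with 7 or fewer, the main thread handles all the I/O itself.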

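Source code walkthrough of Redis I/O threads

After the listening sockets are created, Redis registers acceptTcpHandler as the callback for new connections.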

server.c

/* Create an event handler for accepting new connections in TCP and Unix
 * domain sockets. */
for (j = 0; j < server.ipfd_count; j++) {
    if (aeCreateFileEvent(server.el, server.ipfd[j], AE_READABLE,
        acceptTcpHandler,NULL) == AE_ERR)
        {
            serverPanic(
                "Unrecoverable error creating server.ipfd file event.");
        }
}

(1) When a connection arrives, acceptTcpHandler calls acceptCommonHandler, which calls createClient to set up the client. While creating it, createClient calls connSetReadHandler to register readQueryFromClient as the read-event callback.

networking.c

client *createClient(connection *conn) {
    client *c = zmalloc(sizeof(client));

    /* passing NULL as conn it is possible to create a non connected client.
     * This is useful since all the commands needs to be executed
     * in the context of a client. When commands are executed in other
     * contexts (for instance a Lua script) we need a non connected client. */
    if (conn) {
        connNonBlock(conn);
        connEnableTcpNoDelay(conn);
        if (server.tcpkeepalive)
            connKeepAlive(conn,server.tcpkeepalive);
        connSetReadHandler(conn, readQueryFromClient);
        connSetPrivateData(conn, c);
    }

    selectDb(c,0);
    uint64_t client_id = ++server.next_client_id;
    c->id = client_id;
    c->resp = 2;
    c->conn = conn;
    c->name = NULL;
    c->bufpos = 0;
    c->qb_pos = 0;
    c->querybuf = sdsempty();
    c->pending_querybuf = sdsempty();
    c->querybuf_peak = 0;
    c->reqtype = 0;
    c->argc = 0;
    c->argv = NULL;
    c->argv_len_sum = 0;
    c->cmd = c->lastcmd = NULL;
    c->user = DefaultUser;
    c->multibulklen = 0;
    c->bulklen = -1;
    c->sentlen = 0;
    c->flags = 0;
    c->ctime = c->lastinteraction = server.unixtime;
    /* If the default user does not require authentication, the user is
     * directly authenticated. */
    c->authenticated = (c->user->flags & USER_FLAG_NOPASS) &&
                       !(c->user->flags & USER_FLAG_DISABLED);
    c->replstate = REPL_STATE_NONE;
    c->repl_put_online_on_ack = 0;
    c->reploff = 0;
    c->read_reploff = 0;
    c->repl_ack_off = 0;
    c->repl_ack_time = 0;
    c->repl_last_partial_write = 0;
    c->slave_listening_port = 0;
    c->slave_ip[0] = '\0';
    c->slave_capa = SLAVE_CAPA_NONE;
    c->reply = listCreate();
    c->reply_bytes = 0;
    c->obuf_soft_limit_reached_time = 0;
    listSetFreeMethod(c->reply,freeClientReplyValue);
    listSetDupMethod(c->reply,dupClientReplyValue);
    c->btype = BLOCKED_NONE;
    c->bpop.timeout = 0;
    c->bpop.keys = dictCreate(&objectKeyHeapPointerValueDictType,NULL);
    c->bpop.target = NULL;
    c->bpop.xread_group = NULL;
    c->bpop.xread_consumer = NULL;
    c->bpop.xread_group_noack = 0;
    c->bpop.numreplicas = 0;
    c->bpop.reploffset = 0;
    c->woff = 0;
    c->watched_keys = listCreate();
    c->pubsub_channels = dictCreate(&objectKeyPointerValueDictType,NULL);
    c->pubsub_patterns = listCreate();
    c->peerid = NULL;
    c->client_list_node = NULL;
    c->client_tracking_redirection = 0;
    c->client_tracking_prefixes = NULL;
    c->client_cron_last_memory_usage = 0;
    c->client_cron_last_memory_type = CLIENT_TYPE_NORMAL;
    c->auth_callback = NULL;
    c->auth_callback_privdata = NULL;
    c->auth_module = NULL;
    listSetFreeMethod(c->pubsub_patterns,decrRefCountVoid);
    listSetMatchMethod(c->pubsub_patterns,listMatchObjects);
    if (conn) linkClient(c);
    initClientMultiState(c);
    return c;
}

static void acceptCommonHandler(connection *conn, int flags, char *ip) {
    client *c;
    char conninfo[100];
    UNUSED(ip);

    if (connGetState(conn) != CONN_STATE_ACCEPTING) {
        serverLog(LL_VERBOSE,
            "Accepted client connection in error state: %s (conn: %s)",
            connGetLastError(conn),
            connGetInfo(conn, conninfo, sizeof(conninfo)));
        connClose(conn);
        return;
    }

    /* Limit the number of connections we take at the same time.
     *
     * Admission control will happen before a client is created and connAccept()
     * called, because we don't want to even start transport-level negotiation
     * if rejected. */
    if (listLength(server.clients) + getClusterConnectionsCount()
        >= server.maxclients)
    {
        char *err;
        if (server.cluster_enabled)
            err = "-ERR max number of clients + cluster "
                  "connections reached\r\n";
        else
            err = "-ERR max number of clients reached\r\n";

        /* That's a best effort error message, don't check write errors.
         * Note that for TLS connections, no handshake was done yet so nothing
         * is written and the connection will just drop. */
        if (connWrite(conn,err,strlen(err)) == -1) {
            /* Nothing to do, Just to avoid the warning... */
        }
        server.stat_rejected_conn++;
        connClose(conn);
        return;
    }

    /* Create connection and client */
    if ((c = createClient(conn)) == NULL) {
        serverLog(LL_WARNING,
            "Error registering fd event for the new client: %s (conn: %s)",
            connGetLastError(conn),
            connGetInfo(conn, conninfo, sizeof(conninfo)));
        connClose(conn); /* May be already closed, just ignore errors */
        return;
    }

    /* Last chance to keep flags */
    c->flags |= flags;

    /* Initiate accept.
     *
     * Note that connAccept() is free to do two things here:
     * 1. Call clientAcceptHandler() immediately;
     * 2. Schedule a future call to clientAcceptHandler().
     *
     * Because of that, we must do nothing else afterwards.
     */
    if (connAccept(conn, clientAcceptHandler) == C_ERR) {
        char conninfo[100];
        if (connGetState(conn) == CONN_STATE_ERROR)
            serverLog(LL_WARNING,
                    "Error accepting a client connection: %s (conn: %s)",
                    connGetLastError(conn), connGetInfo(conn, conninfo, sizeof(conninfo)));
        freeClient(connGetPrivateData(conn));
        return;
    }
}

void acceptTcpHandler(aeEventLoop *el, int fd, void *privdata, int mask) {
    int cport, cfd, max = MAX_ACCEPTS_PER_CALL;
    char cip[NET_IP_STR_LEN];
    UNUSED(el);
    UNUSED(mask);
    UNUSED(privdata);

    while(max--) {
        cfd = anetTcpAccept(server.neterr, fd, cip, sizeof(cip), &cport);
        if (cfd == ANET_ERR) {
            if (errno != EWOULDBLOCK)
                serverLog(LL_WARNING,
                    "Accepting client connection: %s", server.neterr);
            return;
        }
        serverLog(LL_VERBOSE,"Accepted %s:%d", cip, cport);
        acceptCommonHandler(connCreateAcceptedSocket(cfd),0,cip);
    }
}

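(2) When a client sends data, the read event fires and Redis enters the read callback readQueryFromClient, which first calls postponeClientRead to defer reading from the client.

If deferral applies (among other conditions: I/O threads are active, io-threads-do-reads is enabled, and the client is neither a master/replica nor already pending), postponeClientRead sets the CLIENT_PENDING_READ flag and calls listAddNodeHead to add the client to the global clients_pending_read queue.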
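The flag-and-queue pattern can be isolated in a few lines. This is a simplified sketch, not Redis code: the client type, the intrusive list and the bit value of CLIENT_PENDING_READ are hypothetical, and the other conditions Redis checks (paused clients, master/replica connections, io-threads-do-reads) are folded into a single threads_active argument.

```c
#include <assert.h>
#include <stddef.h>

#define CLIENT_PENDING_READ (1 << 0)   /* hypothetical bit value */

typedef struct client {
    int flags;
    struct client *next;               /* intrusive link for the pending list */
} client;

typedef struct { client *head; size_t len; } client_list;

/* Simplified postponeClientRead(): if threaded reads are active and the
 * client is not already queued, flag it and push it at the list head. */
int postpone_read(client_list *pending, client *c, int threads_active) {
    if (threads_active && !(c->flags & CLIENT_PENDING_READ)) {
        c->flags |= CLIENT_PENDING_READ;
        c->next = pending->head;       /* like listAddNodeHead() */
        pending->head = c;
        pending->len++;
        return 1;                      /* caller returns without reading */
    }
    return 0;                          /* caller reads from the socket now */
}
```

A second call on the same client returns 0 because the flag is already set. That is exactly why, when an I/O thread later calls readQueryFromClient() for this client, the function goes on to read from the socket instead of returning early.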

networking.c

/* Return 1 if we want to handle the client read later using threaded I/O.
 * This is called by the readable handler of the event loop.
 * As a side effect of calling this function the client is put in the
 * pending read clients and flagged as such. */
int postponeClientRead(client *c) {
    if (server.io_threads_active &&
        server.io_threads_do_reads &&
        !clientsArePaused() &&
        !ProcessingEventsWhileBlocked &&
        !(c->flags & (CLIENT_MASTER|CLIENT_SLAVE|CLIENT_PENDING_READ)))
    {
        c->flags |= CLIENT_PENDING_READ;
        listAddNodeHead(server.clients_pending_read,c);
        return 1;
    } else {
        return 0;
    }
}

void readQueryFromClient(connection *conn) {
    client *c = connGetPrivateData(conn);
    int nread, readlen;
    size_t qblen;

    /* Check if we want to read from the client later when exiting from
     * the event loop. This is the case if threaded I/O is enabled. */
    if (postponeClientRead(c)) return;

    /* Update total number of reads on server */
    server.stat_total_reads_processed++;

    readlen = PROTO_IOBUF_LEN;
    /* If this is a multi bulk request, and we are processing a bulk reply
     * that is large enough, try to maximize the probability that the query
     * buffer contains exactly the SDS string representing the object, even
     * at the risk of requiring more read(2) calls. This way the function
     * processMultiBulkBuffer() can avoid copying buffers to create the
     * Redis Object representing the argument. */
    if (c->reqtype == PROTO_REQ_MULTIBULK && c->multibulklen && c->bulklen != -1
        && c->bulklen >= PROTO_MBULK_BIG_ARG)
    {
        ssize_t remaining = (size_t)(c->bulklen+2)-sdslen(c->querybuf);

        /* Note that the 'remaining' variable may be zero in some edge case,
         * for example once we resume a blocked client after CLIENT PAUSE. */
        if (remaining > 0 && remaining < readlen) readlen = remaining;
    }

    qblen = sdslen(c->querybuf);
    if (c->querybuf_peak < qblen) c->querybuf_peak = qblen;
    c->querybuf = sdsMakeRoomFor(c->querybuf, readlen);
    nread = connRead(c->conn, c->querybuf+qblen, readlen);
    if (nread == -1) {
        if (connGetState(conn) == CONN_STATE_CONNECTED) {
            return;
        } else {
            serverLog(LL_VERBOSE, "Reading from client: %s",connGetLastError(c->conn));
            freeClientAsync(c);
            return;
        }
    } else if (nread == 0) {
        serverLog(LL_VERBOSE, "Client closed connection");
        freeClientAsync(c);
        return;
    } else if (c->flags & CLIENT_MASTER) {
        /* Append the query buffer to the pending (not applied) buffer
         * of the master. We'll use this buffer later in order to have a
         * copy of the string applied by the last command executed. */
        c->pending_querybuf = sdscatlen(c->pending_querybuf,
                                        c->querybuf+qblen,nread);
    }

    sdsIncrLen(c->querybuf,nread);
    c->lastinteraction = server.unixtime;
    if (c->flags & CLIENT_MASTER) c->read_reploff += nread;
    server.stat_net_input_bytes += nread;
    if (sdslen(c->querybuf) > server.client_max_querybuf_len) {
        sds ci = catClientInfoString(sdsempty(),c), bytes = sdsempty();

        bytes = sdscatrepr(bytes,c->querybuf,64);
        serverLog(LL_WARNING,"Closing client that reached max query buffer length: %s (qbuf initial bytes: %s)", ci, bytes);
        sdsfree(ci);
        sdsfree(bytes);
        freeClientAsync(c);
        return;
    }

    /* There is more data in the client input buffer, continue parsing it
     * in case to check if there is a full command to execute. */
    processInputBuffer(c);
}

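(3) Task distribution happens in handleClientsWithPendingReadsUsingThreads: it walks clients_pending_read and calls listAddNodeTail to assign each client, round-robin, to one of the per-thread queues in io_threads_list. Queue 0 is processed by the main thread itself, which then busy-waits until every I/O thread has finished its queue.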
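The distribution step amounts to round-robin assignment by item_id % io_threads_num. The sketch below only counts how many clients land in each list, with list 0 being the main thread's share. first_id is a parameter because the excerpt that follows starts item_id at 1; the function and parameter names are hypothetical.

```c
#include <assert.h>

#define MAX_THREADS 16

/* Count how many of nclients pending clients land in each of the
 * nthreads per-thread lists when assigned round-robin by
 * item_id % nthreads, with item_id starting at first_id (>= 0).
 * List 0 is the main thread's share. */
void distribute(int nclients, int nthreads, int first_id, int counts[MAX_THREADS]) {
    assert(nthreads >= 1 && nthreads <= MAX_THREADS && first_id >= 0);
    for (int j = 0; j < nthreads; j++) counts[j] = 0;
    int item_id = first_id;
    for (int k = 0; k < nclients; k++) {
        counts[item_id % nthreads]++;
        item_id++;
    }
}
```

For 10 pending clients with io-threads 4 and first_id = 1, the lists receive 2, 3, 3 and 2 clients, so the main thread handles roughly a quarter of the reads itself.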

networking.c

/* When threaded I/O is also enabled for the reading + parsing side, the
 * readable handler will just put normal clients into a queue of clients to
 * process (instead of serving them synchronously). This function runs
 * the queue using the I/O threads, and process them in order to accumulate
 * the reads in the buffers, and also parse the first command available
 * rendering it in the client structures. */
int handleClientsWithPendingReadsUsingThreads(void) {
    if (!server.io_threads_active || !server.io_threads_do_reads) return 0;
    int processed = listLength(server.clients_pending_read);
    if (processed == 0) return 0;

    if (tio_debug) printf("%d TOTAL READ pending clients\n", processed);

    /* Distribute the clients across N different lists. */
    listIter li;
    listNode *ln;
    listRewind(server.clients_pending_read,&li);
    int item_id = 1;
    while((ln = listNext(&li))) {
        client *c = listNodeValue(ln);
        int target_id = item_id % server.io_threads_num;
        listAddNodeTail(io_threads_list[target_id],c);
        item_id++;
    }

    /* Give the start condition to the waiting threads, by setting the
     * start condition atomic var. */
    io_threads_op = IO_THREADS_OP_READ;
    for (int j = 1; j < server.io_threads_num; j++) {
        int count = listLength(io_threads_list[j]);
        io_threads_pending[j] = count;
    }

    /* Also use the main thread to process a slice of clients. */
    listRewind(io_threads_list[0],&li);
    while((ln = listNext(&li))) {
        client *c = listNodeValue(ln);
        readQueryFromClient(c->conn);
    }
    listEmpty(io_threads_list[0]);

    /* Wait for all the other threads to end their work. */
    while(1) {
        unsigned long pending = 0;
        for (int j = 1; j < server.io_threads_num; j++)
            pending += io_threads_pending[j];
        if (pending == 0) break;
    }
    if (tio_debug) printf("I/O READ All threads finshed\n");

    /* Run the list of clients again to process the new buffers. */
    while(listLength(server.clients_pending_read)) {
        ln = listFirst(server.clients_pending_read);
        client *c = listNodeValue(ln);
        c->flags &= ~CLIENT_PENDING_READ;
        listDelNode(server.clients_pending_read,ln);

        /* Clients can become paused while executing the queued commands,
         * so we need to check in between each command. If a pause was
         * executed, we still remove the command and it will get picked up
         * later when clients are unpaused and we re-queue all clients. */
        if (clientsArePaused()) continue;

        if (processPendingCommandsAndResetClient(c) == C_ERR) {
            /* If the client is no longer valid, we avoid
             * processing the client later. So we just go
             * to the next. */
            continue;
        }

        processInputBuffer(c);

        /* We may have pending replies if a thread readQueryFromClient() produced
         * replies and did not install a write handler (it can't).
         */
        if (!(c->flags & CLIENT_PENDING_WRITE) && clientHasPendingReplies(c))
            clientInstallWriteHandler(c);
    }

    /* Update processed count on server */
    server.stat_io_reads_processed += processed;

    return processed;
}

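(4) The I/O threads process their tasks.

Each I/O thread's entry function, IOThreadMain, loops forever: it waits until the main thread hands it a batch, then walks its own queue and, for reads, calls readQueryFromClient on each client.

This time, however, the main thread has already set the CLIENT_PENDING_READ flag, and postponeClientRead only defers clients that do not carry it yet, so it returns 0 and the statement if (postponeClientRead(c)) return; does not return. Execution continues to connRead(), which reads the data into the query buffer; after the read, processInputBuffer is called to parse the data.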

networking.c

/* This is the list of clients each thread will serve when threaded I/O is
 * used. We spawn io_threads_num-1 threads, since one is the main thread
 * itself. */
list *io_threads_list[IO_THREADS_MAX_NUM];

void *IOThreadMain(void *myid) {
    /* The ID is the thread number (from 0 to server.iothreads_num-1), and is
     * used by the thread to just manipulate a single sub-array of clients. */
    long id = (unsigned long)myid;
    char thdname[16];

    snprintf(thdname, sizeof(thdname), "io_thd_%ld", id);
    redis_set_thread_title(thdname);
    redisSetCpuAffinity(server.server_cpulist);
    makeThreadKillable();

    while(1) {
        /* Wait for start */
        for (int j = 0; j < 1000000; j++) {
            if (io_threads_pending[id] != 0) break;
        }

        /* Give the main thread a chance to stop this thread. */
        if (io_threads_pending[id] == 0) {
            pthread_mutex_lock(&io_threads_mutex[id]);
            pthread_mutex_unlock(&io_threads_mutex[id]);
            continue;
        }

        serverAssert(io_threads_pending[id] != 0);

        if (tio_debug) printf("[%ld] %d to handle\n", id, (int)listLength(io_threads_list[id]));

        /* Process: note that the main thread will never touch our list
         * before we drop the pending count to 0. */
        listIter li;
        listNode *ln;
        listRewind(io_threads_list[id],&li);
        while((ln = listNext(&li))) {
            client *c = listNodeValue(ln);
            if (io_threads_op == IO_THREADS_OP_WRITE) {
                writeToClient(c,0);
            } else if (io_threads_op == IO_THREADS_OP_READ) {
                readQueryFromClient(c->conn);
            } else {
                serverPanic("io_threads_op value is unknown");
            }
        }
        listEmpty(io_threads_list[id]);
        io_threads_pending[id] = 0;

        if (tio_debug) printf("[%ld] Done\n", id);
    }
}
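The handshake between the main thread and the I/O threads boils down to one counter per thread: the main thread publishes work by making io_threads_pending[id] non-zero, the I/O thread spins until it sees that, does the work and writes 0 back, and the main thread spins until every counter is 0 again. The sketch below reproduces that protocol with C11 atomics and POSIX threads under simplifying assumptions: the "work" is just adding to a counter, the idle loops call sched_yield() instead of Redis's 1,000,000-iteration spin followed by a mutex check, and a stop flag replaces Redis's mutex-based thread stopping. All names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>

#define NTHREADS 4      /* like io-threads 4: main thread + 3 workers */
#define ROUNDS 1000     /* simulated event-loop iterations */
#define BATCH 3         /* items handed to each thread per round */

static atomic_ulong pending[NTHREADS];  /* plays the role of io_threads_pending */
static atomic_long done_items;          /* total items processed */
static atomic_int stop_flag;            /* sketch-only shutdown signal */

/* Stand-in for "read from / write to these clients". */
static void do_work(unsigned long n) { atomic_fetch_add(&done_items, (long)n); }

/* Worker: wait for a non-zero counter, do the batch, then reset it to 0. */
static void *worker(void *arg) {
    long id = (long)arg;
    while (!atomic_load(&stop_flag)) {
        unsigned long n = atomic_load(&pending[id]);
        if (n == 0) { sched_yield(); continue; }  /* nothing published yet */
        do_work(n);
        atomic_store(&pending[id], 0);            /* tell main we are done */
    }
    return NULL;
}

/* One round: publish a batch per worker, do slot 0 on the main thread,
 * then spin until all workers have reset their counters. */
static void fan_out(void) {
    for (int j = 1; j < NTHREADS; j++) atomic_store(&pending[j], BATCH);
    do_work(BATCH);                               /* main thread's own share */
    for (;;) {
        unsigned long left = 0;
        for (int j = 1; j < NTHREADS; j++) left += atomic_load(&pending[j]);
        if (left == 0) break;
        sched_yield();
    }
}

/* Start the workers, run ROUNDS rounds, stop them.
 * Returns the number of items processed, or -1 if a thread failed to start. */
long run_rounds(void) {
    pthread_t tids[NTHREADS];
    long started = 1;
    atomic_store(&stop_flag, 0);
    atomic_store(&done_items, 0);
    for (; started < NTHREADS; started++)
        if (pthread_create(&tids[started], NULL, worker, (void *)started) != 0) break;
    if (started == NTHREADS)
        for (int r = 0; r < ROUNDS; r++) fan_out();
    atomic_store(&stop_flag, 1);
    for (long j = 1; j < started; j++) pthread_join(tids[j], NULL);
    return started == NTHREADS ? atomic_load(&done_items) : -1;
}
```

run_rounds() performs 1000 rounds with 4 threads (main thread included) and 3 items per thread, so it returns 12000. Because the main thread waits for every counter to reach 0 before it starts the next round, no batch is lost or processed twice; this is the same guarantee the comment in IOThreadMain relies on ("the main thread will never touch our list before we drop the pending count to 0").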

connection.h

/* Read from the connection, behaves the same as read(2).
 *
 * Like read(2), a short read is possible. A return value of 0 will indicate the
 * connection was closed, and -1 will indicate an error.
 *
 * The caller should NOT rely on errno. Testing for an EAGAIN-like condition, use
 * connGetState() to see if the connection state is still CONN_STATE_CONNECTED.
 */
static inline int connRead(connection *conn, void *buf, size_t buf_len) {
    return conn->type->read(conn, buf, buf_len);
}

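(5) processInputBuffer splits the input into individual commands and parses them, calling processInlineBuffer or processMultibulkBuffer to delimit each command.

Because CLIENT_PENDING_READ is set, an I/O thread never calls processCommandAndResetClient to execute the command: once a complete command has been parsed, it only sets CLIENT_PENDING_COMMAND and stops. Only the main thread executes processCommandAndResetClient.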
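What processMultibulkBuffer() does with the raw bytes can be illustrated with a small, self-contained RESP parser. This is a simplified sketch, not Redis's implementation: it handles only multibulk requests (no inline commands), uses fixed-size argument buffers, and parse_multibulk/read_len are hypothetical names. Unlike Redis, which remembers partial progress in c->multibulklen and c->bulklen, this sketch simply re-parses from the start each time and returns 0 while the command is incomplete.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MAX_ARGS 16
#define MAX_ARG_LEN 64

typedef struct {
    int argc;
    char argv[MAX_ARGS][MAX_ARG_LEN];
} command;

/* Parse "<prefix><number>\r\n" at buf[*pos]. Returns 1 and advances *pos
 * on success, 0 if the line is not complete yet, -1 on a protocol error. */
static int read_len(const char *buf, size_t len, size_t *pos, char prefix, long *out) {
    if (*pos >= len) return 0;
    if (buf[*pos] != prefix) return -1;
    const char *start = buf + *pos + 1;
    const char *cr = memchr(buf + *pos, '\r', len - *pos);
    if (cr == NULL || (size_t)(cr - buf) + 1 >= len) return 0;  /* no "\r\n" yet */
    if (cr[1] != '\n') return -1;
    char *end;
    *out = strtol(start, &end, 10);
    if (end == start || end != cr) return -1;
    *pos = (size_t)(cr - buf) + 2;
    return 1;
}

/* Parse one "*<argc>\r\n$<len>\r\n<bytes>\r\n..." command. Returns the
 * number of bytes consumed, 0 if more data is needed, -1 on error. */
long parse_multibulk(const char *buf, size_t len, command *cmd) {
    size_t pos = 0;
    long argc, n;
    int r = read_len(buf, len, &pos, '*', &argc);
    if (r <= 0) return r;
    if (argc < 0 || argc > MAX_ARGS) return -1;
    cmd->argc = 0;
    for (long i = 0; i < argc; i++) {
        r = read_len(buf, len, &pos, '$', &n);
        if (r <= 0) return r;
        if (n < 0 || n >= MAX_ARG_LEN) return -1;
        if (pos + (size_t)n + 2 > len) return 0;          /* payload incomplete */
        if (buf[pos + (size_t)n] != '\r' || buf[pos + (size_t)n + 1] != '\n') return -1;
        memcpy(cmd->argv[i], buf + pos, (size_t)n);
        cmd->argv[i][n] = '\0';
        pos += (size_t)n + 2;
        cmd->argc++;
    }
    return (long)pos;
}
```

On "*2\r\n$3\r\nGET\r\n$3\r\nfoo\r\n" it returns 22 with argv {"GET", "foo"}; given only the first 10 bytes it returns 0, the situation in which an I/O thread simply stops and waits for the next read.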

networking.c

void processInputBuffer(client *c) {
    /* Keep processing while there is something in the input buffer */
    while(c->qb_pos < sdslen(c->querybuf)) {
        /* Return if clients are paused. */
        if (!(c->flags & CLIENT_SLAVE) &&
            !(c->flags & CLIENT_PENDING_READ) &&
            clientsArePaused()) break;

        /* Immediately abort if the client is in the middle of something. */
        if (c->flags & CLIENT_BLOCKED) break;

        /* Don't process more buffers from clients that have already pending
         * commands to execute in c->argv. */
        if (c->flags & CLIENT_PENDING_COMMAND) break;

        /* Don't process input from the master while there is a busy script
         * condition on the slave. We want just to accumulate the replication
         * stream (instead of replying -BUSY like we do with other clients) and
         * later resume the processing. */
        if (server.lua_timedout && c->flags & CLIENT_MASTER) break;

        /* CLIENT_CLOSE_AFTER_REPLY closes the connection once the reply is
         * written to the client. Make sure to not let the reply grow after
         * this flag has been set (i.e. don't process more commands).
         *
         * The same applies for clients we want to terminate ASAP. */
        if (c->flags & (CLIENT_CLOSE_AFTER_REPLY|CLIENT_CLOSE_ASAP)) break;

        /* Determine request type when unknown. */
        if (!c->reqtype) {
            if (c->querybuf[c->qb_pos] == '*') {
                c->reqtype = PROTO_REQ_MULTIBULK;
            } else {
                c->reqtype = PROTO_REQ_INLINE;
            }
        }

        if (c->reqtype == PROTO_REQ_INLINE) {
            if (processInlineBuffer(c) != C_OK) break;
            /* If the Gopher mode and we got zero or one argument, process
             * the request in Gopher mode. To avoid data race, Redis won't
             * support Gopher if enable io threads to read queries. */
            if (server.gopher_enabled && !server.io_threads_do_reads &&
                ((c->argc == 1 && ((char*)(c->argv[0]->ptr))[0] == '/') ||
                  c->argc == 0))
            {
                processGopherRequest(c);
                resetClient(c);
                c->flags |= CLIENT_CLOSE_AFTER_REPLY;
                break;
            }
        } else if (c->reqtype == PROTO_REQ_MULTIBULK) {
            if (processMultibulkBuffer(c) != C_OK) break;
        } else {
            serverPanic("Unknown request type");
        }

        /* Multibulk processing could see a <= 0 length. */
        if (c->argc == 0) {
            resetClient(c);
        } else {
            /* If we are in the context of an I/O thread, we can't really
             * execute the command here. All we can do is to flag the client
             * as one that needs to process the command. */
            if (c->flags & CLIENT_PENDING_READ) {
                c->flags |= CLIENT_PENDING_COMMAND;
                break;
            }

            /* We are finally ready to execute the command. */
            if (processCommandAndResetClient(c) == C_ERR) {
                /* If the client is no longer valid, we avoid exiting this
                 * loop and trimming the client buffer later. So we return
                 * ASAP in that case. */
                return;
            }
        }
    }

    /* Trim to pos */
    if (c->qb_pos) {
        sdsrange(c->querybuf,c->qb_pos,-1);
        c->qb_pos = 0;
    }
}

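(6) The main thread waits until all I/O threads have finished. Then, for each client, it clears CLIENT_PENDING_READ and calls processPendingCommandsAndResetClient, which runs processCommandAndResetClient for the command the I/O thread has already parsed; processCommandAndResetClient in turn calls processCommand to execute it.

This shows that Redis executes commands on a single thread.

The relevant part of handleClientsWithPendingReadsUsingThreads in networking.c: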

    /* Wait for all the other threads to end their work. */
    while(1) {
        unsigned long pending = 0;
        for (int j = 1; j < server.io_threads_num; j++)
            pending += io_threads_pending[j];
        if (pending == 0) break;
    }
    if (tio_debug) printf("I/O READ All threads finshed\n");

    /* Run the list of clients again to process the new buffers. */
    while(listLength(server.clients_pending_read)) {
        ln = listFirst(server.clients_pending_read);
        client *c = listNodeValue(ln);
        c->flags &= ~CLIENT_PENDING_READ;
        listDelNode(server.clients_pending_read,ln);

        /* Clients can become paused while executing the queued commands,
         * so we need to check in between each command. If a pause was
         * executed, we still remove the command and it will get picked up
         * later when clients are unpaused and we re-queue all clients. */
        if (clientsArePaused()) continue;

        if (processPendingCommandsAndResetClient(c) == C_ERR) {
            /* If the client is no longer valid, we avoid
             * processing the client later. So we just go
             * to the next. */
            continue;
        }

        processInputBuffer(c);

        /* We may have pending replies if a thread readQueryFromClient() produced
         * replies and did not install a write handler (it can't).
         */
        if (!(c->flags & CLIENT_PENDING_WRITE) && clientHasPendingReplies(c))
            clientInstallWriteHandler(c);
    }

Part of processCommand in server.c, where the command is executed:

    /* Exec the command */
    if (c->flags & CLIENT_MULTI &&
        c->cmd->proc != execCommand && c->cmd->proc != discardCommand &&
        c->cmd->proc != multiCommand && c->cmd->proc != watchCommand)
    {
        queueMultiCommand(c);
        addReply(c,shared.queued);
    } else {
        call(c,CMD_CALL_FULL);
        c->woff = server.master_repl_offset;
        if (listLength(server.ready_keys))
            handleClientsBlockedOnKeys();
    }

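(7) Writing the reply to the output buffer. While a command executes, processCommand (directly, as in the MULTI branch above, or through the command implementation invoked by call()) calls addReply, which first calls prepareClientToWrite and then appends the reply to the client's output buffer. prepareClientToWrite calls clientInstallWriteHandler, which sets CLIENT_PENDING_WRITE and calls listAddNodeHead to add the client to the global clients_pending_write queue; from there the clients are handed out to the I/O threads again, this time for writing.

From here on, the write path works much like the read path.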
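The idea behind clientInstallWriteHandler() (queue the client, try to write directly before returning to the event loop, and only install a writable-event handler if the reply does not fit) rests on non-blocking writes. The POSIX sketch below shows that decision point: it writes until the socket would block and returns the number of bytes left over. The helper names are hypothetical; in Redis the write itself happens in writeToClient(), and handleClientsWithPendingWrites() registers sendReplyToClient as the write handler when data remains.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Write as much of buf as the non-blocking fd accepts right now.
 * Returns the number of bytes still unsent: 0 means the reply went out
 * in one go; non-zero means the caller has to wait for the socket to
 * become writable (in Redis: install a write handler). */
size_t write_until_eagain(int fd, const char *buf, size_t len) {
    size_t sent = 0;
    while (sent < len) {
        ssize_t n = write(fd, buf + sent, len - sent);
        if (n > 0) { sent += (size_t)n; continue; }
        if (n < 0 && errno == EINTR) continue;   /* interrupted: retry */
        break;  /* EAGAIN/EWOULDBLOCK (buffer full) or a real error */
    }
    return len - sent;
}

/* Helper for trying it out: a connected AF_UNIX socket pair whose
 * first end is non-blocking. Returns 0 on success, -1 on error. */
int make_nonblocking_pair(int sv[2]) {
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) != 0) return -1;
    int fl = fcntl(sv[0], F_GETFL, 0);
    if (fl < 0 || fcntl(sv[0], F_SETFL, fl | O_NONBLOCK) < 0) return -1;
    return 0;
}
```

A 5-byte "+OK\r\n" fits in the socket buffer and leaves 0 bytes, so no handler would be needed. A 16 MB reply to a peer that is not reading exceeds the socket buffer (typically a few hundred KB), so a non-zero remainder comes back and the server would wait for the socket to become writable.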

networking.c

/* This function puts the client in the queue of clients that should write
 * their output buffers to the socket. Note that it does not *yet* install
 * the write handler, to start clients are put in a queue of clients that need
 * to write, so we try to do that before returning in the event loop (see the
 * handleClientsWithPendingWrites() function).
 * If we fail and there is more data to write, compared to what the socket
 * buffers can hold, then we'll really install the handler. */
void clientInstallWriteHandler(client *c) {
    /* Schedule the client to write the output buffers to the socket only
     * if not already done and, for slaves, if the slave can actually receive
     * writes at this stage. */
    if (!(c->flags & CLIENT_PENDING_WRITE) &&
        (c->replstate == REPL_STATE_NONE ||
         (c->replstate == SLAVE_STATE_ONLINE && !c->repl_put_online_on_ack)))
    {
        /* Here instead of installing the write handler, we just flag the
         * client and put it into a list of clients that have something
         * to write to the socket. This way before re-entering the event
         * loop, we can try to directly write to the client sockets avoiding
         * a system call. We'll only really install the write handler if
         * we'll not be able to write the whole reply at once. */
        c->flags |= CLIENT_PENDING_WRITE;
        listAddNodeHead(server.clients_pending_write,c);
    }
}

/* This function is called every time we are going to transmit new data
 * to the client. The behavior is the following:
 *
 * If the client should receive new data (normal clients will) the function
 * returns C_OK, and make sure to install the write handler in our event
 * loop so that when the socket is writable new data gets written.
 *
 * If the client should not receive new data, because it is a fake client
 * (used to load AOF in memory), a master or because the setup of the write
 * handler failed, the function returns C_ERR.
 *
 * The function may return C_OK without actually installing the write
 * event handler in the following cases:
 *
 * 1) The event handler should already be installed since the output buffer
 *    already contains something.
 * 2) The client is a slave but not yet online, so we want to just accumulate
 *    writes in the buffer but not actually sending them yet.
 *
 * Typically gets called every time a reply is built, before adding more
 * data to the clients output buffers. If the function returns C_ERR no
 * data should be appended to the output buffers. */
int prepareClientToWrite(client *c) {
    /* If it's the Lua client we always return ok without installing any
     * handler since there is no socket at all. */
    if (c->flags & (CLIENT_LUA|CLIENT_MODULE)) return C_OK;

    /* If CLIENT_CLOSE_ASAP flag is set, we need not write anything. */
    if (c->flags & CLIENT_CLOSE_ASAP) return C_ERR;

    /* CLIENT REPLY OFF / SKIP handling: don't send replies. */
    if (c->flags & (CLIENT_REPLY_OFF|CLIENT_REPLY_SKIP)) return C_ERR;

    /* Masters don't receive replies, unless CLIENT_MASTER_FORCE_REPLY flag
     * is set. */
    if ((c->flags & CLIENT_MASTER) &&
        !(c->flags & CLIENT_MASTER_FORCE_REPLY)) return C_ERR;

    if (!c->conn) return C_ERR; /* Fake client for AOF loading. */

    /* Schedule the client to write the output buffers to the socket, unless
     * it should already be setup to do so (it has already pending data).
     *
     * If CLIENT_PENDING_READ is set, we're in an IO thread and should
     * not install a write handler. Instead, it will be done by
     * handleClientsWithPendingReadsUsingThreads() upon return.
     */
    if (!clientHasPendingReplies(c) && !(c->flags & CLIENT_PENDING_READ))
        clientInstallWriteHandler(c);

    /* Authorize the caller to queue in the output buffer of this client. */
    return C_OK;
}

/* -----------------------------------------------------------------------------
 * Higher level functions to queue data on the client output buffer.
 * The following functions are the ones that commands implementations will call.
 * -------------------------------------------------------------------------- */

/* Add the object 'obj' string representation to the client output buffer. */
void addReply(client *c, robj *obj) {
    if (prepareClientToWrite(c) != C_OK) return;

    if (sdsEncodedObject(obj)) {
        if (_addReplyToBuffer(c,obj->ptr,sdslen(obj->ptr)) != C_OK)
            _addReplyProtoToList(c,obj->ptr,sdslen(obj->ptr));
    } else if (obj->encoding == OBJ_ENCODING_INT) {
        /* For integer encoded strings we just convert it into a string
         * using our optimized function, and attach the resulting string
         * to the output buffer. */
        char buf[32];
        size_t len = ll2string(buf,sizeof(buf),(long)obj->ptr);
        if (_addReplyToBuffer(c,buf,len) != C_OK)
            _addReplyProtoToList(c,buf,len);
    } else {
        serverPanic("Wrong obj->encoding in addReply()");
    }
}

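Summary

- "Single-threaded Redis" means that commands are processed on a single thread.

- The I/O threads only handle I/O (reading and parsing requests, sending replies); the Redis main thread always executes the commands.

- The sections above walk through the I/O threading implementation in the source code.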
