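A Brief Introduction to Binder, the Android Inter-Process Communication (IPC) Mechanism, and a Study Plan

In Android, every application is composed of Activities and Services. A Service often runs in a process of its own, while Activities may share a process or run in different processes. How, then, do Activities and Services in different processes communicate with each other? That is the job of the Binder inter-process communication mechanism introduced in this article.

As we know, Android is built on the Linux kernel, and Linux inherits and remains compatible with the rich set of IPC mechanisms of Unix. The traditional ones are pipes (Pipe), signals (Signal), and tracing (Trace), which in early Unix were limited to communication between a parent and its children or between sibling processes. Named pipes (Named Pipe) were added later, lifting that restriction. To better support transaction processing in commercial applications, AT&T's Unix System V added three mechanisms collectively known as "System V IPC": message queues (Message), shared memory (Shared Memory), and semaphores (Semaphore). BSD Unix later extended these significantly with sockets (Socket). For more detail on these mechanisms, see the book 《Linux内核源代码情景分析》 mentioned in the article "Android学习启动篇".

Android, however, does not use any of these as its main IPC mechanism; it uses Binder instead. Was this choice driven by the weaker hardware and smaller memory of mobile devices? We cannot say for certain. Binder is not a brand-new mechanism invented for Android, either: it is based on OpenBinder. OpenBinder was first developed at Be Inc. and later adopted by Palm Inc. Its author, Dianne Hackborn, now works at Google on the Android platform.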

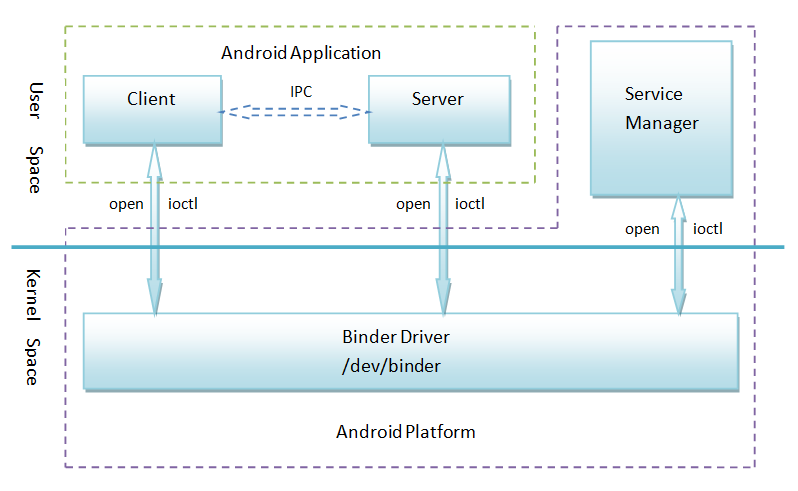

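As stated repeatedly above, Binder is an inter-process communication mechanism. It resembles distributed component architectures such as COM and CORBA; put simply, it provides remote procedure calls (RPC). Taken literally, the English word "binder" means something that binds things together, so what does it bind? Android's Binder mechanism consists of a set of components: the Client, the Server, the Service Manager, and the Binder driver. The Client, Server, and Service Manager run in user space, while the Binder driver runs in kernel space. Binder is the glue that holds these four components together. The Binder driver is the core component, the Service Manager provides auxiliary management, and the Client and Server communicate with each other on top of the infrastructure that the Binder driver and the Service Manager provide. The Service Manager and the Binder driver are already implemented by the Android platform; developers only need to implement their own Client and Server according to the conventions.

This is easier said than done. For beginners, Binder is one of the hardest parts of Android to understand, yet from the standpoint of both system and application development it is one of the most important, so it is well worth studying in depth. The best way to do that is to read the Binder source code. As the saying attributed to Linus Torvalds, the father of Linux, goes: RTFSC, Read The Fucking Source Code.

Although reading the source code is the best way to learn Binder, one should not go in unprepared: the Binder sources are dry and hard to follow, and some theoretical background helps a great deal. Plenty of material on Binder is available online, so it is not repeated in detail here. Two articles are strongly recommended:

"Android深入浅出之Binder机制" starts from concrete scenarios and explains in depth the relationship among the three user-space components: Client, Server, and Service Manager. "Android Binder设计与实现" describes in detail the data structures and design principles of the Binder driver in kernel space. Many thanks to both authors for such good learning material. To summarize, the relationship among the four components of Android's Binder mechanism (Client, Server, Service Manager, and Binder driver) is shown in the figure above and comes down to the following points: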

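1. The Client, Server, and Service Manager are implemented in user space; the Binder driver is implemented in kernel space.

2. The Binder driver and the Service Manager are already implemented by the Android platform; developers only need to implement their own Client and Server in user space.

3. The Binder driver exposes the device file /dev/binder for interaction with user space. The Client, Server, and Service Manager communicate with the driver through the file operations open and ioctl.

4. Inter-process communication between Client and Server is carried out indirectly through the Binder driver.

5. The Service Manager is a daemon process that manages Servers and lets Clients look up Server interfaces.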
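Point 3 says that user space talks to the driver through ioctl. Each ioctl request is identified by a command number, and the Binder command used later in this series, BINDER_SET_CONTEXT_MGR, is defined as _IOW('b', 7, int). The following is a minimal sketch of how such a number is packed, assuming the generic Linux bit layout (8 bits of command number, 8 bits of type, 14 bits of argument size, 2 bits of direction) and a 4-byte int. The sketch_* names are hypothetical and are not part of any kernel header; some architectures use a different layout.

```c
#include <assert.h>
#include <stdint.h>

/* Bit layout assumed here: nr (8 bits), type (8), size (14), direction (2). */
#define SKETCH_NRSHIFT    0
#define SKETCH_TYPESHIFT  8
#define SKETCH_SIZESHIFT  16
#define SKETCH_DIRSHIFT   30
#define SKETCH_DIR_WRITE  UINT32_C(1) /* user space passes data to the driver */

/* Pack a "write" command, the role that _IOW plays in kernel headers. */
static uint32_t sketch_iow(uint8_t type, uint8_t nr, uint32_t size)
{
    return (SKETCH_DIR_WRITE << SKETCH_DIRSHIFT) |
           ((size & UINT32_C(0x3FFF)) << SKETCH_SIZESHIFT) |
           ((uint32_t)type << SKETCH_TYPESHIFT) |
           ((uint32_t)nr << SKETCH_NRSHIFT);
}

/* Extract the driver "type" character, e.g. 'b' for Binder. */
static uint8_t sketch_ioc_type(uint32_t cmd)
{
    return (uint8_t)(cmd >> SKETCH_TYPESHIFT);
}

/* Extract the per-driver command number. */
static uint8_t sketch_ioc_nr(uint32_t cmd)
{
    return (uint8_t)(cmd >> SKETCH_NRSHIFT);
}

/* Extract the size of the argument the command carries. */
static uint32_t sketch_ioc_size(uint32_t cmd)
{
    return (cmd >> SKETCH_SIZESHIFT) & UINT32_C(0x3FFF);
}
```

Calling sketch_iow('b', 7, 4) packs the same fields as _IOW('b', 7, int) under these assumptions; a driver can recover the type and number from the value to dispatch the request.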

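At this point we have an intuitive picture of Binder, but it is still hard to follow the whole IPC path from top to bottom. We will therefore analyze the Binder source code through the following four scenarios:

1. How does the Service Manager become a daemon? That is, how does it tell the Binder driver that it is the context manager of the Binder mechanism?

2. How do the Server and Client obtain the Service Manager interface? That is, how is defaultServiceManager implemented?

4. How does the Service Manager serve Clients? That is, how is IServiceManager::getService implemented?

The next four articles analyze the Binder source code along these four scenarios, each covering the relevant code from user space down to kernel space. Why is there no scenario for how a Client and a Server communicate with each other? Because the Service Manager, besides being the daemon, also plays the role of a Server. Once we understand the third and fourth scenarios, we also understand how a Client and a Server communicate through the Binder driver.

To make the principles and implementation easier to describe, the next four articles present Binder in terms of its C/C++ implementation. Android applications, however, are written in Java, so a final article covers the Java interfaces to Binder in the application framework layer in detail:

5. Source code analysis of the Java interfaces to the Binder IPC mechanism in the application framework layer.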

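How Service Manager Becomes the Daemon of Binder, the Android Inter-Process Communication (IPC) Mechanism

The previous article, a brief introduction to Binder and a study plan, outlined the overall architecture of Binder, which consists of four components: the Client, the Server, the Service Manager, and the Binder driver. This article focuses on the Service Manager. It is the daemon of the whole Binder mechanism: it manages the Servers that developers create and lets Clients look up the remote interfaces of those Servers.

Since the Service Manager manages Servers and lets Clients look up their remote interfaces, it must communicate with both Servers and Clients. The Service Manager, the Client, and the Server each run in a separate process, so this communication is itself inter-process communication, and it too goes through Binder. While acting as the Binder daemon, the Service Manager is therefore also a Server, albeit a special one, as we will see below.

There is a lot of source code related to the Service Manager, and we will not go through every line. Instead we follow one thread, namely how the Service Manager becomes the daemon of the Binder mechanism, and analyze the relevant code step by step from user space down to kernel space. Before reading on, readers are encouraged to go through the two references recommended in the previous article, "Android深入浅出之Binder机制" and "Android Binder设计与实现", to become familiar with the concepts and data structures involved.

The user-space source code of the Service Manager is in the frameworks/base/cmds/servicemanager directory and consists mainly of three files: binder.h, binder.c, and service_manager.c. Its entry point is the main function in service_manager.c: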

int main(int argc, char **argv)

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);

if (binder_become_context_manager(bs)) {

LOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler);

return 0;

}

struct binder_state is defined in frameworks/base/cmds/servicemanager/binder.c:

struct binder_state

{

int fd;

void *mapped;

unsigned mapsize;

};

The macro BINDER_SERVICE_MANAGER is defined in frameworks/base/cmds/servicemanager/binder.h:

/* the one magic object */

#define BINDER_SERVICE_MANAGER ((void*) 0)

The main function first calls binder_open to open the Binder device file. This function is located in frameworks/base/cmds/servicemanager/binder.c:

struct binder_state *binder_open(unsigned mapsize)

{

struct binder_state *bs;

bs = malloc(sizeof(*bs));

if (!bs) {

errno = ENOMEM;

return 0;

}

bs->fd = open("/dev/binder", O_RDWR);

if (bs->fd < 0) {

fprintf(stderr,"binder: cannot open device (%s)\n",

strerror(errno));

goto fail_open;

}

bs->mapsize = mapsize;

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

/* TODO: check version */

return bs;

fail_map:

close(bs->fd);

fail_open:

free(bs);

return 0;

}

The device file /dev/binder opened here is created when the Binder driver is initialized. The driver's file operations table, its misc device descriptor, and its initialization function binder_init are shown below:

static struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

static struct miscdevice binder_miscdev = {

.minor = MISC_DYNAMIC_MINOR,

.name = "binder",

.fops = &binder_fops

};

static int __init binder_init(void)

{

int ret;

binder_proc_dir_entry_root = proc_mkdir("binder", NULL);

if (binder_proc_dir_entry_root)

binder_proc_dir_entry_proc = proc_mkdir("proc", binder_proc_dir_entry_root);

ret = misc_register(&binder_miscdev);

if (binder_proc_dir_entry_root) {

create_proc_read_entry("state", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_state, NULL);

create_proc_read_entry("stats", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_stats, NULL);

create_proc_read_entry("transactions", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_transactions, NULL);

create_proc_read_entry("transaction_log", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_transaction_log, &binder_transaction_log);

create_proc_read_entry("failed_transaction_log", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_transaction_log, &binder_transaction_log_failed);

}

return ret;

}

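device_initcall(binder_init);

The device file is created inside misc_register. Registration of misc devices was discussed in the article "Android日志系统驱动程序Logger源代码分析"; interested readers may want to look there. The rest of the logic mainly creates various Binder-related files under /proc for users to inspect. From the device's file operations table binder_fops, we can see that when the user-space binder_open function shown earlier executes the statement: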

bs->fd = open("/dev/binder", O_RDWR);

it enters the binder_open function of the Binder driver:

static int binder_open(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc;

if (binder_debug_mask & BINDER_DEBUG_OPEN_CLOSE)

printk(KERN_INFO "binder_open: %d:%d\n", current->group_leader->pid, current->pid);

proc = kzalloc(sizeof(*proc), GFP_KERNEL);

if (proc == NULL)

return -ENOMEM;

get_task_struct(current);

proc->tsk = current;

INIT_LIST_HEAD(&proc->todo);

init_waitqueue_head(&proc->wait);

proc->default_priority = task_nice(current);

mutex_lock(&binder_lock);

binder_stats.obj_created[BINDER_STAT_PROC]++;

hlist_add_head(&proc->proc_node, &binder_procs);

proc->pid = current->group_leader->pid;

INIT_LIST_HEAD(&proc->delivered_death);

filp->private_data = proc;

mutex_unlock(&binder_lock);

if (binder_proc_dir_entry_proc) {

char strbuf[11];

snprintf(strbuf, sizeof(strbuf), "%u", proc->pid);

remove_proc_entry(strbuf, binder_proc_dir_entry_proc);

create_proc_read_entry(strbuf, S_IRUGO, binder_proc_dir_entry_proc, binder_read_proc_proc, proc);

}

return 0;

}

This function creates a struct binder_proc to hold the context of the process that opened /dev/binder, stores it in filp->private_data, and adds it to the global list binder_procs:

static HLIST_HEAD(binder_procs);

struct binder_proc is defined as follows:

struct binder_proc {

struct hlist_node proc_node;

struct rb_root threads;

struct rb_root nodes;

struct rb_root refs_by_desc;

struct rb_root refs_by_node;

int pid;

struct vm_area_struct *vma;

struct task_struct *tsk;

struct files_struct *files;

struct hlist_node deferred_work_node;

int deferred_work;

void *buffer;

ptrdiff_t user_buffer_offset;

struct list_head buffers;

struct rb_root free_buffers;

struct rb_root allocated_buffers;

size_t free_async_space;

struct page **pages;

size_t buffer_size;

uint32_t buffer_free;

struct list_head todo;

wait_queue_head_t wait;

struct binder_stats stats;

struct list_head delivered_death;

int max_threads;

int requested_threads;

int requested_threads_started;

int ready_threads;

long default_priority;

};

This completes the opening of the device file /dev/binder. Next, the opened device file is memory-mapped with mmap:

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

This call corresponds to the binder_mmap function of the Binder driver:

static int binder_mmap(struct file *filp, struct vm_area_struct *vma)

{

int ret;

struct vm_struct *area;

struct binder_proc *proc = filp->private_data;

const char *failure_string;

struct binder_buffer *buffer;

if ((vma->vm_end - vma->vm_start) > SZ_4M)

vma->vm_end = vma->vm_start + SZ_4M;

if (binder_debug_mask & BINDER_DEBUG_OPEN_CLOSE)

printk(KERN_INFO

"binder_mmap: %d %lx-%lx (%ld K) vma %lx pagep %lx\n",

proc->pid, vma->vm_start, vma->vm_end,

(vma->vm_end - vma->vm_start) / SZ_1K, vma->vm_flags,

(unsigned long)pgprot_val(vma->vm_page_prot));

if (vma->vm_flags & FORBIDDEN_MMAP_FLAGS) {

ret = -EPERM;

failure_string = "bad vm_flags";

goto err_bad_arg;

}

vma->vm_flags = (vma->vm_flags | VM_DONTCOPY) & ~VM_MAYWRITE;

if (proc->buffer) {

ret = -EBUSY;

failure_string = "already mapped";

goto err_already_mapped;

}

area = get_vm_area(vma->vm_end - vma->vm_start, VM_IOREMAP);

if (area == NULL) {

ret = -ENOMEM;

failure_string = "get_vm_area";

goto err_get_vm_area_failed;

}

proc->buffer = area->addr;

proc->user_buffer_offset = vma->vm_start - (uintptr_t)proc->buffer;

#ifdef CONFIG_CPU_CACHE_VIPT

if (cache_is_vipt_aliasing()) {

while (CACHE_COLOUR((vma->vm_start ^ (uint32_t)proc->buffer))) {

printk(KERN_INFO "binder_mmap: %d %lx-%lx maps %p bad alignment\n", proc->pid, vma->vm_start, vma->vm_end, proc->buffer);

vma->vm_start += PAGE_SIZE;

}

}

#endif

proc->pages = kzalloc(sizeof(proc->pages[0]) * ((vma->vm_end - vma->vm_start) / PAGE_SIZE), GFP_KERNEL);

if (proc->pages == NULL) {

ret = -ENOMEM;

failure_string = "alloc page array";

goto err_alloc_pages_failed;

}

proc->buffer_size = vma->vm_end - vma->vm_start;

vma->vm_ops = &binder_vm_ops;

vma->vm_private_data = proc;

if (binder_update_page_range(proc, 1, proc->buffer, proc->buffer + PAGE_SIZE, vma)) {

ret = -ENOMEM;

failure_string = "alloc small buf";

goto err_alloc_small_buf_failed;

}

buffer = proc->buffer;

INIT_LIST_HEAD(&proc->buffers);

list_add(&buffer->entry, &proc->buffers);

buffer->free = 1;

binder_insert_free_buffer(proc, buffer);

proc->free_async_space = proc->buffer_size / 2;

barrier();

proc->files = get_files_struct(current);

proc->vma = vma;

/*printk(KERN_INFO "binder_mmap: %d %lx-%lx maps %p\n", proc->pid, vma->vm_start, vma->vm_end, proc->buffer);*/

return 0;

err_alloc_small_buf_failed:

kfree(proc->pages);

proc->pages = NULL;

err_alloc_pages_failed:

vfree(proc->buffer);

proc->buffer = NULL;

err_get_vm_area_failed:

err_already_mapped:

err_bad_arg:

printk(KERN_ERR "binder_mmap: %d %lx-%lx %s failed %d\n", proc->pid, vma->vm_start, vma->vm_end, failure_string, ret);

return ret;

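}

The function first retrieves, through filp->private_data, the struct binder_proc created when /dev/binder was opened. The mapping information is passed in the vma parameter. Note that vma has type struct vm_area_struct, which describes a contiguous region of virtual address space. Among the local variables there is a similar structure, struct vm_struct, which also describes a contiguous region of virtual address space. What is the difference? In Linux, the virtual addresses described by struct vm_area_struct are used by processes, while those described by struct vm_struct are used by the kernel; in both cases the underlying physical pages may be non-contiguous. On 32-bit x86 with the typical 3G/1G split, struct vm_area_struct covers the range 0 ~ 3G, and struct vm_struct covers (3G + 896M + 8M) ~ 4G. Why not 3G ~ 4G? Because 3G ~ (3G + 896M) is used to map contiguous physical pages: virtual addresses in this range have a simple linear relationship with physical addresses 0 ~ 896M. The range (3G + 896M) ~ (3G + 896M + 8M) is a guard region (any pointer into these 8M is invalid), so struct vm_struct uses (3G + 896M + 8M) ~ 4G to map non-contiguous physical pages. For more on Linux memory management, see chapter 8 of the book "Understanding the Linux Kernel", mentioned in the article "Android学习启动篇".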
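The address arithmetic in this paragraph can be checked with a few lines of C. The sketch below assumes the classic 32-bit x86 layout described above; the actual boundaries depend on the architecture and kernel configuration, and all sketch_* names are hypothetical rather than kernel identifiers.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed classic 32-bit x86 layout (3G/1G split); values are illustrative. */
#define SKETCH_PAGE_OFFSET  UINT32_C(0xC0000000)   /* 3G: start of kernel space */
#define SKETCH_LOWMEM_SIZE  (UINT32_C(896) << 20)  /* 896M linearly mapped      */
#define SKETCH_GUARD_SIZE   (UINT32_C(8) << 20)    /* 8M guard region           */

enum sketch_region { SKETCH_USER, SKETCH_LOWMEM, SKETCH_GUARD, SKETCH_VMALLOC };

/* First address available for vm_struct (non-contiguous) mappings. */
static uint32_t sketch_vmalloc_start(void)
{
    return SKETCH_PAGE_OFFSET + SKETCH_LOWMEM_SIZE + SKETCH_GUARD_SIZE;
}

/* In the linearly mapped region, physical = virtual - 3G. */
static uint32_t sketch_lowmem_virt_to_phys(uint32_t vaddr)
{
    assert(vaddr >= SKETCH_PAGE_OFFSET &&
           vaddr - SKETCH_PAGE_OFFSET < SKETCH_LOWMEM_SIZE);
    return vaddr - SKETCH_PAGE_OFFSET;
}

/* Classify a virtual address into the regions named in the text. */
static enum sketch_region sketch_classify(uint32_t vaddr)
{
    if (vaddr < SKETCH_PAGE_OFFSET)
        return SKETCH_USER;
    if (vaddr - SKETCH_PAGE_OFFSET < SKETCH_LOWMEM_SIZE)
        return SKETCH_LOWMEM;
    if (vaddr < sketch_vmalloc_start())
        return SKETCH_GUARD;
    return SKETCH_VMALLOC;
}
```

Under these assumptions the vm_struct range starts at 0xF8800000, which is 3G + 896M + 8M.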

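Why map the same physical page into both a process's virtual address space and the kernel's virtual address space? This is the essence of Binder IPC. With the same physical page mapped on one side into the process address space and on the other into the kernel address space, one memory copy between process and kernel can be avoided, which improves IPC efficiency. For example, suppose a Client wants to pass a block of data to a Server. The usual approach is for the Client's data to be copied from its process space into kernel space, and then copied again by the kernel into the Server's process space, so that the Server can access it. That takes two memory copies. With the approach above, the data is copied once from the Client's process space into kernel space, and the Server then shares it with the kernel. The whole transfer needs only one copy.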
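The difference between the two paths can be illustrated with a small user-space simulation that simply counts copies. This is only a sketch: in the driver, the kernel and the receiving process see the same physical page at two different virtual addresses, whereas here a single pointer stands in for both mappings. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define SKETCH_BUF_SIZE 64

/* Number of copies performed by the most recent send. */
static int sketch_copy_count;

static void sketch_copy(void *dst, const void *src, size_t n)
{
    memcpy(dst, src, n);
    sketch_copy_count++;
}

/* Conventional path: sender -> kernel buffer -> receiver buffer. */
static int sketch_send_two_copies(const char *msg, size_t n, char *receiver_buf)
{
    char kernel_buf[SKETCH_BUF_SIZE];

    if (n > SKETCH_BUF_SIZE)
        return -1;
    sketch_copy_count = 0;
    sketch_copy(kernel_buf, msg, n);          /* user space to kernel */
    sketch_copy(receiver_buf, kernel_buf, n); /* kernel to user space */
    return sketch_copy_count;
}

/* Binder-style path: copy once into a page that the receiver also maps. */
static int sketch_send_one_copy(const char *msg, size_t n,
                                char *shared_page, const char **receiver_view)
{
    if (n > SKETCH_BUF_SIZE)
        return -1;
    sketch_copy_count = 0;
    sketch_copy(shared_page, msg, n); /* the only copy */
    *receiver_view = shared_page;     /* receiver reads the same memory */
    return sketch_copy_count;
}
```

Sending the same message through both functions returns 2 for the conventional path and 1 for the shared-page path, which is the saving the paragraph describes.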

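With the principle of binder_mmap explained, its logic is easier to follow. First, though, a few members of struct binder_proc need explaining. buffer is a void* pointer to the start, in kernel space, of the memory being mapped. buffer_size, of type size_t, is the size of the mapped memory. pages is an array of struct page* entries, where struct page is the data structure that describes a physical page. user_buffer_offset, of type ptrdiff_t, is the process virtual address minus the kernel virtual address of the same page: if a physical page has kernel virtual address addr, its virtual address in the process is addr + user_buffer_offset.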
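The role of user_buffer_offset can be shown with a trimmed-down stand-in for the structure. The sketch below is an illustration, not driver code: struct sketch_proc and its helpers are hypothetical, and the addresses used in the usage note are made up.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the struct binder_proc fields discussed above. */
struct sketch_proc {
    uintptr_t buffer;             /* kernel virtual start of the mapping */
    size_t    buffer_size;        /* size of the mapping in bytes        */
    ptrdiff_t user_buffer_offset; /* user start minus kernel start       */
};

static void sketch_proc_init(struct sketch_proc *p, uintptr_t kernel_start,
                             uintptr_t user_start, size_t size)
{
    p->buffer = kernel_start;
    p->buffer_size = size;
    /* Computed by sign so that no out-of-range value is cast to ptrdiff_t. */
    p->user_buffer_offset = user_start >= kernel_start
        ? (ptrdiff_t)(user_start - kernel_start)
        : -(ptrdiff_t)(kernel_start - user_start);
}

/* Kernel address inside the mapping -> address seen by the process. */
static uintptr_t sketch_kernel_to_user(const struct sketch_proc *p, uintptr_t kaddr)
{
    assert(kaddr >= p->buffer && kaddr - p->buffer < p->buffer_size);
    return kaddr + (uintptr_t)p->user_buffer_offset;
}

/* Process address inside the mapping -> address seen by the kernel. */
static uintptr_t sketch_user_to_kernel(const struct sketch_proc *p, uintptr_t uaddr)
{
    uintptr_t kaddr = uaddr - (uintptr_t)p->user_buffer_offset;

    assert(kaddr >= p->buffer && kaddr - p->buffer < p->buffer_size);
    return kaddr;
}
```

With a kernel start of 0xF8800000 and a user start of 0xB6F00000, the offset is negative, and the kernel address 0xF8801010 translates to the process address 0xB6F01010. Because the offset is constant over the whole mapping, one addition is enough to convert any address in the buffer.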

Next, consider how the Binder driver manages the mapped address range buffer ~ (buffer + buffer_size). The range is divided into segments, and each segment is described by a struct binder_buffer:

struct binder_buffer {

struct list_head entry; /* free and allocated entries by addesss */

struct rb_node rb_node; /* free entry by size or allocated entry */

/* by address */

unsigned free : 1;

unsigned allow_user_free : 1;

unsigned async_transaction : 1;

unsigned debug_id : 29;

struct binder_transaction *transaction;

struct binder_node *target_node;

size_t data_size;

size_t offsets_size;

uint8_t data[0];

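};

Now we can finally return to binder_mmap. It starts with sanity checks on its arguments. For example, the size of the mapping is capped at SZ_4M, that is, 4M; the main function in service_manager.c passes 128 * 1024 bytes, or 128K, so this check is no obstacle. After the sanity checks, the function calls get_vm_area to obtain a free vm_struct range and initializes the buffer, user_buffer_offset, pages, and buffer_size members of proc. It then calls binder_update_page_range to allocate one physical page for the virtual address range proc->buffer ~ proc->buffer + PAGE_SIZE. This range is described by a single binder_buffer, which is inserted into both the proc->buffers list and the proc->free_buffers red-black tree. Finally, the free_async_space, files, and vma members of proc are initialized.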
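The sizes involved can be worked out with a short calculation. The sketch below mirrors only the arithmetic in binder_mmap (the 4M cap, the length of the pages array, and free_async_space as half of the mapping) and assumes 4K pages; struct sketch_mapping and sketch_plan_mapping are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_PAGE_SIZE ((size_t)4096)            /* assumed 4K pages */
#define SKETCH_SZ_4M     ((size_t)4 * 1024 * 1024)

struct sketch_mapping {
    size_t buffer_size;      /* bytes actually mapped              */
    size_t page_slots;       /* number of entries in the pages array */
    size_t free_async_space; /* half of the mapped size            */
};

/* Derive the values binder_mmap would record for a requested size. */
static struct sketch_mapping sketch_plan_mapping(size_t requested)
{
    struct sketch_mapping m;

    /* Requests above 4M are truncated rather than rejected. */
    m.buffer_size = requested > SKETCH_SZ_4M ? SKETCH_SZ_4M : requested;
    m.page_slots = m.buffer_size / SKETCH_PAGE_SIZE;
    m.free_async_space = m.buffer_size / 2;
    return m;
}
```

For the 128K request made by the Service Manager this gives 32 page slots and 64K of asynchronous space, while only the first page is backed by physical memory at mmap time.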

Let us now step into binder_update_page_range to see how the Binder driver maps one physical page into both kernel space and process space:

static int binder_update_page_range(struct binder_proc *proc, int allocate,

void *start, void *end, struct vm_area_struct *vma)

{

void *page_addr;

unsigned long user_page_addr;

struct vm_struct tmp_area;

struct page **page;

struct mm_struct *mm;

if (binder_debug_mask & BINDER_DEBUG_BUFFER_ALLOC)

printk(KERN_INFO "binder: %d: %s pages %p-%p\n",

proc->pid, allocate ? "allocate" : "free", start, end);

if (end <= start)

return 0;

if (vma)

mm = NULL;

else

mm = get_task_mm(proc->tsk);

if (mm) {

down_write(&mm->mmap_sem);

vma = proc->vma;

}

if (allocate == 0)

goto free_range;

if (vma == NULL) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed to "

"map pages in userspace, no vma\n", proc->pid);

goto err_no_vma;

}

for (page_addr = start; page_addr < end; page_addr += PAGE_SIZE) {

int ret;

struct page **page_array_ptr;

page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

BUG_ON(*page);

*page = alloc_page(GFP_KERNEL | __GFP_ZERO);

if (*page == NULL) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"for page at %p\n", proc->pid, page_addr);

goto err_alloc_page_failed;

}

tmp_area.addr = page_addr;

tmp_area.size = PAGE_SIZE + PAGE_SIZE /* guard page? */;

page_array_ptr = page;

ret = map_vm_area(&tmp_area, PAGE_KERNEL, &page_array_ptr);

if (ret) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"to map page at %p in kernel\n",

proc->pid, page_addr);

goto err_map_kernel_failed;

}

user_page_addr =

(uintptr_t)page_addr + proc->user_buffer_offset;

ret = vm_insert_page(vma, user_page_addr, page[0]);

if (ret) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"to map page at %lx in userspace\n",

proc->pid, user_page_addr);

goto err_vm_insert_page_failed;

}

/* vm_insert_page does not seem to increment the refcount */

}

if (mm) {

up_write(&mm->mmap_sem);

mmput(mm);

}

return 0;

free_range:

for (page_addr = end - PAGE_SIZE; page_addr >= start;

page_addr -= PAGE_SIZE) {

page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

if (vma)

zap_page_range(vma, (uintptr_t)page_addr +

proc->user_buffer_offset, PAGE_SIZE, NULL);

err_vm_insert_page_failed:

unmap_kernel_range((unsigned long)page_addr, PAGE_SIZE);

err_map_kernel_failed:

__free_page(*page);

*page = NULL;

err_alloc_page_failed:

;

}

err_no_vma:

if (mm) {

up_write(&mm->mmap_sem);

mmput(mm);

}

return -ENOMEM;

}

The function can both allocate and free physical pages, depending on the allocate argument. For allocation, the key part is the for loop over the range start ~ end:

for (page_addr = start; page_addr < end; page_addr += PAGE_SIZE) {

int ret;

struct page **page_array_ptr;

page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

BUG_ON(*page);

*page = alloc_page(GFP_KERNEL | __GFP_ZERO);

if (*page == NULL) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"for page at %p\n", proc->pid, page_addr);

goto err_alloc_page_failed;

}

tmp_area.addr = page_addr;

tmp_area.size = PAGE_SIZE + PAGE_SIZE /* guard page? */;

page_array_ptr = page;

ret = map_vm_area(&tmp_area, PAGE_KERNEL, &page_array_ptr);

if (ret) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"to map page at %p in kernel\n",

proc->pid, page_addr);

goto err_map_kernel_failed;

}

user_page_addr =

(uintptr_t)page_addr + proc->user_buffer_offset;

ret = vm_insert_page(vma, user_page_addr, page[0]);

if (ret) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"to map page at %lx in userspace\n",

proc->pid, user_page_addr);

goto err_vm_insert_page_failed;

}

/* vm_insert_page does not seem to increment the refcount */

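}

Each iteration calls alloc_page to allocate one physical page, maps it into kernel space at page_addr with map_vm_area, computes the corresponding process address as page_addr + proc->user_buffer_offset, and inserts the page into the process address space described by vma with vm_insert_page.

This completes the description of the binder_open function in frameworks/base/cmds/servicemanager/binder.c. Back in the main function in frameworks/base/cmds/servicemanager/service_manager.c, the next step is to call binder_become_context_manager, which tells the Binder driver that this process is the context manager of the Binder mechanism, that is, the daemon. binder_become_context_manager is located in frameworks/base/cmds/servicemanager/binder.c: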

int binder_become_context_manager(struct binder_state *bs)

{

return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);

}

BINDER_SET_CONTEXT_MGR is defined as:

#define BINDER_SET_CONTEXT_MGR _IOW('b', 7, int)

In the driver, this ioctl call is handled by binder_ioctl. Only the BINDER_SET_CONTEXT_MGR branch is shown:

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

mutex_lock(&binder_lock);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

......

case BINDER_SET_CONTEXT_MGR:

if (binder_context_mgr_node != NULL) {

printk(KERN_ERR "binder: BINDER_SET_CONTEXT_MGR already set\n");

ret = -EBUSY;

goto err;

}

if (binder_context_mgr_uid != -1) {

if (binder_context_mgr_uid != current->cred->euid) {

printk(KERN_ERR "binder: BINDER_SET_"

"CONTEXT_MGR bad uid %d != %d\n",

current->cred->euid,

binder_context_mgr_uid);

ret = -EPERM;

goto err;

}

} else

binder_context_mgr_uid = current->cred->euid;

binder_context_mgr_node = binder_new_node(proc, NULL, NULL);

if (binder_context_mgr_node == NULL) {

ret = -ENOMEM;

goto err;

}

binder_context_mgr_node->local_weak_refs++;

binder_context_mgr_node->local_strong_refs++;

binder_context_mgr_node->has_strong_ref = 1;

binder_context_mgr_node->has_weak_ref = 1;

break;

......

default:

ret = -EINVAL;

goto err;

}

ret = 0;

err:

if (thread)

thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

mutex_unlock(&binder_lock);

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

printk(KERN_INFO "binder: %d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

return ret;

}

binder_ioctl relies on two data structures. The first is struct binder_thread, which represents a thread of the calling process:

struct binder_thread {

struct binder_proc *proc;

struct rb_node rb_node;

int pid;

int looper;

struct binder_transaction *transaction_stack;

struct list_head todo;

uint32_t return_error; /* Write failed, return error code in read buf */

uint32_t return_error2; /* Write failed, return error code in read */

/* buffer. Used when sending a reply to a dead process that */

/* we are also waiting on */

wait_queue_head_t wait;

struct binder_stats stats;

};

The looper member records the thread's state as a combination of the following flags:

enum {

BINDER_LOOPER_STATE_REGISTERED = 0x01,

BINDER_LOOPER_STATE_ENTERED = 0x02,

BINDER_LOOPER_STATE_EXITED = 0x04,

BINDER_LOOPER_STATE_INVALID = 0x08,

BINDER_LOOPER_STATE_WAITING = 0x10,

BINDER_LOOPER_STATE_NEED_RETURN = 0x20

};

The other data structure is struct binder_node, which represents a binder entity:

struct binder_node {

int debug_id;

struct binder_work work;

union {

struct rb_node rb_node;

struct hlist_node dead_node;

};

struct binder_proc *proc;

struct hlist_head refs;

int internal_strong_refs;

int local_weak_refs;

int local_strong_refs;

void __user *ptr;

void __user *cookie;

unsigned has_strong_ref : 1;

unsigned pending_strong_ref : 1;

unsigned has_weak_ref : 1;

unsigned pending_weak_ref : 1;

unsigned has_async_transaction : 1;

unsigned accept_fds : 1;

int min_priority : 8;

struct list_head async_todo;

};

Now back to binder_ioctl. It first obtains the proc variable from filp->private_data, just as binder_mmap does. It then calls binder_get_thread to obtain the thread information. Let us look at this function:

static struct binder_thread *binder_get_thread(struct binder_proc *proc)

{

struct binder_thread *thread = NULL;

struct rb_node *parent = NULL;

struct rb_node **p = &proc->threads.rb_node;

while (*p) {

parent = *p;

thread = rb_entry(parent, struct binder_thread, rb_node);

if (current->pid < thread->pid)

p = &(*p)->rb_left;

else if (current->pid > thread->pid)

p = &(*p)->rb_right;

else

break;

}

if (*p == NULL) {

thread = kzalloc(sizeof(*thread), GFP_KERNEL);

if (thread == NULL)

return NULL;

binder_stats.obj_created[BINDER_STAT_THREAD]++;

thread->proc = proc;

thread->pid = current->pid;

init_waitqueue_head(&thread->wait);

INIT_LIST_HEAD(&thread->todo);

rb_link_node(&thread->rb_node, parent, p);

rb_insert_color(&thread->rb_node, &proc->threads);

thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;

thread->return_error = BR_OK;

thread->return_error2 = BR_OK;

}

return thread;

}

Back in binder_ioctl, further down we meet two global variables, binder_context_mgr_node and binder_context_mgr_uid, defined as follows:

static struct binder_node *binder_context_mgr_node;

static uid_t binder_context_mgr_uid = -1;

Assuming no context manager has registered yet, binder_context_mgr_node is NULL and binder_context_mgr_uid is -1. binder_ioctl therefore records current->cred->euid in binder_context_mgr_uid and then calls binder_new_node to create the binder node:

static struct binder_node *

binder_new_node(struct binder_proc *proc, void __user *ptr, void __user *cookie)

{

struct rb_node **p = &proc->nodes.rb_node;

struct rb_node *parent = NULL;

struct binder_node *node;

while (*p) {

parent = *p;

node = rb_entry(parent, struct binder_node, rb_node);

if (ptr < node->ptr)

p = &(*p)->rb_left;

else if (ptr > node->ptr)

p = &(*p)->rb_right;

else

return NULL;

}

node = kzalloc(sizeof(*node), GFP_KERNEL);

if (node == NULL)

return NULL;

binder_stats.obj_created[BINDER_STAT_NODE]++;

rb_link_node(&node->rb_node, parent, p);

rb_insert_color(&node->rb_node, &proc->nodes);

node->debug_id = ++binder_last_id;

node->proc = proc;

node->ptr = ptr;

node->cookie = cookie;

node->work.type = BINDER_WORK_NODE;

INIT_LIST_HEAD(&node->work.entry);

INIT_LIST_HEAD(&node->async_todo);

if (binder_debug_mask & BINDER_DEBUG_INTERNAL_REFS)

printk(KERN_INFO "binder: %d:%d node %d u%p c%p created\n",

proc->pid, current->pid, node->debug_id,

node->ptr, node->cookie);

return node;

}

After binder_new_node returns to binder_ioctl, the pointer to the newly created binder_node is saved in binder_context_mgr_node, and the reference counts of binder_context_mgr_node are then initialized.
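
To make the order of checks in the BINDER_SET_CONTEXT_MGR branch easier to follow, here is a minimal user-space sketch. It is not driver code: struct node, context_mgr_node, context_mgr_uid and set_context_mgr are hypothetical stand-ins for binder_node, the two driver globals and the ioctl branch, and calloc stands in for binder_new_node.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical, simplified stand-in for the driver's struct binder_node. */
struct node {
    int local_weak_refs;
    int local_strong_refs;
    unsigned has_strong_ref : 1;
    unsigned has_weak_ref : 1;
};

/* Stand-ins for the driver globals; -1 means "no manager uid recorded yet". */
static struct node *context_mgr_node = NULL;
static long context_mgr_uid = -1;

/* Follows the order of checks in the BINDER_SET_CONTEXT_MGR branch.
 * Returns 0 on success or a negative errno value. */
int set_context_mgr(long caller_euid)
{
    /* -1 is reserved as the "unset" sentinel, so a real euid must be >= 0. */
    assert(caller_euid >= 0);

    if (context_mgr_node != NULL)
        return -EBUSY;              /* a manager is already registered */

    if (context_mgr_uid != -1) {
        if (context_mgr_uid != caller_euid)
            return -EPERM;          /* only the recorded uid may register */
    } else {
        context_mgr_uid = caller_euid;
    }

    /* calloc zero-fills the node, like kzalloc in binder_new_node. */
    context_mgr_node = calloc(1, sizeof(*context_mgr_node));
    if (context_mgr_node == NULL)
        return -ENOMEM;

    /* Initialize the reference counts, as binder_ioctl does after
     * binder_new_node returns. */
    context_mgr_node->local_weak_refs++;
    context_mgr_node->local_strong_refs++;
    context_mgr_node->has_strong_ref = 1;
    context_mgr_node->has_weak_ref = 1;
    return 0;
}
```

In this sketch, calling set_context_mgr(1000) twice in a row returns 0 and then -EBUSY. If the node is later released, a caller with a different euid gets -EPERM, because the recorded uid is kept.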

This completes the BINDER_SET_CONTEXT_MGR command. Before binder_ioctl returns, it executes the following statement:

if (thread)

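thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

Recall that binder_get_thread set BINDER_LOOPER_STATE_NEED_RETURN on a freshly zeroed thread, so at that point thread->looper = BINDER_LOOPER_STATE_NEED_RETURN. After this statement executes, thread->looper = 0.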
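
The way looper is updated is ordinary bit-flag arithmetic, which the following small sketch replays in user space. The flag values are copied from the enum shown earlier; the shortened LOOPER_* names and the helper functions are hypothetical and do not exist in the driver.

```c
#include <assert.h>

/* Flag values copied from the driver's BINDER_LOOPER_STATE_* enum. */
enum {
    LOOPER_REGISTERED  = 0x01,
    LOOPER_ENTERED     = 0x02,
    LOOPER_EXITED      = 0x04,
    LOOPER_INVALID     = 0x08,
    LOOPER_WAITING     = 0x10,
    LOOPER_NEED_RETURN = 0x20
};

/* Set one state bit without touching the others. */
int looper_set(int looper, int flag)   { return looper | flag; }

/* Clear one state bit without touching the others. */
int looper_clear(int looper, int flag) { return looper & ~flag; }

/* Non-zero when the given bit is set. */
int looper_has(int looper, int flag)   { return (looper & flag) != 0; }

/* Replays the sequence from the text: binder_get_thread sets NEED_RETURN
 * on a zeroed thread, and the end of binder_ioctl clears it again.
 * Returns the final looper value. */
int looper_walkthrough(void)
{
    int looper = 0;                                     /* zeroed by kzalloc */

    looper = looper_set(looper, LOOPER_NEED_RETURN);    /* binder_get_thread */
    assert(looper_has(looper, LOOPER_NEED_RETURN));

    looper = looper_clear(looper, LOOPER_NEED_RETURN);  /* end of binder_ioctl */
    return looper;
}
```

Because each state occupies its own bit, clearing NEED_RETURN leaves any other state, such as LOOPER_ENTERED, untouched.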

Back in the main function in frameworks/base/cmds/servicemanager/service_manager.c, the next step is to call binder_loop, which enters a loop and waits for requests from Clients. binder_loop is defined in frameworks/base/cmds/servicemanager/binder.c:

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

unsigned readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

LOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);

if (res == 0) {

LOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

LOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

Here we need to introduce another ioctl command code of the device file /dev/binder, BINDER_WRITE_READ. First, its definition:

#define BINDER_WRITE_READ _IOWR('b', 1, struct binder_write_read)

The argument of this command is a struct binder_write_read:

struct binder_write_read {

signed long write_size; /* bytes to write */

signed long write_consumed; /* bytes consumed by driver */

unsigned long write_buffer;

signed long read_size; /* bytes to read */

signed long read_consumed; /* bytes consumed by driver */

unsigned long read_buffer;

};

For transaction commands, the payload carried in write_buffer and read_buffer is a struct binder_transaction_data:

struct binder_transaction_data {

/* The first two are only used for bcTRANSACTION and brTRANSACTION,

* identifying the target and contents of the transaction.

*/

union {

size_t handle; /* target descriptor of command transaction */

void *ptr; /* target descriptor of return transaction */

} target;

void *cookie; /* target object cookie */

unsigned int code; /* transaction command */

/* General information about the transaction. */

unsigned int flags;

pid_t sender_pid;

uid_t sender_euid;

size_t data_size; /* number of bytes of data */

size_t offsets_size; /* number of bytes of offsets */

/* If this transaction is inline, the data immediately

* follows here; otherwise, it ends with a pointer to

* the data buffer.

*/

union {

struct {

/* transaction data */

const void *buffer;

/* offsets from buffer to flat_binder_object structs */

const void *offsets;

} ptr;

uint8_t buf[8];

} data;

};

The flags member holds the transaction flags:

enum transaction_flags {

TF_ONE_WAY = 0x01, /* this is a one-way call: async, no return */

TF_ROOT_OBJECT = 0x04, /* contents are the component's root object */

TF_STATUS_CODE = 0x08, /* contents are a 32-bit status code */

TF_ACCEPT_FDS = 0x10, /* allow replies with file descriptors */

};

sender_pid and sender_euid are the pid and euid of the sending process.

data_size is the size of the data.ptr.buffer buffer, and offsets_size is the size of the data.ptr.offsets buffer. The data member needs some explanation. The payload that a command actually transfers is stored in the data.ptr.buffer buffer; the members before it only describe that payload.

The buffer holds two kinds of data. Ordinary data is of no interest to the Binder driver. Binder entities and Binder references, however, require the driver to step in. Why? Suppose process A passes a Binder entity or Binder reference to process B. The driver must then maintain the reference count of that entity or reference, so that A cannot destroy the entity while B is still using it, which would crash B. So when the transferred data contains Binder entities or Binder references, the driver must be told exactly where they are so that it can maintain them.

This is the purpose of data.ptr.offsets: it gives the offset, within the data.ptr.buffer buffer, of every Binder entity or reference. Each Binder entity or reference is represented by a struct flat_binder_object:

/*

* This is the flattened representation of a Binder object for transfer

* between processes. The 'offsets' supplied as part of a binder transaction

* contains offsets into the data where these structures occur. The Binder

* driver takes care of re-writing the structure type and data as it moves

* between processes.

*/

struct flat_binder_object {

/* 8 bytes for large_flat_header. */

unsigned long type;

unsigned long flags;

/* 8 bytes of data. */

union {

void *binder; /* local object */

signed long handle; /* remote object */

};

/* extra data associated with local object */

void *cookie;

};

The type member takes one of the following values:

enum {

BINDER_TYPE_BINDER = B_PACK_CHARS('s', 'b', '*', B_TYPE_LARGE),

BINDER_TYPE_WEAK_BINDER = B_PACK_CHARS('w', 'b', '*', B_TYPE_LARGE),

BINDER_TYPE_HANDLE = B_PACK_CHARS('s', 'h', '*', B_TYPE_LARGE),

BINDER_TYPE_WEAK_HANDLE = B_PACK_CHARS('w', 'h', '*', B_TYPE_LARGE),

BINDER_TYPE_FD = B_PACK_CHARS('f', 'd', '*', B_TYPE_LARGE),

};

For the exact meaning of type and flags, see the article Android Binder设计与实现 (Android Binder Design and Implementation).

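Finally, the binder member is used when the object is a Binder entity, and the handle member when it is a Binder reference. cookie is meaningful only for a Binder entity; it carries extra data that the owning process interprets itself.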
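
The role of the offsets array can be illustrated with a small user-space sketch. It is only an illustration under stated assumptions: struct flat_obj is a hypothetical fixed-width stand-in for struct flat_binder_object, OBJ_BINDER and OBJ_HANDLE are made-up type values, and count_objects is not a driver function. It shows how a consumer can locate embedded objects in an otherwise opaque byte buffer by following the offsets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, fixed-width stand-in for struct flat_binder_object. */
struct flat_obj {
    uint32_t type;     /* OBJ_BINDER or OBJ_HANDLE below */
    uint32_t flags;
    uint32_t handle;   /* local pointer or remote handle in the real struct */
    uint32_t cookie;
};

enum { OBJ_BINDER = 1, OBJ_HANDLE = 2 };   /* illustrative values only */

/* Walk the offsets array and count embedded objects of the given type.
 * offsets_size is in bytes, like the field of the same name.
 * Returns -1 if an offset would read past the end of the data buffer. */
int count_objects(const uint8_t *data, size_t data_size,
                  const size_t *offsets, size_t offsets_size,
                  uint32_t type)
{
    size_t n = offsets_size / sizeof(offsets[0]);
    int count = 0;

    assert(data != NULL);
    for (size_t i = 0; i < n; i++) {
        struct flat_obj obj;

        if (offsets[i] > data_size ||
            data_size - offsets[i] < sizeof(obj))
            return -1;
        /* memcpy avoids unaligned access into the byte buffer. */
        memcpy(&obj, data + offsets[i], sizeof(obj));
        if (obj.type == type)
            count++;
    }
    return count;
}

/* Build a payload of [4 plain bytes][object][4 plain bytes][object]
 * and return how many OBJ_HANDLE objects the offsets array locates. */
int demo_count_handles(void)
{
    uint8_t data[4 + sizeof(struct flat_obj) + 4 + sizeof(struct flat_obj)];
    struct flat_obj a = { OBJ_BINDER, 0, 0, 0 };
    struct flat_obj b = { OBJ_HANDLE, 0, 7, 0 };
    size_t offsets[2] = { 4, 4 + sizeof(a) + 4 };

    memset(data, 0xAB, sizeof(data));           /* "ordinary" data */
    memcpy(data + offsets[0], &a, sizeof(a));
    memcpy(data + offsets[1], &b, sizeof(b));
    return count_objects(data, sizeof(data), offsets, sizeof(offsets),
                         OBJ_HANDLE);
}
```

The bytes between the objects are never interpreted; only the positions named in offsets are read as objects, which is the division of labour the text describes between ordinary data and Binder objects.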

With the data structures covered, we return to binder_loop, which first executes the BC_ENTER_LOOPER command:

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));

This takes us into the binder_write function:

int binder_write(struct binder_state *bs, void *data, unsigned len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (unsigned) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

}

The ioctl call enters binder_ioctl in the Binder driver again. This time the relevant branch is BINDER_WRITE_READ:

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

mutex_lock(&binder_lock);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

if (binder_debug_mask & BINDER_DEBUG_READ_WRITE)

printk(KERN_INFO "binder: %d:%d write %ld at %08lx, read %ld at %08lx\n",

proc->pid, thread->pid, bwr.write_size, bwr.write_buffer, bwr.read_size, bwr.read_buffer);

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (binder_debug_mask & BINDER_DEBUG_READ_WRITE)

printk(KERN_INFO "binder: %d:%d wrote %ld of %ld, read return %ld of %ld\n",

proc->pid, thread->pid, bwr.write_consumed, bwr.write_size, bwr.read_consumed, bwr.read_size);

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

}

......

default:

ret = -EINVAL;

goto err;

}

ret = 0;

err:

if (thread)

thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

mutex_unlock(&binder_lock);

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

printk(KERN_INFO "binder: %d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

return ret;

}

First, the statement copy_from_user(&bwr, ubuf, sizeof(bwr)) copies the argument passed in from user space into the local struct binder_write_read variable bwr. Here bwr.write_size equals 4, the sizeof(unsigned) that binder_write passed in, so binder_thread_write is called. We look only at the code related to BC_ENTER_LOOPER:

int

binder_thread_write(struct binder_proc *proc, struct binder_thread *thread,

void __user *buffer, int size, signed long *consumed)

{

uint32_t cmd;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

binder_stats.bc[_IOC_NR(cmd)]++;

proc->stats.bc[_IOC_NR(cmd)]++;

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) {

......

case BC_ENTER_LOOPER:

if (binder_debug_mask & BINDER_DEBUG_THREADS)

printk(KERN_INFO "binder: %d:%d BC_ENTER_LOOPER\n",

proc->pid, thread->pid);

if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {

thread->looper |= BINDER_LOOPER_STATE_INVALID;

binder_user_error("binder: %d:%d ERROR:"

" BC_ENTER_LOOPER called after "

"BC_REGISTER_LOOPER\n",

proc->pid, thread->pid);

}

thread->looper |= BINDER_LOOPER_STATE_ENTERED;

break;

......

default:

printk(KERN_ERR "binder: %d:%d unknown command %d\n", proc->pid, thread->pid, cmd);

return -EINVAL;

}

*consumed = ptr - buffer;

}

return 0;

}

Back in binder_ioctl, since bwr.read_size == 0, binder_thread_read is not called, and binder_ioctl has finished its work.
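
The command loop of binder_thread_write can be mimicked in user space to see how the consumed counter and the looper state evolve. This is a sketch under assumptions, not the driver's code: thread_write, the CMD_* codes and the STATE_* names are hypothetical, memcpy stands in for get_user, and only two commands are modelled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { CMD_ENTER_LOOPER = 1, CMD_EXIT_LOOPER = 2 };  /* illustrative codes */
enum { STATE_ENTERED = 0x02, STATE_EXITED = 0x04 };  /* looper bits from the enum */

/* Consume 32-bit commands from buf, starting at *consumed, and update
 * *looper. Returns 0 on success and -1 on an unknown command. *consumed
 * only counts the bytes of commands that were fully processed. */
int thread_write(const uint8_t *buf, size_t size, size_t *consumed, int *looper)
{
    assert(*consumed <= size);

    const uint8_t *ptr = buf + *consumed;
    const uint8_t *end = buf + size;

    while ((size_t)(end - ptr) >= sizeof(uint32_t)) {
        uint32_t cmd;

        memcpy(&cmd, ptr, sizeof(cmd));   /* user-space stand-in for get_user */
        ptr += sizeof(cmd);

        switch (cmd) {
        case CMD_ENTER_LOOPER:
            *looper |= STATE_ENTERED;
            break;
        case CMD_EXIT_LOOPER:
            *looper |= STATE_EXITED;
            break;
        default:
            return -1;                    /* unknown command */
        }
        *consumed = (size_t)(ptr - buf);
    }
    return 0;
}

/* Mirrors binder_loop's first step: write a single enter-looper command
 * of sizeof(uint32_t) bytes. Returns the resulting looper value. */
int demo_enter_looper(void)
{
    uint32_t readbuf[32];
    size_t consumed = 0;
    int looper = 0;

    readbuf[0] = CMD_ENTER_LOOPER;
    int ret = thread_write((const uint8_t *)readbuf, sizeof(uint32_t),
                           &consumed, &looper);
    assert(ret == 0 && consumed == sizeof(uint32_t));
    return looper;
}
```

As in the driver, the consumed counter is advanced only after a command has been handled, so on an error the caller can tell how far processing got.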

Back in binder_loop, execution enters the for loop:

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

LOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);

if (res == 0) {

LOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

LOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

Each iteration issues another ioctl call. Note the values of the bwr members on the first iteration:

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

We enter binder_ioctl once more:

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

mutex_lock(&binder_lock);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

if (binder_debug_mask & BINDER_DEBUG_READ_WRITE)

printk(KERN_INFO "binder: %d:%d write %ld at %08lx, read %ld at %08lx\n",

proc->pid, thread->pid, bwr.write_size, bwr.write_buffer, bwr.read_size, bwr.read_buffer);

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (binder_debug_mask & BINDER_DEBUG_READ_WRITE)

printk(KERN_INFO "binder: %d:%d wrote %ld of %ld, read return %ld of %ld\n",

proc->pid, thread->pid, bwr.write_consumed, bwr.write_size, bwr.read_consumed, bwr.read_size);

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

}

......

default:

ret = -EINVAL;

goto err;

}

ret = 0;

err:

if (thread)

thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

mutex_unlock(&binder_lock);

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

printk(KERN_INFO "binder: %d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

return ret;

}

This time bwr.write_size is 0, so binder_thread_write is skipped, while bwr.read_size is sizeof(readbuf), which is greater than 0, so binder_thread_read is called:

static int
binder_thread_read(struct binder_proc *proc, struct binder_thread *thread,
	void __user *buffer, int size, signed long *consumed, int non_block)
{
	void __user *ptr = buffer + *consumed;
	void __user *end = buffer + size;

	int ret = 0;
	int wait_for_proc_work;

	/* On a fresh read, start the user buffer with a BR_NOOP command. */
	if (*consumed == 0) {
		if (put_user(BR_NOOP, (uint32_t __user *)ptr))
			return -EFAULT;
		ptr += sizeof(uint32_t);
	}

retry:
	/* With no transaction in progress and an empty thread todo list,
	 * this thread waits for work queued on the whole process. */
	wait_for_proc_work = thread->transaction_stack == NULL && list_empty(&thread->todo);

	/* Report pending error codes to user space first. */
	if (thread->return_error != BR_OK && ptr < end) {
		if (thread->return_error2 != BR_OK) {
			if (put_user(thread->return_error2, (uint32_t __user *)ptr))
				return -EFAULT;
			ptr += sizeof(uint32_t);
			if (ptr == end)
				goto done;
			thread->return_error2 = BR_OK;
		}
		if (put_user(thread->return_error, (uint32_t __user *)ptr))
			return -EFAULT;
		ptr += sizeof(uint32_t);
		thread->return_error = BR_OK;
		goto done;
	}

	thread->looper |= BINDER_LOOPER_STATE_WAITING;
	if (wait_for_proc_work)
		proc->ready_threads++;
	mutex_unlock(&binder_lock);
	if (wait_for_proc_work) {
		if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
					BINDER_LOOPER_STATE_ENTERED))) {
			binder_user_error("binder: %d:%d ERROR: Thread waiting "
				"for process work before calling BC_REGISTER_"
				"LOOPER or BC_ENTER_LOOPER (state %x)\n",
				proc->pid, thread->pid, thread->looper);
			wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
		}
		binder_set_nice(proc->default_priority);
		/* Sleep on proc->wait until the process has work,
		 * or return -EAGAIN at once in non-blocking mode. */
		if (non_block) {
			if (!binder_has_proc_work(proc, thread))
				ret = -EAGAIN;
		} else
			ret = wait_event_interruptible_exclusive(proc->wait, binder_has_proc_work(proc, thread));
	} else {
		/* Otherwise wait on the thread's own queue. */
		if (non_block) {
			if (!binder_has_thread_work(thread))
				ret = -EAGAIN;
		} else
			ret = wait_event_interruptible(thread->wait, binder_has_thread_work(thread));
	}

	.......
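}

With this, we have walked through the source code step by step and seen how Service Manager becomes the daemon of Binder, Android's inter-process communication (IPC) mechanism. To summarize, Service Manager becomes the Binder daemon as follows: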

1. Open the /dev/binder file: open("/dev/binder", O_RDWR);

2. Set up a 128 KB memory mapping: mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

3. Tell the Binder driver that it is the daemon: binder_become_context_manager(bs);

4. Enter a loop and wait for requests to arrive: binder_loop(bs, svcmgr_handler);

During this process, the Binder driver creates one struct binder_proc, one struct binder_thread, and one struct binder_node. With these in place, Service Manager takes on the role of daemon in Android's Binder IPC mechanism.
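The four steps above can be sketched in user-space C++ as follows. This is only an illustration under stated assumptions, not the Service Manager source: become_context_manager_sketch, Step, SketchResult and kSketchSetContextMgr are hypothetical names, and the request code is assumed to match the driver's BINDER_SET_CONTEXT_MGR definition (real code would take it from the binder header). Step 4, the request loop, is left as a comment so that the function returns.

```cpp
#include <cassert>
#include <cstddef>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

// Assumption: mirrors the driver's BINDER_SET_CONTEXT_MGR request code.
// Real code should include the binder header instead of redefining it.
static const unsigned long kSketchSetContextMgr = _IOW('b', 7, int);

// Hypothetical names used only by this sketch.
enum class Step { Open, Mmap, SetContextMgr, ReadyToLoop };

struct SketchResult {
    Step reached;  // last step that was attempted
    bool ok;       // whether that step succeeded
};

// Performs steps 1 to 3 from the list and reports where it stopped.
SketchResult become_context_manager_sketch(const char* device, std::size_t mapsize)
{
    // Step 1: open the Binder device file.
    int fd = open(device, O_RDWR);
    if (fd < 0) return {Step::Open, false};

    // Step 2: map the read-only buffer through which the driver delivers data.
    void* mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, fd, 0);
    if (mapped == MAP_FAILED) {
        close(fd);
        return {Step::Mmap, false};
    }

    // Step 3: tell the driver that this process is the context manager.
    if (ioctl(fd, kSketchSetContextMgr, 0) < 0) {
        munmap(mapped, mapsize);
        close(fd);
        return {Step::SetContextMgr, false};
    }

    // Step 4 would be the request loop (binder_loop in the article), which
    // keeps issuing BINDER_WRITE_READ ioctls; it is omitted in this sketch.
    munmap(mapped, mapsize);
    close(fd);
    return {Step::ReadyToLoop, true};
}
```

On a machine that has no Binder device, a call such as become_context_manager_sketch("/dev/binder", 128 * 1024) stops at the first step and reports Step::Open with ok set to false.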

A Brief Look at How Servers and Clients Obtain the Service Manager Interface in Android's Binder IPC Mechanism

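Before reading this article, readers are encouraged to read the reference "Android深入浅出之Binder机制" recommended in the article "A Brief Introduction to Android's Binder Inter-Process Communication (IPC) Mechanism and a Study Plan"; doing so will make this article easier to follow.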

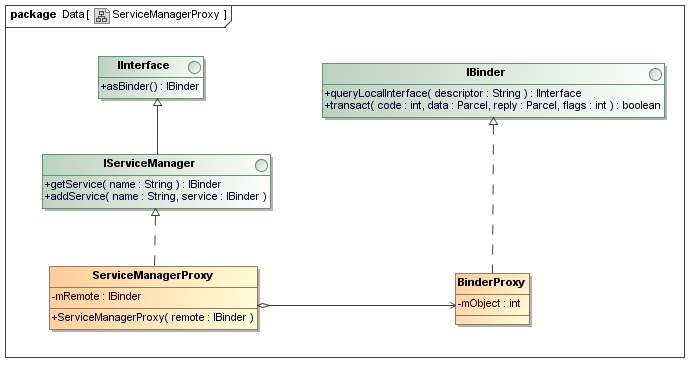

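As we know, Service Manager plays the role of daemon in the Binder mechanism and also acts as a Server, yet it differs from an ordinary Server. For an ordinary Server, a Client that wants the Server's remote interface must obtain it through the getService interface of the Service Manager remote interface, which is itself an instance of inter-process communication over Binder. For Service Manager, however, a Client does not need inter-process communication to obtain the Service Manager remote interface, because that interface is a special Binder reference whose handle is always 0.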
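The difference between handle 0 and every other handle can be illustrated with a small toy model. It is not the Binder API: ToyServiceManager, ToyProcess and their methods are hypothetical names, and a plain function call stands in for the Binder transaction that a real getService request would involve. The only facts taken from the text are that the Service Manager is always reachable through handle 0 and that other Servers are found by asking it.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <set>
#include <string>

// Toy stand-in for the Service Manager: it only remembers registered names.
class ToyServiceManager {
public:
    void addService(const std::string& name) { names_.insert(name); }
    bool hasService(const std::string& name) const { return names_.count(name) != 0; }
private:
    std::set<std::string> names_;
};

// Toy stand-in for a Client process with its own table of handles.
class ToyProcess {
public:
    // Handle 0 needs no lookup: it always denotes the Service Manager.
    static int32_t contextManagerHandle() { return 0; }

    // Any other Server is resolved by name through the Service Manager.
    // Returns -1 (a convention of this toy) when the name is unknown.
    int32_t getService(const ToyServiceManager& sm, const std::string& name) {
        if (!sm.hasService(name)) return -1;
        std::map<std::string, int32_t>::const_iterator it = handles_.find(name);
        if (it != handles_.end()) return it->second;  // already resolved
        int32_t handle = next_handle_++;              // 0 is never handed out
        handles_[name] = handle;
        return handle;
    }
private:
    std::map<std::string, int32_t> handles_;
    int32_t next_handle_ = 1;
};
```

In this model a Client can use ToyProcess::contextManagerHandle() immediately, while a handle for any other service exists only after a successful getService call.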

The function that obtains the Service Manager remote interface is defaultServiceManager, which is declared in frameworks/base/include/binder/IServiceManager.h:

sp<IServiceManager> defaultServiceManager();

It is implemented in frameworks/base/libs/binder/IServiceManager.cpp:

sp<IServiceManager> defaultServiceManager()
{
    // Fast path: the instance has already been created.
    if (gDefaultServiceManager != NULL) return gDefaultServiceManager;

    {
        // Create the instance at most once, under the lock.
        AutoMutex _l(gDefaultServiceManagerLock);
        if (gDefaultServiceManager == NULL) {
            gDefaultServiceManager = interface_cast<IServiceManager>(
                ProcessState::self()->getContextObject(NULL));
        }
    }

    return gDefaultServiceManager;
}

gDefaultServiceManagerLock and gDefaultServiceManager are global variables:

Mutex gDefaultServiceManagerLock;
sp<IServiceManager> gDefaultServiceManager;
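The defaultServiceManager function shown above creates its result lazily and only once. The same idea can be sketched in standard C++ as follows. This is a simplified illustration, not the framework code: ToyServiceManagerProxy, gToyLock, gToyInstance, gToyCreateCount and toyDefaultServiceManager are hypothetical names, std::mutex and std::shared_ptr stand in for Mutex and sp, and the sketch always takes the lock instead of performing the unlocked first check.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical stand-in for the Service Manager proxy object.
struct ToyServiceManagerProxy {
    int handle;  // 0, the well-known handle mentioned in the text
};

static std::mutex gToyLock;                                  // guards gToyInstance
static std::shared_ptr<ToyServiceManagerProxy> gToyInstance; // the single instance
static int gToyCreateCount = 0;                              // counts creations

std::shared_ptr<ToyServiceManagerProxy> toyDefaultServiceManager()
{
    // Taking the lock on every call keeps the sketch simple and race-free.
    std::lock_guard<std::mutex> guard(gToyLock);
    if (!gToyInstance) {
        gToyInstance = std::make_shared<ToyServiceManagerProxy>(ToyServiceManagerProxy{0});
        ++gToyCreateCount;
    }
    return gToyInstance;
}
```

Every caller receives a pointer to the same object, and the object is constructed on the first call only.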

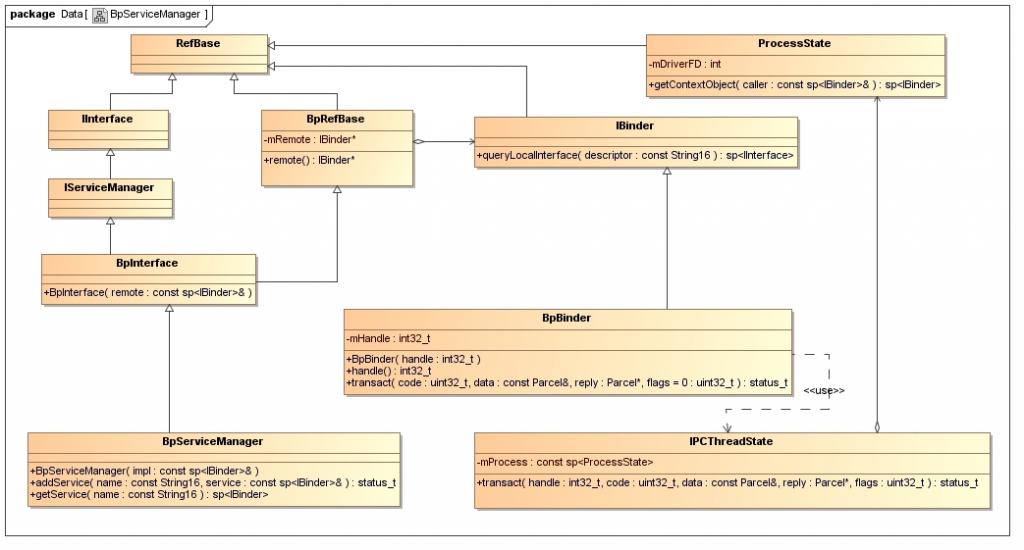

sp<IServiceManager> gDefaultServiceManager;在继续介绍interface_cast<IServiceManager>(ProcessState::self()->getContextObject(NULL))的实现之前,先来看一个类图,这能够帮助我们了解Service Manager远程接口的创建过程。

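Readers who have gone through the reference article "Android深入浅出之Binder机制" should find this diagram fairly easy to follow. It shows that the BpServiceManager class inherits from BpInterface<IServiceManager>. BpInterface is a class template defined in frameworks/base/include/binder/IInterface.h: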

template<typename INTERFACE>