用Docker搭建Elasticsearch、Kibana、Logstash 同步MySQL数据到ES

参考资料:

https://www.51cto.com/article/744327.html

https://blog.csdn.net/weixin_44792186/article/details/121451220

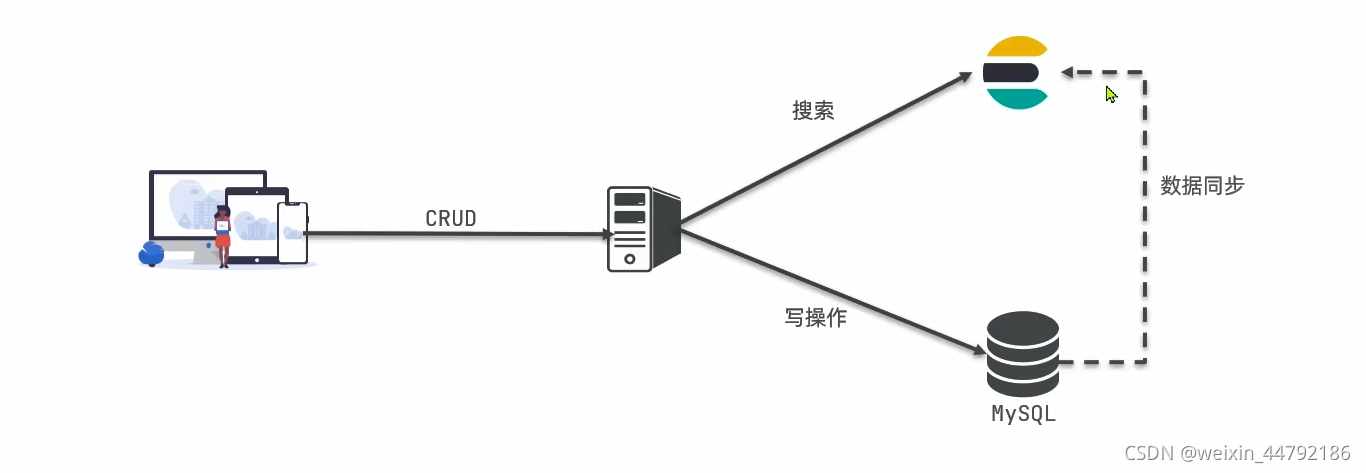

日常业务中涉及很多统计查询需求,MySQL往往不能很好支持,我们常采用ElasticSearch作为技术栈的补充,本次我们就实战下怎么用Docker部署实现这套流程。

目录结构

我们项目的文件目录结构如下:

├── docker-compose.yml #docker-compose 模板文件

├──elk #存放ElasticSearch相关工具的目录,包括elasticsearch、kibana、logstash

| ├── elasticsearch #elasticsearch的挂载目录

| │ ├── config

| │ │ └── elasticsearch.yml #elasticsearch配置文件

| │ ├── data

| │ ├── logs

| │ └── plugins

| ├── kibana #elasticsearch的挂载目录

| │ └── config

| │ └── kibana.yml #kibana的配置文件

| └── logstash #logstash的挂载目录

| └── config #logstash的配置目录

| ├── log #logstash日志存放

| ├── logstash.conf #logstash关于从MySQL获取数据的配置

| ├── logstash.yml #Logstash配置选项可以控制Logstash的执行

| ├── mysql-connector-java-8.0.28.jar #java连接mysql的组件包

| ├── mysql-connector-java-8.0.28.jar:Zone.Identifier

| └── pipelines.yml #Logstash配置文件中定义多个pipeline的地方

|

└── mysql #mysql的挂载目录

├── conf #mysql的配置目录

│ ├── my.cnf

├── data #mysql的数据目录

配置文件

我们为这些建好需要配置的目录和文件:

功能演示,对安全相关配置做简单处理

docker-compose.yml

version: '3'

services:

elasticsearch:

image: elasticsearch:7.17.7

ports:

- "9200:9200"

- "9300:9300"

environment:

- cluster.name=elasticsearch

- discovery.type=single-node

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

volumes:

- ./elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins

- ./elk/elasticsearch/data:/usr/share/elasticsearch/data

- ./elk/elasticsearch/logs:/usr/share/elasticsearch/logs

networks:

default:

ipv4_address: 172.25.0.2

kibana:

image: kibana:7.17.7

ports:

- "5601:5601"

depends_on:

- elasticsearch

environment:

I18N_LOCALE: zh-CN

volumes:

- ./elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml

networks:

default:

ipv4_address: 172.25.0.3

# 暂时不启用logstash,等mysql数据库准备好了再启用

# logstash:

# image: logstash:7.17.7

# ports:

# - "5044:5044"

# volumes:

# - ./elk/logstash/config:/usr/share/logstash/config

# depends_on:

# - elasticsearch

# networks:

# default:

# ipv4_address: 172.25.0.4

mysql:

image: mysql:5.7

ports:

- "3306:3306"

volumes:

- ./mysql/data:/var/lib/mysql

- ./mysql/conf:/etc/mysql/conf.d

environment:

MYSQL_ROOT_PASSWORD: root

networks:

default:

ipv4_address: 172.25.0.5

networks:

proxy:

external: true

default:

driver: bridge

ipam:

config:

- subnet: 172.25.0.0/16 # 这里使用了固定ip, 请按您的主机网络做调整

elk/elasticsearch/config/elasticsearch.yml

http.host: 0.0.0.0

xpack.security.enabled: false

elk/kibana/config/kibana.yml

server.host: 0.0.0.0

elasticsearch.hosts: [ "http://172.25.0.2:9200" ]

elk/logstash/config/logstash.conf

这里设置了一分钟同步一次sys_log

input {

stdin {

}

jdbc {

jdbc_connection_string => "jdbc:mysql://172.25.0.5:3306/test?useUnicode=true&characterEncoding=utf8&serverTimeznotallow=UTC"

jdbc_user => "root"

jdbc_password => "root"

jdbc_driver_library => "/usr/share/logstash/config/mysql-connector-java-8.0.28.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "300000"

statement => "SELECT id, title, business_type, method, request_method, oper_name, oper_url, oper_ip, oper_time FROM sys_log"

schedule => "*/1 * * * *"

use_column_value => false

tracking_column_type => "timestamp"

tracking_column => "oper_time"

record_last_run => true

jdbc_default_timezone => "Asia/Shanghai"

last_run_metadata_path => "/usr/share/logstash/config/log"

}

}

output {

elasticsearch {

hosts => ["172.25.0.2"]

index => "sys_log"

document_id => "%{id}"

}

stdout {

codec => json_lines

}

}

elk/logstash/config/logstash.yml

http.host: 0.0.0.0

xpack.monitoring.elasticsearch.hosts: [ "http://172.25.0.2:9200" ]

elk/logstash/config/pipelines.yml

- pipeline.id: sys_log

path.config: "/usr/share/logstash/config/logstash.conf"

elk/logstash/config/mysql-connector-java-8.0.28.jar

下载地址:https://mvnrepository.com/artifact/mysql/mysql-connector-java

创建 elk/logstash/config/log

touch elk/logstash/config/log

mysql/conf/my.cnf

[client]

default-character-set=utf8

[mysql]

default-character-set=utf8

[mysqld]

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

skip-name-resolve

对elk文件夹做开放权限

演示场景,生产环境不可这么操作

sudo chmod -R 777 elk

第一次启动

各项配置文件创建好了,我们执行docker-compose up -d启动各个容器

创建需要传输的数据库和表

连接mysql

创建实验数据库:test

CREATE DATABASE test

CHARACTER SET utf8

COLLATE utf8_general_ci;

创建表:sys_log

use test;

CREATE TABLE `sys_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT '日志主键',

`title` varchar(50) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT '' COMMENT '模块标题',

`business_type` int(2) NULL DEFAULT 0 COMMENT '业务类型(0其它 1新增 2修改 3删除)',

`method` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT '' COMMENT '方法名称',

`request_method` varchar(10) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT '' COMMENT '请求方式',

`oper_name` varchar(50) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT '' COMMENT '操作人员',

`oper_url` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT '' COMMENT '请求URL',

`oper_ip` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT '' COMMENT '主机地址',

`oper_time` datetime(0) NULL DEFAULT NULL COMMENT '操作时间',

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 1585197503834284034 CHARACTER SET = utf8 COLLATE = utf8_general_ci COMMENT = '操作日志记录' ROW_FORMAT = Dynamic;

新建es的索引

进入 http://localhost:5601/app/dev_tools#/console

请按您的网络环境的ip访问kibana

PUT /sys_log

{

"settings": {

"number_of_shards": 1,

"number_of_replicas": 0,

"index": {

"max_result_window": 100000000

}

},

"mappings": {

"dynamic": "strict",

"properties": {

"@timestamp": {

"type": "date"

},

"@version": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"business_type": {

"type": "integer"

},

"title": {

"type": "text"

},

"method": {

"type": "text"

},

"request_method": {

"type": "text"

},

"oper_name": {

"type": "text"

},

"oper_url": {

"type": "text"

},

"oper_ip": {

"type": "text"

},

"oper_time": {

"type": "date"

},

"id": {

"type": "long"

}

}

}

}

启动logstash

修改 docker-compose.yml

version: '3'

services:

elasticsearch:

image: elasticsearch:7.17.7

ports:

- "9200:9200"

- "9300:9300"

environment:

- cluster.name=elasticsearch

- discovery.type=single-node

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

volumes:

- ./elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins

- ./elk/elasticsearch/data:/usr/share/elasticsearch/data

- ./elk/elasticsearch/logs:/usr/share/elasticsearch/logs

networks:

default:

ipv4_address: 172.25.0.2

kibana:

image: kibana:7.17.7

ports:

- "5601:5601"

depends_on:

- elasticsearch

environment:

I18N_LOCALE: zh-CN

volumes:

- ./elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml

networks:

default:

ipv4_address: 172.25.0.3

logstash:

image: logstash:7.17.7

ports:

- "5044:5044"

volumes:

- ./elk/logstash/config:/usr/share/logstash/config

depends_on:

- elasticsearch

networks:

default:

ipv4_address: 172.25.0.4

mysql:

image: mysql:5.7

ports:

- "3306:3306"

volumes:

- ./mysql/data:/var/lib/mysql

- ./mysql/conf:/etc/mysql/conf.d

environment:

MYSQL_ROOT_PASSWORD: root

networks:

default:

ipv4_address: 172.25.0.5

networks:

proxy:

external: true

default:

driver: bridge

ipam:

config:

- subnet: 172.25.0.0/16

重新读取docker-compose.yml 启动容器

docker-compose down

docker-compose up -d

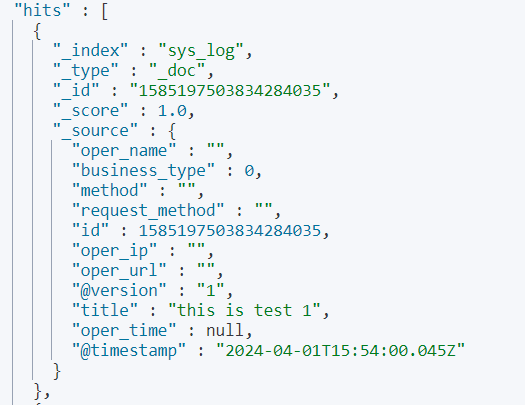

测试是否正常运行

在mysql中新增一条数据

INSERT INTO `test`.`sys_log` (`title`) VALUES ( 'this is test 1');

过一分钟后看es有没有数据

GET /sys_log/_search

{

"query": {

"match_all": {}

}

}

可以看到存在数据

4246

4246

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?