Problem Description

An Elasticsearch search after query failed with the following error, a fielddata cache circuit-breaker exception.

org.frameworkset.elasticsearch.ElasticSearchException: {"error":{"root_cause":[{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13181907968/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13181907968,"bytes_limit":13181806182,"durability":"PERMANENT"},{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13187108998/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13187108998,"bytes_limit":13181806182,"durability":"PERMANENT"},{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13182738143/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13182738143,"bytes_limit":13181806182,"durability":"PERMANENT"},{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13183488574/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13183488574,"bytes_limit":13181806182,"durability":"PERMANENT"},{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13185677559/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13185677559,"bytes_limit":13181806182,"durability":"PERMANENT"},{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13185174477/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13185174477,"bytes_limit":13181806182,"durability":"PERMANENT"}],"type":"search_phase_execution_exception","reason":"all shards failed","phase":"query","grouped":true,"failed_shards":[{"shard":0,"index":"mmyz-notice2","node":"RFo7x1R3Qjy3rsNaJgRx5w","reason":{"type":"exception","reason":"java.util.concurrent.ExecutionException: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13181907968/12.2gb], which is larger 
than the limit of [13181806182/12.2gb]]","caused_by":{"type":"execution_exception","reason":"execution_exception: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13181907968/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13181907968/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13181907968,"bytes_limit":13181806182,"durability":"PERMANENT"}}}},{"shard":1,"index":"mmyz-notice2","node":"RFo7x1R3Qjy3rsNaJgRx5w","reason":{"type":"exception","reason":"java.util.concurrent.ExecutionException: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13187108998/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"execution_exception","reason":"execution_exception: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13187108998/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13187108998/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13187108998,"bytes_limit":13181806182,"durability":"PERMANENT"}}}},{"shard":2,"index":"mmyz-notice2","node":"f8S1zzPZRnS_wkBwidNB4Q","reason":{"type":"exception","reason":"java.util.concurrent.ExecutionException: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13182738143/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"execution_exception","reason":"execution_exception: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13182738143/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be 
[13182738143/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13182738143,"bytes_limit":13181806182,"durability":"PERMANENT"}}}},{"shard":3,"index":"mmyz-notice2","node":"M6k9iyVfQL2xQgRz0eWLxg","reason":{"type":"exception","reason":"java.util.concurrent.ExecutionException: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13183488574/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"execution_exception","reason":"execution_exception: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13183488574/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13183488574/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13183488574,"bytes_limit":13181806182,"durability":"PERMANENT"}}}},{"shard":4,"index":"mmyz-notice2","node":"V8A-Jfe2RwK6_42oBlvpKQ","reason":{"type":"exception","reason":"java.util.concurrent.ExecutionException: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13185677559/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"execution_exception","reason":"execution_exception: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13185677559/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13185677559/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13185677559,"bytes_limit":13181806182,"durability":"PERMANENT"}}}},{"shard":5,"index":"mmyz-notice2","node":"NsPWLx1GQFKPRGX51Dk_mQ","reason":{"type":"exception","reason":"java.util.concurrent.ExecutionException: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be 
[13185174477/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"execution_exception","reason":"execution_exception: CircuitBreakingException[[fielddata] Data too large, data for [_id] would be [13185174477/12.2gb], which is larger than the limit of [13181806182/12.2gb]]","caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13185174477/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13185174477,"bytes_limit":13181806182,"durability":"PERMANENT"}}}}],"caused_by":{"type":"circuit_breaking_exception","reason":"[fielddata] Data too large, data for [_id] would be [13181907968/12.2gb], which is larger than the limit of [13181806182/12.2gb]","bytes_wanted":13181907968,"bytes_limit":13181806182,"durability":"PERMANENT"}},"status":500}

at org.frameworkset.elasticsearch.handler.BaseExceptionResponseHandler.handleException(BaseExceptionResponseHandler.java:77)
at org.frameworkset.elasticsearch.handler.BaseExceptionResponseHandler.handleException(BaseExceptionResponseHandler.java:48)
at org.frameworkset.elasticsearch.handler.ElasticSearchResponseHandler.handleResponse(ElasticSearchResponseHandler.java:61)
at org.frameworkset.elasticsearch.handler.ElasticSearchResponseHandler.handleResponse(ElasticSearchResponseHandler.java:16)
at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:222)
at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:164)
at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:139)
at org.frameworkset.spi.remote.http.HttpRequestUtil.sendBody(HttpRequestUtil.java:1285)
at org.frameworkset.spi.remote.http.HttpRequestUtil.sendJsonBody(HttpRequestUtil.java:1264)
at org.frameworkset.elasticsearch.client.RestSearchExecutorUtil._executeRequest(RestSearchExecutorUtil.java:103)
at org.frameworkset.elasticsearch.client.RestSearchExecutor.executeRequest(RestSearchExecutor.java:242)
at org.frameworkset.elasticsearch.client.ElasticSearchRestClient$5.execute(ElasticSearchRestClient.java:1244)
at org.frameworkset.elasticsearch.client.ElasticSearchRestClient._executeHttp(ElasticSearchRestClient.java:899)
at org.frameworkset.elasticsearch.client.ElasticSearchRestClient.executeRequest(ElasticSearchRestClient.java:1229)
at org.frameworkset.elasticsearch.client.ElasticSearchRestClient.executeRequest(ElasticSearchRestClient.java:1212)
at org.frameworkset.elasticsearch.client.RestClientUtil.searchList(RestClientUtil.java:2828)
at org.frameworkset.elasticsearch.client.ConfigRestClientUtil.searchList(ConfigRestClientUtil.java:625)
at net.yto.security.cipher.config.SecurityBulkProcessor2.searchAfter(SecurityBulkProcessor2.java:265)

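Other queries failed in the same way.

Investigation showed that the cause was Elasticsearch's default cache settings, which let data enter the fielddata cache but never leave it.

Concepts and Principles

- ES cache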

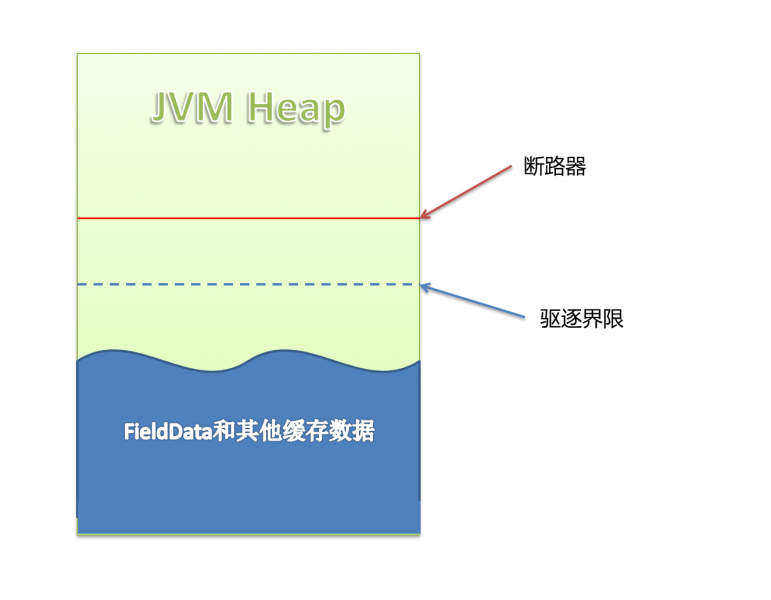

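First, a look at Elasticsearch's caching mechanism. When serving queries, ES caches index data in memory (the JVM heap).

The figure above shows the state of the ES JVM heap. There are two boundaries: the eviction line and the circuit breaker. When cached data reaches the eviction line, part of it is evicted automatically so that the cache stays within a safe range. The circuit breaker comes into play when a user is about to run a query: if the cached data plus the data the current query needs to cache reaches the breaker limit, ES returns a Data too large error and blocks the query. ES divides cached data into two kinds, FieldData and everything else. We look at FieldData in detail next, because it is the culprit behind this exception.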
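To make the breaker check concrete, here is a minimal Python sketch of the comparison behind the error message. The function name `would_trip` is hypothetical and models only the final comparison, not how Elasticsearch estimates memory; the two byte counts are copied from the first `root_cause` entry in the error above.

```python
def would_trip(bytes_wanted: int, bytes_limit: int) -> bool:
    """Return True when the estimated total is larger than the breaker limit."""
    return bytes_wanted > bytes_limit


# Figures from the first root_cause entry of the error above.
BYTES_WANTED = 13181907968  # cached fielddata plus the estimate for this query
BYTES_LIMIT = 13181806182   # fielddata breaker limit reported by the node

overshoot = BYTES_WANTED - BYTES_LIMIT

print(would_trip(BYTES_WANTED, BYTES_LIMIT))  # True
print(overshoot)                              # 101786
```

The request was rejected for being roughly 100 KB over the limit, which suggests the cache was already sitting right at the ceiling before the query arrived.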

- FieldData

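FieldData in the ES configuration refers to field data. For sorting (sort) and aggregations (aggs), ES reads all the data of the fields involved into memory (the JVM heap) and operates on it there. This effectively caches the data and speeds up queries.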

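It is worth monitoring how much memory FieldData uses and whether any data is being evicted.

Fielddata cache usage can be monitored as follows.

Per index:

GET /_stats/fielddata?fields=*

Per node:

GET /_nodes/stats/indices/fielddata?fields=*

Or even per index on each node:

GET /_nodes/stats/indices/fielddata?level=indices&fields=*

Cache configuration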

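indices.fielddata.cache.size sets the size of the fielddata cache, either as a percentage of the heap or as an exact value. When the cache reaches the configured size it is cleaned up automatically: some FieldData is evicted to make room for new data. The default is unbounded.

indices.fielddata.cache.expire sets how long data may go unaccessed before it is evicted. The default is -1, meaning never. Using expire is not recommended, because time-based eviction costs a lot of performance, and the setting is due to be deprecated in an upcoming version.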
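A toy model may help to show what a bounded cache size changes. The class below is an illustrative least-recently-used sketch in Python, not Elasticsearch's implementation; the class name, the entry names, and the byte sizes are all made up for the example.

```python
from collections import OrderedDict
from typing import Optional


class ToyFielddataCache:
    """Toy LRU model of a size-bounded cache (illustration only)."""

    def __init__(self, max_bytes: Optional[int] = None):
        self.max_bytes = max_bytes  # None models the default "unbounded"
        self.entries = OrderedDict()  # key -> size in bytes, oldest first
        self.evictions = 0

    @property
    def used_bytes(self) -> int:
        return sum(self.entries.values())

    def load(self, key: str, size: int) -> None:
        """Add an entry, then evict the oldest entries while over the limit."""
        self.entries[key] = size
        self.entries.move_to_end(key)
        if self.max_bytes is None:
            return  # unbounded: nothing is ever evicted
        while self.used_bytes > self.max_bytes and len(self.entries) > 1:
            self.entries.popitem(last=False)
            self.evictions += 1


unbounded = ToyFielddataCache()
bounded = ToyFielddataCache(max_bytes=100)
for name in ("segment-a", "segment-b", "segment-c"):
    unbounded.load(name, 40)
    bounded.load(name, 40)

print(unbounded.used_bytes, unbounded.evictions)  # 120 0
print(bounded.used_bytes, bounded.evictions)      # 80 1
```

In the unbounded case usage only grows and the eviction counter stays at 0, so the only remaining safeguard is the circuit breaker rejecting new loads.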

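- Circuit breaker

One point in the fielddata cache configuration raises a question: the fielddata size is checked only after the data has been loaded. What happens if the fielddata that the next query is about to load pushes the cache past the available heap?

Unfortunately, the result is an OOM error.

The circuit breaker exists to control cache loading: it estimates how much memory the current query is requesting and limits it.

The circuit breakers are configured as follows:

indices.breaker.fielddata.limit: the fielddata breaker limits the size of fielddata. The guide linked below, which was written for an older Elasticsearch release, gives a default of 60% of the heap. Elasticsearch 7.x defaults to 40%, which is consistent with the 12.2gb limit in the error above on a 31G heap.

indices.breaker.request.limit: the request breaker estimates the size of the structures required to complete the other parts of a request. The same guide gives a default limit of 40% of the heap; in Elasticsearch 7.x the default is 60%.

Breaker limits can be specified in the file config/elasticsearch.yml.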

https://www.elastic.co/guide/cn/elasticsearch/guide/current/_limiting_memory_usage.html
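Besides the config file, indices.breaker.fielddata.limit is a dynamic setting, so it can also be changed at runtime through the cluster settings API. By contrast, indices.fielddata.cache.size is a static node setting that has to go into elasticsearch.yml. The sketch below only builds the HTTP request with Python's standard library; the function name, the base URL, and the 40% value are placeholders for illustration, not recommendations.

```python
import json
import urllib.request


def build_breaker_settings_request(base_url: str, limit: str) -> urllib.request.Request:
    """Build a PUT /_cluster/settings request that sets the fielddata breaker limit."""
    body = {"persistent": {"indices.breaker.fielddata.limit": limit}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/_cluster/settings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Assumed local endpoint; replace with the real cluster address.
request = build_breaker_settings_request("http://localhost:9200", "40%")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To actually send it: urllib.request.urlopen(request)
```

Keeping the request construction separate from sending makes it easy to review the exact payload before anything touches a production cluster.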

- search after

Paginate search results | Elasticsearch Guide [7.13] | Elastic

search after pagination uses the set of sort values from the last hit of one page to retrieve the next page of hits.
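The paging loop can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the `search` callable stands in for whatever client sends the request body to Elasticsearch, and `fake_search` is an in-memory stand-in invented here so that the loop can run without a cluster. Only the `sort`, `size`, and `search_after` request fields and the per-hit `sort` array come from the Elasticsearch search API.

```python
from typing import Any, Callable, Dict, Iterator, List, Optional

SearchFn = Callable[[Dict[str, Any]], Dict[str, Any]]


def build_page_request(size: int, sort: List[Dict[str, str]],
                       search_after: Optional[List[Any]] = None) -> Dict[str, Any]:
    """Build the request body for one page."""
    body: Dict[str, Any] = {"size": size, "sort": sort}
    if search_after is not None:
        body["search_after"] = search_after
    return body


def iterate_hits(search: SearchFn, size: int,
                 sort: List[Dict[str, str]]) -> Iterator[Dict[str, Any]]:
    """Yield every hit, feeding the last hit's sort values into the next request."""
    search_after = None
    while True:
        response = search(build_page_request(size, sort, search_after))
        hits = response["hits"]["hits"]
        if not hits:
            return
        yield from hits
        search_after = hits[-1]["sort"]


def fake_search(body: Dict[str, Any]) -> Dict[str, Any]:
    """In-memory stand-in for the cluster: five documents sorted by _id."""
    ids = ["a", "b", "c", "d", "e"]
    after = body.get("search_after")
    remaining = [i for i in ids if after is None or i > after[0]]
    page = remaining[: body["size"]]
    return {"hits": {"hits": [{"_id": i, "sort": [i]} for i in page]}}


all_ids = [hit["_id"] for hit in iterate_hits(fake_search, 2, [{"_id": "asc"}])]
print(all_ids)  # ['a', 'b', 'c', 'd', 'e']
```

Every page repeats the same sort clause, which is why the choice of sort field matters so much for memory in the analysis that follows.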

Detailed Analysis

When the Data too large exception occurred, monitoring fielddata for the _id field on a single index returned the following:

GET index/_stats/fielddata?fields=_id

{
  "_shards" : {
    "total" : 12,
    "successful" : 12,
    "failed" : 0
  },
  "_all" : {
    "primaries" : {
      "fielddata" : {
        "memory_size_in_bytes" : 3896965844,
        "evictions" : 0,
        "fields" : {
          "stationCode" : {
            "memory_size_in_bytes" : 28864
          },
          "_id" : {
            "memory_size_in_bytes" : 3896936980
          },
          "smsSupplier" : {
            "memory_size_in_bytes" : 0
          }
        }
      }
    },
    "total" : {
      "fielddata" : {
        "memory_size_in_bytes" : 8356891112,
        "evictions" : 0,
        "fields" : {
          "stationCode" : {
            "memory_size_in_bytes" : 28864
          },
          "_id" : {
            "memory_size_in_bytes" : 8356862248
          },
          "smsSupplier" : {
            "memory_size_in_bytes" : 0
          }
        }
      }
    }
  },
  "indices" : {
    "index" : {
      "uuid" : "SvEPsxfwSDmk1ryAruRAzg",
      "primaries" : {
        "fielddata" : {
          "memory_size_in_bytes" : 3896965844,
          "evictions" : 0,
          "fields" : {
            "stationCode" : {
              "memory_size_in_bytes" : 28864
            },
            "_id" : {
              "memory_size_in_bytes" : 3896936980
            },
            "smsSupplier" : {
              "memory_size_in_bytes" : 0
            }
          }
        }
      },
      "total" : {
        "fielddata" : {
          "memory_size_in_bytes" : 8356891112,
          "evictions" : 0,
          "fields" : {
            "stationCode" : {
              "memory_size_in_bytes" : 28864
            },
            "_id" : {
              "memory_size_in_bytes" : 8356862248
            },
            "smsSupplier" : {
              "memory_size_in_bytes" : 0
            }
          }
        }
      }
    }
  }
}

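The response shows that _id accounts for virtually all of the fielddata memory (8356862248 of 8356891112 bytes in total), that fielddata usage has run up to the usable ceiling set by the breaker, and that evictions is 0. Over a period of observation the memory held by each field did not change either. From this we infer that the cache was in a state where nothing could be evicted effectively.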
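The per-field breakdown can also be computed rather than read by eye. The helper below is a small Python sketch; the function name is hypothetical, and `SAMPLE_STATS` is a trimmed copy of the `_all.total` section of the response shown above.

```python
from typing import Any, Dict, List, Tuple


def fielddata_by_field(stats: Dict[str, Any], scope: str = "total") -> List[Tuple[str, int, float]]:
    """Return (field, bytes, share of fielddata) tuples, largest first."""
    fielddata = stats["_all"][scope]["fielddata"]
    total = fielddata["memory_size_in_bytes"]
    rows = []
    for name, info in fielddata.get("fields", {}).items():
        size = info["memory_size_in_bytes"]
        share = size / total if total else 0.0
        rows.append((name, size, share))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Trimmed from the stats response above.
SAMPLE_STATS = {
    "_all": {
        "total": {
            "fielddata": {
                "memory_size_in_bytes": 8356891112,
                "evictions": 0,
                "fields": {
                    "stationCode": {"memory_size_in_bytes": 28864},
                    "_id": {"memory_size_in_bytes": 8356862248},
                    "smsSupplier": {"memory_size_in_bytes": 0},
                },
            }
        }
    }
}

rows = fielddata_by_field(SAMPLE_STATS)
for name, size, share in rows:
    print(f"{name}: {size} bytes ({share:.4%})")
```

Run periodically, a summary like this would show whether a single field keeps growing while the eviction counter stays flat.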

The Data too large exception is therefore a consequence of indices.fielddata.cache.size defaulting to unbounded.

The trigger is that our DSL statement sorts on _id.

...
"track_total_hits": true,
"sort": [
  {"_id": "asc"}
]
...

That pinpoints the problem.

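The dilemma now is that the search has to use _id, yet it can no longer run: the ES cluster has no fielddata expiry policy configured, so fielddata stays resident in the cache. How do we resolve this?

Solution Approach

1. The first idea was to tune the DSL. We use search after because the scenario needs ES pagination.

There are three options to consider: search from+size, search after, and scroll.

After verification, search from+size and scroll did not meet our requirements, so they were ruled out.

That leaves search after. Could we build the sort from other fields instead? Unfortunately, that was ruled out as well.

So the original DSL has to stay; there is no room for optimization here.

2. Adjust the production cache setting indices.fielddata.cache.size and the breaker setting indices.breaker.fielddata.limit. The two have to be weighed against each other, and because this is a production environment it carries some risk. To be evaluated.

3. Add memory. This involves a hardware upgrade and affects cluster stability. The heap is already 31G and normal memory usage does not exceed 50~60%, so considering cost and integration effort it is unnecessary. Ruled out.

4. What else is there? Fielddata does not expire on its own, but if we could actively clear the fielddata cache, would that be an option too?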

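It is. The cache can be cleared actively as follows:

POST index/_cache/clear

But we also have to consider whether actively clearing the index cache in production affects the performance of existing queries.

We consulted the official documentation and ran tests: dropping the existing fielddata cache at the cost of some query performance is acceptable, though a balance has to be struck. After actively clearing the index cache, we verified that queries returned to normal.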
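As a variation on the call above, the clear cache API also accepts the `fielddata` and `fields` query parameters, which narrow the operation to the fielddata cache of specific fields instead of dropping every cache for the index. The Python sketch below only builds the request; the function name and base URL are assumptions for illustration, and `index` is the placeholder index name used throughout this article.

```python
import urllib.request
from typing import Optional, Sequence
from urllib.parse import quote, urlencode


def build_clear_fielddata_request(base_url: str, index: str,
                                  fields: Optional[Sequence[str]] = None) -> urllib.request.Request:
    """Build POST /<index>/_cache/clear limited to the fielddata cache."""
    params = {"fielddata": "true"}
    if fields:
        params["fields"] = ",".join(fields)
    url = f"{base_url.rstrip('/')}/{quote(index, safe='')}/_cache/clear?{urlencode(params)}"
    return urllib.request.Request(url=url, method="POST")


# Assumed local endpoint; replace with the real cluster address.
clear_request = build_clear_fielddata_request("http://localhost:9200", "index", ["_id"])
print(clear_request.get_method(), clear_request.full_url)
# To actually send it: urllib.request.urlopen(clear_request)
```

Limiting the clear to _id leaves the query and request caches warm, which may soften the performance cost mentioned above. Whether that matters in practice depends on the workload.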

Querying fielddata again shows that the cache for this index has dropped to zero:

GET index/_stats/fielddata?fields=_id

{
  "_shards" : {
    "total" : 12,
    "successful" : 12,
    "failed" : 0
  },
  "_all" : {
    "primaries" : {
      "fielddata" : {
        "memory_size_in_bytes" : 0,
        "evictions" : 0,
        "fields" : {
          "_id" : {
            "memory_size_in_bytes" : 0
          }
        }
      }
    },
    "total" : {
      "fielddata" : {
        "memory_size_in_bytes" : 0,
        "evictions" : 0,
        "fields" : {
          "_id" : {
            "memory_size_in_bytes" : 0
          }
        }
      }
    }
  },
  "indices" : {
    "index" : {
      "uuid" : "i84CMGeJReWZsNchvMHtPA",
      "primaries" : {
        "fielddata" : {
          "memory_size_in_bytes" : 0,
          "evictions" : 0,
          "fields" : {
            "_id" : {
              "memory_size_in_bytes" : 0
            }
          }
        }
      },
      "total" : {
        "fielddata" : {
          "memory_size_in_bytes" : 0,
          "evictions" : 0,
          "fields" : {
            "_id" : {
              "memory_size_in_bytes" : 0
            }
          }
        }
      }
    }
  }
}

Get!

References

ElasticSearch:从[FIELDDATA]Data too large错误看FieldData配置 (ElasticSearch: FieldData configuration as seen from the [FIELDDATA] Data too large error), 雷雨中的双桅船, CSDN blog
