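FFmpeg is a powerful audio and video processing solution that combines recording, conversion, and audio/video encoding and decoding. How do you run it on the Android platform?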

FFmpeg download page: https://ffmpeg.org/download.html#build-mac

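1. Next, we use the NDK to cross-compile the FFmpeg libraries and normalize the names of the generated .so files. These libraries are what let us use FFmpeg on Android through JNI. (Building on Linux or macOS is recommended; building on Windows runs into many problems.)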

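First, change FFmpeg's build configuration by editing the configure file in the ffmpeg folder. By default the shared libraries get names like libavcodec.so.57, with the version number after ".so". Android's System.loadLibrary() only finds files named lib<name>.so, so the naming is changed to produce names like libavcodec-57.so instead.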

Original:

```sh
SLIBNAME_WITH_MAJOR='$(SLIBNAME).$(LIBMAJOR)'
LIB_INSTALL_EXTRA_CMD='$$(RANLIB) "$(LIBDIR)/$(LIBNAME)"'
SLIB_INSTALL_NAME='$(SLIBNAME_WITH_VERSION)'
SLIB_INSTALL_LINKS='$(SLIBNAME_WITH_MAJOR) $(SLIBNAME)'
```

Change to:

```sh
SLIBNAME_WITH_MAJOR='$(SLIBPREF)$(FULLNAME)-$(LIBMAJOR)$(SLIBSUF)'
LIB_INSTALL_EXTRA_CMD='$$(RANLIB) "$(LIBDIR)/$(LIBNAME)"'
SLIB_INSTALL_NAME='$(SLIBNAME_WITH_MAJOR)'
SLIB_INSTALL_LINKS='$(SLIBNAME)'
```

2. Next, create a shell script that runs the build. On Windows you need an environment that can execute shell scripts (such as Cygwin or MSYS), because Windows cannot run them natively.

In the script, set NDK to your own NDK directory (for example NDK=$HOME/Desktop/adt/android-ndk-r9).
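The TOOLCHAIN variable in the script points at a prebuilt GCC directory whose last path component is a host tag. The script uses `windows-x86_64`. Because building on Linux or macOS is recommended, you would typically use `linux-x86_64` or `darwin-x86_64` instead. Check which directories actually exist under `$NDK/toolchains/arm-linux-androideabi-4.8/prebuilt/` in your NDK.

Below is a small C sketch of how the toolchain directory and the tool names derived from `--cross-prefix` are put together. The helpers `ndk_host_tag`, `ndk_toolchain_dir`, and `cross_tool` are hypothetical, and the sketch assumes a 64-bit x86 build machine.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: guess the NDK host tag from compiler-predefined macros.
   Assumes a 64-bit x86 host, matching the "*-x86_64" directory names. */
static const char *ndk_host_tag(void) {
#if defined(_WIN32)
    return "windows-x86_64";
#elif defined(__APPLE__)
    return "darwin-x86_64";
#else
    return "linux-x86_64";
#endif
}

/* Builds <ndk>/toolchains/arm-linux-androideabi-4.8/prebuilt/<host_tag>.
   Returns 0 on success, -1 if the output buffer is too small. */
static int ndk_toolchain_dir(char *out, size_t cap, const char *ndk, const char *host_tag) {
    int n = snprintf(out, cap, "%s/toolchains/arm-linux-androideabi-4.8/prebuilt/%s",
                     ndk, host_tag);
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}

/* configure appends tool names such as "gcc" to --cross-prefix,
   giving e.g. $TOOLCHAIN/bin/arm-linux-androideabi-gcc. */
static int cross_tool(char *out, size_t cap, const char *toolchain, const char *tool) {
    int n = snprintf(out, cap, "%s/bin/arm-linux-androideabi-%s", toolchain, tool);
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}
```

For example, `ndk_toolchain_dir(dir, sizeof dir, "/opt/ndk", ndk_host_tag())` on a Linux machine yields `/opt/ndk/toolchains/arm-linux-androideabi-4.8/prebuilt/linux-x86_64`. That is the value TOOLCHAIN should have there.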

```bash
#!/bin/bash

# Set this to your own NDK directory
NDK=/path/to/your/ndk
SYSROOT=$NDK/platforms/android-9/arch-arm/
# Host tag of the prebuilt toolchain: windows-x86_64 on Windows,
# linux-x86_64 on Linux, darwin-x86_64 on macOS
TOOLCHAIN=$NDK/toolchains/arm-linux-androideabi-4.8/prebuilt/windows-x86_64

function build_one {
    # --disable-ffserver only applies to FFmpeg releases before 4.0,
    # which still shipped ffserver
    ./configure \
        --prefix=$PREFIX \
        --enable-shared \
        --disable-static \
        --disable-doc \
        --disable-ffmpeg \
        --disable-ffplay \
        --disable-ffprobe \
        --disable-ffserver \
        --disable-avdevice \
        --disable-symver \
        --cross-prefix=$TOOLCHAIN/bin/arm-linux-androideabi- \
        --target-os=linux \
        --arch=arm \
        --enable-cross-compile \
        --sysroot=$SYSROOT \
        --extra-cflags="-Os -fpic $ADDI_CFLAGS" \
        --extra-ldflags="$ADDI_LDFLAGS" \
        $ADDITIONAL_CONFIGURE_FLAG
    make clean
    make
    make install
}

CPU=arm
PREFIX=$(pwd)/android/$CPU
ADDI_CFLAGS="-marm"
build_one
```

3. When the build finishes, an android folder is generated. It contains include, lib, and Android.mk:

include -> the header files of each library.

lib -> the library files, built for Android.

Android.mk -> the build file used on Android to compile new .so libraries from these.

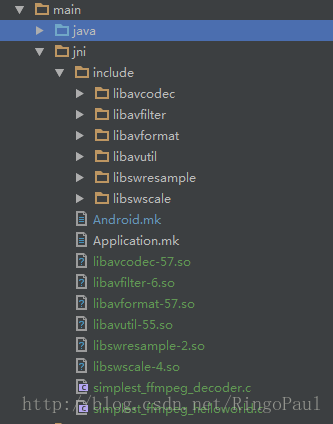
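The configure change from step 1 is what gives the files in lib names such as `libavcodec-57.so`. Android's `System.loadLibrary("avcodec-57")` looks for a file named "lib" + name + ".so". Android's packaging and install steps also generally only handle native libraries named `lib*.so`, so the stock `libavcodec.so.57` form cannot be loaded directly.

The C sketch below reproduces both naming schemes for a library name and major version, for example avcodec with major version 57 as listed in the Android.mk of step 6. It also derives the name to pass to `loadLibrary`. All three helpers are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Default FFmpeg scheme: lib<name>.so.<major>, e.g. libavcodec.so.57
   (installed as a symlink to libavcodec.so.<full version>). */
static int default_soname(char *out, size_t cap, const char *name, int major) {
    int n = snprintf(out, cap, "lib%s.so.%d", name, major);
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}

/* Modified scheme from the configure edit:
   $(SLIBPREF)$(FULLNAME)-$(LIBMAJOR)$(SLIBSUF) -> lib<name>-<major>.so */
static int android_soname(char *out, size_t cap, const char *name, int major) {
    int n = snprintf(out, cap, "lib%s-%d.so", name, major);
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}

/* Name for System.loadLibrary(): strip the "lib" prefix and ".so" suffix.
   Returns -1 if the file is not of the form lib<name>.so. */
static int load_library_name(char *out, size_t cap, const char *file) {
    size_t len = strlen(file);
    if (len <= 6 || strncmp(file, "lib", 3) != 0 || strcmp(file + len - 3, ".so") != 0)
        return -1;
    size_t n = len - 6;
    if (n + 1 > cap) return -1;
    memcpy(out, file + 3, n);
    out[n] = '\0';
    return 0;
}
```

With the modified naming, `libavcodec-57.so` maps to `System.loadLibrary("avcodec-57")`. With the default naming, `libavcodec.so.57` is rejected, which mirrors why the configure edit is needed.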

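4. Under main/, create a jni folder and copy into it the generated include folder, the corresponding .so files, and Android.mk. (The Application.mk and the other two .c files shown here are created later; they are covered further on.)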

5. Create simplest_ffmpeg_decoder.c and implement the decoder in it.

```c
#include <stdio.h>
#include <stdarg.h>
#include <string.h>
#include <time.h>

#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h" // av_image_get_buffer_size(), av_image_fill_arrays()
#include "libavutil/log.h"

#ifdef ANDROID
#include <jni.h>
#include <android/log.h>
#define LOGE(format, ...) __android_log_print(ANDROID_LOG_ERROR, "(>_<)", format, ##__VA_ARGS__)
#define LOGI(format, ...) __android_log_print(ANDROID_LOG_INFO, "(^_^)", format, ##__VA_ARGS__)
#else
#define LOGE(format, ...) printf("(>_<) " format "\n", ##__VA_ARGS__)
#define LOGI(format, ...) printf("(^_^) " format "\n", ##__VA_ARGS__)
#endif

// Output FFmpeg's av_log() messages to a file on external storage
void custom_log(void *ptr, int level, const char *fmt, va_list vl) {
    FILE *fp = fopen("/storage/emulated/0/av_log.txt", "a+");
    if (fp) {
        vfprintf(fp, fmt, vl);
        fflush(fp);
        fclose(fp);
    }
}

// Append formatted text to buf. Unlike sprintf(info, "%s...", info, ...), source and
// destination never overlap (undefined behavior), and output is truncated to fit.
static void append_info(char *buf, size_t cap, const char *fmt, ...) {
    size_t used = strlen(buf);
    va_list vl;
    va_start(vl, fmt);
    vsnprintf(buf + used, cap - used, fmt, vl);
    va_end(vl);
}

// Convert one decoded frame to YUV420P, append it to fp_yuv and log its picture type
static void output_frame(struct SwsContext *img_convert_ctx, AVCodecContext *pCodecCtx,
                         AVFrame *pFrame, AVFrame *pFrameYUV, FILE *fp_yuv, int frame_cnt) {
    int y_size, uv_size;
    const char *pictype_str;

    sws_scale(img_convert_ctx, (const uint8_t *const *)pFrame->data, pFrame->linesize, 0,
              pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);
    // pFrameYUV was set up with align = 1, so each plane is contiguous.
    // Chroma planes are half width and half height, rounded up for odd sizes.
    y_size = pCodecCtx->width * pCodecCtx->height;
    uv_size = ((pCodecCtx->width + 1) / 2) * ((pCodecCtx->height + 1) / 2);
    fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);  // Y
    fwrite(pFrameYUV->data[1], 1, uv_size, fp_yuv); // U
    fwrite(pFrameYUV->data[2], 1, uv_size, fp_yuv); // V

    switch (pFrame->pict_type) {
    case AV_PICTURE_TYPE_I: pictype_str = "I"; break;
    case AV_PICTURE_TYPE_P: pictype_str = "P"; break;
    case AV_PICTURE_TYPE_B: pictype_str = "B"; break;
    default: pictype_str = "Other"; break;
    }
    LOGI("Frame Index: %5d. Type:%s", frame_cnt, pictype_str);
}

JNIEXPORT jint JNICALL Java_interest_myndk_MainActivity_decode
        (JNIEnv *env, jobject obj, jstring input_jstr, jstring output_jstr)
{
    AVFormatContext *pFormatCtx;
    unsigned int i;
    int videoindex;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame, *pFrameYUV;
    uint8_t *out_buffer;
    AVPacket *packet;
    int ret, got_picture;
    struct SwsContext *img_convert_ctx;
    FILE *fp_yuv;
    int frame_cnt;
    clock_t time_start, time_finish;
    double time_duration = 0.0;
    char input_str[500] = {0};
    char output_str[500] = {0};
    char info[1000] = {0};
    const char *tmp;

    // Copy the Java strings, then release the JNI buffers so they do not leak
    tmp = (*env)->GetStringUTFChars(env, input_jstr, NULL);
    if (tmp == NULL) return -1;
    snprintf(input_str, sizeof(input_str), "%s", tmp);
    (*env)->ReleaseStringUTFChars(env, input_jstr, tmp);
    tmp = (*env)->GetStringUTFChars(env, output_jstr, NULL);
    if (tmp == NULL) return -1;
    snprintf(output_str, sizeof(output_str), "%s", tmp);
    (*env)->ReleaseStringUTFChars(env, output_jstr, tmp);

    // FFmpeg av_log() callback
    av_log_set_callback(custom_log);

    av_register_all(); // needed in FFmpeg 3.x (deprecated since 4.0)
    avformat_network_init();

    pFormatCtx = avformat_alloc_context();
    if (avformat_open_input(&pFormatCtx, input_str, NULL, NULL) != 0) {
        LOGE("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("Couldn't find stream information.\n");
        return -1;
    }
    videoindex = -1;
    for (i = 0; i < pFormatCtx->nb_streams; i++) {
        // AVStream.codec is deprecated since FFmpeg 3.1 (use codecpar) but still works in 3.x
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1) {
        LOGE("Couldn't find a video stream.\n");
        return -1;
    }
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGE("Couldn't find Codec.\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("Couldn't open codec.\n");
        return -1;
    }

    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    out_buffer = (uint8_t *)av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P,
                                      pCodecCtx->width, pCodecCtx->height, 1));
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer,
                         AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);
    packet = av_packet_alloc();
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P,
                                     SWS_BICUBIC, NULL, NULL, NULL);

    append_info(info, sizeof(info), "[Input ]%s\n", input_str);
    append_info(info, sizeof(info), "[Output ]%s\n", output_str);
    append_info(info, sizeof(info), "[Format ]%s\n", pFormatCtx->iformat->name);
    append_info(info, sizeof(info), "[Codec ]%s\n", pCodecCtx->codec->name);
    append_info(info, sizeof(info), "[Resolution]%dx%d\n", pCodecCtx->width, pCodecCtx->height);

    fp_yuv = fopen(output_str, "wb+");
    if (fp_yuv == NULL) {
        LOGE("Cannot open output file.\n");
        return -1;
    }

    frame_cnt = 0;
    time_start = clock();

    while (av_read_frame(pFormatCtx, packet) >= 0) {
        if (packet->stream_index == videoindex) {
            // avcodec_decode_video2() is deprecated since FFmpeg 3.1 but still available in 3.x
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                LOGE("Decode Error.\n");
                return -1;
            }
            if (got_picture) {
                output_frame(img_convert_ctx, pCodecCtx, pFrame, pFrameYUV, fp_yuv, frame_cnt);
                frame_cnt++;
            }
        }
        av_packet_unref(packet); // replaces the deprecated av_free_packet()
    }

    // Flush the decoder: an empty packet (data = NULL, size = 0) makes it
    // return the frames it still holds internally
    packet->data = NULL;
    packet->size = 0;
    while (1) {
        ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
        if (ret < 0 || !got_picture)
            break;
        output_frame(img_convert_ctx, pCodecCtx, pFrame, pFrameYUV, fp_yuv, frame_cnt);
        frame_cnt++;
    }

    time_finish = clock();
    // clock() counts processor time in ticks; convert to milliseconds
    time_duration = (double)(time_finish - time_start) * 1000.0 / CLOCKS_PER_SEC;
    append_info(info, sizeof(info), "[Time ]%fms\n", time_duration);
    append_info(info, sizeof(info), "[Count ]%d\n", frame_cnt);
    LOGI("%s", info);

    sws_freeContext(img_convert_ctx);
    fclose(fp_yuv);
    av_packet_free(&packet);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    av_free(out_buffer);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    return 0;
}
```
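The decoder writes each YUV420P plane with a single fwrite. That only works because `av_image_fill_arrays(..., 1)` packs the planes without row padding, so `linesize` equals the plane width. Frames coming straight out of a decoder are usually padded for alignment, with `linesize` larger than the width, and those must be written row by row.

Here is a minimal, FFmpeg-independent C sketch of that. `write_plane` and `write_yuv420p` are hypothetical helpers whose plane pointers and strides are assumed to mirror `AVFrame.data[]` and `AVFrame.linesize[]`.

```c
#include <stdio.h>
#include <stdint.h>
#include <stddef.h>

/* Write `height` rows of `width` bytes from a plane whose rows start
   `linesize` bytes apart; padding bytes at the end of each row are skipped.
   Returns the number of bytes written. */
static size_t write_plane(FILE *fp, const uint8_t *data, int linesize, int width, int height) {
    size_t total = 0;
    for (int row = 0; row < height; row++)
        total += fwrite(data + (size_t)row * linesize, 1, (size_t)width, fp);
    return total;
}

/* Write one YUV420P frame. Chroma planes are half width and half height,
   rounded up for odd dimensions. */
static size_t write_yuv420p(FILE *fp, uint8_t *const data[3], const int linesize[3],
                            int width, int height) {
    int cw = (width + 1) / 2, ch = (height + 1) / 2;
    size_t n = write_plane(fp, data[0], linesize[0], width, height); /* Y */
    n += write_plane(fp, data[1], linesize[1], cw, ch);               /* U */
    n += write_plane(fp, data[2], linesize[2], cw, ch);               /* V */
    return n;
}
```

In the decoder this could be called as `write_yuv420p(fp_yuv, pFrameYUV->data, pFrameYUV->linesize, pCodecCtx->width, pCodecCtx->height)`. It gives the same output as the per-plane fwrite calls when the planes are packed, and it still works if the strides are padded.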

6. Modify Android.mk to configure how the .so file is built.

LOCAL_PATH := $(call my-dir)

# FFmpeg library

include $(CLEAR_VARS)

LOCAL_MODULE := avcodec

LOCAL_SRC_FILES := libavcodec-57.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := avfilter

LOCAL_SRC_FILES := libavfilter-6.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := avformat

LOCAL_SRC_FILES := libavformat-57.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := avutil

LOCAL_SRC_FILES := libavutil-55.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := swresample

LOCAL_SRC_FILES := libswresample-2.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := swscale

LOCAL_SRC_FILES := libswscale-4.so

include $(PREBUILT_SHARED_LIBRARY)

# Program

include $(CLEAR_VARS)

//你所要生成so文件名,生成后是带lib的---libdecoder.so

LOCAL_MODULE := decoder

//生成so库的功能c源文件

LOCAL_SRC_FILES :=simplest_ffmpeg_decoder.c

//其他所需库的头文件路径

LOCAL_C_INCLUDES += $(LOCAL_PATH)/include

LOCAL_LDLIBS := -llog -lz

//这里要配置的是ffmpeg的各个so库文件名

LOCAL_SHARED_LIBRARIES := avcodec avdevice avfilter avformat avutil postproc swresample swscale

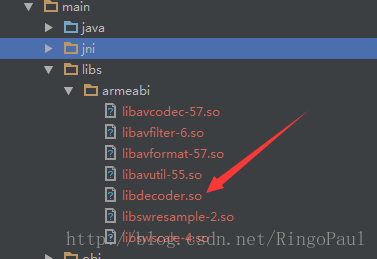

include $(BUILD_SHARED_LIBRARY)7,切换到main路径下,打开命令窗口,执行ndk-build,在main路径下的libs中会生成libdecoder.so

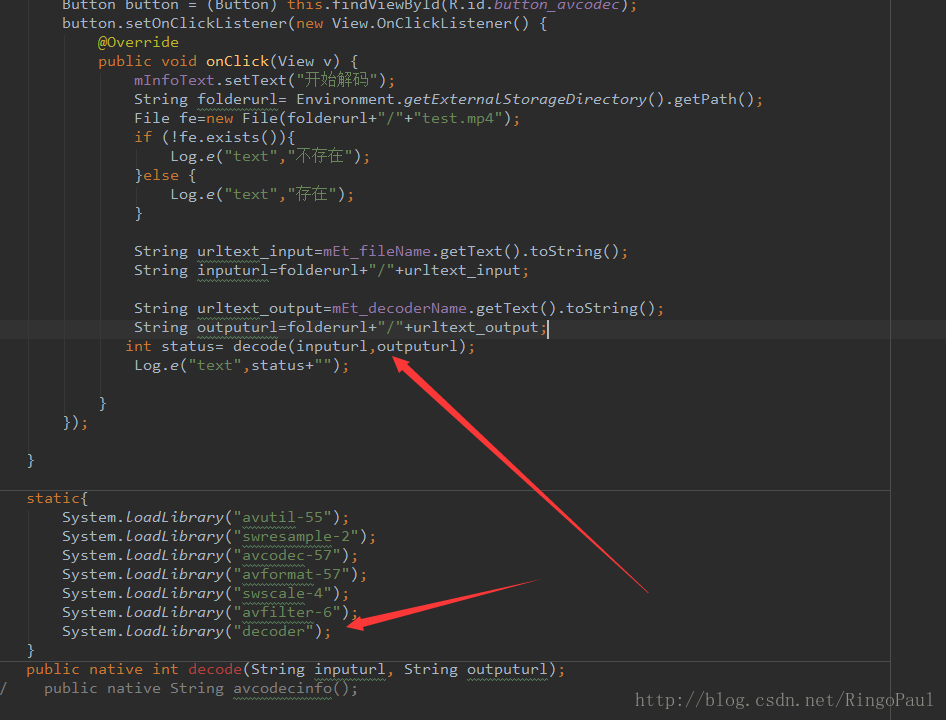

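8. In the Activity, load each of the libraries and call the decode() method implemented in libdecoder.so.

The MP4 file is placed on the SD card in advance. decode() takes two arguments: the path of the file to decode and the path of the output file to generate.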

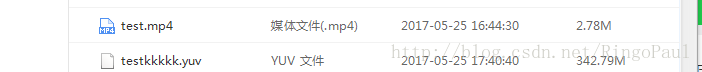
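decode() is bound to the C function by name. The JVM looks for "Java_" + the fully qualified class name (with "." replaced by "_") + "_" + the method name. That is why the C function is called `Java_interest_myndk_MainActivity_decode`, and why the Java side must declare a `native` method named `decode` in class `interest.myndk.MainActivity`.

The C sketch below builds that short-form symbol name. `jni_symbol` and `mangle_append` are hypothetical helpers. Following the JNI specification, an underscore inside a name is escaped as `_1`. The sketch only handles ASCII letters, digits, ".", "/" and "_", and rejects anything else.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Append the mangled form of s to out at offset *len:
   '.' or '/' -> "_", '_' -> "_1", ASCII letters/digits unchanged.
   Returns -1 on overflow or on a character this sketch does not handle. */
static int mangle_append(char *out, size_t cap, size_t *len, const char *s) {
    for (; *s; s++) {
        const char *piece;
        char one[2] = { *s, '\0' };
        if (*s == '.' || *s == '/') piece = "_";
        else if (*s == '_') piece = "_1";
        else if (isalnum((unsigned char)*s)) piece = one;
        else return -1; /* ';', '[', non-ASCII, ... are not handled here */
        size_t n = strlen(piece);
        if (*len + n >= cap) return -1; /* keep room for the terminating NUL */
        memcpy(out + *len, piece, n);
        *len += n;
        out[*len] = '\0';
    }
    return 0;
}

/* Short JNI symbol name: Java_<mangled class>_<mangled method>. */
static int jni_symbol(char *out, size_t cap, const char *cls, const char *method) {
    size_t len = strlen("Java_");
    if (cap <= len) return -1;
    memcpy(out, "Java_", len + 1);
    if (mangle_append(out, cap, &len, cls) != 0) return -1;
    if (len + 1 >= cap) return -1;
    out[len++] = '_';
    out[len] = '\0';
    return mangle_append(out, cap, &len, method);
}
```

For example, `jni_symbol(buf, sizeof buf, "interest.myndk.MainActivity", "decode")` produces `Java_interest_myndk_MainActivity_decode`. That matches the exported function in simplest_ffmpeg_decoder.c and the library loaded with `System.loadLibrary("decoder")`.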

After decoding, the raw YUV file is generated at the output path.
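One quick way to sanity-check the output is its size. A raw YUV420P file is just frames laid back to back. Each frame is w*h bytes of Y plus two chroma planes of ceil(w/2)*ceil(h/2) bytes each, which should match what `av_image_get_buffer_size(AV_PIX_FMT_YUV420P, w, h, 1)` allocates in the decoder. The file size divided by the frame size should therefore equal the number of frames the decoder counted in `frame_cnt`.

A minimal C sketch, assuming you know the resolution of the decoded video. `yuv420p_frame_size` and `yuv420p_frame_count` are hypothetical helpers.

```c
#include <stdio.h>

/* Bytes in one tightly packed YUV420P frame: a full-size Y plane plus
   two chroma planes of half width and half height (rounded up). */
static long yuv420p_frame_size(int width, int height) {
    long luma = (long)width * height;
    long chroma = (long)((width + 1) / 2) * ((height + 1) / 2);
    return luma + 2 * chroma;
}

/* Number of whole frames in a raw YUV420P file, or -1 if the file cannot be
   read or its size is not a multiple of the frame size. */
static long yuv420p_frame_count(const char *path, int width, int height) {
    FILE *fp = fopen(path, "rb");
    if (fp == NULL) return -1;
    if (fseek(fp, 0, SEEK_END) != 0) { fclose(fp); return -1; }
    long size = ftell(fp);
    fclose(fp);
    long frame = yuv420p_frame_size(width, height);
    if (size < 0 || frame <= 0 || size % frame != 0) return -1;
    return size / frame;
}
```

For example, a 1920x1080 video gives 1920*1080 + 2*(960*540) = 3,110,400 bytes per frame, so a 311,040,000-byte output file holds 100 frames.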
