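When Haystack builds an index on Elasticsearch 7, the default analyzer is snowball. It works well for English but handles Chinese word segmentation poorly, so it needs to be replaced with a Chinese analyzer.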

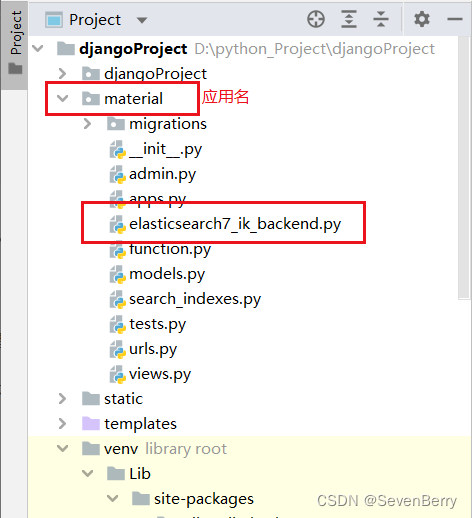

本项目结构如下图所示:

1 Subclass and override the Elasticsearch search engine

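In the material app, create a file named elasticsearch7_ik_backend.py. In it, subclass Elasticsearch7SearchBackend (the backend) and Elasticsearch7SearchEngine (the search engine), and override the analyzer settings used when the index is built: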

    from haystack.backends.elasticsearch7_backend import (
        Elasticsearch7SearchBackend,
        Elasticsearch7SearchEngine,
    )

    # Field mapping that uses the IK analyzers: fine-grained segmentation at
    # index time, coarser segmentation at search time.
    DEFAULT_FIELD_MAPPING = {
        "type": "text",
        "analyzer": "ik_max_word",
        "search_analyzer": "ik_smart",
    }


    class Elasticsearc7IkSearchBackend(Elasticsearch7SearchBackend):
        def __init__(self, *args, **kwargs):
            # Register a custom analyzer backed by the IK tokenizer before
            # the parent constructor runs.
            self.DEFAULT_SETTINGS["settings"]["analysis"]["analyzer"]["ik_analyzer"] = {
                "type": "custom",
                "tokenizer": "ik_max_word",
            }
            super().__init__(*args, **kwargs)


    class Elasticsearch7IkSearchEngine(Elasticsearch7SearchEngine):
        backend = Elasticsearc7IkSearchBackend
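The module-level DEFAULT_FIELD_MAPPING above only has an effect if something actually reads it. A possible alternative, sketched here under assumptions, is to post-process the mapping that the parent's build_schema returns (the excerpt of the original file at the end of this post shows it returning `(content_field_name, mapping)`). The names `IK_FIELD_MAPPING` and `apply_ik_mapping` and the sample field names are hypothetical, and the build_schema override appears only as a comment because it has not been checked against every Haystack version.

```python
import copy

# Same values as DEFAULT_FIELD_MAPPING in this section (name is hypothetical).
IK_FIELD_MAPPING = {
    "type": "text",
    "analyzer": "ik_max_word",
    "search_analyzer": "ik_smart",
}


def apply_ik_mapping(mapping, old_analyzer="snowball"):
    """Return a copy of `mapping` in which text fields that use
    `old_analyzer` are switched to the IK analyzers.

    Fields of other types, and text fields with a different analyzer
    (for example the n-gram analyzers), are left untouched.
    """
    patched = copy.deepcopy(mapping)
    for field_mapping in patched.values():
        if (
            field_mapping.get("type") == "text"
            and field_mapping.get("analyzer") == old_analyzer
        ):
            # update() keeps any extra keys the field already carries.
            field_mapping.update(IK_FIELD_MAPPING)
    return patched


# Hypothetical use inside the subclass defined in this section (not run here):
#
# class Elasticsearc7IkSearchBackend(Elasticsearch7SearchBackend):
#     def build_schema(self, fields):
#         content_field_name, mapping = super().build_schema(fields)
#         return content_field_name, apply_ik_mapping(mapping)
```

Because the helper works on a plain dict, it can be tried out without a running Elasticsearch instance, and it never touches the files under site-packages.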

2 Change the backend engine

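In the project's settings.py, change the Haystack backend engine from the original Elasticsearch7SearchEngine to the newly defined Elasticsearch7IkSearchEngine (the engine class, whose backend attribute points to Elasticsearc7IkSearchBackend):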

    HAYSTACK_CONNECTIONS = {
        'default': {
            # app name.module name.engine class name
            'ENGINE': 'material.elasticsearch7_ik_backend.Elasticsearch7IkSearchEngine',
            'URL': 'http://127.0.0.1:9200/',
            'INDEX_NAME': 'template_v3.0',  # name of the index
        },
    }

3 Rebuild the index

Rebuild the index with the backend engine that now uses the new analyzer:

    python manage.py rebuild_index

Additional notes
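After the rebuild, it can be worth confirming which analyzer each field actually received. The sketch below assumes the Elasticsearch 7 response shape of `GET /<index>/_mapping` (index name, then `mappings`, then `properties`) and reuses the URL and index name from the settings above. The helper names `fetch_mapping` and `field_analyzers` are hypothetical.

```python
import json
import urllib.request


def fetch_mapping(base_url, index_name):
    """Fetch the mapping of `index_name` through the Get mapping API."""
    url = f"{base_url.rstrip('/')}/{index_name}/_mapping"
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def field_analyzers(mapping_response, index_name):
    """Map each text field to its (analyzer, search_analyzer) pair.

    A value of None means the key is absent from the mapping, in which
    case Elasticsearch falls back to its default for that setting.
    """
    properties = mapping_response[index_name]["mappings"].get("properties", {})
    return {
        name: (spec.get("analyzer"), spec.get("search_analyzer"))
        for name, spec in properties.items()
        if spec.get("type") == "text"
    }


# Example call against a local node (requires Elasticsearch to be running):
#
# response = fetch_mapping("http://127.0.0.1:9200/", "template_v3.0")
# for name, pair in field_analyzers(response, "template_v3.0").items():
#     print(name, pair)
```

If a text field still reports snowball here, the IK mapping did not reach the index.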

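Haystack originally loads elasticsearch7_backend.py from the ...\venv\Lib\site-packages\haystack\backends folder; opening that file shows the default configuration of the elasticsearch7 engine.

If the fields of an index built with the method above still use the snowball analyzer, change DEFAULT_FIELD_MAPPING in the original elasticsearch7_backend.py to the ik analyzer as well (this may be a version issue):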

    DEFAULT_FIELD_MAPPING = {
        "type": "text",
        "analyzer": "ik_max_word",
        "search_analyzer": "ik_smart",
    }

Appendix: original content of elasticsearch7_backend.py (the part that needs to be modified)

    DEFAULT_FIELD_MAPPING = {
        "type": "text",
        "analyzer": "ik_max_word",
        "search_analyzer": "ik_smart",
        # "analyzer": "snowball",
    }

    class Elasticsearch7SearchBackend(ElasticsearchSearchBackend):
        # Settings to add an n-gram & edge n-gram analyzer.
        DEFAULT_SETTINGS = {
            "settings": {
                "index": {
                    "max_ngram_diff": 2,
                },
                "analysis": {
                    "analyzer": {
                        "ngram_analyzer": {
                            "tokenizer": "standard",
                            "filter": [
                                "haystack_ngram",
                                "lowercase",
                            ],
                        },
                        "edgengram_analyzer": {
                            "tokenizer": "standard",
                            "filter": [
                                "haystack_edgengram",
                                "lowercase",
                            ],
                        },
                    },
                    "filter": {
                        "haystack_ngram": {
                            "type": "ngram",
                            "min_gram": 3,
                            "max_gram": 4,
                        },
                        "haystack_edgengram": {
                            "type": "edge_ngram",
                            "min_gram": 2,
                            "max_gram": 15,
                        },
                    },
                },
            },
        }

        def __init__(self, connection_alias, **connection_options):
            super().__init__(connection_alias, **connection_options)
            self.content_field_name = None

        def build_search_kwargs(
            self,
            query_string,
            sort_by=None,
            start_offset=0,
            end_offset=None,
            fields="",
            highlight=False,
            facets=None,
            date_facets=None,
            query_facets=None,
            narrow_queries=None,
            spelling_query=None,
            within=None,
            dwithin=None,
            distance_point=None,
            models=None,
            limit_to_registered_models=None,
            result_class=None,
            **extra_kwargs
        ):
            index = haystack.connections[self.connection_alias].get_unified_index()
            content_field = index.document_field
            ......
            return kwargs

        def _build_search_query_dwithin(self, dwithin):
            ......

        def _build_search_query_within(self, within):
            ......

        def _process_hits(self, raw_results):
            ......

        def _process_results(
            self,
            raw_results,
            highlight=False,
            result_class=None,
            distance_point=None,
            geo_sort=False,
        ):
            ......

        def _get_common_mapping(self):
            return {
                DJANGO_CT: {
                    "type": "keyword",
                },
                DJANGO_ID: {
                    "type": "keyword",
                },
            }

        def build_schema(self, fields):
            ......
            return (content_field_name, mapping)

    class Elasticsearch7SearchQuery(ElasticsearchSearchQuery):
        def add_field_facet(self, field, **options):
            self.facets[field] = options.copy()


    class Elasticsearch7SearchEngine(BaseEngine):
        backend = Elasticsearch7SearchBackend
        query = Elasticsearch7SearchQuery
