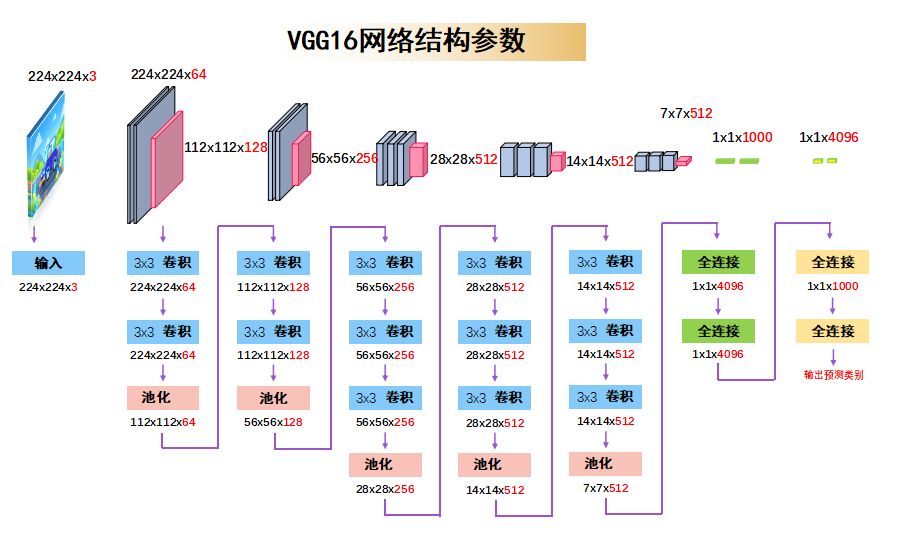

Network overview: the architecture is shown below (if batch normalization is left out of the code, accuracy stays low)

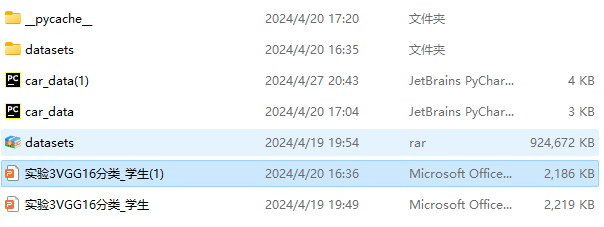

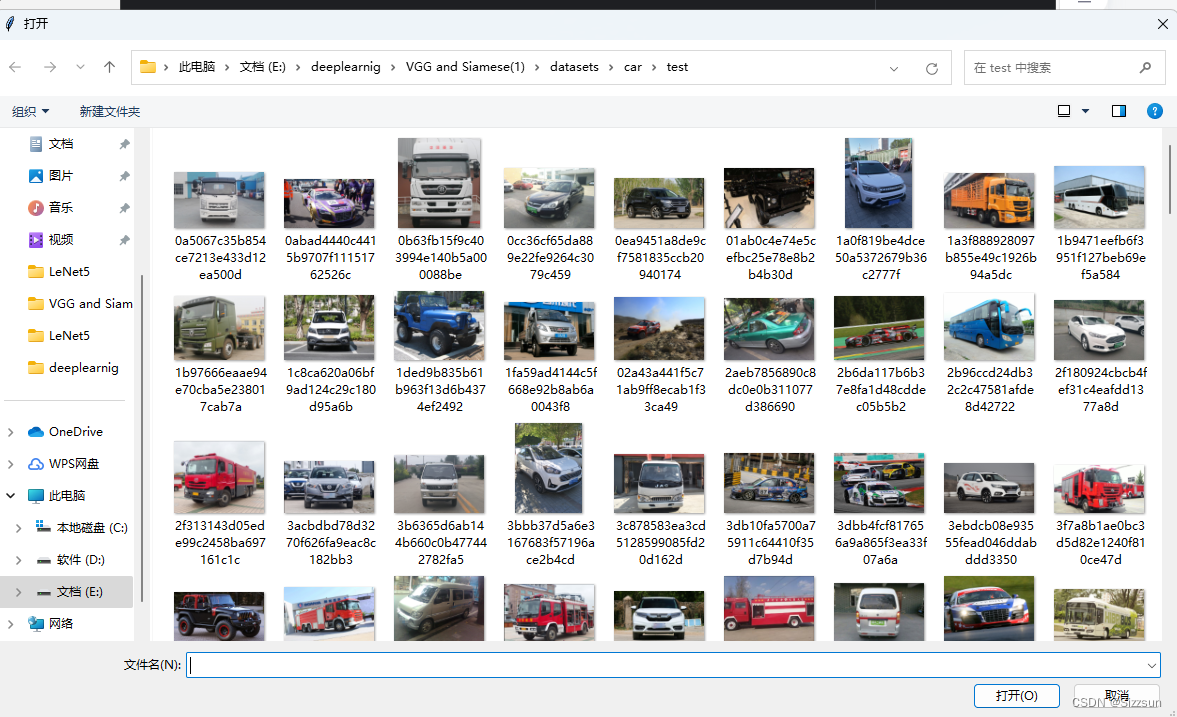

Folder contents

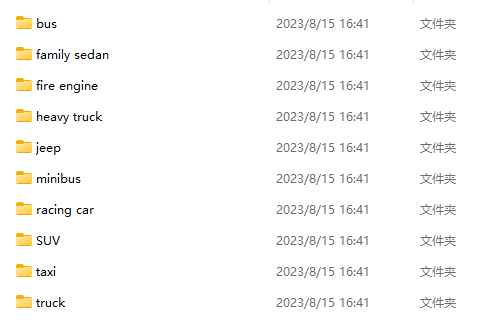

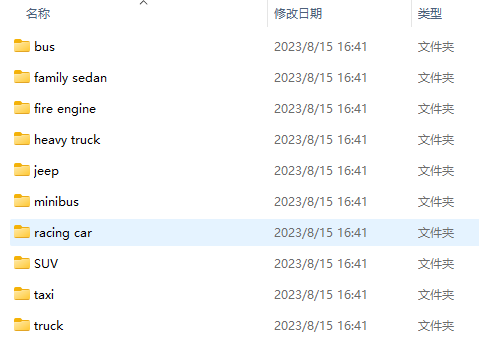

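The datasets-car folder contains three subfolders. The train and val folders share the same internal layout, while the test folder consists of plain images only.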
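The layout described above can be sanity-checked before training. The following is a minimal stdlib-only sketch, assuming train and val each hold one subfolder per class (which is what the dataset class in this post iterates over). The helper name `count_images_per_class`, the extension list, and the example path are illustrative assumptions, not part of the original project.

```python
import os

# Assumed set of image extensions; adjust to match the actual dataset.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images_per_class(split_dir):
    """Return {class_name: image_count} for a split laid out as split_dir/<class>/<image>."""
    counts = {}
    for class_name in sorted(os.listdir(split_dir)):
        class_path = os.path.join(split_dir, class_name)
        if not os.path.isdir(class_path):
            continue  # skip stray files at the top level
        counts[class_name] = sum(
            1
            for name in os.listdir(class_path)
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS
        )
    return counts


if __name__ == "__main__":
    # Hypothetical path; point it at the real train or val folder.
    split = "./datasets/car/train"
    if os.path.isdir(split):
        for name, n in count_images_per_class(split).items():
            print(f"{name}: {n}")
```

A class folder that reports zero images, or a folder name that does not match the label dictionary, is worth fixing before a long training run.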

Program 1, car_data_origin:

from torch.utils.data import DataLoader, Dataset
from torchvision import transforms as T
import os
from PIL import Image


class Car(Dataset):
    def __init__(self, root, transforms=None):
        imgs = []
        labels = []
        # Dictionary mapping each vehicle class (folder name) to its integer label
        class_labels = {
            "truck": 0,
            "taxi": 1,
            "minibus": 2,
            "fire engine": 3,
            "racing car": 4,
            "SUV": 5,
            "bus": 6,
            "jeep": 7,
            "family sedan": 8,
            "heavy truck": 9
        }
        # Walk root/<class_name>/<image> and collect image paths with their labels
        for class_name in os.listdir(root):
            class_path = os.path.join(root, class_name)
            if os.path.isdir(class_path):
                label = class_labels.get(class_name, None)
                if label is not None:  # folders with unknown names are skipped
                    for img_name in os.listdir(class_path):
                        img_path = os.path.join(class_path, img_name)
                        if os.path.isfile(img_path):
                            imgs.append(img_path)
                            labels.append(label)
        self.imgs = imgs
        self.labels = labels
        self.transforms = transforms

    def __getitem__(self, index):
        img_path = self.imgs[index]
        label = self.labels[index]
        data = Image.open(img_path)
        if data.mode != "RGB":
            data = data.convert("RGB")
        if self.transforms is not None:
            data = self.transforms(data)
        return data, label

    def __len__(self):
        return len(self.imgs)


if __name__ == "__main__":
    root = "./datasets/car/train"
    train_dataset = Car(root)
    train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)
    for data, label in train_dataset:
        # No transform is set here, so data is a PIL image; size is an
        # attribute holding a (width, height) tuple, so no parentheses are needed
        print(data.size)

Program 2, VGG16 (a batch normalization layer is added after every convolution and before the activation function):

import torch
import torch.nn as nn


class VGG16(nn.Module):
    def __init__(self):
        super(VGG16, self).__init__()
        self.conv_layers = nn.Sequential(
            # Block 1: convolution layers and pooling layer
            nn.Conv2d(3, 64, kernel_size=3, padding=1),
            nn.BatchNorm2d(64),  # batch normalization added
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 2: convolution layers and pooling layer
            nn.Conv2d(64, 128, kernel_size=3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, kernel_size=3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 3: convolution layers and pooling layer
            nn.Conv2d(128, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 4: convolution layers and pooling layer
            nn.Conv2d(256, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 5: convolution layers and pooling layer
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        self.fc_layers = nn.Sequential(
            # Fully connected layers
            nn.Linear(512 * 7 * 7, 4096),
            nn.BatchNorm1d(4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.BatchNorm1d(4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 10)  # output layer: 10-class classification
        )

    def forward(self, x):
        x = self.conv_layers(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 512 * 7 * 7)
        x = self.fc_layers(x)
        return x
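The first fully connected layer expects `512 * 7 * 7` inputs, which only holds for 224x224 images. As a rough check, the sketch below walks the same layer layout in plain Python using the standard output-size formula floor((n + 2p - k) / s) + 1. The names `VGG16_CFG`, `conv_out`, and `feature_shape` are illustrative and do not appear in the original code.

```python
# VGG16 feature-extractor layout: an int is a 3x3 conv (padding=1) with that many
# output channels, "M" is a 2x2 max pool with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pool layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def feature_shape(input_size=224, cfg=VGG16_CFG):
    """Return (channels, height, width) after the convolutional stack."""
    channels, size = 3, input_size
    for item in cfg:
        if item == "M":
            size = conv_out(size, kernel=2, stride=2)
        else:
            size = conv_out(size, kernel=3, stride=1, padding=1)
            channels = item
    return channels, size, size


channels, h, w = feature_shape(224)
print(channels, h, w, channels * h * w)  # 512 7 7 25088
```

The 3x3 convolutions with padding 1 keep the spatial size, and each of the five pools halves it (224, 112, 56, 28, 14, 7). Feeding any other input size would change the flattened length and break the first `nn.Linear`, which is why the images are resized to 224x224 before they reach the model.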

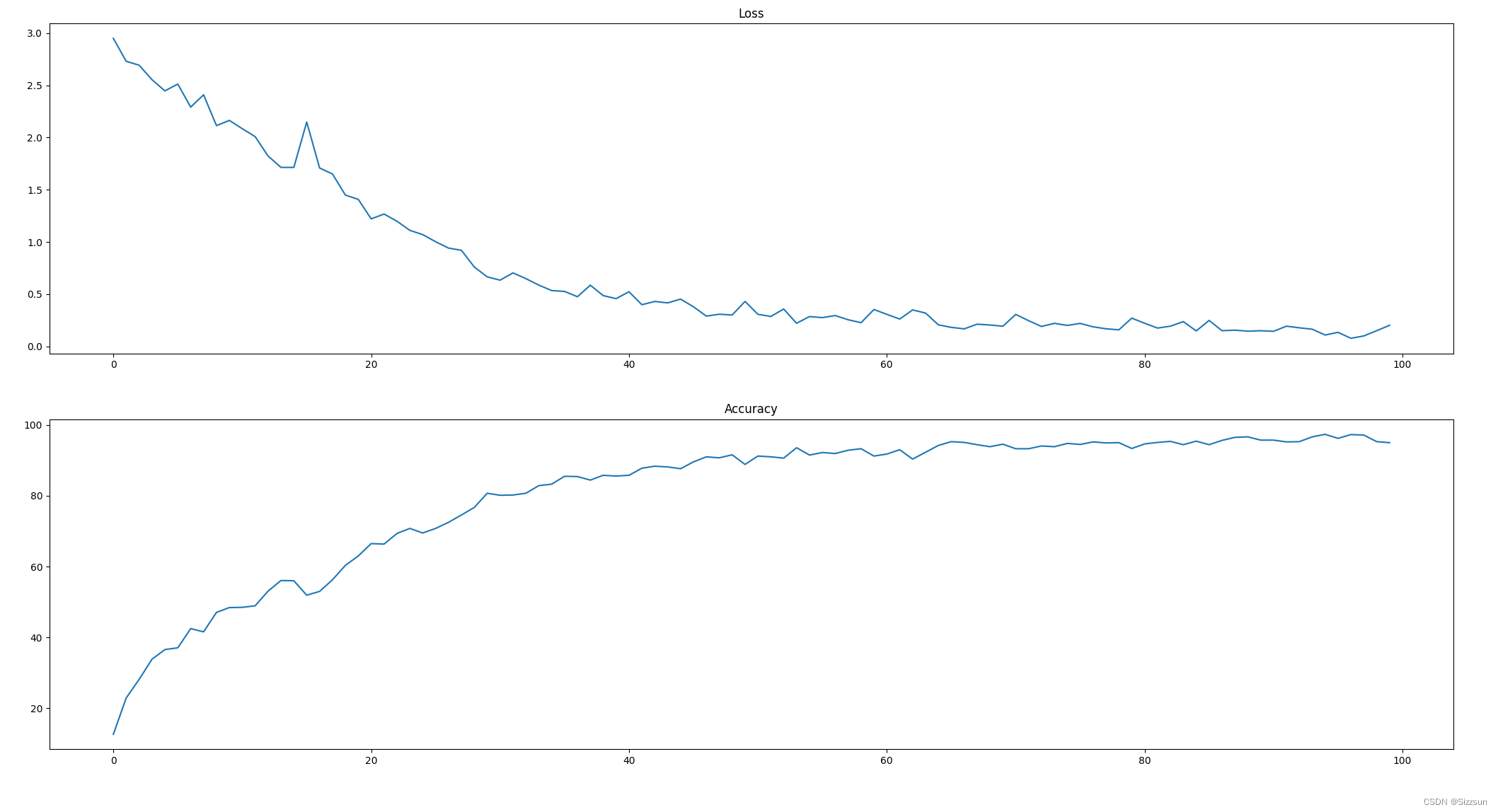

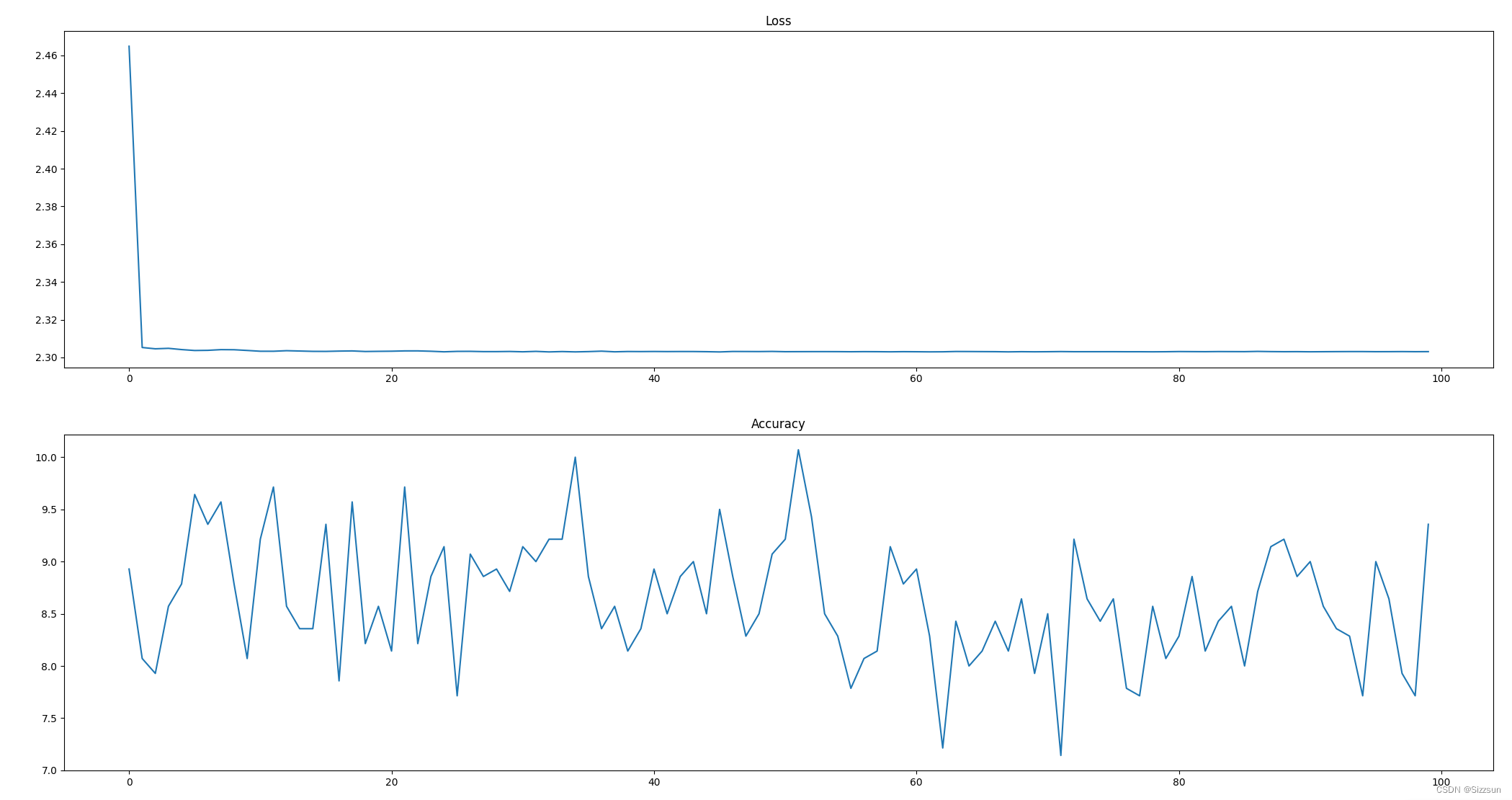

Results:

Program 3, Train_VGG16:

import os
import time
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import transforms
from VGG16 import VGG16
from car_data_origin import Car
import matplotlib.pyplot as plt


def main():
    # Data preprocessing and loading
    pipline_train = transforms.Compose([
        transforms.RandomHorizontalFlip(),
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
    ])
    pipline_test = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
    ])
    # Note: the val folder is used as the test set in this script
    train_data = Car(root="E:/deeplearnig/VGG and Siamese(1)/datasets/car/train", transforms=pipline_train)
    test_data = Car(root="E:/deeplearnig/VGG and Siamese(1)/datasets/car/val", transforms=pipline_test)
    trainloader = DataLoader(train_data, batch_size=8, shuffle=True)
    testloader = DataLoader(test_data, batch_size=4, shuffle=False)

    # Create the model and move it to the GPU if one is available
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = VGG16().to(device)

    # Loss function and optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    epochs = 100
    Loss = []
    Accuracy = []
    for epoch in range(1, epochs + 1):
        start_time = time.time()
        train_loss, train_acc = train_runner(model, device, trainloader, optimizer, criterion, epoch)
        test_acc, test_loss = test_runner(model, device, testloader, criterion)
        end_time = time.time()
        Loss.append(train_loss)
        Accuracy.append(train_acc)
        print(f"Epoch {epoch}/{epochs}, Time: {end_time - start_time:.2f}s")
        print(f"Train Accuracy: {train_acc:.2f}%, Train Average Loss: {train_loss:.6f}")
        print(f"Test Accuracy: {test_acc:.2f}%, Test Average Loss: {test_loss:.6f}\n")
    print('Finished Training')

    # Save the model weights
    savePath = "../models/"
    if not os.path.exists(savePath):
        os.mkdir(savePath)
    torch.save(model.state_dict(), savePath + 'VGG16_car.pth')
    print("Model saved.")

    # Plot the training loss and training accuracy per epoch
    plt.subplot(2, 1, 1)
    plt.plot(Loss)
    plt.title('Loss')
    plt.subplot(2, 1, 2)
    plt.plot(Accuracy)
    plt.title('Accuracy')
    plt.show()


def train_runner(model, device, trainloader, optimizer, criterion, epoch):
    # Train the model for one epoch
    model.train()
    total_loss = 0.0
    correct = 0
    total = 0
    for batch_idx, (inputs, targets) in enumerate(trainloader):
        inputs, targets = inputs.to(device), targets.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, targets)
        loss.backward()
        optimizer.step()
        total_loss += loss.item()
        _, predicted = outputs.max(1)
        total += targets.size(0)
        correct += predicted.eq(targets).sum().item()
    avg_loss = total_loss / len(trainloader)  # mean of the per-batch losses
    accuracy = 100. * correct / total
    return avg_loss, accuracy


def test_runner(model, device, testloader, criterion):
    # Validate the model
    model.eval()
    correct = 0
    total = 0
    test_loss = 0.0
    with torch.no_grad():
        for data, target in testloader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            test_loss += criterion(output, target).item()
            _, predicted = output.max(1)
            total += target.size(0)
            correct += predicted.eq(target).sum().item()
    avg_loss = test_loss / len(testloader)
    accuracy = 100. * correct / total
    # Note the return order: accuracy first, then loss
    return accuracy, avg_loss


if __name__ == '__main__':
    main()
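Both preprocessing pipelines end with `ToTensor` and `Normalize`. `ToTensor` scales 8-bit pixel values to the range [0, 1], and `Normalize` then computes (x - mean) / std for each channel. The sketch below reproduces that arithmetic for a single pixel in plain Python; the function name `normalize_pixel` is illustrative, and the mean and std values are the ones passed to `Normalize` in the training script.

```python
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb_uint8, mean=MEAN, std=STD):
    """Mimic ToTensor + Normalize for a single RGB pixel given as 0-255 ints."""
    # ToTensor: map [0, 255] to [0.0, 1.0]
    scaled = [v / 255.0 for v in rgb_uint8]
    # Normalize: subtract the channel mean, divide by the channel std
    return [(x - m) / s for x, m, s in zip(scaled, mean, std)]


print(normalize_pixel((255, 255, 255)))  # white pixel
print(normalize_pixel((0, 0, 0)))        # black pixel
```

Any image passed to the trained model later has to go through the same resize and normalization, otherwise the input statistics no longer match what the network saw during training.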

Results:

C:\Users\Administrator\.conda\envs\pytorch\python.exe "E:\deeplearnig\VGG and Siamese(1)\Train_VGG16.py"

Epoch 1/100, Time: 44.18s

Train Accuracy: 12.64%, Train Average Loss: 2.950671

Test Accuracy: 17.00%, Test Average Loss: 2.664477

Epoch 2/100, Time: 42.79s

Train Accuracy: 22.93%, Train Average Loss: 2.730200

Test Accuracy: 32.00%, Test Average Loss: 2.620904

Epoch 3/100, Time: 43.07s

Train Accuracy: 28.14%, Train Average Loss: 2.692980

Test Accuracy: 27.00%, Test Average Loss: 2.449761

Epoch 4/100, Time: 42.63s

Train Accuracy: 33.86%, Train Average Loss: 2.554127

Test Accuracy: 30.50%, Test Average Loss: 2.129170

Epoch 5/100, Time: 42.90s

Train Accuracy: 36.57%, Train Average Loss: 2.447231

Test Accuracy: 40.00%, Test Average Loss: 1.961281

Epoch 6/100, Time: 43.05s

Train Accuracy: 37.07%, Train Average Loss: 2.512312

Test Accuracy: 50.50%, Test Average Loss: 1.510240

Epoch 7/100, Time: 42.84s

Train Accuracy: 42.50%, Train Average Loss: 2.291613

Test Accuracy: 59.00%, Test Average Loss: 1.390003

Epoch 8/100, Time: 42.55s

Train Accuracy: 41.57%, Train Average Loss: 2.409683

Test Accuracy: 41.00%, Test Average Loss: 3.413687

Epoch 9/100, Time: 42.38s

Train Accuracy: 47.07%, Train Average Loss: 2.114320

Test Accuracy: 57.00%, Test Average Loss: 2.988450

Epoch 10/100, Time: 42.89s

Train Accuracy: 48.43%, Train Average Loss: 2.164377

Test Accuracy: 54.00%, Test Average Loss: 3.032696

Epoch 11/100, Time: 43.06s

Train Accuracy: 48.50%, Train Average Loss: 2.084977

Test Accuracy: 46.00%, Test Average Loss: 2.505732

Epoch 12/100, Time: 43.27s

Train Accuracy: 48.93%, Train Average Loss: 2.008778

Test Accuracy: 56.00%, Test Average Loss: 1.591382

Epoch 13/100, Time: 42.66s

Train Accuracy: 53.07%, Train Average Loss: 1.823400

Test Accuracy: 59.00%, Test Average Loss: 1.575225

Epoch 14/100, Time: 42.58s

Train Accuracy: 56.07%, Train Average Loss: 1.714480

Test Accuracy: 59.50%, Test Average Loss: 1.320819

Epoch 15/100, Time: 43.01s

Train Accuracy: 56.00%, Train Average Loss: 1.713857

Test Accuracy: 59.00%, Test Average Loss: 1.701987

Epoch 16/100, Time: 42.74s

Train Accuracy: 51.93%, Train Average Loss: 2.148327

Test Accuracy: 43.50%, Test Average Loss: 2.414334

Epoch 17/100, Time: 43.49s

Train Accuracy: 53.00%, Train Average Loss: 1.708398

Test Accuracy: 60.50%, Test Average Loss: 1.448982

Epoch 18/100, Time: 43.62s

Train Accuracy: 56.29%, Train Average Loss: 1.650544

Test Accuracy: 51.50%, Test Average Loss: 2.163751

Epoch 19/100, Time: 43.37s

Train Accuracy: 60.36%, Train Average Loss: 1.448843

Test Accuracy: 68.00%, Test Average Loss: 1.161868

Epoch 20/100, Time: 43.07s

Train Accuracy: 63.00%, Train Average Loss: 1.407892

Test Accuracy: 60.00%, Test Average Loss: 1.858408

Epoch 21/100, Time: 47.64s

Train Accuracy: 66.50%, Train Average Loss: 1.220983

Test Accuracy: 55.00%, Test Average Loss: 4.102234

Epoch 22/100, Time: 43.61s

Train Accuracy: 66.36%, Train Average Loss: 1.267141

Test Accuracy: 71.00%, Test Average Loss: 1.201189

Epoch 23/100, Time: 43.05s

Train Accuracy: 69.36%, Train Average Loss: 1.198333

Test Accuracy: 62.50%, Test Average Loss: 1.538909

Epoch 24/100, Time: 42.85s

Train Accuracy: 70.79%, Train Average Loss: 1.110783

Test Accuracy: 69.00%, Test Average Loss: 1.423777

Epoch 25/100, Time: 43.27s

Train Accuracy: 69.50%, Train Average Loss: 1.070163

Test Accuracy: 62.50%, Test Average Loss: 1.775788

Epoch 26/100, Time: 45.39s

Train Accuracy: 70.79%, Train Average Loss: 1.001934

Test Accuracy: 66.00%, Test Average Loss: 1.426365

Epoch 27/100, Time: 42.90s

Train Accuracy: 72.50%, Train Average Loss: 0.941015

Test Accuracy: 63.50%, Test Average Loss: 1.966777

Epoch 28/100, Time: 42.77s

Train Accuracy: 74.57%, Train Average Loss: 0.919872

Test Accuracy: 67.50%, Test Average Loss: 1.406872

Epoch 29/100, Time: 42.82s

Train Accuracy: 76.71%, Train Average Loss: 0.759704

Test Accuracy: 67.00%, Test Average Loss: 1.410608

Epoch 30/100, Time: 42.87s

Train Accuracy: 80.71%, Train Average Loss: 0.665533

Test Accuracy: 67.50%, Test Average Loss: 1.323388

Epoch 31/100, Time: 43.18s

Train Accuracy: 80.14%, Train Average Loss: 0.633734

Test Accuracy: 67.50%, Test Average Loss: 1.191376

Epoch 32/100, Time: 42.54s

Train Accuracy: 80.21%, Train Average Loss: 0.703288

Test Accuracy: 66.50%, Test Average Loss: 1.574172

Epoch 33/100, Time: 43.16s

Train Accuracy: 80.71%, Train Average Loss: 0.648511

Test Accuracy: 68.50%, Test Average Loss: 1.253326

Epoch 34/100, Time: 42.75s

Train Accuracy: 82.86%, Train Average Loss: 0.586593

Test Accuracy: 66.00%, Test Average Loss: 1.300445

Epoch 35/100, Time: 43.32s

Train Accuracy: 83.29%, Train Average Loss: 0.534224

Test Accuracy: 68.50%, Test Average Loss: 1.600032

Epoch 36/100, Time: 43.07s

Train Accuracy: 85.50%, Train Average Loss: 0.525826

Test Accuracy: 68.50%, Test Average Loss: 1.450944

Epoch 37/100, Time: 42.20s

Train Accuracy: 85.43%, Train Average Loss: 0.475364

Test Accuracy: 72.00%, Test Average Loss: 1.250811

Epoch 38/100, Time: 42.45s

Train Accuracy: 84.43%, Train Average Loss: 0.585558

Test Accuracy: 67.50%, Test Average Loss: 1.663046

Epoch 39/100, Time: 42.30s

Train Accuracy: 85.79%, Train Average Loss: 0.485690

Test Accuracy: 68.50%, Test Average Loss: 1.362564

Epoch 40/100, Time: 42.59s

Train Accuracy: 85.57%, Train Average Loss: 0.457015

Test Accuracy: 71.00%, Test Average Loss: 1.482029

Epoch 41/100, Time: 42.82s

Train Accuracy: 85.79%, Train Average Loss: 0.522534

Test Accuracy: 72.00%, Test Average Loss: 1.352253

Epoch 42/100, Time: 42.80s

Train Accuracy: 87.79%, Train Average Loss: 0.398443

Test Accuracy: 72.00%, Test Average Loss: 1.256099

Epoch 43/100, Time: 42.44s

Train Accuracy: 88.36%, Train Average Loss: 0.429865

Test Accuracy: 74.50%, Test Average Loss: 1.474210

Epoch 44/100, Time: 42.87s

Train Accuracy: 88.14%, Train Average Loss: 0.416003

Test Accuracy: 73.50%, Test Average Loss: 1.274462

Epoch 45/100, Time: 43.02s

Train Accuracy: 87.64%, Train Average Loss: 0.452182

Test Accuracy: 74.00%, Test Average Loss: 1.450168

Epoch 46/100, Time: 43.64s

Train Accuracy: 89.57%, Train Average Loss: 0.378843

Test Accuracy: 72.00%, Test Average Loss: 1.351240

Epoch 47/100, Time: 43.05s

Train Accuracy: 91.00%, Train Average Loss: 0.289240

Test Accuracy: 72.50%, Test Average Loss: 1.386482

Epoch 48/100, Time: 42.51s

Train Accuracy: 90.71%, Train Average Loss: 0.307707

Test Accuracy: 78.00%, Test Average Loss: 1.279066

Epoch 49/100, Time: 42.66s

Train Accuracy: 91.57%, Train Average Loss: 0.299976

Test Accuracy: 74.00%, Test Average Loss: 1.304493

Epoch 50/100, Time: 42.62s

Train Accuracy: 88.86%, Train Average Loss: 0.429909

Test Accuracy: 73.00%, Test Average Loss: 1.382795

Epoch 51/100, Time: 42.46s

Train Accuracy: 91.21%, Train Average Loss: 0.306615

Test Accuracy: 76.00%, Test Average Loss: 1.309700

Epoch 52/100, Time: 42.42s

Train Accuracy: 91.00%, Train Average Loss: 0.286100

Test Accuracy: 72.00%, Test Average Loss: 1.635651

Epoch 53/100, Time: 42.21s

Train Accuracy: 90.64%, Train Average Loss: 0.357244

Test Accuracy: 72.50%, Test Average Loss: 1.313712

Epoch 54/100, Time: 42.36s

Train Accuracy: 93.57%, Train Average Loss: 0.220612

Test Accuracy: 76.00%, Test Average Loss: 1.292393

Epoch 55/100, Time: 42.95s

Train Accuracy: 91.50%, Train Average Loss: 0.284906

Test Accuracy: 73.00%, Test Average Loss: 1.575787

Epoch 56/100, Time: 42.63s

Train Accuracy: 92.21%, Train Average Loss: 0.275172

Test Accuracy: 74.00%, Test Average Loss: 1.624438

Epoch 57/100, Time: 43.36s

Train Accuracy: 91.93%, Train Average Loss: 0.294722

Test Accuracy: 71.00%, Test Average Loss: 1.263066

Epoch 58/100, Time: 42.36s

Train Accuracy: 92.86%, Train Average Loss: 0.254413

Test Accuracy: 77.00%, Test Average Loss: 1.346407

Epoch 59/100, Time: 42.28s

Train Accuracy: 93.29%, Train Average Loss: 0.226384

Test Accuracy: 74.00%, Test Average Loss: 1.384903

Epoch 60/100, Time: 44.00s

Train Accuracy: 91.21%, Train Average Loss: 0.352764

Test Accuracy: 76.00%, Test Average Loss: 1.272360

Epoch 61/100, Time: 54.58s

Train Accuracy: 91.79%, Train Average Loss: 0.306122

Test Accuracy: 76.00%, Test Average Loss: 1.465147

Epoch 62/100, Time: 43.22s

Train Accuracy: 93.00%, Train Average Loss: 0.261215

Test Accuracy: 75.00%, Test Average Loss: 1.587256

Epoch 63/100, Time: 42.37s

Train Accuracy: 90.36%, Train Average Loss: 0.348683

Test Accuracy: 71.00%, Test Average Loss: 1.548762

Epoch 64/100, Time: 42.73s

Train Accuracy: 92.29%, Train Average Loss: 0.318729

Test Accuracy: 76.00%, Test Average Loss: 1.787213

Epoch 65/100, Time: 42.29s

Train Accuracy: 94.21%, Train Average Loss: 0.205660

Test Accuracy: 76.50%, Test Average Loss: 1.267424

Epoch 66/100, Time: 42.07s

Train Accuracy: 95.29%, Train Average Loss: 0.181558

Test Accuracy: 71.50%, Test Average Loss: 1.549532

Epoch 67/100, Time: 42.14s

Train Accuracy: 95.07%, Train Average Loss: 0.167123

Test Accuracy: 72.50%, Test Average Loss: 1.525913

Epoch 68/100, Time: 41.84s

Train Accuracy: 94.43%, Train Average Loss: 0.211938

Test Accuracy: 75.50%, Test Average Loss: 1.643690

Epoch 69/100, Time: 41.91s

Train Accuracy: 93.86%, Train Average Loss: 0.204199

Test Accuracy: 76.50%, Test Average Loss: 1.277502

Epoch 70/100, Time: 42.11s

Train Accuracy: 94.57%, Train Average Loss: 0.192549

Test Accuracy: 72.00%, Test Average Loss: 1.818104

Epoch 71/100, Time: 42.13s

Train Accuracy: 93.29%, Train Average Loss: 0.305557

Test Accuracy: 74.00%, Test Average Loss: 1.566282

Epoch 72/100, Time: 42.02s

Train Accuracy: 93.29%, Train Average Loss: 0.245612

Test Accuracy: 72.50%, Test Average Loss: 1.571880

Epoch 73/100, Time: 42.07s

Train Accuracy: 94.07%, Train Average Loss: 0.190021

Test Accuracy: 77.00%, Test Average Loss: 1.300863

Epoch 74/100, Time: 42.09s

Train Accuracy: 93.86%, Train Average Loss: 0.220239

Test Accuracy: 79.00%, Test Average Loss: 1.434459

Epoch 75/100, Time: 42.46s

Train Accuracy: 94.79%, Train Average Loss: 0.200344

Test Accuracy: 76.00%, Test Average Loss: 1.651057

Epoch 76/100, Time: 42.53s

Train Accuracy: 94.50%, Train Average Loss: 0.219200

Test Accuracy: 74.50%, Test Average Loss: 1.448879

Epoch 77/100, Time: 42.81s

Train Accuracy: 95.21%, Train Average Loss: 0.186794

Test Accuracy: 77.50%, Test Average Loss: 1.706803

Epoch 78/100, Time: 42.01s

Train Accuracy: 94.93%, Train Average Loss: 0.167908

Test Accuracy: 77.00%, Test Average Loss: 1.343045

Epoch 79/100, Time: 42.11s

Train Accuracy: 95.00%, Train Average Loss: 0.157969

Test Accuracy: 78.00%, Test Average Loss: 1.484615

Epoch 80/100, Time: 42.20s

Train Accuracy: 93.36%, Train Average Loss: 0.269918

Test Accuracy: 75.50%, Test Average Loss: 1.431945

Epoch 81/100, Time: 42.15s

Train Accuracy: 94.64%, Train Average Loss: 0.220715

Test Accuracy: 74.50%, Test Average Loss: 1.453883

Epoch 82/100, Time: 42.17s

Train Accuracy: 95.07%, Train Average Loss: 0.174723

Test Accuracy: 78.50%, Test Average Loss: 1.574471

Epoch 83/100, Time: 42.12s

Train Accuracy: 95.36%, Train Average Loss: 0.193206

Test Accuracy: 75.50%, Test Average Loss: 1.527657

Epoch 84/100, Time: 43.10s

Train Accuracy: 94.43%, Train Average Loss: 0.236524

Test Accuracy: 69.50%, Test Average Loss: 1.732961

Epoch 85/100, Time: 52.68s

Train Accuracy: 95.43%, Train Average Loss: 0.147453

Test Accuracy: 72.00%, Test Average Loss: 1.825806

Epoch 86/100, Time: 42.43s

Train Accuracy: 94.43%, Train Average Loss: 0.247971

Test Accuracy: 79.00%, Test Average Loss: 1.411562

Epoch 87/100, Time: 42.41s

Train Accuracy: 95.64%, Train Average Loss: 0.149416

Test Accuracy: 80.00%, Test Average Loss: 1.355214

Epoch 88/100, Time: 42.32s

Train Accuracy: 96.50%, Train Average Loss: 0.154553

Test Accuracy: 78.00%, Test Average Loss: 1.720926

Epoch 89/100, Time: 42.99s

Train Accuracy: 96.64%, Train Average Loss: 0.144905

Test Accuracy: 77.00%, Test Average Loss: 1.885772

Epoch 90/100, Time: 42.34s

Train Accuracy: 95.71%, Train Average Loss: 0.149564

Test Accuracy: 74.50%, Test Average Loss: 1.985403

Epoch 91/100, Time: 41.87s

Train Accuracy: 95.71%, Train Average Loss: 0.143841

Test Accuracy: 76.00%, Test Average Loss: 1.520910

Epoch 92/100, Time: 42.20s

Train Accuracy: 95.21%, Train Average Loss: 0.193025

Test Accuracy: 74.50%, Test Average Loss: 1.806884

Epoch 93/100, Time: 42.28s

Train Accuracy: 95.29%, Train Average Loss: 0.177240

Test Accuracy: 76.50%, Test Average Loss: 1.725439

Epoch 94/100, Time: 42.14s

Train Accuracy: 96.64%, Train Average Loss: 0.163867

Test Accuracy: 70.00%, Test Average Loss: 2.068439

Epoch 95/100, Time: 42.56s

Train Accuracy: 97.36%, Train Average Loss: 0.108272

Test Accuracy: 74.00%, Test Average Loss: 1.719326

Epoch 96/100, Time: 41.91s

Train Accuracy: 96.21%, Train Average Loss: 0.134013

Test Accuracy: 74.50%, Test Average Loss: 1.552624

Epoch 97/100, Time: 43.43s

Train Accuracy: 97.29%, Train Average Loss: 0.076644

Test Accuracy: 76.50%, Test Average Loss: 1.303036

Epoch 98/100, Time: 43.42s

Train Accuracy: 97.14%, Train Average Loss: 0.099385

Test Accuracy: 75.50%, Test Average Loss: 1.585183

Epoch 99/100, Time: 42.50s

Train Accuracy: 95.29%, Train Average Loss: 0.149875

Test Accuracy: 74.50%, Test Average Loss: 1.940277

Epoch 100/100, Time: 42.87s

Train Accuracy: 95.00%, Train Average Loss: 0.201341

Test Accuracy: 73.50%, Test Average Loss: 1.784691

Finished Training
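In the log above, training accuracy climbs past 95% while test accuracy mostly stays between 70% and 80%, peaking at 80.00% in epoch 87 and ending at 73.50%. Since the training script saves only the final weights, it can help to find the best epoch from a saved log. The sketch below parses text in the same format; `SAMPLE_LOG` is a short excerpt of the log, and the function name `best_epoch` is an illustrative assumption.

```python
import re

# A short excerpt in the same format as the training log.
SAMPLE_LOG = """\
Epoch 86/100, Time: 42.43s
Train Accuracy: 94.43%, Train Average Loss: 0.247971
Test Accuracy: 79.00%, Test Average Loss: 1.411562

Epoch 87/100, Time: 42.41s
Train Accuracy: 95.64%, Train Average Loss: 0.149416
Test Accuracy: 80.00%, Test Average Loss: 1.355214

Epoch 88/100, Time: 42.32s
Train Accuracy: 96.50%, Train Average Loss: 0.154553
Test Accuracy: 78.00%, Test Average Loss: 1.720926
"""

EPOCH_RE = re.compile(r"Epoch (\d+)/\d+")
TEST_RE = re.compile(r"Test Accuracy: ([\d.]+)%")


def best_epoch(log_text):
    """Return (epoch, test_accuracy) for the epoch with the highest test accuracy."""
    best = None
    current_epoch = None
    for line in log_text.splitlines():
        m = EPOCH_RE.search(line)
        if m:
            current_epoch = int(m.group(1))
            continue
        m = TEST_RE.search(line)
        if m and current_epoch is not None:
            acc = float(m.group(1))
            if best is None or acc > best[1]:
                best = (current_epoch, acc)
    return best


print(best_epoch(SAMPLE_LOG))  # (87, 80.0)
```

The same comparison could be made inside the training loop, saving the weights whenever test accuracy improves, so that the stored model corresponds to the best epoch and not simply the last one.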

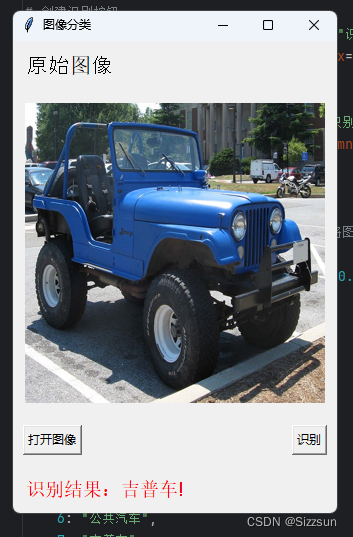

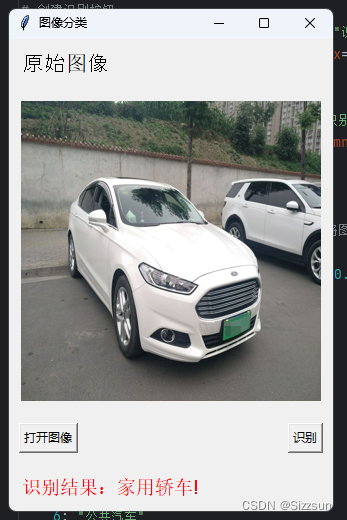

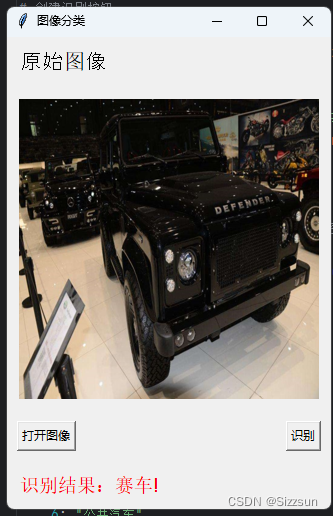

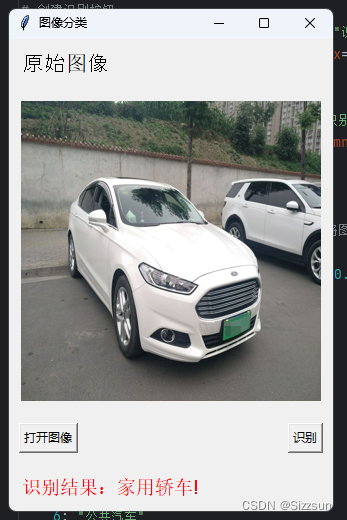

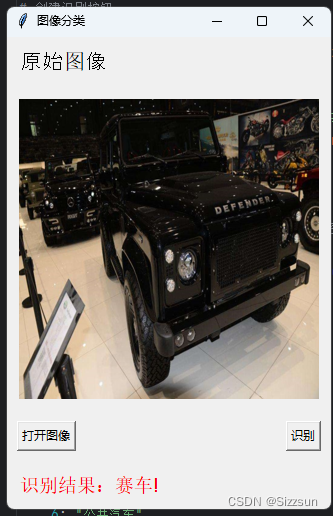

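Model saved.

Program 4: (UI requirements: first show a dialog window whose title, in the upper-left corner, reads "图像分类" (Image Classification). The top of the window shows the text "原始图像" (Original Image), the lower-left corner has a "打开图像" (Open Image) button, and the lower-right corner has a "识别" (Recognize) button. Clicking "打开图像" opens the system file dialog to choose a picture, and the chosen picture is displayed in the center of the window. Clicking "识别" shows the recognition result in red to the right of the button; the result is the class name (the key), not its number, followed by an exclamation mark.)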

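import torch
import torch.nn.functional as F
import torchvision.transforms as transforms
from PIL import Image, ImageTk
import tkinter as tk
from tkinter import filedialog
from VGG16 import VGG16

# Most recently opened image; shared between open_image() and recognize()
current_img = None


def open_image():
    global current_img
    fpath = filedialog.askopenfilename()
    if not fpath:  # the user cancelled the dialog
        return
    current_img = Image.open(fpath).convert("RGB")
    preview = current_img.resize((300, 300))  # resize a copy to fit the window
    img_tk = ImageTk.PhotoImage(preview)
    panel.configure(image=img_tk)
    panel.image = img_tk  # keep a reference so the image is not garbage-collected


def recognize():
    if current_img is None:  # no image has been opened yet
        return
    img_tensor = transform(current_img)
    img_tensor = img_tensor.to(device)
    img_tensor = img_tensor.unsqueeze(0)  # add a batch dimension to match the model input
    # Predict
    with torch.no_grad():
        output = model(img_tensor)
    probabilities = F.softmax(output, dim=1)
    predicted_class = torch.argmax(probabilities, dim=1).item()
    # Update the result label ("识别结果" means "Recognition result")
    label_result.config(text=f"识别结果:{class_labels[predicted_class]}!")


# Create the window
window = tk.Tk()
window.title("图像分类")  # "Image Classification"

# Load the model
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = VGG16()
# map_location lets weights saved on a GPU load on a CPU-only machine
model.load_state_dict(torch.load('../models/VGG16_car.pth', map_location=device))
model = model.to(device)
model.eval()  # switch the model to evaluation mode

# Create the title label
label_title = tk.Label(window, text="原始图像", font=("Arial", 16))  # "Original Image"
label_title.grid(row=0, column=0, columnspan=2, sticky="w", padx=10, pady=10)

# Create the image display panel
panel = tk.Label(window)
panel.grid(row=1, column=0, columnspan=2, padx=10, pady=10)

# Create the "Open Image" button
btn_open = tk.Button(window, text="打开图像", command=open_image)
btn_open.grid(row=2, column=0, padx=10, pady=10, sticky="w")

# Create the "Recognize" button
btn_recognize = tk.Button(window, text="识别", command=recognize)
btn_recognize.grid(row=2, column=1, padx=10, pady=10, sticky="e")

# Create the red result label to the right of the "Recognize" button
label_result = tk.Label(window, text="", fg="red")
label_result.grid(row=2, column=2, padx=10, pady=10, sticky="w")

# Preprocessing transform (same as the test pipeline used in training)
transform = transforms.Compose([
    transforms.Resize((224, 224)),  # resize the image to 224x224
    transforms.ToTensor(),
    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))  # normalize
])

# Dictionary mapping each integer label to the displayed vehicle class name
class_labels = {
    0: "卡车",      # truck
    1: "出租车",    # taxi
    2: "面包车",    # minibus
    3: "消防车",    # fire engine
    4: "赛车",      # racing car
    5: "SUV",
    6: "公共汽车",  # bus
    7: "吉普车",    # jeep
    8: "家用轿车",  # family sedan
    9: "重型卡车"   # heavy truck
}

# Run the window
window.mainloop()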
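The interface keeps its own index-to-name dictionary, which has to stay in the same order as the name-to-index dictionary in the dataset class. One way to avoid the two drifting apart is to derive one from the other. The sketch below is only an illustration: the names `CLASS_TO_INDEX`, `INDEX_TO_CLASS`, and `format_result` are assumptions, and it displays the English folder names instead of the Chinese display names used in the interface.

```python
# Same mapping as in the Car dataset class (class name -> integer label).
CLASS_TO_INDEX = {
    "truck": 0,
    "taxi": 1,
    "minibus": 2,
    "fire engine": 3,
    "racing car": 4,
    "SUV": 5,
    "bus": 6,
    "jeep": 7,
    "family sedan": 8,
    "heavy truck": 9,
}

# Invert it once so that a predicted index can be shown as a class name.
INDEX_TO_CLASS = {index: name for name, index in CLASS_TO_INDEX.items()}


def format_result(predicted_index):
    """Build the text shown next to the button: class name plus an exclamation mark."""
    return f"{INDEX_TO_CLASS[predicted_index]}!"


print(format_result(3))  # fire engine!
```

With a single source of truth for the labels, adding or reordering a class only needs one change.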

Results:

![]()

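Notes:

If Program 2 (VGG16) is built without the normalization layers, its accuracy during training stays below 20% throughout, is unstable, and does not rise as the number of epochs increases. That architecture is shown below: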

import torch
import torch.nn as nn


class VGG16(nn.Module):
    def __init__(self):
        super(VGG16, self).__init__()
        self.conv_layers = nn.Sequential(
            # Block 1: convolution layers and pooling layer
            nn.Conv2d(3, 64, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 2: convolution layers and pooling layer
            nn.Conv2d(64, 128, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 3: convolution layers and pooling layer
            nn.Conv2d(128, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 4: convolution layers and pooling layer
            nn.Conv2d(256, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # Block 5: convolution layers and pooling layer
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        self.fc_layers = nn.Sequential(
            # Fully connected layers
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 10)  # output layer: 10-class classification
        )

    def forward(self, x):
        x = self.conv_layers(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 512 * 7 * 7)
        x = self.fc_layers(x)
        return x

Results, which are worse than those obtained with normalization:

Results with epochs=10:

C:\Users\Administrator\.conda\envs\pytorch\python.exe "E:\deeplearnig\VGG and Siamese(1)\Train_VGG16.py"

Train Epoch: 1 [0/1400 (0%)] Loss: 2.299627

Train Epoch: 1 [800/1400 (57%)] Loss: 2.314925

Train Epoch: 1 Accuracy: 8.43% Average Loss: 2.323766

Test Accuracy: 10.00% Average Loss: 2.302881

Epoch 1/10, Time: 43.30s

Train Epoch: 2 [0/1400 (0%)] Loss: 2.306078

Train Epoch: 2 [800/1400 (57%)] Loss: 2.261153

Train Epoch: 2 Accuracy: 9.14% Average Loss: 2.304938

Test Accuracy: 10.00% Average Loss: 2.302833

Epoch 2/10, Time: 40.78s

Train Epoch: 3 [0/1400 (0%)] Loss: 2.295484

Train Epoch: 3 [800/1400 (57%)] Loss: 2.291479

Train Epoch: 3 Accuracy: 8.50% Average Loss: 2.305857

Test Accuracy: 10.00% Average Loss: 2.302801

Epoch 3/10, Time: 40.63s

Train Epoch: 4 [0/1400 (0%)] Loss: 2.309008

Train Epoch: 4 [800/1400 (57%)] Loss: 2.304273

Train Epoch: 4 Accuracy: 8.71% Average Loss: 2.304601

Test Accuracy: 10.00% Average Loss: 2.302620

Epoch 4/10, Time: 40.75s

Train Epoch: 5 [0/1400 (0%)] Loss: 2.296353

Train Epoch: 5 [800/1400 (57%)] Loss: 2.309209

Train Epoch: 5 Accuracy: 9.64% Average Loss: 2.304228

Test Accuracy: 10.00% Average Loss: 2.302635

Epoch 5/10, Time: 41.02s

Train Epoch: 6 [0/1400 (0%)] Loss: 2.303827

Train Epoch: 6 [800/1400 (57%)] Loss: 2.305665

Train Epoch: 6 Accuracy: 9.29% Average Loss: 2.303980

Test Accuracy: 10.00% Average Loss: 2.302634

Epoch 6/10, Time: 41.28s

Train Epoch: 7 [0/1400 (0%)] Loss: 2.304016

Train Epoch: 7 [800/1400 (57%)] Loss: 2.300594

Train Epoch: 7 Accuracy: 9.57% Average Loss: 2.304021

Test Accuracy: 10.00% Average Loss: 2.302621

Epoch 7/10, Time: 41.13s

Train Epoch: 8 [0/1400 (0%)] Loss: 2.298562

Train Epoch: 8 [800/1400 (57%)] Loss: 2.306801

Train Epoch: 8 Accuracy: 9.21% Average Loss: 2.303603

Test Accuracy: 10.00% Average Loss: 2.302627

Epoch 8/10, Time: 41.00s

Train Epoch: 9 [0/1400 (0%)] Loss: 2.307916

Train Epoch: 9 [800/1400 (57%)] Loss: 2.303983

Train Epoch: 9 Accuracy: 7.64% Average Loss: 2.303652

Test Accuracy: 10.00% Average Loss: 2.302603

Epoch 9/10, Time: 41.35s

Train Epoch: 10 [0/1400 (0%)] Loss: 2.299228

Train Epoch: 10 [800/1400 (57%)] Loss: 2.303137

Train Epoch: 10 Accuracy: 8.43% Average Loss: 2.303806

Test Accuracy: 10.00% Average Loss: 2.302609

Epoch 10/10, Time: 41.49s

Finished Training

Model saved.

libpng warning: iCCP: known incorrect sRGB profile

libpng warning: iCCP: known incorrect sRGB profile

libpng warning: iCCP: known incorrect sRGB profile

libpng warning: iCCP: known incorrect sRGB profile

Results with epochs=100 and no normalization:

Both results above come from not using normalization properly, which leads to very low accuracy. This should be avoided when building the network.
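The numbers in the 10-epoch log without normalization are consistent with a network that has not learned anything: the test loss stays near 2.3026 and the test accuracy at exactly 10.00%. For a 10-class problem, a uniform prediction gives a cross-entropy of ln(10), and always guessing the same class gives 10% accuracy if the classes are balanced (the balance of the val set is an assumption here). A short check of that arithmetic:

```python
import math

NUM_CLASSES = 10

# Cross-entropy of a uniform prediction over 10 classes: -ln(1/10) = ln(10).
chance_loss = math.log(NUM_CLASSES)
# Accuracy of always predicting one class on a class-balanced set.
chance_accuracy = 100.0 / NUM_CLASSES

print(f"{chance_loss:.6f}")       # 2.302585
print(f"{chance_accuracy:.2f}%")  # 10.00%
```

A loss that sits at ln(number of classes) for many epochs is a useful early signal that training has stalled, well before 100 epochs have been spent.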

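Summary: when using the VGG16 network, pay attention to normalization, because omitting it lowers training accuracy. With normalization applied properly, the VGG16 network reaches about 95% car-recognition accuracy on the training set after 100 epochs (test accuracy in the same run ends at 73.50%). A suitable interface was then developed to complete the image classification task.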
