The code for main.py is as follows:

import json
import random
import time
from datetime import datetime
from queue import Queue
from threading import Thread

import requests
from elasticsearch import Elasticsearch


class MyThread(Thread):
    """Thread that runs a single callable."""

    def __init__(self, func):
        super(MyThread, self).__init__()
        self.func = func

    def run(self):
        self.func()

class Lagou(object):

    def __init__(self, positionname, start_num=1):
        self.origin = 'http://www.lagou.com'
        # Header values only; the header names are supplied by the dict keys below.
        self.cookie = ("ctk=1468304345; "
                       "JSESSIONID=7EEE619B6201AF72DEDDD895B862B2A0; "
                       "LGMOID=20160712141905-A5E4B025F73A2D76BC116066179D097C; _gat=1; "
                       "user_trace_token=20160712141906-895f24c3-47f8-11e6-9718-525400f775ce; "
                       "PRE_UTM=; PRE_HOST=; PRE_SITE=; PRE_LAND=http%3A%2F%2Fwww.lagou.com%2F; "
                       "LGUID=20160712141906-895f278b-47f8-11e6-9718-525400f775ce; "
                       "index_location_city=%E5%85%A8%E5%9B%BD; "
                       "SEARCH_ID=4b90786888e644e8a056d830e2c332a1; "
                       "_ga=GA1.2.938889728.1468304346; "
                       "Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1468304346; "
                       "Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1468304392; "
                       "LGSID=20160712141906-895f25ed-47f8-11e6-9718-525400f775ce; "
                       "LGRID=20160712141951-a4502cd0-47f8-11e6-9718-525400f775ce")
        self.user_agent = ('Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 '
                           '(KHTML, like Gecko) Chrome/51.0.2704.106 Safari/537.36')
        self.referer = 'http://www.lagou.com/zhaopin/Python/?labelWords=label'
        self.headers = {'cookie': self.cookie,
                        'origin': self.origin,
                        'User-Agent': self.user_agent,
                        'Referer': self.referer,
                        'X-Requested-With': 'XMLHttpRequest'
                        }
        self.url = 'http://www.lagou.com/jobs/positionAjax.json?'
        self.date_post = Queue()   # POST payloads (one per page) waiting to be sent
        self.date_dict = Queue()   # parsed job records waiting to be indexed
        self.es = Elasticsearch(hosts='127.0.0.1')
        self.es_index_name = 'lagou'
        self.page_number_start = start_num
        self.positionName = positionname
        # Proxy addresses are left blank here; fill in your own.
        self.proxies0 = {'http': ''}
        self.proxies1 = {'http': ''}
        self.proxies2 = {'http': ''}
        self.proxies3 = {'http': ''}
        self.proxies4 = {'http': ''}
        self.proxies5 = {'http': ''}

    def post_date(self, n):
        """Build the POST data for page n and put it on the request queue."""
        date = {'first': 'true',
                'pn': n,
                'kd': self.positionName}
        self.date_post.put(date)

    def code(self, date):
        """POST through a randomly chosen proxy and return the response text."""
        try:
            proxies_list = (self.proxies0, self.proxies1, self.proxies2,
                            self.proxies3, self.proxies4, self.proxies5)
            proxies = random.choice(proxies_list)
            print(proxies)
            request = requests.post(url=self.url, headers=self.headers, params=date,
                                    proxies=proxies, timeout=15)
            date_str = request.content.decode('utf-8')
            return date_str
        except Exception:
            # On failure, re-queue the page for a retry; the method then returns None.
            print('Request failed')
            self.date_post.put(date)

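    def json_dict(self, date_str, n):
        """Parse the raw response and extract the list of job records.

        Returns 0 when the page is empty (no more results), None when the
        response cannot be parsed, and the list of records otherwise.
        """
        try:
            dict_date = json.loads(date_str)
            date_list = dict_date['content']['positionResult']['result']
            if len(date_list) == 0:
                return 0
            else:
                # This page had results, so queue the next page.
                n += 1
                self.post_date(n)
                return date_list
        except (ValueError, KeyError, TypeError):
            print(date_str)
            return None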

    def dict_put(self, date_list):
        """Add timestamp and job-name fields to each record and queue it."""
        for i in date_list:
            i['@timestamp'] = datetime.utcnow().strftime('%Y-%m-%dT%H:%M:%S.%f')
            i['Jobname'] = self.positionName
            self.date_dict.put(i)

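    def work_date(self):
        """Worker loop: fetch pages until an empty result page is returned."""
        while True:
            time.sleep(10)
            date = self.date_post.get()
            num = date['pn']
            print(num, self.positionName)
            self.date_post.task_done()
            date_str = self.code(date)
            if date_str:
                date_list = self.json_dict(date_str, num)
            else:
                # code() has already re-queued the page.
                continue
            if date_list is None:
                # Parsing failed: re-queue this page and retry.
                self.date_post.put(date)
                continue
            elif date_list == 0:
                # Empty page: no more results for this job name.
                break
            else:
                self.dict_put(date_list)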

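    def es_index(self, dict_data):
        """Index one record into Elasticsearch."""
        try:
            print(dict_data)
            es_id = dict_data['positionId']
            date = json.dumps(dict_data)
            self.es.index(index=self.es_index_name, doc_type='job', id=es_id, body=date)
        except Exception:
            # Indexing failed: wait, then re-queue the record for another attempt.
            print(dict_data)
            time.sleep(5)
            self.date_dict.put(dict_data)
        finally:
            # Mark the item done only after the attempt, so that join() in run()
            # cannot return while an index call is still in flight.
            self.date_dict.task_done()

    def work_es(self):
        """Worker loop: take records off the queue and index them."""
        while True:
            dict_data = self.date_dict.get()
            self.es_index(dict_data)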

    def run(self):
        self.post_date(self.page_number_start)
        thread_date = MyThread(self.work_date)
        thread_date.start()
        for _ in range(5):
            thread_es = MyThread(self.work_es)
            thread_es.daemon = True
            thread_es.start()
        thread_date.join()
        self.date_post.join()
        self.date_dict.join()

if __name__ == '__main__':
    with open('jobnames', 'r') as f:
        job_list = f.readlines()
    for i in job_list:
        name = i.strip('\n')
        x = Lagou(positionname=name)
        x.run()
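The pipeline in main.py (one fetching loop that feeds a queue drained by several daemon threads) can be tried out without the network or Elasticsearch. The sketch below is only an illustration under assumptions: `fake_fetch`, `crawl`, and the in-memory `stored` list are hypothetical stand-ins that do not appear in main.py. Only the `content.positionResult.result` key path and the rule "stop at the first empty page" are taken from the code above.

```python
import json
from queue import Queue
from threading import Lock, Thread


def fake_fetch(page):
    """Hypothetical stand-in for the HTTP POST: pages 1-3 have results, later pages are empty."""
    result = [{'positionId': page * 10 + k} for k in range(2)] if page <= 3 else []
    return json.dumps({'content': {'positionResult': {'result': result}}})


def crawl(fetch, start_page=1, workers=3):
    """Fetch pages until one comes back empty; consumer threads 'store' each record."""
    items = Queue()   # parsed records waiting to be stored
    stored = []       # stands in for the Elasticsearch index
    lock = Lock()

    def consumer():
        while True:
            record = items.get()
            try:
                with lock:
                    stored.append(record)
            finally:
                # Mark the item done only after it has been handled.
                items.task_done()

    for _ in range(workers):
        Thread(target=consumer, daemon=True).start()

    page = start_page
    while True:
        result = json.loads(fetch(page))['content']['positionResult']['result']
        if not result:   # empty page: nothing left to fetch
            break
        for record in result:
            items.put(record)
        page += 1

    items.join()         # wait until every queued record has been stored
    return sorted(r['positionId'] for r in stored)


print(crawl(fake_fetch))  # [10, 11, 20, 21, 30, 31]
```

Calling `task_done()` only after a record has been handled is what lets `items.join()` act as a reliable "everything is stored" barrier before the daemon threads are abandoned.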

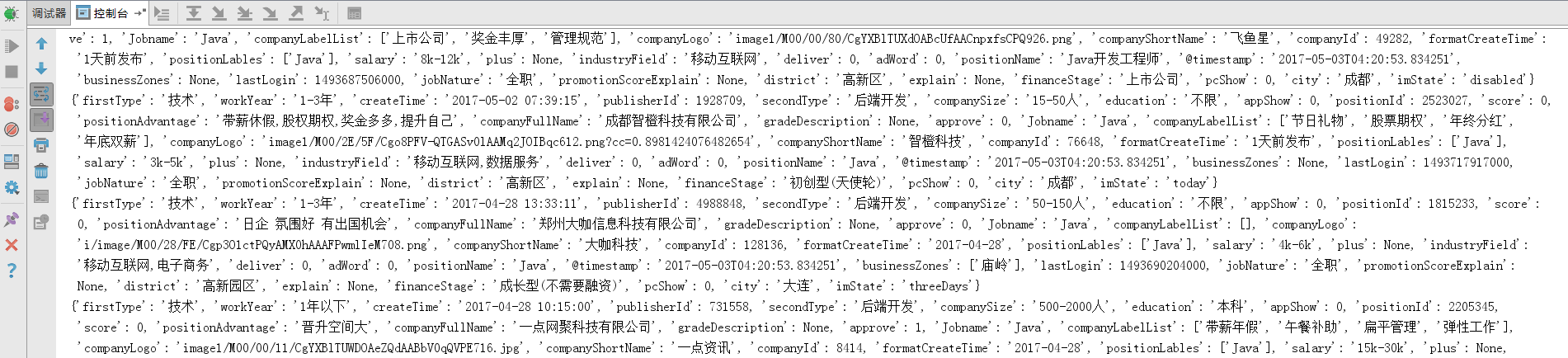

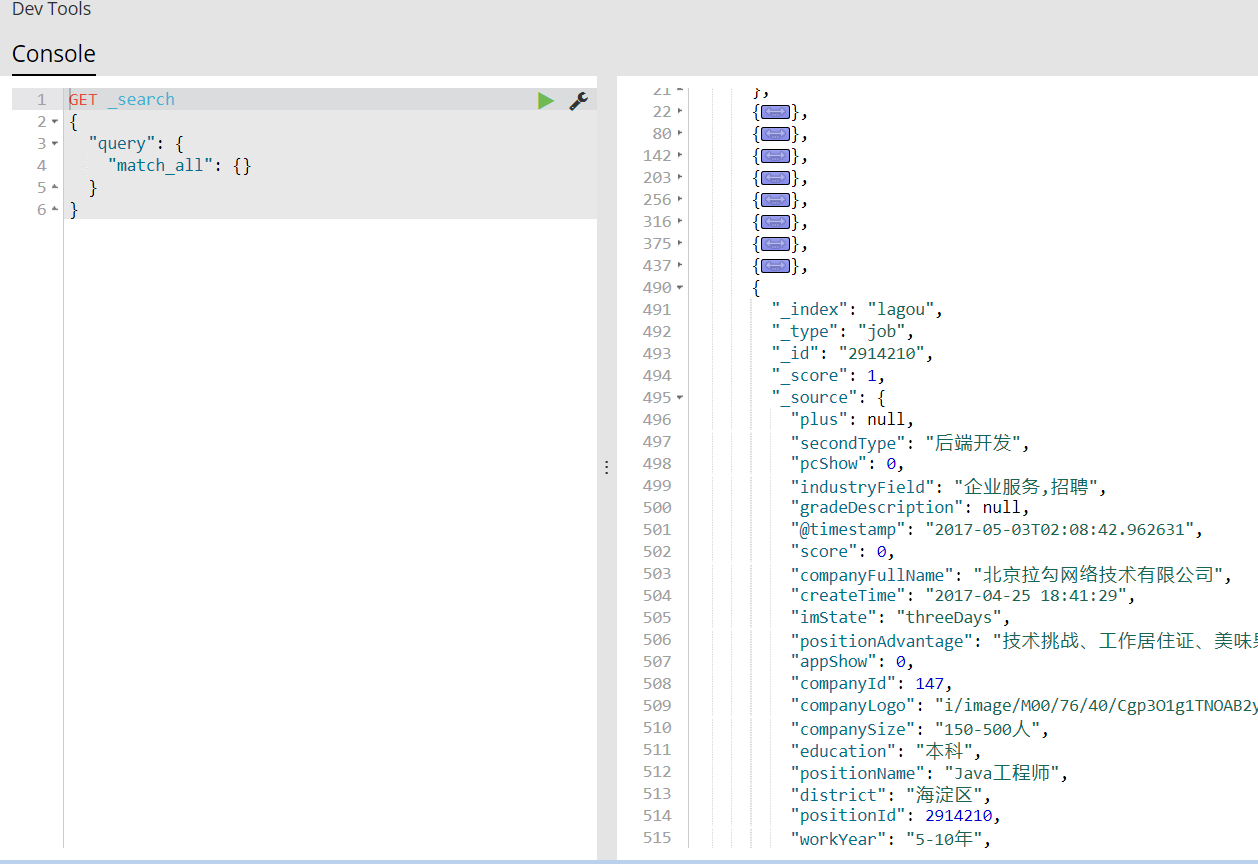

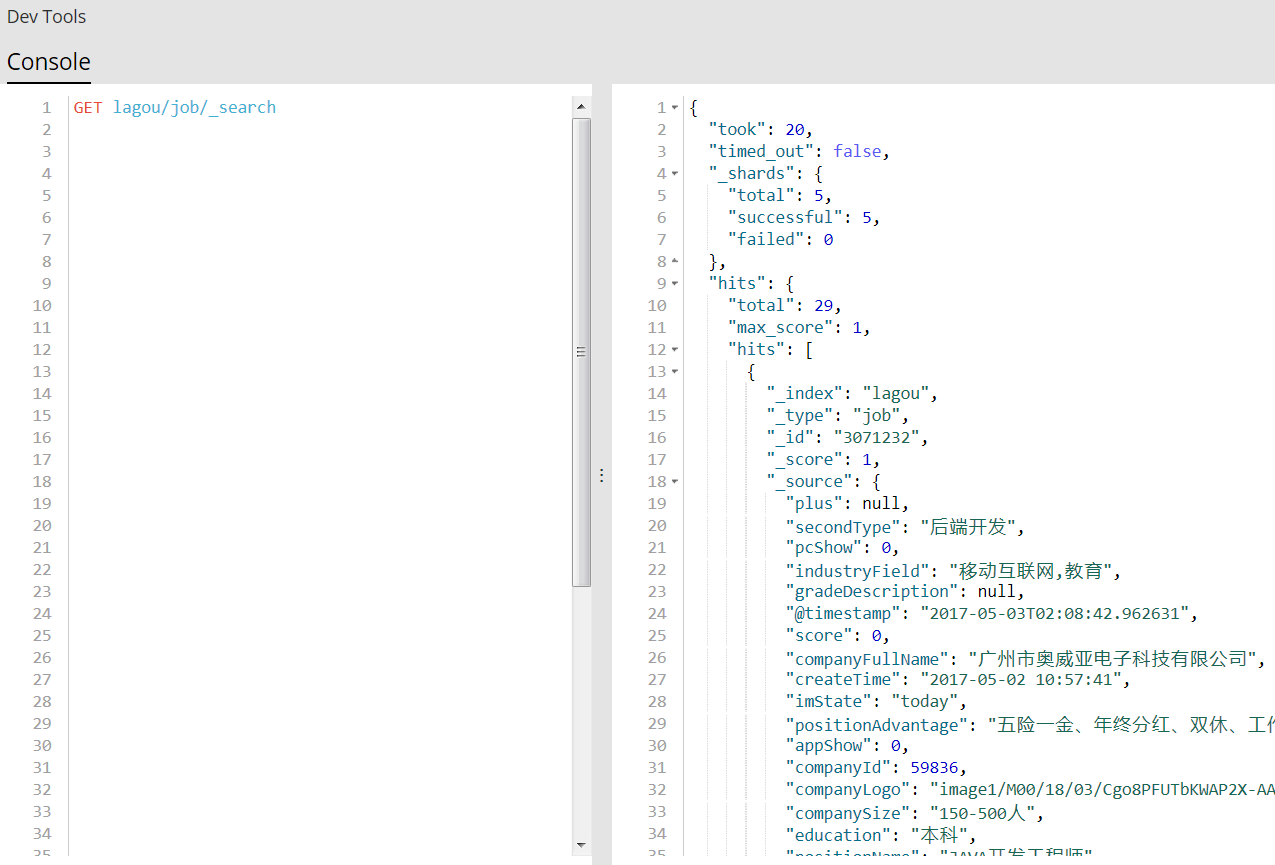

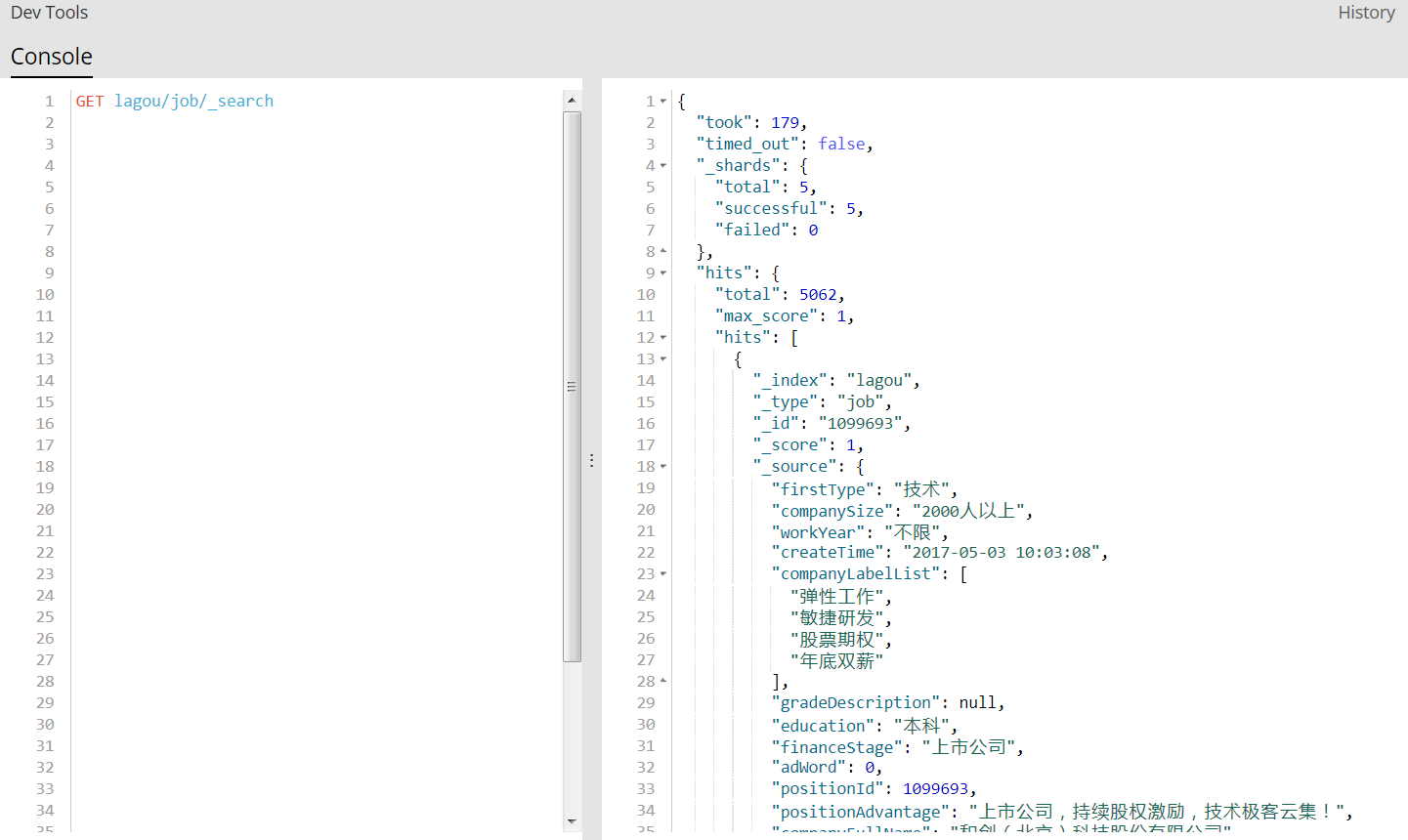

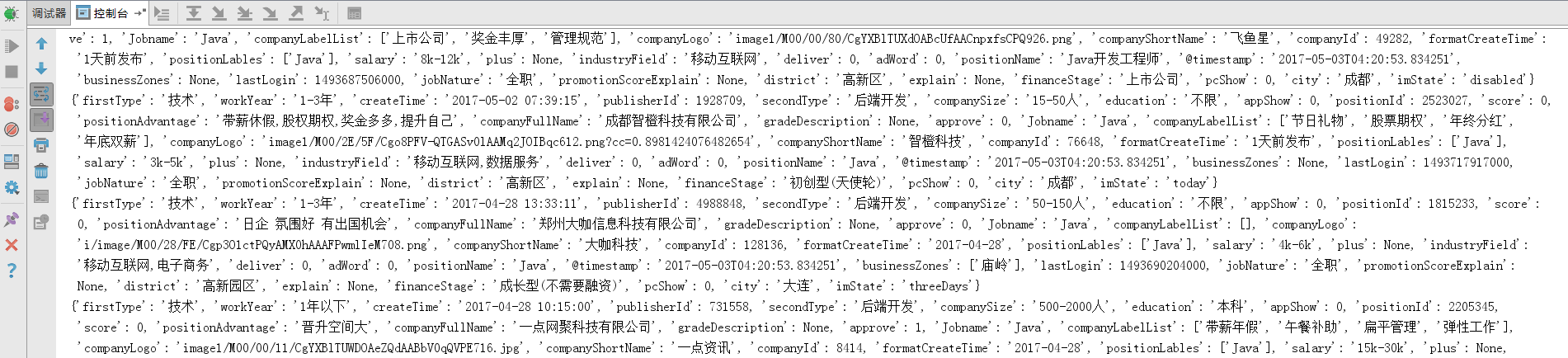

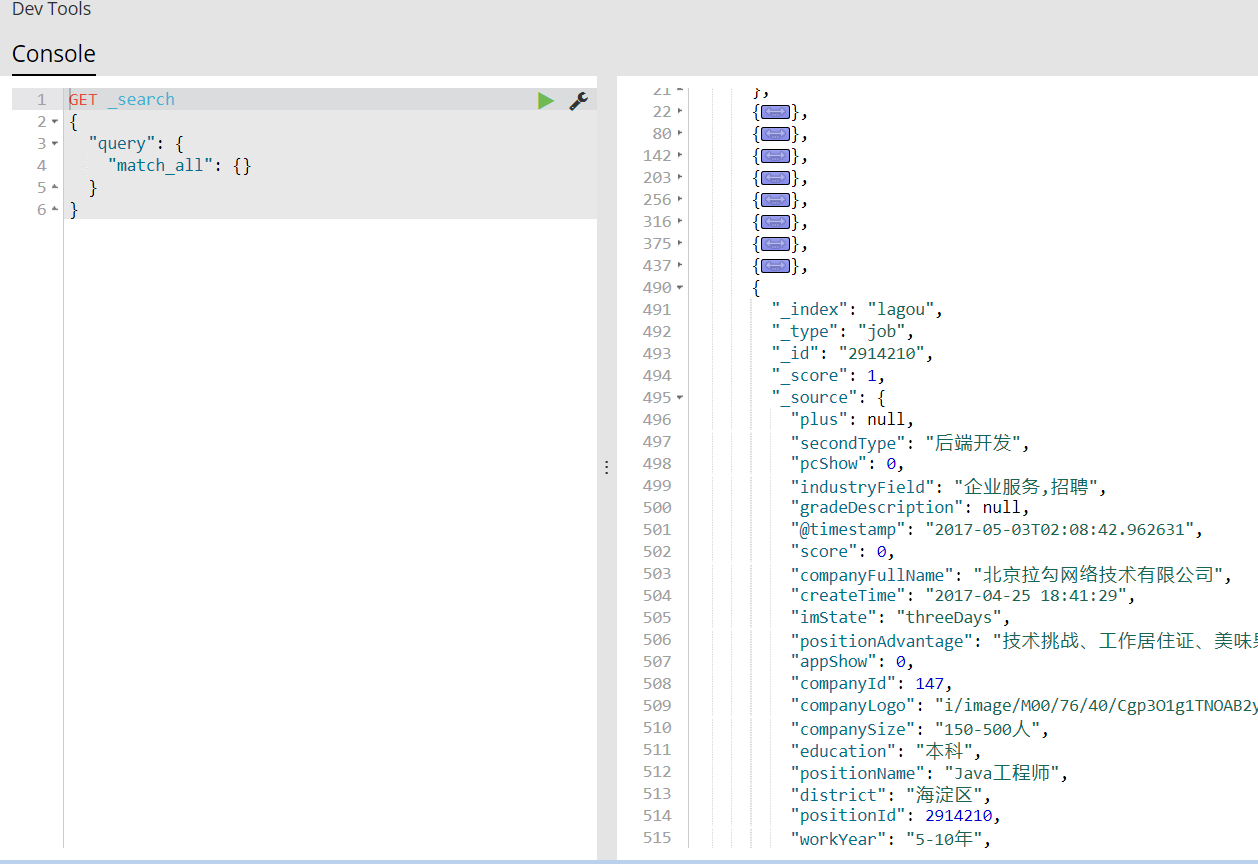

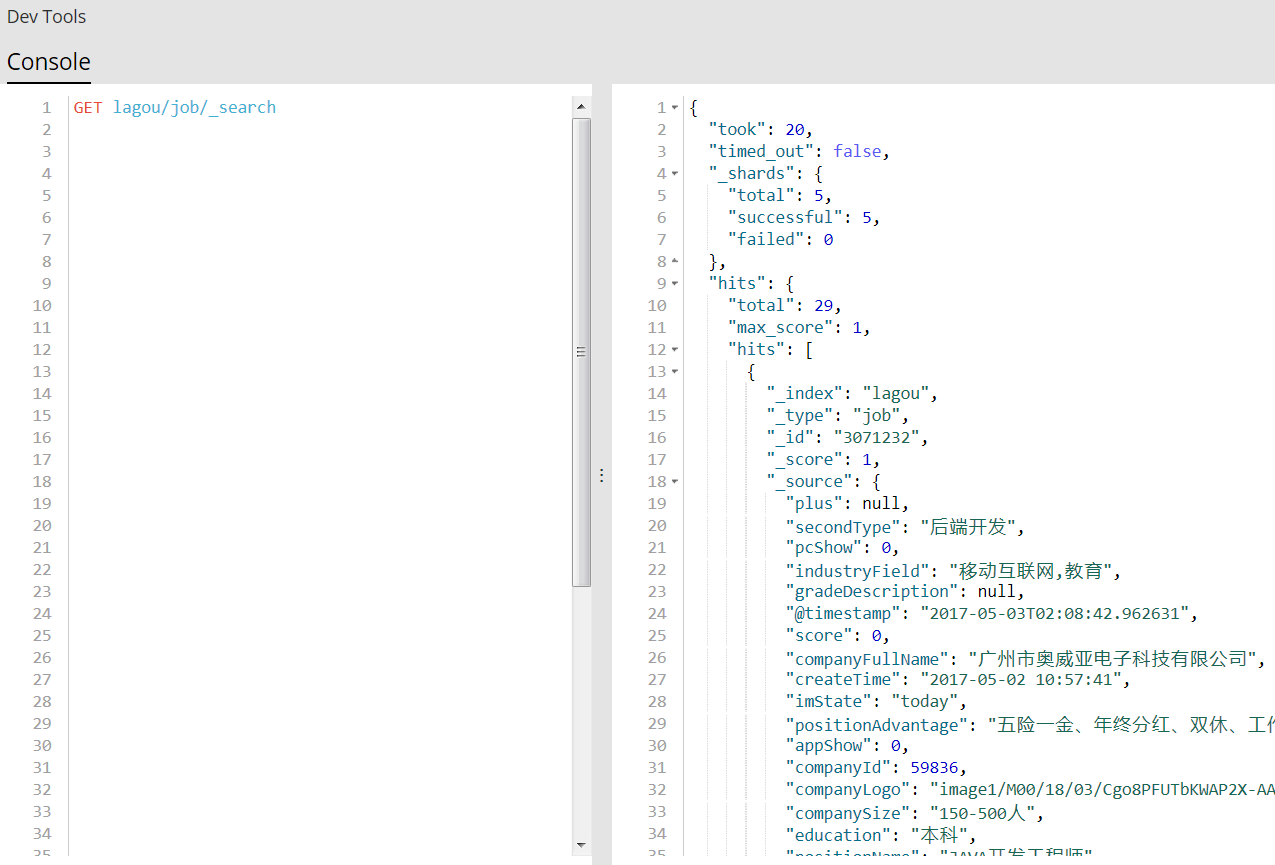

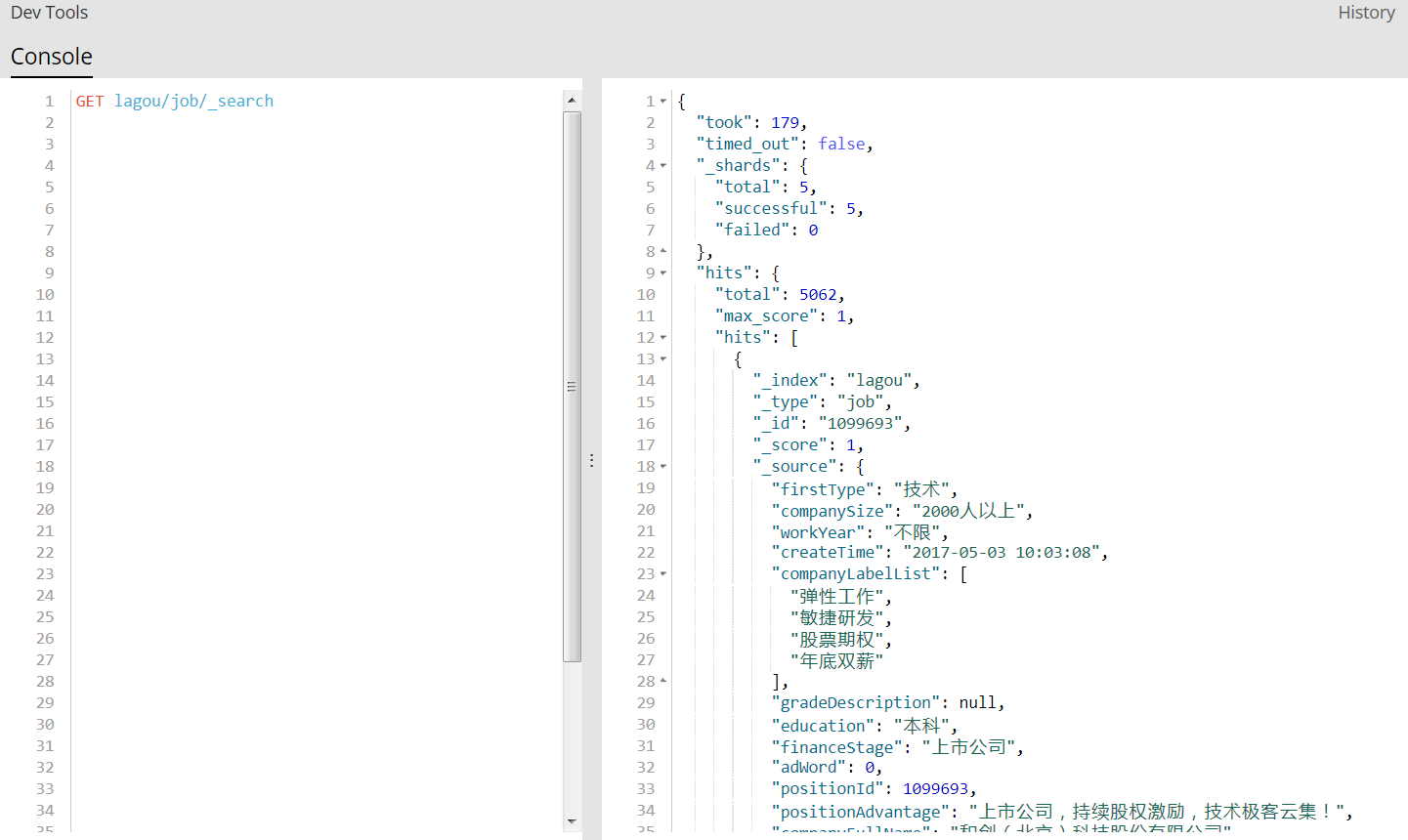

The output is shown in the figure:

Scrape all job names from the Lagou homepage and write them to a file

Code for job.py:

import bs4
import lxml  # required by BeautifulSoup's 'lxml' parser
import requests

origin = 'http://www.lagou.com'
# Header values only; the header names are supplied by the dict keys below.
cookie = ('user_trace_token=20160615104959-0ac678b0e03a46c89a313518c24'
          'ff856; LGMOID=20160710094357-447236C62C36EDA1D297A38266ACC1C3; '
          'LGUID=20160710094359-c5cb0e3b-463f-11e6-a4ae-5254005c3644; '
          'index_location_city=%E6%9D%AD%E5%B7%9E; '
          'LGRID=20160710094631-208fc272-4640-11e6-955d-525400f775ce; '
          '_ga=GA1.2.954770427.1465959000; ctk=1468117465; '
          'JSESSIONID=F827D8AB3ACD05553D0CC9634A5B6096; '
          'SEARCH_ID=5c0a948f7c02452f9532b4d2dde92762; '
          'Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1465958999,1468115038; '
          'Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1468117466')
user_agent = ('Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/51.0.2704.106 Safari/537.36')
headers = {'cookie': cookie,
           'origin': origin,
           'User-Agent': user_agent,
           }
url = 'http://www.lagou.com'

r = requests.get(url=url, headers=headers)
page = r.content.decode('utf-8')
soup = bs4.BeautifulSoup(page, 'lxml')

# Collect the text of every job link in the homepage sidebar menu.
date = []
jobnames = soup.select('#sidebar > div.mainNavs > div > div.menu_sub.dn > dl > dd > a')
for i in jobnames:
    jobname = i.get_text().replace('/', '+')
    date.append(jobname)

# Write one job name per line; main.py reads this file back.
with open('jobnames', 'w') as f:
    for i in date:
        f.write(i + '\n')
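job.py writes the `jobnames` file and main.py reads it back, so the two scripts have to agree on the file format. The following is a minimal sketch of that round trip, assuming one name per line and UTF-8 on both sides. The helper names `write_jobnames` and `read_jobnames` and the sample names are made up for illustration; the explicit `encoding='utf-8'` is an addition that neither script uses.

```python
import os
import tempfile


def write_jobnames(path, names):
    """Write one job name per line, replacing '/' with '+' as job.py does."""
    with open(path, 'w', encoding='utf-8') as f:
        for name in names:
            f.write(name.replace('/', '+') + '\n')


def read_jobnames(path):
    """Read the names back, dropping line endings and blank lines."""
    with open(path, 'r', encoding='utf-8') as f:
        return [line.strip() for line in f if line.strip()]


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'jobnames')
    write_jobnames(path, ['Python', 'C/C++', '数据挖掘'])
    print(read_jobnames(path))  # ['Python', 'C+C++', '数据挖掘']
```

Naming the encoding explicitly keeps non-ASCII job names readable on platforms whose default encoding is not UTF-8, and skipping blank lines avoids starting a crawl with an empty job name.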

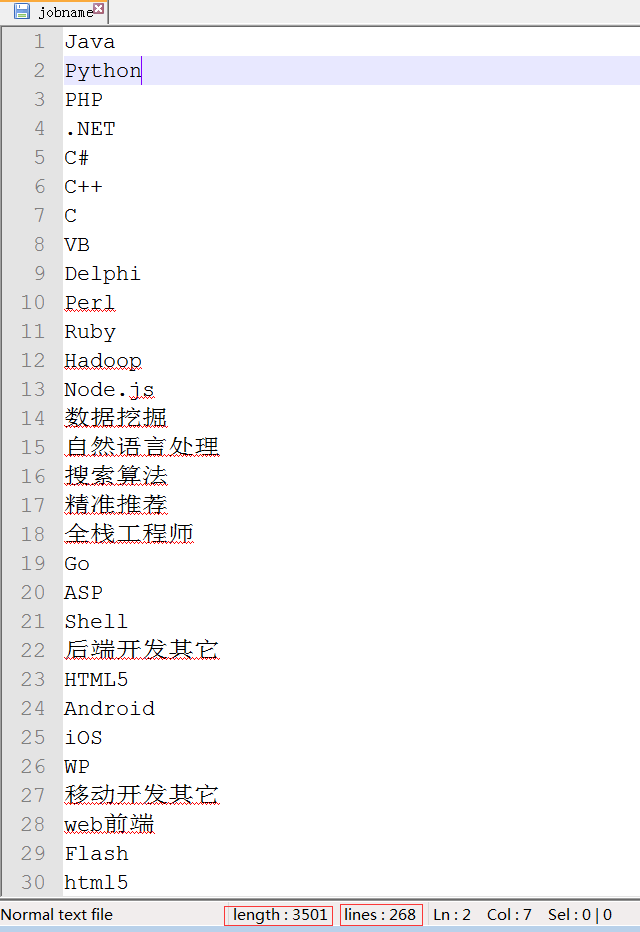

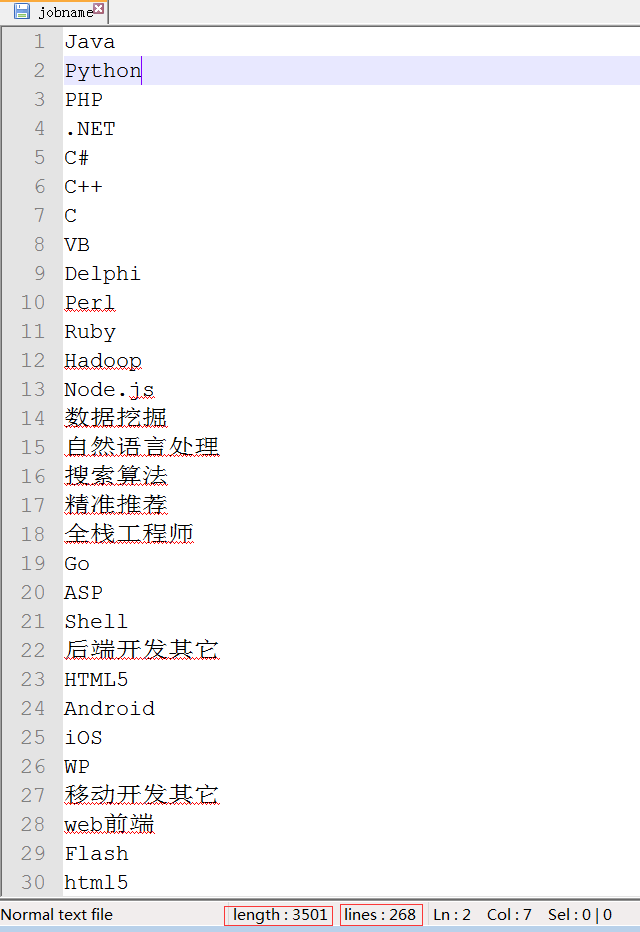

The output is shown in the figure:

Contents of the jobnames file:
