Connecting Spark Streaming to MongoDB

Directory layout:

| Package | Sub-package | Class |
| --- | --- | --- |
| com.jinghang.sparkStreamingmongo | service | ErrorMapService |
| com.jinghang.sparkStreamingmongo | service | ErrorReduceService |
| com.jinghang.sparkStreamingmongo | utils | Constant |
| com.jinghang.sparkStreamingmongo | | SparkStreamingMongo |
| com.jinghang.mongo.dao | | ErrorDao |
| com.jinghang.entry | | AppErrorAnaly |

AppErrorAnaly

package com.jinghang.entry;

import java.io.Serializable;

public class AppErrorAnaly implements Serializable{

private static final long serialVersionUID = 1L;

private String timeValue; // time bucket of the aggregate: day, hour, month, or week

private String appId; // application id

private String appVersion; // version

private String appChannel; // distribution channel

private String appPlatform; // platform

private String deviceStyle; // device type

private String osType; // operating system

private Long errorCnt; // number of times the error occurred

private String errorId; // error id

public String getTimeValue() {

return timeValue;

}

public void setTimeValue(String timeValue) {

this.timeValue = timeValue;

}

public String getAppId() {

return appId;

}

public void setAppId(String appId) {

this.appId = appId;

}

public String getAppVersion() {

return appVersion;

}

public void setAppVersion(String appVersion) {

this.appVersion = appVersion;

}

public String getAppChannel() {

return appChannel;

}

public void setAppChannel(String appChannel) {

this.appChannel = appChannel;

}

public String getAppPlatform() {

return appPlatform;

}

public void setAppPlatform(String appPlatform) {

this.appPlatform = appPlatform;

}

public String getDeviceStyle() {

return deviceStyle;

}

public void setDeviceStyle(String deviceStyle) {

this.deviceStyle = deviceStyle;

}

public String getOsType() {

return osType;

}

public void setOsType(String osType) {

this.osType = osType;

}

public Long getErrorCnt() {

return errorCnt;

}

public void setErrorCnt(Long errorCnt) {

this.errorCnt = errorCnt;

}

public String getErrorId() {

return errorId;

}

public void setErrorId(String errorId) {

this.errorId = errorId;

}

}

ErrorDao

package com.jinghang.mongo.dao;

import java.io.IOException;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.bson.Document;

import org.bson.types.ObjectId;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.jinghang.utils.PropertityUtils;

import com.mongodb.MongoClient;

import com.mongodb.client.FindIterable;

import com.mongodb.client.MongoCollection;

import com.mongodb.client.MongoCursor;

import com.mongodb.client.MongoDatabase;

public class ErrorDao {

private static Log log = LogFactory.getLog(ErrorDao.class);

private ErrorDao errorDao = null;

private ObjectMapper objectMapper = new ObjectMapper();

private MongoClient mongoClient = new MongoClient(PropertityUtils.getValue("mongoaddr"),

Integer.valueOf(PropertityUtils.getValue("mongoport")));

public ErrorDao() {

log.info("ErrorDao is created!!");

}

public Document findoneby(String tablename, String appId,String appVersion, String appChannel,

String appPlatform,String osType,String deviceStyle, String timeValue, String errorId) {

// Each app has its own database, named after its appId

MongoDatabase mongoDatabase = mongoClient.getDatabase(appId);

MongoCollection<Document> mongoCollection = mongoDatabase.getCollection(tablename);

Document doc = new Document();

doc.put("appId", appId);

doc.put("appVersion", appVersion);

doc.put("appChannel", appChannel);

doc.put("appPlatform", appPlatform);

doc.put("osType", osType);

doc.put("deviceStyle", deviceStyle);

doc.put("timeValue", timeValue);

doc.put("errorId", errorId);

// first() returns the first matching document, or null if there is none

return mongoCollection.find(doc).first();

}

public void saveorupdatemongo(String tablename, Document doc) {

String appId = doc.getString("appId");

MongoDatabase mongoDatabase = mongoClient.getDatabase(appId);

MongoCollection<Document> mongoCollection = mongoDatabase.getCollection(tablename);

if (!doc.containsKey("_id")) {

ObjectId objectId = new ObjectId();

doc.put("_id", objectId);

mongoCollection.insertOne(doc);

return;

}

Document matchDocument = new Document();

String objectId = doc.get("_id").toString();

matchDocument.put("_id",new ObjectId(objectId));

FindIterable<Document> findIterable = mongoCollection.find(matchDocument);

if (findIterable.iterator().hasNext()) {

mongoCollection.updateOne(matchDocument, new Document("$set", doc));

try {

log.info("come into saveorupdatemongo ---- update---"+objectMapper.writeValueAsString(doc));

} catch (IOException e) {

e.printStackTrace();

}

}else {

mongoCollection.insertOne(doc);

try {

log.info("come into saveorupdatemongo ---- insert---"+objectMapper.writeValueAsString(doc));

} catch (IOException e) {

e.printStackTrace();

}

}

}

public static void main(String[] args) {

log.info("test");

}

}

ErrorMapService

The source of ErrorMapService is not listed here. SparkStreamingMongo calls errorMapService.processmap(tuple2list, appErrorLog) for each parsed AppErrorLog, and that call is expected to append the key/count tuples to tuple2list.

ErrorReduceService

package com.jinghang.sparkStreamingmongo.service;

import java.io.IOException;

import java.util.HashMap;

import java.util.List;

import java.util.Map.Entry;

import java.util.Set;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.bson.Document;

import org.bson.types.ObjectId;

import org.codehaus.jackson.map.ObjectMapper;

import com.jinghang.entry.AppErrorAnaly;

import com.jinghang.mongo.dao.ErrorDao;

import com.jinghang.sparkStreamingmongo.utils.Constant;

import scala.Tuple2;

public class ErrorReduceService {

private static Log log = LogFactory.getLog(ErrorReduceService.class);

private ErrorDao errorDao = null;

private static ObjectMapper objectMapper = new ObjectMapper();

public static void main(String[] args) {

}

public ErrorReduceService() {

log.info("create ReduceUtils");

errorDao = new ErrorDao();

}

public void doReduceProcess(List<Tuple2<String, Integer>> collect) {

HashMap<String,Document> cacheMap = new HashMap<>();

for (Tuple2<String, Integer> tuple2 : collect) {

String keys = tuple2._1;

if (!keys.startsWith("ErrorInfoDaily")) {

continue;

}

/*

"ErrorInfoDaily"+Constant.SPLITSTRING

+"errorCnt"+Constant.SPLITSTRING

+appId+Constant.SPLITSTRING

+appVersion+Constant.SPLITSTRING

+appChannel+Constant.SPLITSTRING

+appPlatform+Constant.SPLITSTRING

+osType+Constant.SPLITSTRING

+deviceStyle+Constant.SPLITSTRING

+errorId+Constant.SPLITSTRING

+transfertime(createdAtMs);

*/

String[] splitvalues = keys.split(Constant.SPLITSTRING);

String tablename = splitvalues[0]; // ErrorInfoDaily

String fieldname = splitvalues[1];//errorCnt

String appId = splitvalues[2];//appId

String appVersion = splitvalues[3];//appVersion

String appChannel = splitvalues[4];//appChannel

String appPlatform = splitvalues[5];//appPlatform

String osType = splitvalues[6];//osType

String deviceStyle = splitvalues[7];//deviceStyle

String errorId = splitvalues[8];//errorId

String timeValue = splitvalues[9];// yyMMdd

Document doc = null;

String keyTemp = tablename+Constant.SPLITSTRING

+appId+Constant.SPLITSTRING

+appVersion+Constant.SPLITSTRING

+appChannel+Constant.SPLITSTRING

+appPlatform+Constant.SPLITSTRING

+osType+Constant.SPLITSTRING

+deviceStyle+Constant.SPLITSTRING

+timeValue+Constant.SPLITSTRING

+errorId;

if (cacheMap.get(keyTemp) != null) {

doc = cacheMap.get(keyTemp);

}else {

doc = errorDao.findoneby(tablename,appId,appVersion,appChannel,

appPlatform,osType,deviceStyle,timeValue,errorId);

}

if (doc == null) {

AppErrorAnaly appErrorAnaly = new AppErrorAnaly();

appErrorAnaly.setTimeValue(timeValue);

appErrorAnaly.setAppId(appId);

appErrorAnaly.setAppVersion(appVersion);

appErrorAnaly.setAppChannel(appChannel);

appErrorAnaly.setAppPlatform(appPlatform);

appErrorAnaly.setDeviceStyle(deviceStyle);

appErrorAnaly.setOsType(osType);

appErrorAnaly.setErrorId(errorId);

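try {

// Serialize the object to a JSON string

String jsonString = objectMapper.writeValueAsString(appErrorAnaly);

// Parse the JSON string into a Document

doc = Document.parse(jsonString);

// Assign a new _id

ObjectId objectId = new ObjectId();

doc.put("_id", objectId);

} catch (IOException e) {

// Without a document there is nothing to update, so skip this key

log.error("failed to serialize AppErrorAnaly for key " + keyTemp, e);

continue;

}

}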

// errorCnt: add this batch's count to the stored total

Long previous = doc.getLong(fieldname);

long batchCount = tuple2._2.longValue();

doc.put(fieldname, previous == null ? batchCount : previous + batchCount);

cacheMap.put(keyTemp, doc);

}

// Write each cached document once, after the whole batch has been merged

dosave(cacheMap);

}

private void dosave(HashMap<String, Document> cacheMap) {

Set<Entry<String,Document>> entrySet = cacheMap.entrySet();

for (Entry<String, Document> map : entrySet) {

String key = map.getKey();

//ErrorInfoDaily

String tableName = key.split(Constant.SPLITSTRING)[0];

Document doc = map.getValue();

errorDao.saveorupdatemongo(tableName, doc);

}

}

}

Constant

package com.jinghang.sparkStreamingmongo.utils;

public class Constant {

public static final String SPLITSTRING = "####";

}
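The comment inside doReduceProcess documents the key as ten parts joined with Constant.SPLITSTRING. As a rough illustration, here is a minimal sketch of building and splitting such a key. The class ErrorKeySketch and its method names are hypothetical and not part of the project, and the sample values are made up.

```java
import java.util.regex.Pattern;

/** Hypothetical helper (not in the original project) for the "####"-delimited keys. */
final class ErrorKeySketch {
    // Same value as Constant.SPLITSTRING in the listing above
    static final String SPLITSTRING = "####";

    /** Joins the parts in the order documented in doReduceProcess. */
    static String build(String table, String field, String appId, String appVersion,
                        String appChannel, String appPlatform, String osType,
                        String deviceStyle, String errorId, String day) {
        return String.join(SPLITSTRING, table, field, appId, appVersion, appChannel,
                appPlatform, osType, deviceStyle, errorId, day);
    }

    /** Splits a key into its parts; the delimiter is quoted so it is matched literally. */
    static String[] parse(String key) {
        // Limit -1 keeps trailing empty parts, so the array length stays predictable
        return key.split(Pattern.quote(SPLITSTRING), -1);
    }

    public static void main(String[] args) {
        // Sample values are placeholders
        String key = build("ErrorInfoDaily", "errorCnt", "app1", "1.0", "store",
                "android", "Android10", "phone", "e42", "200915");
        String[] parts = parse(key);
        System.out.println(parts.length + " parts, table=" + parts[0] + ", day=" + parts[9]);
    }
}
```

A field value that itself contains "####" would shift every later index, so the producer side has to guarantee that the delimiter never appears in the data.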

SparkStreamingMongo

package com.jinghang.sparkStreamingmongo;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFlatMapFunction;

import org.apache.spark.api.java.function.VoidFunction;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaInputDStream;

import org.apache.spark.streaming.api.java.JavaPairDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka010.ConsumerStrategies;

import org.apache.spark.streaming.kafka010.KafkaUtils;

import org.apache.spark.streaming.kafka010.LocationStrategies;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.jinghang.entry.AppErrorLog;

import com.jinghang.sparkStreamingmongo.service.ErrorMapService;

import com.jinghang.sparkStreamingmongo.service.ErrorReduceService;

import com.jinghang.utils.PropertityUtils;

import scala.Tuple2;

public class SparkStreamingMongo {

private static HashMap<String, Object> kafkaParams = new HashMap<>();

private static String topicerror = PropertityUtils.getValue("topicerror");

private static String appstartupspark = PropertityUtils.getValue("appstartupspark");

private static List<String> topics = Arrays.asList(topicerror,appstartupspark);

private static ObjectMapper objectMapper = new ObjectMapper();

private static ErrorMapService errorMapService = null;

private static ErrorReduceService errorReduceService = null;

private static void processmapresuce(JavaStreamingContext jssc,

JavaDStream<ConsumerRecord<String, String>> repartitionedDSteam) {

if (errorMapService == null) {

errorMapService = new ErrorMapService();

}

if (errorReduceService == null) {

errorReduceService = new ErrorReduceService();

}

JavaDStream<String> lines = repartitionedDSteam.map(new Function<ConsumerRecord<String,String>, String>() {

private static final long serialVersionUID = 1L;

@Override

public String call(ConsumerRecord<String, String> record) throws Exception {

// Take the value of the Kafka record

String temp = record.value();

System.err.println(temp);

return temp;

}

});

JavaPairDStream<String, Integer> pairDStream = lines.mapPartitionsToPair(new PairFlatMapFunction<Iterator<String>, String, Integer>() {

private static final long serialVersionUID = 1L;

@Override

public Iterator<Tuple2<String, Integer>> call(Iterator<String> it) throws Exception {

ArrayList<Tuple2<String, Integer>> tuple2list = new ArrayList<Tuple2<String, Integer>>();

while (it.hasNext()) {

String line = it.next();

// Each line has the form className:json, e.g. com.jinghang.entry.AppErrorLog:{...}

String[] splited = line.split(":", 2);

AppErrorLog appErrorLog = (AppErrorLog) objectMapper.readValue(splited[1], Class.forName(splited[0]));

errorMapService.processmap(tuple2list,appErrorLog);

}

return tuple2list.iterator();

}

});

JavaPairDStream<String, Integer> wordCounts = pairDStream.reduceByKey(new Function2<Integer, Integer, Integer>() {

private static final long serialVersionUID = 1L;

@Override

public Integer call(Integer v1, Integer v2) throws Exception {

return v1+v2;

}

});

wordCounts.foreachRDD(new VoidFunction<JavaPairRDD<String,Integer>>() {

private static final long serialVersionUID = 1L;

@Override

public void call(JavaPairRDD<String, Integer> res) throws Exception {

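// Collect once: every collect() call runs the job again

List<Tuple2<String, Integer>> collected = res.collect();

System.err.println(collected);

errorReduceService.doReduceProcess(collected);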

}

});

}

public static void main(String[] args) {

SparkConf conf = new SparkConf().setAppName("SparkStreamingReceive").setMaster("local[3]");

conf.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer");

JavaSparkContext jsc = new JavaSparkContext(conf);

JavaStreamingContext jssc = new JavaStreamingContext(jsc, Durations.seconds(5));

kafkaParams.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, PropertityUtils.getValue("brokerList"));

kafkaParams.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

kafkaParams.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

kafkaParams.put(ConsumerConfig.GROUP_ID_CONFIG, PropertityUtils.getValue("groupid"));

kafkaParams.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, PropertityUtils.getValue("offsetreset"));

kafkaParams.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, PropertityUtils.getValue("autocommit"));

JavaInputDStream<ConsumerRecord<String, String>> directStream = KafkaUtils.createDirectStream(jssc,

LocationStrategies.PreferConsistent(),

ConsumerStrategies.<String, String>Subscribe(topics, kafkaParams));

// Repartition to raise parallelism

JavaDStream<ConsumerRecord<String, String>> repartitionedDSteam = directStream.repartition(2);

processmapresuce(jssc,repartitionedDSteam);

jssc.start();

try {

jssc.awaitTermination();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}
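Stripped of Spark and MongoDB, the job above does three things: it splits each record at the first colon, sums the counts per key within a batch, and adds the batch totals to the stored totals. The sketch below shows those steps with plain collections. MapReduceSketch and its methods are hypothetical names used only for illustration, and the in-memory map stands in for the MongoDB collection.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical, Spark-free illustration of the per-batch logic. */
final class MapReduceSketch {

    /** Splits "className:json" at the first colon only, so colons inside the JSON survive. */
    static String[] splitRecord(String line) {
        String[] parts = line.split(":", 2);
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected className:json but got: " + line);
        }
        return parts;
    }

    /** Local equivalent of reduceByKey with (v1, v2) -> v1 + v2. */
    static Map<String, Integer> sumByKey(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> totals = new HashMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            totals.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return totals;
    }

    /** Adds one batch to the running totals, as doReduceProcess does for errorCnt. */
    static void mergeBatch(Map<String, Long> stored, Map<String, Integer> batch) {
        for (Map.Entry<String, Integer> entry : batch.entrySet()) {
            stored.merge(entry.getKey(), entry.getValue().longValue(), Long::sum);
        }
    }
}
```

Passing 2 as the limit to split matters here because the JSON payload almost always contains further colons.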

Run order: AppInfoService -> SparkStreamingMongo -> AppClient

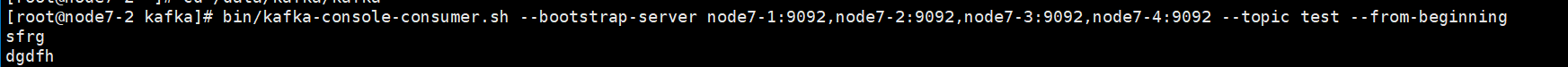

Start a Kafka client on any one node, and start MongoDB so that its address and port match the properties file.

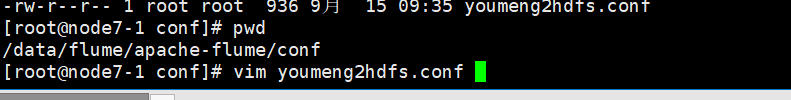
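The code reads its settings through PropertityUtils.getValue, whose source is not shown. The keys it asks for are mongoaddr, mongoport, brokerList, groupid, offsetreset, autocommit, topicerror and appstartupspark. A minimal sketch of such a lookup, assuming a standard key=value properties file, might look like this. PropertiesSketch is a hypothetical stand-in, and every value in main is a placeholder rather than the author's setting.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical stand-in for PropertityUtils, whose source is not shown in the post. */
final class PropertiesSketch {

    /** Parses key=value text; a real project would more likely read a classpath file. */
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    /** Fails fast on a missing key instead of returning null. */
    static String require(Properties props, String key) {
        String value = props.getProperty(key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("missing property: " + key);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        // All values are placeholders; only the key names come from the code above
        String text = String.join("\n",
                "mongoaddr=localhost",
                "mongoport=27017",
                "brokerList=localhost:9092",
                "groupid=group01",
                "offsetreset=latest",
                "autocommit=true",
                "topicerror=apperror",
                "appstartupspark=appstartup");
        Properties props = load(text);
        int port = Integer.parseInt(require(props, "mongoport"));
        System.out.println(require(props, "mongoaddr") + ":" + port);
    }
}
```

Failing on a missing key at startup is easier to diagnose than a NullPointerException thrown later from Integer.valueOf or from the MongoClient constructor.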

Flume part:

kafka->flume->hdfs

flume: nohup bin/flume-ng agent --conf conf --conf-file conf/youmeng2hdfs.conf --name appErrorLog -Dflume.root.logger=INFO,console &

appErrorLog.sources = s1

appErrorLog.channels = c1

appErrorLog.sinks = s1

appErrorLog.sources.s1.type = org.apache.flume.source.kafka.KafkaSource

appErrorLog.sources.s1.zookeeperConnect = node7-1:2181,node7-2:2181,node7-3:2181

appErrorLog.sources.s1.topic = apperrorhive

appErrorLog.sources.s1.groupId = group01

appErrorLog.sources.s1.channels = c1

appErrorLog.sources.s1.interceptors = i1

appErrorLog.sources.s1.interceptors.i1.type = timestamp

appErrorLog.sources.s1.kafka.consumer.timeout.ms = 1000

appErrorLog.channels.c1.type = memory

appErrorLog.channels.c1.capacity = 1000

appErrorLog.channels.c1.transactionCapacity = 1000

appErrorLog.sinks.s1.type = hdfs

appErrorLog.sinks.s1.hdfs.path =/flume/appErrorLog/%y-%m-%d

appErrorLog.sinks.s1.hdfs.fileType = DataStream

appErrorLog.sinks.s1.hdfs.rollSize = 0

appErrorLog.sinks.s1.hdfs.rollCount = 0

appErrorLog.sinks.s1.hdfs.rollInterval = 30

appErrorLog.sinks.s1.channel = c1
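In the sink path, %y-%m-%d is expanded from the event's timestamp header, which the timestamp interceptor on the source adds. The result is one directory per day, such as /flume/appErrorLog/20-09-15, and that directory name is what the Hive partition is later pointed at. The following sketch reproduces the mapping outside Flume. HdfsPathSketch is a hypothetical helper, and the time zone is passed in explicitly because the directory depends on which zone the agent uses.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Hypothetical illustration of how a timestamp maps to the daily HDFS directory. */
final class HdfsPathSketch {

    /** Returns the directory that hdfs.path = /flume/appErrorLog/%y-%m-%d would resolve to. */
    static String dayDirectory(long timestampMs, ZoneId zone) {
        // yy-MM-dd mirrors %y-%m-%d: two-digit year, two-digit month, two-digit day
        DateTimeFormatter fmt = DateTimeFormatter.ofPattern("yy-MM-dd").withZone(zone);
        return "/flume/appErrorLog/" + fmt.format(Instant.ofEpochMilli(timestampMs));
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        System.out.println(dayDirectory(now, ZoneId.systemDefault()));
    }
}
```

Events that arrive shortly after midnight land in a new directory, so a Hive partition has to be added for each new day before its data becomes visible to queries.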

hive:

1. create database youmeng;

2. use youmeng;

3. Create the external table youmeng.appErrorLog:

create external table youmeng.appErrorLog(
createtime string,
appId string,
deviceId string,
appVersion string,
appChannel string,
appPlatform string,
osType string,
deviceStyle string,
errorBrief string,
errorDetail string,
timesecond string
)
PARTITIONED BY (dates String)
ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
LOCATION '/flume/appErrorLog/';

4. alter table youmeng.appErrorLog add partition (dates="20-09-15") location '20-09-15';

5. The partition directory appears.

6. Run the code: AppInfoService -> SparkStreamingMongo -> AppClient

select * from apperrorlog;
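Because the table is declared with FIELDS TERMINATED BY '\t', each line that Flume writes to HDFS has to carry the eleven columns in table order, separated by tabs. The post does not show the code that produces those lines, so the following is only a sketch under that assumption. HiveRowSketch is a hypothetical helper, and replacing embedded tabs and newlines with spaces is my own choice for keeping a stack trace in errorDetail from breaking the row.

```java
/** Hypothetical helper that formats one event as a tab-delimited row for the Hive table. */
final class HiveRowSketch {
    // Column order copied from the create table statement above
    static final String[] COLUMNS = {
        "createtime", "appId", "deviceId", "appVersion", "appChannel", "appPlatform",
        "osType", "deviceStyle", "errorBrief", "errorDetail", "timesecond"
    };

    /** Joins the values with tabs, replacing embedded tabs and line breaks with spaces. */
    static String toRow(String... values) {
        if (values.length != COLUMNS.length) {
            throw new IllegalArgumentException(
                    "expected " + COLUMNS.length + " values but got " + values.length);
        }
        StringBuilder row = new StringBuilder();
        for (int i = 0; i < values.length; i++) {
            if (i > 0) {
                row.append('\t');
            }
            String value = values[i] == null ? "" : values[i];
            row.append(value.replaceAll("[\\t\\r\\n]+", " "));
        }
        return row.toString();
    }
}
```

If a value could contain a raw tab and it were written unchanged, Hive would shift every later column by one, and the select above would show misaligned fields rather than fail.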

On HDFS:
