1. Format of the data file to import

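Import a text file in the format below into HBase: the first field (a string) is the rowkey and the second field (a number) is the value. Exactly one of the columns must serve as the rowkey, but it does not have to be the first column.

For example, to use the numeric field of the text below as the rowkey, just move the rowkey entry (HBASE_ROW_KEY) to the matching position in the importtsv.columns parameter.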

the,213
to,99
and,58
a,53
you,43
is,37
will,32
in,32
of,31
it,28

The file can be downloaded here.
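The column-position rule above can be illustrated with a small Java sketch. `ColumnMapping.map` is a hypothetical helper, not part of ImportTsv: it simply pairs each entry of an importtsv.columns-style spec with the field at the same position, so moving HBASE_ROW_KEY changes which field becomes the rowkey. The column name `f:word` in the second call is made up for the example.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical helper: pairs each entry of an importtsv-style column spec with
// the field at the same position in one input line. Not ImportTsv's own code.
class ColumnMapping {

    static final String ROW_KEY = "HBASE_ROW_KEY";

    static Map<String, String> map(String line, String separator, String columnsSpec) {
        String[] columns = columnsSpec.split(",");
        // limit -1 keeps trailing empty fields, so positions stay aligned
        String[] fields = line.split(Pattern.quote(separator), -1);
        if (fields.length != columns.length) {
            throw new IllegalArgumentException(
                    "expected " + columns.length + " fields but got " + fields.length);
        }
        Map<String, String> result = new LinkedHashMap<>();
        for (int i = 0; i < columns.length; i++) {
            result.put(columns[i], fields[i]); // spec entry i names field i
        }
        if (!result.containsKey(ROW_KEY)) {
            throw new IllegalArgumentException("spec has no " + ROW_KEY);
        }
        return result;
    }

    public static void main(String[] args) {
        // Word as rowkey, count stored in f:count
        System.out.println(map("the,213", ",", "HBASE_ROW_KEY,f:count")); // {HBASE_ROW_KEY=the, f:count=213}
        // Swapping the positions makes the number the rowkey instead
        System.out.println(map("the,213", ",", "f:word,HBASE_ROW_KEY")); // {f:word=the, HBASE_ROW_KEY=213}
    }
}
```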

2. Prepare the data file and the table

Put the file into HDFS and create the HBase table wordcount:

[root@com5 ~]# hdfs dfs -put word_count.csv

[root@com5 ~]# hdfs dfs -ls /user/root

Found 2 items

drwx------ - root supergroup 0 2014-03-05 17:05 /user/root/.Trash

-rw-r--r-- 3 root supergroup 7217 2014-03-10 09:38 /user/root/word_count.csv

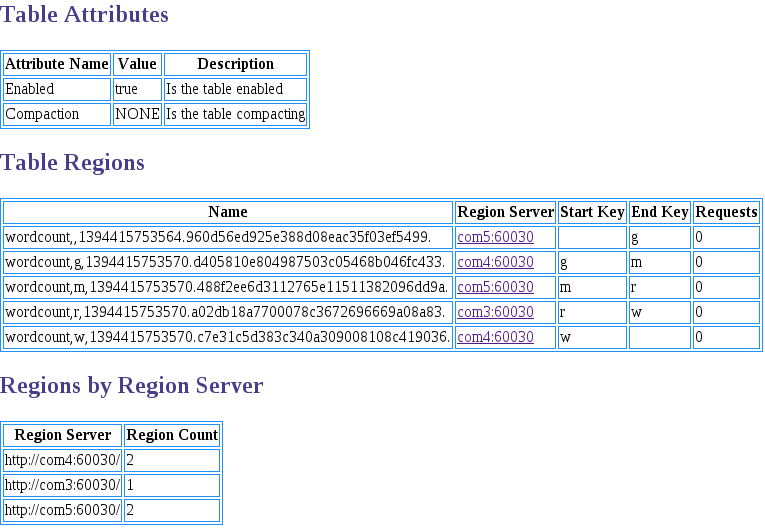

hbase(main):002:0> create 'wordcount', {NAME => 'f'}, {SPLITS => ['g', 'm', 'r', 'w']}创建的 wordcount 表:
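The SPLITS option pre-splits the table at the keys g, m, r and w, so wordcount starts with five regions. The sketch below, a plain-Java illustration rather than HBase code, lists the resulting key ranges and shows which region a few words from the sample file would fall into, assuming rowkeys are compared as unsigned bytes (HBase's rowkey order).

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch of how pre-split keys carve a table into regions. Each region covers
// [startKey, endKey); an empty key stands for the start or end of the table.
class SplitRegions {

    static List<String> describe(String[] splitKeys) {
        List<String> regions = new ArrayList<>();
        String start = "";
        for (String split : splitKeys) {
            regions.add("['" + start + "', '" + split + "')");
            start = split;
        }
        regions.add("['" + start + "', '')"); // last region runs to the end of the table
        return regions;
    }

    // Region index for a rowkey = number of split keys <= rowkey,
    // compared as unsigned bytes.
    static int regionIndex(String[] splitKeys, String rowKey) {
        byte[] key = rowKey.getBytes(StandardCharsets.UTF_8);
        int index = 0;
        for (String split : splitKeys) {
            if (Arrays.compareUnsigned(split.getBytes(StandardCharsets.UTF_8), key) > 0) {
                break; // splitKeys are sorted, so no later key can be <= rowKey
            }
            index++;
        }
        return index;
    }

    public static void main(String[] args) {
        String[] splits = { "g", "m", "r", "w" };
        describe(splits).forEach(System.out::println);
        for (String word : new String[] { "a", "the", "you" }) {
            System.out.println(word + " -> region " + regionIndex(splits, word));
        }
    }
}
```

A key equal to a split key (for example "g") belongs to the region that starts at that key, because region start keys are inclusive.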

3. Generate the HFiles

[root@com5 ~]# hadoop jar /usr/lib/hbase/hbase-0.94.6-cdh4.5.0-security.jar importtsv \
> -Dimporttsv.separator=, \
> -Dimporttsv.bulk.output=hfile_output \
> -Dimporttsv.columns=HBASE_ROW_KEY,f:count wordcount word_count.csv

The importtsv.columns parameter must contain exactly one HBASE_ROW_KEY entry, which marks the rowkey column. The value above makes the first column the rowkey and stores the second column as the value of f:count.
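A hypothetical check for the rule just stated, written as a sketch: it accepts a spec only if it contains exactly one HBASE_ROW_KEY and every other entry has a non-empty column family before any `:`. This mirrors the rule as described here; ImportTsv's real validation may differ in detail.

```java
// Hypothetical check enforcing the rule above: exactly one HBASE_ROW_KEY and,
// for every other entry, a non-empty column family before any ':'.
// It mirrors the rule as described, not ImportTsv's actual validation code.
class ColumnSpecCheck {

    static final String ROW_KEY = "HBASE_ROW_KEY";

    static boolean isValid(String columnsSpec) {
        int rowKeys = 0;
        for (String raw : columnsSpec.split(",", -1)) {
            String column = raw.trim();
            if (column.equals(ROW_KEY)) {
                rowKeys++;
            } else {
                int colon = column.indexOf(':');
                String family = colon < 0 ? column : column.substring(0, colon);
                if (family.isEmpty()) {
                    return false; // e.g. ":count" or an empty entry
                }
            }
        }
        return rowKeys == 1;
    }

    public static void main(String[] args) {
        System.out.println(isValid("HBASE_ROW_KEY,f:count"));               // true
        System.out.println(isValid("f:word,f:count"));                      // false: no rowkey
        System.out.println(isValid("HBASE_ROW_KEY,HBASE_ROW_KEY,f:count")); // false: two rowkeys
    }
}
```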

Note that the importtsv.bulk.output parameter sets the directory for the generated files; without it, importtsv loads the data directly into the HBase table.

When I ran the import, the job failed in this method:

String org.apache.hadoop.mapreduce.lib.partition.TotalOrderPartitioner.getPartitionFile(Configuration conf)

/**
 * Get the path to the SequenceFile storing the sorted partition keyset.
 * @see #setPartitionFile(Configuration, Path)
 */
public static String getPartitionFile(Configuration conf) {
    return conf.get(PARTITIONER_PATH, DEFAULT_PATH);
}

With the constants expanded, the call is:

conf.get("mapreduce.totalorderpartitioner.path", "_partition.lst")

and the job fails with:

java.lang.Exception: java.lang.IllegalArgumentException: Can't read partitions file
	at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:406)
Caused by: java.lang.IllegalArgumentException: Can't read partitions file
	at org.apache.hadoop.mapreduce.lib.partition.TotalOrderPartitioner.setConf(TotalOrderPartitioner.java:108)
	at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:73)
	at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:133)
	at org.apache.hadoop.mapred.MapTask$NewOutputCollector.<init>(MapTask.java:587)
	at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:656)
	at org.apache.hadoop.mapred.MapTask.run(MapTask.java:330)
	at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:268)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:441)
	at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:303)
	at java.util.concurrent.FutureTask.run(FutureTask.java:138)
	at java.util.concurrent.ThreadPoolExecutor$Worker.runTask(ThreadPoolExecutor.java:886)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:908)
	at java.lang.Thread.run(Thread.java:662)
Caused by: java.io.FileNotFoundException: File file:/root/_partition.lst does not exist
	at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:468)
	at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:380)
	at org.apache.hadoop.io.SequenceFile$Reader.<init>(SequenceFile.java:1704)
	at org.apache.hadoop.io.SequenceFile$Reader.<init>(SequenceFile.java:1728)
	at org.apache.hadoop.mapreduce.lib.partition.TotalOrderPartitioner.readPartitions(TotalOrderPartitioner.java:293)
	at org.apache.hadoop.mapreduce.lib.partition.TotalOrderPartitioner.setConf(TotalOrderPartitioner.java:80)
	... 12 more

To me this was a rather strange problem, and I am not sure whether it is specific to CDH 4.5: the job insists on reading a partitions file that does not exist. The trace shows that the map task ran under LocalJobRunner and looked for the default relative name _partition.lst on the local file system, as file:/root/_partition.lst.
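The path in the trace follows from the default value shown above. The sketch below replays that lookup under two simplifications: a `Map` stands in for Hadoop's `Configuration`, and `java.net.URI` stands in for Hadoop's `Path`. The working directory `file:/root/` is an assumption based on the `[root@com5 ~]` prompt and the local path in the trace.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Sketch of why the error mentions file:/root/_partition.lst. A plain Map stands in
// for Hadoop's Configuration and java.net.URI for Hadoop's Path; both are simplifications.
class PartitionPathDemo {

    static final String PARTITIONER_PATH = "mapreduce.totalorderpartitioner.path";
    static final String DEFAULT_PATH = "_partition.lst";

    // Same shape as conf.get(PARTITIONER_PATH, DEFAULT_PATH): unset key -> default
    static String partitionFile(Map<String, String> conf) {
        return conf.getOrDefault(PARTITIONER_PATH, DEFAULT_PATH);
    }

    // A relative name is resolved against the working directory; an absolute URI is kept.
    // The working directory must end with '/' for URI.resolve to treat it as a directory.
    static URI resolve(URI workingDir, String path) {
        return workingDir.resolve(path);
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        URI cwd = URI.create("file:/root/"); // assumed: local FS, root's home directory
        System.out.println(resolve(cwd, partitionFile(conf))); // file:/root/_partition.lst

        conf.put(PARTITIONER_PATH, "hdfs://com3:8020/tmp/_partition.lst");
        System.out.println(resolve(cwd, partitionFile(conf))); // hdfs://com3:8020/tmp/_partition.lst
    }
}
```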

I did not dig any deeper, because the condition can be satisfied by hand.
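For context, the partition file is what a total-order partitioner reads to decide which reduce task receives each key. As far as I understand Hadoop's TotalOrderPartitioner, a job with R reduce tasks needs exactly R - 1 sorted split keys in it, so an empty file (zero keys) is only consistent with a single reduce task. The following simplified sketch expresses that rule in plain Java; `PartitionFileCheck` and its messages are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Simplified sketch of the rule a total-order partitioner applies to its
// partition file: R reduce tasks need exactly R - 1 split keys in strictly
// ascending (unsigned byte) order. Not Hadoop's code; messages are made up.
class PartitionFileCheck {

    static Optional<String> problem(List<byte[]> splitPoints, int numReduceTasks) {
        if (splitPoints.size() != numReduceTasks - 1) {
            return Optional.of("expected " + (numReduceTasks - 1)
                    + " split points, found " + splitPoints.size());
        }
        for (int i = 1; i < splitPoints.size(); i++) {
            if (Arrays.compareUnsigned(splitPoints.get(i - 1), splitPoints.get(i)) >= 0) {
                return Optional.of("split points not sorted at index " + i);
            }
        }
        return Optional.empty();
    }

    static byte[] bytes(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // An empty partition file only fits a job with one reduce task
        System.out.println(problem(List.of(), 1));
        System.out.println(problem(List.of(), 5));
        // The table's four split keys would fit a job with five reduce tasks
        List<byte[]> keys = List.of(bytes("g"), bytes("m"), bytes("r"), bytes("w"));
        System.out.println(problem(keys, 5));
    }
}
```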

First, create an empty SequenceFile to serve as the partition file. Its key and value classes must be ImmutableBytesWritable and NullWritable, respectively:

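import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.SequenceFile;

// Class name is illustrative
public class CreatePartitionFile {

    // Split keys used when the table was created; not written, so the file stays empty
    private static final String[] KEYS = { "g", "m", "r", "w" };

    public static void main(String[] args) throws IOException {
        String uri = "hdfs://com3:8020/tmp/_partition.lst";
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(uri), conf);
        Path path = new Path(uri);

        SequenceFile.Writer writer = null;
        try {
            // Key/value classes must be ImmutableBytesWritable and NullWritable
            writer = SequenceFile.createWriter(fs, conf, path,
                    ImmutableBytesWritable.class, NullWritable.class);
            // Nothing is appended: the partition file contains no split points
        } finally {
            IOUtils.closeStream(writer);
        }
    }
}

Because the import runs as the hbase user, there is also a permissions problem on top of this, so I moved the data file word_count.csv to /tmp on HDFS.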

Then rerun importtsv and, when it finishes, change the owner of the output directory to hbase:

Usage: importtsv -Dimporttsv.columns=a,b,c <tablename> <inputdir>

# hadoop jar /usr/lib/hbase/hbase-0.94.6-cdh4.5.0-security.jar importtsv \
  -Dimporttsv.separator=, \
  -Dimporttsv.bulk.output=/tmp/bulk_output \
  -Dimporttsv.columns=HBASE_ROW_KEY,f:count wordcount /tmp/word_count.csv

sudo -u hdfs hdfs dfs -chown -R hbase:hbase /tmp/bulk_output

[root@com5 ~]# hdfs dfs -ls -R /tmp/bulk_output
-rw-r--r-- 3 hbase hbase 0 2014-03-10 23:43 /tmp/bulk_output/_SUCCESS
drwxr-xr-x - hbase hbase 0 2014-03-10 23:43 /tmp/bulk_output/f
-rw-r--r-- 3 hbase hbase 26005 2014-03-10 23:43 /tmp/bulk_output/f/e60f1c870f8040158e18efe2a96e1864

4. Load the result files into HBase

# sudo -u hbase hbase org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles /tmp/bulk_output wordcount

The arguments are the importtsv output directory and the name of the HBase table.
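LoadIncrementalHFiles expects the layout produced above: one subdirectory per column family (here f) holding the HFiles. The sketch below lists that layout from a local copy of the output directory, using java.nio in place of HDFS. Skipping top-level plain files such as _SUCCESS and names starting with `_` is an assumption modeled on the listing, not a statement about the HBase source.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Sketch of the layout LoadIncrementalHFiles consumes: <output>/<family>/<hfile>.
// Walks a LOCAL directory with java.nio as a stand-in for HDFS and skips top-level
// plain files (such as _SUCCESS) and any name starting with "_" (an assumption).
class BulkOutputLayout {

    static List<String> familyFiles(Path outputDir) {
        List<String> result = new ArrayList<>();
        try (DirectoryStream<Path> families = Files.newDirectoryStream(outputDir)) {
            for (Path family : families) {
                String familyName = family.getFileName().toString();
                if (!Files.isDirectory(family) || familyName.startsWith("_")) {
                    continue; // not a column family directory
                }
                try (DirectoryStream<Path> files = Files.newDirectoryStream(family)) {
                    for (Path file : files) {
                        String fileName = file.getFileName().toString();
                        if (Files.isRegularFile(file) && !fileName.startsWith("_")) {
                            result.add(familyName + "/" + fileName);
                        }
                    }
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Collections.sort(result);
        return result;
    }

    public static void main(String[] args) {
        // Usage: java BulkOutputLayout /path/to/local/copy/of/bulk_output
        familyFiles(Path.of(args[0])).forEach(System.out::println);
    }
}
```

Run against a local copy of /tmp/bulk_output, it would print a single f/<hfile> line for the listing above.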

The imported data can now be checked:

[root@com5 ~]# wc -l word_count.csv

722 word_count.csv

hbase(main):019:0* count 'wordcount'

722 row(s) in 0.3490 seconds
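The two 722s agree, which suggests every word occurs only once in word_count.csv: lines that share a rowkey are written to the same row, so `count` would then report fewer rows than `wc -l` reports lines. A minimal sketch for checking this beforehand, assuming the rowkey is the first comma-separated field:

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Sketch: count distinct rowkeys (the first field of each CSV line). Lines that share
// a rowkey end up in the same HBase row, so `count` matches `wc -l` only if all keys are unique.
class RowKeyCounter {

    static int distinctRowKeys(List<String> lines, char separator) {
        Set<String> keys = new HashSet<>();
        for (String line : lines) {
            if (line.isEmpty()) {
                continue; // skip blank lines
            }
            int pos = line.indexOf(separator);
            keys.add(pos < 0 ? line : line.substring(0, pos));
        }
        return keys.size();
    }

    public static void main(String[] args) {
        List<String> lines = List.of("the,213", "to,99", "the,1");
        System.out.println(distinctRowKeys(lines, ',')); // 2
    }
}
```

To check the real file, pass `Files.readAllLines(Path.of("word_count.csv"))` as `lines`.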

hbase(main):014:0> scan 'wordcount'

ROW COLUMN+CELL

a column=f:count, timestamp=1394466220054, value=53

able column=f:count, timestamp=1394466220054, value=1

about column=f:count, timestamp=1394466220054, value=1

access column=f:count, timestamp=1394466220054, value=1

according column=f:count, timestamp=1394466220054, value=3

across column=f:count, timestamp=1394466220054, value=1

added column=f:count, timestamp=1394466220054, value=1

additional column=f:count, timestamp=1394466220054, value=1

POM used to build the program that creates the SequenceFile:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cloudera</groupId>

<artifactId>cdh4.5</artifactId>

<version>0.0.1-SNAPSHOT</version>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<!-- <scope>test</scope> -->

</dependency>

<dependency>

<groupId>org.mockito</groupId>

<artifactId>mockito-all</artifactId>

<version>1.9.5</version>

<!-- <scope>test</scope> -->

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-annotations</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-archives</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-assemblies</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-auth</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.0.0-cdh4.5.0</version>

<exclusions>

<exclusion>

<artifactId>

hadoop-mapreduce-client-core

</artifactId>

<groupId>org.apache.hadoop</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-datajoin</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-dist</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-distcp</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-app</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>2.0.0-cdh4.5.0</version>

<exclusions>

<exclusion>

<artifactId>

hadoop-mapreduce-client-core

</artifactId>

<groupId>org.apache.hadoop</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-hs</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-hs-plugins</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-shuffle</artifactId>

<version>2.0.0-cdh4.5.0</version>

<exclusions>

<exclusion>

<artifactId>

hadoop-mapreduce-client-core

</artifactId>

<groupId>org.apache.hadoop</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-examples</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-api</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-applications-distributedshell</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-applications-unmanaged-am-launcher</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-client</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-common</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-common</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-nodemanager</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-resourcemanager</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-tests</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-server-web-proxy</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-site</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-core</artifactId>

<version>2.0.0-mr1-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-examples</artifactId>

<version>2.0.0-mr1-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-minicluster</artifactId>

<version>2.0.0-cdh4.5.0</version>

<exclusions>

<exclusion>

<artifactId>

hadoop-mapreduce-client-core

</artifactId>

<groupId>org.apache.hadoop</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-streaming</artifactId>

<version>2.0.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-hbase-handler</artifactId>

<version>0.10.0-cdh4.5.0</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase</artifactId>

<version>0.94.6-cdh4.5.0</version>

</dependency>

</dependencies>

</project>

References:

http://blog.cloudera.com/blog/2013/09/how-to-use-hbase-bulk-loading-and-why/
