目录

- 前言

- 一、数据仓库环境准备

- 二、数仓开发之ODS层(原始数据层)

- 1. 日志表

- 2. 业务表

- 2.1. 活动信息表(全量表)

- 2.2. 活动规则表(全量表)

- 2.3. 一级品类表(全量表)

- 2.4. 二级品类表(全量表)

- 2.5. 三级品类表(全量表)

- 2.6. 编码字典表(全量表)

- 2.7. 省份表(全量表)

- 2.8. 地区表(全量表)

- 2.9. 品牌表(全量表)

- 2.10. 购物车表(全量表)

- 2.11. 优惠券信息表(全量表)

- 2.12. 商品平台属性表(全量表)

- 2.13. 商品表(全量表)

- 2.14. 商品销售属性值表(全量表)

- 2.15. SPU表(全量表)

- 2.16. 购物车表(增量表)

- 2.17. 评论表(增量表)

- 2.18. 优惠券领用表(增量表)

- 2.19. 收藏表(增量表)

- 2.20. 订单明细表(增量表)

- 2.21. 订单明细活动关联表(增量表)

- 2.22 订单明细优惠券关联表(增量表)

- 2.23. 订单表(增量表)

- 2.24. 退单表(增量表)

- 2.25. 订单状态流水表(增量表)

- 2.26. 支付表(增量表)

- 2.27. 退款表(增量表)

- 2.28. 用户表(增量表)

- 2.29. 数据装载脚本(日期可选)

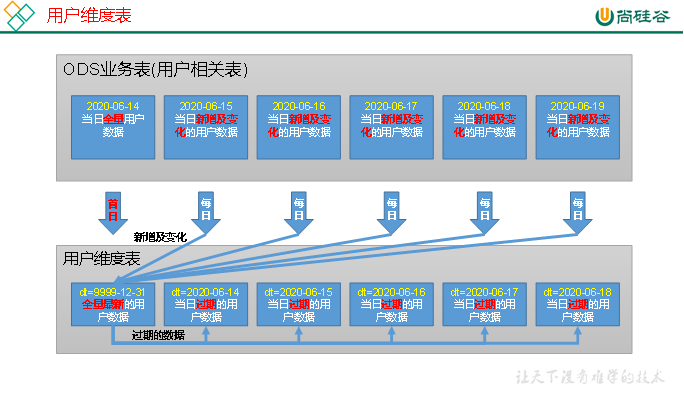

- 三、数仓开发之DIM层(公共维度层)

- 小知识

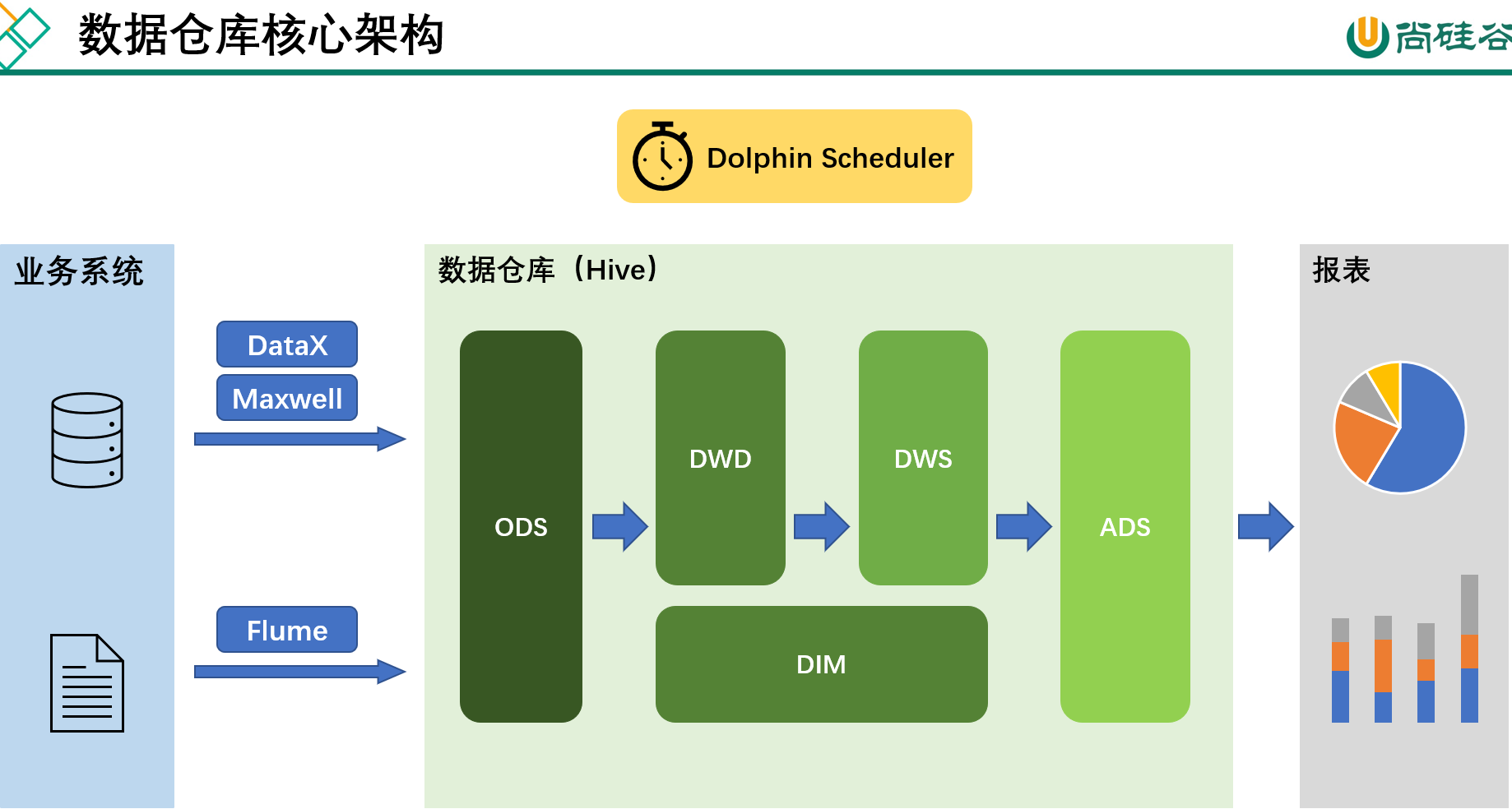

前言

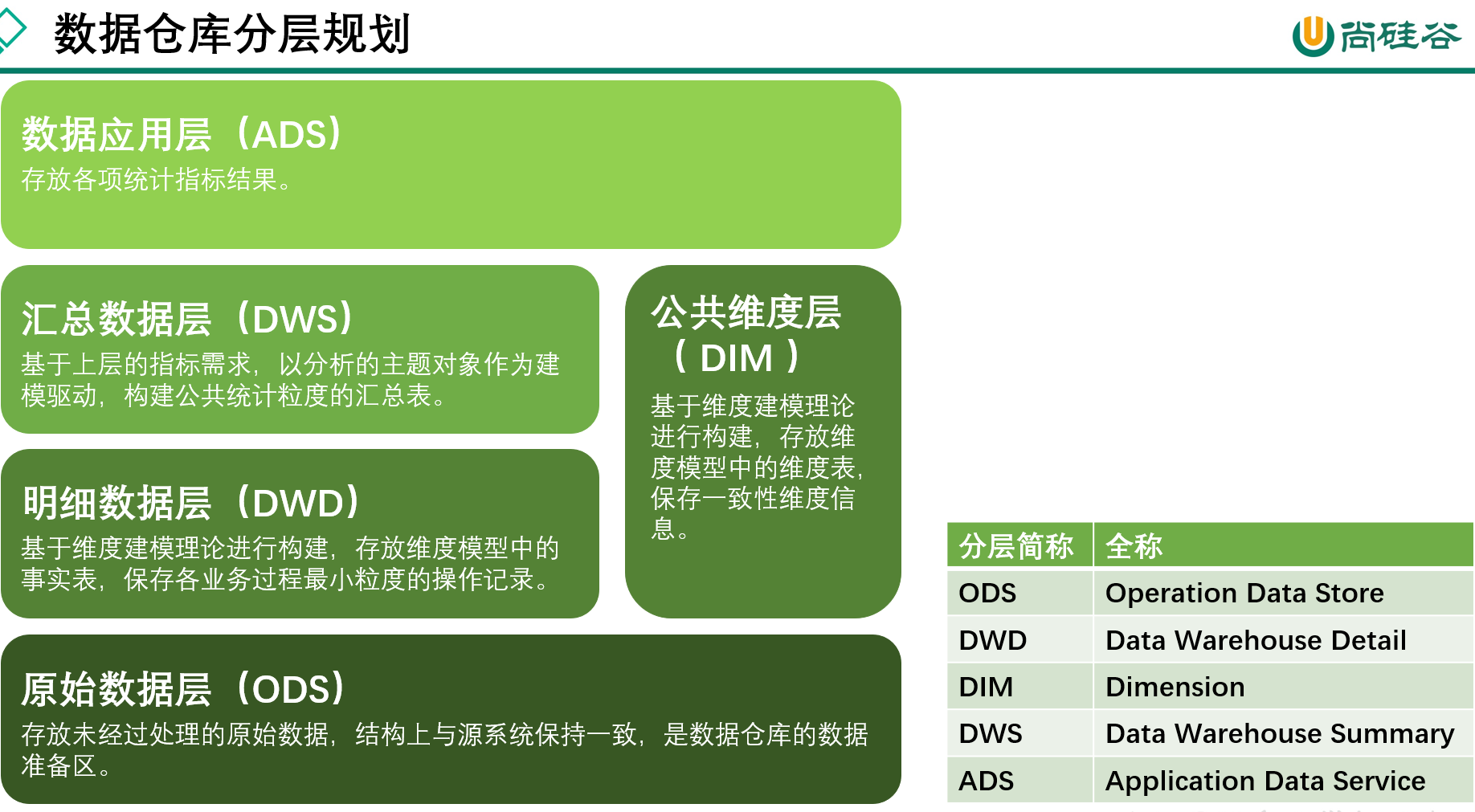

几个层次复习一下

颜色由深到浅表示数据量由多至少

一、数据仓库环境准备

1. 数据仓库运行环境

1.1. Hive on Spark配置

🔨Hive引擎简介:Hive引擎包括默认MR、Tez、Spark

Hive on Spark:Hive既作为存储元数据又负责SQL的解析优化,语法是HQL语法,执行引擎变成了Spark,Spark负责采用RDD执行(生态好组件多)Spark on Hive: Hive只作为存储元数据,Spark负责SQL解析优化,语法是Spark SQL语法,Spark负责采用RDD执行(相比来说快一点)

🔨 兼容性说明:官网下载的Hive3.1.3和Spark3.0.0默认是不兼容的。因为Hive3.1.3支持的Spark版本是2.3.0,所以需要我们重新编译Hive3.1.3版本(查看源码pom.xml可以得知引入的是什么版本,升3.0.0也从这下手)

- 编译步骤:官网下载Hive3.1.3源码,修改pom文件中引用的Spark版本为3.0.0,如果编译通过,直接打包获取jar包。如果报错,就根据提示,修改相关方法,直到不报错,打包获取jar包,之前安装的资料中的hive-3.1.2已经修改好了源码可以直接用,👉点击前往查看安装3.1.2版本

🔨 在Hive所在节点部署Spark(如果之前已经部署了Spark,则该步骤可以跳过)

# 懒得修bug放弃折腾!直接上传资料中的(微信公众号回复数仓5.0即可)

# 一条龙代码!

cd /opt/software;tar -zxvf spark-3.0.0-bin-hadoop3.2.tgz -C /opt/module/;mv /opt/module/spark-3.0.0-bin-hadoop3.2 /opt/module/spark;vim /etc/profile.d/my_env.sh

# 添加如下内容

# SPARK_HOME

export SPARK_HOME=/opt/module/spark

export PATH=$PATH:$SPARK_HOME/bin

# 刷新一下,在hive中创建spark配置文件

source /etc/profile.d/my_env.sh;vim /opt/module/hive/conf/spark-defaults.conf

# 添加如下内容

spark.master yarn

spark.eventLog.enabled true

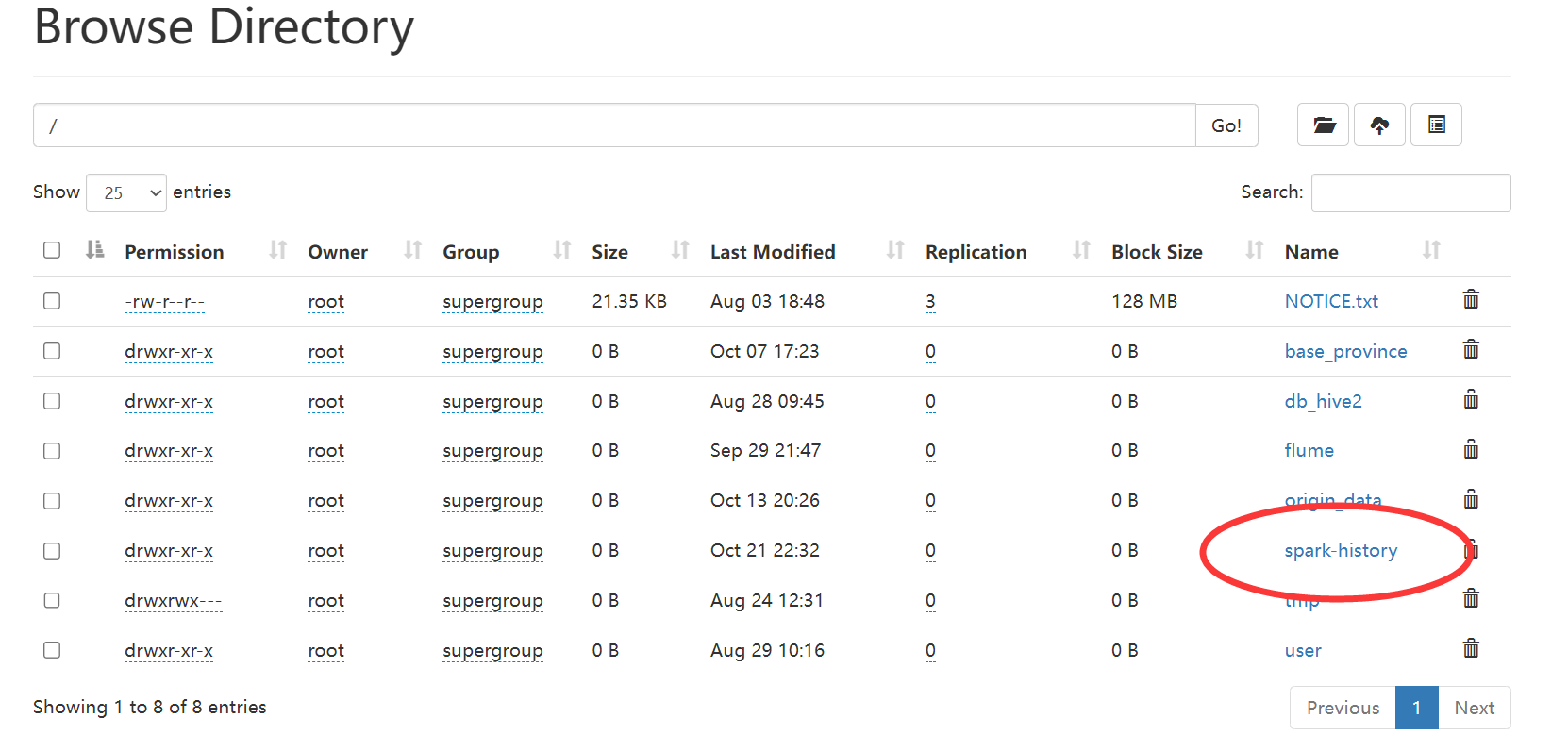

spark.eventLog.dir hdfs://hadoop102:8020/spark-history

spark.executor.memory 1g

spark.driver.memory 1g

# 在HDFS创建如下路径,用于存储历史日志

hadoop fs -mkdir /spark-history

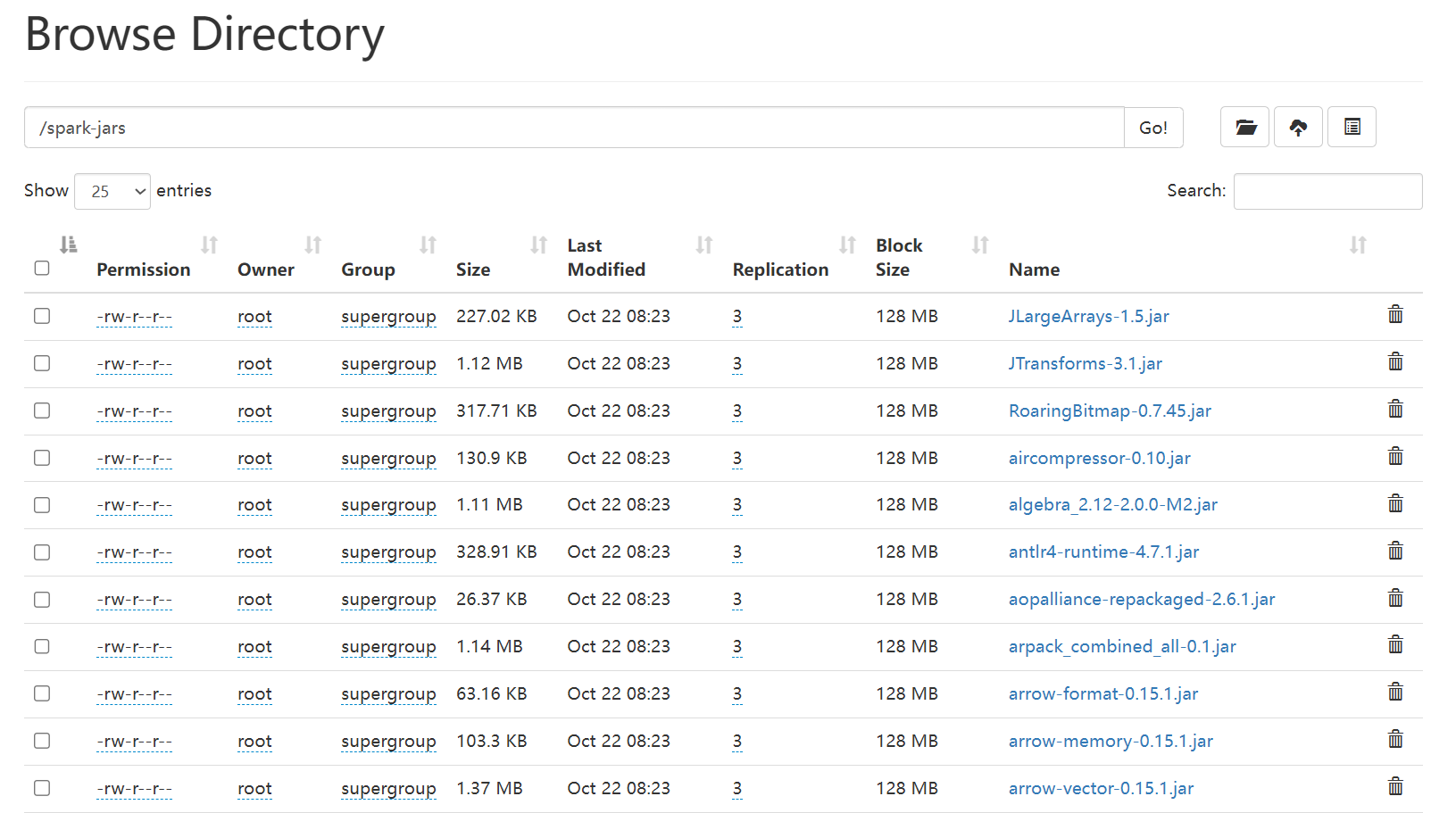

🔨向HDFS上传Spark纯净版jar包

说明1️⃣:由于Spark3.0.0非纯净版默认支持的是hive2.3.7版本,直接使用会和安装的Hive3.1.2出现兼容性问题。所以采用Spark纯净版jar包,不包含hadoop和hive相关依赖,避免冲突

说明2️⃣:Hive任务最终由Spark来执行,Spark任务资源分配由Yarn来调度,该任务有可能被分配到集群的任何一个节点。所以需要将Spark的依赖上传到HDFS集群路径,这样集群中任何一个节点都能获取到

# 上传并解压spark-3.0.0-bin-without-hadoop.tgz

cd /opt/software;tar -zxvf /opt/software/spark-3.0.0-bin-without-hadoop.tgz

# 上传Spark纯净版jar包到HDFS

hadoop fs -mkdir /spark-jars;hadoop fs -put spark-3.0.0-bin-without-hadoop/jars/* /spark-jars

# 修改hive-site.xml文件

vim /opt/module/hive/conf/hive-site.xml

# 添加如下内容(注意要在configuration标签里面添加)

<!--Spark依赖位置(注意:端口号8020必须和namenode的端口号一致)-->

<property>

<name>spark.yarn.jars</name>

<value>hdfs://hadoop102:8020/spark-jars/*</value>

</property>

<!--Hive执行引擎-->

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

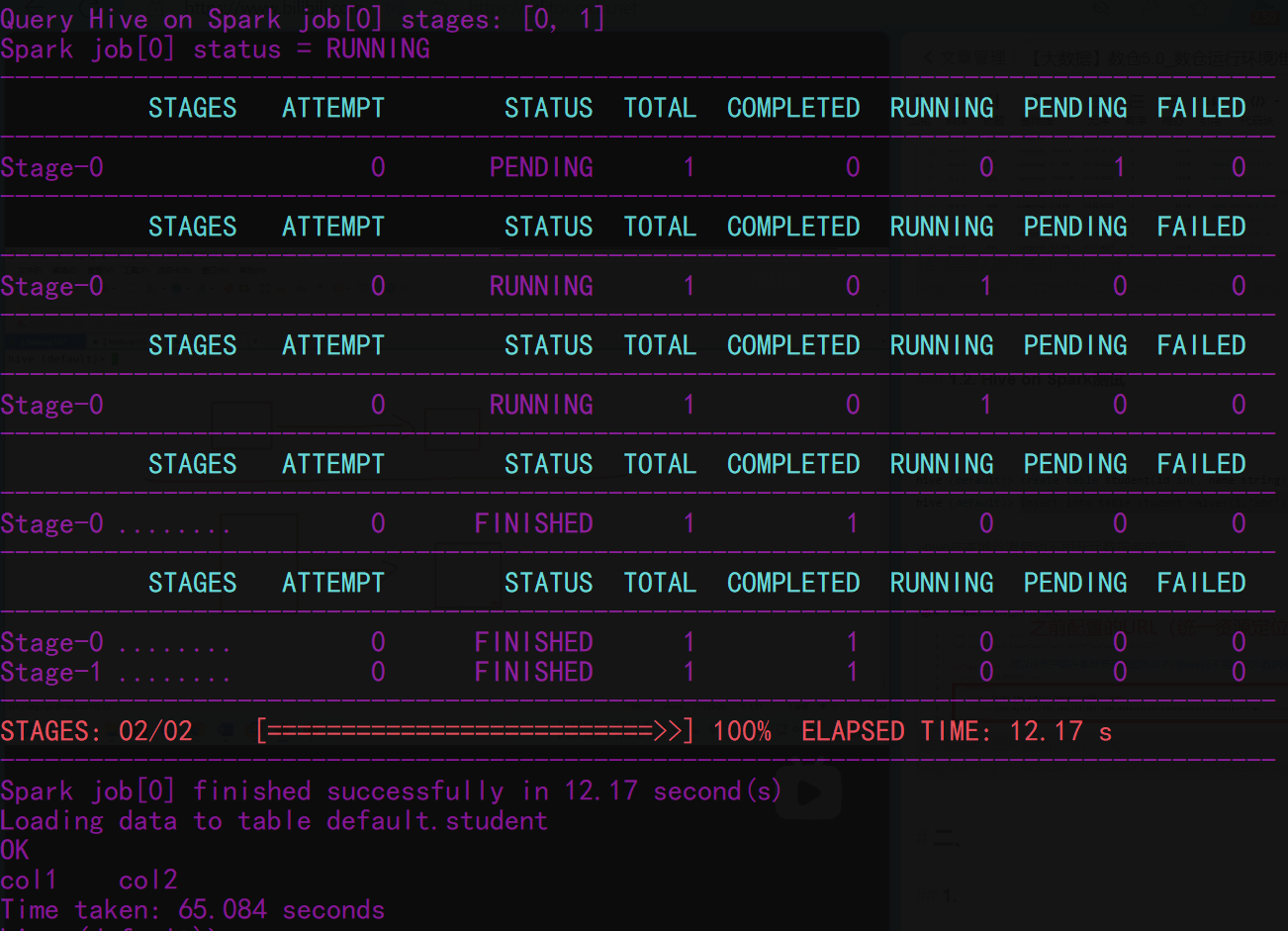

1.2. Hive on Spark测试

MR一个sql 对应一个job ,spark则是一个会话(多个SQL)对应一个job

hive

hive (default)> create table student(id int, name string);

hive (default)> insert into table student values(1,'abc');

hive (default)> insert into table student values(2,'abc');

# spark只要客户端会话(对应一个Job)在就不会被停止任务运行

quit;

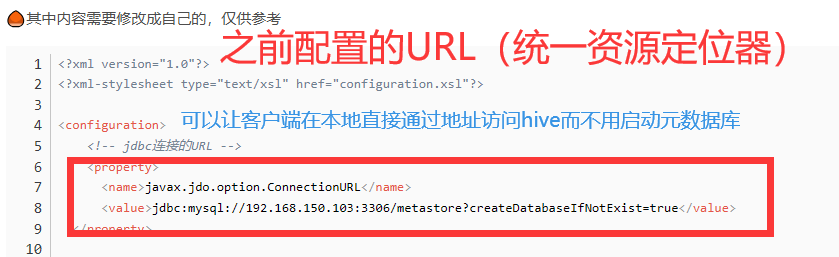

hive在本地丝滑启动不用开元数据库的原因:

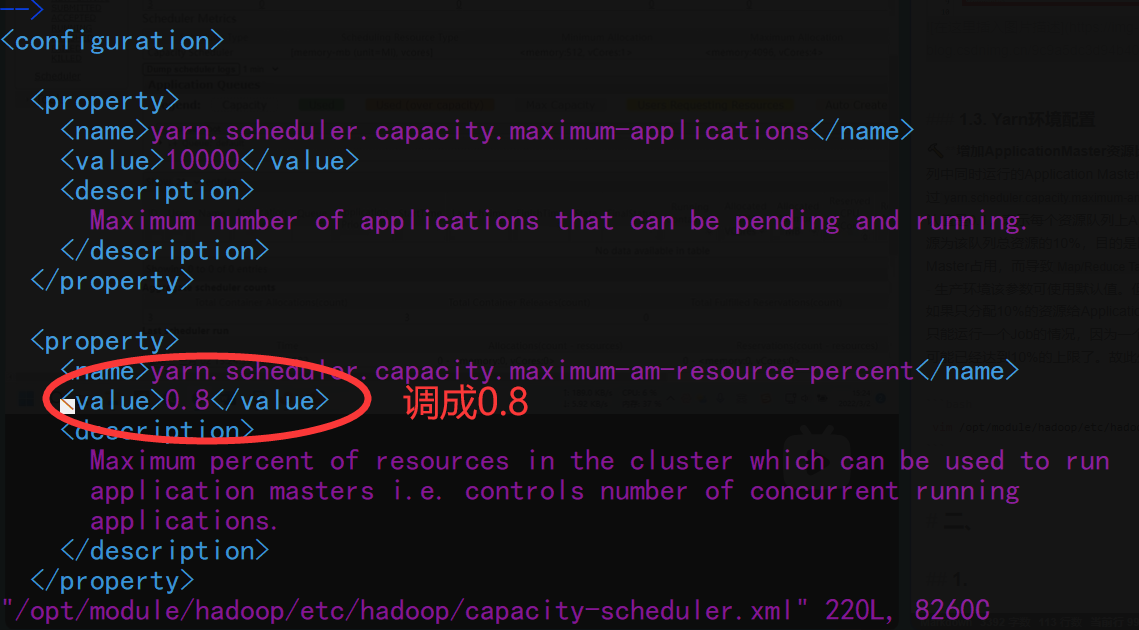

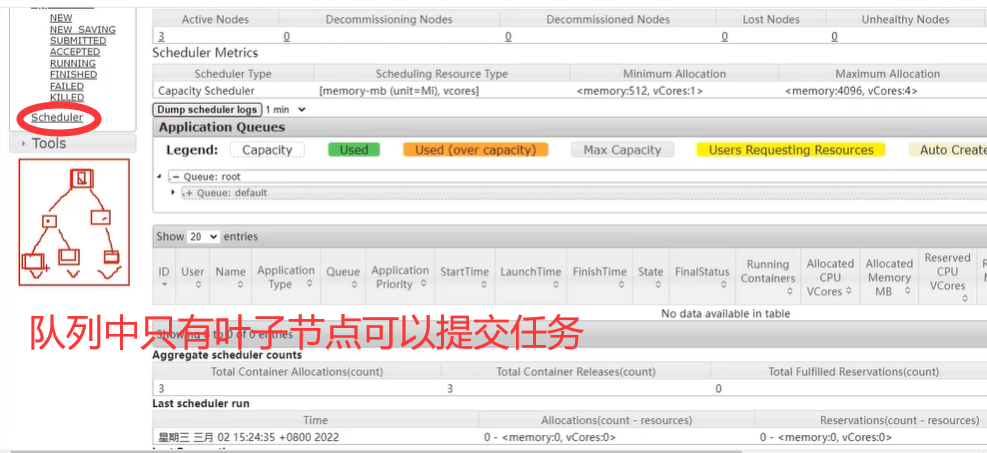

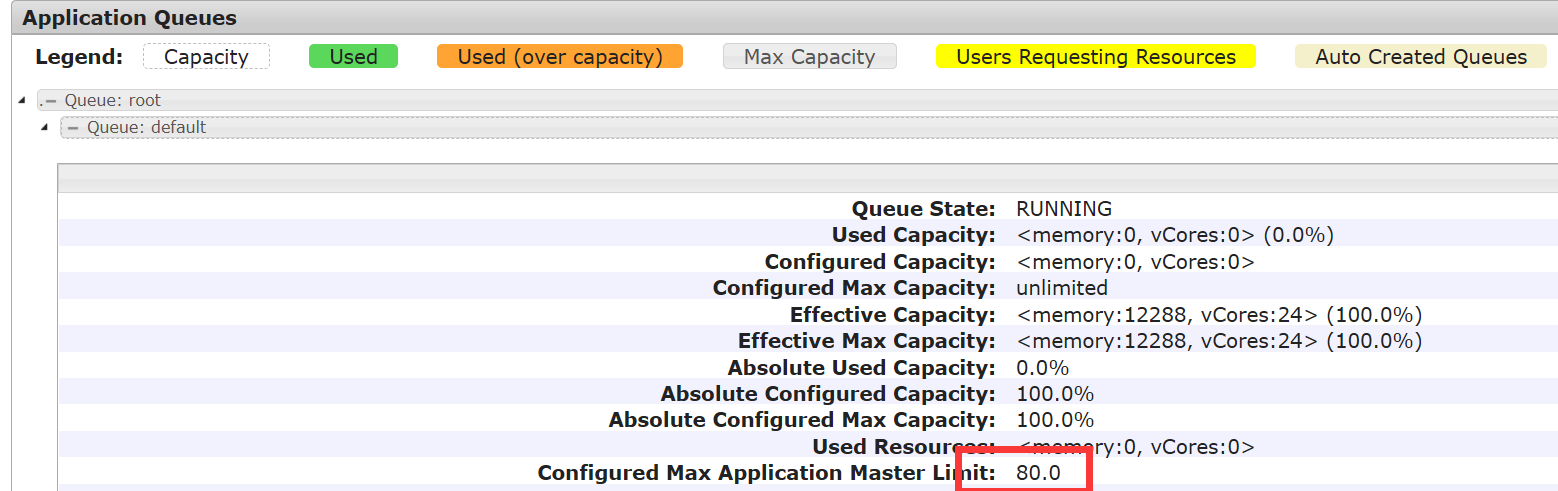

1.3. Yarn环境配置

🔨增加ApplicationMaster资源比例:容量调度器对每个资源队列中同时运行的Application Master占用的资源进行了限制,该限制通过yarn.scheduler.capacity.maximum-am-resource-percent参数实现,其默认值是0.1,表示每个资源队列上Application Master最多可使用的资源为该队列总资源的10%,目的是防止大部分资源都被Application Master占用,而导致Map/Reduce Task无法执行

- 生产环境该参数可使用默认值。但学习环境,集群资源总数很少,如果只分配10%的资源给Application Master,则可能出现,同一时刻只能运行一个Job的情况,因为一个Application Master使用的资源就可能已经达到10%的上限了。故此处可将该值适当调大

cd /opt/module/hadoop/etc/hadoop/;vim capacity-scheduler.xml

# 分发

xsync capacity-scheduler.xml

# 重新启动yarn集群

myhadoop.sh stop

myhadoop.sh start

2. 数据仓库开发环境

数仓开发工具可选用DBeaver或者DataGrip(这里我们选择DG)。两者都需要用到JDBC协议连接到Hive,故需要启动HiveServer2

# 后台启动hiveserver2

cd /opt/module/hive;nohup bin/hive --service metastore &

nohup bin/hiveserver2 1>/dev/null 2>/dev/null &

# 打开DG

-- 测试一下

show databases;

show tables;

create database gmall;

3. 模拟数据准备

通常企业在开始搭建数仓时,业务系统中会存在历史数据,一般是业务数据库存在历史数据,而用户行为日志无历史数据。假定数仓上线的日期为2020-06-14,为模拟真实场景,需准备以下数据。(在执行以下操作之前,先将HDFS上/origin_data路径下之前的数据删除)

3.1. 用户行为日志

🔨用户行为日志,一般是没有历史数据的,故日志只需要准备2020-06-14一天的数据。具体操作如下:

# kill掉hiveserver

# 启动日志采集通道,包括Flume、Kafak等

cluster.sh start

# 修改两个日志服务器(hadoop102、hadoop103)中的/opt/module/applog/application.yml配置文件,将mock.date参数改为2020-06-14

vim /opt/module/applog/application.yml

# 执行日志生成脚本

lg.sh

# 观察HDFS是否出现相应文件

🤯HDFS发现没有出现相应文件,jpsall发现Maxwell又没有启动起来

# 查看Maxwell日志

tail -50 /opt/module/maxwell/logs/MaxwellDaemon.out

# 发现报错

Maxwell couldn't find the requested binlog

# maxwell成功启动

# 执行lg.sh后仍然没有数据

ssh hadoop104

cd /opt/module/flume-1.9.0/job;ll

# 发现没有kafka_to_hdfs_log.conf配置文件😅什么时候删掉了都不知道

vim kafka_to_hdfs_log.conf

======================================添加如下内容===================================

#定义组件

a1.sources=r1

a1.channels=c1

a1.sinks=k1

#配置source1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092,hadoop104:9092

a1.sources.r1.kafka.topics=topic_log

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.ygy.gmall.flume.interceptor.TimestampInterceptor$Builder

#配置channel

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume-1.9.0/checkpoint/behavior1

a1.channels.c1.dataDirs = /opt/module/flume-1.9.0/data/behavior1

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1000000

a1.channels.c1.keep-alive = 6

#配置sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /origin_data/gmall/log/topic_log/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = log

a1.sinks.k1.hdfs.round = false

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

#控制输出文件类型

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = gzip

#组装

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

😅重启一下就ok了

cluster.sh stop

cluster.sh start

lg.sh

3.2. 业务数据

业务数据一般存在历史数据,此处需准备2020-06-10至2020-06-14共五天的数据

3.2.1. 数据导入MySQL

🔨生成模拟数据

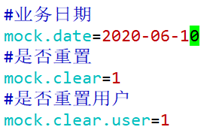

-

修改hadoop102节点上的

cd /opt/module/db_log/;vim application.properties文件,将mock.date、mock.clear、mock.clear.user三个参数调整为如图所示的值

-

执行模拟生成业务数据的命令,生成第一天2020-06-10的历史数据

java -jar gmall2020-mock-db-2021-11-14.jar -

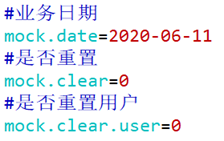

将

mock.date、mock.clear,mock.clear.user三个参数调整为如图所示的值

-

执行模拟生成业务数据的命令,生成第二天2020-06-11的历史数据

java -jar gmall2020-mock-db-2021-11-14.jar -

之后只修改

mock.date参数,依次改为2020-06-12,2020-06-13,2020-06-14,并分别生成对应日期的数据java -jar gmall2020-mock-db-2021-11-14.jar

3.2.2. 数据同步到HDFS

🔨全量表同步

# 执行全量表同步脚本

mysql_to_hdfs_full.sh all 2020-06-14

# 观察HDFS上是否出现全量表数据

🔨增量表首日全量同步

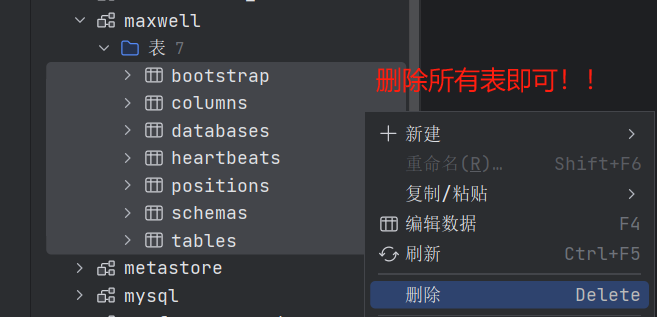

- 清除Maxwell断点记录

由于Maxwell支持断点续传,而上述重新生成业务数据的过程,会产生大量的binlog操作日志,这些日志我们并不需要。故此处需清除Maxwell的断点记录,另其从binlog最新的位置开始采集。

# 关闭Maxwell。

mxw.sh stop

# 清空Maxwell数据库,相当于初始化Maxwell

drop table maxwell.bootstrap;

drop table maxwell.columns;

drop table maxwell.databases;

drop table maxwell.heartbeats;

drop table maxwell.positions;

drop table maxwell.schemas;

drop table maxwell.tables;

# 修改Maxwell配置文件中的mock_date参数

vim /opt/module/maxwell/config.properties

# 增加

mock_date=2020-06-14

# 启动增量表数据通道,包括Maxwell、Kafka、Flume

# 执行增量表首日全量同步脚本

mysql_to_kafka_inc_init.sh all

遇上报错看hive和yarn(103:8088上看history)日志

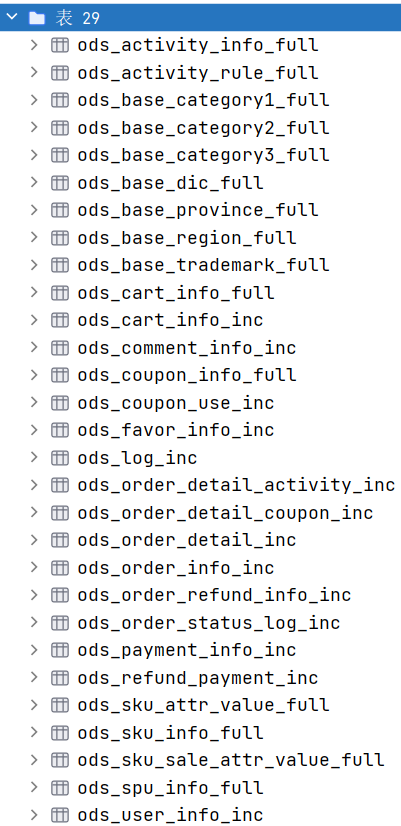

二、数仓开发之ODS层(原始数据层)

🔨ODS层的设计要点如下:

- ODS层的表结构设计依托于从业务系统同步过来(load)的数据结构(dataX是tsv,maxwell是json)

- ODS层要保存全部历史数据,故其压缩格式应选择压缩比较高的,此处选择gzip

- ODS层表名的命名规范为:

ods_表名_单分区增量全量标识(inc/full)

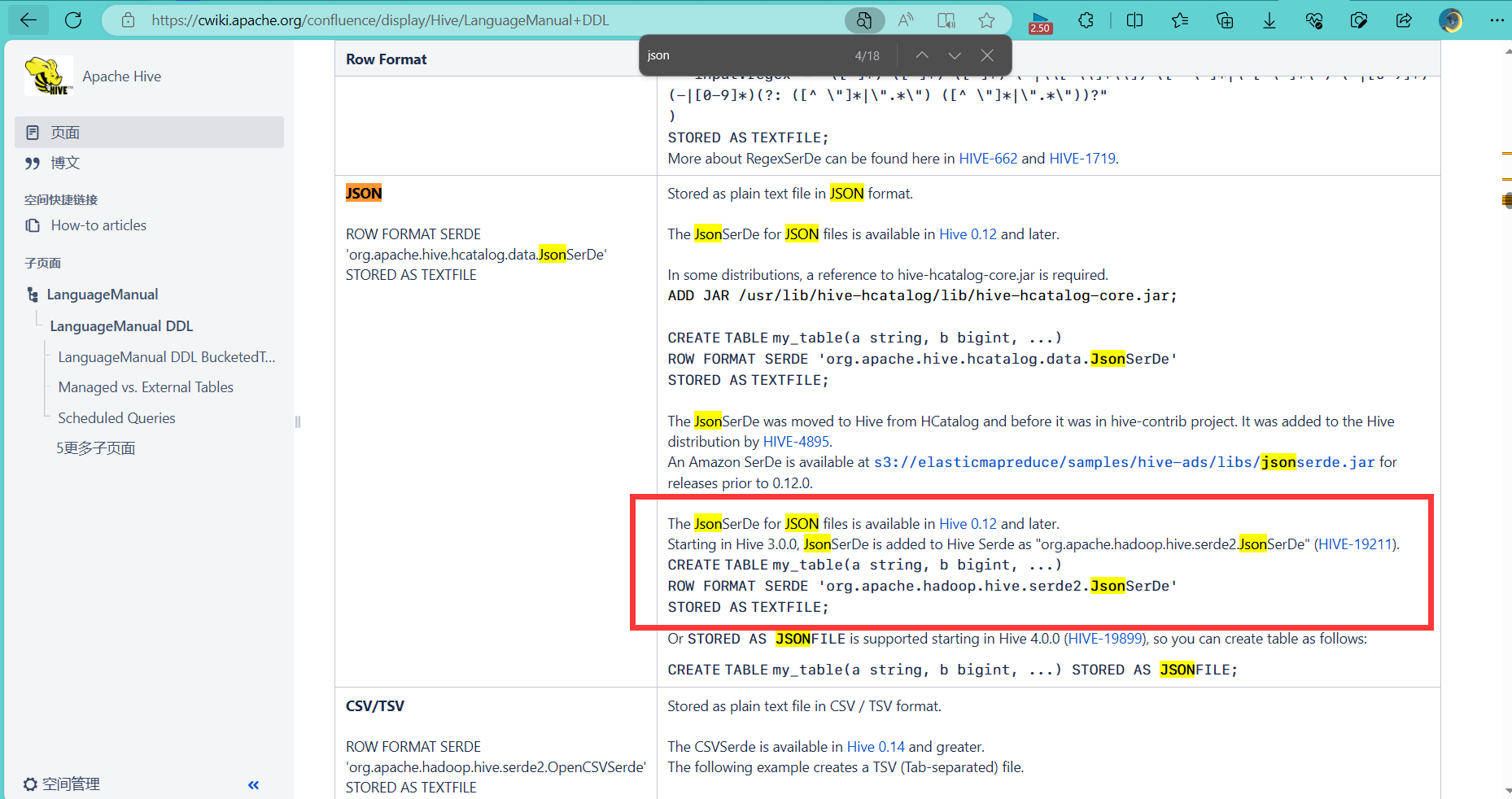

1. 日志表

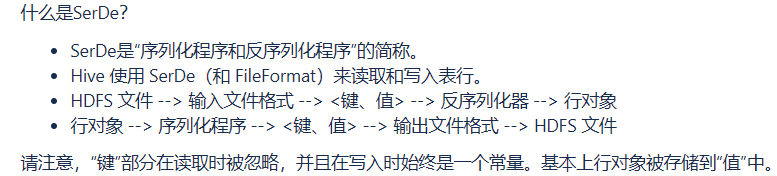

👉点击前往官网查看json建表语句

CREATE TABLE my_table(a string, b bigint, ...) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe' STORED AS TEXTFILE;

🔨页面日志、启动日志建表

-- 建表数据,嵌套用复杂数据类型

-- 未声明默认使用TextFile,可以直接将Gzip或者Bzip2压缩格式的文本文件直接导入

-- 若采用了其他方式压缩的数据包则要参考官网使用不同的建表语法

DROP TABLE IF EXISTS ods_log_inc;

CREATE EXTERNAL TABLE ods_log_inc

(

`common` STRUCT<ar :STRING,ba :STRING,ch :STRING,is_new :STRING,md :STRING,mid :STRING,os :STRING,uid :STRING,vc

:STRING> COMMENT '公共信息',

`page` STRUCT<during_time :STRING,item :STRING,item_type :STRING,last_page_id :STRING,page_id

:STRING,source_type :STRING> COMMENT '页面信息',

`actions` ARRAY<STRUCT<action_id:STRING,item:STRING,item_type:STRING,ts:BIGINT>> COMMENT '动作信息',

`displays` ARRAY<STRUCT<display_type :STRING,item :STRING,item_type :STRING,`order` :STRING,pos_id

:STRING>> COMMENT '曝光信息',

`start` STRUCT<entry :STRING,loading_time :BIGINT,open_ad_id :BIGINT,open_ad_ms :BIGINT,open_ad_skip_ms

:BIGINT> COMMENT '启动信息',

`err` STRUCT<error_code:BIGINT,msg:STRING> COMMENT '错误信息',

`ts` BIGINT COMMENT '时间戳'

) COMMENT '活动信息表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_log_inc/';

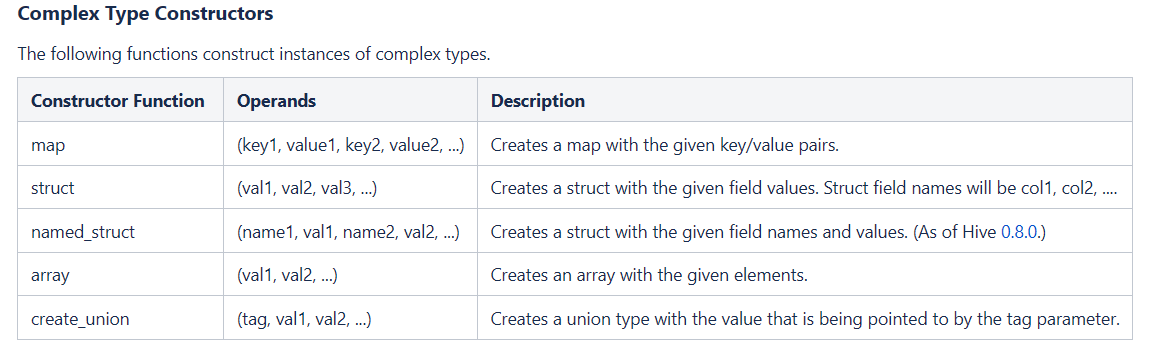

复杂数据类型:点击前往👉了解更多关于hive函数UDF

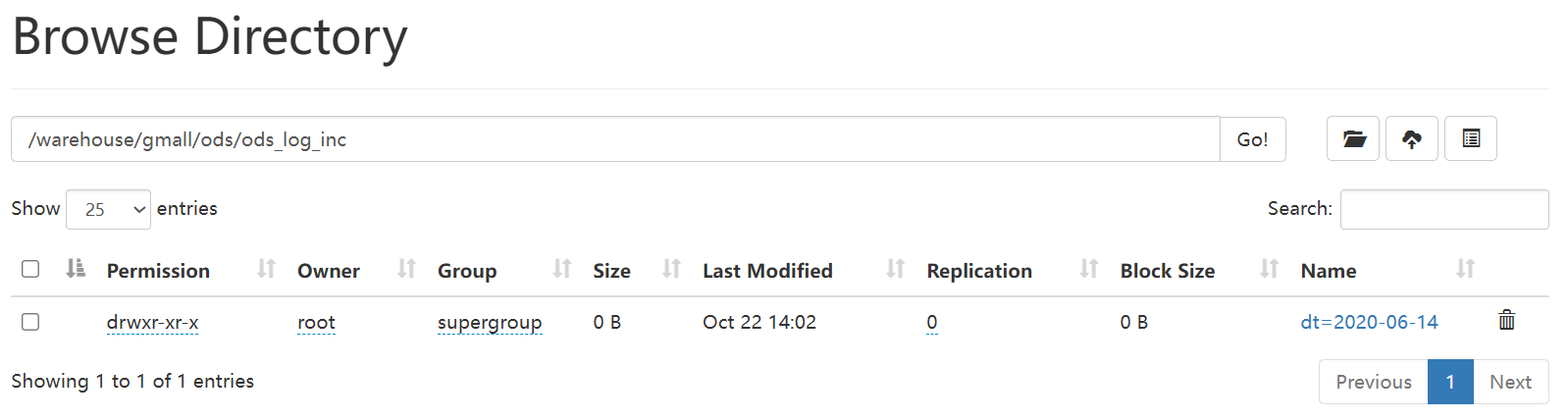

🔨数据装载

# 装载逻辑相同,只是每天日期发生了更改,考虑使用脚本省心省力

load data inpath '/origin_data/gmall/log/topic_log/2020-06-14' into table ods_log_inc partition(dt='2020-06-14');

带LOCAL表示从你的linux服务器上面加载文件到HIVE表内容里面,Local本地上面对应的这个文件不会消失.

不带LOCAL标识从你的HDFS上面文件移动到HIVE表内容里面,Hdfs路径下对应的该文件就会消失

🔨每日数据装载脚本:vim ~/bin/hdfs_to_ods_log.sh

#!/bin/bash

# 定义变量方便修改

APP=gmall

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

echo ================== 日志日期为 $do_date ==================

sql="

load data inpath '/origin_data/$APP/log/topic_log/$do_date' into table ${APP}.ods_log_inc partition(dt='$do_date');

"

hive -e "$sql"

date -d:display time described by STRING, not ‘now’

[root@hadoop102 module]# date -d ‘-1 day’

2024年 01月 22日 星期一 19:38:26 CST

%F:完整日期格式,等价于 %Y-%m-%d

[root@hadoop102 module]# date -d ‘-1 day’ +%F

2024-01-22

🌰测试一下:

# 加上权限

chmod 777 hdfs_to_ods_log.sh

# 使用

hdfs_to_ods_log.sh 2020-06-14

2. 业务表

2.1. 活动信息表(全量表)

DROP TABLE IF EXISTS ods_activity_info_full;

CREATE EXTERNAL TABLE ods_activity_info_full

(

`id` STRING COMMENT '活动id',

`activity_name` STRING COMMENT '活动名称',

`activity_type` STRING COMMENT '活动类型',

`activity_desc` STRING COMMENT '活动描述',

`start_time` STRING COMMENT '开始时间',

`end_time` STRING COMMENT '结束时间',

`create_time` STRING COMMENT '创建时间'

) COMMENT '活动信息表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_activity_info_full/';

2.2. 活动规则表(全量表)

DROP TABLE IF EXISTS ods_activity_rule_full;

CREATE EXTERNAL TABLE ods_activity_rule_full

(

`id` STRING COMMENT '编号',

`activity_id` STRING COMMENT '类型',

`activity_type` STRING COMMENT '活动类型',

`condition_amount` DECIMAL(16, 2) COMMENT '满减金额',

`condition_num` BIGINT COMMENT '满减件数',

`benefit_amount` DECIMAL(16, 2) COMMENT '优惠金额',

`benefit_discount` DECIMAL(16, 2) COMMENT '优惠折扣',

`benefit_level` STRING COMMENT '优惠级别'

) COMMENT '活动规则表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_activity_rule_full/';

2.3. 一级品类表(全量表)

DROP TABLE IF EXISTS ods_base_category1_full;

CREATE EXTERNAL TABLE ods_base_category1_full

(

`id` STRING COMMENT '编号',

`name` STRING COMMENT '分类名称'

) COMMENT '一级品类表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_category1_full/';

2.4. 二级品类表(全量表)

DROP TABLE IF EXISTS ods_base_category2_full;

CREATE EXTERNAL TABLE ods_base_category2_full

(

`id` STRING COMMENT '编号',

`name` STRING COMMENT '二级分类名称',

`category1_id` STRING COMMENT '一级分类编号'

) COMMENT '二级品类表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_category2_full/';

2.5. 三级品类表(全量表)

DROP TABLE IF EXISTS ods_base_category3_full;

CREATE EXTERNAL TABLE ods_base_category3_full

(

`id` STRING COMMENT '编号',

`name` STRING COMMENT '三级分类名称',

`category2_id` STRING COMMENT '二级分类编号'

) COMMENT '三级品类表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_category3_full/';

2.6. 编码字典表(全量表)

DROP TABLE IF EXISTS ods_base_dic_full;

CREATE EXTERNAL TABLE ods_base_dic_full

(

`dic_code` STRING COMMENT '编号',

`dic_name` STRING COMMENT '编码名称',

`parent_code` STRING COMMENT '父编号',

`create_time` STRING COMMENT '创建日期',

`operate_time` STRING COMMENT '修改日期'

) COMMENT '编码字典表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_dic_full/';

2.7. 省份表(全量表)

DROP TABLE IF EXISTS ods_base_province_full;

CREATE EXTERNAL TABLE ods_base_province_full

(

`id` STRING COMMENT '编号',

`name` STRING COMMENT '省份名称',

`region_id` STRING COMMENT '地区ID',

`area_code` STRING COMMENT '地区编码',

`iso_code` STRING COMMENT '旧版ISO-3166-2编码,供可视化使用',

`iso_3166_2` STRING COMMENT '新版IOS-3166-2编码,供可视化使用'

) COMMENT '省份表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_province_full/';

2.8. 地区表(全量表)

DROP TABLE IF EXISTS ods_base_region_full;

CREATE EXTERNAL TABLE ods_base_region_full

(

`id` STRING COMMENT '编号',

`region_name` STRING COMMENT '地区名称'

) COMMENT '地区表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_region_full/';

2.9. 品牌表(全量表)

DROP TABLE IF EXISTS ods_base_trademark_full;

CREATE EXTERNAL TABLE ods_base_trademark_full

(

`id` STRING COMMENT '编号',

`tm_name` STRING COMMENT '品牌名称',

`logo_url` STRING COMMENT '品牌logo的图片路径'

) COMMENT '品牌表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_base_trademark_full/';

2.10. 购物车表(全量表)

DROP TABLE IF EXISTS ods_cart_info_full;

CREATE EXTERNAL TABLE ods_cart_info_full

(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户id',

`sku_id` STRING COMMENT 'sku_id',

`cart_price` DECIMAL(16, 2) COMMENT '放入购物车时价格',

`sku_num` BIGINT COMMENT '数量',

`img_url` BIGINT COMMENT '商品图片地址',

`sku_name` STRING COMMENT 'sku名称 (冗余)',

`is_checked` STRING COMMENT '是否被选中',

`create_time` STRING COMMENT '创建时间',

`operate_time` STRING COMMENT '修改时间',

`is_ordered` STRING COMMENT '是否已经下单',

`order_time` STRING COMMENT '下单时间',

`source_type` STRING COMMENT '来源类型',

`source_id` STRING COMMENT '来源编号'

) COMMENT '购物车全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_cart_info_full/';

2.11. 优惠券信息表(全量表)

DROP TABLE IF EXISTS ods_coupon_info_full;

CREATE EXTERNAL TABLE ods_coupon_info_full

(

`id` STRING COMMENT '购物券编号',

`coupon_name` STRING COMMENT '购物券名称',

`coupon_type` STRING COMMENT '购物券类型 1 现金券 2 折扣券 3 满减券 4 满件打折券',

`condition_amount` DECIMAL(16, 2) COMMENT '满额数',

`condition_num` BIGINT COMMENT '满件数',

`activity_id` STRING COMMENT '活动编号',

`benefit_amount` DECIMAL(16, 2) COMMENT '减金额',

`benefit_discount` DECIMAL(16, 2) COMMENT '折扣',

`create_time` STRING COMMENT '创建时间',

`range_type` STRING COMMENT '范围类型 1、商品 2、品类 3、品牌',

`limit_num` BIGINT COMMENT '最多领用次数',

`taken_count` BIGINT COMMENT '已领用次数',

`start_time` STRING COMMENT '开始领取时间',

`end_time` STRING COMMENT '结束领取时间',

`operate_time` STRING COMMENT '修改时间',

`expire_time` STRING COMMENT '过期时间'

) COMMENT '优惠券信息表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_coupon_info_full/';

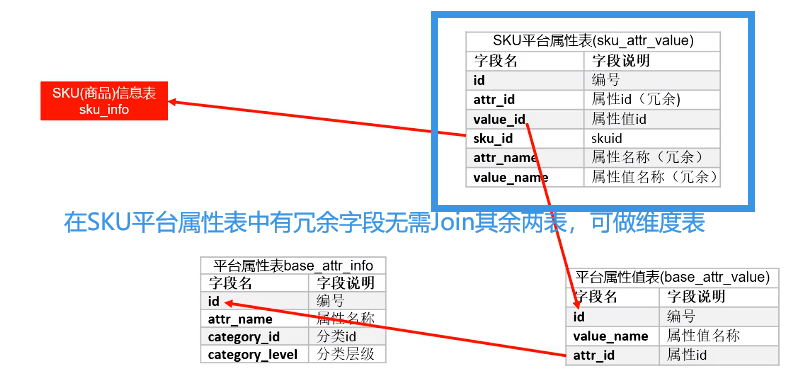

2.12. 商品平台属性表(全量表)

DROP TABLE IF EXISTS ods_sku_attr_value_full;

CREATE EXTERNAL TABLE ods_sku_attr_value_full

(

`id` STRING COMMENT '编号',

`attr_id` STRING COMMENT '平台属性ID',

`value_id` STRING COMMENT '平台属性值ID',

`sku_id` STRING COMMENT '商品ID',

`attr_name` STRING COMMENT '平台属性名称',

`value_name` STRING COMMENT '平台属性值名称'

) COMMENT 'sku平台属性表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_sku_attr_value_full/';

2.13. 商品表(全量表)

DROP TABLE IF EXISTS ods_sku_info_full;

CREATE EXTERNAL TABLE ods_sku_info_full

(

`id` STRING COMMENT 'skuId',

`spu_id` STRING COMMENT 'spuid',

`price` DECIMAL(16, 2) COMMENT '价格',

`sku_name` STRING COMMENT '商品名称',

`sku_desc` STRING COMMENT '商品描述',

`weight` DECIMAL(16, 2) COMMENT '重量',

`tm_id` STRING COMMENT '品牌id',

`category3_id` STRING COMMENT '品类id',

`sku_default_igm` STRING COMMENT '商品图片地址',

`is_sale` STRING COMMENT '是否在售',

`create_time` STRING COMMENT '创建时间'

) COMMENT 'SKU商品表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_sku_info_full/';

2.14. 商品销售属性值表(全量表)

DROP TABLE IF EXISTS ods_sku_sale_attr_value_full;

CREATE EXTERNAL TABLE ods_sku_sale_attr_value_full

(

`id` STRING COMMENT '编号',

`sku_id` STRING COMMENT 'sku_id',

`spu_id` STRING COMMENT 'spu_id',

`sale_attr_value_id` STRING COMMENT '销售属性值id',

`sale_attr_id` STRING COMMENT '销售属性id',

`sale_attr_name` STRING COMMENT '销售属性名称',

`sale_attr_value_name` STRING COMMENT '销售属性值名称'

) COMMENT 'sku销售属性名称'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_sku_sale_attr_value_full/';

2.15. SPU表(全量表)

DROP TABLE IF EXISTS ods_spu_info_full;

CREATE EXTERNAL TABLE ods_spu_info_full

(

`id` STRING COMMENT 'spu_id',

`spu_name` STRING COMMENT 'spu名称',

`description` STRING COMMENT '描述信息',

`category3_id` STRING COMMENT '品类id',

`tm_id` STRING COMMENT '品牌id'

) COMMENT 'SPU商品表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/gmall/ods/ods_spu_info_full/';

2.16. 购物车表(增量表)

DROP TABLE IF EXISTS ods_cart_info_inc;

CREATE EXTERNAL TABLE ods_cart_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,user_id :STRING,sku_id :STRING,cart_price :DECIMAL(16, 2),sku_num :BIGINT,img_url :STRING,sku_name

:STRING,is_checked :STRING,create_time :STRING,operate_time :STRING,is_ordered :STRING,order_time

:STRING,source_type :STRING,source_id :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '购物车增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_cart_info_inc/';

2.17. 评论表(增量表)

DROP TABLE IF EXISTS ods_comment_info_inc;

CREATE EXTERNAL TABLE ods_comment_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,user_id :STRING,nick_name :STRING,head_img :STRING,sku_id :STRING,spu_id :STRING,order_id

:STRING,appraise :STRING,comment_txt :STRING,create_time :STRING,operate_time :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '评价表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_comment_info_inc/';

2.18. 优惠券领用表(增量表)

DROP TABLE IF EXISTS ods_coupon_use_inc;

CREATE EXTERNAL TABLE ods_coupon_use_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,coupon_id :STRING,user_id :STRING,order_id :STRING,coupon_status :STRING,get_time :STRING,using_time

:STRING,used_time :STRING,expire_time :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '优惠券领用表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_coupon_use_inc/';

2.19. 收藏表(增量表)

DROP TABLE IF EXISTS ods_favor_info_inc;

CREATE EXTERNAL TABLE ods_favor_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,user_id :STRING,sku_id :STRING,spu_id :STRING,is_cancel :STRING,create_time :STRING,cancel_time

:STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '收藏表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_favor_info_inc/';

2.20. 订单明细表(增量表)

DROP TABLE IF EXISTS ods_order_detail_inc;

CREATE EXTERNAL TABLE ods_order_detail_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,order_id :STRING,sku_id :STRING,sku_name :STRING,img_url :STRING,order_price

:DECIMAL(16, 2),sku_num :BIGINT,create_time :STRING,source_type :STRING,source_id :STRING,split_total_amount

:DECIMAL(16, 2),split_activity_amount :DECIMAL(16, 2),split_coupon_amount

:DECIMAL(16, 2)> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '订单明细表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_order_detail_inc/';

2.21. 订单明细活动关联表(增量表)

DROP TABLE IF EXISTS ods_order_detail_activity_inc;

CREATE EXTERNAL TABLE ods_order_detail_activity_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,order_id :STRING,order_detail_id :STRING,activity_id :STRING,activity_rule_id :STRING,sku_id

:STRING,create_time :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '订单明细活动关联表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_order_detail_activity_inc/';

2.22 订单明细优惠券关联表(增量表)

DROP TABLE IF EXISTS ods_order_detail_coupon_inc;

CREATE EXTERNAL TABLE ods_order_detail_coupon_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,order_id :STRING,order_detail_id :STRING,coupon_id :STRING,coupon_use_id :STRING,sku_id

:STRING,create_time :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '订单明细优惠券关联表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_order_detail_coupon_inc/';

2.23. 订单表(增量表)

DROP TABLE IF EXISTS ods_order_info_inc;

CREATE EXTERNAL TABLE ods_order_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,consignee :STRING,consignee_tel :STRING,total_amount :DECIMAL(16, 2),order_status :STRING,user_id

:STRING,payment_way :STRING,delivery_address :STRING,order_comment :STRING,out_trade_no :STRING,trade_body

:STRING,create_time :STRING,operate_time :STRING,expire_time :STRING,process_status :STRING,tracking_no

:STRING,parent_order_id :STRING,img_url :STRING,province_id :STRING,activity_reduce_amount

:DECIMAL(16, 2),coupon_reduce_amount :DECIMAL(16, 2),original_total_amount :DECIMAL(16, 2),freight_fee

:DECIMAL(16, 2),freight_fee_reduce :DECIMAL(16, 2),refundable_time :DECIMAL(16, 2)> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '订单表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_order_info_inc/';

2.24. 退单表(增量表)

DROP TABLE IF EXISTS ods_order_refund_info_inc;

CREATE EXTERNAL TABLE ods_order_refund_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,user_id :STRING,order_id :STRING,sku_id :STRING,refund_type :STRING,refund_num :BIGINT,refund_amount

:DECIMAL(16, 2),refund_reason_type :STRING,refund_reason_txt :STRING,refund_status :STRING,create_time

:STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '退单表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_order_refund_info_inc/';

2.25. 订单状态流水表(增量表)

DROP TABLE IF EXISTS ods_order_status_log_inc;

CREATE EXTERNAL TABLE ods_order_status_log_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,order_id :STRING,order_status :STRING,operate_time :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '退单表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_order_status_log_inc/';

2.26. 支付表(增量表)

DROP TABLE IF EXISTS ods_payment_info_inc;

CREATE EXTERNAL TABLE ods_payment_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,out_trade_no :STRING,order_id :STRING,user_id :STRING,payment_type :STRING,trade_no

:STRING,total_amount :DECIMAL(16, 2),subject :STRING,payment_status :STRING,create_time :STRING,callback_time

:STRING,callback_content :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '支付表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_payment_info_inc/';

2.27. 退款表(增量表)

DROP TABLE IF EXISTS ods_refund_payment_inc;

CREATE EXTERNAL TABLE ods_refund_payment_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,out_trade_no :STRING,order_id :STRING,sku_id :STRING,payment_type :STRING,trade_no :STRING,total_amount

:DECIMAL(16, 2),subject :STRING,refund_status :STRING,create_time :STRING,callback_time :STRING,callback_content

:STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '退款表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_refund_payment_inc/';

2.28. 用户表(增量表)

DROP TABLE IF EXISTS ods_user_info_inc;

CREATE EXTERNAL TABLE ods_user_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` BIGINT COMMENT '变动时间',

`data` STRUCT<id :STRING,login_name :STRING,nick_name :STRING,passwd :STRING,name :STRING,phone_num :STRING,email

:STRING,head_img :STRING,user_level :STRING,birthday :STRING,gender :STRING,create_time :STRING,operate_time

:STRING,status :STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '用户表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/gmall/ods/ods_user_info_inc/';

2.29. 数据装载脚本(日期可选)

🔨在hadoop102vim ~/bin/hdfs_to_ods_db.sh

#!/bin/bash

APP=gmall

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d '-1 day' +%F`

fi

# 接受若干个参数,每个参数就是一张ods表名

load_data(){

sql=""

for i in $*; do

#判断路径是否存在

hadoop fs -test -e /origin_data/$APP/db/${i:4}/$do_date

#路径存在方可装载数据

if [[ $? = 0 ]]; then

sql=$sql"load data inpath '/origin_data/$APP/db/${i:4}/$do_date' OVERWRITE into table ${APP}.$i partition(dt='$do_date');"

fi

done

hive -e "$sql"

}

case $1 in

"ods_activity_info_full")

load_data "ods_activity_info_full"

;;

"ods_activity_rule_full")

load_data "ods_activity_rule_full"

;;

"ods_base_category1_full")

load_data "ods_base_category1_full"

;;

"ods_base_category2_full")

load_data "ods_base_category2_full"

;;

"ods_base_category3_full")

load_data "ods_base_category3_full"

;;

"ods_base_dic_full")

load_data "ods_base_dic_full"

;;

"ods_base_province_full")

load_data "ods_base_province_full"

;;

"ods_base_region_full")

load_data "ods_base_region_full"

;;

"ods_base_trademark_full")

load_data "ods_base_trademark_full"

;;

"ods_cart_info_full")

load_data "ods_cart_info_full"

;;

"ods_coupon_info_full")

load_data "ods_coupon_info_full"

;;

"ods_sku_attr_value_full")

load_data "ods_sku_attr_value_full"

;;

"ods_sku_info_full")

load_data "ods_sku_info_full"

;;

"ods_sku_sale_attr_value_full")

load_data "ods_sku_sale_attr_value_full"

;;

"ods_spu_info_full")

load_data "ods_spu_info_full"

;;

"ods_cart_info_inc")

load_data "ods_cart_info_inc"

;;

"ods_comment_info_inc")

load_data "ods_comment_info_inc"

;;

"ods_coupon_use_inc")

load_data "ods_coupon_use_inc"

;;

"ods_favor_info_inc")

load_data "ods_favor_info_inc"

;;

"ods_order_detail_inc")

load_data "ods_order_detail_inc"

;;

"ods_order_detail_activity_inc")

load_data "ods_order_detail_activity_inc"

;;

"ods_order_detail_coupon_inc")

load_data "ods_order_detail_coupon_inc"

;;

"ods_order_info_inc")

load_data "ods_order_info_inc"

;;

"ods_order_refund_info_inc")

load_data "ods_order_refund_info_inc"

;;

"ods_order_status_log_inc")

load_data "ods_order_status_log_inc"

;;

"ods_payment_info_inc")

load_data "ods_payment_info_inc"

;;

"ods_refund_payment_inc")

load_data "ods_refund_payment_inc"

;;

"ods_user_info_inc")

load_data "ods_user_info_inc"

;;

"all")

load_data "ods_activity_info_full" "ods_activity_rule_full" "ods_base_category1_full" "ods_base_category2_full" "ods_base_category3_full" "ods_base_dic_full" "ods_base_province_full" "ods_base_region_full" "ods_base_trademark_full" "ods_cart_info_full" "ods_coupon_info_full" "ods_sku_attr_value_full" "ods_sku_info_full" "ods_sku_sale_attr_value_full" "ods_spu_info_full" "ods_cart_info_inc" "ods_comment_info_inc" "ods_coupon_use_inc" "ods_favor_info_inc" "ods_order_detail_inc" "ods_order_detail_activity_inc" "ods_order_detail_coupon_inc" "ods_order_info_inc" "ods_order_refund_info_inc" "ods_order_status_log_inc" "ods_payment_info_inc" "ods_refund_payment_inc" "ods_user_info_inc"

;;

esac

🌰举个例子:

# 先添加执行权限

chmod 777 hdfs_to_ods_db.sh

# 试一下

hdfs_to_ods_db.sh all 2020-06-14

- 如果不小心删除了其中某一个表,可以使用命令

msck repair table 表名;来恢复分区表数据

MSCK REPAIR TABLE是 SQL 中的一个命令,用于修复分布式数据库中的表和索引。这个命令在 Apache Hive 中特别有用,因为 Hive 是一个基于 Hadoop 的数据仓库工具,它使用元数据来跟踪和管理存储在分布式文件系统(如 Hadoop Distributed File System,HDFS)上的数据表和索引。当表或索引的元数据与实际存储的数据不一致时,可以使用 MSCK REPAIR TABLE 命令来修复这种不一致性。例如,如果某个表的元数据显示它有 100 行数据,但实际上只有 90 行,或者某些行已被删除或修改,这个命令可以帮助更新元数据以反映实际情况。

注意:在运行 MSCK REPAIR TABLE 命令之前,确保数据库已经停止或处于只读模式,以避免任何可能的数据损坏或不一致。此外,始终在执行此类操作之前备份重要数据,以防万一发生意外情况。

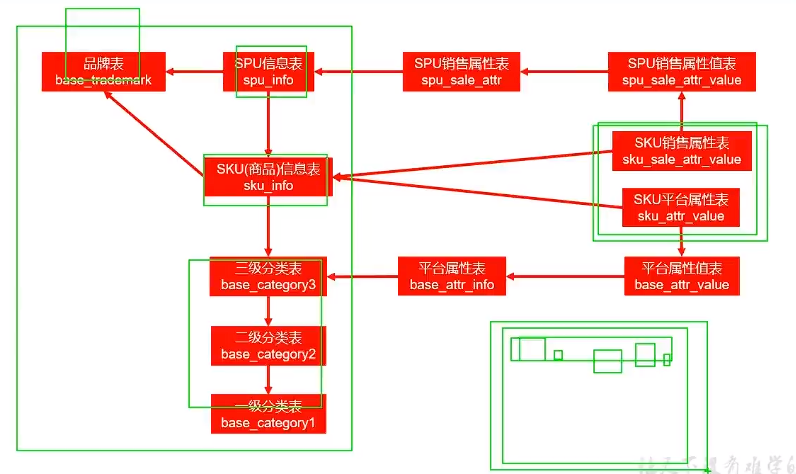

三、数仓开发之DIM层(公共维度层)

DIM层设计要点:

(1)DIM层的设计依据是维度建模理论,该层存储维度模型的维度表。

(2)DIM层的数据存储格式为orc列式存储+snappy压缩。(保证读取速度)

(3)DIM层表名的命名规范为dim_表名_全量表或者拉链表标识(full/zip)

(根据业务总线矩阵设计维度表)

1. 商品维度表

DROP TABLE IF EXISTS dim_sku_full;

CREATE EXTERNAL TABLE dim_sku_full

(

`id` STRING COMMENT 'sku_id',

`price` DECIMAL(16, 2) COMMENT '商品价格',

`sku_name` STRING COMMENT '商品名称',

`sku_desc` STRING COMMENT '商品描述',

`weight` DECIMAL(16, 2) COMMENT '重量',

`is_sale` BOOLEAN COMMENT '是否在售',

`spu_id` STRING COMMENT 'spu编号',

`spu_name` STRING COMMENT 'spu名称',

`category3_id` STRING COMMENT '三级分类id',

`category3_name` STRING COMMENT '三级分类名称',

`category2_id` STRING COMMENT '二级分类id',

`category2_name` STRING COMMENT '二级分类名称',

`category1_id` STRING COMMENT '一级分类id',

`category1_name` STRING COMMENT '一级分类名称',

`tm_id` STRING COMMENT '品牌id',

`tm_name` STRING COMMENT '品牌名称',

`sku_attr_values` ARRAY<STRUCT<attr_id :STRING,value_id :STRING,attr_name :STRING,value_name:STRING>> COMMENT '平台属性',

`sku_sale_attr_values` ARRAY<STRUCT<sale_attr_id :STRING,sale_attr_value_id :STRING,sale_attr_name :STRING,sale_attr_value_name:STRING>> COMMENT '销售属性',

`create_time` STRING COMMENT '创建时间'

) COMMENT '商品维度表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dim/dim_sku_full/'

TBLPROPERTIES ('orc.compress' = 'snappy');

-- 数据装载,CTE(Common Table Expressions)

with

sku as

(

select

id,

price,

sku_name,

sku_desc,

weight,

is_sale,

spu_id,

category3_id,

tm_id,

create_time

from ods_sku_info_full

where dt='2020-06-14'

),

spu as

(

select

id,

spu_name

from ods_spu_info_full

where dt='2020-06-14'

),

c3 as

(

select

id,

name,

category2_id

from ods_base_category3_full

where dt='2020-06-14'

),

c2 as

(

select

id,

name,

category1_id

from ods_base_category2_full

where dt='2020-06-14'

),

c1 as

(

select

id,

name

from ods_base_category1_full

where dt='2020-06-14'

),

tm as

(

select

id,

tm_name

from ods_base_trademark_full

where dt='2020-06-14'

),

attr as

(

select

sku_id,

collect_set(named_struct('attr_id',attr_id,'value_id',value_id,'attr_name',attr_name,'value_name',value_name)) attrs

from ods_sku_attr_value_full

where dt='2020-06-14'

group by sku_id

),

sale_attr as

(

select

sku_id,

collect_set(named_struct('sale_attr_id',sale_attr_id,'sale_attr_value_id',sale_attr_value_id,'sale_attr_name',sale_attr_name,'sale_attr_value_name',sale_attr_value_name)) sale_attrs

from ods_sku_sale_attr_value_full

where dt='2020-06-14'

group by sku_id

)

insert overwrite table dim_sku_full partition(dt='2020-06-14')

select

sku.id,

sku.price,

sku.sku_name,

sku.sku_desc,

sku.weight,

sku.is_sale,

sku.spu_id,

spu.spu_name,

sku.category3_id,

c3.name,

c3.category2_id,

c2.name,

c2.category1_id,

c1.name,

sku.tm_id,

tm.tm_name,

attr.attrs,

sale_attr.sale_attrs,

sku.create_time

from sku

left join spu on sku.spu_id=spu.id

left join c3 on sku.category3_id=c3.id

left join c2 on c3.category2_id=c2.id

left join c1 on c2.category1_id=c1.id

left join tm on sku.tm_id=tm.id

left join attr on sku.id=attr.sku_id

left join sale_attr on sku.id=sale_attr.sku_id;

CTE(Common Table Expressions)是SQL语言中的一个功能,它允许在查询中定义一个临时的结果集,这个结果集可以在主查询中被引用。CTE(Common Table Expressions)通常用于将复杂的查询分解成更小的、更容易管理的部分,从而提高查询的可读性和可维护性。CTE的使用是通过WITH子句来定义的,它有一个名称和一条SELECT语句,该语句定义了临时的结果集。一旦定义了CTE,就可以在主查询中通过CTE的名称来引用它。

🌰以下是一个简单的示例,演示了如何使用CTE:

WITH temp_cte AS (

SELECT department, AVG(salary) AS avg_salary

FROM employees

GROUP BY department

)

SELECT department, avg_salary

FROM temp_cte

WHERE avg_salary > 5000;

- 在上面的示例中,我们首先在WITH子句中定义了一个名为temp_cte的CTE,它计算每个部门的平均工资。然后,在主查询中,我们通过temp_cte的名称引用了该CTE,并筛选出平均工资大于5000的部门。需要注意的是,CTE只在当前会话中有效,一旦会话结束,CTE就会被自动删除。此外,一个查询中可以定义多个CTE,并且每个CTE都可以有一个唯一的名称。

2. 优惠券维度表

DROP TABLE IF EXISTS dim_coupon_full;

CREATE EXTERNAL TABLE dim_coupon_full

(

`id` STRING COMMENT '购物券编号',

`coupon_name` STRING COMMENT '购物券名称',

`coupon_type_code` STRING COMMENT '购物券类型编码',

`coupon_type_name` STRING COMMENT '购物券类型名称',

`condition_amount` DECIMAL(16, 2) COMMENT '满额数',

`condition_num` BIGINT COMMENT '满件数',

`activity_id` STRING COMMENT '活动编号',

`benefit_amount` DECIMAL(16, 2) COMMENT '减金额',

`benefit_discount` DECIMAL(16, 2) COMMENT '折扣',

`benefit_rule` STRING COMMENT '优惠规则:满元*减*元,满*件打*折',

`create_time` STRING COMMENT '创建时间',

`range_type_code` STRING COMMENT '优惠范围类型编码',

`range_type_name` STRING COMMENT '优惠范围类型名称',

`limit_num` BIGINT COMMENT '最多领取次数',

`taken_count` BIGINT COMMENT '已领取次数',

`start_time` STRING COMMENT '可以领取的开始日期',

`end_time` STRING COMMENT '可以领取的结束日期',

`operate_time` STRING COMMENT '修改时间',

`expire_time` STRING COMMENT '过期时间'

) COMMENT '优惠券维度表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dim/dim_coupon_full/'

TBLPROPERTIES ('orc.compress' = 'snappy');

-- 数据装载

insert overwrite table dim_coupon_full partition(dt='2020-06-14')

select

id,

coupon_name,

coupon_type,

coupon_dic.dic_name,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

case coupon_type

when '3201' then concat('满',condition_amount,'元减',benefit_amount,'元')

when '3202' then concat('满',condition_num,'件打',10*(1-benefit_discount),'折')

when '3203' then concat('减',benefit_amount,'元')

end benefit_rule,

create_time,

range_type,

range_dic.dic_name,

limit_num,

taken_count,

start_time,

end_time,

operate_time,

expire_time

from

(

select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

limit_num,

taken_count,

start_time,

end_time,

operate_time,

expire_time

from ods_coupon_info_full

where dt='2020-06-14'

)ci

left join

(

select

dic_code,

dic_name

from ods_base_dic_full

where dt='2020-06-14'

and parent_code='32'

)coupon_dic

on ci.coupon_type=coupon_dic.dic_code

left join

(

select

dic_code,

dic_name

from ods_base_dic_full

where dt='2020-06-14'

and parent_code='33'

)range_dic

on ci.range_type=range_dic.dic_code;

3. 活动维度表

DROP TABLE IF EXISTS dim_activity_full;

CREATE EXTERNAL TABLE dim_activity_full

(

`activity_rule_id` STRING COMMENT '活动规则ID',

`activity_id` STRING COMMENT '活动ID',

`activity_name` STRING COMMENT '活动名称',

`activity_type_code` STRING COMMENT '活动类型编码',

`activity_type_name` STRING COMMENT '活动类型名称',

`activity_desc` STRING COMMENT '活动描述',

`start_time` STRING COMMENT '开始时间',

`end_time` STRING COMMENT '结束时间',

`create_time` STRING COMMENT '创建时间',

`condition_amount` DECIMAL(16, 2) COMMENT '满减金额',

`condition_num` BIGINT COMMENT '满减件数',

`benefit_amount` DECIMAL(16, 2) COMMENT '优惠金额',

`benefit_discount` DECIMAL(16, 2) COMMENT '优惠折扣',

`benefit_rule` STRING COMMENT '优惠规则',

`benefit_level` STRING COMMENT '优惠级别'

) COMMENT '活动信息表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dim/dim_activity_full/'

TBLPROPERTIES ('orc.compress' = 'snappy');

-- 数据装载

insert overwrite table dim_activity_full partition(dt='2020-06-14')

select

rule.id,

info.id,

activity_name,

rule.activity_type,

dic.dic_name,

activity_desc,

start_time,

end_time,

create_time,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

case rule.activity_type

when '3101' then concat('满',condition_amount,'元减',benefit_amount,'元')

when '3102' then concat('满',condition_num,'件打',10*(1-benefit_discount),'折')

when '3103' then concat('打',10*(1-benefit_discount),'折')

end benefit_rule,

benefit_level

from

(

select

id,

activity_id,

activity_type,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

benefit_level

from ods_activity_rule_full

where dt='2020-06-14'

)rule

left join

(

select

id,

activity_name,

activity_type,

activity_desc,

start_time,

end_time,

create_time

from ods_activity_info_full

where dt='2020-06-14'

)info

on rule.activity_id=info.id

left join

(

select

dic_code,

dic_name

from ods_base_dic_full

where dt='2020-06-14'

and parent_code='31'

)dic

on rule.activity_type=dic.dic_code;

4. 地区维度表

DROP TABLE IF EXISTS dim_province_full;

CREATE EXTERNAL TABLE dim_province_full

(

`id` STRING COMMENT 'id',

`province_name` STRING COMMENT '省市名称',

`area_code` STRING COMMENT '地区编码',

`iso_code` STRING COMMENT '旧版ISO-3166-2编码,供可视化使用',

`iso_3166_2` STRING COMMENT '新版IOS-3166-2编码,供可视化使用',

`region_id` STRING COMMENT '地区id',

`region_name` STRING COMMENT '地区名称'

) COMMENT '地区维度表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dim/dim_province_full/'

TBLPROPERTIES ('orc.compress' = 'snappy');

-- 数据装载

insert overwrite table dim_province_full partition(dt='2020-06-14')

select

province.id,

province.name,

province.area_code,

province.iso_code,

province.iso_3166_2,

region_id,

region_name

from

(

select

id,

name,

region_id,

area_code,

iso_code,

iso_3166_2

from ods_base_province_full

where dt='2020-06-14'

)province

left join

(

select

id,

region_name

from ods_base_region_full

where dt='2020-06-14'

)region

on province.region_id=region.id;

5. 日期维度表

DROP TABLE IF EXISTS dim_date;

CREATE EXTERNAL TABLE dim_date

(

`date_id` STRING COMMENT '日期ID',

`week_id` STRING COMMENT '周ID,一年中的第几周',

`week_day` STRING COMMENT '周几',

`day` STRING COMMENT '每月的第几天',

`month` STRING COMMENT '一年中的第几月',

`quarter` STRING COMMENT '一年中的第几季度',

`year` STRING COMMENT '年份',

`is_workday` STRING COMMENT '是否是工作日',

`holiday_id` STRING COMMENT '节假日'

) COMMENT '时间维度表'

STORED AS ORC

LOCATION '/warehouse/gmall/dim/dim_date/'

TBLPROPERTIES ('orc.compress' = 'snappy');

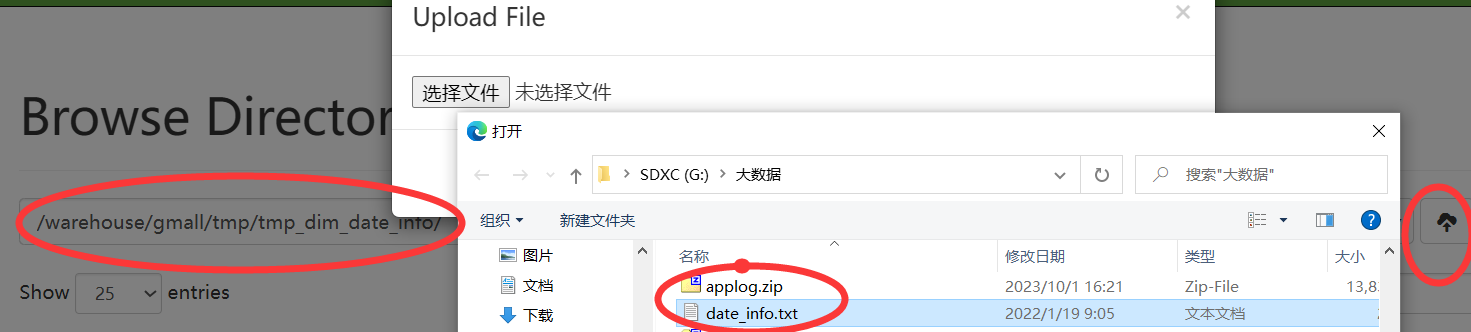

🔨数据装载:通常情况下,时间维度表的数据并不是来自于业务系统,而是手动写入,并且由于时间维度表数据的可预见性,无须每日导入,一般可一次性导入一年的数据

-- 创建临时表

DROP TABLE IF EXISTS tmp_dim_date_info;

CREATE EXTERNAL TABLE tmp_dim_date_info (

`date_id` STRING COMMENT '日',

`week_id` STRING COMMENT '周ID',

`week_day` STRING COMMENT '周几',

`day` STRING COMMENT '每月的第几天',

`month` STRING COMMENT '第几月',

`quarter` STRING COMMENT '第几季度',

`year` STRING COMMENT '年',

`is_workday` STRING COMMENT '是否是工作日',

`holiday_id` STRING COMMENT '节假日'

) COMMENT '时间维度表'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/tmp/tmp_dim_date_info/';

-- 将数据文件上传到HFDS上临时表路径/warehouse/gmall/tmp/tmp_dim_date_info

2020-01-01 1 3 1 1 1 2020 0 元旦

2020-01-02 1 4 2 1 1 2020 1 \N

2020-01-03 1 5 3 1 1 2020 1 \N

2020-01-04 1 6 4 1 1 2020 0 \N

2020-01-05 1 7 5 1 1 2020 0 \N

2020-01-06 2 1 6 1 1 2020 1 \N

2020-01-07 2 2 7 1 1 2020 1 \N

2020-01-08 2 3 8 1 1 2020 1 \N

2020-01-09 2 4 9 1 1 2020 1 \N

2020-01-10 2 5 10 1 1 2020 1 \N

2020-01-11 2 6 11 1 1 2020 0 \N

2020-01-12 2 7 12 1 1 2020 0 \N

2020-01-13 3 1 13 1 1 2020 1 \N

2020-01-14 3 2 14 1 1 2020 1 \N

2020-01-15 3 3 15 1 1 2020 1 \N

2020-01-16 3 4 16 1 1 2020 1 \N

2020-01-17 3 5 17 1 1 2020 1 \N

2020-01-18 3 6 18 1 1 2020 0 \N

2020-01-19 3 7 19 1 1 2020 1 \N

2020-01-20 4 1 20 1 1 2020 1 \N

2020-01-21 4 2 21 1 1 2020 1 \N

2020-01-22 4 3 22 1 1 2020 1 \N

2020-01-23 4 4 23 1 1 2020 1 \N

2020-01-24 4 5 24 1 1 2020 0 春节

2020-01-25 4 6 25 1 1 2020 0 春节

2020-01-26 4 7 26 1 1 2020 0 春节

2020-01-27 5 1 27 1 1 2020 0 春节

2020-01-28 5 2 28 1 1 2020 0 春节

2020-01-29 5 3 29 1 1 2020 0 春节

2020-01-30 5 4 30 1 1 2020 0 春节

2020-01-31 5 5 31 1 1 2020 0 春节

2020-02-01 5 6 1 2 1 2020 0 春节

2020-02-02 5 7 2 2 1 2020 0 春节

2020-02-03 6 1 3 2 1 2020 1 \N

2020-02-04 6 2 4 2 1 2020 1 \N

2020-02-05 6 3 5 2 1 2020 1 \N

2020-02-06 6 4 6 2 1 2020 1 \N

2020-02-07 6 5 7 2 1 2020 1 \N

2020-02-08 6 6 8 2 1 2020 0 \N

2020-02-09 6 7 9 2 1 2020 0 \N

2020-02-10 7 1 10 2 1 2020 1 \N

2020-02-11 7 2 11 2 1 2020 1 \N

2020-02-12 7 3 12 2 1 2020 1 \N

2020-02-13 7 4 13 2 1 2020 1 \N

2020-02-14 7 5 14 2 1 2020 1 \N

2020-02-15 7 6 15 2 1 2020 0 \N

2020-02-16 7 7 16 2 1 2020 0 \N

2020-02-17 8 1 17 2 1 2020 1 \N

2020-02-18 8 2 18 2 1 2020 1 \N

2020-02-19 8 3 19 2 1 2020 1 \N

2020-02-20 8 4 20 2 1 2020 1 \N

2020-02-21 8 5 21 2 1 2020 1 \N

2020-02-22 8 6 22 2 1 2020 0 \N

2020-02-23 8 7 23 2 1 2020 0 \N

2020-02-24 9 1 24 2 1 2020 1 \N

2020-02-25 9 2 25 2 1 2020 1 \N

2020-02-26 9 3 26 2 1 2020 1 \N

2020-02-27 9 4 27 2 1 2020 1 \N

2020-02-28 9 5 28 2 1 2020 1 \N

2020-02-29 9 6 29 2 1 2020 0 \N

2020-03-01 9 7 1 3 1 2020 0 \N

2020-03-02 10 1 2 3 1 2020 1 \N

2020-03-03 10 2 3 3 1 2020 1 \N

2020-03-04 10 3 4 3 1 2020 1 \N

2020-03-05 10 4 5 3 1 2020 1 \N

2020-03-06 10 5 6 3 1 2020 1 \N

2020-03-07 10 6 7 3 1 2020 0 \N

2020-03-08 10 7 8 3 1 2020 0 \N

2020-03-09 11 1 9 3 1 2020 1 \N

2020-03-10 11 2 10 3 1 2020 1 \N

2020-03-11 11 3 11 3 1 2020 1 \N

2020-03-12 11 4 12 3 1 2020 1 \N

2020-03-13 11 5 13 3 1 2020 1 \N

2020-03-14 11 6 14 3 1 2020 0 \N

2020-03-15 11 7 15 3 1 2020 0 \N

2020-03-16 12 1 16 3 1 2020 1 \N

2020-03-17 12 2 17 3 1 2020 1 \N

2020-03-18 12 3 18 3 1 2020 1 \N

2020-03-19 12 4 19 3 1 2020 1 \N

2020-03-20 12 5 20 3 1 2020 1 \N

2020-03-21 12 6 21 3 1 2020 0 \N

2020-03-22 12 7 22 3 1 2020 0 \N

2020-03-23 13 1 23 3 1 2020 1 \N

2020-03-24 13 2 24 3 1 2020 1 \N

2020-03-25 13 3 25 3 1 2020 1 \N

2020-03-26 13 4 26 3 1 2020 1 \N

2020-03-27 13 5 27 3 1 2020 1 \N

2020-03-28 13 6 28 3 1 2020 0 \N

2020-03-29 13 7 29 3 1 2020 0 \N

2020-03-30 14 1 30 3 1 2020 1 \N

2020-03-31 14 2 31 3 1 2020 1 \N

2020-04-01 14 3 1 4 2 2020 1 \N

2020-04-02 14 4 2 4 2 2020 1 \N

2020-04-03 14 5 3 4 2 2020 1 \N

2020-04-04 14 6 4 4 2 2020 0 清明节

2020-04-05 14 7 5 4 2 2020 0 清明节

2020-04-06 15 1 6 4 2 2020 0 清明节

2020-04-07 15 2 7 4 2 2020 1 \N

2020-04-08 15 3 8 4 2 2020 1 \N

2020-04-09 15 4 9 4 2 2020 1 \N

2020-04-10 15 5 10 4 2 2020 1 \N

2020-04-11 15 6 11 4 2 2020 0 \N

2020-04-12 15 7 12 4 2 2020 0 \N

2020-04-13 16 1 13 4 2 2020 1 \N

2020-04-14 16 2 14 4 2 2020 1 \N

2020-04-15 16 3 15 4 2 2020 1 \N

2020-04-16 16 4 16 4 2 2020 1 \N

2020-04-17 16 5 17 4 2 2020 1 \N

2020-04-18 16 6 18 4 2 2020 0 \N

2020-04-19 16 7 19 4 2 2020 0 \N

2020-04-20 17 1 20 4 2 2020 1 \N

2020-04-21 17 2 21 4 2 2020 1 \N

2020-04-22 17 3 22 4 2 2020 1 \N

2020-04-23 17 4 23 4 2 2020 1 \N

2020-04-24 17 5 24 4 2 2020 1 \N

2020-04-25 17 6 25 4 2 2020 0 \N

2020-04-26 17 7 26 4 2 2020 1 \N

2020-04-27 18 1 27 4 2 2020 1 \N

2020-04-28 18 2 28 4 2 2020 1 \N

2020-04-29 18 3 29 4 2 2020 1 \N

2020-04-30 18 4 30 4 2 2020 1 \N

2020-05-01 18 5 1 5 2 2020 0 劳动节

2020-05-02 18 6 2 5 2 2020 0 劳动节

2020-05-03 18 7 3 5 2 2020 0 劳动节

2020-05-04 19 1 4 5 2 2020 0 劳动节

2020-05-05 19 2 5 5 2 2020 0 劳动节

2020-05-06 19 3 6 5 2 2020 1 \N

2020-05-07 19 4 7 5 2 2020 1 \N

2020-05-08 19 5 8 5 2 2020 1 \N

2020-05-09 19 6 9 5 2 2020 1 \N

2020-05-10 19 7 10 5 2 2020 0 \N

2020-05-11 20 1 11 5 2 2020 1 \N

2020-05-12 20 2 12 5 2 2020 1 \N

2020-05-13 20 3 13 5 2 2020 1 \N

2020-05-14 20 4 14 5 2 2020 1 \N

2020-05-15 20 5 15 5 2 2020 1 \N

2020-05-16 20 6 16 5 2 2020 0 \N

2020-05-17 20 7 17 5 2 2020 0 \N

2020-05-18 21 1 18 5 2 2020 1 \N

2020-05-19 21 2 19 5 2 2020 1 \N

2020-05-20 21 3 20 5 2 2020 1 \N

2020-05-21 21 4 21 5 2 2020 1 \N

2020-05-22 21 5 22 5 2 2020 1 \N

2020-05-23 21 6 23 5 2 2020 0 \N

2020-05-24 21 7 24 5 2 2020 0 \N

2020-05-25 22 1 25 5 2 2020 1 \N

2020-05-26 22 2 26 5 2 2020 1 \N

2020-05-27 22 3 27 5 2 2020 1 \N

2020-05-28 22 4 28 5 2 2020 1 \N

2020-05-29 22 5 29 5 2 2020 1 \N

2020-05-30 22 6 30 5 2 2020 0 \N

2020-05-31 22 7 31 5 2 2020 0 \N

2020-06-01 23 1 1 6 2 2020 1 \N

2020-06-02 23 2 2 6 2 2020 1 \N

2020-06-03 23 3 3 6 2 2020 1 \N

2020-06-04 23 4 4 6 2 2020 1 \N

2020-06-05 23 5 5 6 2 2020 1 \N

2020-06-06 23 6 6 6 2 2020 0 \N

2020-06-07 23 7 7 6 2 2020 0 \N

2020-06-08 24 1 8 6 2 2020 1 \N

2020-06-09 24 2 9 6 2 2020 1 \N

2020-06-10 24 3 10 6 2 2020 1 \N

2020-06-11 24 4 11 6 2 2020 1 \N

2020-06-12 24 5 12 6 2 2020 1 \N

2020-06-13 24 6 13 6 2 2020 0 \N

2020-06-14 24 7 14 6 2 2020 0 \N

2020-06-15 25 1 15 6 2 2020 1 \N

2020-06-16 25 2 16 6 2 2020 1 \N

2020-06-17 25 3 17 6 2 2020 1 \N

2020-06-18 25 4 18 6 2 2020 1 \N

2020-06-19 25 5 19 6 2 2020 1 \N

2020-06-20 25 6 20 6 2 2020 0 \N

2020-06-21 25 7 21 6 2 2020 0 \N

2020-06-22 26 1 22 6 2 2020 1 \N

2020-06-23 26 2 23 6 2 2020 1 \N

2020-06-24 26 3 24 6 2 2020 1 \N

2020-06-25 26 4 25 6 2 2020 0 端午节

2020-06-26 26 5 26 6 2 2020 0 端午节

2020-06-27 26 6 27 6 2 2020 0 端午节

2020-06-28 26 7 28 6 2 2020 1 \N

2020-06-29 27 1 29 6 2 2020 1 \N

2020-06-30 27 2 30 6 2 2020 1 \N

2020-07-01 27 3 1 7 3 2020 1 \N

2020-07-02 27 4 2 7 3 2020 1 \N

2020-07-03 27 5 3 7 3 2020 1 \N

2020-07-04 27 6 4 7 3 2020 0 \N

2020-07-05 27 7 5 7 3 2020 0 \N

2020-07-06 28 1 6 7 3 2020 1 \N

2020-07-07 28 2 7 7 3 2020 1 \N

2020-07-08 28 3 8 7 3 2020 1 \N

2020-07-09 28 4 9 7 3 2020 1 \N

2020-07-10 28 5 10 7 3 2020 1 \N

2020-07-11 28 6 11 7 3 2020 0 \N

2020-07-12 28 7 12 7 3 2020 0 \N

2020-07-13 29 1 13 7 3 2020 1 \N

2020-07-14 29 2 14 7 3 2020 1 \N

2020-07-15 29 3 15 7 3 2020 1 \N

2020-07-16 29 4 16 7 3 2020 1 \N

2020-07-17 29 5 17 7 3 2020 1 \N

2020-07-18 29 6 18 7 3 2020 0 \N

2020-07-19 29 7 19 7 3 2020 0 \N

2020-07-20 30 1 20 7 3 2020 1 \N

2020-07-21 30 2 21 7 3 2020 1 \N

2020-07-22 30 3 22 7 3 2020 1 \N

2020-07-23 30 4 23 7 3 2020 1 \N

2020-07-24 30 5 24 7 3 2020 1 \N

2020-07-25 30 6 25 7 3 2020 0 \N

2020-07-26 30 7 26 7 3 2020 0 \N

2020-07-27 31 1 27 7 3 2020 1 \N

2020-07-28 31 2 28 7 3 2020 1 \N

2020-07-29 31 3 29 7 3 2020 1 \N

2020-07-30 31 4 30 7 3 2020 1 \N

2020-07-31 31 5 31 7 3 2020 1 \N

2020-08-01 31 6 1 8 3 2020 0 \N

2020-08-02 31 7 2 8 3 2020 0 \N

2020-08-03 32 1 3 8 3 2020 1 \N

2020-08-04 32 2 4 8 3 2020 1 \N

2020-08-05 32 3 5 8 3 2020 1 \N

2020-08-06 32 4 6 8 3 2020 1 \N

2020-08-07 32 5 7 8 3 2020 1 \N

2020-08-08 32 6 8 8 3 2020 0 \N

2020-08-09 32 7 9 8 3 2020 0 \N

2020-08-10 33 1 10 8 3 2020 1 \N

2020-08-11 33 2 11 8 3 2020 1 \N

2020-08-12 33 3 12 8 3 2020 1 \N

2020-08-13 33 4 13 8 3 2020 1 \N

2020-08-14 33 5 14 8 3 2020 1 \N

2020-08-15 33 6 15 8 3 2020 0 \N

2020-08-16 33 7 16 8 3 2020 0 \N

2020-08-17 34 1 17 8 3 2020 1 \N

2020-08-18 34 2 18 8 3 2020 1 \N

2020-08-19 34 3 19 8 3 2020 1 \N

2020-08-20 34 4 20 8 3 2020 1 \N

2020-08-21 34 5 21 8 3 2020 1 \N

2020-08-22 34 6 22 8 3 2020 0 \N

2020-08-23 34 7 23 8 3 2020 0 \N

2020-08-24 35 1 24 8 3 2020 1 \N

2020-08-25 35 2 25 8 3 2020 1 \N

2020-08-26 35 3 26 8 3 2020 1 \N

2020-08-27 35 4 27 8 3 2020 1 \N

2020-08-28 35 5 28 8 3 2020 1 \N

2020-08-29 35 6 29 8 3 2020 0 \N

2020-08-30 35 7 30 8 3 2020 0 \N

2020-08-31 36 1 31 8 3 2020 1 \N

2020-09-01 36 2 1 9 3 2020 1 \N

2020-09-02 36 3 2 9 3 2020 1 \N

2020-09-03 36 4 3 9 3 2020 1 \N

2020-09-04 36 5 4 9 3 2020 1 \N

2020-09-05 36 6 5 9 3 2020 0 \N

2020-09-06 36 7 6 9 3 2020 0 \N

2020-09-07 37 1 7 9 3 2020 1 \N

2020-09-08 37 2 8 9 3 2020 1 \N

2020-09-09 37 3 9 9 3 2020 1 \N

2020-09-10 37 4 10 9 3 2020 1 \N

2020-09-11 37 5 11 9 3 2020 1 \N

2020-09-12 37 6 12 9 3 2020 0 \N

2020-09-13 37 7 13 9 3 2020 0 \N

2020-09-14 38 1 14 9 3 2020 1 \N

2020-09-15 38 2 15 9 3 2020 1 \N

2020-09-16 38 3 16 9 3 2020 1 \N

2020-09-17 38 4 17 9 3 2020 1 \N

2020-09-18 38 5 18 9 3 2020 1 \N

2020-09-19 38 6 19 9 3 2020 0 \N

2020-09-20 38 7 20 9 3 2020 0 \N

2020-09-21 39 1 21 9 3 2020 1 \N

2020-09-22 39 2 22 9 3 2020 1 \N

2020-09-23 39 3 23 9 3 2020 1 \N

2020-09-24 39 4 24 9 3 2020 1 \N

2020-09-25 39 5 25 9 3 2020 1 \N

2020-09-26 39 6 26 9 3 2020 0 \N

2020-09-27 39 7 27 9 3 2020 1 \N

2020-09-28 40 1 28 9 3 2020 1 \N

2020-09-29 40 2 29 9 3 2020 1 \N

2020-09-30 40 3 30 9 3 2020 1 \N

2020-10-01 40 4 1 10 4 2020 0 国庆节、中秋节

2020-10-02 40 5 2 10 4 2020 0 国庆节、中秋节

2020-10-03 40 6 3 10 4 2020 0 国庆节、中秋节

2020-10-04 40 7 4 10 4 2020 0 国庆节、中秋节

2020-10-05 41 1 5 10 4 2020 0 国庆节、中秋节

2020-10-06 41 2 6 10 4 2020 0 国庆节、中秋节

2020-10-07 41 3 7 10 4 2020 0 国庆节、中秋节

2020-10-08 41 4 8 10 4 2020 0 国庆节、中秋节

2020-10-09 41 5 9 10 4 2020 1 \N

2020-10-10 41 6 10 10 4 2020 1 \N

2020-10-11 41 7 11 10 4 2020 0 \N

2020-10-12 42 1 12 10 4 2020 1 \N

2020-10-13 42 2 13 10 4 2020 1 \N

2020-10-14 42 3 14 10 4 2020 1 \N

2020-10-15 42 4 15 10 4 2020 1 \N

2020-10-16 42 5 16 10 4 2020 1 \N

2020-10-17 42 6 17 10 4 2020 0 \N

2020-10-18 42 7 18 10 4 2020 0 \N

2020-10-19 43 1 19 10 4 2020 1 \N

2020-10-20 43 2 20 10 4 2020 1 \N

2020-10-21 43 3 21 10 4 2020 1 \N

2020-10-22 43 4 22 10 4 2020 1 \N

2020-10-23 43 5 23 10 4 2020 1 \N

2020-10-24 43 6 24 10 4 2020 0 \N

2020-10-25 43 7 25 10 4 2020 0 \N

2020-10-26 44 1 26 10 4 2020 1 \N

2020-10-27 44 2 27 10 4 2020 1 \N

2020-10-28 44 3 28 10 4 2020 1 \N

2020-10-29 44 4 29 10 4 2020 1 \N

2020-10-30 44 5 30 10 4 2020 1 \N

2020-10-31 44 6 31 10 4 2020 0 \N

2020-11-01 44 7 1 11 4 2020 0 \N

2020-11-02 45 1 2 11 4 2020 1 \N

2020-11-03 45 2 3 11 4 2020 1 \N

2020-11-04 45 3 4 11 4 2020 1 \N

2020-11-05 45 4 5 11 4 2020 1 \N

2020-11-06 45 5 6 11 4 2020 1 \N

2020-11-07 45 6 7 11 4 2020 0 \N

2020-11-08 45 7 8 11 4 2020 0 \N

2020-11-09 46 1 9 11 4 2020 1 \N

2020-11-10 46 2 10 11 4 2020 1 \N

2020-11-11 46 3 11 11 4 2020 1 \N

2020-11-12 46 4 12 11 4 2020 1 \N

2020-11-13 46 5 13 11 4 2020 1 \N

2020-11-14 46 6 14 11 4 2020 0 \N

2020-11-15 46 7 15 11 4 2020 0 \N

2020-11-16 47 1 16 11 4 2020 1 \N

2020-11-17 47 2 17 11 4 2020 1 \N

2020-11-18 47 3 18 11 4 2020 1 \N

2020-11-19 47 4 19 11 4 2020 1 \N

2020-11-20 47 5 20 11 4 2020 1 \N

2020-11-21 47 6 21 11 4 2020 0 \N

2020-11-22 47 7 22 11 4 2020 0 \N

2020-11-23 48 1 23 11 4 2020 1 \N

2020-11-24 48 2 24 11 4 2020 1 \N

2020-11-25 48 3 25 11 4 2020 1 \N

2020-11-26 48 4 26 11 4 2020 1 \N

2020-11-27 48 5 27 11 4 2020 1 \N

2020-11-28 48 6 28 11 4 2020 0 \N

2020-11-29 48 7 29 11 4 2020 0 \N

2020-11-30 49 1 30 11 4 2020 1 \N

2020-12-01 49 2 1 12 4 2020 1 \N

2020-12-02 49 3 2 12 4 2020 1 \N

2020-12-03 49 4 3 12 4 2020 1 \N

2020-12-04 49 5 4 12 4 2020 1 \N

2020-12-05 49 6 5 12 4 2020 0 \N

2020-12-06 49 7 6 12 4 2020 0 \N

2020-12-07 50 1 7 12 4 2020 1 \N

2020-12-08 50 2 8 12 4 2020 1 \N

2020-12-09 50 3 9 12 4 2020 1 \N

2020-12-10 50 4 10 12 4 2020 1 \N

2020-12-11 50 5 11 12 4 2020 1 \N

2020-12-12 50 6 12 12 4 2020 0 \N

2020-12-13 50 7 13 12 4 2020 0 \N

2020-12-14 51 1 14 12 4 2020 1 \N

2020-12-15 51 2 15 12 4 2020 1 \N

2020-12-16 51 3 16 12 4 2020 1 \N

2020-12-17 51 4 17 12 4 2020 1 \N

2020-12-18 51 5 18 12 4 2020 1 \N

2020-12-19 51 6 19 12 4 2020 0 \N

2020-12-20 51 7 20 12 4 2020 0 \N

2020-12-21 52 1 21 12 4 2020 1 \N

2020-12-22 52 2 22 12 4 2020 1 \N

2020-12-23 52 3 23 12 4 2020 1 \N

2020-12-24 52 4 24 12 4 2020 1 \N

2020-12-25 52 5 25 12 4 2020 1 \N

2020-12-26 52 6 26 12 4 2020 0 \N

2020-12-27 52 7 27 12 4 2020 0 \N

2020-12-28 53 1 28 12 4 2020 1 \N

2020-12-29 53 2 29 12 4 2020 1 \N

2020-12-30 53 3 30 12 4 2020 1 \N

2020-12-31 53 4 31 12 4 2020 1 \N

2021-01-01 1 5 1 1 1 2021 0 元旦

2021-01-02 1 6 2 1 1 2021 0 元旦

2021-01-03 1 7 3 1 1 2021 0 元旦

2021-01-04 2 1 4 1 1 2021 1 \N

2021-01-05 2 2 5 1 1 2021 1 \N

2021-01-06 2 3 6 1 1 2021 1 \N

2021-01-07 2 4 7 1 1 2021 1 \N

2021-01-08 2 5 8 1 1 2021 1 \N

2021-01-09 2 6 9 1 1 2021 0 \N

2021-01-10 2 7 10 1 1 2021 0 \N

2021-01-11 3 1 11 1 1 2021 1 \N

2021-01-12 3 2 12 1 1 2021 1 \N

2021-01-13 3 3 13 1 1 2021 1 \N

2021-01-14 3 4 14 1 1 2021 1 \N

2021-01-15 3 5 15 1 1 2021 1 \N

2021-01-16 3 6 16 1 1 2021 0 \N

2021-01-17 3 7 17 1 1 2021 0 \N

2021-01-18 4 1 18 1 1 2021 1 \N

2021-01-19 4 2 19 1 1 2021 1 \N

2021-01-20 4 3 20 1 1 2021 1 \N

2021-01-21 4 4 21 1 1 2021 1 \N

2021-01-22 4 5 22 1 1 2021 1 \N

2021-01-23 4 6 23 1 1 2021 0 \N

2021-01-24 4 7 24 1 1 2021 0 \N

2021-01-25 5 1 25 1 1 2021 1 \N

2021-01-26 5 2 26 1 1 2021 1 \N

2021-01-27 5 3 27 1 1 2021 1 \N

2021-01-28 5 4 28 1 1 2021 1 \N

2021-01-29 5 5 29 1 1 2021 1 \N

2021-01-30 5 6 30 1 1 2021 0 \N

2021-01-31 5 7 31 1 1 2021 0 \N

2021-02-01 6 1 1 2 1 2021 1 \N

2021-02-02 6 2 2 2 1 2021 1 \N

2021-02-03 6 3 3 2 1 2021 1 \N

2021-02-04 6 4 4 2 1 2021 1 \N

2021-02-05 6 5 5 2 1 2021 1 \N

2021-02-06 6 6 6 2 1 2021 0 \N

2021-02-07 6 7 7 2 1 2021 1 \N

2021-02-08 7 1 8 2 1 2021 1 \N

2021-02-09 7 2 9 2 1 2021 1 \N

2021-02-10 7 3 10 2 1 2021 1 \N

2021-02-11 7 4 11 2 1 2021 0 春节

2021-02-12 7 5 12 2 1 2021 0 春节

2021-02-13 7 6 13 2 1 2021 0 春节

2021-02-14 7 7 14 2 1 2021 0 春节

2021-02-15 8 1 15 2 1 2021 0 春节

2021-02-16 8 2 16 2 1 2021 0 春节

2021-02-17 8 3 17 2 1 2021 0 春节

2021-02-18 8 4 18 2 1 2021 1 \N

2021-02-19 8 5 19 2 1 2021 1 \N

2021-02-20 8 6 20 2 1 2021 1 \N

2021-02-21 8 7 21 2 1 2021 0 \N

2021-02-22 9 1 22 2 1 2021 1 \N

2021-02-23 9 2 23 2 1 2021 1 \N

2021-02-24 9 3 24 2 1 2021 1 \N

2021-02-25 9 4 25 2 1 2021 1 \N

2021-02-26 9 5 26 2 1 2021 1 \N

2021-02-27 9 6 27 2 1 2021 0 \N

2021-02-28 9 7 28 2 1 2021 0 \N

2021-03-01 10 1 1 3 1 2021 1 \N

2021-03-02 10 2 2 3 1 2021 1 \N

2021-03-03 10 3 3 3 1 2021 1 \N

2021-03-04 10 4 4 3 1 2021 1 \N

2021-03-05 10 5 5 3 1 2021 1 \N

2021-03-06 10 6 6 3 1 2021 0 \N

2021-03-07 10 7 7 3 1 2021 0 \N

2021-03-08 11 1 8 3 1 2021 1 \N

2021-03-09 11 2 9 3 1 2021 1 \N

2021-03-10 11 3 10 3 1 2021 1 \N

2021-03-11 11 4 11 3 1 2021 1 \N

2021-03-12 11 5 12 3 1 2021 1 \N

2021-03-13 11 6 13 3 1 2021 0 \N

2021-03-14 11 7 14 3 1 2021 0 \N

2021-03-15 12 1 15 3 1 2021 1 \N

2021-03-16 12 2 16 3 1 2021 1 \N

2021-03-17 12 3 17 3 1 2021 1 \N

2021-03-18 12 4 18 3 1 2021 1 \N

2021-03-19 12 5 19 3 1 2021 1 \N

2021-03-20 12 6 20 3 1 2021 0 \N

2021-03-21 12 7 21 3 1 2021 0 \N

2021-03-22 13 1 22 3 1 2021 1 \N

2021-03-23 13 2 23 3 1 2021 1 \N

2021-03-24 13 3 24 3 1 2021 1 \N

2021-03-25 13 4 25 3 1 2021 1 \N

2021-03-26 13 5 26 3 1 2021 1 \N

2021-03-27 13 6 27 3 1 2021 0 \N

2021-03-28 13 7 28 3 1 2021 0 \N

2021-03-29 14 1 29 3 1 2021 1 \N

2021-03-30 14 2 30 3 1 2021 1 \N

2021-03-31 14 3 31 3 1 2021 1 \N

2021-04-01 14 4 1 4 2 2021 1 \N

2021-04-02 14 5 2 4 2 2021 1 \N

2021-04-03 14 6 3 4 2 2021 0 清明节

2021-04-04 14 7 4 4 2 2021 0 清明节

2021-04-05 15 1 5 4 2 2021 0 清明节

2021-04-06 15 2 6 4 2 2021 1 \N

2021-04-07 15 3 7 4 2 2021 1 \N

2021-04-08 15 4 8 4 2 2021 1 \N

2021-04-09 15 5 9 4 2 2021 1 \N

2021-04-10 15 6 10 4 2 2021 0 \N

2021-04-11 15 7 11 4 2 2021 0 \N

2021-04-12 16 1 12 4 2 2021 1 \N

2021-04-13 16 2 13 4 2 2021 1 \N

2021-04-14 16 3 14 4 2 2021 1 \N

2021-04-15 16 4 15 4 2 2021 1 \N

2021-04-16 16 5 16 4 2 2021 1 \N

2021-04-17 16 6 17 4 2 2021 0 \N

2021-04-18 16 7 18 4 2 2021 0 \N

2021-04-19 17 1 19 4 2 2021 1 \N

2021-04-20 17 2 20 4 2 2021 1 \N

2021-04-21 17 3 21 4 2 2021 1 \N

2021-04-22 17 4 22 4 2 2021 1 \N

2021-04-23 17 5 23 4 2 2021 1 \N

2021-04-24 17 6 24 4 2 2021 0 \N

2021-04-25 17 7 25 4 2 2021 1 \N

2021-04-26 18 1 26 4 2 2021 1 \N

2021-04-27 18 2 27 4 2 2021 1 \N

2021-04-28 18 3 28 4 2 2021 1 \N

2021-04-29 18 4 29 4 2 2021 1 \N

2021-04-30 18 5 30 4 2 2021 1 \N

2021-05-01 18 6 1 5 2 2021 0 劳动节

2021-05-02 18 7 2 5 2 2021 0 劳动节

2021-05-03 19 1 3 5 2 2021 0 劳动节

2021-05-04 19 2 4 5 2 2021 0 劳动节

2021-05-05 19 3 5 5 2 2021 0 劳动节

2021-05-06 19 4 6 5 2 2021 1 \N

2021-05-07 19 5 7 5 2 2021 1 \N

2021-05-08 19 6 8 5 2 2021 1 \N

2021-05-09 19 7 9 5 2 2021 0 \N

2021-05-10 20 1 10 5 2 2021 1 \N

2021-05-11 20 2 11 5 2 2021 1 \N

2021-05-12 20 3 12 5 2 2021 1 \N

2021-05-13 20 4 13 5 2 2021 1 \N

2021-05-14 20 5 14 5 2 2021 1 \N

2021-05-15 20 6 15 5 2 2021 0 \N

2021-05-16 20 7 16 5 2 2021 0 \N

2021-05-17 21 1 17 5 2 2021 1 \N

2021-05-18 21 2 18 5 2 2021 1 \N

2021-05-19 21 3 19 5 2 2021 1 \N

2021-05-20 21 4 20 5 2 2021 1 \N

2021-05-21 21 5 21 5 2 2021 1 \N

2021-05-22 21 6 22 5 2 2021 0 \N

2021-05-23 21 7 23 5 2 2021 0 \N

2021-05-24 22 1 24 5 2 2021 1 \N

2021-05-25 22 2 25 5 2 2021 1 \N

2021-05-26 22 3 26 5 2 2021 1 \N

2021-05-27 22 4 27 5 2 2021 1 \N

2021-05-28 22 5 28 5 2 2021 1 \N

2021-05-29 22 6 29 5 2 2021 0 \N

2021-05-30 22 7 30 5 2 2021 0 \N

2021-05-31 23 1 31 5 2 2021 1 \N

2021-06-01 23 2 1 6 2 2021 1 \N

2021-06-02 23 3 2 6 2 2021 1 \N

2021-06-03 23 4 3 6 2 2021 1 \N

2021-06-04 23 5 4 6 2 2021 1 \N

2021-06-05 23 6 5 6 2 2021 0 \N

2021-06-06 23 7 6 6 2 2021 0 \N

2021-06-07 24 1 7 6 2 2021 1 \N

2021-06-08 24 2 8 6 2 2021 1 \N

2021-06-09 24 3 9 6 2 2021 1 \N

2021-06-10 24 4 10 6 2 2021 1 \N

2021-06-11 24 5 11 6 2 2021 1 \N

2021-06-12 24 6 12 6 2 2021 0 端午节

2021-06-13 24 7 13 6 2 2021 0 端午节

2021-06-14 25 1 14 6 2 2021 0 端午节

2021-06-15 25 2 15 6 2 2021 1 \N

2021-06-16 25 3 16 6 2 2021 1 \N

2021-06-17 25 4 17 6 2 2021 1 \N

2021-06-18 25 5 18 6 2 2021 1 \N

2021-06-19 25 6 19 6 2 2021 0 \N

2021-06-20 25 7 20 6 2 2021 0 \N

2021-06-21 26 1 21 6 2 2021 1 \N

2021-06-22 26 2 22 6 2 2021 1 \N

2021-06-23 26 3 23 6 2 2021 1 \N

2021-06-24 26 4 24 6 2 2021 1 \N

2021-06-25 26 5 25 6 2 2021 1 \N

2021-06-26 26 6 26 6 2 2021 0 \N

2021-06-27 26 7 27 6 2 2021 0 \N

2021-06-28 27 1 28 6 2 2021 1 \N

2021-06-29 27 2 29 6 2 2021 1 \N

2021-06-30 27 3 30 6 2 2021 1 \N

2021-07-01 27 4 1 7 3 2021 1 \N

2021-07-02 27 5 2 7 3 2021 1 \N

2021-07-03 27 6 3 7 3 2021 0 \N

2021-07-04 27 7 4 7 3 2021 0 \N

2021-07-05 28 1 5 7 3 2021 1 \N

2021-07-06 28 2 6 7 3 2021 1 \N

2021-07-07 28 3 7 7 3 2021 1 \N

2021-07-08 28 4 8 7 3 2021 1 \N

2021-07-09 28 5 9 7 3 2021 1 \N

2021-07-10 28 6 10 7 3 2021 0 \N

2021-07-11 28 7 11 7 3 2021 0 \N

2021-07-12 29 1 12 7 3 2021 1 \N

2021-07-13 29 2 13 7 3 2021 1 \N

2021-07-14 29 3 14 7 3 2021 1 \N

2021-07-15 29 4 15 7 3 2021 1 \N

2021-07-16 29 5 16 7 3 2021 1 \N

2021-07-17 29 6 17 7 3 2021 0 \N

2021-07-18 29 7 18 7 3 2021 0 \N

2021-07-19 30 1 19 7 3 2021 1 \N

2021-07-20 30 2 20 7 3 2021 1 \N

2021-07-21 30 3 21 7 3 2021 1 \N

2021-07-22 30 4 22 7 3 2021 1 \N

2021-07-23 30 5 23 7 3 2021 1 \N

2021-07-24 30 6 24 7 3 2021 0 \N

2021-07-25 30 7 25 7 3 2021 0 \N

2021-07-26 31 1 26 7 3 2021 1 \N

2021-07-27 31 2 27 7 3 2021 1 \N

2021-07-28 31 3 28 7 3 2021 1 \N

2021-07-29 31 4 29 7 3 2021 1 \N

2021-07-30 31 5 30 7 3 2021 1 \N

2021-07-31 31 6 31 7 3 2021 0 \N

2021-08-01 31 7 1 8 3 2021 0 \N

2021-08-02 32 1 2 8 3 2021 1 \N

2021-08-03 32 2 3 8 3 2021 1 \N

2021-08-04 32 3 4 8 3 2021 1 \N

2021-08-05 32 4 5 8 3 2021 1 \N

2021-08-06 32 5 6 8 3 2021 1 \N

2021-08-07 32 6 7 8 3 2021 0 \N

2021-08-08 32 7 8 8 3 2021 0 \N

2021-08-09 33 1 9 8 3 2021 1 \N

2021-08-10 33 2 10 8 3 2021 1 \N

2021-08-11 33 3 11 8 3 2021 1 \N

2021-08-12 33 4 12 8 3 2021 1 \N

2021-08-13 33 5 13 8 3 2021 1 \N

2021-08-14 33 6 14 8 3 2021 0 \N

2021-08-15 33 7 15 8 3 2021 0 \N

2021-08-16 34 1 16 8 3 2021 1 \N

2021-08-17 34 2 17 8 3 2021 1 \N

2021-08-18 34 3 18 8 3 2021 1 \N

2021-08-19 34 4 19 8 3 2021 1 \N

2021-08-20 34 5 20 8 3 2021 1 \N

2021-08-21 34 6 21 8 3 2021 0 \N

2021-08-22 34 7 22 8 3 2021 0 \N

2021-08-23 35 1 23 8 3 2021 1 \N

2021-08-24 35 2 24 8 3 2021 1 \N

2021-08-25 35 3 25 8 3 2021 1 \N

2021-08-26 35 4 26 8 3 2021 1 \N

2021-08-27 35 5 27 8 3 2021 1 \N

2021-08-28 35 6 28 8 3 2021 0 \N

2021-08-29 35 7 29 8 3 2021 0 \N

2021-08-30 36 1 30 8 3 2021 1 \N

2021-08-31 36 2 31 8 3 2021 1 \N

2021-09-01 36 3 1 9 3 2021 1 \N

2021-09-02 36 4 2 9 3 2021 1 \N

2021-09-03 36 5 3 9 3 2021 1 \N

2021-09-04 36 6 4 9 3 2021 0 \N

2021-09-05 36 7 5 9 3 2021 0 \N

2021-09-06 37 1 6 9 3 2021 1 \N

2021-09-07 37 2 7 9 3 2021 1 \N

2021-09-08 37 3 8 9 3 2021 1 \N

2021-09-09 37 4 9 9 3 2021 1 \N

2021-09-10 37 5 10 9 3 2021 1 \N

2021-09-11 37 6 11 9 3 2021 0 \N

2021-09-12 37 7 12 9 3 2021 0 \N

2021-09-13 38 1 13 9 3 2021 1 \N

2021-09-14 38 2 14 9 3 2021 1 \N

2021-09-15 38 3 15 9 3 2021 1 \N

2021-09-16 38 4 16 9 3 2021 1 \N

2021-09-17 38 5 17 9 3 2021 1 \N

2021-09-18 38 6 18 9 3 2021 1 \N

2021-09-19 38 7 19 9 3 2021 0 中秋节

2021-09-20 39 1 20 9 3 2021 0 中秋节

2021-09-21 39 2 21 9 3 2021 0 中秋节

2021-09-22 39 3 22 9 3 2021 1 \N

2021-09-23 39 4 23 9 3 2021 1 \N

2021-09-24 39 5 24 9 3 2021 1 \N

2021-09-25 39 6 25 9 3 2021 0 \N

2021-09-26 39 7 26 9 3 2021 1 \N

2021-09-27 40 1 27 9 3 2021 1 \N

2021-09-28 40 2 28 9 3 2021 1 \N

2021-09-29 40 3 29 9 3 2021 1 \N

2021-09-30 40 4 30 9 3 2021 1 \N

2021-10-01 40 5 1 10 4 2021 0 国庆节

2021-10-02 40 6 2 10 4 2021 0 国庆节

2021-10-03 40 7 3 10 4 2021 0 国庆节

2021-10-04 41 1 4 10 4 2021 0 国庆节

2021-10-05 41 2 5 10 4 2021 0 国庆节

2021-10-06 41 3 6 10 4 2021 0 国庆节

2021-10-07 41 4 7 10 4 2021 0 国庆节

2021-10-08 41 5 8 10 4 2021 1 \N

2021-10-09 41 6 9 10 4 2021 1 \N

2021-10-10 41 7 10 10 4 2021 0 \N

2021-10-11 42 1 11 10 4 2021 1 \N

2021-10-12 42 2 12 10 4 2021 1 \N

2021-10-13 42 3 13 10 4 2021 1 \N

2021-10-14 42 4 14 10 4 2021 1 \N

2021-10-15 42 5 15 10 4 2021 1 \N

2021-10-16 42 6 16 10 4 2021 0 \N

2021-10-17 42 7 17 10 4 2021 0 \N

2021-10-18 43 1 18 10 4 2021 1 \N

2021-10-19 43 2 19 10 4 2021 1 \N

2021-10-20 43 3 20 10 4 2021 1 \N

2021-10-21 43 4 21 10 4 2021 1 \N

2021-10-22 43 5 22 10 4 2021 1 \N

2021-10-23 43 6 23 10 4 2021 0 \N

2021-10-24 43 7 24 10 4 2021 0 \N

2021-10-25 44 1 25 10 4 2021 1 \N

2021-10-26 44 2 26 10 4 2021 1 \N

2021-10-27 44 3 27 10 4 2021 1 \N

2021-10-28 44 4 28 10 4 2021 1 \N

2021-10-29 44 5 29 10 4 2021 1 \N

2021-10-30 44 6 30 10 4 2021 0 \N

2021-10-31 44 7 31 10 4 2021 0 \N

2021-11-01 45 1 1 11 4 2021 1 \N

2021-11-02 45 2 2 11 4 2021 1 \N

2021-11-03 45 3 3 11 4 2021 1 \N

2021-11-04 45 4 4 11 4 2021 1 \N

2021-11-05 45 5 5 11 4 2021 1 \N

2021-11-06 45 6 6 11 4 2021 0 \N

2021-11-07 45 7 7 11 4 2021 0 \N

2021-11-08 46 1 8 11 4 2021 1 \N

2021-11-09 46 2 9 11 4 2021 1 \N

2021-11-10 46 3 10 11 4 2021 1 \N

2021-11-11 46 4 11 11 4 2021 1 \N

2021-11-12 46 5 12 11 4 2021 1 \N

2021-11-13 46 6 13 11 4 2021 0 \N

2021-11-14 46 7 14 11 4 2021 0 \N

2021-11-15 47 1 15 11 4 2021 1 \N

2021-11-16 47 2 16 11 4 2021 1 \N

2021-11-17 47 3 17 11 4 2021 1 \N

2021-11-18 47 4 18 11 4 2021 1 \N

2021-11-19 47 5 19 11 4 2021 1 \N

2021-11-20 47 6 20 11 4 2021 0 \N

2021-11-21 47 7 21 11 4 2021 0 \N

2021-11-22 48 1 22 11 4 2021 1 \N

2021-11-23 48 2 23 11 4 2021 1 \N

2021-11-24 48 3 24 11 4 2021 1 \N

2021-11-25 48 4 25 11 4 2021 1 \N

2021-11-26 48 5 26 11 4 2021 1 \N

2021-11-27 48 6 27 11 4 2021 0 \N

2021-11-28 48 7 28 11 4 2021 0 \N

2021-11-29 49 1 29 11 4 2021 1 \N

2021-11-30 49 2 30 11 4 2021 1 \N

2021-12-01 49 3 1 12 4 2021 1 \N

2021-12-02 49 4 2 12 4 2021 1 \N

2021-12-03 49 5 3 12 4 2021 1 \N

2021-12-04 49 6 4 12 4 2021 0 \N

2021-12-05 49 7 5 12 4 2021 0 \N

2021-12-06 50 1 6 12 4 2021 1 \N

2021-12-07 50 2 7 12 4 2021 1 \N

2021-12-08 50 3 8 12 4 2021 1 \N

2021-12-09 50 4 9 12 4 2021 1 \N

2021-12-10 50 5 10 12 4 2021 1 \N

2021-12-11 50 6 11 12 4 2021 0 \N

2021-12-12 50 7 12 12 4 2021 0 \N

2021-12-13 51 1 13 12 4 2021 1 \N

2021-12-14 51 2 14 12 4 2021 1 \N

2021-12-15 51 3 15 12 4 2021 1 \N

2021-12-16 51 4 16 12 4 2021 1 \N

2021-12-17 51 5 17 12 4 2021 1 \N

2021-12-18 51 6 18 12 4 2021 0 \N

2021-12-19 51 7 19 12 4 2021 0 \N

2021-12-20 52 1 20 12 4 2021 1 \N

2021-12-21 52 2 21 12 4 2021 1 \N

2021-12-22 52 3 22 12 4 2021 1 \N

2021-12-23 52 4 23 12 4 2021 1 \N

2021-12-24 52 5 24 12 4 2021 1 \N

2021-12-25 52 6 25 12 4 2021 0 \N

2021-12-26 52 7 26 12 4 2021 0 \N

2021-12-27 53 1 27 12 4 2021 1 \N

2021-12-28 53 2 28 12 4 2021 1 \N

2021-12-29 53 3 29 12 4 2021 1 \N

2021-12-30 53 4 30 12 4 2021 1 \N

2021-12-31 53 5 31 12 4 2021 1 \N

-- 执行以下语句将其导入时间维度表

insert overwrite table dim_date select * from tmp_dim_date_info;

-- 检查数据是否导入成功

select * from dim_date;

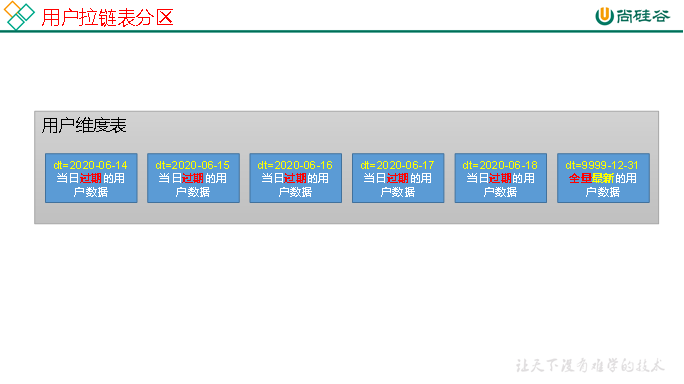

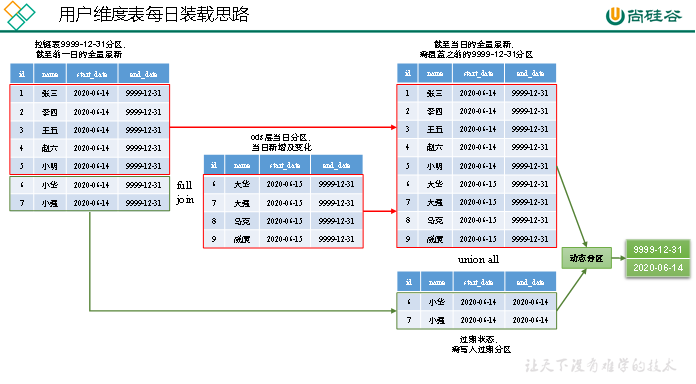

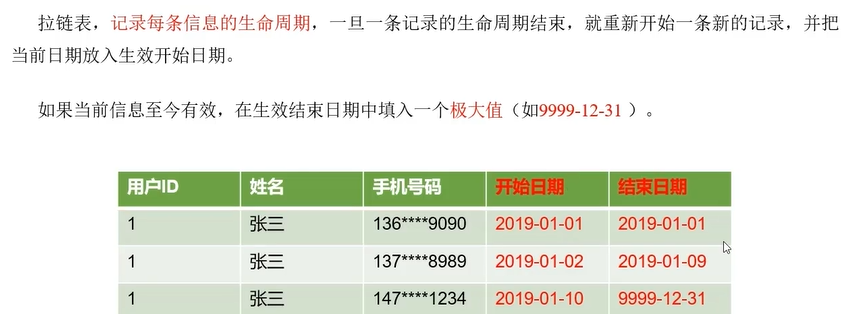

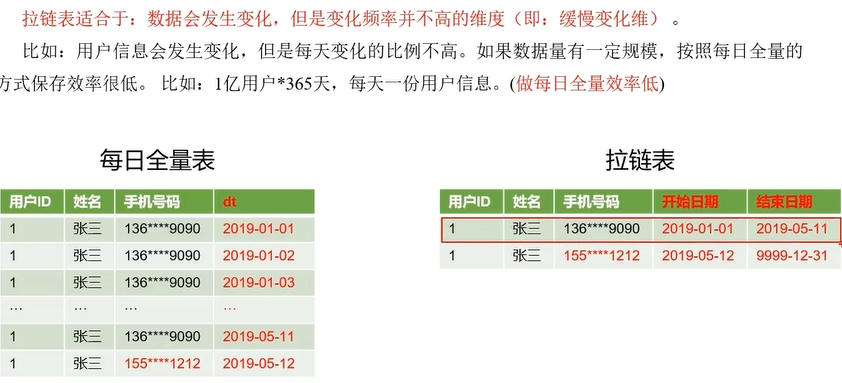

6. 用户维度表(缓慢变化维–>拉链表)

拉链表的意义在于与能够更加高效的保存维度信息的历史状态

DROP TABLE IF EXISTS dim_user_zip;

CREATE EXTERNAL TABLE dim_user_zip

(

`id` STRING COMMENT '用户id',

`login_name` STRING COMMENT '用户名称',

`nick_name` STRING COMMENT '用户昵称',

`name` STRING COMMENT '用户姓名',

`phone_num` STRING COMMENT '手机号码',

`email` STRING COMMENT '邮箱',

`user_level` STRING COMMENT '用户等级',

`birthday` STRING COMMENT '生日',

`gender` STRING COMMENT '性别',

`create_time` STRING COMMENT '创建时间',

`operate_time` STRING COMMENT '操作时间',

`start_date` STRING COMMENT '开始日期',

`end_date` STRING COMMENT '结束日期'

) COMMENT '用户表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dim/dim_user_zip/'

TBLPROPERTIES ('orc.compress' = 'snappy');

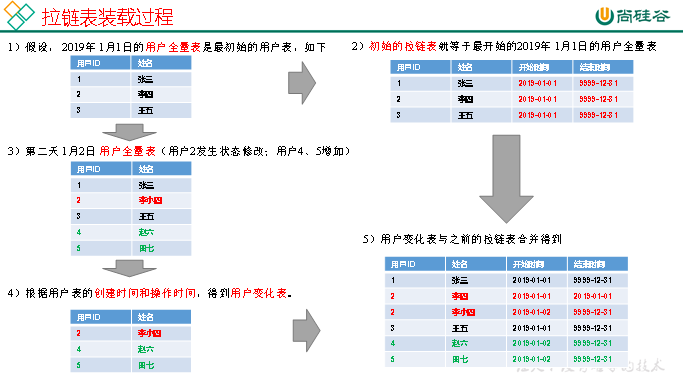

🔨数据装载

-

数据装载过程

-

数据流向

-- 首日装载全量信息(md5加密保护隐私信息)

insert overwrite table dim_user_zip partition (dt='9999-12-31')

select

data.id,

data.login_name,

data.nick_name,

md5(data.name),

md5(data.phone_num),

md5(data.email),

data.user_level,

data.birthday,

data.gender,

data.create_time,

data.operate_time,

'2020-06-14' start_date,

'9999-12-31' end_date

from ods_user_info_inc

where dt='2020-06-14'

and type='bootstrap-insert';

- 每日装载数据

想用一个insert将数据发往不同分区就要用到hive中的动态分区,动态分区基本上只用于首次加载!!(就是在写分区字段时不写具体分区即可

insert overwrite table dim_user_zip partition(dt)并在select中多写一个字段作为分区依据,注意union上下的语句字段类型要一致)

set hive.exec.dynamic.partition.mode=nonstrict;

with

tmp as

(

select

old.id old_id,

old.login_name old_login_name,

old.nick_name old_nick_name,

old.name old_name,

old.phone_num old_phone_num,

old.email old_email,

old.user_level old_user_level,

old.birthday old_birthday,

old.gender old_gender,

old.create_time old_create_time,

old.operate_time old_operate_time,

old.start_date old_start_date,

old.end_date old_end_date,

new.id new_id,

new.login_name new_login_name,

new.nick_name new_nick_name,

new.name new_name,

new.phone_num new_phone_num,

new.email new_email,

new.user_level new_user_level,

new.birthday new_birthday,

new.gender new_gender,

new.create_time new_create_time,

new.operate_time new_operate_time,

new.start_date new_start_date,

new.end_date new_end_date

from

(

select

id,

login_name,

nick_name,

name,

phone_num,

email,

user_level,

birthday,

gender,

create_time,

operate_time,

start_date,

end_date

from dim_user_zip

where dt='9999-12-31'

)old

full outer join

(

select

id,

login_name,

nick_name,

md5(name) name,

md5(phone_num) phone_num,

md5(email) email,

user_level,

birthday,

gender,

create_time,

operate_time,

'2020-06-15' start_date,

'9999-12-31' end_date

from

(

select

data.id,

data.login_name,

data.nick_name,

data.name,

data.phone_num,

data.email,

data.user_level,

data.birthday,

data.gender,

data.create_time,

data.operate_time,

row_number() over (partition by data.id order by ts desc) rn

from ods_user_info_inc

where dt='2020-06-15'

)t1

where rn=1

)new

on old.id=new.id

)

insert overwrite table dim_user_zip partition(dt)

select

if(new_id is not null,new_id,old_id),

if(new_id is not null,new_login_name,old_login_name),

if(new_id is not null,new_nick_name,old_nick_name),

if(new_id is not null,new_name,old_name),

if(new_id is not null,new_phone_num,old_phone_num),

if(new_id is not null,new_email,old_email),

if(new_id is not null,new_user_level,old_user_level),

if(new_id is not null,new_birthday,old_birthday),

if(new_id is not null,new_gender,old_gender),

if(new_id is not null,new_create_time,old_create_time),

if(new_id is not null,new_operate_time,old_operate_time),

if(new_id is not null,new_start_date,old_start_date),

if(new_id is not null,new_end_date,old_end_date),

-- 多写一个字段作为分区字段

if(new_id is not null,new_end_date,old_end_date) dt

from tmp

union all

select

old_id,

old_login_name,

old_nick_name,

old_name,

old_phone_num,

old_email,

old_user_level,

old_birthday,

old_gender,

old_create_time,

old_operate_time,

old_start_date,