Flink Exercise: Daily Active Users

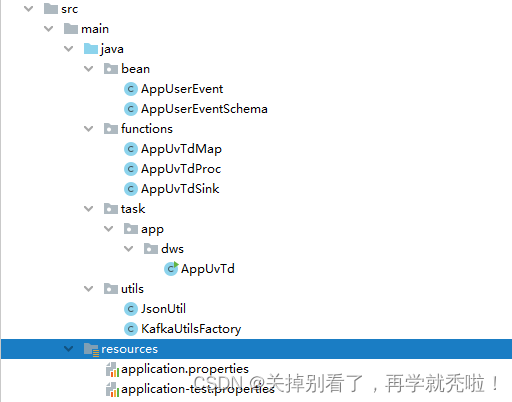

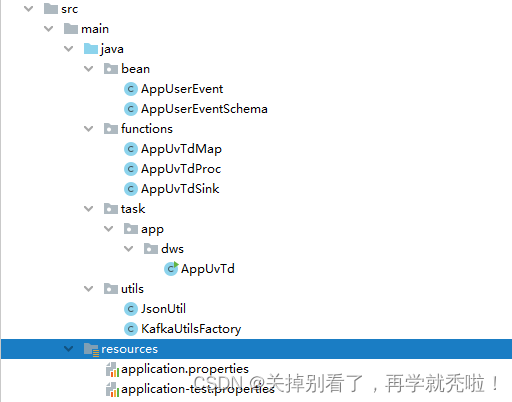

1. Structure

2. functions

AppUvTdMap

package functions;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.util.Collector;

public class AppUvTdMap implements FlatMapFunction<String, Tuple4<String,Long,String,String>> {

public AppUvTdMap() {

}

@Override

public void flatMap(String data, Collector<Tuple4<String, Long, String, String>> collector) throws Exception {

try {

JSONObject kafkaRecord = JSON.parseObject(data);

if (null == kafkaRecord) return;

String app = kafkaRecord.getString("app");

String device_id = kafkaRecord.getString("device_id");

if (device_id == null){

device_id = kafkaRecord.getString("deviceid");

}

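String os = kafkaRecord.getString("os");

// "param" may be absent, so guard against a NullPointerException here.

JSONObject param = kafkaRecord.getJSONObject("param");

String appVer = param == null ? null : param.getString("app_version");

// Skip records that lack any field needed for the UV key.

if (device_id == null || os == null || appVer == null){

return;

}

String appVerUv = os.concat("/").concat(appVer);

long recvtime = System.currentTimeMillis();

collector.collect(new Tuple4<>(device_id,recvtime,app,appVerUv));

}catch (Exception e){

// Malformed JSON: drop the record rather than fail the job.

}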

}

}
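The extraction rules in `flatMap` can be tried out without Flink or fastjson. The following is a minimal sketch, assuming the parsed record is available as a plain `Map`; the class `UvRecordSketch` and the method `toUvKey` are hypothetical names used only for illustration and are not part of the original project.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the extraction logic in AppUvTdMap,
// using a Map instead of a fastjson JSONObject.
final class UvRecordSketch {

    // Returns {deviceId, "os/app_version"}, or null when a required field is missing.
    static String[] toUvKey(Map<String, Object> record) {
        if (record == null) {
            return null;
        }
        Object deviceId = record.get("device_id");
        if (deviceId == null) {
            deviceId = record.get("deviceid"); // fallback spelling, as in AppUvTdMap
        }
        Object os = record.get("os");
        Object param = record.get("param");
        Object appVer = (param instanceof Map) ? ((Map<?, ?>) param).get("app_version") : null;
        if (deviceId == null || os == null || appVer == null) {
            return null;
        }
        return new String[] {deviceId.toString(), os + "/" + appVer};
    }

    public static void main(String[] args) {
        Map<String, Object> param = new HashMap<>();
        param.put("app_version", "1.2.0");
        Map<String, Object> record = new HashMap<>();
        record.put("deviceid", "d-1");
        record.put("os", "ios");
        record.put("param", param);
        String[] key = toUvKey(record);
        System.out.println(key[0] + " -> " + key[1]);
    }
}
```

Returning `null` for incomplete records mirrors the way `flatMap` emits nothing for them.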

AppUvTdProc

package functions;

import com.alibaba.fastjson.JSONObject;

import org.apache.flink.api.common.state.MapState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.common.state.StateTtlConfig;

import org.apache.flink.api.common.time.Time;

import org.apache.flink.api.common.typeinfo.TypeHint;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.shaded.guava18.com.google.common.collect.Lists;

import org.apache.flink.shaded.guava18.com.google.common.hash.BloomFilter;

import org.apache.flink.shaded.guava18.com.google.common.hash.Funnels;

import org.apache.flink.streaming.api.functions.windowing.ProcessAllWindowFunction;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.text.SimpleDateFormat;

import java.util.*;

public class AppUvTdProc extends ProcessAllWindowFunction<Tuple4<String,Long,String,String>,Tuple4<String,String,Integer,String>, TimeWindow> {

private MapState<String, BloomFilter<String>> bloomFilterMapstate;

private MapState<String, JSONObject> appVerUvState;

private MapState<String,Integer> uvCountState;

private String stateKeyPattern;

private long stateTtl;

public AppUvTdProc(Properties properties) {

stateKeyPattern = properties.getProperty("state.key.pattern");

stateTtl = Long.valueOf(properties.getProperty("state.ttl"));

}

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

StateTtlConfig ttlConfig = StateTtlConfig.newBuilder(Time.seconds(stateTtl))

.setUpdateType(StateTtlConfig.UpdateType.OnCreateAndWrite)

.setStateVisibility(StateTtlConfig.StateVisibility.NeverReturnExpired)

.build();

MapStateDescriptor<String, BloomFilter<String>> bloomFilterDescriptor =

new MapStateDescriptor<>("bloomFilter"

, TypeInformation.of(new TypeHint<String>() {})

, TypeInformation.of(new TypeHint<BloomFilter<String>>() {})

);

MapStateDescriptor<String, JSONObject> appVerUvDescriptor =

new MapStateDescriptor<>("appVerUv", String.class, JSONObject.class);

MapStateDescriptor<String, Integer> uvCountDescriptor =

new MapStateDescriptor<>("uvCount", String.class, Integer.class);

bloomFilterDescriptor.enableTimeToLive(ttlConfig);

appVerUvDescriptor.enableTimeToLive(ttlConfig);

uvCountDescriptor.enableTimeToLive(ttlConfig);

bloomFilterMapstate = getRuntimeContext().getMapState(bloomFilterDescriptor);

appVerUvState = getRuntimeContext().getMapState(appVerUvDescriptor);

uvCountState = getRuntimeContext().getMapState(uvCountDescriptor);

}

@Override

public void process(Context context, Iterable<Tuple4<String, Long, String, String>> iterable, Collector<Tuple4<String, String, Integer, String>> collector) throws Exception {

long timestamp = System.currentTimeMillis();

SimpleDateFormat sdf = new SimpleDateFormat(stateKeyPattern);

String date = sdf.format(new Date(timestamp));

List<Tuple4<String, Long, String, String>> dayUvList = Lists.newArrayList(iterable);

String app = dayUvList.get(0).f2;

BloomFilter<String> bloomFilter = bloomFilterMapstate.get(date);

Integer uv = uvCountState.get(date);

JSONObject appVerUvJson = appVerUvState.get(date);

if(!appVerUvState.contains(date)){

appVerUvJson = new JSONObject();

appVerUvState.put(date,appVerUvJson);

}

if (!uvCountState.contains(date)){

uv = 0;

uvCountState.put(date,uv);

}

if (!bloomFilterMapstate.contains(date)){

bloomFilter = BloomFilter.create(Funnels.unencodedCharsFunnel(),5*1000*1000);

bloomFilterMapstate.put(date,bloomFilter);

}

Iterator<Tuple4<String, Long, String, String>> mapIterator = iterable.iterator();

while (mapIterator.hasNext()){

Tuple4<String, Long, String, String> next = mapIterator.next();

String uid = next.f0;

String appVerUv = next.f3;

// Count a device only the first time the day's Bloom filter sees it.

if (!bloomFilter.mightContain(uid)){

bloomFilter.put(uid);

uv += 1;

// Increment the per-"os/app_version" counter, starting from zero.

Integer previous = appVerUvJson.getInteger(appVerUv);

appVerUvJson.put(appVerUv, previous == null ? 1 : previous + 1);

}

}

bloomFilterMapstate.put(date,bloomFilter);

appVerUvState.put(date,appVerUvJson);

uvCountState.put(date,uv);

collector.collect(Tuple4.of(app,null,uv,appVerUvJson.toString()));

}

}
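The deduplication step in `process` boils down to "count a device only if the Bloom filter has not seen it yet". Below is a minimal sketch of that idea in plain Java. It swaps Guava's `BloomFilter` for a small hand-rolled `BitSet` filter so that it runs without dependencies; the class `DailyUvSketch`, its hash scheme, and its sizing are assumptions for illustration, not the implementation used above.

```java
import java.util.BitSet;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified Bloom filter; AppUvTdProc itself uses Guava's BloomFilter.
final class DailyUvSketch {
    private final BitSet bits;
    private final int size;
    private final int hashes;
    private int uv = 0;
    private final Map<String, Integer> perVersion = new HashMap<>();

    DailyUvSketch(int size, int hashes) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashes = hashes;
    }

    // Derives the i-th bit position from two base hashes (double hashing).
    private int position(String id, int i) {
        int h1 = id.hashCode();
        int h2 = new StringBuilder(id).reverse().toString().hashCode() | 1;
        return Math.floorMod(h1 + i * h2, size);
    }

    private boolean mightContain(String id) {
        for (int i = 0; i < hashes; i++) {
            if (!bits.get(position(id, i))) {
                return false;
            }
        }
        return true;
    }

    // Counts the device once and bumps its "os/app_version" bucket.
    void observe(String deviceId, String appVerUv) {
        if (mightContain(deviceId)) {
            return; // already seen, or a false positive (which undercounts slightly)
        }
        for (int i = 0; i < hashes; i++) {
            bits.set(position(deviceId, i));
        }
        uv++;
        perVersion.merge(appVerUv, 1, Integer::sum);
    }

    int uv() {
        return uv;
    }

    Map<String, Integer> perVersion() {
        return perVersion;
    }
}
```

Because a Bloom filter can report false positives but never false negatives, a UV figure computed this way can only be slightly too low, never too high.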

AppUvTdSink

package functions;

import org.apache.commons.dbcp2.BasicDataSource;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.sql.Timestamp;

import java.util.Properties;

public class AppUvTdSink extends RichSinkFunction {

private PreparedStatement ps;

private BasicDataSource dataSource;

private Connection connection;

private String driverClass;

private String url;

private String userName;

private String pwd;

private String sinkTableName;

public AppUvTdSink(Properties properties){

this.sinkTableName = properties.getProperty("sink.table.name");

this.driverClass = properties.getProperty("driverClass");

this.url = properties.getProperty("jdbcurl");

this.userName = properties.getProperty("userName");

this.pwd = properties.getProperty("pwd");

}

@Override

public void open(Configuration parameters) throws Exception {

dataSource = new BasicDataSource();

}

@Override

public void close() throws Exception {

super.close();

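// Close the statement before the connection, then release the pool.

if (ps != null){ps.close();}

if (connection!= null){connection.close();}

if (dataSource != null){dataSource.close();}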

}

private Connection getConnect(BasicDataSource dataSource) throws SQLException {

dataSource.setDriverClassName(driverClass);

dataSource.setUrl(url);

dataSource.setUsername(userName);

dataSource.setPassword(pwd);

dataSource.setInitialSize(10);

dataSource.setMaxTotal(50);

dataSource.setMinIdle(2);

Connection con = null;

con = dataSource.getConnection();

return con;

}

@Override

public void invoke(Object value, Context context) throws Exception {

connection = getConnect(dataSource);

// Spaces around the table name are required; "insert into"+name yields invalid SQL.

String sql = "insert into " + sinkTableName + " (app_code,uv,app_ver_uv,create_time) values(?,?,?,?)";

ps = this.connection.prepareStatement(sql);

ps.setString(1,((Tuple4<String,String,Integer,String>)value).f0);

ps.setInt(2,((Tuple4<String,String,Integer,String>)value).f2);

ps.setString(3,((Tuple4<String,String,Integer,String>)value).f3);

ps.setTimestamp(4,new Timestamp(System.currentTimeMillis()));

ps.addBatch();

ps.executeBatch();

// Close the statement first, then return the connection to the pool.

if (ps != null){ps.close();}

if (connection!= null){connection.close();}

}

}
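Since the table name comes from a properties file and is concatenated into the statement (a table name cannot be bound as a `?` parameter), it may be worth validating it before building the SQL. The following is a minimal sketch; `InsertSqlSketch`, `buildInsertSql`, and the identifier pattern are hypothetical and assume table names are plain, optionally schema-qualified identifiers.

```java
import java.util.regex.Pattern;

// Hypothetical helper; not part of the original AppUvTdSink.
final class InsertSqlSketch {

    // Assumption: table names are plain identifiers, optionally schema-qualified.
    private static final Pattern TABLE_NAME =
            Pattern.compile("[A-Za-z_][A-Za-z0-9_]*(\\.[A-Za-z_][A-Za-z0-9_]*)?");

    // Builds the parameterized insert statement used by the sink.
    static String buildInsertSql(String tableName) {
        if (tableName == null || !TABLE_NAME.matcher(tableName).matches()) {
            throw new IllegalArgumentException("Unexpected table name: " + tableName);
        }
        return "insert into " + tableName
                + " (app_code,uv,app_ver_uv,create_time) values(?,?,?,?)";
    }
}
```

The statement could then be built once in `open` rather than on every `invoke` call.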

3. utils

JsonUtil

package utils;

import com.alibaba.fastjson.JSONObject;

import com.fasterxml.jackson.annotation.JsonInclude;

import com.fasterxml.jackson.core.JsonProcessingException;

import com.fasterxml.jackson.databind.DeserializationFeature;

import com.fasterxml.jackson.databind.ObjectMapper;

import java.util.List;

public class JsonUtil {

private static ObjectMapper mapper;

static {

mapper = new ObjectMapper();

mapper.configure(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES,false);

mapper.setSerializationInclusion(JsonInclude.Include.ALWAYS);

}

public static JSONObject intersectJson(JSONObject jsonObject, List<String> removekeys){

JSONObject jsonObject1 = new JSONObject();

for (String key : jsonObject.keySet()) {

if (!removekeys.contains(key)){

jsonObject1.put(key,jsonObject.getString(key));

}

}

return jsonObject1;

}

public static ObjectMapper objectMapper(){return mapper;}

public static String toJson(Object object){

try {

return mapper.writeValueAsString(object);

} catch (JsonProcessingException e) {

e.printStackTrace();

}

return null;

}

}

KafkaUtilsFactory

package utils;

import org.apache.commons.lang3.StringUtils;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer;

import org.apache.flink.streaming.connectors.kafka.KafkaSerializationSchema;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.producer.ProducerConfig;

import java.lang.reflect.InvocationTargetException;

import java.text.SimpleDateFormat;

import java.util.Properties;

public class KafkaUtilsFactory {

private static SimpleDateFormat SDF = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

public static Properties getKafkaProperties(Properties properties,String brokersKey,String groupIdKey,String kafkaAclKey) {

Properties config = new Properties();

config.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, properties.getProperty(brokersKey));

config.setProperty(ConsumerConfig.GROUP_ID_CONFIG, properties.getProperty(groupIdKey));

config.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, properties.getProperty("kafka.consumer.auto.offset.reset"));

config.setProperty(ConsumerConfig.FETCH_MAX_WAIT_MS_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.consumer.fetch.max.wait.ms"), "500"));

config.setProperty(ConsumerConfig.FETCH_MAX_BYTES_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.consumer.fetch.max.bytes"), 5 * 1024 * 1024 + ""));

config.setProperty(ConsumerConfig.FETCH_MIN_BYTES_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.consumer.fetch.min.bytes"), "1"));

config.setProperty(ConsumerConfig.REQUEST_TIMEOUT_MS_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.request.timeout.ms"), "30000"));

if ("true".equals(properties.getProperty(kafkaAclKey))) {

StringBuffer acl = new StringBuffer("org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required unsecuredLoginStringClaim_sub=\"");

acl.append(properties.getProperty("kafka.consumer.client.id"))

.append("\" app_secret=\"")

.append(properties.getProperty("kafka.consumer.client.secret"))

.append("\" login_url=\"")

.append(properties.getProperty("kafka.consumer.client.login.url"))

.append("\";");

config.setProperty("sasl.jaas.config", acl.toString());

config.setProperty("security.protocol", "SASL_PLAINTEXT");

config.setProperty("sasl.mechanism", "OAUTHBEARER");

config.setProperty("sasl.login.callback.handler.class","com.htsc.kafka.security.oauthbearer.OauthAuthenticateLoginCallbackHandler");

}

return config;

}

public static <T> FlinkKafkaProducer<T> toKafkaDataStream(Properties properties,Class<? extends KafkaSerializationSchema<T>> clazz,

String brokersKey,String topic,String kafkaAclKey) throws NoSuchMethodException, IllegalAccessException, InvocationTargetException, InstantiationException {

Properties producerConfig = new Properties();

producerConfig.setProperty(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,properties.getProperty(brokersKey));

producerConfig.setProperty(ProducerConfig.TRANSACTION_TIMEOUT_CONFIG,properties.getProperty("kafka.producer.transaction.time.out"));

producerConfig.setProperty(ProducerConfig.ACKS_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.producer.acks"),"1"));

producerConfig.setProperty(ProducerConfig.BATCH_SIZE_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.producer.batch.size.config"),"16384"));

producerConfig.setProperty(ProducerConfig.MAX_BLOCK_MS_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.producer.max.block.ms"),Long.MAX_VALUE+""));

// Fall back to Kafka's own default of 0; a default of Long.MAX_VALUE would hold records until a batch fills.

producerConfig.setProperty(ProducerConfig.LINGER_MS_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.producer.linger.ms"),"0"));

producerConfig.setProperty(ProducerConfig.BUFFER_MEMORY_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.producer.buffer.memory"),"33554432"));

producerConfig.setProperty(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.request.timeout.ms"),"30000"));

producerConfig.setProperty(ProducerConfig.MAX_REQUEST_SIZE_CONFIG,"10485760");

producerConfig.setProperty(ProducerConfig.COMPRESSION_TYPE_CONFIG,

StringUtils.defaultIfEmpty(properties.getProperty("kafka.producer.compression.type"),"none"));

if ("true".equals(properties.getProperty(kafkaAclKey))){

StringBuffer acl = new StringBuffer("org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required unsecuredLoginStringClaim_sub=\"");

acl.append(properties.getProperty("kafka.producer.client.id"))

.append("\" app_secret=\"")

.append(properties.getProperty("kafka.producer.client.secret"))

.append("\" login_url=\"")

.append(properties.getProperty("kafka.producer.client.login.url"))

.append("\";");

producerConfig.setProperty("sasl.jaas.config", acl.toString());

producerConfig.setProperty("security.protocol", "SASL_PLAINTEXT");

producerConfig.setProperty("sasl.mechanism", "OAUTHBEARER");

producerConfig.setProperty("sasl.login.callback.handler.class","com.htsc.kafka.security.oauthbearer.OauthAuthenticateLoginCallbackHandler");

}

Class[] classes = {Properties.class, String.class};

KafkaSerializationSchema<T> kafkaSerializationSchema = clazz.getConstructor(classes).newInstance(properties, topic);

return new FlinkKafkaProducer<T>(

topic,

kafkaSerializationSchema,

producerConfig,

FlinkKafkaProducer.Semantic.AT_LEAST_ONCE);

}

public static <T> FlinkKafkaProducer<T> toKafkaDataStream(Properties properties,Class<? extends KafkaSerializationSchema<T>> clazz, String topic) throws InvocationTargetException, NoSuchMethodException, InstantiationException, IllegalAccessException {

String brokers = "kafka.producer.brokers";

String kafkaAcl = "kafka.producer.acl";

return toKafkaDataStream(properties,clazz,brokers,topic,kafkaAcl);

}

}

4. task: main function

AppUvTd

package task.app.dws;

import functions.AppUvTdMap;

import functions.AppUvTdProc;

import functions.AppUvTdSink;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.api.java.utils.ParameterTool;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import utils.KafkaUtilsFactory;

import java.util.Properties;

public class AppUvTd {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setStreamTimeCharacteristic(TimeCharacteristic.ProcessingTime);

CheckpointConfig checkpointConfig = env.getCheckpointConfig();

checkpointConfig.setMinPauseBetweenCheckpoints(10000);

checkpointConfig.setCheckpointTimeout(600000);

checkpointConfig.setMaxConcurrentCheckpoints(1);

checkpointConfig.enableExternalizedCheckpoints(

CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION

);

env.enableCheckpointing(600000, CheckpointingMode.EXACTLY_ONCE);

String resourcePath = "/application-test.properties";

if (args.length>0){

resourcePath = args[0];

}

ParameterTool tool = ParameterTool.fromPropertiesFile(AppUvTd.class.getResourceAsStream(resourcePath));

Properties properties = tool.getProperties();

Properties consumerConfig = KafkaUtilsFactory.getKafkaProperties(properties, "kafka.consumer.brokers",

"kafka.consumer.group.id", "kafka.consumer.acl");

String topic = properties.getProperty("kafka.consumer.topic");

FlinkKafkaConsumer<String> consumer = new FlinkKafkaConsumer<>(topic, new SimpleStringSchema(), consumerConfig);

consumer.setStartFromLatest();

DataStream<String> stream = env.addSource(consumer).uid("kafka-consumer-al-app-user-event");

DataStream<Tuple4<String, Long, String, String>> appInfoStream =

stream.flatMap(new AppUvTdMap())

.uid("app-uv-td");

appInfoStream.windowAll(TumblingProcessingTimeWindows.of(Time.seconds(10)))

.process(new AppUvTdProc(properties))

.addSink(new AppUvTdSink(properties));

env.execute("app-uv-td");

}

}
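The job groups records into 10-second tumbling processing-time windows. As a rough illustration of how such windows line up on the clock, the sketch below computes a window's start and end for a given timestamp. It is believed to mirror the arithmetic Flink applies for non-negative timestamps, but `TumblingWindowSketch` and its method names are hypothetical and the code is only an approximation of Flink's internals.

```java
// Hypothetical illustration of tumbling-window alignment; not Flink code.
final class TumblingWindowSketch {

    // Start of the window containing timestampMs, for windows of sizeMs shifted by offsetMs.
    static long windowStart(long timestampMs, long offsetMs, long sizeMs) {
        return timestampMs - (timestampMs - offsetMs + sizeMs) % sizeMs;
    }

    // Exclusive end of the same window.
    static long windowEnd(long timestampMs, long offsetMs, long sizeMs) {
        return windowStart(timestampMs, offsetMs, sizeMs) + sizeMs;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        System.out.println("window = [" + windowStart(now, 0, 10_000)
                + ", " + windowEnd(now, 0, 10_000) + ")");
    }
}
```

With a zero offset, windows start at multiples of 10 seconds since the epoch, so `AppUvTdProc` emits one cumulative row for each 10-second window that received at least one record.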

5. resource: configuration file

#kafka consumer

kafka.consumer.brokers=

kafka.consumer.group.id=

kafka.consumer.topic=

kafka.consumer.auto.offset.reset=latest

kafka.consumer.isolation.level=1

kafka.request.timeout.ms=6000

#kafka consumer acl

kafka.consumer.acl=true

kafka.consumer.client.id=

kafka.consumer.client.secret=

kafka.consumer.client.login.url=

#kafka producer

kafka.producer.brokers=

kafka.producer.acks=-1

kafka.producer.transaction.time.out=30000

kafka.producer.batch.size.config=

kafka.producer.max.block.ms=

kafka.producer.linger.ms=1000

kafka.producer.buffer.memory=

kafka.producer.compression.type=lz4

kafka.producer.topic=

#kafka producer acl

kafka.producer.acl=true

kafka.producer.client.id=

kafka.producer.client.secret=

kafka.producer.client.login.url=

#mysql sink

driverClass=

jdbcurl=

userName=

pwd=

state.ttl=86400

state.key.pattern=MMdd

sink.table.name=
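The `state.key.pattern` and `state.ttl` settings work together: the pattern `MMdd` yields one state key per calendar day, and a TTL of 86400 seconds lets a day's entries expire roughly 24 hours after their last write. The following minimal sketch shows how the key is derived. `StateKeySketch` is a hypothetical name, and the explicit `TimeZone` parameter is an addition for reproducibility; `AppUvTdProc` relies on the JVM default time zone.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Hypothetical helper showing how the per-day state key is derived.
final class StateKeySketch {

    // Formats the timestamp with the configured pattern in the given time zone.
    static String stateKey(String pattern, long timestampMs, TimeZone zone) {
        SimpleDateFormat sdf = new SimpleDateFormat(pattern, Locale.ROOT);
        sdf.setTimeZone(zone);
        return sdf.format(new Date(timestampMs));
    }

    public static void main(String[] args) {
        TimeZone utc = TimeZone.getTimeZone("UTC");
        System.out.println(stateKey("MMdd", System.currentTimeMillis(), utc));
    }
}
```

Note that `MMdd` keys repeat every year, so the scheme depends on the TTL clearing old entries well before the same date comes around again.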
