1. Prepare the Compose file (compose.yml; the commands in step 4 load it as compose-elk.yml)

    services:
      # Log synchronization: receives events from Filebeat and forwards them to Elasticsearch
      logstash:
        container_name: logstash
        image: docker.elastic.co/logstash/logstash:7.17.14
        command: logstash -f /usr/share/logstash/pipeline/logstash.conf
        depends_on:
          - elasticsearch   # assumed to be defined in the same compose file
        restart: on-failure
        ports:
          - "5000:5000"
          - "5044:5044"
        environment:
          TZ: America/New_York
          LANG: en_US.UTF-8
          LS_JAVA_OPTS: "-Xmx1024m -Xms1024m"   # Logstash reads LS_JAVA_OPTS, not ES_JAVA_OPTS
        volumes:
          - /home/planetflix/logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml
          - /home/planetflix/logstash/conf/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
        networks:
          - es_net
      # Log collection tool
      # Filebeat: a lightweight shipper for log files, one of the six Beats collectors
      filebeat:
        container_name: filebeat
        image: docker.elastic.co/beats/filebeat:7.17.14
        depends_on:   # elasticsearch and kibana are assumed to be defined in the same compose file
          - elasticsearch
          - logstash
          - kibana
        restart: on-failure
        environment:
          TZ: America/New_York
          LANG: en_US.UTF-8
          ES_JAVA_OPTS: "-Xmx1024m -Xms1024m"   # has no effect here: Filebeat is a Go binary, not a JVM process
        volumes:
          - /home/planetflix/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml
          - /home/planetflix/filebeat/modules.d/nginx.yml:/usr/share/filebeat/modules.d/nginx.yml
          - /home/planetflix/filebeat/logs:/usr/share/filebeat/logs
          - /home/planetflix/filebeat/data:/usr/share/filebeat/data
          - /var/run/docker.sock:/var/run/docker.sock
          - /var/lib/docker/containers:/var/lib/docker/containers
          # Mount the log directories to be collected
          - /data/project/java/logs:/var/elk/logs
          - /home/planetflix/nginx/logs:/usr/local/nginx/logs
        networks:
          - es_net
    networks:
      es_net:
        driver: bridge

2. Configure filebeat.yml
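
The first input in the configuration below uses `multiline.pattern` (a leading `YYYY-MM-DD` date) with `negate: true` and `match: after`. Together these mean that any line that does not start with a date is appended to the preceding line that does, so a Java stack trace stays in one event. The following shell sketch imitates that grouping rule with awk. It only illustrates the rule and is not how Filebeat is implemented; `join_multiline` is a made-up helper name.

```shell
# Imitate the multiline rule: pattern '^[0-9]{4}-[0-9]{2}-[0-9]{2}', negate: true, match: after.
# Each resulting event is printed on one line, with a literal "\n" marking where lines were joined.
join_multiline() {
  awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/ {
      if (have) print event            # a dated line starts a new event: flush the previous one
      event = $0; have = 1
      next
    }
    {
      if (have) event = event "\\n" $0 # undated line: continuation of the current event
      else { event = $0; have = 1 }    # undated first line: nothing to attach to yet
    }
    END { if (have) print event }
  '
}
```

Piping a log file through it, for example `join_multiline < some.log`, prints one line per event that Filebeat would be expected to emit under these settings.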

    # ============================== Filebeat inputs ===============================
    filebeat.inputs:
      - type: log              # input type; log (the default) reads log files at the given paths. Other values include stdin, kafka and redis; see the official docs
        enabled: true          # enable this input
        backoff: "1s"
        tail_files: false
        ## symlinks: true      # also collect symlinked files
        paths:                 # global collection paths; can be split per module later
          - /var/elk/logs/*.log
        # The input above reads files of type log from the listed paths.
        # Lines that do not start with a yyyy-MM-dd date are appended to the previous line.
        multiline.pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
        multiline.negate: true
        multiline.match: after
        fields:                # extra fields added to every event from this input
          log_type: syslog
        tags: ["app-log"]
      # nginx
      - type: log
        enabled: true          # enable this input
        backoff: "1s"
        tail_files: false
        paths:                 # global collection paths; can be split per module later
          - /usr/local/nginx/logs/access.log
          - /usr/local/nginx/logs/error.log
        fields:                # extra fields added to every event from this input
          filetype: nginxlog
        fields_under_root: true

    # ============================== Filebeat modules ==============================
    filebeat.config.modules:
      # Glob pattern for configuration loading
      path: ${path.config}/modules.d/*.yml
      # Set to true to enable config reloading
      reload.enabled: false
      # Period on which files under path should be checked for changes
      # reload.period: 10s

    # ======================= Elasticsearch template setting =======================
    setup.template.settings:
      index.number_of_shards: 3

    # ------------------------------ Logstash Output -------------------------------
    output.logstash:
      enabled: true
      hosts: ["logstash:5044"]

    name: filebeat-node01

    # Filebeat loads index templates only when output.elasticsearch is enabled;
    # with the Logstash output these template settings are not applied automatically.
    setup.template.name: "filebeat_log"
    setup.template.pattern: "merit_*"
    setup.template.enabled: true

3. Configure logstash.yml and logstash.conf

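logstash.yml

    ## As with Kibana's host, this needs to be 0.0.0.0 for the service to start successfully and be reachable from outside the container
    http.host: "0.0.0.0"
    ## Either use the elasticsearch container name from docker-compose.yml, e.g. "http://elasticsearch:9200"
    ## (both containers must be on the same Docker network, of type bridge), or replace it with a public IP
    xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.username: 'elastic'
    ## Must be the actual password of the elastic user; logstash.conf uses a different value, and the two have to agree
    xpack.monitoring.elasticsearch.password: '1qazxsw23'

logstash.conf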
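
The nginx branch of the pipeline below parses `message` as JSON and then reads `time_local`, `request`, `http_user_agent`, `upstream_response_time` and `http_x_forwarded_for`. That implies nginx writes its access log as one JSON object per line containing those keys. The nginx `log_format` is not shown here, so the following is only a sketch of what such a line might look like: the field names come from the filter block, every value is invented, and `sample_nginx_line` is a hypothetical helper for feeding the pipeline a test event.

```shell
# Print one JSON access-log line with the keys the Logstash filter expects.
# All values are invented sample data; time_local uses the current UTC time in ISO8601.
sample_nginx_line() {
  printf '{"time_local":"%s","request":"GET /index.html HTTP/1.1","http_user_agent":"curl/8.0","upstream_response_time":"0.012, 0.034","http_x_forwarded_for":"203.0.113.7, 10.0.0.2"}\n' \
    "$(date -u +%Y-%m-%dT%H:%M:%SZ)"
}
```

Once the stack is running, `sample_nginx_line >> /home/planetflix/nginx/logs/access.log` should produce one event in the nginx index. Note that error.log is collected with the same `filetype`, and nginx error-log lines are not JSON, so the json filter will likely tag those events with `_jsonparsefailure`.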

    ## input: beats is the plugin that receives events from Filebeat; codec sets the format of the incoming data; port is the port Logstash listens on
    input {
      beats {
        port => 5044
        codec => json
        client_inactivity_timeout => 36000
      }
    }

    ## filter: transform and clean the data
    filter {
      if [filetype] == "nginxlog" {
        # Parse the JSON log line, then drop the raw message and the ingest timestamp
        json {
          source => "message"
          remove_field => ["message", "@timestamp"]
        }
        # Use the time recorded by nginx as the event timestamp
        date {
          match => ["time_local", "ISO8601"]
          target => "@timestamp"
        }
        # Split the request line into HTTP method and URL
        grok {
          match => { "request" => "%{WORD:method} (?<url>.* )" }
        }
        mutate {
          remove_field => ["host","event","input","request","offset","prospector","source","type","tags","beat"]
          rename => {
            "http_user_agent" => "agent"
            "upstream_response_time" => "response_time"
            "http_x_forwarded_for" => "x_forwarded_for"
          }
          split => {
            "x_forwarded_for" => ", "
            "response_time" => ", "
          }
        }
      }
    }

    ## The tag and the filetype field are defined in filebeat.yml
    output {
      if "app-log" in [tags] {   # write application logs to Elasticsearch
        elasticsearch {
          hosts => ["http://elasticsearch:9200"]
          index => "app-log-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "123456"   # must be the actual password of the elastic user
        }
        stdout { codec => rubydebug }
      }
      if [filetype] == "nginxlog" {   # write nginx logs to Elasticsearch
        elasticsearch {
          hosts => ["http://elasticsearch:9200"]
          index => "logstash-nginx-%{+YYYY.MM.dd}"
          user => "elastic"
          password => "123456"   # must be the actual password of the elastic user
        }
        stdout { codec => rubydebug }
      }
    }

4. Start the services
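
The compose file bind-mounts several host files and directories. With this short volume syntax, Docker creates a directory in place of a host file that does not exist, and Filebeat by default refuses a config file that is writable by group or others. A minimal pre-flight sketch, assuming the host paths from step 1; `prepare_elk_paths` and its base-directory argument are hypothetical, not part of the original setup.

```shell
# Create the mounted directories and check the mounted config files.
# Returns non-zero if any config file is missing, so the problem surfaces before "up -d".
prepare_elk_paths() {
  local base="${1:-/home/planetflix}" missing=0 f
  mkdir -p "$base/filebeat/logs" "$base/filebeat/data" "$base/nginx/logs"
  for f in \
    "$base/logstash/conf/logstash.yml" \
    "$base/logstash/conf/logstash.conf" \
    "$base/filebeat/filebeat.yml" \
    "$base/filebeat/modules.d/nginx.yml"
  do
    if [ -f "$f" ]; then
      chmod go-w "$f"                      # remove group/other write permission
    else
      echo "missing config file: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running `prepare_elk_paths` with no argument checks the `/home/planetflix` layout before the commands below are run. Depending on which user the container runs as, Filebeat may also complain about the owner of the config file; that case is not handled here.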

    docker-compose -f compose-elk.yml --compatibility up -d filebeat
    docker-compose -f compose-elk.yml --compatibility up -d logstash

5. Manage indices (an index plays the role of a database for the logs)
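
Before creating an index pattern, it can help to confirm that Logstash has actually created the indices named in its output block. A small sketch using the Elasticsearch `_cat/indices` API; it assumes port 9200 of the elasticsearch container is published on localhost, which this article does not show, and `list_log_indices` is a hypothetical helper.

```shell
# List the indices written by the two Logstash outputs.
# ES_URL and ES_USER are assumptions; with only a user name, curl prompts for the password.
list_log_indices() {
  local es_url="${ES_URL:-http://localhost:9200}"
  curl -sS -u "${ES_USER:-elastic}" "$es_url/_cat/indices/app-log-*,logstash-nginx-*?v"
}
```

If `list_log_indices` prints no rows, checking `docker logs logstash` and `docker logs filebeat` is a reasonable next step.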

Open the Kibana home page > Stack Management > Index Patterns > Create index pattern.

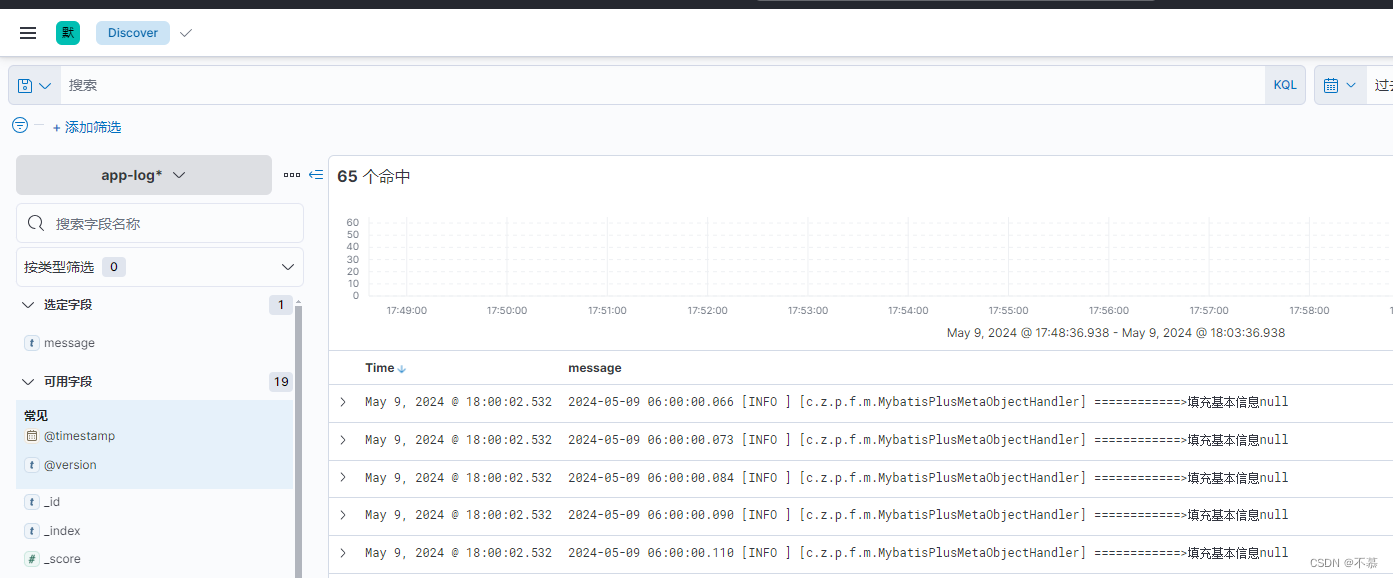
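
The same index pattern can also be created without the UI, through Kibana's saved objects API. This is a hedged sketch rather than the method used in this article: it assumes Kibana is reachable at http://localhost:5601 and that `@timestamp` is the time field; `create_index_pattern` is a hypothetical helper.

```shell
# Create a Kibana index pattern (saved object type "index-pattern") for the given title.
# KIBANA_URL and ES_USER are assumptions; with only a user name, curl prompts for the password.
create_index_pattern() {
  local title="$1"
  local kibana_url="${KIBANA_URL:-http://localhost:5601}"
  curl -sS -u "${ES_USER:-elastic}" -X POST "$kibana_url/api/saved_objects/index-pattern" \
    -H 'kbn-xsrf: true' \
    -H 'Content-Type: application/json' \
    -d "{\"attributes\":{\"title\":\"$title\",\"timeFieldName\":\"@timestamp\"}}"
}
```

For the two outputs configured above, the calls would be `create_index_pattern 'app-log-*'` and `create_index_pattern 'logstash-nginx-*'`.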

6. Return to Discover to view the log entries
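
If Discover shows nothing, querying Elasticsearch directly helps tell an empty index apart from a Kibana time-range or index-pattern problem. A minimal sketch using the URI search API, again assuming port 9200 is published on localhost; `latest_log_event` is a hypothetical helper.

```shell
# Fetch the most recent document from an index pattern (default: the application logs).
# ES_URL and ES_USER are assumptions; with only a user name, curl prompts for the password.
latest_log_event() {
  local index="${1:-app-log-*}"
  local es_url="${ES_URL:-http://localhost:9200}"
  curl -sS -u "${ES_USER:-elastic}" "$es_url/$index/_search?size=1&sort=@timestamp:desc&pretty"
}
```

`latest_log_event` checks the application logs, and `latest_log_event 'logstash-nginx-*'` checks the nginx index.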
