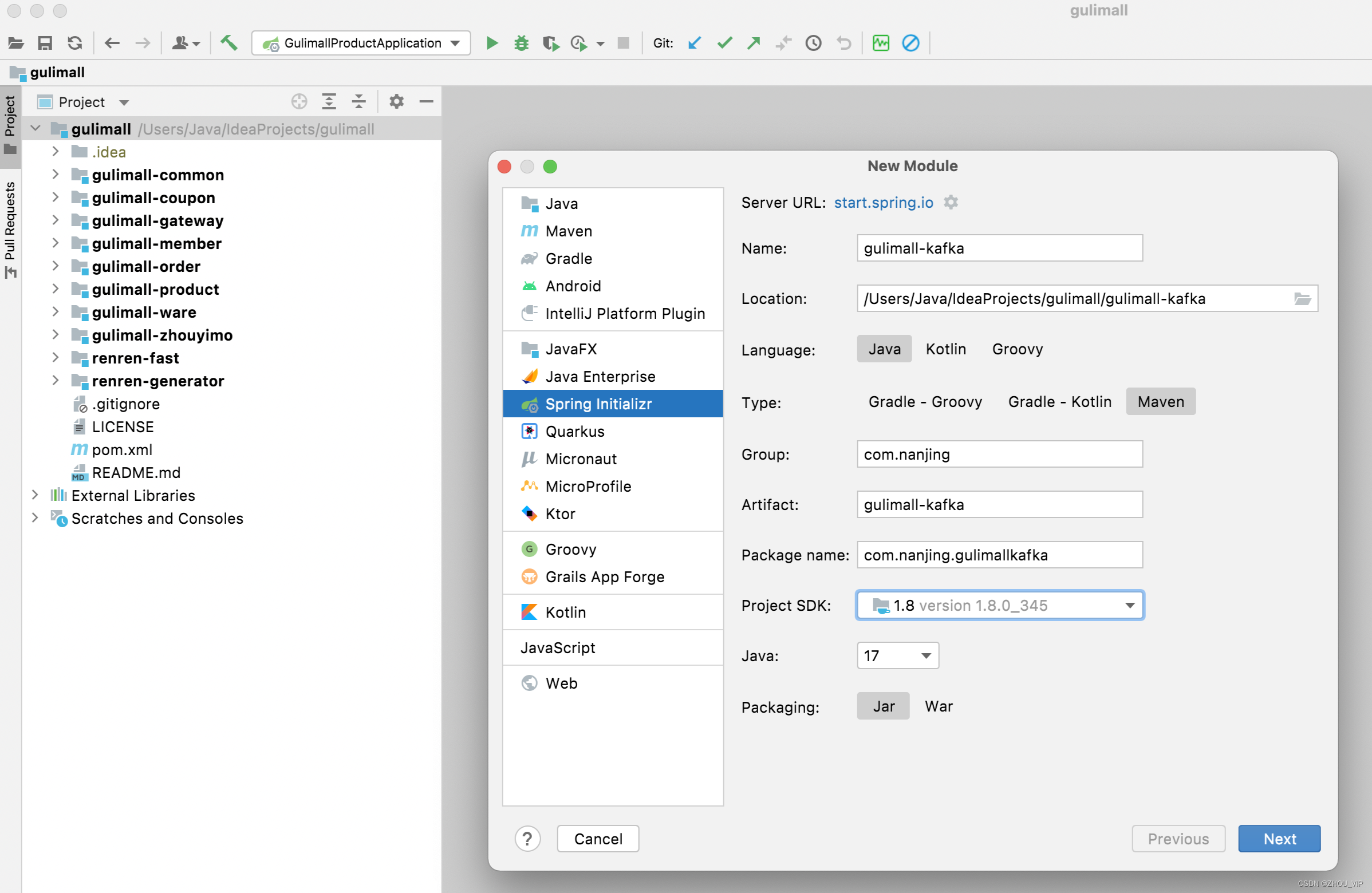

1. Create a Spring Boot project

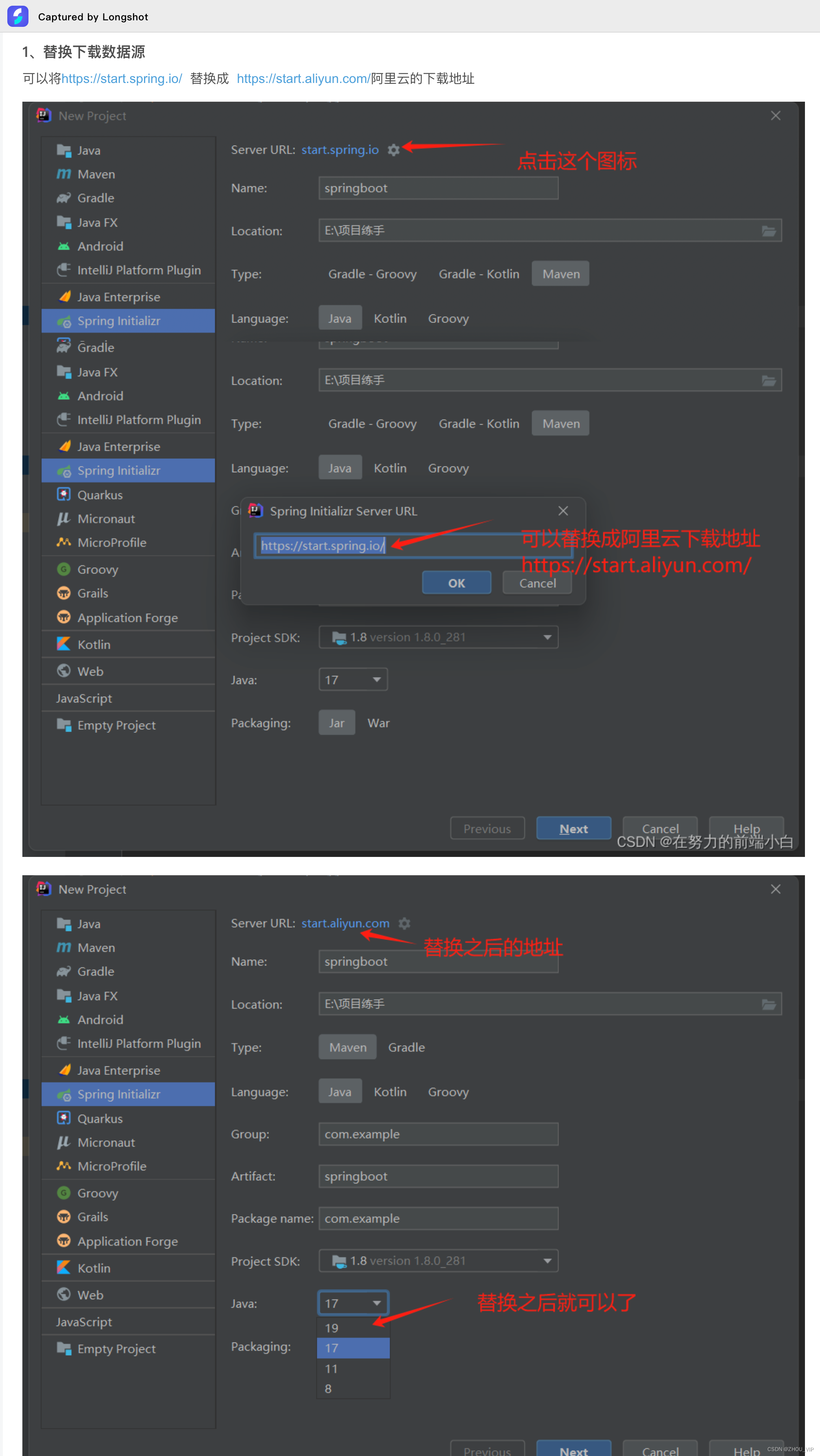

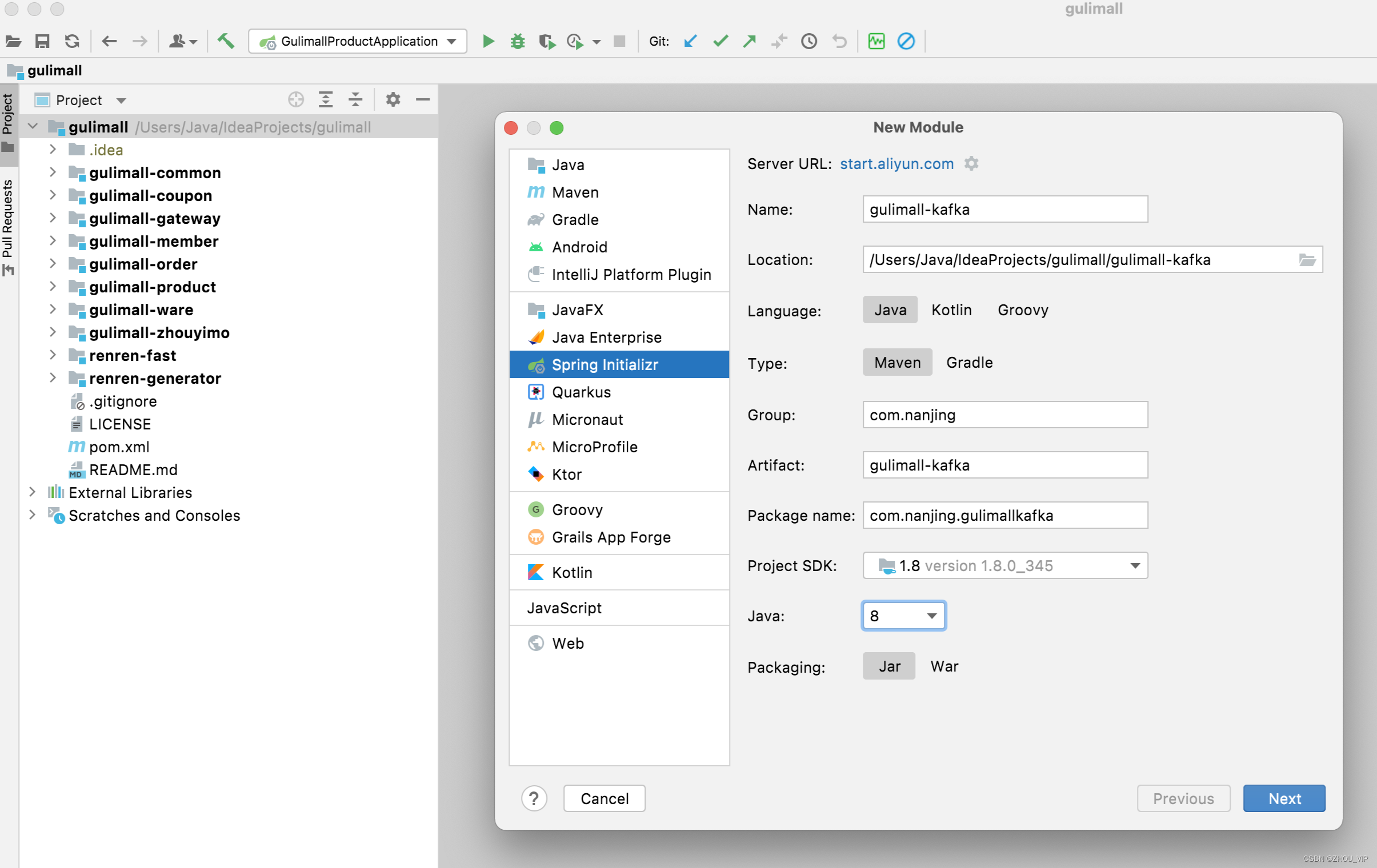

Solution:

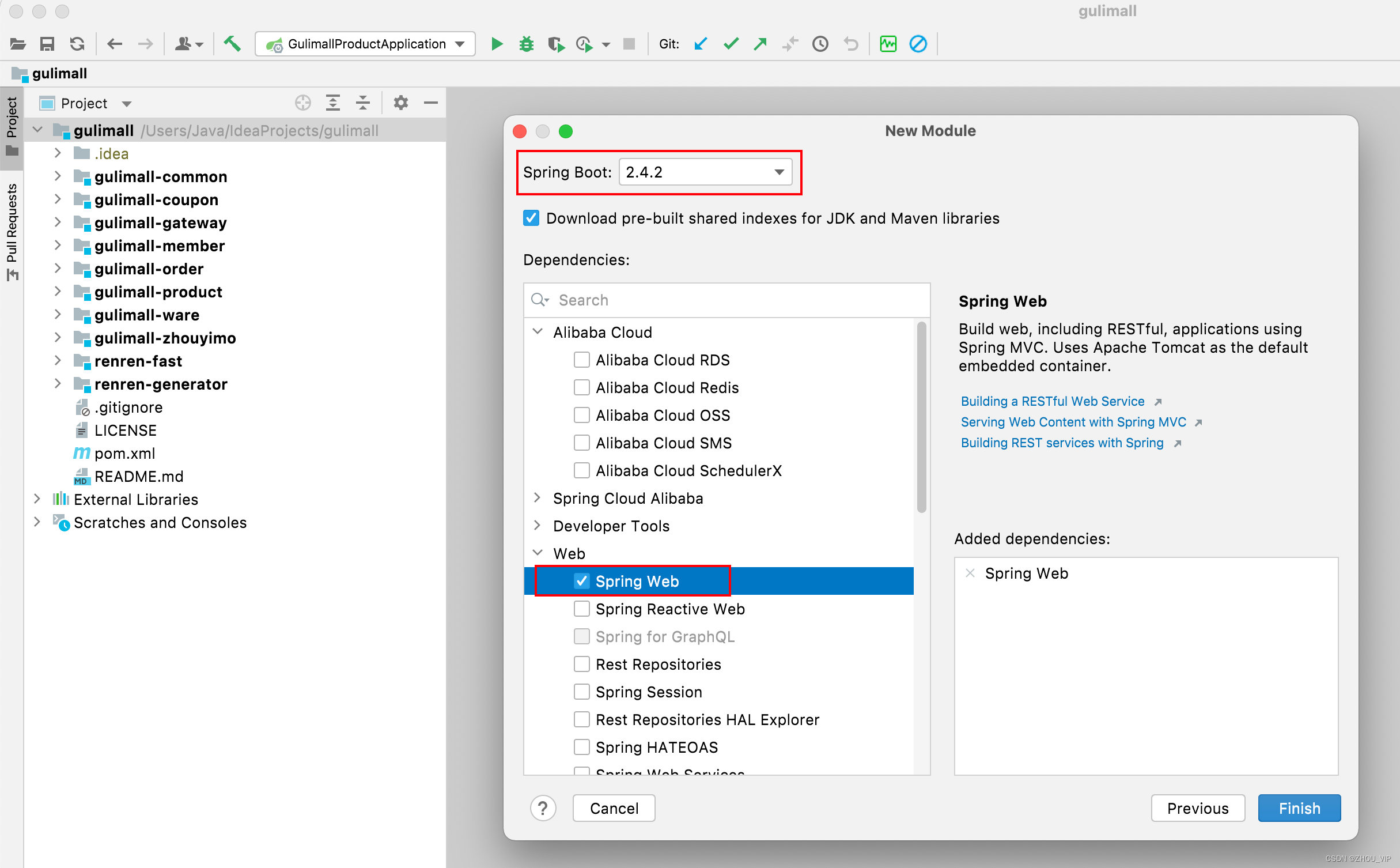

Check:

2. pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.6</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.nanjing</groupId>
    <artifactId>gulimall-kafka</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>gulimall-kafka</name>
    <description>gulimall-kafka</description>
    <properties>
        <java.version>1.8</java.version>
        <spring-cloud.version>2021.0.5</spring-cloud.version>
    </properties>
    <dependencies>
        <!-- JDBC/MyBatis/MySQL are not used by the Kafka code in this post; with them on the classpath
             and no spring.datasource.url configured, DataSource auto-configuration fails at startup -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-jdbc</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-thymeleaf</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.mybatis.spring.boot</groupId>
            <artifactId>mybatis-spring-boot-starter</artifactId>
            <version>2.2.2</version>
        </dependency>
        <!--<dependency>
            <groupId>com.mysql</groupId>
            <artifactId>mysql-connector-j</artifactId>
            <scope>runtime</scope>
        </dependency>-->
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <scope>runtime</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <!-- Version managed by Spring Boot 2.6.6 (spring-kafka 2.8.4); it already pulls in kafka-clients -->
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
        <!-- Overrides the Boot-managed kafka-clients version. 3.6.1 is newer than the version spring-kafka 2.8.x
             was built against, so compatibility is not guaranteed; remove this block to use the managed version -->
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
            <version>3.6.1</version>
        </dependency>
        <!-- Not used by the code in this post (the producer and consumer use hutool-json) -->
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.83</version>
        </dependency>
        <dependency>
            <groupId>cn.hutool</groupId>
            <artifactId>hutool-json</artifactId>
            <version>5.8.11</version>
        </dependency>
        <dependency>
            <groupId>cn.hutool</groupId>
            <artifactId>hutool-db</artifactId>
            <version>5.8.11</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${spring-cloud.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

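3. yml file (application.yml)

```yaml
server:
  port: 9999

spring:
  kafka:
    bootstrap-servers: localhost:9092
    producer:
      acks: all
      batch-size: 16384
      buffer-memory: 33554432
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      retries: 0
    consumer:
      group-id: test # consumer group
      # Offset reset policy: if the group already has a committed offset, earliest and latest behave
      # the same and consumption resumes from the committed position.
      # earliest: no committed offset -> start from the beginning of the partition
      # latest:   no committed offset -> start after the newest message (only new messages are read)
      auto-offset-reset: earliest
      enable-auto-commit: true # commit offsets automatically
      auto-commit-interval: 1s # auto-commit frequency; only applies when enable-auto-commit=true
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      max-poll-records: 2
      properties:
        # If the coordinator receives no heartbeat within this time, the consumer is removed
        # from the group and a rebalance is triggered
        session.timeout.ms: 120000
        # Maximum time allowed between two poll() calls, i.e. the time available to process one batch
        # (default 5 minutes). If exceeded, the consumer is considered failed, removed from the group,
        # and a rebalance is triggered
        max.poll.interval.ms: 300000
        # Maximum time the client waits for a response to a request. If no response arrives before
        # the timeout, the client resends the request if necessary, or fails it once retries are exhausted
        request.timeout.ms: 60000
        # Allow automatic topic creation on the broker when subscribing to or assigning a topic
        # (the broker must also allow it via auto.create.topics.enable).
        # Must be false when using brokers older than 0.11.0
        allow.auto.create.topics: true
        # Expected interval between heartbeats to the group coordinator; usually no more than
        # one third of session.timeout.ms
        heartbeat.interval.ms: 40000
        # Records returned per partition are limited to max.partition.fetch.bytes.
        # Since 0.10.1, if the first message in the first non-empty partition of a fetch is larger
        # than this limit, it is still returned so that the consumer can make progress
        #max.partition.fetch.bytes: 1048576 # 1 MB
    listener:
      # Only takes effect when enable-auto-commit is false; ignored when it is true
      # manual_immediate: commit immediately after Acknowledgment.acknowledge() is called
      #ack-mode: manual_immediate
      missing-topics-fatal: true # true: startup fails if any listened topic is missing; false: ignore it
      #type: single # single-record or batch consumption; batch works together with consumer.max-poll-records
      type: batch
      concurrency: 2 # number of consumer threads per listener container; threads beyond the partition count stay idle
    template:
      default-topic: "test"
```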
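The `concurrency` comment above says that threads beyond the number of partitions stay idle. The sketch below illustrates why with a simplified, single-topic simulation of range-style partition assignment (similar in spirit to Kafka's `RangeAssignor`, but not its actual code; `RangeAssignmentSketch` and `assign` are hypothetical names). Within one consumer group each partition is assigned to at most one consumer, so a thread that receives an empty list has nothing to poll. This matters here because a topic auto-created by the broker gets `num.partitions` partitions, which defaults to 1, so with `concurrency: 2` one of the two threads would sit idle.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical, simplified simulation of range-style assignment for a single topic.
 * It is not Kafka's RangeAssignor source; it only mirrors the idea of contiguous ranges.
 */
public class RangeAssignmentSketch {

    /** Returns, for each consumer index, the partition numbers that consumer would own. */
    public static List<List<Integer>> assign(int partitions, int consumers) {
        if (partitions < 0 || consumers <= 0) {
            throw new IllegalArgumentException("need partitions >= 0 and consumers > 0");
        }
        int perConsumer = partitions / consumers; // every consumer gets at least this many
        int withExtra = partitions % consumers;   // the first `withExtra` consumers get one more
        List<List<Integer>> result = new ArrayList<>();
        int next = 0;
        for (int i = 0; i < consumers; i++) {
            int count = perConsumer + (i < withExtra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                owned.add(next++);
            }
            result.add(owned); // an empty list means this consumer thread stays idle
        }
        return result;
    }

    public static void main(String[] args) {
        // concurrency: 2 against a topic with a single partition
        System.out.println(assign(1, 2)); // [[0], []]
        // concurrency: 2 against a topic with three partitions
        System.out.println(assign(3, 2)); // [[0, 1], [2]]
    }
}
```

With three partitions and two threads, the first thread owns two partitions and the second owns one, so both threads do work.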

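4. Create the configuration class: SpringBootKafkaConfig

```java
package com.nanjing.gulimallkafka.config;

// Constants for the topic and consumer group shared by the producer and the consumer
public class SpringBootKafkaConfig {

    public static final String TOPIC_TEST = "test";

    public static final String GROUP_ID = "test";
}
```

5. Create the producer controller: KafkaProducerController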

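```java
package com.nanjing.gulimallkafka.controller;

import cn.hutool.json.JSONUtil;
import com.nanjing.gulimallkafka.config.SpringBootKafkaConfig;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.*;

@RestController // implies @ResponseBody on every handler method
@RequestMapping("/kafka")
@Slf4j
public class KafkaProducerController {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @PostMapping(value = "/produce", produces = "application/json")
    public String produce(@RequestBody Object obj) {
        try {
            // Serialize the request body to a JSON string with hutool
            String obj2String = JSONUtil.toJsonStr(obj);
            // send() is asynchronous; broker-side failures are reported through the returned future
            kafkaTemplate.send(SpringBootKafkaConfig.TOPIC_TEST, obj2String).addCallback(
                    result -> log.info("Sent to partition {} at offset {}",
                            result.getRecordMetadata().partition(), result.getRecordMetadata().offset()),
                    ex -> log.error("Failed to send message to Kafka", ex));
            // "success" means the record was handed to the producer, not that the broker stored it
            return "success";
        } catch (Exception e) {
            // Log the error and report the failure instead of silently returning "success"
            log.error("Failed to hand the message to KafkaTemplate", e);
            return "fail";
        }
    }
}
```

6. Create the consumer: KafkaDataConsumer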

```java
package com.nanjing.gulimallkafka.component;

import cn.hutool.json.JSONObject;
import cn.hutool.json.JSONUtil;
import com.nanjing.gulimallkafka.config.SpringBootKafkaConfig;
import lombok.extern.slf4j.Slf4j;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.support.KafkaHeaders;
import org.springframework.messaging.handler.annotation.Header;
import org.springframework.stereotype.Component;

import java.util.List;

@Component
@Slf4j
public class KafkaDataConsumer {

    // listener.type=batch: each call receives the records of one poll (at most max-poll-records = 2)
    @KafkaListener(topics = SpringBootKafkaConfig.TOPIC_TEST, groupId = SpringBootKafkaConfig.GROUP_ID)
    public void topic_test(List<String> messages,
                           // in batch mode, header values are lists aligned index-by-index with `messages`
                           @Header(KafkaHeaders.RECEIVED_TOPIC) List<String> topics) {
        for (int i = 0; i < messages.size(); i++) {
            JSONObject entries = JSONUtil.parseObj(messages.get(i));
            log.info("{} consumed: topic: {}, message: {}",
                    SpringBootKafkaConfig.GROUP_ID, topics.get(i), entries.getStr("data"));
            //ack.acknowledge(); // only with ack-mode manual/manual_immediate and an Acknowledgment parameter
        }
    }
}
```

7. Start the application: it fails with an error

Solution:

8. Another error remains

```text
2024-05-09 23:25:55.743 WARN 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available.
2024-05-09 23:25:56.656 INFO 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Node -1 disconnected.
2024-05-09 23:25:56.656 WARN 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available.
2024-05-09 23:25:57.768 INFO 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Node -1 disconnected.
2024-05-09 23:25:57.768 WARN 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available.
2024-05-09 23:25:58.781 INFO 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Node -1 disconnected.
2024-05-09 23:25:58.781 WARN 5518 --- [| adminclient-1] org.apache.kafka.clients.NetworkClient : [AdminClient clientId=adminclient-1] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available.
2024-05-09 23:25:59.486 INFO 5518 --- [| adminclient-1] o.a.k.c.a.i.AdminMetadataManager : [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.TimeoutException: Timed out waiting for a node assignment. Call: fetchMetadata
2024-05-09 23:25:59.622 INFO 5518 --- [| adminclient-1] o.a.kafka.common.utils.AppInfoParser : App info kafka.admin.client for adminclient-1 unregistered
2024-05-09 23:25:59.623 INFO 5518 --- [| adminclient-1] o.a.k.c.a.i.AdminMetadataManager : [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.TimeoutException: The AdminClient thread has exited. Call: fetchMetadata
2024-05-09 23:25:59.623 INFO 5518 --- [| adminclient-1] o.a.k.clients.admin.KafkaAdminClient : [AdminClient clientId=adminclient-1] Timed out 1 remaining operation(s) during close.
2024-05-09 23:25:59.629 INFO 5518 --- [| adminclient-1] org.apache.kafka.common.metrics.Metrics : Metrics scheduler closed
2024-05-09 23:25:59.630 INFO 5518 --- [| adminclient-1] org.apache.kafka.common.metrics.Metrics : Closing reporter org.apache.kafka.common.metrics.JmxReporter
2024-05-09 23:25:59.630 INFO 5518 --- [| adminclient-1] org.apache.kafka.common.metrics.Metrics : Metrics reporters closed
2024-05-09 23:25:59.631 WARN 5518 --- [ main] ConfigServletWebServerApplicationContext : Exception encountered during context initialization - cancelling refresh attempt: org.springframework.context.ApplicationContextException: Failed to start bean 'org.springframework.kafka.config.internalKafkaListenerEndpointRegistry'; nested exception is java.lang.IllegalStateException: Topic(s) [test] is/are not present and missingTopicsFatal is true
2024-05-09 23:25:59.636 INFO 5518 --- [ main] o.apache.catalina.core.StandardService : Stopping service [Tomcat]
2024-05-09 23:25:59.652 INFO 5518 --- [ main] ConditionEvaluationReportLoggingListener :

Error starting ApplicationContext. To display the conditions report re-run your application with 'debug' enabled.
2024-05-09 23:25:59.671 ERROR 5518 --- [ main] o.s.boot.SpringApplication : Application run failed

org.springframework.context.ApplicationContextException: Failed to start bean 'org.springframework.kafka.config.internalKafkaListenerEndpointRegistry'; nested exception is java.lang.IllegalStateException: Topic(s) [test] is/are not present and missingTopicsFatal is true
    at org.springframework.context.support.DefaultLifecycleProcessor.doStart(DefaultLifecycleProcessor.java:181) ~[spring-context-5.3.18.jar:5.3.18]
    at org.springframework.context.support.DefaultLifecycleProcessor.access$200(DefaultLifecycleProcessor.java:54) ~[spring-context-5.3.18.jar:5.3.18]
    at org.springframework.context.support.DefaultLifecycleProcessor$LifecycleGroup.start(DefaultLifecycleProcessor.java:356) ~[spring-context-5.3.18.jar:5.3.18]
    at java.lang.Iterable.forEach(Iterable.java:75) ~[na:1.8.0_345]
    at org.springframework.context.support.DefaultLifecycleProcessor.startBeans(DefaultLifecycleProcessor.java:155) ~[spring-context-5.3.18.jar:5.3.18]
    at org.springframework.context.support.DefaultLifecycleProcessor.onRefresh(DefaultLifecycleProcessor.java:123) ~[spring-context-5.3.18.jar:5.3.18]
    at org.springframework.context.support.AbstractApplicationContext.finishRefresh(AbstractApplicationContext.java:935) ~[spring-context-5.3.18.jar:5.3.18]
    at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:586) ~[spring-context-5.3.18.jar:5.3.18]
    at org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext.refresh(ServletWebServerApplicationContext.java:145) ~[spring-boot-2.6.6.jar:2.6.6]
    at org.springframework.boot.SpringApplication.refresh(SpringApplication.java:740) [spring-boot-2.6.6.jar:2.6.6]
    at org.springframework.boot.SpringApplication.refreshContext(SpringApplication.java:415) [spring-boot-2.6.6.jar:2.6.6]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:303) [spring-boot-2.6.6.jar:2.6.6]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:1312) [spring-boot-2.6.6.jar:2.6.6]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:1301) [spring-boot-2.6.6.jar:2.6.6]
    at com.nanjing.gulimallkafka.GulimallKafkaApplication.main(GulimallKafkaApplication.java:11) [classes/:na]
Caused by: java.lang.IllegalStateException: Topic(s) [test] is/are not present and missingTopicsFatal is true
    at org.springframework.kafka.listener.AbstractMessageListenerContainer.checkTopics(AbstractMessageListenerContainer.java:509) ~[spring-kafka-2.8.4.jar:2.8.4]
    at org.springframework.kafka.listener.ConcurrentMessageListenerContainer.doStart(ConcurrentMessageListenerContainer.java:191) ~[spring-kafka-2.8.4.jar:2.8.4]
    at org.springframework.kafka.listener.AbstractMessageListenerContainer.start(AbstractMessageListenerContainer.java:466) ~[spring-kafka-2.8.4.jar:2.8.4]
    at org.springframework.kafka.config.KafkaListenerEndpointRegistry.startIfNecessary(KafkaListenerEndpointRegistry.java:331) ~[spring-kafka-2.8.4.jar:2.8.4]
    at org.springframework.kafka.config.KafkaListenerEndpointRegistry.start(KafkaListenerEndpointRegistry.java:276) ~[spring-kafka-2.8.4.jar:2.8.4]
    at org.springframework.context.support.DefaultLifecycleProcessor.doStart(DefaultLifecycleProcessor.java:178) ~[spring-context-5.3.18.jar:5.3.18]
    ... 14 common frames omitted

Disconnected from the target VM, address: '127.0.0.1:62089', transport: 'socket'

Process finished with exit code 1
```
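The repeated WARN lines say the AdminClient could not open a connection to `localhost/127.0.0.1:9092`. A quick way to check that outside Spring is a plain TCP connect; below is a minimal JDK-only sketch (`BrokerProbe` and `isReachable` are hypothetical names, not part of any Kafka or Spring API). A successful connect only shows that something is listening on the port, not that it is a healthy Kafka broker.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/**
 * Hypothetical helper: reports whether anything accepts TCP connections at host:port.
 * It does not speak the Kafka protocol, so "true" does not prove a working broker.
 */
public class BrokerProbe {

    public static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // connection refused, timed out, or host could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        // Same address as spring.kafka.bootstrap-servers in the yml file
        boolean up = isReachable("localhost", 9092, 2000);
        System.out.println("localhost:9092 reachable: " + up);
    }
}
```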

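I haven't installed Kafka yet, so no broker is listening on localhost:9092; I plan to install Kafka on my Mac later. The log follows from that: the AdminClient cannot connect to the broker, its metadata request times out, and because `missing-topics-fatal` is `true`, the listener container cannot confirm that topic `test` exists and aborts application startup.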
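Once a broker is running on localhost:9092 and topic `test` exists, the `/kafka/produce` endpoint from step 5 can be exercised. The consumer in step 6 reads the `data` field, so the payload should be a JSON object with that field. Below is a minimal JDK-only sketch, assuming the application runs locally on port 9999 as configured in the yml file in step 3 (`ProduceEndpointClient` and `postJson` are hypothetical names); any HTTP client such as curl or Postman works just as well.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

public class ProduceEndpointClient {

    /** POSTs a JSON body and returns "HTTP <status>: <response body>". */
    public static String postJson(String url, String json) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        try {
            conn.setRequestMethod("POST");
            conn.setDoOutput(true);
            conn.setConnectTimeout(3000);
            conn.setReadTimeout(10000);
            conn.setRequestProperty("Content-Type", "application/json; charset=UTF-8");
            try (OutputStream out = conn.getOutputStream()) {
                out.write(json.getBytes(StandardCharsets.UTF_8));
            }
            int status = conn.getResponseCode();
            // Error responses (4xx/5xx) carry their body on the error stream
            InputStream in = status < 400 ? conn.getInputStream() : conn.getErrorStream();
            return "HTTP " + status + ": " + readAll(in);
        } finally {
            conn.disconnect();
        }
    }

    private static String readAll(InputStream in) throws IOException {
        if (in == null) {
            return "";
        }
        try (InputStream stream = in) {
            ByteArrayOutputStream buf = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = stream.read(chunk)) != -1) {
                buf.write(chunk, 0, n);
            }
            return new String(buf.toByteArray(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        // The consumer logs entries.getStr("data"), so the payload carries a "data" field
        System.out.println(postJson("http://localhost:9999/kafka/produce", "{\"data\":\"hello kafka\"}"));
    }
}
```

If everything is wired up, the consumer should then log a line containing `hello kafka` for topic `test`.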
