This article is adapted from https://www.cnblogs.com/reblue520/p/12219116.html

Recommended JDK version: 13

| Server | Role | OS | ES version |
|---|---|---|---|
| 192.168.8.218 | node.master/node.data | CentOS 7.3 | ES 7.5 |
| 192.168.8.219 | node.data | CentOS 7.3 | ES 7.5 |
| 192.168.8.220 | node.data | CentOS 7.3 | ES 7.5 |

Basic server tuning

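On Linux the default limit on open file handles per process is 1024, which is far too small for a server process such as Elasticsearch (its bootstrap checks require at least 65535). Raise the limits by editing /etc/security/limits.conf and /etc/security/limits.d/20-nproc.conf.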

# Create the elk user (GID 100 already belongs to the "users" group on CentOS 7, so let the system assign free IDs)

groupadd elk

useradd -g elk elk

Kernel parameters

echo "fs.file-max = 1000000" >> /etc/sysctl.conf

echo "vm.max_map_count=262144" >> /etc/sysctl.conf

echo "vm.swappiness = 0" >> /etc/sysctl.conf

sysctl -p
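Appending with `>>` adds a duplicate line every time these commands are re-run. A minimal idempotent alternative, assuming GNU grep and sed (as shipped with CentOS 7); `set_kv` is a hypothetical helper, and the target file is a parameter so it can be tried on a scratch copy first.

```shell
# set_kv FILE KEY VALUE (hypothetical helper)
# Rewrites an existing "KEY=..." / "KEY = ..." line in FILE to "KEY = VALUE",
# or appends "KEY = VALUE" when the key is not present yet.
set_kv() {
  local file="$1" key="$2" value="$3" re_key
  touch "$file"
  # Escape the dots so "vm.max_map_count" is matched literally by the regex
  re_key=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -Eq "^[[:space:]]*${re_key}[[:space:]]*=" "$file"; then
    sed -i -E "s|^[[:space:]]*${re_key}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage (as root), then reload with sysctl -p:
# set_kv /etc/sysctl.conf fs.file-max 1000000
# set_kv /etc/sysctl.conf vm.max_map_count 262144
# set_kv /etc/sysctl.conf vm.swappiness 0
```

Running the three calls twice leaves exactly one line per key.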

echo "* soft nofile 655350" >> /etc/security/limits.conf

echo "* hard nofile 655350" >> /etc/security/limits.conf

# Comment out the default "* soft nproc 4096" line (its columns are padded with several spaces)

sed -i 's/^\*[[:space:]]\+soft[[:space:]]\+nproc[[:space:]]\+4096/#&/' /etc/security/limits.d/20-nproc.conf

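# Then add the following lines to /etc/security/limits.d/20-nproc.conf (needed for bootstrap.memory_lock: true); the new limits apply from the next login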

* soft memlock unlimited

* hard memlock unlimited
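Elasticsearch's bootstrap checks require at least 65535 file descriptors and at least 4096 threads, and with `bootstrap.memory_lock: true` the process must be allowed to lock its memory (unlimited memlock is the usual setting). A minimal sketch for checking the limits a fresh elk login actually receives; `meets_min` and `check_elk_limits` are hypothetical helpers, and `check_elk_limits` assumes bash, whose `ulimit` supports `-n`, `-u` and `-l`.

```shell
# meets_min VALUE MIN (hypothetical helper): succeeds if VALUE >= MIN.
# "unlimited" (as printed by ulimit) always satisfies the minimum.
meets_min() {
  local value="$1" min="$2"
  [ "$value" = "unlimited" ] && return 0
  # A non-numeric VALUE makes the test fail instead of passing silently
  [ "$value" -ge "$min" ] 2>/dev/null
}

# check_elk_limits (hypothetical): run it in a new login shell of elk
# (su - elk), because limits.conf is applied by PAM at login time.
check_elk_limits() {
  local ok=0
  meets_min "$(ulimit -n)" 65535 || { echo "nofile too low: $(ulimit -n)"; ok=1; }
  meets_min "$(ulimit -u)" 4096  || { echo "nproc too low: $(ulimit -u)"; ok=1; }
  [ "$(ulimit -l)" = "unlimited" ] || { echo "memlock not unlimited: $(ulimit -l)"; ok=1; }
  return "$ok"
}
```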

JDK 13 is recommended; download the package to /home/elk:

https://www.oracle.com/technetwork/java/javase/downloads/jdk13-downloads-5672538.html

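Configure the JDK environment variables on every node beforehand (for example in the elk user's ~/.bash_profile). Elasticsearch 7.x also ships with a bundled JDK, which it uses when JAVA_HOME is not set.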

export JAVA_HOME=/home/elk/jdk-13.0.2

export PATH=$JAVA_HOME/bin:$PATH

# No CLASSPATH is needed: JDK 9 and later no longer ship lib/dt.jar or lib/tools.jar
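To confirm on each node that JAVA_HOME points at the intended JDK, a small sketch that extracts the major version from the first line of `java -version` output (which Java prints on stderr). It assumes a POSIX shell with sed; `java_major` is a hypothetical helper.

```shell
# java_major VERSION_LINE (hypothetical helper): prints the Java major version
# parsed from the first line of `java -version`, e.g.
#   java version "13.0.2" 2020-01-14  -> 13
#   java version "1.8.0_241"          -> 8  (legacy 1.x numbering)
java_major() {
  local ver
  # Take the text between the first pair of double quotes
  ver=$(printf '%s\n' "$1" | sed -n 's/^[^"]*"\([^"]*\)".*/\1/p')
  case $ver in
    1.*) ver=${ver#1.} ;;   # "1.8.0_241" -> "8.0_241"
  esac
  # Keep only the leading number (cut at the first ".", "_" or "-")
  printf '%s\n' "${ver%%[._-]*}"
}

# Usage on each node:
# java_major "$("$JAVA_HOME/bin/java" -version 2>&1 | head -n 1)"
```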

Cluster configuration

Master node configuration (192.168.8.218)

cd /home/elk

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.5.0-linux-x86_64.tar.gz

# Extract

tar -xvf elasticsearch-7.5.0-linux-x86_64.tar.gz

Create the data, log, and backup directories

mkdir -p /home/elk/{data,logs,esback}

chown -Rf elk:elk /home/elk

[root@localhost ~]# su - elk

[elk@localhost elasticsearch-7.5.0]$ cd /home/elk/elasticsearch-7.5.0/

[elk@localhost elasticsearch-7.5.0]$ cat config/elasticsearch.yml

# ======================== Elasticsearch Configuration =========================

cluster.name: fengyu

node.name: fengyu218

path.data: /home/elk/data

path.logs: /home/elk/logs

path.repo: ["/home/elk/esback"]

bootstrap.memory_lock: true

network.host: 192.168.8.218

http.port: 9200

transport.tcp.port: 9300

node.master: true

node.data: true

discovery.seed_hosts: ["192.168.8.218:9300", "192.168.8.219:9300", "192.168.8.220:9300"]

cluster.initial_master_nodes: ["192.168.8.218"]

gateway.recover_after_nodes: 2

transport.tcp.compress: true

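# The settings below are meant to reduce the disk I/O wasted on reallocating shards when a node is briefly down or restarting

# Note: in 7.x the discovery.zen.* settings belong to the legacy Zen1 discovery and probably have little effect; delayed shard reallocation is controlled by index.unassigned.node_left.delayed_timeout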

discovery.zen.fd.ping_timeout: 300s

discovery.zen.fd.ping_retries: 8

discovery.zen.fd.ping_interval: 30s

discovery.zen.ping_timeout: 180s

# Start (runs in the foreground; add -d to run it as a daemon)

./bin/elasticsearch

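# Note: if Elasticsearch was ever started as root, give the directory back to elk (chown -R elk:elk /home/elk), otherwise elk cannot start it

# Once the ports are bound, the node is running normally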

[2020-04-13T19:46:41,471][INFO ][o.e.x.s.a.s.FileRolesStore] [fengyu218] parsed [0] roles from file [/home/elk/elasticsearch-7.5.0/config/roles.yml]

[2020-04-13T19:46:42,379][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [fengyu218] [controller/7071] [Main.cc@110] controller (64 bit): Version 7.5.0 (Build 17d1c724ca38a1) Copyright (c) 2019 Elasticsearch BV

[2020-04-13T19:46:42,977][DEBUG][o.e.a.ActionModule ] [fengyu218] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security

[2020-04-13T19:46:43,100][INFO ][o.e.d.DiscoveryModule ] [fengyu218] using discovery type [zen] and seed hosts providers [settings]

[2020-04-13T19:46:43,995][INFO ][o.e.n.Node ] [fengyu218] initialized

[2020-04-13T19:46:43,996][INFO ][o.e.n.Node ] [fengyu218] starting ...

[2020-04-13T19:46:44,108][INFO ][o.e.t.TransportService ] [fengyu218] publish_address {192.168.8.218:9300}, bound_addresses {192.168.8.218:9300}

[2020-04-13T19:46:44,270][INFO ][o.e.b.BootstrapChecks ] [fengyu218] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2020-04-13T19:46:44,273][INFO ][o.e.c.c.Coordinator ] [fengyu218] cluster UUID [upnpaUuXTDmqYoQGzXWGlw]

[2020-04-13T19:46:44,584][INFO ][o.e.c.s.MasterService ] [fengyu218] elected-as-master ([1] nodes joined)[{fengyu218}{GKJ2npj9Rc-wQUvCm8W_Ow}{b-sp6dmTR0apAdmpBtjrOg}{192.168.8.218}{192.168.8.218:9300}{dilm}{ml.machine_memory=3975491584, xpack.installed=true, ml.max_open_jobs=20} elect leader, _BECOME_MASTER_TASK_, _FINISH_ELECTION_], term: 2, version: 22, delta: master node changed {previous [], current [{fengyu218}{GKJ2npj9Rc-wQUvCm8W_Ow}{b-sp6dmTR0apAdmpBtjrOg}{192.168.8.218}{192.168.8.218:9300}{dilm}{ml.machine_memory=3975491584, xpack.installed=true, ml.max_open_jobs=20}]}

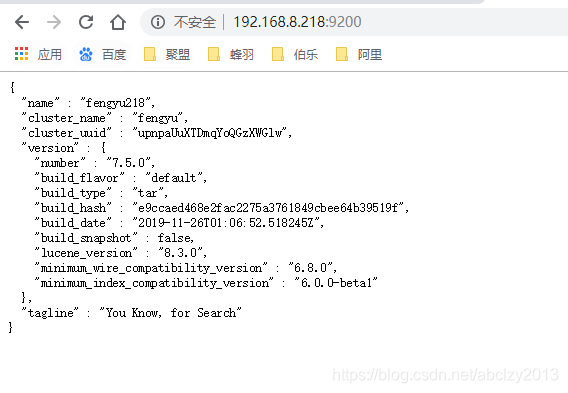

Open http://192.168.8.218:9200/ in a browser to check the node

Data node configuration (192.168.8.219)

cd /home/elk

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.5.0-linux-x86_64.tar.gz

# Extract

tar -xvf elasticsearch-7.5.0-linux-x86_64.tar.gz

Create the data, log, and backup directories

mkdir -p /home/elk/{data,logs,esback}

chown -Rf elk:elk /home/elk

# Edit elasticsearch.yml

[elk@localhost elasticsearch-7.5.0]$ cd /home/elk/elasticsearch-7.5.0

[elk@localhost elasticsearch-7.5.0]$ cat config/elasticsearch.yml

cluster.name: fengyu

node.name: fengyu219

path.data: /home/elk/data

path.logs: /home/elk/logs

path.repo: ["/home/elk/esback"]

bootstrap.memory_lock: true

network.host: 192.168.8.219

http.port: 9200

transport.tcp.port: 9300

node.master: false

node.data: true

discovery.seed_hosts: ["192.168.8.218:9300", "192.168.8.219:9300", "192.168.8.220:9300"]

cluster.initial_master_nodes: ["192.168.8.218"]

gateway.recover_after_nodes: 2

transport.tcp.compress: true

# The settings below are meant to reduce the disk I/O wasted on reallocating shards when a node is briefly down or restarting

discovery.zen.fd.ping_timeout: 300s

discovery.zen.fd.ping_retries: 8

discovery.zen.fd.ping_interval: 30s

discovery.zen.ping_timeout: 180s

Start

[root@localhost ~]# su - elk

Last login: Mon Apr 13 19:48:11 CST 2020 on pts/1

[elk@localhost ~]$ cd elasticsearch-7.5.0/

[elk@localhost elasticsearch-7.5.0]$ ./bin/elasticsearch

[2020-04-13T19:51:24,689][INFO ][o.e.t.TransportService ] [fengyu219] publish_address {192.168.8.219:9300}, bound_addresses {192.168.8.219:9300}

[2020-04-13T19:51:24,853][INFO ][o.e.b.BootstrapChecks ] [fengyu219] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2020-04-13T19:51:24,856][INFO ][o.e.c.c.Coordinator ] [fengyu219] cluster UUID [upnpaUuXTDmqYoQGzXWGlw]

[2020-04-13T19:51:26,065][INFO ][o.e.c.s.ClusterApplierService] [fengyu219] master node changed {previous [], current [{fengyu218}{GKJ2npj9Rc-wQUvCm8W_Ow}{b-sp6dmTR0apAdmpBtjrOg}{192.168.8.218}{192.168.8.218:9300}{dilm}{ml.machine_memory=3975491584, ml.max_open_jobs=20, xpack.installed=true}]}, added {{fengyu218}{GKJ2npj9Rc-wQUvCm8W_Ow}{b-sp6dmTR0apAdmpBtjrOg}{192.168.8.218}{192.168.8.218:9300}{dilm}{ml.machine_memory=3975491584, ml.max_open_jobs=20, xpack.installed=true}}, term: 2, version: 23, reason: ApplyCommitRequest{term=2, version=23, sourceNode={fengyu218}{GKJ2npj9Rc-wQUvCm8W_Ow}{b-sp6dmTR0apAdmpBtjrOg}{192.168.8.218}{192.168.8.218:9300}{dilm}{ml.machine_memory=3975491584, ml.max_open_jobs=20, xpack.installed=true}}

[2020-04-13T19:51:26,123][INFO ][o.e.h.AbstractHttpServerTransport] [fengyu219] publish_address {192.168.8.219:9200}, bound_addresses {192.168.8.219:9200}

[2020-04-13T19:51:26,124][INFO ][o.e.n.Node ] [fengyu219] started

[2020-04-13T19:51:26,370][INFO ][o.e.l.LicenseService ] [fengyu219] license [7e965786-92b3-47c3-b8fc-b349c1c48d53] mode [basic] - valid

[2020-04-13T19:51:26,371][INFO ][o.e.x.s.s.SecurityStatusChangeListener] [fengyu219] Active license is now [BASIC]; Security is enabled

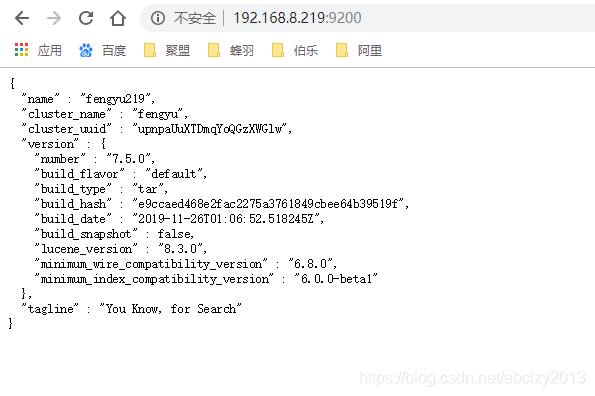

Verification:

# Start 192.168.8.220 the same way; its elasticsearch.yml:

[elk@localhost elasticsearch-7.5.0]$ cat config/elasticsearch.yml

cluster.name: fengyu

node.name: fengyu220

path.data: /home/elk/data

path.logs: /home/elk/logs

path.repo: ["/home/elk/esback"]

bootstrap.memory_lock: true

network.host: 192.168.8.220

http.port: 9200

transport.tcp.port: 9300

node.master: false

node.data: true

discovery.seed_hosts: ["192.168.8.218:9300", "192.168.8.219:9300", "192.168.8.220:9300"]

cluster.initial_master_nodes: ["192.168.8.218"]

gateway.recover_after_nodes: 2

transport.tcp.compress: true

# The settings below are meant to reduce the disk I/O wasted on reallocating shards when a node is briefly down or restarting

discovery.zen.fd.ping_timeout: 300s

discovery.zen.fd.ping_retries: 8

discovery.zen.fd.ping_interval: 30s

discovery.zen.ping_timeout: 180s

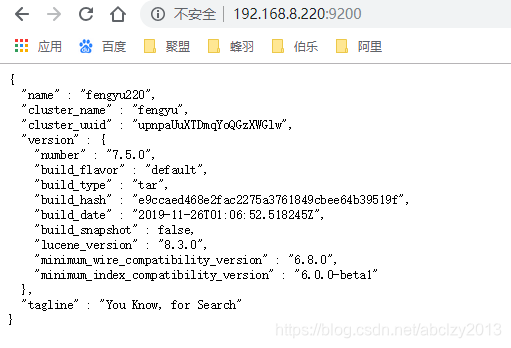
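The three elasticsearch.yml files above differ only in `node.name`, `network.host` and `node.master`. A minimal sketch that prints the per-node file from one template, assuming a POSIX shell and assuming node names follow the pattern used here ("fengyu" plus the last octet of the IP); `gen_es_yml` is a hypothetical helper, and the settings are copied unchanged from the files above.

```shell
# gen_es_yml NODE_IP IS_MASTER (hypothetical helper)
# Prints the elasticsearch.yml used in this article for one node.
gen_es_yml() {
  local ip="$1" is_master="$2"
  local octet="${ip##*.}"   # 192.168.8.219 -> 219
  cat <<EOF
cluster.name: fengyu
node.name: fengyu${octet}
path.data: /home/elk/data
path.logs: /home/elk/logs
path.repo: ["/home/elk/esback"]
bootstrap.memory_lock: true
network.host: ${ip}
http.port: 9200
transport.tcp.port: 9300
node.master: ${is_master}
node.data: true
discovery.seed_hosts: ["192.168.8.218:9300", "192.168.8.219:9300", "192.168.8.220:9300"]
cluster.initial_master_nodes: ["192.168.8.218"]
gateway.recover_after_nodes: 2
transport.tcp.compress: true
discovery.zen.fd.ping_timeout: 300s
discovery.zen.fd.ping_retries: 8
discovery.zen.fd.ping_interval: 30s
discovery.zen.ping_timeout: 180s
EOF
}

# Usage (as elk, e.g. on 192.168.8.219):
# gen_es_yml 192.168.8.219 false > /home/elk/elasticsearch-7.5.0/config/elasticsearch.yml
```

Generating the files this way keeps the shared settings identical across nodes; the default comments in the shipped elasticsearch.yml are lost when it is overwritten.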

Verification after startup

Check the cluster health; the cluster is healthy when status is green

[elk@localhost elasticsearch-7.5.0]$ curl -u elastic:jumeng123! 'http://192.168.8.218:9200/_cluster/health?pretty'

{

"cluster_name" : "fengyu",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 1,

"active_shards" : 2,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
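For scripts, the "status" field can be extracted from this response without installing jq. A minimal sketch using only sed; `health_status` is a hypothetical helper and `ES_PASS` in the usage comment is a hypothetical environment variable holding the elastic password.

```shell
# health_status (hypothetical helper): reads the JSON returned by
# GET /_cluster/health from stdin (compact or ?pretty) and prints the
# value of "status" (green / yellow / red).
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage: poll until the cluster turns green
# until [ "$(curl -s -u "elastic:$ES_PASS" 'http://192.168.8.218:9200/_cluster/health' | health_status)" = green ]; do
#   sleep 5
# done
```

Alternatively, the cluster health API can block server-side with its `wait_for_status` and `timeout` parameters, e.g. `_cluster/health?wait_for_status=green&timeout=60s`.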

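Enable password authentication

Append the following to the end of elasticsearch.yml on every node:

# Configure passwords only after the cluster itself is working correctly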

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: /home/elk/elasticsearch-7.5.0/config/elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: /home/elk/elasticsearch-7.5.0/config/elastic-certificates.p12

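After adding these lines, do not restart yet; generate the certificates first

# The certificates must be generated as the elk user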

[elk@localhost elasticsearch-7.5.0]$ ./bin/elasticsearch-certutil ca

Please enter the desired output file [elastic-stack-ca.p12]: # press Enter

Enter password for elastic-stack-ca.p12 : # press Enter

[elk@localhost elasticsearch-7.5.0]$ bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12

# Press Enter at every prompt

[elk@localhost elasticsearch-7.5.0]$ cp elastic-certificates.p12 config/
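To restart, the running node has to be stopped first. A pid file gives a more targeted way to do that than matching processes by name. A minimal sketch, assuming the node was started in the background with Elasticsearch's `-d` (daemonize) and `-p` (pid file) options, e.g. `./bin/elasticsearch -d -p /home/elk/es.pid`; `stop_es` and the pid file path are hypothetical.

```shell
# stop_es PIDFILE [TIMEOUT_SECONDS] (hypothetical helper)
# Sends SIGTERM so Elasticsearch can shut down cleanly, waits up to
# TIMEOUT seconds (default 60), and only then falls back to SIGKILL.
stop_es() {
  local pidfile="$1" timeout="${2:-60}" pid i=0
  if [ ! -s "$pidfile" ]; then
    echo "no pid file: $pidfile" >&2
    return 1
  fi
  pid=$(cat "$pidfile")
  # If SIGTERM cannot be delivered, the process is already gone
  kill -TERM "$pid" 2>/dev/null || return 0
  while [ "$i" -lt "$timeout" ]; do
    kill -0 "$pid" 2>/dev/null || return 0   # process has exited
    sleep 1
    i=$((i + 1))
  done
  echo "still running after ${timeout}s, sending SIGKILL" >&2
  kill -KILL "$pid" 2>/dev/null
  return 0
}

# Usage:
# stop_es /home/elk/es.pid && ./bin/elasticsearch -d -p /home/elk/es.pid
```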

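# Restart Elasticsearch: stop the running node (or press Ctrl+C in the terminal where it runs in the foreground)

[elk@localhost elasticsearch-7.5.0]$ pkill -f org.elasticsearch.bootstrap.Elasticsearch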

[elk@localhost elasticsearch-7.5.0]$ ./bin/elasticsearch

# If the node fails to start now, the certificate was not generated correctly

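# Copy the certificate to the other nodes in turn

[elk@localhost elasticsearch-7.5.0]$ for i in 192.168.8.219 192.168.8.220; do scp /home/elk/elasticsearch-7.5.0/config/elastic-certificates.p12 $i:/home/elk/elasticsearch-7.5.0/config; done

# Restart the other nodes one by one

# Check the cluster health (the elastic password used here is the one set in the password initialization step below)

[elk@localhost elasticsearch-7.5.0]$ curl -u elastic:jumeng123! 'http://192.168.8.218:9200/_cluster/health?pretty'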

{

"cluster_name" : "fengyu",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 1,

"active_shards" : 2,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

# Initialize the passwords; enter your own password for each user

[elk@localhost elasticsearch-7.5.0]$ bin/elasticsearch-setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]:

Reenter password for [elastic]:

Enter password for [apm_system]:

Reenter password for [apm_system]:

Enter password for [kibana]:

Reenter password for [kibana]:

Enter password for [logstash_system]:

Reenter password for [logstash_system]:

Enter password for [beats_system]:

Reenter password for [beats_system]:

Enter password for [remote_monitoring_user]:

Reenter password for [remote_monitoring_user]:

Changed password for user [apm_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]

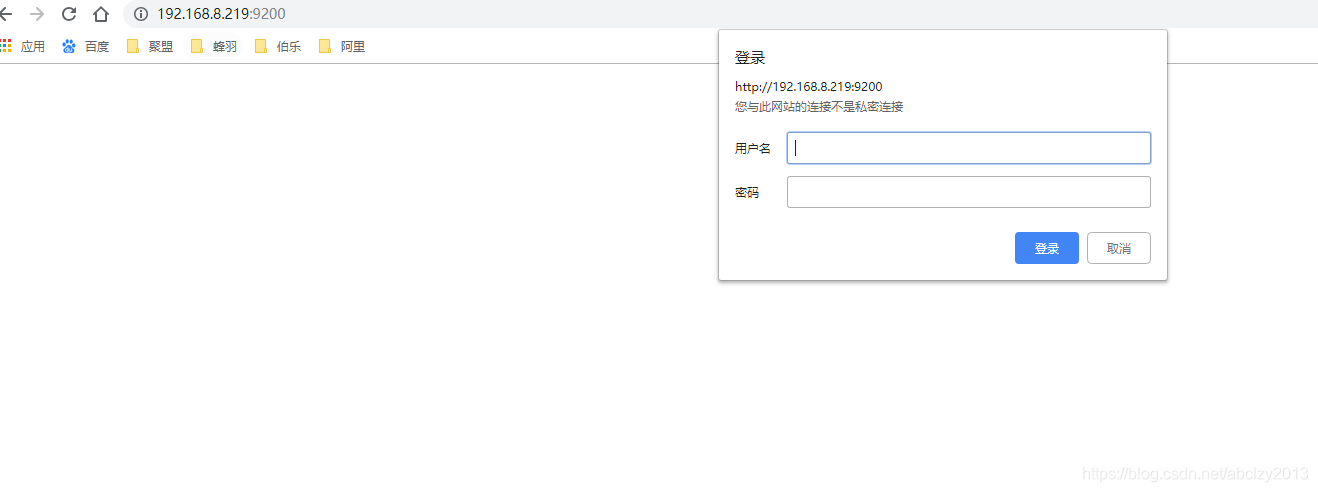

After this, Elasticsearch requires a password for every access
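Passing `-u elastic:password` on the curl command line leaves the password in shell history and visible in the process list. One alternative, sketched under the assumption that curl's `--netrc-file` option suits this setup: keep the credentials in a netrc file readable only by its owner. `write_netrc` and `~/.es-netrc` are hypothetical names, and the password must not contain whitespace.

```shell
# write_netrc FILE HOST USER (hypothetical helper)
# Reads the password from stdin and writes a netrc entry with mode 600,
# so curl can authenticate without the password on its command line.
write_netrc() {
  local file="$1" host="$2" user="$3" pass
  IFS= read -r pass
  # Create the file with owner-only permissions from the start
  ( umask 077 && printf 'machine %s login %s password %s\n' "$host" "$user" "$pass" > "$file" )
  chmod 600 "$file"   # also fixes the mode if the file already existed
}

# Usage (bash):
# read -rs -p 'elastic password: ' p; echo
# printf '%s\n' "$p" | write_netrc ~/.es-netrc 192.168.8.218 elastic; unset p
# curl --netrc-file ~/.es-netrc 'http://192.168.8.218:9200/_cluster/health?pretty'
```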
