| Node name | IP address | Role |
| --- | --- | --- |
| master | 192.168.44.128 | kubernetes-master etcd flannel |
| node01 | 192.168.44.129 | kubernetes-node etcd docker flannel `*rhsm*` |
| node02 | 192.168.44.130 | kubernetes-node etcd docker flannel `*rhsm*` |

1. Base environment preparation (all nodes)

- Set the hostname and add name resolution entries to /etc/hosts

[root@master ~]# hostnamectl set-hostname master

[root@master ~]# cat <<EOF >>/etc/hosts

> 192.168.44.128 master

> 192.168.44.129 node01

> 192.168.44.130 node02

> EOF
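Running the heredoc above a second time appends duplicate lines to /etc/hosts. The following is a minimal sketch of an idempotent variant; the function name `add_host_entry` is hypothetical, and the demo writes to a scratch file rather than the real /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a hostname
# on a non-comment line (hypothetical helper, not part of any package).
add_host_entry() {
  hosts_file="$1"; host_ip="$2"; host_name="$3"
  if ! awk -v n="$host_name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
  fi
}

# Demo against a scratch file; point it at /etc/hosts on a real node.
demo_hosts="$(mktemp)"
add_host_entry "$demo_hosts" 192.168.44.128 master
add_host_entry "$demo_hosts" 192.168.44.129 node01
add_host_entry "$demo_hosts" 192.168.44.130 node02
add_host_entry "$demo_hosts" 192.168.44.128 master   # second call is a no-op
cat "$demo_hosts"
```

Because the check matches whole fields, a name such as node01 is not confused with a longer name that merely contains it.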

- Disable the firewall, swap, and SELinux

[root@master ~]# systemctl stop firewalld && systemctl disable firewalld

[root@master ~]# swapoff -a

[root@master ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0
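Note that `swapoff -a` only disables swap until the next reboot. As a minimal sketch, assuming swap is declared in /etc/fstab with `swap` as the filesystem type, the entries can be commented out as shown below. The function name `disable_swap_entries` is hypothetical, and the demo runs on a scratch copy rather than the real file.

```shell
# disable_swap_entries FSTAB
# Comment out every active line in FSTAB whose third field is "swap".
disable_swap_entries() {
  fstab_path="$1"
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab_path" > "$fstab_path.new" &&
    cat "$fstab_path.new" > "$fstab_path" &&
    rm -f "$fstab_path.new"
}

# Demo on a scratch file with illustrative entries.
demo_fstab="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"
disable_swap_entries "$demo_fstab"
cat "$demo_fstab"
```

On a real node the argument would be /etc/fstab; keeping a backup copy first is advisable.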

- Synchronize the clocks

[root@master ~]# yum -y install ntp ntpdate

[root@master ~]# ntpdate 0.asia.pool.ntp.org

18 May 10:21:55 ntpdate[7781]: step time server 111.230.189.174 offset 57144.053711 sec

2. Install the packages

- master node

[root@master ~]# yum install kubernetes-master etcd flannel -y

- Worker nodes

[root@node02 ~]# yum install kubernetes-node etcd docker flannel *rhsm* -y

[root@node01 ~]# yum install kubernetes-node etcd docker flannel *rhsm* -y

3. etcd cluster deployment

- Edit the configuration file

1) master node

[root@master ~]# cat /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.44.128:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.44.128:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_NAME="etcd-1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.44.128:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.44.128:2379"

ETCD_INITIAL_CLUSTER="etcd-1=http://192.168.44.128:2380,etcd-2=http://192.168.44.129:2380,etcd-3=http://192.168.44.130:2380"

2) Worker nodes

[root@node01 ~]# cat /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.44.129:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.44.129:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_NAME="etcd-2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.44.129:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.44.129:2379"

ETCD_INITIAL_CLUSTER="etcd-1=http://192.168.44.128:2380,etcd-2=http://192.168.44.129:2380,etcd-3=http://192.168.44.130:2380"

[root@node02 ~]# cat /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.44.130:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.44.130:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_NAME="etcd-3"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.44.130:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.44.130:2379"

ETCD_INITIAL_CLUSTER="etcd-1=http://192.168.44.128:2380,etcd-2=http://192.168.44.129:2380,etcd-3=http://192.168.44.130:2380"
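The three files above differ only in the member name and IP address. As a minimal sketch, the same content can be rendered from those two values; the function name `render_etcd_conf` is hypothetical, and the output is printed rather than written to /etc/etcd/etcd.conf.

```shell
# Shared member list, identical on every node (taken from the files above).
ETCD_CLUSTER="etcd-1=http://192.168.44.128:2380,etcd-2=http://192.168.44.129:2380,etcd-3=http://192.168.44.130:2380"

# render_etcd_conf NAME IP
# Print the etcd.conf content for one cluster member.
render_etcd_conf() {
  member_name="$1"; member_ip="$2"
  cat <<EOF
ETCD_DATA_DIR="/data/etcd"
ETCD_LISTEN_PEER_URLS="http://${member_ip}:2380"
ETCD_LISTEN_CLIENT_URLS="http://${member_ip}:2379,http://127.0.0.1:2379"
ETCD_MAX_SNAPSHOTS="5"
ETCD_NAME="${member_name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${member_ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${member_ip}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
EOF
}

# Example: print the configuration for node01.
render_etcd_conf etcd-2 192.168.44.129
```

The value of ETCD_NAME must match one of the names in ETCD_INITIAL_CLUSTER, and the peer URL listed for that name must be the node's own address.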

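- Create the data directory (on every etcd node)

[root@master ~]# mkdir -p /data/etcd && chmod 757 -R /data/etcd

- Start the service (on every etcd node)

[root@master ~]# systemctl start etcd.service && systemctl enable etcd.service

4. Master node deployment

- Deploy the API server

1) Edit the configuration file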

[root@master ~]# cat /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.44.130:2379,http://192.168.44.128:2379,http://192.168.44.129:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission_control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

KUBE_API_ARGS=""

2) Start the service

[root@master ~]# systemctl start kube-apiserver && systemctl enable kube-apiserver

3) Verify

[root@master ~]# etcdctl cluster-health

member 17e9229061f63b14 is healthy: got healthy result from http://192.168.44.128:2379

member a798c224ffb9efcb is healthy: got healthy result from http://192.168.44.129:2379

member c262847557f194ac is healthy: got healthy result from http://192.168.44.130:2379

cluster is healthy
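For scripted checks, the output above can be parsed instead of read by eye. The following is a minimal sketch that assumes the output format shown here; the function name `check_etcd_health` is hypothetical, and the demo feeds it the sample output rather than calling etcdctl.

```shell
# check_etcd_health EXPECTED
# Read `etcdctl cluster-health` output on stdin and succeed only when
# EXPECTED members are healthy and the cluster summary line is present.
check_etcd_health() {
  expected_members="$1"
  health_output="$(cat)"
  healthy_members="$(printf '%s\n' "$health_output" | grep -c '^member .* is healthy')"
  if [ "$healthy_members" -eq "$expected_members" ] &&
     printf '%s\n' "$health_output" | grep -q '^cluster is healthy'; then
    echo "ok: $healthy_members/$expected_members members healthy"
  else
    echo "FAIL: $healthy_members/$expected_members members healthy" >&2
    return 1
  fi
}

# Demo with the sample output from this article.
# On a real node: etcdctl cluster-health | check_etcd_health 3
check_etcd_health 3 <<'EOF'
member 17e9229061f63b14 is healthy: got healthy result from http://192.168.44.128:2379
member a798c224ffb9efcb is healthy: got healthy result from http://192.168.44.129:2379
member c262847557f194ac is healthy: got healthy result from http://192.168.44.130:2379
cluster is healthy
EOF
```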

- Deploy kube-controller-manager and kube-scheduler

1) Edit the configuration file

[root@master ~]# cat /etc/kubernetes/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://192.168.44.128:8080"

2) Start the services

[root@master ~]# systemctl start kube-controller-manager && systemctl enable kube-controller-manager && systemctl start kube-scheduler && systemctl enable kube-scheduler

3) Verify

[root@master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

5. Worker node deployment (node01 and node02)

- Deploy the kubelet

1) Edit the configuration file

[root@node01 ~]# cat /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

# An IP address or any other string works; on node02, set this to that node's own value

KUBELET_HOSTNAME="--hostname-override=192.168.44.129"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://192.168.44.128:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

2) Start the service

[root@node01 ~]# systemctl start kubelet && systemctl enable kubelet

- Deploy kube-proxy

1) Edit the configuration file

[root@node01 ~]# cat /etc/kubernetes/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://192.168.44.128:8080"

2) Start the service

[root@node01 ~]# systemctl start kube-proxy && systemctl enable kube-proxy

- Deploy flanneld (all nodes)

1) Edit the configuration file

[root@node01 ~]# cat /etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://192.168.44.130:2379,http://192.168.44.128:2379,http://192.168.44.129:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass

#FLANNEL_OPTIONS=""

2) Write the network configuration to etcd (master node)

[root@master ~]# etcdctl mk /atomic.io/network/config '{"Network":"172.17.0.0/16"}'

[root@master ~]# etcdctl get /atomic.io/network/config

{"Network":"172.17.0.0/16"}
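`etcdctl mk` reports an error when the key already exists, so repeating this step on a configured cluster fails. The following is a minimal sketch of a re-runnable variant that uses only the `get` and `mk` subcommands shown above; the function name `ensure_flannel_config` is hypothetical.

```shell
# ensure_flannel_config KEY VALUE
# Create KEY with VALUE only if it does not exist yet; otherwise print
# the value that is already stored and leave it untouched.
ensure_flannel_config() {
  config_key="$1"; config_value="$2"
  if current_value="$(etcdctl get "$config_key" 2>/dev/null)"; then
    echo "exists: $current_value"
  else
    etcdctl mk "$config_key" "$config_value"
  fi
}

# Usage on the master node (not executed here):
#   ensure_flannel_config /atomic.io/network/config '{"Network":"172.17.0.0/16"}'
```

The key must sit under the prefix configured as FLANNEL_ETCD_PREFIX in /etc/sysconfig/flanneld, otherwise flanneld will not find it.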

3) Start the service (all nodes)

[root@master ~]# systemctl start flanneld && systemctl enable flanneld

4) Verify

Each of the three nodes has been assigned a different subnet:

[root@master ~]# etcdctl ls /atomic.io/network/subnets

/atomic.io/network/subnets/172.17.42.0-24

/atomic.io/network/subnets/172.17.63.0-24

/atomic.io/network/subnets/172.17.1.0-24
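The subnet keys encode each lease as `address-prefixlength`. The following is a minimal sketch that converts the keys to CIDR notation and counts the distinct subnets, assuming the key layout shown above; both function names are hypothetical, and the demo uses the sample keys instead of calling etcdctl.

```shell
# subnet_key_to_cidr KEY
# Turn /atomic.io/network/subnets/172.17.42.0-24 into 172.17.42.0/24.
subnet_key_to_cidr() {
  lease="${1##*/}"                       # drop the etcd directory prefix
  printf '%s/%s\n' "${lease%-*}" "${lease##*-}"
}

# count_distinct_subnets
# Read subnet keys on stdin and print how many distinct CIDRs they contain.
count_distinct_subnets() {
  while IFS= read -r subnet_key; do
    [ -n "$subnet_key" ] && subnet_key_to_cidr "$subnet_key"
  done | sort -u | wc -l | tr -d ' '
}

# Demo with the keys listed in this article.
# On a real node: etcdctl ls /atomic.io/network/subnets | count_distinct_subnets
count_distinct_subnets <<'EOF'
/atomic.io/network/subnets/172.17.42.0-24
/atomic.io/network/subnets/172.17.63.0-24
/atomic.io/network/subnets/172.17.1.0-24
EOF
```

With three nodes running flanneld, a count below three would suggest that one node has not obtained a lease.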

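5) Test network connectivity

If the three nodes cannot reach each other, add the following firewall rule (worker nodes):

[root@node01 ~]# iptables -P FORWARD ACCEPT

Restart the docker service so that its bridge uses the subnet that flannel assigned to the node (worker nodes):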

[root@node01 ~]# systemctl restart docker
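The restart matters because docker only picks up its bridge address at startup. As a minimal sketch, assuming flanneld records its lease in an environment file with `FLANNEL_SUBNET` and `FLANNEL_MTU` variables (commonly /run/flannel/subnet.env, although the path may differ on your system), the matching docker options can be derived as follows. The function name `docker_opts_from_flannel` and the demo values are illustrative only.

```shell
# docker_opts_from_flannel ENV_FILE
# Print the docker daemon options that correspond to the flannel lease
# recorded in ENV_FILE. Runs in a subshell so the sourced variables do
# not leak into the caller's environment.
docker_opts_from_flannel() (
  . "$1" || exit 1
  printf '%s\n' "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
)

# Demo with a scratch file holding illustrative values.
demo_env="$(mktemp)"
printf '%s\n' 'FLANNEL_SUBNET=172.17.42.1/24' 'FLANNEL_MTU=1472' > "$demo_env"
docker_opts_from_flannel "$demo_env"
```

After the restart, the address on the docker0 interface should fall inside the subnet leased to that node.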

6) Check the status of the cluster nodes

[root@master ~]# kubectl get nodes

NAME STATUS AGE

192.168.44.129 Ready 18h

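192.168.44.130 Ready 18h

6. Dashboard UI deployment

Pulling the required images directly can fail, so download them in advance.

They are available from the links below, or can be located with docker search and fetched with docker pull.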

https://download.csdn.net/download/jianlecn/12280554

https://download.csdn.net/download/qq_35669934/11061422

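- Import the images (node01 and node02)

[root@node01 ~]# docker load < kubernetes-dashboard-amd64.tgz

# The tag below must match the --pod-infra-container-image value configured for the kubelet; alternatively, change that setting instead

[root@node01 ~]# docker tag f0b8a9a54136 registry.access.redhat.com/rhel7/pod-infrastructure

- Deploy

1) Deploy the dashboard with a Deployment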

[root@master yaml]# cat dashboard-controller_deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubernetes-dashboard
        image: docker.io/lizhenliang/kubernetes-dashboard-amd64
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://192.168.44.128:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

2) Deploy the Service

[root@master yaml]# cat dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

3) Apply the manifests

[root@master yaml]# kubectl apply -f dashboard-controller_deployment.yaml

[root@master yaml]# kubectl apply -f dashboard-service.yaml

4) View and access

[root@master yaml]# kubectl get pods -o wide -n kube-system

NAME READY STATUS RESTARTS AGE IP NODE

kubernetes-dashboard-1466209592-f0tcp 1/1 Running 0 18h 172.17.42.2 192.168.44.129

Enter the following URL in a browser:

http://192.168.44.128:8080/ui

7、服务部署

-

eg: nginx

-

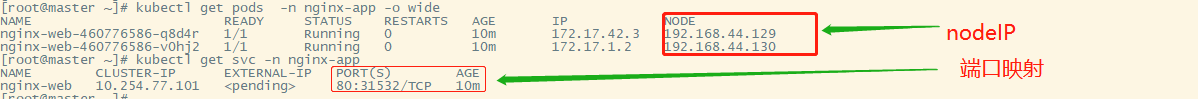

查看service 及node端口进行访问
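The nginx manifests in the screenshot are not available as text. As a minimal sketch only, and not the author's original files, the script below writes an nginx Deployment and a NodePort Service using the same extensions/v1beta1 API as the dashboard above. The resource names, the `app: nginx` label, the replica count, the image, and the output directory are all assumptions.

```shell
# Write two illustrative manifests into a local directory.
out_dir="${OUT_DIR:-./nginx-example}"
mkdir -p "$out_dir"

cat > "$out_dir/nginx-deployment.yaml" <<'EOF'
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF

cat > "$out_dir/nginx-service.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
EOF

echo "manifests written to $out_dir"
# On the master node (not executed here):
#   kubectl apply -f "$out_dir/nginx-deployment.yaml"
#   kubectl apply -f "$out_dir/nginx-service.yaml"
#   kubectl get svc nginx
```

Since no nodePort is specified, the cluster assigns one; it appears in the `kubectl get svc` output and is reachable on the IP address of either worker node.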
