1. What is reverse mapping

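Forward mapping: when a user process requests memory, the kernel does not allocate physical memory immediately. It first reserves a range of virtual address space for the process. When the process accesses that range, a page fault is raised. The fault handler allocates a physical page and records the virtual-to-physical mapping in the process's page table. From then on, the allocated physical memory can be found through the page table.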

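Reverse mapping: this goes the opposite way. Given an allocated physical page, we want to find every user process (more precisely, every VMA) that maps it. This is mainly needed for page reclaim. When memory runs low, reclaiming a page means finding every process that maps it and updating their page table entries, so that none of them can still access the page after it is freed. The simplest way to find those processes is to walk every process's page tables and check each entry. That is also by far the slowest approach, which is why the reverse mapping mechanism exists.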
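To make the cost of the naive approach concrete, here is a minimal userspace sketch in C. It assumes a toy model in which each process has a flat array of PTEs that store a page frame number, with 0 meaning unmapped. `struct toy_process`, `NR_PTES` and `naive_find_mappers()` are hypothetical names, not kernel APIs. Every PTE of every process has to be read, so the cost grows with (number of processes) × (page table size).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy model, not kernel code. */
#define NR_PTES 8 /* PTEs per toy page table */

struct toy_process {
    int pid;
    unsigned long pte[NR_PTES]; /* PFN mapped at each virtual page; 0 = unmapped */
};

/*
 * Naive reverse lookup: scan every PTE of every process and record the pid
 * of each process that maps @pfn. Returns how many pids were stored in @out
 * (at most @out_len).
 */
static size_t naive_find_mappers(const struct toy_process *procs, size_t nr_procs,
                                 unsigned long pfn, int *out, size_t out_len)
{
    size_t found = 0;

    for (size_t p = 0; p < nr_procs; p++)
        for (size_t i = 0; i < NR_PTES; i++)
            if (procs[p].pte[i] == pfn && found < out_len)
                out[found++] = procs[p].pid;
    return found;
}
```

Reverse mapping turns this around. It starts from the page and visits only the VMAs that can possibly map it.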

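This article covers only reverse mapping for anonymous pages. Reverse mapping for file-backed pages will be covered in a later article.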

2. Data structures for anonymous page reverse mapping

2.1 struct page

```c
struct page {
	...
	union {
		struct {	/* Page cache and anonymous pages */
			...
			/* See page-flags.h for PAGE_MAPPING_FLAGS */
			struct address_space *mapping;
			pgoff_t index;		/* Our offset within mapping. */
			...
		};
		...
	};
	...
} _struct_page_alignment;
```

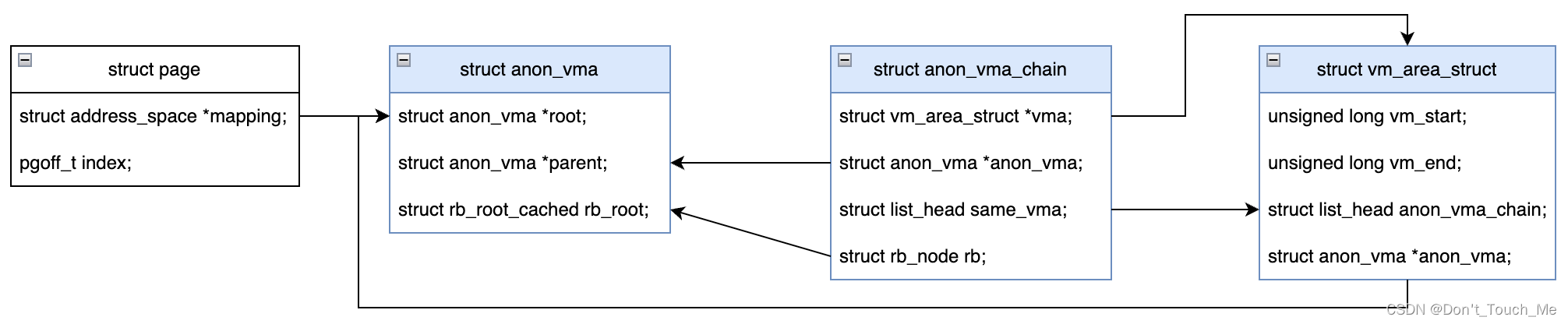

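The struct page of an anonymous page has a mapping pointer. For an anonymous page it does not point to an address_space. Instead, it points to an anon_vma, with the low bit PAGE_MAPPING_ANON set to mark the page as anonymous. Walking all the anon_vma_chain entries in that anon_vma's interval tree (anon_vma->rb_root) yields every VMA that may map the page, and those VMAs tell us which processes map it.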
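The chain page → anon_vma → anon_vma_chain → vma can be pictured with a heavily simplified userspace model in C. This is a sketch under assumptions, not kernel code:

- `struct toy_page`, `struct toy_anon_vma`, `struct toy_avc`, `struct toy_vma` and `toy_page_vmas()` are hypothetical.
- The tagged `page->mapping` is replaced by a plain pointer.
- The anon_vma's interval tree is replaced by a singly linked list.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily simplified stand-ins for the kernel structures. */
struct toy_vma { unsigned long vm_start, vm_end; };

struct toy_avc {
    struct toy_vma *vma;  /* the VMA this link belongs to */
    struct toy_avc *next; /* next avc on the same anon_vma (kernel: interval tree) */
};

struct toy_anon_vma { struct toy_avc *head; };

/* Kernel: a tagged page->mapping; here a plain pointer. */
struct toy_page { struct toy_anon_vma *anon_vma; };

/* Collect every VMA reachable via page -> anon_vma -> avc -> vma. */
static size_t toy_page_vmas(const struct toy_page *page,
                            struct toy_vma **out, size_t out_len)
{
    size_t n = 0;

    if (!page->anon_vma) /* page has no reverse mapping yet */
        return 0;
    for (struct toy_avc *avc = page->anon_vma->head; avc && n < out_len; avc = avc->next)
        out[n++] = avc->vma;
    return n;
}
```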

2.2 struct anon_vma

```c
/*
 * The anon_vma heads a list of private "related" vmas, to scan if
 * an anonymous page pointing to this anon_vma needs to be unmapped:
 * the vmas on the list will be related by forking, or by splitting.
 *
 * Since vmas come and go as they are split and merged (particularly
 * in mprotect), the mapping field of an anonymous page cannot point
 * directly to a vma: instead it points to an anon_vma, on whose list
 * the related vmas can be easily linked or unlinked.
 *
 * After unlinking the last vma on the list, we must garbage collect
 * the anon_vma object itself: we're guaranteed no page can be
 * pointing to this anon_vma once its vma list is empty.
 */
struct anon_vma {
	struct anon_vma *root;		/* Root of this anon_vma tree */
	...
	struct anon_vma *parent;	/* Parent of this anon_vma */
	/* Interval tree of private "related" vmas */
	struct rb_root_cached rb_root;
};
```

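anon_vma is the key data structure of reverse mapping. An anonymous page's mapping points to it. The information it holds then leads to every user process that maps the page.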

2.3 struct anon_vma_chain

```c
/*
 * The copy-on-write semantics of fork mean that an anon_vma
 * can become associated with multiple processes. Furthermore,
 * each child process will have its own anon_vma, where new
 * pages for that process are instantiated.
 *
 * This structure allows us to find the anon_vmas associated
 * with a VMA, or the VMAs associated with an anon_vma.
 * The "same_vma" list contains the anon_vma_chains linking
 * all the anon_vmas associated with this VMA.
 * The "rb" field indexes on an interval tree the anon_vma_chains
 * which link all the VMAs associated with this anon_vma.
 */
struct anon_vma_chain {
	struct vm_area_struct *vma;
	struct anon_vma *anon_vma;
	struct list_head same_vma;	/* locked by mmap_lock & page_table_lock */
	struct rb_node rb;		/* locked by anon_vma->rwsem */
	unsigned long rb_subtree_last;
#ifdef CONFIG_DEBUG_VM_RB
	unsigned long cached_vma_start, cached_vma_last;
#endif
};
```

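anon_vma_chain (avc) is the bridge between an anon_vma and a vma. A bridge is needed because the relationship is many-to-many. As the comment above explains, after fork an anon_vma can be associated with VMAs in several processes, and each child VMA is linked both to its own anon_vma and to those of its ancestors. Giving every link its own object lets a VMA sit on several anon_vmas at once, and lets an rmap walk visit only the VMAs related to a page. The main goal of this finer-grained organization is to reduce lock contention.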
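Below is a minimal sketch of the many-to-many link. It assumes fixed-size arrays in place of the kernel's `same_vma` list and interval tree, and all `toy_*` names are hypothetical. Each link object is recorded on both sides, which is the essence of what `anon_vma_chain_link()` does.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy model of the VMA <-> anon_vma many-to-many link. */
#define MAX_LINKS 8

struct toy_vma;
struct toy_anon_vma;

struct toy_avc {                   /* one link = one anon_vma_chain */
    struct toy_vma *vma;
    struct toy_anon_vma *anon_vma;
};

struct toy_vma {
    struct toy_avc *chain[MAX_LINKS]; /* kernel: vma->anon_vma_chain list */
    size_t nr;
};

struct toy_anon_vma {
    struct toy_avc *vmas[MAX_LINKS];  /* kernel: anon_vma->rb_root interval tree */
    size_t nr;
};

/* Like anon_vma_chain_link(): fill in the avc and record it on both sides. */
static void toy_link(struct toy_vma *vma, struct toy_avc *avc, struct toy_anon_vma *av)
{
    assert(vma->nr < MAX_LINKS && av->nr < MAX_LINKS);
    avc->vma = vma;
    avc->anon_vma = av;
    vma->chain[vma->nr++] = avc;
    av->vmas[av->nr++] = avc;
}
```

In the test scenario, the child VMA ends up on two anon_vmas (the parent's and its own), and the parent's anon_vma lists two VMAs. A single pointer in each structure could not express that.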

2.4 struct vm_area_struct

```c
/*
 * This struct describes a virtual memory area. There is one of these
 * per VM-area/task. A VM area is any part of the process virtual memory
 * space that has a special rule for the page-fault handlers (ie a shared
 * library, the executable area etc).
 */
struct vm_area_struct {
	...
	/*
	 * A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma
	 * list, after a COW of one of the file pages. A MAP_SHARED vma
	 * can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack
	 * or brk vma (with NULL file) can only be in an anon_vma list.
	 */
	struct list_head anon_vma_chain; /* Serialized by mmap_lock &
					  * page_table_lock */
	struct anon_vma *anon_vma;	/* Serialized by page_table_lock */
	...
} __randomize_layout;
```

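vm_area_struct describes one range of a process's virtual address space. Its anon_vma pointer points to the anon_vma associated with this VMA, which is where the VMA's newly faulted anonymous pages are recorded. Its anon_vma_chain list holds all the avc objects that link this VMA to anon_vmas.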

3. Setting up the reverse mapping (when a physical page is newly allocated)


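When a process accesses a virtual address that is not yet backed by physical memory, a page fault is raised. The fault handler does three things: it allocates a physical page, records the mapping in the process page table, and sets up the reverse mapping for the newly allocated page.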

```
handle_mm_fault()
-> __handle_mm_fault()
--> handle_pte_fault()
---> do_anonymous_page()
```

```c
/*
 * We enter with non-exclusive mmap_lock (to exclude vma changes,
 * but allow concurrent faults), and pte mapped but not yet locked.
 * We return with mmap_lock still held, but pte unmapped and unlocked.
 */
static vm_fault_t do_anonymous_page(struct vm_fault *vmf)
{
	struct vm_area_struct *vma = vmf->vma;
	struct page *page;
	vm_fault_t ret = 0;
	pte_t entry;

	/* File mapping without ->vm_ops ? */
	if (vma->vm_flags & VM_SHARED)
		return VM_FAULT_SIGBUS;

	/*
	 * Use pte_alloc() instead of pte_alloc_map(). We can't run
	 * pte_offset_map() on pmds where a huge pmd might be created
	 * from a different thread.
	 *
	 * pte_alloc_map() is safe to use under mmap_write_lock(mm) or when
	 * parallel threads are excluded by other means.
	 *
	 * Here we only have mmap_read_lock(mm).
	 */
	if (pte_alloc(vma->vm_mm, vmf->pmd))
		return VM_FAULT_OOM;

	/* See comment in handle_pte_fault() */
	if (unlikely(pmd_trans_unstable(vmf->pmd)))
		return 0;

	/* Use the zero-page for reads */
	if (!(vmf->flags & FAULT_FLAG_WRITE) &&
			!mm_forbids_zeropage(vma->vm_mm)) {
		entry = pte_mkspecial(pfn_pte(my_zero_pfn(vmf->address),
						vma->vm_page_prot));
		vmf->pte = pte_offset_map_lock(vma->vm_mm, vmf->pmd,
				vmf->address, &vmf->ptl);
		if (!pte_none(*vmf->pte)) {
			update_mmu_tlb(vma, vmf->address, vmf->pte);
			goto unlock;
		}
		ret = check_stable_address_space(vma->vm_mm);
		if (ret)
			goto unlock;
		/* Deliver the page fault to userland, check inside PT lock */
		if (userfaultfd_missing(vma)) {
			pte_unmap_unlock(vmf->pte, vmf->ptl);
			return handle_userfault(vmf, VM_UFFD_MISSING);
		}
		goto setpte;
	}

	// Attach an anon_vma to this vma (create one if needed)
	/* Allocate our own private page. */
	if (unlikely(anon_vma_prepare(vma)))
		goto oom;
	page = alloc_zeroed_user_highpage_movable(vma, vmf->address);
	if (!page)
		goto oom;

	if (mem_cgroup_charge(page_folio(page), vma->vm_mm, GFP_KERNEL))
		goto oom_free_page;
	cgroup_throttle_swaprate(page, GFP_KERNEL);

	/*
	 * The memory barrier inside __SetPageUptodate makes sure that
	 * preceding stores to the page contents become visible before
	 * the set_pte_at() write.
	 */
	__SetPageUptodate(page);

	entry = mk_pte(page, vma->vm_page_prot);
	entry = pte_sw_mkyoung(entry);
	if (vma->vm_flags & VM_WRITE)
		entry = pte_mkwrite(pte_mkdirty(entry));

	vmf->pte = pte_offset_map_lock(vma->vm_mm, vmf->pmd, vmf->address,
			&vmf->ptl);
	if (!pte_none(*vmf->pte)) {
		update_mmu_tlb(vma, vmf->address, vmf->pte);
		goto release;
	}

	ret = check_stable_address_space(vma->vm_mm);
	if (ret)
		goto release;

	/* Deliver the page fault to userland, check inside PT lock */
	if (userfaultfd_missing(vma)) {
		pte_unmap_unlock(vmf->pte, vmf->ptl);
		put_page(page);
		return handle_userfault(vmf, VM_UFFD_MISSING);
	}

	inc_mm_counter_fast(vma->vm_mm, MM_ANONPAGES);
	// Set up the reverse mapping for the new page
	page_add_new_anon_rmap(page, vma, vmf->address);
	lru_cache_add_inactive_or_unevictable(page, vma);
setpte:
	set_pte_at(vma->vm_mm, vmf->address, vmf->pte, entry);

	/* No need to invalidate - it was non-present before */
	update_mmu_cache(vma, vmf->address, vmf->pte);
unlock:
	pte_unmap_unlock(vmf->pte, vmf->ptl);
	return ret;
release:
	put_page(page);
	goto unlock;
oom_free_page:
	put_page(page);
oom:
	return VM_FAULT_OOM;
}
```

Here we only need to focus on two functions. anon_vma_prepare(vma) attaches an anon_vma to the VMA, and page_add_new_anon_rmap(page, vma, vmf->address) sets up the reverse mapping for the page.

3.1 anon_vma_prepare()

```c
static inline int anon_vma_prepare(struct vm_area_struct *vma)
{
	// If the vma already points to an anon_vma, return; no new anon_vma is needed
	if (likely(vma->anon_vma))
		return 0;

	return __anon_vma_prepare(vma); // otherwise create one
}
```

```c
/**
 * __anon_vma_prepare - attach an anon_vma to a memory region
 * @vma: the memory region in question
 *
 * This makes sure the memory mapping described by 'vma' has
 * an 'anon_vma' attached to it, so that we can associate the
 * anonymous pages mapped into it with that anon_vma.
 *
 * The common case will be that we already have one, which
 * is handled inline by anon_vma_prepare(). But if
 * not we either need to find an adjacent mapping that we
 * can re-use the anon_vma from (very common when the only
 * reason for splitting a vma has been mprotect()), or we
 * allocate a new one.
 *
 * Anon-vma allocations are very subtle, because we may have
 * optimistically looked up an anon_vma in folio_lock_anon_vma_read()
 * and that may actually touch the rwsem even in the newly
 * allocated vma (it depends on RCU to make sure that the
 * anon_vma isn't actually destroyed).
 *
 * As a result, we need to do proper anon_vma locking even
 * for the new allocation. At the same time, we do not want
 * to do any locking for the common case of already having
 * an anon_vma.
 *
 * This must be called with the mmap_lock held for reading.
 */
int __anon_vma_prepare(struct vm_area_struct *vma)
{
	struct mm_struct *mm = vma->vm_mm;
	struct anon_vma *anon_vma, *allocated;
	struct anon_vma_chain *avc;

	might_sleep();

	avc = anon_vma_chain_alloc(GFP_KERNEL); // first allocate an avc to link the vma and the anon_vma
	if (!avc)
		goto out_enomem;

	// try to reuse the anon_vma of an adjacent vma
	anon_vma = find_mergeable_anon_vma(vma);
	allocated = NULL;
	if (!anon_vma) { // nothing reusable, so allocate a new one
		anon_vma = anon_vma_alloc();
		if (unlikely(!anon_vma))
			goto out_enomem_free_avc;
		anon_vma->num_children++; /* self-parent link for new root */
		allocated = anon_vma;
	}

	anon_vma_lock_write(anon_vma);
	/* page_table_lock to protect against threads */
	spin_lock(&mm->page_table_lock);
	if (likely(!vma->anon_vma)) { // set up the vma's reverse mapping information
		vma->anon_vma = anon_vma; // point the vma at the anon_vma
		anon_vma_chain_link(vma, avc, anon_vma); // link avc, anon_vma and vma together
		anon_vma->num_active_vmas++;
		allocated = NULL;
		avc = NULL;
	}
	spin_unlock(&mm->page_table_lock);
	anon_vma_unlock_write(anon_vma);

	if (unlikely(allocated))
		put_anon_vma(allocated);
	if (unlikely(avc))
		anon_vma_chain_free(avc);

	return 0;

 out_enomem_free_avc:
	anon_vma_chain_free(avc);
 out_enomem:
	return -ENOMEM;
}
```

```c
// This is where the linking is actually done
static void anon_vma_chain_link(struct vm_area_struct *vma,
				struct anon_vma_chain *avc,
				struct anon_vma *anon_vma)
{
	avc->vma = vma;			// avc->vma points to the process's vma
	avc->anon_vma = anon_vma;	// avc->anon_vma points to the anon_vma
	// Add the avc to vma->anon_vma_chain, so all anon_vmas associated
	// with this vma can later be found through that list
	list_add(&avc->same_vma, &vma->anon_vma_chain);
	// Insert the avc into the anon_vma's interval tree, so all vmas
	// associated with this anon_vma can later be found by walking the tree
	anon_vma_interval_tree_insert(avc, &anon_vma->rb_root);
}
```

3.2 page_add_new_anon_rmap()

```c
/**
 * page_add_new_anon_rmap - add mapping to a new anonymous page
 * @page:	the page to add the mapping to
 * @vma:	the vm area in which the mapping is added
 * @address:	the user virtual address mapped
 *
 * If it's a compound page, it is accounted as a compound page. As the page
 * is new, it's assume to get mapped exclusively by a single process.
 *
 * Same as page_add_anon_rmap but must only be called on *new* pages.
 * This means the inc-and-test can be bypassed.
 * Page does not have to be locked.
 */
// Set up the reverse mapping for a newly allocated physical page
void page_add_new_anon_rmap(struct page *page,
	struct vm_area_struct *vma, unsigned long address)
{
	const bool compound = PageCompound(page);
	int nr = compound ? thp_nr_pages(page) : 1;

	VM_BUG_ON_VMA(address < vma->vm_start || address >= vma->vm_end, vma);
	__SetPageSwapBacked(page);
	if (compound) {
		VM_BUG_ON_PAGE(!PageTransHuge(page), page);
		/* increment count (starts at -1) */
		atomic_set(compound_mapcount_ptr(page), 0);
		atomic_set(compound_pincount_ptr(page), 0);

		__mod_lruvec_page_state(page, NR_ANON_THPS, nr);
	} else {
		/* increment count (starts at -1) */
		atomic_set(&page->_mapcount, 0);
	}

	__mod_lruvec_page_state(page, NR_ANON_MAPPED, nr);
	__page_set_anon_rmap(page, vma, address, 1); // the function to focus on
}
```

```c
/**
 * __page_set_anon_rmap - set up new anonymous rmap
 * @page:	Page or Hugepage to add to rmap
 * @vma:	VM area to add page to.
 * @address:	User virtual address of the mapping
 * @exclusive:	the page is exclusively owned by the current process
 */
static void __page_set_anon_rmap(struct page *page,
	struct vm_area_struct *vma, unsigned long address, int exclusive)
{
	struct anon_vma *anon_vma = vma->anon_vma;

	BUG_ON(!anon_vma);

	if (PageAnon(page))
		goto out;

	/*
	 * If the page isn't exclusively mapped into this vma,
	 * we must use the _oldest_ possible anon_vma for the
	 * page mapping!
	 */
	if (!exclusive)
		anon_vma = anon_vma->root;

	/*
	 * page_idle does a lockless/optimistic rmap scan on page->mapping.
	 * Make sure the compiler doesn't split the stores of anon_vma and
	 * the PAGE_MAPPING_ANON type identifier, otherwise the rmap code
	 * could mistake the mapping for a struct address_space and crash.
	 */
	// To tell anonymous rmap apart from file rmap, add PAGE_MAPPING_ANON to the
	// anon_vma address; anon_vma is aligned, so this sets the lowest bit
	anon_vma = (void *) anon_vma + PAGE_MAPPING_ANON;
	// Then store it in page->mapping, pointing the page at this anon_vma
	WRITE_ONCE(page->mapping, (struct address_space *) anon_vma);
	// An interesting detail: record the page's position within the vma
	// (in page units, offset by vm_pgoff) so the rmap walk can later
	// compute the page's virtual address in each vma
	page->index = linear_page_index(vma, address);
out:
	if (exclusive)
		SetPageAnonExclusive(page);
}
```
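The PAGE_MAPPING_ANON trick is low-bit pointer tagging. Below is a userspace sketch of the encode/decode pair. It assumes the flag values defined in the kernel's `include/linux/page-flags.h` for the version quoted here (`PAGE_MAPPING_ANON` = 0x1, `PAGE_MAPPING_MOVABLE` = 0x2). `toy_encode_anon()` and `toy_decode_anon()` are hypothetical helpers. They mirror what `__page_set_anon_rmap()` stores and roughly what `folio_anon_vma()` checks when reading it back.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Flag values as in the kernel's page-flags.h (assumption: the version quoted here). */
#define PAGE_MAPPING_ANON    0x1UL
#define PAGE_MAPPING_MOVABLE 0x2UL
#define PAGE_MAPPING_FLAGS   (PAGE_MAPPING_ANON | PAGE_MAPPING_MOVABLE)

/* Hypothetical stand-in; its alignment keeps the low 2 address bits clear. */
struct toy_anon_vma { long dummy; };

/* Encode: the value __page_set_anon_rmap() writes into page->mapping. */
static void *toy_encode_anon(struct toy_anon_vma *av)
{
    assert(((uintptr_t)av & PAGE_MAPPING_FLAGS) == 0); /* low bits must be free */
    return (void *)((uintptr_t)av + PAGE_MAPPING_ANON);
}

/* Decode: the anon_vma, or NULL if @mapping is not tagged as plain anonymous. */
static struct toy_anon_vma *toy_decode_anon(void *mapping)
{
    uintptr_t m = (uintptr_t)mapping;

    if ((m & PAGE_MAPPING_FLAGS) != PAGE_MAPPING_ANON)
        return NULL;
    return (struct toy_anon_vma *)(m - PAGE_MAPPING_ANON);
}
```

A pointer to an `address_space` has its low bits clear, so it decodes to NULL. That is how the same `mapping` field can serve both file and anonymous pages.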

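```c
static inline pgoff_t linear_page_index(struct vm_area_struct *vma,
					unsigned long address)
{
	pgoff_t pgoff;

	if (unlikely(is_vm_hugetlb_page(vma)))
		return linear_hugepage_index(vma, address);
	// (page's virtual address - vma start address) / PAGE_SIZE
	pgoff = (address - vma->vm_start) >> PAGE_SHIFT;
	// For an anonymous private mmap(), do_mmap() sets vm_pgoff to
	// vm_start >> PAGE_SHIFT (it is not 0), so index is effectively the
	// page's virtual page number. For a file mapping, vm_pgoff is the file
	// offset (in pages) mapped at vm_start.
	pgoff += vma->vm_pgoff;
	return pgoff;
}
```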
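The arithmetic of `linear_page_index()`, and its inverse used later during the rmap walk, fits in a few lines. This sketch assumes 4 KiB pages (`PAGE_SHIFT` = 12) and ignores hugetlb VMAs. `struct toy_vma`, `toy_linear_page_index()` and `toy_vma_address()` are hypothetical names. The kernel's inverse helper is `vma_address()`, whose real implementation also validates the result against the VMA bounds.

```c
#include <assert.h>

/* Assumption: 4 KiB pages; hugetlb VMAs are not modelled. */
#define PAGE_SHIFT 12

struct toy_vma {
    unsigned long vm_start, vm_end; /* [vm_start, vm_end) */
    unsigned long vm_pgoff;         /* anonymous mmap(): vm_start >> PAGE_SHIFT */
};

/* Same arithmetic as linear_page_index() for a non-hugetlb VMA. */
static unsigned long toy_linear_page_index(const struct toy_vma *vma, unsigned long address)
{
    return ((address - vma->vm_start) >> PAGE_SHIFT) + vma->vm_pgoff;
}

/* Inverse used by the rmap walk: page->index -> page-aligned user address in @vma. */
static unsigned long toy_vma_address(const struct toy_vma *vma, unsigned long index)
{
    return vma->vm_start + ((index - vma->vm_pgoff) << PAGE_SHIFT);
}
```

Because `vm_pgoff` of an anonymous `mmap()` region starts out as `vm_start >> PAGE_SHIFT`, the index computed in the test equals the page's virtual page number.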

4. Setting up the reverse mapping (fork path)

Final result:

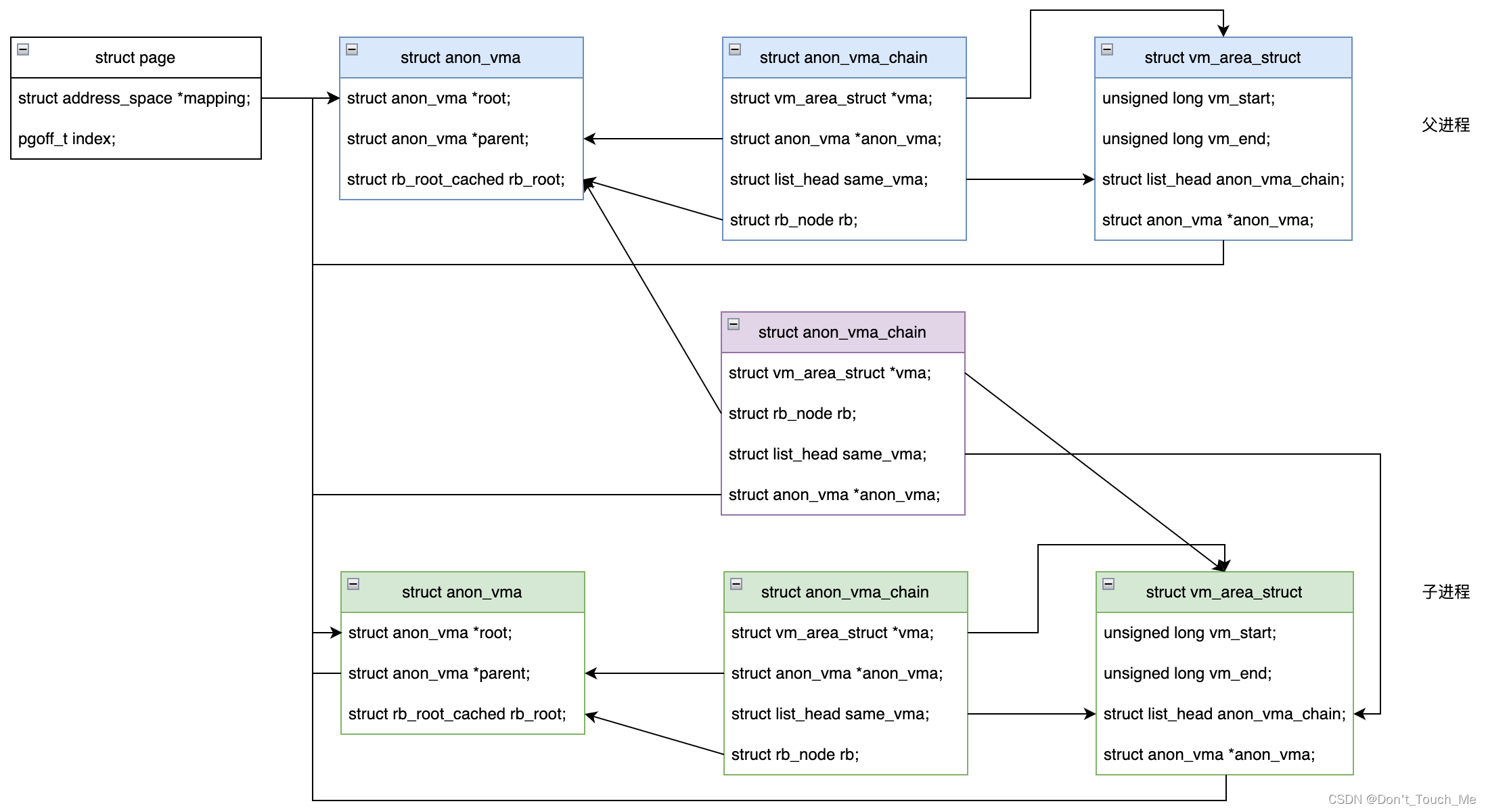

During fork, the reverse mapping is set up mainly in dup_mmap():

```
SYSCALL_DEFINE0(fork)
-> kernel_clone()
--> copy_process()
---> copy_mm()
----> dup_mm()
-----> dup_mmap()
```

dup_mmap() copies each of the parent's VMAs into the child, then sets up the page table mappings and reverse mappings for the copies.

```c
#ifdef CONFIG_MMU
static __latent_entropy int dup_mmap(struct mm_struct *mm,
					struct mm_struct *oldmm)
{
	...
	mas_for_each(&old_mas, mpnt, ULONG_MAX) {	// iterate over all of the parent's vmas
		...
		tmp = vm_area_dup(mpnt);	// duplicate the parent's current vma
		if (!tmp)
			goto fail_nomem;
		retval = vma_dup_policy(mpnt, tmp);
		if (retval)
			goto fail_nomem_policy;
		tmp->vm_mm = mm;
		retval = dup_userfaultfd(tmp, &uf);
		if (retval)
			goto fail_nomem_anon_vma_fork;
		if (tmp->vm_flags & VM_WIPEONFORK) {
			/*
			 * VM_WIPEONFORK gets a clean slate in the child.
			 * Don't prepare anon_vma until fault since we don't
			 * copy page for current vma.
			 */
			tmp->anon_vma = NULL;
		} else if (anon_vma_fork(tmp, mpnt))	// fork the reverse mapping (the core function)
			goto fail_nomem_anon_vma_fork;
		...
	}
	...
}
```

4.1 vm_area_dup()

```c
struct vm_area_struct *vm_area_dup(struct vm_area_struct *orig)
{
	struct vm_area_struct *new = kmem_cache_alloc(vm_area_cachep, GFP_KERNEL); // allocate a new vma

	if (new) {
		ASSERT_EXCLUSIVE_WRITER(orig->vm_flags);
		ASSERT_EXCLUSIVE_WRITER(orig->vm_file);
		/*
		 * orig->shared.rb may be modified concurrently, but the clone
		 * will be reinitialized.
		 */
		*new = data_race(*orig);		// copy every field of the parent's vma into the new vma
		INIT_LIST_HEAD(&new->anon_vma_chain);	// give the new vma its own, empty anon_vma_chain list
		dup_anon_vma_name(orig, new);		// copy the anonymous VMA's name (set via prctl(PR_SET_VMA_ANON_NAME)); unrelated to struct anon_vma
	}
	return new;
}
```

This function simply creates a new copy of the parent's VMA.
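Why does `vm_area_dup()` re-initialize `anon_vma_chain` right after the struct copy? A plain struct assignment also copies the list head's `next`/`prev` pointers, and those still point into the parent's list. Here is a minimal sketch using a hand-written circular list modelled on the kernel's `struct list_head`. `struct toy_vma` and `toy_vma_dup()` are hypothetical.

```c
#include <assert.h>

/* Minimal circular doubly linked list, modelled on the kernel's struct list_head. */
struct list_head { struct list_head *next, *prev; };

static void INIT_LIST_HEAD(struct list_head *h) { h->next = h; h->prev = h; }
static int list_empty(const struct list_head *h) { return h->next == h; }

/* Insert @n right after @head, like the kernel's list_add(). */
static void list_add(struct list_head *n, struct list_head *head)
{
    n->next = head->next;
    n->prev = head;
    head->next->prev = n;
    head->next = n;
}

/* Hypothetical stand-in for vm_area_struct: only the fields that matter here. */
struct toy_vma {
    unsigned long vm_start, vm_end;
    struct list_head anon_vma_chain;
};

/* Like vm_area_dup(): copy everything, then give the copy its own empty list. */
static void toy_vma_dup(struct toy_vma *new, const struct toy_vma *orig)
{
    *new = *orig;                         /* copied next/prev still point into orig's list */
    INIT_LIST_HEAD(&new->anon_vma_chain); /* reset before anything is linked to the copy */
}
```

Without the reset, `anon_vma_clone()` would add the child's avcs to a list head whose pointers lead into the parent's chain, corrupting both lists.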

4.2 anon_vma_fork()

```c
/*
 * Attach vma to its own anon_vma, as well as to the anon_vmas that
 * the corresponding VMA in the parent process is attached to.
 * Returns 0 on success, non-zero on failure.
 */
int anon_vma_fork(struct vm_area_struct *vma, struct vm_area_struct *pvma)
{
	struct anon_vma_chain *avc;
	struct anon_vma *anon_vma;
	int error;

	/* Don't bother if the parent process has no anon_vma here. */
	if (!pvma->anon_vma) // the parent's vma has no reverse mapping, so there is nothing to fork
		return 0;

	/* Drop inherited anon_vma, we'll reuse existing or allocate new. */
	vma->anon_vma = NULL; // clear the child vma's anon_vma, which was copied from the parent's vma

	/*
	 * First, attach the new VMA to the parent VMA's anon_vmas,
	 * so rmap can find non-COWed pages in child processes.
	 */
	error = anon_vma_clone(vma, pvma); // link the child vma to every anon_vma the parent vma is linked to
	if (error)
		return error;

	/* An existing anon_vma has been reused, all done then. */
	if (vma->anon_vma) // anon_vma_clone() found an anon_vma to reuse, so no new one is needed
		return 0;

	/* Then add our own anon_vma. */
	anon_vma = anon_vma_alloc(); // allocate a new anon_vma
	if (!anon_vma)
		goto out_error;
	anon_vma->num_active_vmas++;
	avc = anon_vma_chain_alloc(GFP_KERNEL); // allocate an avc to link the new anon_vma and the child vma
	if (!avc)
		goto out_error_free_anon_vma;

	/*
	 * The root anon_vma's rwsem is the lock actually used when we
	 * lock any of the anon_vmas in this anon_vma tree.
	 */
	anon_vma->root = pvma->anon_vma->root; // the new anon_vma shares the root of the parent's anon_vma
	anon_vma->parent = pvma->anon_vma; // its parent is the parent vma's anon_vma
	/*
	 * With refcounts, an anon_vma can stay around longer than the
	 * process it belongs to. The root anon_vma needs to be pinned until
	 * this anon_vma is freed, because the lock lives in the root.
	 */
	get_anon_vma(anon_vma->root); // take a reference on the root anon_vma
	/* Mark this anon_vma as the one where our new (COWed) pages go. */
	vma->anon_vma = anon_vma; // the child vma's anon_vma now points to the new anon_vma
	anon_vma_lock_write(anon_vma);
	anon_vma_chain_link(vma, avc, anon_vma); // link the child vma, the avc and the new anon_vma
	anon_vma->parent->num_children++;
	anon_vma_unlock_write(anon_vma);

	return 0;

 out_error_free_anon_vma:
	put_anon_vma(anon_vma);
 out_error:
	unlink_anon_vmas(vma);
	return -ENOMEM;
}
```
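The hierarchy bookkeeping in `anon_vma_fork()` (root, parent and the two counters) can be modelled in userspace. This sketch keeps only the fields shown. It leaves out locking, reference counting (`get_anon_vma()`) and avc linking, and its names (`struct toy_anon_vma`, `toy_new_root()`, `toy_fork_child()`) are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical toy anon_vma with only the hierarchy fields used by anon_vma_fork(). */
struct toy_anon_vma {
    struct toy_anon_vma *root, *parent;
    int num_children;    /* anon_vmas whose ->parent is this one (a root counts itself) */
    int num_active_vmas; /* VMAs whose vma->anon_vma points here */
};

/* Like the new-root path of __anon_vma_prepare(): a root that is its own parent. */
static struct toy_anon_vma *toy_new_root(void)
{
    struct toy_anon_vma *av = calloc(1, sizeof(*av));

    if (!av)
        return NULL;
    av->root = av->parent = av;
    av->num_children = 1;    /* self-parent link */
    av->num_active_vmas = 1; /* the VMA that just received it */
    return av;
}

/* Like the tail of anon_vma_fork() when no existing anon_vma could be reused. */
static struct toy_anon_vma *toy_fork_child(struct toy_anon_vma *parent_av)
{
    struct toy_anon_vma *av = calloc(1, sizeof(*av));

    if (!av)
        return NULL;
    av->num_active_vmas = 1;    /* the child's VMA */
    av->root = parent_av->root; /* share the root, and therefore its lock */
    av->parent = parent_av;
    parent_av->num_children++;
    return av;
}
```

Forking twice (A → B → C) yields a chain in which every anon_vma shares A as its root. This is why the root's lock can serialize the whole tree.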

4.3 anon_vma_clone()

```c
/*
 * Attach the anon_vmas from src to dst.
 * Returns 0 on success, -ENOMEM on failure.
 *
 * anon_vma_clone() is called by __vma_adjust(), __split_vma(), copy_vma() and
 * anon_vma_fork(). The first three want an exact copy of src, while the last
 * one, anon_vma_fork(), may try to reuse an existing anon_vma to prevent
 * endless growth of anon_vma. Since dst->anon_vma is set to NULL before call,
 * we can identify this case by checking (!dst->anon_vma && src->anon_vma).
 *
 * If (!dst->anon_vma && src->anon_vma) is true, this function tries to find
 * and reuse existing anon_vma which has no vmas and only one child anon_vma.
 * This prevents degradation of anon_vma hierarchy to endless linear chain in
 * case of constantly forking task. On the other hand, an anon_vma with more
 * than one child isn't reused even if there was no alive vma, thus rmap
 * walker has a good chance of avoiding scanning the whole hierarchy when it
 * searches where page is mapped.
 */
int anon_vma_clone(struct vm_area_struct *dst, struct vm_area_struct *src)
{
	struct anon_vma_chain *avc, *pavc;
	struct anon_vma *root = NULL;

	// walk every avc on src->anon_vma_chain (src is the parent's vma when called from anon_vma_fork())
	list_for_each_entry_reverse(pavc, &src->anon_vma_chain, same_vma) {
		struct anon_vma *anon_vma;

		avc = anon_vma_chain_alloc(GFP_NOWAIT | __GFP_NOWARN); // new avc to link dst with pavc->anon_vma
		if (unlikely(!avc)) {
			unlock_anon_vma_root(root);
			root = NULL;
			avc = anon_vma_chain_alloc(GFP_KERNEL);
			if (!avc)
				goto enomem_failure;
		}
		anon_vma = pavc->anon_vma; // the anon_vma the parent's avc points to
		root = lock_anon_vma_root(root, anon_vma);
		anon_vma_chain_link(dst, avc, anon_vma); // link dst, the new avc and that anon_vma

		/*
		 * Reuse existing anon_vma if it has no vma and only one
		 * anon_vma child.
		 *
		 * Root anon_vma is never reused:
		 * it has self-parent reference and at least one child.
		 */
		if (!dst->anon_vma && src->anon_vma && // can this existing anon_vma become dst's own?
		    anon_vma->num_children < 2 &&
		    anon_vma->num_active_vmas == 0)
			dst->anon_vma = anon_vma;
	}
	if (dst->anon_vma)
		dst->anon_vma->num_active_vmas++;
	unlock_anon_vma_root(root);
	return 0;

 enomem_failure:
	/*
	 * dst->anon_vma is dropped here otherwise its degree can be incorrectly
	 * decremented in unlink_anon_vmas().
	 * We can safely do this because callers of anon_vma_clone() don't care
	 * about dst->anon_vma if anon_vma_clone() failed.
	 */
	dst->anon_vma = NULL;
	unlink_anon_vmas(dst);
	return -ENOMEM;
}
```
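The reuse condition in `anon_vma_clone()` is easier to read as a standalone predicate. Below is a minimal sketch that assumes a toy struct holding only the two counters. `toy_can_reuse()` and its boolean parameters are hypothetical stand-ins for the `dst->anon_vma` and `src->anon_vma` checks.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical toy anon_vma: only the counters the reuse check reads. */
struct toy_anon_vma {
    int num_children;
    int num_active_vmas;
};

/*
 * The reuse test from anon_vma_clone(), pulled out into a predicate.
 * dst_has_anon_vma / src_has_anon_vma model dst->anon_vma / src->anon_vma.
 */
static bool toy_can_reuse(bool dst_has_anon_vma, bool src_has_anon_vma,
                          const struct toy_anon_vma *av)
{
    return !dst_has_anon_vma && src_has_anon_vma &&
           av->num_children < 2 && av->num_active_vmas == 0;
}
```

As the kernel comment says, the root never qualifies: it counts itself as a child and has at least one more. An anon_vma with no active VMAs and a single child does qualify, for instance one left behind by an intermediate process that has exited.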

That completes the setup of reverse mappings for anonymous pages.

5. Using the reverse mapping when reclaiming anonymous pages

Here we use kswapd reclaiming anonymous pages as the example. The direct reclaim path shares the lower part of this call chain, from shrink_node() onward.

```
kswapd()
-> balance_pgdat()
--> kswapd_shrink_node()
---> shrink_node()
----> shrink_node_memcgs()
-----> shrink_lruvec()
------> shrink_list()
-------> shrink_inactive_list()
--------> shrink_folio_list()
---------> try_to_unmap()
```

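When reclaiming an anonymous page, the kernel uses the reverse mapping to unmap the page from every process that maps it, updating their page tables. The next step is therefore try_to_unmap().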

5.1 try_to_unmap()

```c
/**
 * try_to_unmap - Try to remove all page table mappings to a folio.
 * @folio: The folio to unmap.
 * @flags: action and flags
 *
 * Tries to remove all the page table entries which are mapping this
 * folio. It is the caller's responsibility to check if the folio is
 * still mapped if needed (use TTU_SYNC to prevent accounting races).
 *
 * Context: Caller must hold the folio lock.
 */
void try_to_unmap(struct folio *folio, enum ttu_flags flags)
{
	struct rmap_walk_control rwc = {
		.rmap_one = try_to_unmap_one,
		.arg = (void *)flags,
		.done = page_not_mapped,
		.anon_lock = folio_lock_anon_vma_read,
	};

	if (flags & TTU_RMAP_LOCKED)
		rmap_walk_locked(folio, &rwc);
	else
		rmap_walk(folio, &rwc);
}
```

```c
void rmap_walk(struct folio *folio, struct rmap_walk_control *rwc)
{
	if (unlikely(folio_test_ksm(folio)))
		rmap_walk_ksm(folio, rwc);
	else if (folio_test_anon(folio))
		rmap_walk_anon(folio, rwc, false);
	else
		rmap_walk_file(folio, rwc, false);
}
```

Since we are dealing with anonymous pages, the path to follow is rmap_walk_anon().
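The `rmap_walk_control` design is a callback table. The walker finds candidate VMAs, `rmap_one` acts on each one, and `done` allows the walk to stop early. Below is a simplified userspace sketch that assumes integer VMA ids and a walk over a plain array. Every `toy_*` name is hypothetical. Unlike the real `try_to_unmap_one()`, the toy callback assumes each visited VMA really maps the page.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified shape of struct rmap_walk_control. */
struct toy_walk_control {
    bool (*rmap_one)(int vma_id, void *arg); /* return false to stop the walk */
    bool (*done)(void *arg);                 /* optional: return true when finished */
    void *arg;
};

/* Drive the callbacks over each candidate VMA, as rmap_walk_anon() does. */
static void toy_rmap_walk(const int *vma_ids, size_t n, const struct toy_walk_control *rwc)
{
    for (size_t i = 0; i < n; i++) {
        if (!rwc->rmap_one(vma_ids[i], rwc->arg))
            break;
        if (rwc->done && rwc->done(rwc->arg))
            break;
    }
}

/* Toy callbacks in the spirit of try_to_unmap_one() / page_not_mapped(). */
struct toy_unmap_state { int mapcount; int nr_unmapped; int unmapped_from[8]; };

static bool toy_unmap_one(int vma_id, void *arg)
{
    struct toy_unmap_state *st = arg;

    assert(st->nr_unmapped < 8);
    st->unmapped_from[st->nr_unmapped++] = vma_id; /* "clear the PTE" in this VMA */
    st->mapcount--;
    return true;
}

static bool toy_not_mapped(void *arg)
{
    return ((const struct toy_unmap_state *)arg)->mapcount == 0;
}
```

In the test, the page is mapped twice, so the walk stops after the second VMA even though four candidates were supplied.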

5.2 rmap_walk_anon()

```c
/*
 * rmap_walk_anon - do something to anonymous page using the object-based
 * rmap method
 * @page: the page to be handled
 * @rwc: control variable according to each walk type
 *
 * Find all the mappings of a page using the mapping pointer and the vma chains
 * contained in the anon_vma struct it points to.
 */
static void rmap_walk_anon(struct folio *folio,
		struct rmap_walk_control *rwc, bool locked)
{
	struct anon_vma *anon_vma;
	pgoff_t pgoff_start, pgoff_end;
	struct anon_vma_chain *avc;

	if (locked) {
		anon_vma = folio_anon_vma(folio); // get the folio's anon_vma from its mapping field
		/* anon_vma disappear under us? */
		VM_BUG_ON_FOLIO(!anon_vma, folio);
	} else {
		anon_vma = rmap_walk_anon_lock(folio, rwc);
	}
	if (!anon_vma)
		return;

	pgoff_start = folio_pgoff(folio); // page offset of the folio's first page (folio->index)
	pgoff_end = pgoff_start + folio_nr_pages(folio) - 1; // page offset of its last page
	// Query the anon_vma's interval tree (anon_vma->rb_root) for every avc whose
	// vma covers [pgoff_start, pgoff_end], i.e. every vma that may map this folio
	anon_vma_interval_tree_foreach(avc, &anon_vma->rb_root,
			pgoff_start, pgoff_end) {
		struct vm_area_struct *vma = avc->vma;
		unsigned long address = vma_address(&folio->page, vma);

		VM_BUG_ON_VMA(address == -EFAULT, vma);
		cond_resched();

		if (rwc->invalid_vma && rwc->invalid_vma(vma, rwc->arg))
			continue;

		// callback; for try_to_unmap() this is try_to_unmap_one(), which unmaps the folio from this vma
		if (!rwc->rmap_one(folio, vma, address, rwc->arg))
			break;
		if (rwc->done && rwc->done(folio))
			break;
	}

	if (!locked)
		anon_vma_unlock_read(anon_vma);
}
```
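`anon_vma_interval_tree_foreach()` returns the avcs whose VMA covers the page's offset. The sketch below makes several simplifying assumptions:

- The interval tree is replaced by a linear scan over an array of VMAs.
- It handles a single page rather than a whole folio.
- It assumes 4 KiB pages.

All `toy_*` names are hypothetical. The real tree avoids visiting every avc, but for a single page it should select the same VMAs.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SHIFT 12 /* assumption: 4 KiB pages */

struct toy_vma { unsigned long vm_start, vm_end, vm_pgoff; };

/* First and last page offset covered by @v: the interval the tree indexes. */
static unsigned long toy_pgoff_first(const struct toy_vma *v) { return v->vm_pgoff; }
static unsigned long toy_pgoff_last(const struct toy_vma *v)
{
    return v->vm_pgoff + ((v->vm_end - v->vm_start) >> PAGE_SHIFT) - 1;
}

/*
 * Linear-scan stand-in for anon_vma_interval_tree_foreach() on a single page:
 * for every VMA whose pgoff interval contains @pgoff, record the user address
 * at which that page would be mapped (the vma_address() arithmetic).
 */
static size_t toy_find_addresses(const struct toy_vma *vmas, size_t n, unsigned long pgoff,
                                 unsigned long *addr, size_t addr_len)
{
    size_t found = 0;

    for (size_t i = 0; i < n && found < addr_len; i++) {
        const struct toy_vma *v = &vmas[i];

        if (pgoff < toy_pgoff_first(v) || pgoff > toy_pgoff_last(v))
            continue; /* interval does not contain the page: the tree would skip it */
        addr[found++] = v->vm_start + ((pgoff - v->vm_pgoff) << PAGE_SHIFT);
    }
    return found;
}
```

In the test, the parent VMA and its forked copy both cover the page, while a neighbouring VMA produced by a split shares the anon_vma but covers a different offset range, so it is skipped.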

This concludes the walkthrough of how reverse mappings for anonymous pages are set up and used. Thanks for reading!
