Reference documents

Grid Infrastructure Installation Guide for Linux

Clusterware Administration and Deployment Guide

Real Application Clusters Installation Guide for Linux and UNIX

Oracle Real Application Clusters Administration and Deployment Guide

1. Hardware environment preparation

1.1 Configuring the two hosts

Each machine has 2 network cards, 1 local disk (sda, 20 GB), and 2 Openfiler iSCSI disks (sdb 1 GB, sdc 20 GB).

Configure the IP address and hostname

cd /etc/sysconfig/network-scripts
ls
cp ifcfg-ens11f0 ifcfg-ens15f0
vi ifcfg-ens15f0
TYPE=Ethernet
BOOTPROTO=static
NAME=ens15f0
DEVICE=ens15f0
ONBOOT=yes
IPADDR=192.168.203.12
NETMASK=255.255.255.0
systemctl restart network

hostname

hostnamectl set-hostname rac2
cat /etc/hostname
ping rac2

Disable the firewall (firewalld)

systemctl status firewalld
systemctl stop firewalld
systemctl disable firewalld
systemctl status firewalld
systemctl list-unit-files | grep firewalld

Disable SELinux

sestatus
setenforce 0                 # temporary, lasts until the next reboot
vi /etc/sysconfig/selinux    # set SELINUX=disabled

1.2 /etc/hosts (on every host)

#public
21.44.1.121 rac1
21.44.1.123 rac2
#private
192.168.201.121 rac1-priv
192.168.201.123 rac2-priv
#virtual
21.44.1.122 rac1-vip
21.44.1.124 rac2-vip
#scan
21.44.1.125 rac-scan
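Before continuing, it can be useful to confirm that every cluster name listed above is present in /etc/hosts on each node. The following is a minimal sketch, not part of the original procedure: the function name `missing_hosts` is hypothetical, and it only inspects the file (it does not test connectivity).

```shell
# missing_hosts FILE NAME...
# Prints every NAME that has no entry in the hosts-format FILE.
# (Hypothetical helper for illustration; not part of any Oracle tool.)
missing_hosts() (
    file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for NAME among the hostname fields (field 2 onward).
        awk -v n="$name" '
            { sub(/#.*/, "") }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit(found ? 0 : 1) }' "$file" || echo "$name"
    done
)

# Example:
#   missing_hosts /etc/hosts rac1 rac2 rac1-priv rac2-priv rac1-vip rac2-vip rac-scan
```

No output means that all names were found; any name printed is missing from the file.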

2. Software environment preparation

2.1 System environment configuration

2.1.1 System parameters

cat >> /etc/security/limits.conf <<eof
oracle soft nproc 16384
oracle hard nproc 16384
oracle soft nofile 65536
oracle hard nofile 65536
grid soft nproc 16384
grid hard nproc 16384
grid soft nofile 65536
grid hard nofile 65536
eof
cat >> /etc/pam.d/login <<eof
session required /lib64/security/pam_limits.so
eof

2.1.2 Set the locale

cp /etc/locale.conf /etc/locale.conf.bk
> /etc/locale.conf
cat >> /etc/locale.conf <<eof
LANG="en_US.UTF-8"
SUPPORTED="zh_CN.GB18030:zh_CN:zh:en_US.UTF-8:en_US:en"
SYSFONT="lat0-sun16"
eof
cat /etc/locale.conf

2.1.3 Check the operating system packages

cd /etc/yum.repos.d/
rm -rf *.repo
ll
touch /etc/yum.repos.d/rhel-debuginfo.repo
cat >> /etc/yum.repos.d/rhel-debuginfo.repo <<eof
[rhel]
name=lvs
baseurl=file:///mnt/
enabled=1
gpgcheck=0
eof
ll /etc/yum.repos.d/
cat /etc/yum.repos.d/rhel-debuginfo.repo

Mount the operating system ISO:

mount /dev/cdrom /mnt
df -h
cd /mnt/Packages

On Linux 7, installing the following packages is sufficient:

yum install -y binutils* compat-lib* gcc-* glibc-* ksh* libaio-* libgcc-* libstdc++-* libXi* libXtst* make-* sysstat-* elfutils-* smartmontools xorg-x11-apps-* unzip* ftp* vsftpd net-tools java

2.2 Create the users, groups, and installation directories

(perform on both hosts)

2.2.1 Create the users and groups

/usr/sbin/groupadd -g 1100 oinstall
/usr/sbin/groupadd -g 1200 dba
/usr/sbin/groupadd -g 1201 asmdba
/usr/sbin/useradd -u 1101 -g oinstall -G dba,asmdba oracle
/usr/sbin/useradd -u 1102 -g oinstall -G dba,asmdba grid
/usr/bin/passwd oracle
/usr/bin/passwd grid
# password entered at the prompts in this example: abc123

2.2.2 Create the installation directories

mkdir -p /u01/app/oracle
mkdir -p /u01/app/11.2.0/grid
mkdir -p /u01/app/grid
# Run the recursive chown first so that it does not reset the owner of /u01/app/oracle afterwards
chown -R grid:oinstall /u01/app
chown oracle:oinstall /u01/app/oracle
chown grid:oinstall /u01/app/11.2.0/grid
chown grid:oinstall /u01/app/grid
chmod -R 775 /u01/app/

2.2.3 User environment variables: edit .bash_profile in each user's home directory

ORACLE:

export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1
export ORACLE_SID=orcl1    # orcl2 on rac2
export LANG=en_US.UTF8
export ORACLE_UNQNAME=orcl
export NLS_LANG=AMERICAN_AMERICA.ZHS16GBK
export PATH=/usr/sbin:$PATH
export PATH=$ORACLE_HOME/bin:/u01/app/11.2.0/grid/bin:$PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
umask 022

GRID:

export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/11.2.0/grid
export ORACLE_SID=+ASM1    # +ASM2 on rac2
export LANG=en_US.UTF8
export PATH=/usr/sbin:$PATH
export PATH=$ORACLE_HOME/bin:/u01/app/11.2.0/grid/bin:$PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
umask 022

2.3 Configure iSCSI

2.3.1 Discover the targets

iscsiadm -m discovery -t st -p 21.44.1.129
21.44.1.129:3260,1 iqn.2006-01.com.openfiler:tsn.e3edaa72331f

2.3.2 Log in to the target

iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.e3edaa72331f -p 21.44.1.129:3260 -l

2.3.3 Log in to the target at boot

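(Mark the node record for automatic login. Appending the login command to the iSCSI configuration file under /etc/iscsi does not work, because that file holds only key = value settings and shell commands in it are not executed.)

iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.e3edaa72331f -p 21.44.1.129:3260 --op update -n node.startup -v automatic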

2.3.4 View the disks that were detected

fdisk -l
lsblk

2.4 Bind the shared disks with udev

(perform on both hosts)

2.4.1 Generate the rules file

for i in b c; do
    echo "KERNEL==\"sd*\",SUBSYSTEM==\"block\",PROGRAM==\"/usr/lib/udev/scsi_id -g -u -d \$devnode\", RESULT==\"`/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", SYMLINK+=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmdba\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules
done
cat /etc/udev/rules.d/99-oracle-asmdevices.rules
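The loop above writes one rule per disk, keyed on the WWID that scsi_id reports. To make the resulting rule format easier to inspect without touching real hardware, here is a small sketch that builds the same kind of line from a disk letter and a WWID passed in as arguments. The function name `asm_udev_rule` and the WWID in the example are placeholders, not values from this environment.

```shell
# asm_udev_rule LETTER WWID
# Prints one udev rule that symlinks the disk with the given WWID to
# /dev/asm-disk<LETTER>, owned by grid:asmdba with mode 0660.
# (Hypothetical helper; the WWID must come from scsi_id on the real host.)
asm_udev_rule() (
    letter=$1
    wwid=$2
    printf 'KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode", RESULT=="%s", SYMLINK+="asm-disk%s", OWNER="grid", GROUP="asmdba", MODE="0660"\n' "$wwid" "$letter"
)

# Example with a made-up WWID:
#   asm_udev_rule b EXAMPLE_WWID_0001
```

Because the rule matches on the WWID rather than on the kernel name, the symlink stays stable even if sdb and sdc are enumerated in a different order after a reboot.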

2.4.2 Test the udev rules

/sbin/udevadm test /sys/block/sdb
/sbin/udevadm test /sys/block/sdc

Reload the rules file:

systemctl status systemd-udevd.service
systemctl enable systemd-udevd.service
systemctl restart systemd-udevd.service
/sbin/udevadm control --reload-rules
/sbin/udevadm trigger --type=devices --action=change

2.4.3 Verify

ll /dev/asm*
ll /dev/sd*

2.5 Time synchronization settings required by Grid

Network Time Protocol Setting

/sbin/service ntpd stop
systemctl list-unit-files | grep ntpd
mv /etc/ntp.conf /etc/ntp.conf.bak

Check that the time on the two nodes is the same!

Set the date:

date -s 11/24/2021

Set the time:

date -s 16:03:00

Save to CMOS (the hardware clock):

clock -w
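Comparing the two clocks by eye is error-prone, so the check can be scripted. The sketch below compares two epoch timestamps and succeeds when they differ by no more than a tolerance. The function name `clock_skew_ok` and the default tolerance of 2 seconds are arbitrary choices for illustration, not Oracle requirements.

```shell
# clock_skew_ok T1 T2 [MAX_SECONDS]
# Succeeds (exit status 0) when the two epoch timestamps differ by at most
# MAX_SECONDS. The default of 2 seconds is an arbitrary illustrative value.
clock_skew_ok() (
    t1=$1
    t2=$2
    max=${3:-2}
    diff=$(( t1 - t2 ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( 0 - diff ))
    fi
    [ "$diff" -le "$max" ]
)

# Example (assumes SSH access from this node to rac2 is available):
#   clock_skew_ok "$(date +%s)" "$(ssh rac2 date +%s)" && echo "clocks agree"
```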

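3. Install the Grid Infrastructure software

3.1 Installation

su - grid
export DISPLAY=21.44.1.1:0.0
./runInstaller

SCAN Name: rac-scan
SSH Connectivity: run Setup first, then Test
ASM: disk group OCR, discovery path /dev/asm*
Prerequisite checks: Fix & Check Again

3.2 Error while running the root scripts (orainstRoot.sh / root.sh)

ohasd failed to start
Failed to start the Clusterware. Last 20 lines of the alert log follow:
2019-08-24 09:54:35.959:
[client(24030)]CRS-2101:The OLR was formatted using version 3.

Resolution (leave the session that is running the script untouched, clone a second CRT terminal session, and run the following as root):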

- As root, create the service file

touch /usr/lib/systemd/system/ohas.service
chmod 777 /usr/lib/systemd/system/ohas.service

- Add the following content to the newly created ohas.service file

vi /usr/lib/systemd/system/ohas.service
[Unit]
Description=Oracle High Availability Services
After=syslog.target

[Service]
ExecStart=/etc/init.d/init.ohasd run >/dev/null 2>&1
Type=simple
Restart=always

[Install]
WantedBy=multi-user.target

- As root, run the following commands

systemctl daemon-reload
systemctl enable ohas.service
systemctl start ohas.service

- Check the running state

systemctl status ohas.service

3.3 Output of orainstRoot.sh and root.sh

rac1:

[root@rac1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac1 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to inittab

ohasd failed to start

Failed to start the Clusterware. Last 20 lines of the alert log follow:

2024-01-21 13:56:07.898:

[client(17966)]CRS-2101:The OLR was formatted using version 3.

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'

CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'

CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'

CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded

ASM created and started successfully.

Disk Group OCR created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 9b97ac1e64ff4f0cbf39098cd0288a9d.

Successfully replaced voting disk group with +OCR.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 9b97ac1e64ff4f0cbf39098cd0288a9d (/dev/asm-diskb) [OCR]

Located 1 voting disk(s).

sh: /bin/netstat: No such file or directory

CRS-2672: Attempting to start 'ora.asm' on 'rac1'

CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.OCR.dg' on 'rac1'

CRS-2676: Start of 'ora.OCR.dg' on 'rac1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

rac2:

[root@rac2 network-scripts]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac2 network-scripts]#

[root@rac2 network-scripts]#

[root@rac2 network-scripts]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to inittab

ohasd failed to start

Failed to start the Clusterware. Last 20 lines of the alert log follow:

2024-01-21 14:08:28.943:

[client(17820)]CRS-2101:The OLR was formatted using version 3.

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

sh: /bin/netstat: No such file or directory

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

3.4 Add the grid environment variables to the root user

vi /root/.bash_profile
export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/11.2.0/grid
export ORACLE_SID=+ASM2    # +ASM1 on rac1
export LANG=en_US.UTF8
export PATH=/usr/sbin:$PATH
export PATH=$ORACLE_HOME/bin:/u01/app/11.2.0/grid/bin:$PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
umask 022

Avoid changing host names after the Oracle Clusterware installation is complete, including adding or removing domain qualifications. A node whose host name has changed must be deleted from the cluster and then added back under the new name.

4. Install the Database software

su - oracle
export DISPLAY=21.44.1.1:0.0
./runInstaller

Install option: software only
SSH Connectivity: run Setup first, then Test

5. Apply patches

6. Create the database

su - grid
export DISPLAY=21.44.1.1:0.0
asmca
disk group >> create: DATA

su - oracle
export DISPLAY=21.44.1.1:0.0
dbca
Enable archiving

Tablespace:

create bigfile tablespace assp datafile '+DATA' size 500g autoextend on;

User:

create user asspdb2022 identified by asspdb2022 default tablespace assp;
grant connect,resource,unlimited tablespace to asspdb2022;
grant dba to asspdb2022;

7. Connecting applications to the database

Connecting WebLogic to RAC:

jdbc:oracle:thin:@(description=(ADDRESS_LIST =(ADDRESS = (PROTOCOL = TCP)(HOST = 21.44.1.3)(PORT = 1521))(ADDRESS = (PROTOCOL = TCP)(HOST = 21.44.1.4)(PORT = 1521))(load_balance=yes)(failover=yes))(connect_data=(service_name= orcl)))
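The URL above is one address entry per listener host, wrapped in an address list with load balancing and failover enabled. As a hedged sketch, the following function assembles a URL of the same shape from a service name, a port, and any number of hosts, which helps avoid unbalanced parentheses when editing by hand. The function name `rac_jdbc_url` is hypothetical.

```shell
# rac_jdbc_url SERVICE PORT HOST...
# Prints a thin-driver JDBC URL with one ADDRESS entry per HOST,
# load balancing and failover enabled, as in the URL shown above.
# (Hypothetical helper for illustration only.)
rac_jdbc_url() (
    service=$1
    port=$2
    shift 2
    addrs=""
    for host in "$@"; do
        addrs="${addrs}(ADDRESS=(PROTOCOL=TCP)(HOST=${host})(PORT=${port}))"
    done
    printf 'jdbc:oracle:thin:@(DESCRIPTION=(ADDRESS_LIST=%s(LOAD_BALANCE=yes)(FAILOVER=yes))(CONNECT_DATA=(SERVICE_NAME=%s)))\n' "$addrs" "$service"
)

# Example:
#   rac_jdbc_url orcl 1521 21.44.1.3 21.44.1.4
```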

8. Routine RAC maintenance

Check the OHAS and CRS status

crsctl check crs

[root@rac1 utl]# crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 ONLINE ONLINE rac1 Started

ora.cluster_interconnect.haip

1 ONLINE ONLINE rac1

ora.crf

1 ONLINE ONLINE rac1

ora.crsd

1 ONLINE ONLINE rac1

ora.cssd

1 ONLINE ONLINE rac1

ora.cssdmonitor

1 ONLINE ONLINE rac1

ora.ctssd

1 ONLINE ONLINE rac1 ACTIVE:0

ora.diskmon

1 OFFLINE OFFLINE

ora.evmd

1 ONLINE ONLINE rac1

ora.gipcd

1 ONLINE ONLINE rac1

ora.gpnpd

1 ONLINE ONLINE rac1

ora.mdnsd

1 ONLINE ONLINE rac1

[root@rac1 utl]# crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

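Stop the database instances

(Run as the oracle user. Running it on one node is enough; the database instances on both nodes are stopped at the same time.)

srvctl stop database -d db_name
srvctl stop database -d orcl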

CVU

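Cluster Verification Utility (CVU): CVU is a command-line utility for verifying a range of cluster and Oracle RAC specific components. Use CVU to verify shared storage devices, network configuration, system requirements, Oracle Clusterware, and operating system groups and users.

Install and use CVU for both pre-installation and post-installation checks of the cluster environment. CVU is especially useful before and during the installation of Oracle Clusterware and Oracle RAC components, to make sure that the configuration meets the minimum installation requirements. CVU can also be used to verify the configuration after administrative tasks such as adding or deleting a node.

Reference:

Clusterware Administration and Deployment Guide, Appendix A: Cluster Verification Utility Reference

Checking the environment configuration before and after cluster installation

(run as the grid user)

cluvfy stage -pre crsinst -n rac1,rac2 [-verbose]
cluvfy stage -post crsinst -n rac1,rac2 [-verbose]

CRS autostart configuration

crsctl config crs
crsctl enable crs
crsctl disable crs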

OCR/OLR

Check the OCR/OLR status

ocrcheck           ## check the OCR status
ocrcheck -local    ## check the OLR status

Adding, deleting, and migrating OCR

[root@rac1 bin]# ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 2964

Available space (kbytes) : 259156

ID : 1051152808

Device/File Name : +OCR

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded

[root@rac1 bin]# ocrconfig -add +data

[root@rac1 bin]# ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 2964

Available space (kbytes) : 259156

ID : 1051152808

Device/File Name : +OCR

Device/File integrity check succeeded

Device/File Name : +data

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded

[root@rac1 bin]# ocrconfig -delete +OCR

[root@rac1 bin]# ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 2964

Available space (kbytes) : 259156

ID : 1051152808

Device/File Name : +data

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded
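The ocrcheck output above reports total and used space in kilobytes. As a small sketch for routine monitoring, the function below reads that output on standard input and prints the used percentage. The function name `ocr_used_percent` is hypothetical, and it assumes the "Total space (kbytes)" and "Used space (kbytes)" labels appear exactly as in the output shown above.

```shell
# ocr_used_percent
# Reads ocrcheck output on stdin and prints the used space as a percentage
# of the total, with two decimals. Exits non-zero if no total was found.
# (Hypothetical helper; relies on the labels shown in the output above.)
ocr_used_percent() {
    awk -F: '
        /Total space \(kbytes\)/ { total = $2 + 0 }
        /Used space \(kbytes\)/  { used  = $2 + 0 }
        END {
            if (total > 0) printf "%.2f\n", used * 100 / total
            else exit 1
        }'
}

# Example (as root):
#   ocrcheck | ocr_used_percent
```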

View the OCR/OLR contents and backups

ocrconfig --help

Export and import: exporting OCR/OLR to a text-format file makes the contents easy to inspect, and the file can be imported for recovery. A backup is a consistent snapshot of OCR, whereas an export is not. Oracle does not recommend using export as a backup method!

ocrconfig -export my_ocr.txt
ocrconfig -local -export my_olr.txt
ocrconfig -import my_ocr.txt
ocrconfig -local -import my_olr.txt

View the backups:

ocrconfig -showbackup           ## list OCR backups; OCR is modified and backed up only on the master node!
ocrconfig -local -showbackup    ## list OLR backups; each node backs up its own OLR

rac1 2024/01/24 17:56:30 /u01/app/backup00.ocr
rac1 2024/01/24 13:56:29 /u01/app/backup01.ocr
rac1 2024/01/23 20:04:22 /u01/app/backup02.ocr
rac1 2024/01/23 16:04:20 /u01/app/day.ocr
rac1 2024/01/21 20:08:20 /u01/app/week.ocr
rac1 2024/01/21 18:14:39 /u01/app/backup_20240121_181439.ocr

Configure the backup location:

ocrconfig -backuploc /u01/app           ## set the OCR backup location
ocrconfig -local -backuploc /u01/app    ## set the OLR backup location

Manual backup:

ocrconfig -manualbackup           ## back up OCR manually
ocrconfig -local -manualbackup    ## back up OLR manually

Restore from a physical backup (for the detailed steps, see the "OCR restore" section):

ocrconfig -restore /u01/app/backup_20240121_181439.ocr
ocrconfig -local -restore /u01/app/backup_20240121_181439.ocr

Dump the OCR/OLR contents: dumping OCR/OLR to text format makes the contents easy to inspect.

ocrdump my_ocr.txt                         ## dump OCR to a text file
ocrdump -local my_olr.txt                  ## dump OLR to a text file
ocrdump -stdout|more                       ## dump OCR as text to standard output
ocrdump -local -stdout|more                ## dump OLR as text to standard output
ocrdump -stdout -xml|more                  ## dump OCR as XML to standard output
ocrdump -local -stdout -xml|more           ## dump OLR as XML to standard output
ocrdump -backupfile /u01/app/backup00.ocr -stdout|more    ## verify the integrity of a backup file
ocrdump -stdout -keyname SYSTEM|more       ## view the contents under a given keyname
ocrdump -stdout -keyname SYSTEM.css.interfaces|more
ocrdump -stdout -keyname SYSTEM.crs|more
ocrdump -stdout -keyname SYSTEM.CRSD.RESOURCES|more
ocrdump -stdout -keyname SYSTEM.OCR|more
ocrdump -stdout -keyname DATABASE|more
ocrdump -stdout -keyname CRS|more
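When restoring, the most recent backup is normally the one to use. The sketch below picks the newest file from a backup listing shaped like the sample above (node, date, time, path, separated by whitespace). The function name `latest_ocr_backup` is hypothetical, and the parsing assumes that four-column layout; lines in any other shape are ignored.

```shell
# latest_ocr_backup
# Reads a backup listing on stdin (NODE YYYY/MM/DD HH:MM:SS PATH per line)
# and prints the PATH with the newest timestamp.
# (Hypothetical helper; assumes the four-column layout shown above.)
latest_ocr_backup() {
    awk 'NF >= 4 && $2 ~ /^[0-9]+\/[0-9]+\/[0-9]+$/ { print $2, $3, $4 }' |
        LC_ALL=C sort | tail -n 1 | awk '{ print $3 }'
}

# Example (as root):
#   ocrconfig -showbackup | latest_ocr_backup
```

Sorting works as plain text here because the date and time fields are zero-padded and ordered from year down to second.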

OCR restore

Use the following procedure to restore OCR on Linux or UNIX systems:

- List the nodes in your cluster by running the following command on one node:

$ olsnodes

- Stop Oracle Clusterware by running the following command as root on all of the nodes:

# crsctl stop crs

If the preceding command returns any error due to OCR corruption, stop Oracle Clusterware by running the following command as root on all of the nodes:

# crsctl stop crs -f

- Start the Oracle Clusterware stack on one node in exclusive mode by running the following command as root:

# crsctl start crs -excl

Ignore any errors that display.

Check whether crsd is running. If it is, stop it by running the following command as root:

# crsctl stop resource ora.crsd -init

Caution: Do not use the -init flag with any other command.

- Restore OCR with an OCR backup that you can identify in "Listing Backup Files" by running the following command as root:

ocrconfig -showbackup    ## restore from the most recent backup; validate the backup file before restoring

Validate the backup file:

ocrdump -backupfile /u01/app/backup00.ocr -stdout|more

Restore OCR:

ocrconfig -restore file_name

- Verify the integrity of OCR:

# ocrcheck

- Stop Oracle Clusterware on the node where it is running in exclusive mode:

# crsctl stop crs -f

- Begin to start Oracle Clusterware by running the following command as root on all of the nodes:

# crsctl start crs

- Verify OCR integrity of all of the cluster nodes that are configured as part of your cluster by running the following CVU command:

$ cluvfy comp ocr -n all -verbose

votedisk

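View the votedisk information

crsctl query css votedisk

crsctl cannot be used to add or delete voting disks that are stored in an Oracle ASM disk group, because Oracle ASM manages the number of voting disks according to the redundancy level of the disk group.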
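For a quick health check, the number of ONLINE voting disks can be counted from the `crsctl query css votedisk` listing. The sketch below assumes the row layout shown in the root.sh output earlier in this document (a numbered row such as ` 1. ONLINE <id> (<path>) [<diskgroup>]`). The function name `online_votedisks` is hypothetical.

```shell
# online_votedisks
# Reads `crsctl query css votedisk` output on stdin and prints how many
# numbered rows are in state ONLINE.
# (Hypothetical helper; assumes rows of the form " 1. ONLINE <id> (<path>) [<dg>]".)
online_votedisks() {
    awk '$1 ~ /^[0-9]+\.$/ && $2 == "ONLINE" { n++ } END { print n + 0 }'
}

# Example:
#   crsctl query css votedisk | online_votedisks
```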

Add a votedisk

crsctl add css votedisk /dev/asm-diskd

Delete a votedisk

crsctl delete css votedisk /dev/asm-diskd

Move the votedisk to another location

crsctl replace votedisk +data

Set the votedisk

Defines the set of voting disks to be used by CRS

crsctl set css votedisk {asm <diskgroup>|raw <vdisk>[...]}

votedisk backup and restore

In Oracle Clusterware 11g release 2 (11.2), backing up the voting disks is no longer required. The voting disk data is automatically backed up in OCR as part of any configuration change and is automatically restored to any voting disk that is added.

If all of the voting disks are corrupted, however, they can be restored as described in "Restoring Voting Disks".

If all of the voting disks are corrupted, then you can restore them, as follows:

- Restore OCR as described in "Restoring Oracle Cluster Registry", if necessary.

This step is necessary only if OCR is also corrupted or otherwise unavailable, such as if OCR is on Oracle ASM and the disk group is no longer available.

- Run the following command as root from only one node to start the Oracle Clusterware stack in exclusive mode, which does not require voting files to be present or usable:

# crsctl start crs -excl

- Run the crsctl query css votedisk command to retrieve the list of voting files currently defined, similar to the following:

$ crsctl query css votedisk
##  STATE    File Universal Id                File Name    Disk group
--  -----    -----------------                ---------    ---------
 1. ONLINE   7c54856e98474f61bf349401e7c9fb95 (/dev/sdb1) [DATA]

This list may be empty if all voting disks were corrupted, or may have entries that are marked as status 3 or OFF.

- Depending on where you store your voting files, do one of the following:

  - If the voting disks are stored in Oracle ASM, then run the following command to migrate the voting disks to the Oracle ASM disk group you specify:

  crsctl replace votedisk +asm_disk_group

  The Oracle ASM disk group to which you migrate the voting files must exist in Oracle ASM. You can use this command whether the voting disks were stored in Oracle ASM or some other storage device.

  - If you did not store voting disks in Oracle ASM, then run the following command using the File Universal Identifier (FUID) obtained in the previous step:

  $ crsctl delete css votedisk FUID

  Add a voting disk, as follows:

  $ crsctl add css votedisk path_to_voting_disk

- Stop the Oracle Clusterware stack as root:

# crsctl stop crs -f

- Restart the Oracle Clusterware stack in normal mode as root:

# crsctl start crs

Start OHAS on the local node

crsctl start crs [-excl [-nocrs]|-nowait]
crsctl start crs
crsctl start crs -excl -nocrs

Alert log for crsctl start crs:

2024-01-25 15:48:14.061: [ohasd(40865)]CRS-2112:The OLR service started on node rac1.
2024-01-25 15:48:14.087: [ohasd(40865)]CRS-1301:Oracle High Availability Service started on node rac1.
2024-01-25 15:48:14.097: [ohasd(40865)]CRS-8017:location: /etc/oracle/lastgasp has 2 reboot advisory log files, 0 were announced and 0 errors occurred
2024-01-25 15:48:17.724: [/u01/app/11.2.0/grid/bin/orarootagent.bin(40910)]CRS-2302:Cannot get GPnP profile. Error CLSGPNP_NO_DAEMON (GPNPD daemon is not running).
2024-01-25 15:48:22.154: [gpnpd(41006)]CRS-2328:GPNPD started on node rac1.
2024-01-25 15:48:24.670: [cssd(41075)]CRS-1713:CSSD daemon is started in clustered mode
2024-01-25 15:48:26.458: [ohasd(40865)]CRS-2767:Resource state recovery not attempted for 'ora.diskmon' as its target state is OFFLINE
2024-01-25 15:48:26.458: [ohasd(40865)]CRS-2769:Unable to failover resource 'ora.diskmon'.
2024-01-25 15:48:34.424: [cssd(41075)]CRS-1707:Lease acquisition for node rac1 number 1 completed
2024-01-25 15:48:35.725: [cssd(41075)]CRS-1605:CSSD voting file is online: /dev/asm-diskb; details in /u01/app/11.2.0/grid/log/rac1/cssd/ocssd.log.
2024-01-25 15:48:40.295: [cssd(41075)]CRS-1601:CSSD Reconfiguration complete. Active nodes are rac1 rac2 .
2024-01-25 15:48:42.998: [ctssd(41211)]CRS-2407:The new Cluster Time Synchronization Service reference node is host rac2.
2024-01-25 15:48:43.004: [ctssd(41211)]CRS-2401:The Cluster Time Synchronization Service started on host rac1.
2024-01-25 15:48:44.665: [ohasd(40865)]CRS-2767:Resource state recovery not attempted for 'ora.diskmon' as its target state is OFFLINE
2024-01-25 15:48:44.665: [ohasd(40865)]CRS-2769:Unable to failover resource 'ora.diskmon'.
2024-01-25 15:49:06.024: [crsd(41347)]CRS-1012:The OCR service started on node rac1.
2024-01-25 15:49:06.068: [evmd(41239)]CRS-1401:EVMD started on node rac1.
2024-01-25 15:49:07.465: [crsd(41347)]CRS-1201:CRSD started on node rac1.

[root@rac1 utl]# crsctl start crs -excl -nocrs
CRS-4123: Oracle High Availability Services has been started.
CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'
CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'
CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'
CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'
CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded
CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.cssd' on 'rac1'
CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'
CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded
CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded
CRS-2679: Attempting to clean 'ora.cluster_interconnect.haip' on 'rac1'
CRS-2672: Attempting to start 'ora.ctssd' on 'rac1'
CRS-2681: Clean of 'ora.cluster_interconnect.haip' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac1'
CRS-2676: Start of 'ora.ctssd' on 'rac1' succeeded
CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.asm' on 'rac1'
CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

[root@rac1 cdata]# crsctl start crs -excl
CRS-4123: Oracle High Availability Services has been started.
CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'
CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'
CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'
CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'
CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded
CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.cssd' on 'rac1'
CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'
CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded
CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.ctssd' on 'rac1'
CRS-2679: Attempting to clean 'ora.cluster_interconnect.haip' on 'rac1'
CRS-2681: Clean of 'ora.cluster_interconnect.haip' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac1'
CRS-2676: Start of 'ora.ctssd' on 'rac1' succeeded
CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.asm' on 'rac1'
CRS-2676: Start of 'ora.asm' on 'rac1' succeeded
CRS-2672: Attempting to start 'ora.crsd' on 'rac1'
CRS-2676: Start of 'ora.crsd' on 'rac1' succeeded

Stop OHAS on the local node

crsctl stop crs -f

[root@rac2 ~]# crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac2'

CRS-2673: Attempting to stop 'ora.crsd' on 'rac2'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rac2'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'rac2'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'rac2'

CRS-2673: Attempting to stop 'ora.OCR.dg' on 'rac2'

CRS-2673: Attempting to stop 'ora.orcl.db' on 'rac2'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.rac2.vip' on 'rac2'

CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.scan1.vip' on 'rac2'

CRS-2677: Stop of 'ora.rac2.vip' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.rac2.vip' on 'rac1'

CRS-2677: Stop of 'ora.orcl.db' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.DATA.dg' on 'rac2'

CRS-2677: Stop of 'ora.DATA.dg' on 'rac2' succeeded

CRS-2677: Stop of 'ora.scan1.vip' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.scan1.vip' on 'rac1'

CRS-2676: Start of 'ora.rac2.vip' on 'rac1' succeeded

CRS-2676: Start of 'ora.scan1.vip' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'rac1'

CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'rac1' succeeded

CRS-2677: Stop of 'ora.OCR.dg' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac2'

CRS-2677: Stop of 'ora.asm' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'rac2'

CRS-2677: Stop of 'ora.ons' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'rac2'

CRS-2677: Stop of 'ora.net1.network' on 'rac2' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac2' has completed

CRS-2677: Stop of 'ora.crsd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac2'

CRS-2673: Attempting to stop 'ora.crf' on 'rac2'

CRS-2673: Attempting to stop 'ora.ctssd' on 'rac2'

CRS-2673: Attempting to stop 'ora.evmd' on 'rac2'

CRS-2673: Attempting to stop 'ora.asm' on 'rac2'

CRS-2677: Stop of 'ora.mdnsd' on 'rac2' succeeded

CRS-2677: Stop of 'ora.crf' on 'rac2' succeeded

CRS-2677: Stop of 'ora.evmd' on 'rac2' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'rac2' succeeded

CRS-2677: Stop of 'ora.asm' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac2'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rac2'

CRS-2677: Stop of 'ora.cssd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'rac2'

CRS-2677: Stop of 'ora.gipcd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac2'

CRS-2677: Stop of 'ora.gpnpd' on 'rac2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

alert:

2024-01-25 15:47:07.012:

[/u01/app/11.2.0/grid/bin/orarootagent.bin(26163)]CRS-5822:Agent '/u01/app/11.2.0/grid/bin/orarootagent_root' disconnected from server. Details at (:CRSAGF00117:) {0:5:607} in /u01/app/11.2.0/grid/log/rac1/agent/crsd/orarootagent_root/orarootagent_root.log.

2024-01-25 15:47:08.069:

[ctssd(25892)]CRS-2405:The Cluster Time Synchronization Service on host rac1 is shutdown by user

2024-01-25 15:47:08.099:

[mdnsd(25682)]CRS-5602:mDNS service stopping by request.

2024-01-25 15:47:19.033:

[cssd(25763)]CRS-1603:CSSD on node rac1 shutdown by user.

2024-01-25 15:47:19.176:

[ohasd(25549)]CRS-2767:Resource state recovery not attempted for 'ora.cssdmonitor' as its target state is OFFLINE

2024-01-25 15:47:19.179:

[ohasd(25549)]CRS-2769:Unable to failover resource 'ora.cssdmonitor'.

2024-01-25 15:47:21.436:

[gpnpd(25694)]CRS-2329:GPNPD on node rac1 shutdown.

Stop order:

ora.LISTENER.lsnr ∨

ora.LISTENER_SCAN1.lsnr

ora.rac2.vip

ora.orcl.db ∨

ora.DATA.dg ∨

ora.scan1.vip

ora.OCR.dg ∨

ora.asm ∨

ora.ons ∨

ora.net1.network ∨

Shutdown of Cluster Ready Services-managed resources on 'rac2' has completed

ora.crsd ∨

ora.mdnsd

ora.crf

ora.evmd

ora.ctssd ∨

ora.asm

ora.cluster_interconnect.haip

ora.cssd ∨

ora.gipcd ∨

ora.gpnpd ∨

Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed

Start order:

ora.mdnsd

ora.gpnpd ∨

ora.gipcd ∨

ora.crf

ora.cssdmonitor

ora.diskmon

ora.cssd ∨

ora.ctssd ∨

ora.cluster_interconnect.haip ∨

ora.asm ∨

ora.evmd

ora.crsd ∨

Oracle High Availability Services-managed resources

启停resource及查看resource配置及状态

crsctl start res ora.DATA.dg -n rac1 -f crsctl stop res ora.DATA.dg -n rac1 -f crsctl stat res -t -init crsctl stat res -t crsctl stat res ora.DATA.dg -t crsctl stat res ora.DATA.dg -p ##静态配置 crsctl stat res ora.DATA.dg -v ##运行时配置 crsctl stat res ora.DATA.dg -f ##全部配置 crsctl stat res ora.cssd -p -init crsctl stat res ora.crsd -p -init crsctl stat res ora.cluster_interconnect.haip -init -p

修改resource配置

crsctl modify {resource|type|serverpool} <name> <options>

Viewing and modifying resource permissions

crsctl getperm resource ora.LISTENER.lsnr

Cluster Ready Services-managed resources

Checking the configuration of CRS-managed resources

[root@rac1 utl]# srvctl config database -d orcl

Database unique name: orcl

Database name: orcl

Oracle home: /u01/app/oracle/product/11.2.0/db_1

Oracle user: oracle

Spfile: +DATA/orcl/spfileorcl.ora

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: orcl

Database instances: orcl1,orcl2

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Database is administrator managed

relocate Cluster Resources

Relocating the SCAN to another node

[root@rac1 cdata]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public

21.44.1.121 rac1

21.44.1.123 rac2

#private

192.168.201.121 rac1-priv

192.168.201.123 rac2-priv

#virtual

21.44.1.122 rac1-vip

21.44.1.124 rac2-vip

#scan

21.44.1.125 rac-scan

[root@rac1 cdata]# ifconfig

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 21.44.1.121 netmask 255.255.255.0 broadcast 21.44.1.255

inet6 fe80::38f8:eb0d:942:ede0 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:f8:92:c2 txqueuelen 1000 (Ethernet)

RX packets 991183 bytes 1233395430 (1.1 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 543975 bytes 244071181 (232.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 21.44.1.125 netmask 255.255.255.0 broadcast 21.44.1.255

ether 00:0c:29:f8:92:c2 txqueuelen 1000 (Ethernet)

ens32:3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 21.44.1.122 netmask 255.255.255.0 broadcast 21.44.1.255

ether 00:0c:29:f8:92:c2 txqueuelen 1000 (Ethernet)

ens34: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.201.121 netmask 255.255.255.0 broadcast 192.168.201.255

inet6 fe80::1996:e63d:1799:b632 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:f8:92:cc txqueuelen 1000 (Ethernet)

RX packets 283440 bytes 203748971 (194.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 253089 bytes 178255028 (169.9 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens34:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 169.254.107.71 netmask 255.255.0.0 broadcast 169.254.255.255

ether 00:0c:29:f8:92:cc txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 78372 bytes 67922786 (64.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 78372 bytes 67922786 (64.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@rac1 utl]# crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

srvctl relocate scan -i 1 -n rac2

[root@rac1 cdata]# ifconfig

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 21.44.1.121 netmask 255.255.255.0 broadcast 21.44.1.255

inet6 fe80::38f8:eb0d:942:ede0 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:f8:92:c2 txqueuelen 1000 (Ethernet)

RX packets 1047397 bytes 1305048692 (1.2 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 567408 bytes 252897682 (241.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32:3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 21.44.1.122 netmask 255.255.255.0 broadcast 21.44.1.255

ether 00:0c:29:f8:92:c2 txqueuelen 1000 (Ethernet)

ens34: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.201.121 netmask 255.255.255.0 broadcast 192.168.201.255

inet6 fe80::1996:e63d:1799:b632 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:f8:92:cc txqueuelen 1000 (Ethernet)

RX packets 302052 bytes 218642477 (208.5 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 274227 bytes 196225122 (187.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens34:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 169.254.107.71 netmask 255.255.0.0 broadcast 169.254.255.255

ether 00:0c:29:f8:92:cc txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 83021 bytes 71132717 (67.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 83021 bytes 71132717 (67.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@rac1 utl]# crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2 ## after the SCAN is relocated, LISTENER_SCAN1 automatically follows it to the same node

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac2
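To confirm a relocation without reading the whole listing, the hosting node of a resource can be pulled out of `crsctl stat res -t` output. The following is a minimal sketch, not an Oracle-provided tool: the function name `resource_servers` is hypothetical, and it assumes the 11.2 tabular layout shown above (a resource name line followed by rows of `TARGET STATE SERVER`, with a leading instance number for cluster resources).

```shell
#!/bin/sh
# resource_servers NAME: read `crsctl stat res -t` output on stdin and print
# the server of every ONLINE row that belongs to resource NAME.
# Hypothetical helper; assumes the 11.2 tabular layout shown above.
resource_servers() {
    awk -v res="$1" '
        $1 ~ /^ora\./ { current = $1; next }   # a new resource block starts
        /^-+$/        { current = ""; next }   # a separator line ends the block
        current == res {
            # Cluster resource rows start with an instance number;
            # local resource rows start directly with TARGET.
            if ($1 ~ /^[0-9]+$/) { state = $3; server = $4 }
            else                 { state = $2; server = $3 }
            if (state == "ONLINE" && server != "") print server
        }
    '
}

# Usage on a live cluster (not executed here):
#   crsctl stat res -t | resource_servers ora.scan1.vip
```

Run before and after `srvctl relocate scan`, the printed node name should change from the source node to the target node.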

olsnodes

Reference documentation:

Clusterware Administration and Deployment Guide

C OLSNODES Command Reference

[root@rac1 ~]# olsnodes --help

Usage: olsnodes [ [-n] [-i] [-s] [-t] [<node> | -l [-p]] | [-c] ] [-g] [-v]

where

-n print node number with the node name

-p print private interconnect address for the local node

-i print virtual IP address with the node name

<node> print information for the specified node

-l print information for the local node

-s print node status - active or inactive

-t print node type - pinned or unpinned

-g turn on logging

-v Run in debug mode; use at direction of Oracle Support only.

-c print clusterware name

olsnodes -s ## list the cluster nodes and their status

olsnodes -i ## show each node's VIP

olsnodes -l -p ## show the local node's private interconnect IP (cluster_interconnect)
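For a quick health check, the status column of `olsnodes -s` can be filtered so that only problem nodes are reported. This is a small sketch under assumptions: the helper name `inactive_nodes` is hypothetical, and the input is assumed to be one `<node> <status>` pair per line, with `Active` marking a healthy node.

```shell
#!/bin/sh
# inactive_nodes: read `olsnodes -s` output on stdin and print the names of
# nodes whose status is anything other than Active.
# Hypothetical helper; assumes one "<node> <status>" pair per line.
inactive_nodes() {
    awk 'NF >= 2 && tolower($2) != "active" { print $1 }'
}

# Usage on a live cluster (not executed here):
#   olsnodes -s | inactive_nodes
```

Empty output means every listed node reported the Active status.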

oifcfg

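Oracle Interface Configuration Tool (OIFCFG): OIFCFG is a command-line tool for both single-instance Oracle databases and Oracle RAC environments. Use OIFCFG to allocate and deallocate network interfaces to components. You can also use OIFCFG to direct components to use specific network interfaces and to retrieve component configuration information.

Reference documentation: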

Clusterware Administration and Deployment Guide

2 Administering Oracle Clusterware ----Changing Network Addresses on Manually Configured Networks

D Oracle Interface Configuration Tool (OIFCFG) Command Reference

oifcfg - Oracle Interface Configuration Tool.

Usage: oifcfg iflist [-p [-n]]

oifcfg setif {-node <nodename> | -global} {<if_name>/<subnet>:<if_type>}...

oifcfg getif [-node <nodename> | -global] [ -if <if_name>[/<subnet>] [-type <if_type>] ]

oifcfg delif {{-node <nodename> | -global} [<if_name>[/<subnet>]] [-force] | -force}

oifcfg [-help]

<nodename> - name of the host, as known to a communications network

<if_name> - name by which the interface is configured in the system

<subnet> - subnet address of the interface

<if_type> - type of the interface { cluster_interconnect | public }

oifcfg iflist

oifcfg getif

Checking node network status and connectivity

su - grid

cluvfy comp nodecon -n all -verbose

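9. Key RAC Concepts

9.1 Oracle Clusterware and Oracle RAC

Two products:

Oracle Clusterware and Oracle RAC

Two directories:

Oracle Clusterware home and Oracle RAC home

9.1.1 Installing Oracle Clusterware

In addition to its binaries, Oracle Clusterware stores two components: voting disk files (which record node membership information) and the Oracle Cluster Registry (OCR) (which records cluster configuration information). The voting disks and the OCR must reside on shared storage that is accessible to all cluster member nodes.

You must update the OCR through supported utilities such as Oracle Enterprise Manager, the Server Control utility (SRVCTL), the OCR configuration utility (OCRCONFIG), or the Database Configuration Assistant (DBCA).

If you choose the "Install Grid Infrastructure Software Only" option during installation, the software binaries are installed on the local node only. To complete the installation for a cluster, you must perform these additional steps: configure Oracle Clusterware and Oracle ASM, create a clone of the local installation, deploy the clone on the other nodes, and then add the other nodes to the cluster.

9.1.2 Deconfiguring Oracle Clusterware

By running the rootcrs.pl command with the -deconfig -force flags, you can deconfigure Oracle Clusterware on one or more nodes without removing the installed binaries.

This is useful if you encounter an error on one or more cluster nodes while running the root.sh command during installation, for example a missing operating system package on one node.

By running rootcrs.pl -deconfig -force on the nodes where the installation error occurred, you can deconfigure Oracle Clusterware on those nodes, correct the cause of the error, and then run root.sh again.

If only the clusterware-level configuration is broken and the RAC (database) level is fine, you can leave the RAC level alone: deconfigure at the clusterware level, run root.sh again to reconfigure, and the node returns to normal.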

To deconfigure Oracle Clusterware:

1. Log in as the root user on a node where you encountered an error.

2. Change directory to Grid_home/crs/install. For example:

cd /u01/app/11.2.0/grid/crs/install

3. Run rootcrs.pl with the -deconfig -force flags. For example:

perl rootcrs.pl -deconfig -force

Repeat on other nodes as required.

4. If you are deconfiguring Oracle Clusterware on all nodes in the cluster, then on the last node, enter the following command:

perl rootcrs.pl -deconfig -force -lastnode

The -lastnode flag completes deconfiguration of the cluster, including the OCR and voting disks.

cd /u01/app/11.2.0/grid/crs/install

[root@rac2 install]# ./rootcrs.pl -deconfig -force

Can't locate Env.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 . .) at crsconfig_lib.pm line 703.

BEGIN failed--compilation aborted at crsconfig_lib.pm line 703.

Compilation failed in require at ./rootcrs.pl line 305.

BEGIN failed--compilation aborted at ./rootcrs.pl line 305.

[root@rac2 install]# find /u01 -name Env.pm

/u01/app/oracle/product/11.2.0/db_1/perl/lib/5.10.0/Env.pm

/u01/app/11.2.0/grid/perl/lib/5.10.0/Env.pm

[root@rac2 install]# cp /u01/app/11.2.0/grid/perl/lib/5.10.0/Env.pm /usr/lib64/perl5/vendor_perl/

[root@rac2 install]# ./rootcrs.pl -deconfig -force

Using configuration parameter file: ./crsconfig_params

PRCR-1119 : Failed to look up CRS resources of ora.cluster_vip_net1.type type

PRCR-1068 : Failed to query resources

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.gsd is registered

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.ons is registered

Cannot communicate with crsd

CRS-4544: Unable to connect to OHAS

CRS-4000: Command Stop failed, or completed with errors.

Successfully deconfigured Oracle clusterware stack on this node

[root@rac2 install]# ps -ef|grep d.bin

root 19923 8157 0 12:20 pts/0 00:00:00 grep --color=auto d.bin

[root@rac2 install]# ocrcheck

PROT-601: Failed to initialize ocrcheck

PROC-33: Oracle Cluster Registry is not configured Storage layer error [Error opening ocr.loc file. No such file or directory] [2]

9.1.3 Reconfiguring Oracle Clusterware

/u01/app/11.2.0/grid/root.sh

[root@rac2 grid]# ./root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to inittab

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

PRKO-2190 : VIP exists for node rac2, VIP name rac2-vip

Preparing packages...

cvuqdisk-1.0.9-1.x86_64

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac2 install]# crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 OFFLINE OFFLINE

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

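9.2 Overview of Oracle Clusterware Software Components

When Oracle Clusterware is running, several platform-specific processes or services run on each node in the cluster.

9.2.1 The Oracle Clusterware Stack

Oracle Clusterware consists of two separate stacks: an upper stack anchored by the Cluster Ready Services (CRS) daemon (crsd) and a lower stack anchored by the Oracle High Availability Services daemon (ohasd). Both stacks have several processes that facilitate cluster operations. The following sections describe these stacks in more detail:

9.2.1.1 The Cluster Ready Services Stack

The list in this section describes the processes that make up CRS. The list includes the processes on Linux and UNIX operating systems, or the services on Windows.

Cluster Ready Services (CRS):

The primary program for managing high availability operations in a cluster.

The CRS daemon (crsd) manages cluster resources based on the configuration information stored in the OCR for each resource. This includes start, stop, monitor, and failover operations. The crsd process generates events when the status of a resource changes. When Oracle RAC is installed, the crsd process monitors the Oracle database instances, listeners, and so on, and automatically restarts these components when a failure occurs.

Cluster Synchronization Services (CSS):

Manages the cluster configuration by controlling which nodes are members of the cluster and by notifying members when a node joins or leaves the cluster. If you are using certified third-party clusterware, then the CSS processes interface with your clusterware to manage node membership information.

The cssdagent process monitors the cluster and provides I/O fencing. This service was formerly provided by the Oracle Process Monitor Daemon (oprocd), also known as OraFenceService on Windows. A cssdagent failure may result in Oracle Clusterware restarting the node.

Oracle ASM:

Provides disk management for Oracle Clusterware and Oracle Database.

Cluster Time Synchronization Service (CTSS):

Provides time management in a cluster for Oracle Clusterware.

Event Management (EVM):

A background process that publishes the events that Oracle Clusterware creates.

Oracle Notification Service (ONS):

A publish-and-subscribe service for communicating Fast Application Notification (FAN) events.

Oracle Agent (oraagent):

Extends clusterware to support Oracle-specific requirements and complex resources. This process runs server callout scripts when FAN events occur. This process was known as RACG in Oracle Clusterware 11g Release 1 (11.1).

Oracle Root Agent (orarootagent):

A specialized oraagent process that helps crsd manage resources owned by root, such as the network and the Grid virtual IP address.

The Cluster Synchronization Services (CSS), Event Management (EVM), and Oracle Notification Service (ONS) components communicate with other cluster component layers on other nodes in the same cluster database environment. These components are also the main communication links between Oracle Database, applications, and the Oracle Clusterware high availability components. In addition, these background processes monitor and manage database operations.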

9.2.1.2 The Oracle High Availability Services Stack

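This section describes the processes that make up the Oracle High Availability Services stack. The list includes the processes on Linux and UNIX operating systems, or the services on Windows.

Cluster Logger Service (ologgerd):

Receives information from all the nodes in the cluster and persists it in a CHM repository-based database. This service runs on only two nodes in a cluster.

System Monitor Service (osysmond):

The monitoring and operating system metric collection service that sends data to the cluster logger service. This service runs on every node in a cluster.

Grid Plug and Play (GPNPD):

Provides access to the Grid Plug and Play profile, and coordinates updates to the profile among the nodes of the cluster to ensure that all of the nodes have the most recent profile.

Grid Interprocess Communication (GIPC):

A support daemon that enables Redundant Interconnect Usage.

Multicast Domain Name Service (mDNS):

Used by Grid Plug and Play to locate profiles in the cluster, and by GNS to perform name resolution. The mDNS process is a background process on Linux and UNIX, and a service on Windows.

Oracle Grid Naming Service (GNS):

Handles requests sent by external DNS servers, performing name resolution for names defined by the cluster.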

Table 1-1 List of Processes and Services Associated with Oracle Clusterware Components

| Oracle Clusterware Component | Linux/UNIX Process | Windows Services | Windows Processes |
|---|---|---|---|
| CRS | crsd.bin (r) | OracleOHService | crsd.exe |
| CSS | ocssd.bin, cssdmonitor, cssdagent | OracleOHService | cssdagent.exe, cssdmonitor.exe, ocssd.exe |
| CTSS | octssd.bin (r) | | octssd.exe |
| EVM | evmd.bin, evmlogger.bin | OracleOHService | evmd.exe |
| GIPC | gipcd.bin | | |
| GNS | gnsd (r) | | gnsd.exe |
| Grid Plug and Play | gpnpd.bin | OracleOHService | gpnpd.exe |
| LOGGER | ologgerd.bin (r) | | ologgerd.exe |
| Master Diskmon | diskmon.bin | | |
| mDNS | mdnsd.bin | mDNSResponder | mDNSResponder.exe |
| Oracle agent | oraagent.bin (11.2), or racgmain and racgimon (11.1) | | oraagent.exe |
| Oracle High Availability Services | ohasd.bin (r) | OracleOHService | ohasd.exe |
| ONS | ons | | ons.exe |
| Oracle root agent | orarootagent (r) | | orarootagent.exe |
| SYSMON | osysmond.bin (r) | | osysmond.exe |

9.2.1.3 Cluster Startup

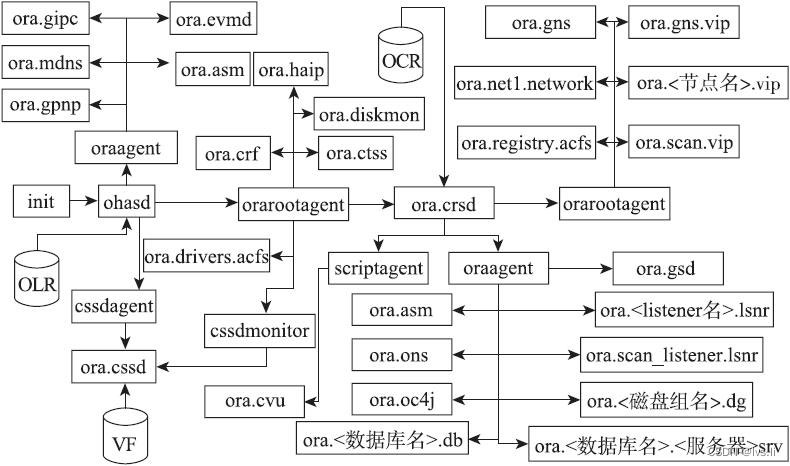

Figure 1-2 illustrates cluster startup.

Description of "Figure 1-2 Cluster Startup"

This figure depicts the startup process for Oracle Clusterware. The INIT process starts ohasd, which, in turn, starts the oraagent, orarootagent, and cssdagent. These processes then carry out the following steps:

- The oraagent starts mdnsd, evmd, Oracle ASM, gpnpd, and gipcd (Grid IPC daemon).

- The orarootagent starts ctssd, the CSSD Monitor, diskmon (Disk Monitor daemon), and crsd. The crsd, in turn, starts another oraagent and another orarootagent. The oraagent starts Oracle Notification Service (ONS and eONS), an Oracle ASM instance (which communicates with Oracle ASM started by the oraagent that ohasd started), the database instance, the listeners, and the SCAN listeners. The orarootagent starts gnsd, the VIPs, the SCAN VIP, and the network resources.

- The cssdagent starts Cluster Synchronization Service (ocssd).

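9.2.2 Basic structural relationships among the cluster management software components

9.3 voting disks

Oracle Clusterware uses voting disk files to determine which nodes are members of a cluster. You can configure voting disks on Oracle ASM, or you can configure voting disks on shared storage.

Oracle Clusterware uses voting disk files to provide fencing and cluster node membership determination. The OCR provides cluster configuration information. You can place the Oracle Clusterware files on either Oracle ASM or on shared common disk storage.

9.4 OCR and OLR

Oracle Clusterware uses the Oracle Cluster Registry (OCR) to store and manage information about the components that Oracle Clusterware controls, such as Oracle RAC databases, listeners, virtual IP addresses (VIPs), services, and any applications. The OCR stores configuration information in a series of key-value pairs in a tree structure.

You must update the OCR through supported utilities such as Oracle Enterprise Manager, the Server Control utility (SRVCTL), the OCR configuration utility (OCRCONFIG), or the Database Configuration Assistant (DBCA).

If the OCR is configured on an Oracle ASM disk group, you should have at least two OCR locations. You should configure the OCR in two independent disk groups. Typically these are the work area and the recovery area.

The OCR is backed up automatically. The crsd.bin process on the OCR master node backs up the OCR every 4 hours and retains backups for up to one week. You can view the OCR backup information with the command ocrconfig -showbackup.

The OLR is a registry similar to the OCR that resides on each node in the cluster, but contains information specific to each node. It contains manageability information about Oracle Clusterware, including dependencies between various services. Oracle High Availability Services uses this information. The OLR is located on local storage on each node in the cluster. Its default location is in the path Grid_home/cdata/host_name.olr, where Grid_home is the Oracle Grid Infrastructure home and host_name is the host name of the node.

The OLR is backed up when the cluster is first installed and configured. After later configuration changes, back it up manually.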

ocrconfig -local -manualbackup

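9.5 Cluster Resources

Resource concepts

Anything that Oracle Clusterware manages is known as a resource.

In 11gR2 clusters, both the OCR and the voting files (VF) are stored in ASM disk groups. ocssd can use the VF discovery string in the gpnp profile to scan the headers of all disks under the matching paths and thereby locate the cluster's voting files.

CRSD, however, can access the OCR only after the ASM instance has started and the disk group holding the OCR has been mounted. At the same time, the ASM instance is itself a cluster application that must be managed by the cluster, and the process that manages cluster applications is CRSD.

In addition, the cluster needs a CRSD-level ASM resource to perform some global operations on the ASM instance, such as configuring local_listener. For these reasons, 11gR2 clusters have two ASM resources: one managed by OHASD and one managed by CRSD. The former manages the ASM instance on the local node; the latter reflects the state of the ASM instance and performs global operations on it.

Resources managed by OHASD (cluster initialization resources)

All resources managed by OHASD are cluster initialization resources, and they are all local resources; that is, the vast majority of their operations are confined to the local node.

View them with crsctl stat res -t -init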

ora.mdnsd

ora.gpnpd

ora.gipcd

ora.evmd

ora.ctssd

ora.cssdmonitor

ora.cssd

ora.crsd

ora.crf

ora.cluster_interconnect.haip

ora.asm

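Resources managed by CRSD (cluster RAC application resources)

View them with crsctl stat res -t

Database (database), instance (instance), service (service), node applications (nodeapps): network (network), Oracle Notification Service (ONS), virtual IP (VIP)

Automatic Storage Management (asm), disk group (diskgroup), listener (listener), Single Client Access Name (SCAN), SCAN listener (scan_listener)

Resources managed by CRSD are divided into Local Resources and Cluster Resources:

Local Resources include (resources that run only locally on a given node):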

ons

network

asm

dg

lsnr

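Cluster Resources include (resources that run cluster-wide; except for the database, only one copy of each runs in the cluster, and they can be relocated between nodes):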

scan-vip

vip

db(inst)

oc4j

cvu

scan_listener

db_service

Resource management tools

The crsctl tool

Use crsctl to start and stop resources and to view and configure attributes such as dependencies and permissions (it covers both OHASD-managed and CRSD-managed resources).

View resource status:

crsctl stat res -t -init ## view cluster initialization resources

crsctl stat res -t

View resource attribute configuration:

crsctl stat res ora.cssd -p -init

crsctl stat res ora.orcl.db -p

Change resource attribute configuration:

crsctl modify res ora.crf -attr "ENABLED=0" -init

crsctl modify res ora.crf -attr "AUTO_START=never" -init

Start and stop the initialization stack:

crsctl start crs

crsctl start crs -excl ## start Oracle Clusterware in exclusive mode

crsctl start crs -excl -nocrs ## start Oracle Clusterware in exclusive mode without starting crsd

crsctl stop crs

crsctl stop crs -f

Start and stop resources:

crsctl start resource ora.crf -init

crsctl stop resource ora.crf -init

crsctl stop resource ora.orcl.db ## for starting and stopping CRSD-managed resources, srvctl is the recommended tool

srvctl start database -d orcl

srvctl stop database -d orcl

The srvctl tool

For CRSD-managed resources, use srvctl to view the current configuration and running status and to modify resources (usually run as the oracle user).

View resource configuration:

srvctl config database -d orcl

srvctl config nodeapps

srvctl config asm

srvctl config listener

srvctl config service -d orcl

View resource running status:

srvctl status database -d orcl

srvctl status nodeapps

srvctl status asm

srvctl status scan

srvctl status scan_listener

srvctl status vip -n rac1

srvctl status listener

Start and stop resources:

srvctl start database -d orcl

srvctl stop database -d orcl

Modify resource configuration:

srvctl modify database -d orcl -r PRIMARY -p +DATA/orcl/spfileorcl.ora -a DATA

Add resources:

srvctl add instance -d orcl -i orcl3 -n rac3

srvctl add service -d orcl -s srv_lvs -r orcl1 -a orcl2

Remove resources:

srvctl stop service -d orcl -s srv_lvs ## a resource must be stopped before it is removed

srvctl remove service -d orcl -s srv_lvs

Relocate resources:

srvctl relocate scan -i 1 -n rac2 ## after the SCAN IP is relocated, the SCAN listener follows it to the same node

srvctl relocate service -d orcl -s srv_lvs -i orcl1 -t orcl2

9.6 Cache Fusion

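To ensure that each Oracle RAC database instance obtains the block that it requires to satisfy a query or transaction, Oracle RAC instances use two processes: the Global Cache Service (GCS) and the Global Enqueue Service (GES). The GCS and GES maintain records of the status of each data file and each cached block using a Global Resource Directory (GRD). The GRD contents are distributed across all of the active instances, which effectively increases the size of the SGA for an Oracle RAC instance.

After one instance caches data, any other instance within the same cluster database can acquire a block image from another instance in the same database, which is faster than reading the block from disk. Therefore, Cache Fusion moves current blocks between instances rather than re-reading the blocks from disk. When a consistent block or a changed block is required on another instance, Cache Fusion transfers the block image directly between the affected instances. Oracle RAC uses the private interconnect for interinstance communication and block transfers. The GES Monitor and the Instance Enqueue Process manage access to Cache Fusion resources and enqueue recovery processing.

The GCS and GES processes, and the GRD, collaborate to enable Cache Fusion.

The LMS process maintains records of the data file statuses and each cached block by recording information in the Global Resource Directory (GRD). The LMS process also controls the flow of messages to remote instances, manages global data block access, and transmits block images between the buffer caches of different instances. This processing is part of the Cache Fusion feature.

LCK0 (Instance Enqueue Process): The LCK0 process manages non-Cache Fusion resource requests such as library and row cache requests.

9.7 SCAN

Single Client Access Name (SCAN): a single network name and its IP addresses, defined in either DNS or GNS, that all clients should use to access the Oracle RAC database.

A cluster has at most three SCAN addresses.

With SCAN, you no longer have to modify your clients when the cluster configuration changes. SCAN also allows clients to use an Easy Connect string to obtain load-balanced and failover connections to the Oracle RAC database.

The database registers with the SCAN listener through the remote listener parameter in the init.ora file. The REMOTE_LISTENER parameter must be set to SCAN:PORT. Do not set it to a TNSNAMES alias with a single address that specifies the SCAN as HOST=SCAN.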

SQL> show parameter listen

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

listener_networks string

local_listener string (ADDRESS=(PROTOCOL=TCP)(HOST=

21.44.1.122)(PORT=1521))

remote_listener string rac-scan:1521
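The SCAN:PORT rule can be checked mechanically against the value reported by `show parameter`. The sketch below is only a syntactic check under stated assumptions: the function name `is_scan_port` is hypothetical, and it tests that the value has the shape `<name>:<port>` without verifying that the name actually resolves to the SCAN.

```shell
#!/bin/sh
# is_scan_port VALUE: succeed when VALUE has the form "<name>:<port>",
# the SCAN:PORT shape required for REMOTE_LISTENER.
# Hypothetical helper; a syntactic check only, it does not resolve the name.
is_scan_port() {
    printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9][A-Za-z0-9.-]*:[0-9]{1,5}$'
}

# Example with the value shown above:
if is_scan_port "rac-scan:1521"; then
    echo "remote_listener uses the SCAN:PORT form"
fi
```

A TNSNAMES alias or a full address descriptor fails this check, which matches the restriction described above.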

[oracle@rac1 ~]$ more /u01/app/11.2.0/grid/network/admin/listener.ora

LISTENER=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))) #line added by Agent

LISTENER_SCAN1=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_SCAN1)))) # line added by Agent

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_SCAN1=ON # line added by Agent

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER=ON # line added by Agent

[oracle@rac1 ~]$ more $ORACLE_HOME/network/admin/tnsnames.ora

# tnsnames.ora Network Configuration File: /u01/app/oracle/product/11.2.0/db_1/network/admin/tnsnames.ora

# Generated by Oracle configuration tools.

ORCL =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac-scan)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

9.8 service

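Oracle Database provides a powerful automatic workload management facility, called services, to enable the enterprise grid vision. Services are entities that you can define in Oracle RAC databases. Services enable you to group database workloads and route the work to the optimal instances that are assigned to process the service. Furthermore, you can use services to define the resources that Oracle Database assigns to process workloads and to monitor workload resources. Applications that you assign to services transparently acquire the defined automatic workload management characteristics, including high availability and load balancing rules. Many Oracle Database features are integrated with services, such as Resource Manager, which enables you to restrict the resources that a service can use within an instance. Some database features are also integrated with Oracle Streams, Advanced Queuing (to achieve queue location transparency), and Oracle Scheduler (to map services to specific job classes).

In Oracle RAC databases, the service performance rules that you configure control the amount of work that Oracle Database allocates to each available instance for that service. As you extend your database by adding nodes, applications, components of applications, and so on, you can add more services.

9.9 srvpool

Server pools enable the cluster administrator to create policies that define how Oracle Clusterware allocates resources. An Oracle RAC policy-managed database runs in a server pool. Oracle Clusterware attempts to keep the required number of servers in the server pool and, therefore, the required number of instances of the Oracle RAC database. A server can be in only one server pool at any time. However, a database can run in multiple server pools. Cluster-managed services run in a server pool, where they are defined as either UNIFORM (active on all instances in the server pool) or SINGLETON (active on only one instance in the server pool).

9.10 CHM

Cluster Health Monitor (CHM): CHM detects and analyzes operating system and cluster resource-related degradation and failures to give users more detail on many Oracle Clusterware and Oracle RAC issues, such as node eviction. The tool continuously tracks operating system resource consumption at the node, process, and device levels. It collects and analyzes cluster-wide data. In real-time mode, when thresholds are met, the tool shows an alert to the user. For root-cause analysis, historical data can be replayed to understand what was happening at the time of failure.

The OCLUMON command-line tool is included with CHM, and you can use it to query the CHM repository to display node-specific metrics for a specified time period. You can also use oclumon to query and print the durations and states for a resource on a node during a specified time period. These states are based on predefined thresholds for each resource metric and are denoted as red, orange, yellow, and green, in decreasing order of criticality. For example, you can query to show how many seconds the CPU on a node named node1 remained in the RED state during the last hour. You can also use OCLUMON to perform miscellaneous administrative tasks, such as changing the debug levels, querying the version of CHM, and changing the metrics database size.

9.11 HAIP (Redundant Interconnect)

You can define multiple interfaces for Redundant Interconnect Usage by classifying the interfaces as private, either during installation or after installation using the oifcfg setif command. When you do, Oracle Clusterware creates from one to four highly available IP (HAIP) addresses (depending on the number of interfaces you define), which Oracle Database and Oracle ASM instances use to ensure highly available and load-balanced communications.

By default, the Oracle software (including Oracle RAC, Oracle ASM, and Oracle ACFS, all 11g Release 2 (11.2.0.2) or later) uses these HAIP addresses for all of its traffic, allowing for load balancing across the provided set of cluster interconnect interfaces. If one of the defined cluster interconnect interfaces fails or becomes non-communicative, then Oracle Clusterware transparently moves the corresponding HAIP address to one of the remaining functional interfaces.

Oracle Clusterware uses at most four interfaces at any given point, regardless of the number of interfaces defined. If one of the interfaces fails, then the HAIP address moves to another one of the configured interfaces in the defined set.

When there is only a single HAIP address and multiple interfaces from which to select, the interface to which the HAIP address moves is no longer the original interface upon which it was configured. Oracle Clusterware selects the interface with the lowest numeric subnet to which to add the HAIP address.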

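9.12 Key Background Processes

9.12.1 GPNP

gpnp stands for Grid Plug and Play, a component introduced in the Oracle 11gR2 cluster management software. Its functionality is implemented by the gpnpd.bin daemon.

Oracle designed gpnp with two main goals:

Goal 1: Store the cluster's basic configuration locally so that enough information is available from local files at cluster startup, without depending entirely on the OCR.

Goal 2: By communicating with mdnsd, identify the nodes in the cluster more flexibly, making the cluster structure more flexible, without having to obtain the node list from the OCR.

The gpnp component consists of two parts: the gpnp wallet and the gpnp profile.

- gpnp wallet: The gpnp wallet stores the signature information of the clients that need to access the gpnp profile. When a client accesses the gpnp profile, it is verified against the signature information in the wallet, ensuring that only designated clients can access the profile. Users do not need to configure this; Oracle initializes the signature information automatically when GI is installed. The gpnp wallet files are located under <GI_home>/gpnp/wallets/peer.

- gpnp profile: The gpnp profile is the key part of the gpnp component. It is an XML file that stores the information required to bootstrap a cluster node.

In other words, all the information GI needs at startup is stored in the gpnp profile. In a sense, the information in the gpnp profile is analogous to the information a database needs when it bootstraps.

The gpnp profile is stored under <GI_home>/gpnp/profiles/peer. You can retrieve it with the gpnptool utility, or display it formatted with an XML tool: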

su - grid

cd $ORACLE_HOME/gpnp/profiles/peer

xmllint --format profile.xml

<?xml version="1.0" encoding="UTF-8"?>

<gpnp:GPnP-Profile xmlns="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:gpnp="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:orcl="http://www.oracle.com/gpnp/2005/11/gpnp-profile" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" Version="1.0" xsi:schemaLocation="http://www.grid-pnp.org/2005/11/gpnp-profile gpnp-profile.xsd" ProfileSequence="4" ClusterUId="39f7ffb93038cf16bff7dc9075fc0649" ClusterName="rac-scan" PALocation="">

<gpnp:Network-Profile>

<gpnp:HostNetwork id="gen" HostName="*">

<gpnp:Network id="net1" IP="21.44.1.0" Adapter="ens32" Use="public"/>

<gpnp:Network id="net2" IP="192.168.201.0" Adapter="ens34" Use="cluster_interconnect"/>

</gpnp:HostNetwork>

</gpnp:Network-Profile>

<orcl:CSS-Profile id="css" DiscoveryString="+asm" LeaseDuration="400"/>

<orcl:ASM-Profile id="asm" DiscoveryString="/dev/asm*" SPFile="+OCR/rac-scan/asmparameterfile/registry.253.1158847285"/>

<ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">

<ds:SignedInfo>

<ds:CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/>

<ds:SignatureMethod Algorithm="http://www.w3.org/2000/09/xmldsig#rsa-sha1"/>

<ds:Reference URI="">

<ds:Transforms>

<ds:Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/>

<ds:Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#">

<InclusiveNamespaces xmlns="http://www.w3.org/2001/10/xml-exc-c14n#" PrefixList="gpnp orcl xsi"/>

</ds:Transform>

</ds:Transforms>

<ds:DigestMethod Algorithm="http://www.w3.org/2000/09/xmldsig#sha1"/>

<ds:DigestValue>yYDRNTk15GyPyDuOmdGkQzFbph4=</ds:DigestValue>

</ds:Reference>

</ds:SignedInfo>

<ds:SignatureValue>dZxz/v5BV1IxLJ/j65xWTZr+DlZhCdEnlEXWOrHYeNzAfpLcJFCcRDiPzwuKoJigt1MZnVCr3pmc6r75rIydIGk5g7pXGZ8cJNuG1MdIImSYokaLnF3s8sHlSeGhcPt68gm11US+8O7tJ8UBlnEHX27GdJg6auD2DJiHWMs379A=</ds:SignatureValue>

</ds:Signature>

</gpnp:GPnP-Profile>
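Most of the profile is signature data; the part usually needed when troubleshooting is the network definition. The sketch below extracts it with plain text tools. It rests on assumptions: the function name `gpnp_networks` is hypothetical, and it expects each `gpnp:Network` element on one line with its attributes in the order `IP`, `Adapter`, `Use`, as in the dump above.

```shell
#!/bin/sh
# gpnp_networks: read a gpnp profile on stdin and print
# "<adapter> <subnet> <use>" for every gpnp:Network element.
# Hypothetical helper; assumes the attribute order IP, Adapter, Use
# seen in the profile above.
gpnp_networks() {
    grep -o '<gpnp:Network [^>]*>' |
    sed -E 's/.*IP="([^"]*)".*Adapter="([^"]*)".*Use="([^"]*)".*/\2 \1 \3/'
}

# Usage as the grid user (not executed here):
#   gpnp_networks < $ORACLE_HOME/gpnp/profiles/peer/profile.xml
```

The result can be compared with `oifcfg getif`, which reports the same interface, subnet, and type assignments.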

9.12.2 CTSS

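Oracle Clusterware 11g Release 2 (11.2) is automatically configured with the Cluster Time Synchronization Service (CTSS). This service provides automatic synchronization of all cluster nodes using the optimal synchronization strategy for the type of cluster you deploy.

If you have an existing cluster synchronization service, such as NTP, then CTSS starts in observer mode. Otherwise, it starts in active mode to ensure that time is synchronized between cluster nodes. CTSS does not cause compatibility issues.

When Oracle Clusterware starts, if CTSS is running in active mode and the time discrepancy is outside the stepping limit (the limit is 24 hours), then CTSS generates an alert in the alert log and exits, and Oracle Clusterware startup fails. You must manually adjust the time of the nodes joining the cluster to synchronize with the cluster, after which Oracle Clusterware can start and CTSS can manage the time for the nodes.

Check the CTSS service status and clock synchronization as follows:

crsctl check ctss

cluvfy comp clocksync -n all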
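The 24-hour stepping limit can be turned into a pre-start check on a node that is about to join. This is a rough sketch, not CTSS's own logic: the function name `ctss_startup_ok` is hypothetical, the offset is assumed to be supplied in whole seconds, and treating exactly 24 hours as acceptable is an assumption.

```shell
#!/bin/sh
# ctss_startup_ok OFFSET_SECONDS: succeed when the absolute clock offset
# between the joining node and the cluster is within the 24-hour stepping
# limit described above.
# Hypothetical helper; the boundary handling (<= 86400 s) is an assumption.
ctss_startup_ok() {
    offset=$1
    if [ "$offset" -lt 0 ]; then
        offset=$(( -offset ))      # work with the absolute value
    fi
    [ "$offset" -le 86400 ]
}

# Example: a node that is one hour behind is within the limit.
if ctss_startup_ok -3600; then
    echo "offset is within the CTSS stepping limit"
fi
```

If the check fails, correct the node's clock manually before starting Oracle Clusterware, as described above.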

9.13 Important Files

/etc/oraInst.loc ## locates the inventory, usually $ORACLE_BASE/../oraInventory

inventory_loc/ContentsXML/inventory.xml ## inventory configuration file

/etc/oratab ## database and ASM instances installed on the system, with their HOME information

/etc/oracle/ocr.loc ## locates the OCR

/etc/oracle/olr.loc ## locates the OLR

/u01/app/11.2.0/grid/cdata/rac1.olr ## the OLR file (binary); view it with strings, or dump it to text with ocrdump

/u01/app/11.2.0/grid/crs/install/crsconfig_params ## cluster configuration parameter file, read by root.sh

/u01/app/11.2.0/grid/gpnp/profiles/peer/profile.xml ## gpnp profile

more /etc/oraInst.loc

inventory_loc=/u01/app/oraInventory

inst_group=oinstall

more /etc/oratab

#Backup file is /u01/app/oracle/product/11.2.0/db_1/srvm/admin/oratab.bak.rac1 line added by Agent

#

# This file is used by ORACLE utilities. It is created by root.sh

# and updated by either Database Configuration Assistant while creating

# a database or ASM Configuration Assistant while creating ASM instance.

# A colon, ':', is used as the field terminator. A new line terminates

# the entry. Lines beginning with a pound sign, '#', are comments.

#

# Entries are of the form:

# $ORACLE_SID:$ORACLE_HOME:<N|Y>:

#

# The first and second fields are the system identifier and home

# directory of the database respectively. The third filed indicates

# to the dbstart utility that the database should , "Y", or should not,

# "N", be brought up at system boot time.

#

# Multiple entries with the same $ORACLE_SID are not allowed.

#

#

+ASM1:/u01/app/11.2.0/grid:N # line added by Agent

orcl:/u01/app/oracle/product/11.2.0/db_1:N # line added by Agent
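Because oratab entries are colon-separated, the SID-to-home mapping is easy to extract for scripting. A minimal sketch follows; the function name `oratab_entries` is hypothetical, and it relies only on the `$ORACLE_SID:$ORACLE_HOME:<N|Y>` format that the file's own comments describe.

```shell
#!/bin/sh
# oratab_entries: read oratab-format text on stdin and print "<sid> <home>"
# for each entry, skipping comment lines and blank lines.
# Hypothetical helper based on the SID:HOME:<N|Y> format described in oratab.
oratab_entries() {
    awk -F: '
        /^[[:space:]]*#/ || NF < 2 { next }   # comments and blank lines
        { print $1, $2 }
    '
}

# Usage (not executed here):
#   oratab_entries < /etc/oratab
```

On the node shown above this would list +ASM1 with the Grid home and orcl with the database home.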

more /u01/app/oraInventory/ContentsXML/inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2013, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.4.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/app/11.2.0/grid" TYPE="O" IDX="1" CRS="true">

<NODE_LIST>

<NODE NAME="rac1"/>

<NODE NAME="rac2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraDb11g_home1" LOC="/u01/app/oracle/product/11.2.0/db_1" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="rac1"/>

<NODE NAME="rac2"/>

</NODE_LIST>

</HOME>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

</INVENTORY>

more /etc/oracle/ocr.loc
ocrconfig_loc=+OCR
local_only=FALSE

more /etc/oracle/olr.loc
olrconfig_loc=/u01/app/11.2.0/grid/cdata/rac1.olr
crs_home=/u01/app/11.2.0/grid
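oraInst.loc, ocr.loc and olr.loc are all plain key=value pointer files, so a script can look up a single entry the same way in each. A minimal sketch follows; `get_loc_value` is a hypothetical helper name, and it assumes keys contain no regular-expression metacharacters.

```shell
#!/bin/sh
# get_loc_value FILE KEY: print the value of the first KEY=value line in FILE.
# Hypothetical helper for the pointer files shown above.
get_loc_value() {
    sed -n "s/^$2=//p" "$1" | head -n 1
}
```

For example, `get_loc_value /etc/oracle/olr.loc olrconfig_loc` would print the OLR path, and `get_loc_value /etc/oraInst.loc inventory_loc` the inventory directory.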

ocrdump -local my_olr.txt

su - grid

cd $ORACLE_HOME/gpnp/profiles/peer

xmllint --format profile.xml

<?xml version="1.0" encoding="UTF-8"?>

<gpnp:GPnP-Profile xmlns="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:gpnp="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:orcl="http://www.oracle.com/gpnp/2005/11/gpnp-profile" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" Version="1.0" xsi:schemaLocation="http://www.grid-pnp.org/2005/11/gpnp-profile gpnp-profile.xsd" ProfileSequence="4" ClusterUId="39f7ffb93038cf16bff7dc9075fc0649" ClusterName="rac-scan" PALocation="">

<gpnp:Network-Profile>

<gpnp:HostNetwork id="gen" HostName="*">

<gpnp:Network id="net1" IP="21.44.1.0" Adapter="ens32" Use="public"/>

<gpnp:Network id="net2" IP="192.168.201.0" Adapter="ens34" Use="cluster_interconnect"/>

</gpnp:HostNetwork>

</gpnp:Network-Profile>

<orcl:CSS-Profile id="css" DiscoveryString="+asm" LeaseDuration="400"/>

<orcl:ASM-Profile id="asm" DiscoveryString="/dev/asm*" SPFile="+OCR/rac-scan/asmparameterfile/registry.253.1158847285"/>

<ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">

<ds:SignedInfo>

<ds:CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/>

<ds:SignatureMethod Algorithm="http://www.w3.org/2000/09/xmldsig#rsa-sha1"/>

<ds:Reference URI="">

<ds:Transforms>

<ds:Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/>

<ds:Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#">

<InclusiveNamespaces xmlns="http://www.w3.org/2001/10/xml-exc-c14n#" PrefixList="gpnp orcl xsi"/>

</ds:Transform>

</ds:Transforms>

<ds:DigestMethod Algorithm="http://www.w3.org/2000/09/xmldsig#sha1"/>

<ds:DigestValue>yYDRNTk15GyPyDuOmdGkQzFbph4=</ds:DigestValue>

</ds:Reference>

</ds:SignedInfo>

<ds:SignatureValue>dZxz/v5BV1IxLJ/j65xWTZr+DlZhCdEnlEXWOrHYeNzAfpLcJFCcRDiPzwuKoJigt1MZnVCr3pmc6r75rIydIGk5g7pXGZ8cJNuG1MdIImSYokaLnF3s8sHlSeGhcPt68gm11US+8O7tJ8UBlnEHX27GdJg6auD2DJiHWMs379A=</ds:SignatureValue>

</ds:Signature>

</gpnp:GPnP-Profile>
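The part of the profile most often checked is the network section: which adapter carries which subnet in which role. The sketch below pulls those three fields out of the formatted profile. `list_gpnp_networks` is a hypothetical helper name, and it assumes the input has already been through `xmllint --format` (one element per line, attributes in the order shown above).

```shell
#!/bin/sh
# list_gpnp_networks [FILE]: print "ADAPTER SUBNET USE" for each gpnp:Network element.
# Hypothetical helper; reads FILE if given, otherwise standard input.
list_gpnp_networks() {
    sed -n 's/.*<gpnp:Network id="\([^"]*\)" IP="\([^"]*\)" Adapter="\([^"]*\)" Use="\([^"]*\)".*/\3 \2 \4/p' ${1:+"$1"}
}
```

For example: `xmllint --format profile.xml | list_gpnp_networks`.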

[root@rac2 ~]# more /u01/app/11.2.0/grid/crs/install/crsconfig_params
# $Header: has/install/crsconfig/crsconfig_params.sbs /st_has_11.2.0/3 2011/03/21 22:55:23 ksviswan Exp $
#
# crsconfig.lib
#
# Copyright (c) 2000, 2011, Oracle and/or its affiliates. All rights reserved.
#
# NAME
# crsconfig_params.sbs - Installer variables required for root config
#
# DESCRIPTION
# crsconfig_param -
#
# MODIFIED (MM/DD/YY)
# ksviswan 03/08/11 - Backport ksviswan_febbugs2 from main
# ksviswan 02/03/11 - Backport ksviswan_janbugs4 from main
# dpham 05/20/10 - XbranchMerge dpham_bug-8609692 from st_has_11.2.0.1.0
# dpham 03/17/10 - Add TZ variable (9462081
# sujkumar 01/31/10 - CRF_HOME as ORACLE_HOME
# sujkumar 01/05/10 - Double quote args
# dpham 11/25/09 - Remove NETCFGJAR_NAME, EWTJAR_NAME, JEWTJAR_NAME,
# SHAREJAR_NAME, HELPJAR_NAME, and EMBASEJAR_NAME
# sukumar 11/04/09 - Fix CRFHOME. Add CRFHOME2 for Windows.
# anutripa 10/18/09 - Add CRFHOME for IPD/OS
# dpham 03/10/09 - Add ORACLE_BASE
# dpham 11/19/08 - Add ORA_ASM_GROUP
# khsingh 11/13/08 - revert ORA_ASM_GROUP for automated sh
# dpham 11/03/08 - Add ORA_ASM_GROUP
# ppallapo 09/22/08 - Add OCRID and CLUSTER_GUID
# dpham 09/10/08 - set OCFS_CONFIG to sl_diskDriveMappingList
# srisanka 05/13/08 - remove ORA_CRS_HOME, ORA_HA_HOME
# ysharoni 05/07/08 - NETWORKS fmt change s_networkList->s_finalIntrList
# srisanka 04/14/08 - ASM_UPGRADE param
# hkanchar 04/02/08 - Add OCR and OLRLOC for windows
# ysharoni 02/15/08 - bug 6817375
# ahabbas 02/28/08 - temporarily remove the need to instantiate the
# OCFS_CONFIG value
# srisanka 02/12/08 - add OCFS_CONFIG param
# srisanka 01/15/08 - separate generic and OSD params
# jachang 01/15/08 - Prepare ASM diskgroup parameter (commented out)
# ysharoni 12/27/07 - Static pars CSS_LEASEDURATION and ASM_SPFILE
# yizhang 12/10/07 - Add SCAN_NAME and SCAN_PORT
# ysharoni 12/14/07 - gpnp work, cont-d
# jachang 11/30/07 - Adding votedisk discovery string
# ysharoni 11/27/07 - Add GPnP params
# srisanka 10/18/07 - add params and combine crsconfig_defs.sh with this
# file
# khsingh 12/08/06 - add HA parameters
# khsingh 12/08/06 - add HA_HOME
# khsingh 11/25/06 - Creation
# ==========================================================
# Copyright (c) 2001, 2011, Oracle and/or its affiliates. All rights reserved.
#
# crsconfig_params.sbs -
#
# ==========================================================
SILENT=false
ORACLE_OWNER=grid
ORA_DBA_GROUP=oinstall
ORA_ASM_GROUP=oinstall
LANGUAGE_ID=AMERICAN_AMERICA.AL32UTF8
TZ=Asia/Shanghai
ISROLLING=true
REUSEDG=false
ASM_AU_SIZE=1
USER_IGNORED_PREREQ=true
ORACLE_HOME=/u01/app/11.2.0/grid
ORACLE_BASE=/u01/app/grid
OLD_CRS_HOME=
JREDIR=/u01/app/11.2.0/grid/jdk/jre/
JLIBDIR=/u01/app/11.2.0/grid/jlib
VNDR_CLUSTER=false
OCR_LOCATIONS=NO_VAL
CLUSTER_NAME=rac-scan
HOST_NAME_LIST=rac1,rac2
NODE_NAME_LIST=rac1,rac2
PRIVATE_NAME_LIST=
VOTING_DISKS=NO_VAL
#VF_DISCOVERY_STRING=%s_vfdiscoverystring%
ASM_UPGRADE=false
ASM_SPFILE=
ASM_DISK_GROUP=OCR
ASM_DISCOVERY_STRING=/dev/asm*
ASM_DISKS=/dev/asm-diskb
ASM_REDUNDANCY=EXTERNAL
CRS_STORAGE_OPTION=1
CSS_LEASEDURATION=400
CRS_NODEVIPS='rac1-vip/255.255.255.0/ens32,rac2-vip/255.255.255.0/ens32'
NODELIST=rac1,rac2
NETWORKS="ens32"/21.44.1.0:public,"ens34"/192.168.201.0:cluster_interconnect
SCAN_NAME=rac-scan
SCAN_PORT=1521
GPNP_PA=
OCFS_CONFIG=
# GNS consts
GNS_CONF=false
GNS_ADDR_LIST=
GNS_DOMAIN_LIST=
GNS_ALLOW_NET_LIST=
GNS_DENY_NET_LIST=
GNS_DENY_ITF_LIST=
#### Required by OUI add node
NEW_HOST_NAME_LIST=
NEW_NODE_NAME_LIST=
NEW_PRIVATE_NAME_LIST=
NEW_NODEVIPS='rac1-vip/255.255.255.0/ens32,rac2-vip/255.255.255.0/ens32'
############### OCR constants
# GPNPCONFIGDIR is handled differently in dev (T_HAS_WORK for all)
# GPNPGCONFIGDIR in dev expands to T_HAS_WORK_GLOBAL
GPNPCONFIGDIR=$ORACLE_HOME
GPNPGCONFIGDIR=$ORACLE_HOME
OCRLOC=
OLRLOC=
OCRID=
CLUSTER_GUID=
CLSCFG_MISSCOUNT=
#### IPD/OS
CRFHOME="/u01/app/11.2.0/grid"
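The NETWORKS line packs several interfaces into one value in the form "interface"/subnet:role, separated by commas. As an illustration of that format, the sketch below unpacks it into one line per interface. `list_crs_networks` is a hypothetical helper name.

```shell
#!/bin/sh
# list_crs_networks FILE: print "INTERFACE SUBNET ROLE" for each NETWORKS entry.
# Hypothetical helper; FILE is a crsconfig_params-style file.
list_crs_networks() {
    sed -n 's/^NETWORKS=//p' "$1" |   # keep only the value of NETWORKS
    tr ',' '\n' |                     # one entry per line
    sed -e 's/"//g' -e 's|/| |' -e 's/:/ /'   # "if"/subnet:role -> if subnet role
}
```

For example: `list_crs_networks /u01/app/11.2.0/grid/crs/install/crsconfig_params`.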

10、Adding and removing nodes

See:

add&del_node.txt

11、Important maintenance scripts

runSSHSetup.sh

Tool that sets up SSH equivalence for the grid and oracle users. Location: $ORACLE_HOME/oui/bin/runSSHSetup.sh

grid user: /u01/app/11.2.0/grid/oui/bin/runSSHSetup.sh
oracle user: /u01/app/oracle/product/11.2.0/db_1/oui/bin/runSSHSetup.sh

su - grid
cd $ORACLE_HOME/oui/bin
./runSSHSetup.sh -user grid -hosts "rac1 rac2 rac3" -advanced -noPromptPassphrase

su - oracle
cd $ORACLE_HOME/oui/bin
./runSSHSetup.sh -user oracle -hosts "rac1 rac2 rac3" -advanced -noPromptPassphrase

Test connectivity. On every node, run the following commands as both grid and oracle:

su - grid
ssh rac1 date
ssh rac2 date
ssh rac3 date

su - oracle
ssh rac1 date
ssh rac2 date
ssh rac3 date
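The manual `ssh <node> date` checks can be wrapped in a loop that fails instead of prompting when equivalence is not in place. This is a minimal sketch: `check_ssh_equivalence` and the `SSH_CMD` override are hypothetical names introduced here, while `BatchMode` and `ConnectTimeout` are standard OpenSSH client options.

```shell
#!/bin/sh
# check_ssh_equivalence NODE...: report whether each node accepts a
# password-less ssh login for the current user. Returns 1 if any node fails.
# SSH_CMD is a hypothetical override (for dry runs); it defaults to ssh.
check_ssh_equivalence() {
    rc=0
    for node in "$@"; do
        # BatchMode=yes makes ssh fail rather than ask for a password.
        if ${SSH_CMD:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$node" date >/dev/null 2>&1; then
            echo "$node OK"
        else
            echo "$node FAILED"
            rc=1
        fi
    done
    return $rc
}
```

Run it once as grid and once as oracle on every node, for example `check_ssh_equivalence rac1 rac2 rac3`.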

addNode.sh

The script content is the same for grid and oracle. It is the tool for adding nodes to Oracle Clusterware and Oracle RAC. Location: $ORACLE_HOME/oui/bin/addNode.sh

Script content:

[root@rac1 bin]# more /u01/app/11.2.0/grid/oui/bin/addNode.sh

#!/bin/sh

OHOME=/u01/app/11.2.0/grid

INVPTRLOC=$OHOME/oraInst.loc

EXIT_CODE=0

ADDNODE="$OHOME/oui/bin/runInstaller -addNode -invPtrLoc $INVPTRLOC ORACLE_HOME=$OHOME $*"

if [ "$IGNORE_PREADDNODE_CHECKS" = "Y" -o ! -f "$OHOME/cv/cvutl/check_nodeadd.pl" ]

then

$ADDNODE

EXIT_CODE=$?;

else

CHECK_NODEADD="$OHOME/perl/bin/perl $OHOME/cv/cvutl/check_nodeadd.pl -pre ORACLE_HOME=$OHOME $*"

$CHECK_NODEADD

EXIT_CODE=$?;

if [ $EXIT_CODE -eq 0 ]

then

$ADDNODE

EXIT_CODE=$?;

fi

fi

exit $EXIT_CODE ;

Usage example

su - grid

cd /u01/app/11.2.0/grid/oui/bin

export IGNORE_PREADDNODE_CHECKS=Y

./addNode.sh -silent "CLUSTER_NEW_NODES={rac3}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={rac3-vip}" "CLUSTER_NEW_PRIVATE_NODE_NAMES={rac3-priv}"

su - oracle

cd $ORACLE_HOME/oui/bin

export IGNORE_PREADDNODE_CHECKS=Y

./addNode.sh -silent "CLUSTER_NEW_NODES={rac3}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={rac3-vip}"
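The quoted arguments in the examples above follow a fixed pattern, so they can be generated from a node list. The sketch below is illustrative only: `build_addnode_args` is a hypothetical helper name, it assumes the VIP host name is `<node>-vip` as in this document's /etc/hosts, and the comma-separated form for several nodes is an assumption to check against the Oracle documentation before use.

```shell
#!/bin/sh
# build_addnode_args NODE...: print the CLUSTER_NEW_NODES and
# CLUSTER_NEW_VIRTUAL_HOSTNAMES arguments for the given new nodes.
# Hypothetical helper; assumes VIP names of the form <node>-vip.
build_addnode_args() {
    nodes= vips=
    for n in "$@"; do
        nodes="${nodes:+$nodes,}$n"
        vips="${vips:+$vips,}$n-vip"
    done
    printf '"CLUSTER_NEW_NODES={%s}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={%s}"\n' "$nodes" "$vips"
}
```

`build_addnode_args rac3` prints the same two arguments used in the oracle-user example above.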

runInstaller

The script content is the same for grid and oracle. Location: $ORACLE_HOME/oui/bin/runInstaller

[grid@rac1 bin]$ ./runInstaller -help

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2024-01-28_10-50-32PM. Please wait ...

Oracle Universal Installer, Version 11.2.0.4.0 Production

Copyright (C) 1999, 2013, Oracle. All rights reserved.

Usage:

runInstaller [-options] [(<CommandLineVariable=Value>)*]

Where options include:

-clusterware oracle.crs,<crs version>

Version of Cluster ready services installed.

-crsLocation <Path>

Used only for cluster installs, specifies the path to the crs home location. Specifying this overrides CRS information obtained from central inventory.

-invPtrLoc <full path of oraInst.loc>

Unix only. To point to a different inventory location. The orainst.loc file contains:

inventory_loc=<location of central inventory>

inst_group=<>

-jreLoc <location>

Path where Java Runtime Environment is installed. OUI cannot be run without it.

-logLevel <level>

To filter log messages that have a lesser priority level than <level>. Valid options are: severe, warning, info, config, fine, finer, finest, basic, general, detailed, trace. The use of basic, general, detailed, trace is deprecated.

-paramFile <location of file>

Specify location of oraparam.ini file to be used by OUI.

-responseFile <Path>

Specifies the response file and path to use.

-sourceLoc <location of products.xml>

To specify the shiphome location.

-patchsetBugListFile <Path>

Specifies the patchsetBugList file and path to use.

-globalvarxml <location of the xml containing global variable definitions>

OUI will pick up global variables from this location instead of stage

-addNode

For adding node(s) to the installation.

-attachHome