
Requirements

- Page views of the hands-on courses so far today

- Page views of the hands-on courses referred from search engines so far today

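Introduction to user behavior logs

User behavior log: all the behavior data generated each time a user visits the website (visits, browsing, searches, clicks...)

Also called user behavior trails or traffic logs

Typical log sources are Nginx and Ajax

Log content:

1) System attributes of the visit: operating system, browser, etc.

2) Visit characteristics: the URL clicked, the URL the user came from (referer), time spent on the page, etc.

3) Visit information: session_id, client IP (and therefore the city), etc.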

Python log generator: generating test logs on the server and writing them to a file

generate_log.py

```python
#coding=UTF-8
import random
import time

url_paths = [
    "class/112.html",
    "class/128.html",
    "class/145.html",
    "class/146.html",
    "class/131.html",
    "class/130.html",
    "learn/821",
    "course/list"
]

ip_slices = [132, 156, 124, 10, 29, 167, 143, 187, 30, 46, 55, 63, 72, 87, 98, 168]

http_referers = [
    "http://www.baidu.com/s?wd={query}",
    "https://www.sogou.com/web?query={query}",
    "http://cn.bing.com/search?q={query}",
    "https://search.yahoo.com/search?p={query}",
]

search_keyword = [
    "Spark SQL实战",
    "Hadoop基础",
    "Storm实战",
    "Spark Streaming实战",
    "大数据面试"
]

status_codes = ["200", "404", "500"]


def sample_url():
    return random.choice(url_paths)


def sample_ip():
    # Four distinct values from ip_slices, joined into a dotted IPv4-style address
    parts = random.sample(ip_slices, 4)
    return ".".join(str(item) for item in parts)


def sample_referer():
    # About 80% of requests have no referer ("-"); the rest come from a search engine
    if random.uniform(0, 1) > 0.2:
        return "-"
    refer_str = random.choice(http_referers)
    query_str = random.choice(search_keyword)
    return refer_str.format(query=query_str)


def sample_status_code():
    return random.choice(status_codes)


def generate_log(count=10):
    time_str = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
    # "w" truncates the file on every run; tail -F (used by Flume later) keeps following it
    with open("/home/hadoop/data/project/logs/access.log", "w") as f:
        while count >= 1:
            # Tab-separated: ip, time, "request line", status code, referer
            query_log = "{ip}\t{local_time}\t\"GET /{url} HTTP/1.1\"\t{status_code}\t{referer}".format(
                url=sample_url(), ip=sample_ip(), referer=sample_referer(),
                status_code=sample_status_code(), local_time=time_str)
            f.write(query_log + "\n")
            count -= 1


if __name__ == '__main__':
    generate_log(100)
```

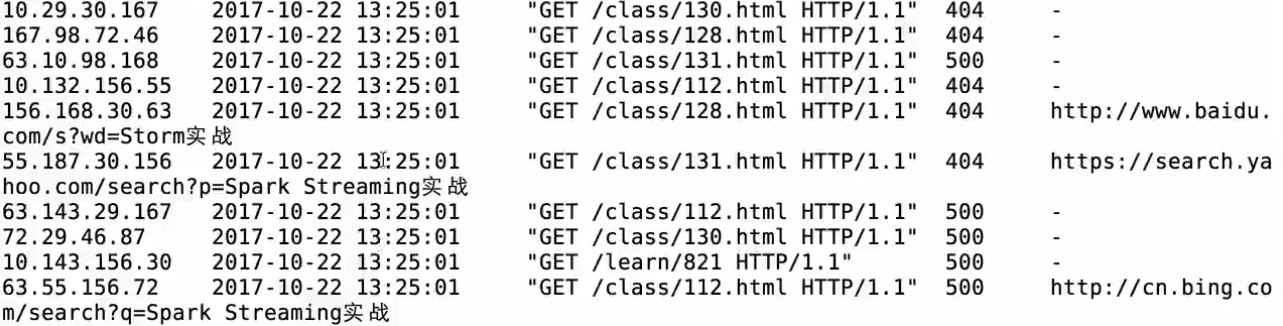

Generated logs

Running the log generator on a schedule:

Linux crontab

Online tool: http://tool.lu/crontab

Crontab expression for running once every minute: `*/1 * * * *`
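In a cron minute field, `*/N` means "every N-th minute starting from minute 0", so `*/1` behaves the same as `*`. The sketch below expands a minute field into the minutes it matches; `expand_minute_field` is a hypothetical helper that only handles `*`, `*/N` and a single number (real cron also accepts lists and ranges such as `1,15` or `10-20/2`).

```python
from typing import List


def expand_minute_field(field: str) -> List[int]:
    """Return the minutes (0-59) matched by a simplified cron minute field."""
    if field == "*":
        return list(range(60))
    if field.startswith("*/"):
        step = int(field[2:])           # "*/15" -> step of 15 minutes
        return list(range(0, 60, step))
    return [int(field)]                 # a single fixed minute, e.g. "5"


if __name__ == "__main__":
    print(len(expand_minute_field("*/1")))  # 60: every minute
    print(expand_minute_field("*/15"))      # [0, 15, 30, 45]
```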

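log_generator.sh

```shell
python ****/generate_log.py
```

Make the script executable (`chmod u+x log_generator.sh`) so cron can run it, then add the job with `crontab -e`:

```
*/1 * * * * /home/hadoop/data/project/log_generator.sh
```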

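Connecting the Flume, Kafka and Spark Streaming pipeline

Collecting the logs in real time with Flume

Feeding the logs produced by the Python log generator into Flume

streaming_project.conf

Component choice: access.log ==> console output

- source: exec
- channel: memory
- sink: logger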

```properties
exec-memory-logger.sources = exec-source
exec-memory-logger.sinks = logger-sink
exec-memory-logger.channels = memory-channel

exec-memory-logger.sources.exec-source.type = exec
exec-memory-logger.sources.exec-source.command = tail -F /home/hadoop/data/project/logs/access.log
exec-memory-logger.sources.exec-source.shell = /bin/sh -c

exec-memory-logger.channels.memory-channel.type = memory

exec-memory-logger.sinks.logger-sink.type = logger

exec-memory-logger.sources.exec-source.channels = memory-channel
exec-memory-logger.sinks.logger-sink.channel = memory-channel
```
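Conceptually, this agent wires an exec source into a memory channel that a logger sink drains. The sketch below is a toy, single-threaded model of that flow in plain Python; `MemoryChannel`, `exec_source` and `logger_sink` are hypothetical names, not Flume APIs. Real Flume runs the source and sink in separate threads and moves events through the channel in transactions.

```python
from collections import deque
from typing import Deque, Iterable, List, Optional


class MemoryChannel:
    """Toy stand-in for a memory channel: a bounded in-memory FIFO of events."""

    def __init__(self, capacity: int = 100) -> None:
        self.capacity = capacity
        self.events: Deque[str] = deque()

    def put(self, event: str) -> None:
        if len(self.events) >= self.capacity:
            raise RuntimeError("channel is full")
        self.events.append(event)

    def take(self) -> Optional[str]:
        return self.events.popleft() if self.events else None


def exec_source(lines: Iterable[str], channel: MemoryChannel) -> None:
    """Toy exec source: each line printed by the command (here, tail -F) becomes one event."""
    for line in lines:
        channel.put(line.rstrip("\n"))


def logger_sink(channel: MemoryChannel) -> List[str]:
    """Toy logger sink: drain the channel and log every event to the console."""
    logged: List[str] = []
    event = channel.take()
    while event is not None:
        print("Event:", event)
        logged.append(event)
        event = channel.take()
    return logged


if __name__ == "__main__":
    channel = MemoryChannel()
    exec_source(["line 1\n", "line 2\n"], channel)
    logger_sink(channel)
```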

Start Flume to test it:

```shell
flume-ng agent \
--name exec-memory-logger \
--conf $FLUME_HOME/conf \
--conf-file /home/hadoop/data/project/streaming_project.conf \
-Dflume.root.logger=INFO,console
```

Connecting Flume to Kafka

Logs ==> Flume ==> Kafka

Start ZooKeeper: `./zkServer.sh start`

Start the Kafka server:

```shell
kafka-server-start.sh -daemon /home/hadoop/app/kafka_2.11-0.9.0.0/config/server.properties
```

Modify the Flume configuration so that the Flume sink writes the data to Kafka

streaming_project2.conf

```properties
exec-memory-kafka.sources = exec-source
exec-memory-kafka.sinks = kafka-sink
exec-memory-kafka.channels = memory-channel

exec-memory-kafka.sources.exec-source.type = exec
exec-memory-kafka.sources.exec-source.command = tail -F /home/hadoop/data/project/logs/access.log
exec-memory-kafka.sources.exec-source.shell = /bin/sh -c

exec-memory-kafka.channels.memory-channel.type = memory

# brokerList and topic are the Flume 1.6 KafkaSink property names;
# newer Flume versions replace them with kafka.bootstrap.servers and kafka.topic
exec-memory-kafka.sinks.kafka-sink.type = org.apache.flume.sink.kafka.KafkaSink
exec-memory-kafka.sinks.kafka-sink.brokerList = hadoop000:9092
exec-memory-kafka.sinks.kafka-sink.topic = streamingtopic
exec-memory-kafka.sinks.kafka-sink.batchSize = 5
exec-memory-kafka.sinks.kafka-sink.requiredAcks = 1

exec-memory-kafka.sources.exec-source.channels = memory-channel
exec-memory-kafka.sinks.kafka-sink.channel = memory-channel
```
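With `batchSize = 5`, the Kafka sink processes events in groups of up to five per batch: larger batches improve throughput, smaller ones lower latency. The grouping idea can be sketched as follows; `batches` is a hypothetical helper that illustrates the concept, not Flume's actual sink code.

```python
from typing import Iterable, Iterator, List


def batches(events: Iterable[str], batch_size: int = 5) -> Iterator[List[str]]:
    """Group events into lists of at most batch_size; the last batch may be smaller."""
    batch: List[str] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch          # a full batch is handed off at once
            batch = []
    if batch:
        yield batch              # leftover events form a final, smaller batch


if __name__ == "__main__":
    for b in batches([f"event-{i}" for i in range(12)]):
        print(len(b), b)
```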

Start Flume:

```shell
flume-ng agent \
--name exec-memory-kafka \
--conf $FLUME_HOME/conf \
--conf-file /home/hadoop/data/project/streaming_project2.conf \
-Dflume.root.logger=INFO,console
```

Start a Kafka console consumer to check that the logs arrive correctly:

```shell
kafka-console-consumer.sh --zookeeper hadoop000:2181 --topic streamingtopic
```

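Consuming the Kafka data with Spark Streaming

Requirement analysis

Feature 1: page views of the hands-on courses so far today

Key: yyyyMMdd + courseid

Store the statistics in a database:

Spark Streaming writes the statistics into the database.

The visualization front end looks up the statistics in the database by yyyyMMdd and courseid and displays them.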

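Which database should store the statistics?

RDBMS: MySQL, Oracle...

| day | course_id | click_count |
|---|---|---|
| 20171111 | 1 | 10 |
| 20171111 | 2 | 10 |

When the next batch of data arrives (this is cumbersome):

20171111 (day) + 1 (course_id) ==> read the stored click_count and add the next batch's count ==> write the sum back to the database

NoSQL: HBase, Redis...

HBase: a single API call does the job, which is very convenient (recommended)

20171111 + 1 ==> click_count + the next batch's count

This is one of the reasons this course uses HBase.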
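The difference between the two approaches is the number of client round trips per update: the RDBMS style reads the old total and writes the new one (two requests, and not atomic across concurrent writers unless wrapped in a transaction), while an HBase counter increment does the read and add on the server in one request. In the HBase Java client that single call is `incrementColumnValue`; whether this course's DAO uses it is an assumption. The in-memory `CountingStore` below is a hypothetical stand-in that just counts requests.

```python
from typing import Dict


class CountingStore:
    """In-memory stand-in for a remote database that counts client round trips."""

    def __init__(self) -> None:
        self.rows: Dict[str, int] = {}
        self.round_trips = 0

    def get(self, rowkey: str) -> int:
        self.round_trips += 1
        return self.rows.get(rowkey, 0)

    def put(self, rowkey: str, value: int) -> None:
        self.round_trips += 1
        self.rows[rowkey] = value

    def increment(self, rowkey: str, amount: int) -> int:
        """Server-side add: read and write happen inside one request."""
        self.round_trips += 1
        self.rows[rowkey] = self.rows.get(rowkey, 0) + amount
        return self.rows[rowkey]


def read_modify_write(store: CountingStore, rowkey: str, batch_count: int) -> None:
    # RDBMS style: read the old total, add the batch count, write the new total back
    store.put(rowkey, store.get(rowkey) + batch_count)


if __name__ == "__main__":
    rdbms, hbase = CountingStore(), CountingStore()
    for batch_count in (10, 5):  # two micro-batches for the same day and course
        read_modify_write(rdbms, "20171111_1", batch_count)
        hbase.increment("20171111_1", batch_count)
    print(rdbms.rows, rdbms.round_trips)  # {'20171111_1': 15} 4
    print(hbase.rows, hbase.round_trips)  # {'20171111_1': 15} 2
```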

Prerequisites: the following must be running:

- HDFS
- ZooKeeper
- HBase

HBase table design

Create the table:

```
create 'imooc_course_clickcount', 'info'
```

Rowkey design:

day_courseid
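Putting the day first matters because HBase keeps rows sorted lexicographically by rowkey, so all of one day's counters sit next to each other and the front end can fetch them with a prefix scan on `20171111_`. A minimal sketch, assuming the `yyyy-MM-dd HH:mm:ss` timestamps written by the generator; `click_rowkey` and `rows_for_day` are hypothetical helpers, and the sorted-list filter only emulates what a prefix scan returns.

```python
from datetime import datetime
from typing import Iterable, List


def click_rowkey(time_str: str, course_id: int) -> str:
    """Build the day_courseid rowkey, e.g. ('2017-11-11 08:30:00', 1) -> '20171111_1'."""
    day = datetime.strptime(time_str, "%Y-%m-%d %H:%M:%S").strftime("%Y%m%d")
    return f"{day}_{course_id}"


def rows_for_day(rowkeys: Iterable[str], day: str) -> List[str]:
    """Emulate a prefix scan: rows come back in sorted rowkey order, filtered by the day prefix."""
    prefix = f"{day}_"
    return [key for key in sorted(rowkeys) if key.startswith(prefix)]


if __name__ == "__main__":
    print(click_rowkey("2017-11-11 08:30:00", 1))
    print(rows_for_day(["20171112_1", "20171111_2", "20171111_1"], "20171111"))
```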

Question: how do we operate HBase from Scala?

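Feature 2: Feature 1, restricted to traffic referred from search engines

HBase table design

```
create 'imooc_course_search_clickcount','info'
```

Rowkey design: again driven by the business requirement

20171111 + search + 1 (day + search engine + course id)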
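The search engine part of the rowkey can be taken from the referer's host name, and requests whose referer is `-` do not count for Feature 2. A minimal sketch, assuming the host (e.g. `www.baidu.com`) serves as the "search" segment and `_` as the separator; both are illustrative choices, and `search_click_rowkey` is a hypothetical helper.

```python
from typing import Optional
from urllib.parse import urlparse


def search_engine_host(referer: str) -> Optional[str]:
    """Return the referer host, e.g. 'www.baidu.com', or None when there is no referer ('-')."""
    if referer == "-":
        return None
    host = urlparse(referer).netloc
    return host or None


def search_click_rowkey(day: str, referer: str, course_id: int) -> Optional[str]:
    """Build day_host_courseid, or None for requests not referred by a search engine."""
    host = search_engine_host(referer)
    if host is None:
        return None
    return f"{day}_{host}_{course_id}"


if __name__ == "__main__":
    print(search_click_rowkey("20171111", "http://www.baidu.com/s?wd=Hadoop基础", 1))
```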

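Receiving the data in the Spark application and implementing the requirements

The relevant Maven dependencies were listed in an earlier article.

Time utility class: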

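```scala
package com.imooc.spark.project.utils

import java.util.Date

import org.apache.commons.lang3.time.FastDateFormat

/**
 * Date/time utility class
 */
object DateUtils {

  // Input format written by the log generator, e.g. 2017-10-22 14:46:01
  val YYYYMMDDHHMMSS_FORMAT = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")

  // Output format, e.g. 20171022144601
  val TARGET_FORMAT = FastDateFormat.getInstance("yyyyMMddHHmmss")

  // Parse a "yyyy-MM-dd HH:mm:ss" string into epoch milliseconds
  def getTime(time: String): Long = {
    YYYYMMDDHHMMSS_FORMAT.parse(time).getTime
  }

  // Convert "yyyy-MM-dd HH:mm:ss" to "yyyyMMddHHmmss" (despite the name, seconds are kept)
  def parseToMinute(time: String): String = {
    TARGET_FORMAT.format(new Date(getTime(time)))
  }

  def main(args: Array[String]): Unit = {
    println(parseToMinute("2017-10-22 14:46:01")) // prints 20171022144601
  }
}
```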

HBase utility class written in Java

```java
package com.imooc.spark.project.utils;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

/**
 * HBase utility class: Java utility classes are best wrapped as singletons
 */
public class HBaseUtils {

    private HBaseAdmin admin = null;
    private Configuration configuration = null;

    /**
     * Private constructor
     */
    private HBaseUtils() {
        // HBaseConfiguration.create() also loads hbase-default.xml and hbase-site.xml
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.zookeeper.quorum", "hadoop000:2181");
        configuration.set("hbase.rootdir", "hdfs://hadoop000:8020/hbase");

        try {
            admin = new HBaseAdmin(configuration);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private static HBaseUtils instance = null;

    public static synchronized HBaseUtils getInstance() {
        if (null == instance) {
            instance = new HBaseUtils();
        }
        return instance;
    }

    /**
     * Get an HTable instance by table name
     */
    public HTable getTable(String tableName) {
        HTable table = null;
        try {
            table = new HTable(configuration, tableName);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return table;
    }

    /**
     * Add one record to an HBase table
     * @param tableName HBase table name
     * @param rowkey    rowkey of the HBase table
     * @param cf        column family of the HBase table
     * @param column    column of the HBase table
     * @param value     value to write into the HBase table
     */
    public void put(String tableName, String rowkey, String cf, String column, String value) {
        HTable table = getTable(tableName);
        if (table == null) {
            return; // the table could not be opened; the error was already printed
        }

        Put put = new Put(Bytes.toBytes(rowkey));
        put.add(Bytes.toBytes(cf), Bytes.toBytes(column), Bytes.toBytes(value));

        try {
            table.put(put);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                table.close(); // release the table's resources after each write
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public static void main(String[] args) {
        //HTable table = HBaseUtils.getInstance().getTable("imooc_course_clickcount");
        //System.out.println(table.getName().getNameAsString());

        String tableName = "imooc_course_clickcount";
        String rowkey = "20171111_88";
        String cf = "info";
        String column = "click_count";
        String value = "2";

        HBaseUtils.getInstance().put(tableName, rowkey, cf, column, value);
    }
}
```
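Logically, an HBase cell is addressed by table, rowkey and `column family:qualifier`, and its value is raw bytes. `HBaseUtils.put` stores `"2"` via `Bytes.toBytes(String)`, i.e. its UTF-8 bytes. If the same column is later used as a counter (for example with an increment call), HBase expects an 8-byte big-endian long and rejects shorter cells. The dict-based sketch below models that rule; `put` and `increment` here are hypothetical helpers, and cell versions and timestamps are omitted.

```python
import struct
from typing import Dict

# rowkey -> "cf:qualifier" -> value bytes (simplified HBase logical model, no versions)
Table = Dict[bytes, Dict[bytes, bytes]]


def put(table: Table, rowkey: str, cf: str, column: str, value: bytes) -> None:
    """Store raw bytes in one cell, like a Put with a single column."""
    table.setdefault(rowkey.encode("utf-8"), {})[f"{cf}:{column}".encode("utf-8")] = value


def increment(table: Table, rowkey: str, cf: str, column: str, amount: int) -> int:
    """Counter semantics: the stored cell must be an 8-byte big-endian long."""
    cells = table.setdefault(rowkey.encode("utf-8"), {})
    key = f"{cf}:{column}".encode("utf-8")
    old = cells.get(key, struct.pack(">q", 0))   # a missing cell counts as 0
    if len(old) != 8:
        raise ValueError(f"cell is not a long, it is {len(old)} bytes wide")
    new = struct.unpack(">q", old)[0] + amount
    cells[key] = struct.pack(">q", new)
    return new


if __name__ == "__main__":
    counters: Table = {}
    print(increment(counters, "20171111_1", "info", "click_count", 10))
    print(increment(counters, "20171111_1", "info", "click_count", 5))
```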

Domain entity classes

```scala
package com.imooc.spark.project.domain

/**
 * Cleaned log record
 * @param ip         IP address of the request
 * @param time       time of the request
 * @param courseId   id of the hands-on course that was requested
 * @param statusCode HTTP status code of the request
 * @param referer    referer of the request
 */
case class ClickLog(ip: String, time: String, courseId: Int, statusCode: Int, referer: String)
```
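Cleaning turns each tab-separated generator line into a `ClickLog`. A minimal sketch, assuming that only `/class/<id>.html` URLs count as hands-on course pages (so `/learn/821` and `/course/list` are dropped) and that malformed lines are skipped; `parse_line` is a hypothetical helper and the `NamedTuple` simply mirrors the Scala case class.

```python
import re
from typing import NamedTuple, Optional

COURSE_URL = re.compile(r"^/class/(\d+)\.html$")


class ClickLog(NamedTuple):
    ip: str
    time: str
    course_id: int
    status_code: int
    referer: str


def parse_line(line: str) -> Optional[ClickLog]:
    """Clean one generator line; return None for malformed lines or non-course URLs."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) != 5:
        return None
    ip, time_str, request, status, referer = fields
    parts = request.strip('"').split(" ")   # 'GET /class/112.html HTTP/1.1'
    if len(parts) != 3:
        return None
    match = COURSE_URL.match(parts[1])
    if match is None:
        return None                          # e.g. /learn/821 or /course/list
    return ClickLog(ip, time_str, int(match.group(1)), int(status), referer)


if __name__ == "__main__":
    print(parse_line('132.156.124.10\t2017-11-11 08:30:00\t"GET /class/112.html HTTP/1.1"\t200\t-'))
```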

```scala
package com.imooc.spark.project.domain

/**
 * Entity class for hands-on course click counts
 * @param day_course  the HBase rowkey, e.g. 20171111_1
 * @param click_count total number of visits for 20171111_1
 */
case class CourseClickCount(day_course: String, click_count: Long)
```

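```scala
package com.imooc.spark.project.domain

/**
 * Entity class for hands-on course click counts referred from search engines
 * @param day_search_course the HBase rowkey: day + search engine + course id
 * @param click_count       total number of visits for that rowkey
 */
case class CourseSearchClickCount(day_search_course: String, click_count: Long)
```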
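Within one micro-batch, the cleaned records are typically mapped to `(rowkey, 1)` pairs and summed per key (a `map` followed by `reduceByKey` in Spark Streaming) before the per-batch totals are added to HBase. The plain-Python sketch below shows the same aggregation with `collections.Counter`; `count_batch` is a hypothetical helper, and the input is assumed to be `(time, course_id)` pairs taken from `ClickLog` records.

```python
from collections import Counter
from typing import Iterable, List, NamedTuple, Tuple


class CourseClickCount(NamedTuple):
    day_course: str
    click_count: int


def count_batch(clicks: Iterable[Tuple[str, int]]) -> List[CourseClickCount]:
    """clicks: (time 'yyyy-MM-dd HH:mm:ss', course_id) pairs from one micro-batch."""
    # "map" step: build the day_courseid key for each click; Counter does the "reduce" step
    counts = Counter(
        f"{time_str[:10].replace('-', '')}_{course_id}" for time_str, course_id in clicks
    )
    return [CourseClickCount(key, n) for key, n in sorted(counts.items())]


if __name__ == "__main__":
    batch = [("2017-11-11 08:30:00", 112), ("2017-11-11 09:00:00", 112), ("2017-11-11 09:00:00", 128)]
    print(count_batch(batch))
```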

DAO classes for the two requirements

```scala
package com.imooc.spark.project.dao

import com.imooc.spark.project.domain.CourseClickCount
import com.imooc.spark.project.utils.HBaseUtils
import org.apache.hadoop.hbase.client.Get
import org.apache.hadoop.hbase.util.Bytes

import scala.collection.mutable.ListBuffer

/**
 * Hands-on course click counts: data access layer
 */
object CourseClickCountDAO {

  val tableName = "imooc_course_clickcount"
  val cf = "info"
  val qualifer =
```
