1. Problem

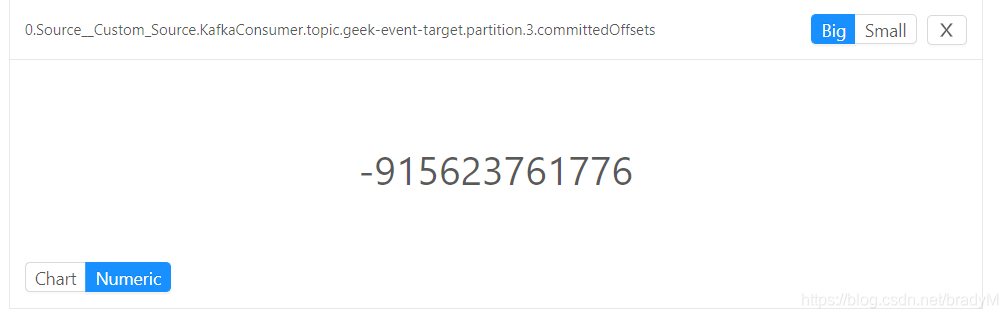

在一个flink任务提交后,我去 WebUi去查看偏移量的指标,发现值竟然是个负数(如下图):

这个指标官网上给出的释义是:对于每个分区,最后一次成功提交到Kafka的偏移量;所以不管怎么说,这个值都不是正常的。

2. Conclusion

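The conclusion (and the fix) first: the value appears because checkpointing is not enabled for the job. Once checkpointing is turned on (for example via `StreamExecutionEnvironment#enableCheckpointing`), the metric is updated with real offsets.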

3. Details

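But why does this metric turn negative when checkpointing is not enabled? To find out, we need to dig into the source code.

First, locate this class: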

org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher

    /**
     * For each partition, register a new metric group to expose current offsets and committed offsets.
     * Per-partition metric groups can be scoped by user variables {@link KafkaConsumerMetricConstants#OFFSETS_BY_TOPIC_METRICS_GROUP}
     * and {@link KafkaConsumerMetricConstants#OFFSETS_BY_PARTITION_METRICS_GROUP}.
     *
     * <p>Note: this method also registers gauges for deprecated offset metrics, to maintain backwards compatibility.
     *
     * @param consumerMetricGroup The consumer metric group
     * @param partitionOffsetStates The partition offset state holders, whose values will be used to update metrics
     */
    private void registerOffsetMetrics(
            MetricGroup consumerMetricGroup,
            List<KafkaTopicPartitionState<KPH>> partitionOffsetStates) {

        for (KafkaTopicPartitionState<KPH> ktp : partitionOffsetStates) {
            MetricGroup topicPartitionGroup = consumerMetricGroup
                .addGroup(OFFSETS_BY_TOPIC_METRICS_GROUP, ktp.getTopic())
                .addGroup(OFFSETS_BY_PARTITION_METRICS_GROUP, Integer.toString(ktp.getPartition()));

            topicPartitionGroup.gauge(CURRENT_OFFSETS_METRICS_GAUGE, new OffsetGauge(ktp, OffsetGaugeType.CURRENT_OFFSET));
            topicPartitionGroup.gauge(COMMITTED_OFFSETS_METRICS_GAUGE, new OffsetGauge(ktp, OffsetGaugeType.COMMITTED_OFFSET));

            legacyCurrentOffsetsMetricGroup.gauge(getLegacyOffsetsMetricsGaugeName(ktp), new OffsetGauge(ktp, OffsetGaugeType.CURRENT_OFFSET));
            legacyCommittedOffsetsMetricGroup.gauge(getLegacyOffsetsMetricsGaugeName(ktp), new OffsetGauge(ktp, OffsetGaugeType.COMMITTED_OFFSET));
        }
    }

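Inside the registerOffsetMetrics method, this is the line we care about:

    topicPartitionGroup.gauge(COMMITTED_OFFSETS_METRICS_GAUGE, new OffsetGauge(ktp, OffsetGaugeType.COMMITTED_OFFSET));

Clearly, the committed offset that the gauge reports is held by the ktp object. Stepping into it, we find that it is of type KafkaTopicPartitionState: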

    // Constructor of KafkaTopicPartitionState
    public KafkaTopicPartitionState(KafkaTopicPartition partition, KPH kafkaPartitionHandle) {
        this.partition = partition;
        this.kafkaPartitionHandle = kafkaPartitionHandle;
        this.offset = KafkaTopicPartitionStateSentinel.OFFSET_NOT_SET;
        this.committedOffset = KafkaTopicPartitionStateSentinel.OFFSET_NOT_SET;
    }

    // Constant defined in KafkaTopicPartitionStateSentinel
    /** Magic number that defines an unset offset. */
    public static final long OFFSET_NOT_SET = -915623761776L;

Here we can see that committedOffset, the field behind the metric we are after, is initialized to this negative sentinel value from the very start.
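To make the effect concrete, here is a minimal, self-contained sketch of the same pattern. It is not Flink code: `PartitionStateSketch` and `committedOffsetGauge` are hypothetical names, and a plain `Supplier<Long>` stands in for Flink's gauge. Only the sentinel value is taken from the source above.

```java
import java.util.function.Supplier;

// Hypothetical, simplified stand-in for KafkaTopicPartitionState (not the Flink class).
final class PartitionStateSketch {
    // Same value as KafkaTopicPartitionStateSentinel.OFFSET_NOT_SET shown above.
    static final long OFFSET_NOT_SET = -915623761776L;

    // Starts out as the sentinel, exactly like the constructor above.
    private volatile long committedOffset = OFFSET_NOT_SET;

    long getCommittedOffset() {
        return committedOffset;
    }

    void setCommittedOffset(long offset) {
        this.committedOffset = offset;
    }

    // A gauge simply reads the field every time the metric is reported.
    Supplier<Long> committedOffsetGauge() {
        return this::getCommittedOffset;
    }
}

final class SentinelGaugeDemo {
    public static void main(String[] args) {
        PartitionStateSketch state = new PartitionStateSketch();
        Supplier<Long> gauge = state.committedOffsetGauge();

        // Nothing has called setCommittedOffset yet, so the sentinel is reported.
        System.out.println("before commit: " + gauge.get());

        // After a commit path updates the field, the gauge reports the real offset.
        state.setCommittedOffset(42L);
        System.out.println("after commit:  " + gauge.get());
    }
}
```

The point of the sketch is that the gauge has no logic of its own: whatever sits in the field is what the web UI shows, so a field that is never updated keeps reporting the sentinel.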

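At this point we know where the negative value in the web UI comes from. But why is the offset we commit never assigned to committedOffset? To answer that, we have to look at the code that commits offsets, which lives in this class: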

org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase

    public final void notifyCheckpointComplete(long checkpointId) throws Exception {
        if (!running) {
            LOG.debug("notifyCheckpointComplete() called on closed source");
            return;
        }

        final AbstractFetcher<?, ?> fetcher = this.kafkaFetcher;
        if (fetcher == null) {
            LOG.debug("notifyCheckpointComplete() called on uninitialized source");
            return;
        }

        if (offsetCommitMode == OffsetCommitMode.ON_CHECKPOINTS) {
            // only one commit operation must be in progress
            if (LOG.isDebugEnabled()) {
                LOG.debug("Consumer subtask {} committing offsets to Kafka/ZooKeeper for checkpoint {}.",
                    getRuntimeContext().getIndexOfThisSubtask(), checkpointId);
            }

            try {
                final int posInMap = pendingOffsetsToCommit.indexOf(checkpointId);
                if (posInMap == -1) {
                    LOG.warn("Consumer subtask {} received confirmation for unknown checkpoint id {}",
                        getRuntimeContext().getIndexOfThisSubtask(), checkpointId);
                    return;
                }

                @SuppressWarnings("unchecked")
                Map<KafkaTopicPartition, Long> offsets =
                    (Map<KafkaTopicPartition, Long>) pendingOffsetsToCommit.remove(posInMap);

                // remove older checkpoints in map
                for (int i = 0; i < posInMap; i++) {
                    pendingOffsetsToCommit.remove(0);
                }

                if (offsets == null || offsets.size() == 0) {
                    LOG.debug("Consumer subtask {} has empty checkpoint state.", getRuntimeContext().getIndexOfThisSubtask());
                    return;
                }

                fetcher.commitInternalOffsetsToKafka(offsets, offsetCommitCallback);
            } catch (Exception e) {
                if (running) {
                    throw e;
                }
                // else ignore exception if we are no longer running
            }
        }
    }

The method above contains one decisive check: only when the offset commit mode is ON_CHECKPOINTS does it go on to call commitInternalOffsetsToKafka:

    if (offsetCommitMode == OffsetCommitMode.ON_CHECKPOINTS)
    //......
    fetcher.commitInternalOffsetsToKafka(offsets, offsetCommitCallback);
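Before the commit call, notifyCheckpointComplete also does some bookkeeping: it looks up the offsets recorded for the completed checkpoint and discards that entry together with all older ones. The following is a rough, self-contained model of that step only. `PendingOffsetsSketch` and its method names are hypothetical, partitions are reduced to plain strings, and two parallel lists stand in for the ordered map used by the connector.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical model of the pending-checkpoint bookkeeping (not the Flink implementation).
final class PendingOffsetsSketch {
    // Checkpoint ids and their offset snapshots in insertion order, oldest first.
    private final List<Long> checkpointIds = new ArrayList<>();
    private final List<Map<String, Long>> snapshots = new ArrayList<>();

    // Called when a checkpoint snapshot is taken: remember the offsets at that moment.
    void onSnapshot(long checkpointId, Map<String, Long> offsets) {
        checkpointIds.add(checkpointId);
        snapshots.add(offsets);
    }

    // Called when a checkpoint completes. Returns the offsets to commit,
    // or null when the checkpoint id is unknown.
    Map<String, Long> onCheckpointComplete(long checkpointId) {
        int pos = checkpointIds.indexOf(checkpointId);
        if (pos == -1) {
            return null;
        }
        Map<String, Long> offsets = snapshots.get(pos);
        // Drop the completed checkpoint and every older one; they will never be committed.
        checkpointIds.subList(0, pos + 1).clear();
        snapshots.subList(0, pos + 1).clear();
        return offsets;
    }

    int pendingCount() {
        return checkpointIds.size();
    }
}
```

In this model, completing checkpoint 2 after snapshots 1, 2 and 3 returns the offsets of checkpoint 2 and leaves only checkpoint 3 pending. Without checkpointing there are no snapshots and no completion notifications, so this path never produces anything to commit.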

Stepping into commitInternalOffsetsToKafka, we find that it in turn calls a method named doCommitInternalOffsetsToKafka:

    public final void commitInternalOffsetsToKafka(
            Map<KafkaTopicPartition, Long> offsets,
            @Nonnull KafkaCommitCallback commitCallback) throws Exception {
        // Ignore sentinels. They might appear here if snapshot has started before actual offsets values
        // replaced sentinels
        doCommitInternalOffsetsToKafka(filterOutSentinels(offsets), commitCallback);
    }
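The comment in commitInternalOffsetsToKafka mentions that sentinel values are filtered out before committing. A minimal sketch of what such a filter might look like is shown below. `SentinelFilterSketch` is a hypothetical class, partitions are reduced to strings, and treating every negative value as a sentinel is an assumption made for this sketch (valid Kafka offsets are never negative), not a quote from the connector.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper illustrating sentinel filtering (not the Flink implementation).
final class SentinelFilterSketch {
    // Same value as OFFSET_NOT_SET in the source shown earlier.
    static final long OFFSET_NOT_SET = -915623761776L;

    // Assumption: real offsets are >= 0, so any negative value is a placeholder.
    static boolean isSentinel(long offset) {
        return offset < 0;
    }

    // Returns a copy of the map that only contains real offsets.
    static Map<String, Long> filterOutSentinels(Map<String, Long> offsets) {
        Map<String, Long> result = new HashMap<>();
        for (Map.Entry<String, Long> entry : offsets.entrySet()) {
            if (!isSentinel(entry.getValue())) {
                result.put(entry.getKey(), entry.getValue());
            }
        }
        return result;
    }
}
```

Filtering first means a partition that has not yet read any record is simply skipped, rather than having the placeholder value committed to Kafka.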

Execution then lands in the doCommitInternalOffsetsToKafka method of org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher:

    protected void doCommitInternalOffsetsToKafka(
            Map<KafkaTopicPartition, Long> offsets,
            @Nonnull KafkaCommitCallback commitCallback) throws Exception {

        @SuppressWarnings("unchecked")
        List<KafkaTopicPartitionState<TopicPartition>> partitions = subscribedPartitionStates();

        Map<TopicPartition, OffsetAndMetadata> offsetsToCommit = new HashMap<>(partitions.size());

        for (KafkaTopicPartitionState<TopicPartition> partition : partitions) {
            Long lastProcessedOffset = offsets.get(partition.getKafkaTopicPartition());
            if (lastProcessedOffset != null) {
                checkState(lastProcessedOffset >= 0, "Illegal offset value to commit");

                // committed offsets through the KafkaConsumer need to be 1 more than the last processed offset.
                // This does not affect Flink's checkpoints/saved state.
                long offsetToCommit = lastProcessedOffset + 1;

                offsetsToCommit.put(partition.getKafkaPartitionHandle(), new OffsetAndMetadata(offsetToCommit));
                partition.setCommittedOffset(offsetToCommit);
            }
        }
        // ... (the rest of the method is not shown in this excerpt)
    }

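The key line in this method is:

    partition.setCommittedOffset(offsetToCommit);

This is where committedOffset is finally overwritten with offsetToCommit, replacing the initial sentinel value. With that, the mystery is solved.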
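The loop in doCommitInternalOffsetsToKafka can be reduced to the following self-contained sketch. `CommitSketch` is a hypothetical class and partitions are plain strings. It keeps the two details that matter here: the committed offset is the last processed offset plus one, and only partitions that appear in the checkpointed offsets get their committedOffset updated.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified model of the commit loop (not the Flink implementation).
final class CommitSketch {
    static final long OFFSET_NOT_SET = -915623761776L;

    // committedOffset per subscribed partition, i.e. the value a gauge would report.
    final Map<String, Long> committedOffsets = new HashMap<>();

    CommitSketch(String... subscribedPartitions) {
        for (String partition : subscribedPartitions) {
            committedOffsets.put(partition, OFFSET_NOT_SET);
        }
    }

    // Returns the offsets that would be handed to the Kafka client for committing.
    Map<String, Long> commit(Map<String, Long> lastProcessedOffsets) {
        Map<String, Long> offsetsToCommit = new HashMap<>();
        for (Map.Entry<String, Long> state : committedOffsets.entrySet()) {
            Long lastProcessed = lastProcessedOffsets.get(state.getKey());
            if (lastProcessed != null) {
                if (lastProcessed < 0) {
                    throw new IllegalStateException("Illegal offset value to commit");
                }
                // Kafka expects the offset of the next record to read, hence the +1.
                long offsetToCommit = lastProcessed + 1;
                offsetsToCommit.put(state.getKey(), offsetToCommit);
                // This is the update that replaces the sentinel in the metric.
                state.setValue(offsetToCommit);
            }
        }
        return offsetsToCommit;
    }
}
```

For example, committing a last processed offset of 41 for one partition reports 42 for that partition, while any partition missing from the map keeps the sentinel.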

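To summarize:

When Flink is notified that a checkpoint has completed, it checks the current offset commit mode:

    if (offsetCommitMode == OffsetCommitMode.ON_CHECKPOINTS)

If checkpointing is not enabled, this branch is never taken, so committedOffset is never updated and keeps its initial value of -915623761776L.

If checkpointing is enabled, execution eventually reaches

    partition.setCommittedOffset(offsetToCommit);

which updates committedOffset, the field behind the metric. That is why the value shown in the web UI is negative when checkpointing is not configured.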
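How the commit mode itself is chosen is not shown in the excerpts above. The sketch below models my understanding of that decision. The enum constants mirror the names in OffsetCommitMode, but `CommitModeSketch`, `CommitModeDemo`, and the parameter names are hypothetical, so verify the real logic against the connector version you run.

```java
// Hypothetical mirror of the connector's commit modes (names follow OffsetCommitMode).
enum CommitModeSketch { DISABLED, ON_CHECKPOINTS, KAFKA_PERIODIC }

final class CommitModeDemo {
    // Assumed decision table: checkpointing takes precedence over Kafka's auto-commit setting.
    static CommitModeSketch fromConfiguration(
            boolean enableAutoCommit,
            boolean commitOnCheckpoints,
            boolean checkpointingEnabled) {
        if (checkpointingEnabled) {
            return commitOnCheckpoints ? CommitModeSketch.ON_CHECKPOINTS : CommitModeSketch.DISABLED;
        }
        return enableAutoCommit ? CommitModeSketch.KAFKA_PERIODIC : CommitModeSketch.DISABLED;
    }

    // Only the checkpoint-driven path reaches partition.setCommittedOffset(...).
    static boolean updatesCommittedOffsetMetric(CommitModeSketch mode) {
        return mode == CommitModeSketch.ON_CHECKPOINTS;
    }

    public static void main(String[] args) {
        CommitModeSketch withoutCheckpoints = fromConfiguration(true, true, false);
        CommitModeSketch withCheckpoints = fromConfiguration(true, true, true);
        System.out.println(withoutCheckpoints + " updates metric: "
            + updatesCommittedOffsetMetric(withoutCheckpoints));
        System.out.println(withCheckpoints + " updates metric: "
            + updatesCommittedOffsetMetric(withCheckpoints));
    }
}
```

Under this assumption, a job without checkpointing may still commit offsets to Kafka through the client's periodic auto-commit, but that path bypasses setCommittedOffset, so the Flink metric stays at the sentinel.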
