https://gitee.com/mindspore/docs/blob/master/tutorials/source_zh_cn/advanced/mixed_precision.ipynb

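Automatic Mixed Precision

Mixed-precision training is a strategy that uses different numerical precisions for different operations of a neural network during training. Some operations, such as conv and matmul, are insensitive to numerical precision, so running them at lower precision yields a clear speedup. Others, such as log and softmax, have inputs and outputs that differ greatly in magnitude and usually need to stay at higher precision to keep the results correct.

Current AI accelerators usually provide dedicated hardware units for compute-intensive, precision-insensitive operations, such as Tensor Cores on NVIDIA GPUs and the Cube unit on Ascend NPUs. Networks in which conv, matmul, and similar operations dominate typically see a large training speedup.

The mindspore.amp module provides convenient automatic mixed-precision interfaces, so users can obtain a training speedup on different hardware backends with a few simple calls. The following sections briefly introduce the principles of mixed-precision computation and then walk through MindSpore's automatic mixed-precision usage with examples.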

Principles of Mixed-Precision Computation

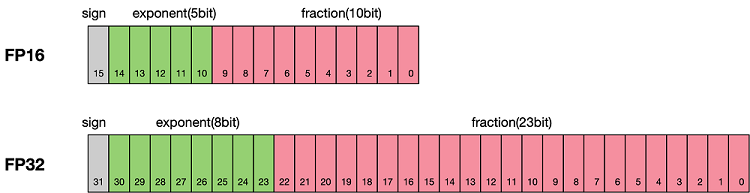

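Floating-point data types fall mainly into double precision (FP64), single precision (FP32), and half precision (FP16). During neural network training, single precision (FP32) is generally the default type for model weights and other parameters. Before turning to mixed-precision training, here is a brief review of floating-point data types.

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) defines the double-precision (FP64), single-precision (FP32), and half-precision (FP16) formats, each made up of three bit fields: sign, exponent, and fraction. FP64 is stored in 8 bytes (64 bits), FP32 in 4 bytes (32 bits), and FP16 in 2 bytes (16 bits), as shown in the figure:

As the figure shows, FP16 takes half the storage of FP32, and FP32 in turn takes half the storage of FP64. Computing in FP16 therefore has the following advantages:

- Lower memory usage: FP16 is half as wide as FP32, so weights and other parameters take half the memory. The memory saved can hold a larger model or be used to train with more data.

- Higher computational efficiency: on dedicated AI accelerators such as Huawei Atlas training series products and the Atlas 200/300/500 inference product series, or NVIDIA GPUs of the Volta architecture, FP16 operations execute faster than FP32 operations.

- Faster communication: in distributed training, and especially in large-model training, communication overhead limits overall training performance. Fewer bits to transmit means better communication performance, shorter waits, and faster data flow.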
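The storage figures above can be checked with Python's standard struct module, whose `e`, `f`, and `d` format codes correspond to IEEE 754 half, single, and double precision. This is only an illustrative sketch: the parameter count is a made-up number and is not taken from any model in this tutorial.

```python
import struct

# Size in bytes of one value in each IEEE 754 format.
BYTES_PER_VALUE = {
    "FP16": struct.calcsize("<e"),
    "FP32": struct.calcsize("<f"),
    "FP64": struct.calcsize("<d"),
}

def weight_memory_mib(num_params: int, dtype: str) -> float:
    """Memory needed to store `num_params` values of `dtype`, in MiB."""
    return num_params * BYTES_PER_VALUE[dtype] / 2**20

num_params = 100_000_000  # hypothetical parameter count, for illustration only
for dtype in ("FP64", "FP32", "FP16"):
    print(f"{dtype}: {weight_memory_mib(num_params, dtype):.1f} MiB")
```

Halving the bytes per value halves both the memory needed for the weights and the volume of data exchanged when those values are communicated between devices.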

However, FP16 also introduces some problems:

- Data overflow: FP16 can represent magnitudes in the range $[5.9\times10^{-8}, 65504]$, while FP32 covers $[1.4\times10^{-45}, 3.4\times10^{38}]$. The FP16 range is far narrower, so replacing FP32 with FP16 can cause overflow and underflow. Deep learning requires the gradients (first derivatives) of the weights, which are often much smaller than the weights themselves, so underflow is the more common problem.

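- Rounding error: when a backward gradient is very small, FP32 can usually represent it, but after conversion to FP16 the value may be finer than the minimum spacing between representable numbers in its interval, and it is forcibly rounded. For example, 0.00006666666 is represented normally in FP32 but becomes approximately 0.000067 after conversion to FP16.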
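The three failure modes described above can be reproduced with the standard library alone, since the struct format code `e` is IEEE 754 half precision. The helper `to_fp16` below is a hypothetical name introduced for this sketch; mapping out-of-range values to infinity is an assumption that mimics what IEEE 754 hardware does, because struct itself raises an error instead.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest FP16 value; out-of-range magnitudes become inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        # struct refuses to pack finite values beyond the FP16 range;
        # IEEE 754 hardware would produce infinity instead.
        return float("inf") if x > 0 else float("-inf")

overflowed = to_fp16(70000.0)       # above the FP16 maximum of 65504
underflowed = to_fp16(1e-8)         # below the smallest positive FP16 value
rounded = to_fp16(0.00006666666)    # falls between two adjacent FP16 values

print(overflowed, underflowed, rounded)
```

The first value becomes infinity, the second collapses to zero, and the third is moved to the nearest representable FP16 number.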

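Therefore, while mixed precision brings faster training and memory savings, the problems introduced by FP16 have to be addressed. Loss scaling is the solution to FP16 underflow. The main idea is to multiply the loss value by a chosen factor when it is computed. By the chain rule, the gradients are scaled up by the same factor. They are then scaled back down by that factor before the optimizer updates the weights, which avoids underflow.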
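A small numeric sketch of this idea, using only the standard library: a gradient that vanishes when stored in FP16 survives if it is scaled first and unscaled afterwards in higher precision. The gradient value and the scale factor are hypothetical, and Python's double-precision floats stand in for FP32 here.

```python
import struct

def fp16(x: float) -> float:
    """Round-trip a value through FP16 (valid for in-range inputs)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

grad = 1e-8              # hypothetical gradient, too small for FP16
loss_scale = 2.0 ** 16   # hypothetical power-of-two scale factor

lost = fp16(grad)                  # stored directly: underflows to zero
scaled = fp16(grad * loss_scale)   # stored after scaling: survives in FP16
recovered = scaled / loss_scale    # unscaled in higher precision

print(lost, scaled, recovered)
```

A power-of-two scale is a convenient choice in this sketch because multiplying and dividing by it changes only the exponent, so the scaling itself adds no rounding error.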

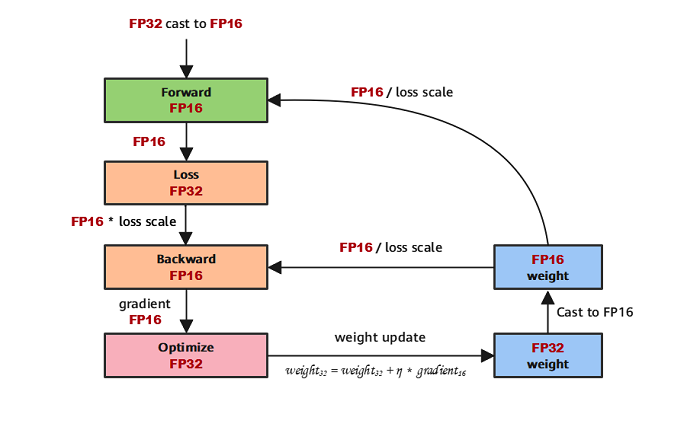

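Based on the principles above, a typical mixed-precision computation flow, shown in the figure, is as follows:

- Parameters are stored in FP32;

- In the forward pass, when an FP16 operator is encountered, its inputs and parameters are cast from FP32 to FP16 for computation;

- The loss layer is set to compute in FP32;

- In the backward pass, the loss is first multiplied by the loss scale value, so that small backward gradients do not underflow;

- FP16 parameters take part in the gradient computation, and the results are cast back to FP32;

- The gradients are divided by the loss scale value to undo the scaling;

- The gradients are checked for overflow. If overflow is found, the update is skipped; otherwise the optimizer updates the original parameters in FP32.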
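The steps above can be traced on a toy problem. The sketch below is not MindSpore code: it fits the scalar model y = w * x in plain Python, uses a hypothetical helper `to_fp16` to emulate FP16 rounding, and lets Python's double-precision floats stand in for FP32. The data, learning rate, and scale values are all made up for illustration.

```python
import math
import struct

def to_fp16(x: float) -> float:
    """Round x to FP16, mapping out-of-range magnitudes to infinity."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        return math.copysign(math.inf, x)

def mixed_precision_step(w, xs, ys, lr=0.1, loss_scale=1024.0):
    """One SGD step for the toy model y = w * x; returns (w, loss, updated)."""
    n = len(xs)
    # Forward: the master weight and the inputs are cast to FP16.
    w16 = to_fp16(w)
    preds = [to_fp16(w16 * to_fp16(x)) for x in xs]
    # The loss is evaluated in higher precision.
    errs = [p - y for p, y in zip(preds, ys)]
    loss = sum(e * e for e in errs) / n
    # Backward: gradients of the scaled loss, rounded to FP16 along the way.
    dpreds = [to_fp16(loss_scale * 2.0 * e / n) for e in errs]
    grad16 = to_fp16(sum(d * to_fp16(x) for d, x in zip(dpreds, xs)))
    # Cast back to higher precision and undo the scaling.
    grad = grad16 / loss_scale
    # Skip the update if the gradient overflowed.
    if not math.isfinite(grad):
        return w, loss, False
    return w - lr * grad, loss, True

xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]   # toy data generated by w = 2
w, loss_before, ok = mixed_precision_step(0.0, xs, ys)
_, loss_after, _ = mixed_precision_step(w, xs, ys)
# An excessively large scale overflows FP16, so the update is skipped.
w_skip, _, ok_skip = mixed_precision_step(0.0, xs, ys, loss_scale=2.0 ** 24)
print(ok, loss_before, loss_after, ok_skip, w_skip)
```

With the moderate scale the step is applied and the loss drops; with the oversized scale the scaled gradient becomes infinite and the weight is left unchanged, which is the behavior the last bullet describes.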

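Next, we import the handwritten digit recognition model and dataset from the quick-start tutorial to demonstrate MindSpore's automatic mixed-precision implementation.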

import mindspore as ms
from mindspore import nn
from mindspore import value_and_grad
from mindspore.dataset import vision, transforms
from mindspore.dataset import MnistDataset

# Download data from open datasets
from download import download

url = "https://mindspore-website.obs.cn-north-4.myhuaweicloud.com/" \
      "notebook/datasets/MNIST_Data.zip"
path = download(url, "./", kind="zip", replace=True)


def datapipe(path, batch_size):
    image_transforms = [
        vision.Rescale(1.0 / 255.0, 0),
        vision.Normalize(mean=(0.1307,), std=(0.3081,)),
        vision.HWC2CHW()
    ]
    label_transform = transforms.TypeCast(ms.int32)

    dataset = MnistDataset(path)
    dataset = dataset.map(image_transforms, 'image')
    dataset = dataset.map(label_transform, 'label')
    dataset = dataset.batch(batch_size)
    return dataset


train_dataset = datapipe('MNIST_Data/train', 64)


# Define model
class Network(nn.Cell):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.dense_relu_sequential = nn.SequentialCell(
            nn.Dense(28*28, 512),
            nn.ReLU(),
            nn.Dense(512, 512),
            nn.ReLU(),
            nn.Dense(512, 10)
        )

    def construct(self, x):
        x = self.flatten(x)
        logits = self.dense_relu_sequential(x)
        return logits

Downloading data from https://mindspore-website.obs.cn-north-4.myhuaweicloud.com/notebook/datasets/MNIST_Data.zip (10.3 MB)

file_sizes: 100%|██████████████████████████| 10.8M/10.8M [00:01<00:00, 8.98MB/s]

Extracting zip file...

Successfully downloaded / unzipped to ./

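Type Conversion

Mixed-precision computation requires type conversion for the operations that run at low precision: their inputs are cast to FP16, and the outputs are cast back to FP32 afterwards. MindSpore provides both automatic and manual type conversion to meet different needs for ease of use and flexibility. Both are introduced below.

Automatic Type Conversion

The mindspore.amp.auto_mixed_precision interface performs automatic type conversion on a network. Automatic type conversion follows a blacklist/whitelist mechanism, with four levels configured according to common precision practices:

- 'O0': the network stays in FP32;

- 'O1': operations on the whitelist are cast to FP16;

- 'O2': operations on the blacklist stay in FP32, and all other operations are cast to FP16;

- 'O3': the entire network is cast to FP16.

The following is an example of automatic type conversion: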

from mindspore.amp import auto_mixed_precision

model = Network()
model = auto_mixed_precision(model, 'O2')
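The four levels listed earlier can be pictured as a simple lookup from operation name to precision. The following sketch only illustrates that decision rule: the two operator lists are hypothetical placeholders, not the lists MindSpore actually uses, and `compute_dtype` is a name invented for this example.

```python
# Hypothetical operator lists, for illustration only; MindSpore's real lists differ.
WHITELIST = {"Conv2d", "Dense"}            # ops assumed safe to run in FP16
BLACKLIST = {"BatchNorm2d", "LayerNorm"}   # ops assumed to need FP32

def compute_dtype(op_name: str, level: str) -> str:
    """Return the precision an operation would run in under the given level."""
    if level == "O0":
        return "float32"
    if level == "O1":
        return "float16" if op_name in WHITELIST else "float32"
    if level == "O2":
        return "float32" if op_name in BLACKLIST else "float16"
    if level == "O3":
        return "float16"
    raise ValueError(f"unknown level: {level!r}")

for level in ("O0", "O1", "O2", "O3"):
    print(level, {op: compute_dtype(op, level) for op in ("Dense", "ReLU", "LayerNorm")})
```

The difference between 'O1' and 'O2' shows up for operations on neither list, such as ReLU in this sketch: 'O1' leaves them in FP32, while 'O2' casts them to FP16.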

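Manual Type Conversion

In most cases automatic type conversion meets the needs of mixed-precision training. When fine-grained control over the precision of different parts of the network is needed, manual type conversion can be used instead.

Manual type conversion requires considering the precision of every module in the model and is generally used only when maximum performance is required.

Below we modify the Network defined earlier to demonstrate different ways of manual type conversion.

Cell-Level Type Conversion

The nn.Cell class provides the to_float method, which configures the computation precision of a module in one call and automatically casts the module's inputs to the specified precision: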

class NetworkFP16(nn.Cell):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.dense_relu_sequential = nn.SequentialCell(
            nn.Dense(28*28, 512).to_float(ms.float16),
            nn.ReLU(),
            nn.Dense(512, 512).to_float(ms.float16),
            nn.ReLU(),
            nn.Dense(512, 10).to_float(ms.float16)
        )

    def construct(self, x):
        x = self.flatten(x)
        logits = self.dense_relu_sequential(x)
        return logits

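Custom-Granularity Type Conversion

When precision needs to be configured for a single operation or for a combination of several modules, Cell granularity is often insufficient. In that case, casting the input data directly gives custom-granularity control.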

class NetworkFP16Manual(nn.Cell):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.dense_relu_sequential = nn.SequentialCell(
            nn.Dense(28*28, 512),
            nn.ReLU(),
            nn.Dense(512, 512),
            nn.ReLU(),
            nn.Dense(512, 10)
        )

    def construct(self, x):
        x = self.flatten(x)
        # Cast the input to FP16 before the dense layers
        x = x.astype(ms.float16)
        logits = self.dense_relu_sequential(x)
        # Cast the output back to FP32
        logits = logits.astype(ms.float32)
        return logits

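Loss Scaling

MindSpore provides two loss scale implementations, StaticLossScaler and DynamicLossScaler. They differ in whether the scale value is adjusted dynamically. Below, DynamicLossScaler is used as an example to implement the training logic according to the mixed-precision computation flow.

First, instantiate the LossScaler and manually scale up the loss value when defining the forward network.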

from mindspore.amp import DynamicLossScaler

# Instantiate loss function and optimizer
loss_fn = nn.CrossEntropyLoss()
optimizer = nn.SGD(model.trainable_params(), 1e-2)

# Define LossScaler
loss_scaler = DynamicLossScaler(scale_value=2**16, scale_factor=2, scale_window=50)


def forward_fn(data, label):
    logits = model(data)
    loss = loss_fn(logits, label)
    # scale up the loss value
    loss = loss_scaler.scale(loss)
    return loss, logits

Next, apply a function transformation to obtain the gradient function.

grad_fn = value_and_grad(forward_fn, None, model.trainable_params(), has_aux=True)

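Define the training step: compute the current gradients and restore the loss. all_finite checks whether the gradients have overflowed. If there is no overflow, the gradients are unscaled and the network weights are updated; if there is overflow, this step is skipped.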

from mindspore.amp import all_finite


@ms.jit
def train_step(data, label):
    (loss, _), grads = grad_fn(data, label)
    loss = loss_scaler.unscale(loss)

    is_finite = all_finite(grads)
    if is_finite:
        grads = loss_scaler.unscale(grads)
        optimizer(grads)
    loss_scaler.adjust(is_finite)

    return loss
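To make the role of the adjust call in the training step more concrete, here is a simplified pure-Python model of a dynamic loss scaler. It assumes a commonly used policy: shrink the scale when an overflow is seen, and grow it after scale_window consecutive overflow-free steps. The parameter names mirror the DynamicLossScaler call above, but the class `ToyDynamicScaler` is hypothetical and its exact behavior is an assumption, not a description of MindSpore's implementation.

```python
class ToyDynamicScaler:
    """Simplified stand-in for a dynamic loss scaler (not MindSpore's code)."""

    def __init__(self, scale_value: float, scale_factor: float, scale_window: int):
        self.scale_value = scale_value
        self.scale_factor = scale_factor
        self.scale_window = scale_window
        self.good_steps = 0  # consecutive steps without overflow

    def adjust(self, grads_finite: bool) -> None:
        if not grads_finite:
            # Overflow: shrink the scale (never below 1) and restart the count.
            self.scale_value = max(1.0, self.scale_value / self.scale_factor)
            self.good_steps = 0
            return
        self.good_steps += 1
        if self.good_steps == self.scale_window:
            # A full window of finite steps: try a larger scale.
            self.scale_value *= self.scale_factor
            self.good_steps = 0

scaler = ToyDynamicScaler(scale_value=2.0 ** 16, scale_factor=2.0, scale_window=3)
history = []
for finite in (True, True, True, False, True):
    scaler.adjust(finite)
    history.append(scaler.scale_value)
print(history)
```

A static scaler corresponds to never calling adjust: the scale value stays at its initial setting for the whole run.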

Finally, we train for one epoch and observe how the loss converges under automatic mixed-precision training.

size = train_dataset.get_dataset_size()
model.set_train()
for batch, (data, label) in enumerate(train_dataset.create_tuple_iterator()):
    loss = train_step(data, label)

    if batch % 100 == 0:
        loss, current = loss.asnumpy(), batch
        print(f"loss: {loss:>7f} [{current:>3d}/{size:>3d}]")

loss: 2.308166 [ 0/938]

loss: 1.775861 [100/938]

loss: 0.774661 [200/938]

loss: 0.678518 [300/938]

loss: 0.550532 [400/938]

loss: 0.494209 [500/938]

loss: 0.282655 [600/938]

loss: 0.409580 [700/938]

loss: 0.208781 [800/938]

loss: 0.287908 [900/938]

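The loss converges normally, and no overflow occurs.

Configuring Automatic Mixed Precision with Cell

MindSpore supports a programming paradigm in which a Cell encapsulates the complete computation graph. In this case, the mindspore.amp.build_train_network interface can perform the type conversion automatically and take in the loss scale as part of the whole-graph computation.

Only the mixed-precision level and a LossScaleManager need to be configured to obtain a computation graph with automatic mixed precision set up.

FixedLossScaleManager and DynamicLossScaleManager are the loss scale management interfaces for Cell-based automatic mixed precision, corresponding to StaticLossScaler and DynamicLossScaler respectively. See mindspore.amp for details.

Automatic mixed-precision training configured with Cell is supported only on GPU and Ascend.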

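from mindspore.amp import build_train_network, FixedLossScaleManager

model = Network()
# Rebuild the optimizer so that it tracks the parameters of the new model
optimizer = nn.SGD(model.trainable_params(), 1e-2)
loss_scale_manager = FixedLossScaleManager()

model = build_train_network(model, optimizer, loss_fn, level="O2", loss_scale_manager=loss_scale_manager)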

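Configuring Automatic Mixed Precision with Model

mindspore.train.Model is a high-level wrapper for fast neural network training that wraps mindspore.amp.build_train_network internally. As a result, only the mixed-precision level and a LossScaleManager need to be configured to run automatic mixed-precision training.

Automatic mixed-precision training configured with Model is supported only on GPU and Ascend.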

from mindspore.train import Model, LossMonitor

# Initialize network
model = Network()
loss_fn = nn.CrossEntropyLoss()
optimizer = nn.SGD(model.trainable_params(), 1e-2)

loss_scale_manager = FixedLossScaleManager()
trainer = Model(model, loss_fn=loss_fn, optimizer=optimizer, metrics={'accuracy'},
                amp_level="O2", loss_scale_manager=loss_scale_manager)

loss_callback = LossMonitor(100)
trainer.train(10, train_dataset, callbacks=[loss_callback])

epoch: 1 step: 100, loss is 1.740437

epoch: 1 step: 200, loss is 1.0307916

epoch: 1 step: 300, loss is 0.69421303

epoch: 1 step: 400, loss is 0.40232363

epoch: 1 step: 500, loss is 0.29532564

epoch: 1 step: 600, loss is 0.30918723

epoch: 1 step: 700, loss is 0.44912875

epoch: 1 step: 800, loss is 0.44419393

epoch: 1 step: 900, loss is 0.25810432

epoch: 2 step: 62, loss is 0.38018188

epoch: 2 step: 162, loss is 0.29220462

epoch: 2 step: 262, loss is 0.18826479

epoch: 2 step: 362, loss is 0.21040359

epoch: 2 step: 462, loss is 0.5233204

epoch: 2 step: 562, loss is 0.1965191

epoch: 2 step: 662, loss is 0.18731003

epoch: 2 step: 762, loss is 0.19631395

epoch: 2 step: 862, loss is 0.2824983

epoch: 3 step: 24, loss is 0.305027

epoch: 3 step: 124, loss is 0.27728492

epoch: 3 step: 224, loss is 0.22115512

epoch: 3 step: 324, loss is 0.24749175

epoch: 3 step: 424, loss is 0.18050134

epoch: 3 step: 524, loss is 0.16673708

epoch: 3 step: 624, loss is 0.1952721

epoch: 3 step: 724, loss is 0.24775256

epoch: 3 step: 824, loss is 0.2637187

epoch: 3 step: 924, loss is 0.2051135

epoch: 4 step: 86, loss is 0.18540674

epoch: 4 step: 186, loss is 0.28360164

epoch: 4 step: 286, loss is 0.1406641

epoch: 4 step: 386, loss is 0.16650882

epoch: 4 step: 486, loss is 0.14571275

epoch: 4 step: 586, loss is 0.11102664

epoch: 4 step: 686, loss is 0.23643321

epoch: 4 step: 786, loss is 0.23962979

epoch: 4 step: 886, loss is 0.26412234

epoch: 5 step: 48, loss is 0.20528676

epoch: 5 step: 148, loss is 0.18884227

epoch: 5 step: 248, loss is 0.11676

epoch: 5 step: 348, loss is 0.15815629

epoch: 5 step: 448, loss is 0.09909089

epoch: 5 step: 548, loss is 0.083064914

epoch: 5 step: 648, loss is 0.16511258

epoch: 5 step: 748, loss is 0.14904837

epoch: 5 step: 848, loss is 0.06848145

epoch: 6 step: 10, loss is 0.14172876

epoch: 6 step: 110, loss is 0.10210356

epoch: 6 step: 210, loss is 0.17947555

epoch: 6 step: 310, loss is 0.07561863

epoch: 6 step: 410, loss is 0.0703983

epoch: 6 step: 510, loss is 0.0639836

epoch: 6 step: 610, loss is 0.12375862

epoch: 6 step: 710, loss is 0.12338794

epoch: 6 step: 810, loss is 0.13181406

epoch: 6 step: 910, loss is 0.2614221

epoch: 7 step: 72, loss is 0.110507764

epoch: 7 step: 172, loss is 0.2503576

epoch: 7 step: 272, loss is 0.18422352

epoch: 7 step: 372, loss is 0.028233707

epoch: 7 step: 472, loss is 0.0869534

epoch: 7 step: 572, loss is 0.3087073

epoch: 7 step: 672, loss is 0.08056754

epoch: 7 step: 772, loss is 0.10260949

epoch: 7 step: 872, loss is 0.17345119

epoch: 8 step: 34, loss is 0.04402008

epoch: 8 step: 134, loss is 0.12012412

epoch: 8 step: 234, loss is 0.061308697

epoch: 8 step: 334, loss is 0.18258664

epoch: 8 step: 434, loss is 0.07378549

epoch: 8 step: 534, loss is 0.12799212

epoch: 8 step: 634, loss is 0.04644889

epoch: 8 step: 734, loss is 0.2280572

epoch: 8 step: 834, loss is 0.038715497

epoch: 8 step: 934, loss is 0.27656066

epoch: 9 step: 96, loss is 0.15312964

epoch: 9 step: 196, loss is 0.12590355

epoch: 9 step: 296, loss is 0.07894421

epoch: 9 step: 396, loss is 0.068609774

epoch: 9 step: 496, loss is 0.10751188

epoch: 9 step: 596, loss is 0.2135655

epoch: 9 step: 696, loss is 0.036319293

epoch: 9 step: 796, loss is 0.18801415

epoch: 9 step: 896, loss is 0.12534878

epoch: 10 step: 58, loss is 0.05122885

epoch: 10 step: 158, loss is 0.08717978

epoch: 10 step: 258, loss is 0.03683787

epoch: 10 step: 358, loss is 0.07735188

epoch: 10 step: 458, loss is 0.07393028

epoch: 10 step: 558, loss is 0.1037335

epoch: 10 step: 658, loss is 0.08793874

epoch: 10 step: 758, loss is 0.11309098

epoch: 10 step: 858, loss is 0.06396476
