This article was written on July 31, 2016; its publication was delayed because it involves patents. A patent application has been filed for the technology described here. If you are interested in cooperating, please contact the author: 45803625@qq.com.

I was reading some VR news recently when an idea struck me. At any given moment the human eye can only see part of the picture clearly, namely the content at the eye's focus (the gaze point). So when playing a video, could we render high-definition (HD) content only in the area the viewer is focusing on, and lower-resolution content everywhere else? That way, watching HD video would require only a small amount of network traffic.

There are two reasons behind this idea. First, HD video is moving toward 2K and 4K, and even mobile phones can record 4K video now, but such video consumes a lot of resources (bandwidth, decoding, and so on), which makes it hard to adopt widely. Second, some current VR headsets come with gaze-detection (eye-tracking) capability.
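The traffic argument can be made concrete with a rough pixel count. The sketch below assumes a 3840×2160 (4K) source, a 960×540 low-resolution base layer, and a 640×360 HD patch at the gaze point; these sizes are illustrative assumptions, not figures from the proposal, and raw pixel counts only approximate bitrate because compression efficiency differs between the layers.

```python
def sent_pixel_fraction(full_w, full_h, base_w, base_h, patch_w, patch_h):
    """Pixels sent (low-res base layer + HD gaze patch) as a fraction of the full HD frame."""
    full_pixels = full_w * full_h
    sent_pixels = base_w * base_h + patch_w * patch_h
    return sent_pixels / full_pixels


if __name__ == "__main__":
    # Illustrative sizes only: 4K source, quarter-size base layer, 640x360 HD patch.
    frac = sent_pixel_fraction(3840, 2160, 960, 540, 640, 360)
    print(f"{frac:.1%} of the full-frame pixels")  # prints "9.0% of the full-frame pixels"
```

Under these assumptions the player receives roughly a tenth of the pixels of the full 4K frame while the gaze area is still shown at full resolution.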

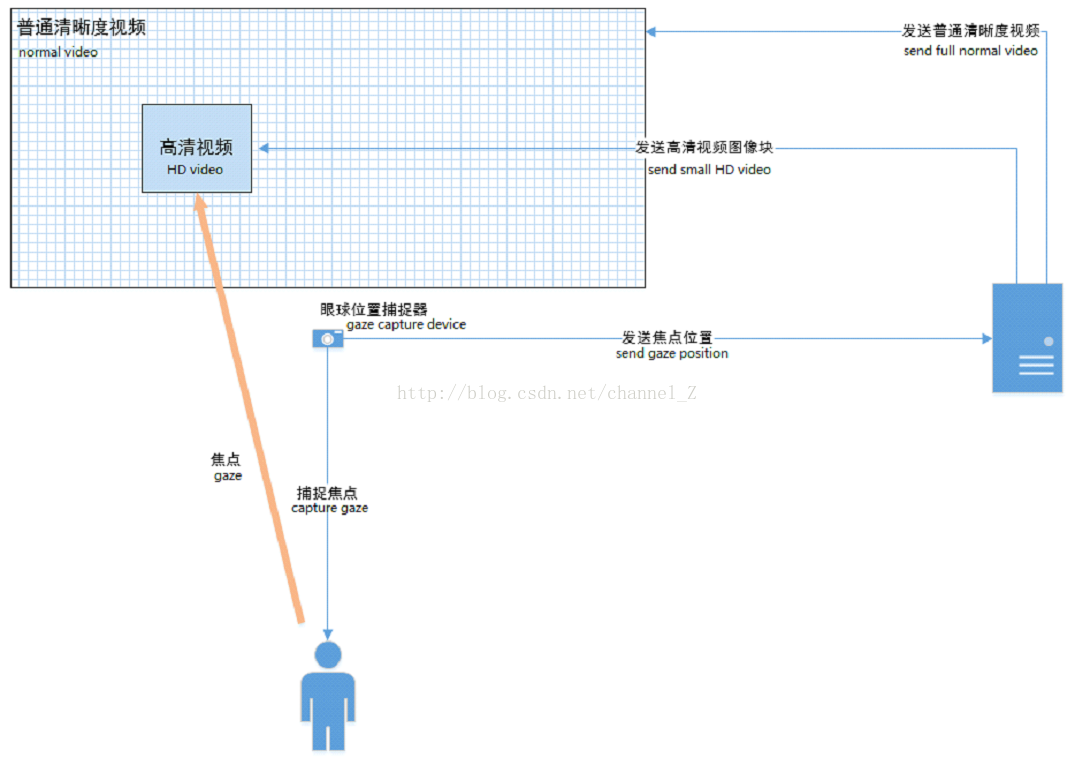

A rough implementation: first, the player downloads and plays the low-resolution video as usual. A gaze-detection device next to the screen (a camera, for example) detects the position on the screen the viewer is looking at and sends that gaze position to the video server. The server crops the block of the HD video that falls within the viewer's line of sight and sends this HD image to the player, which overlays it on top of the low-resolution video. Repeating this continuously makes the user feel that they are watching HD video, as illustrated in the figure.
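The core geometric step of this loop, turning a gaze point into the HD region to crop and then into the spot where the player draws it, can be sketched as follows. This is a minimal sketch assuming the gaze is reported in screen pixels, the low-resolution video fills the whole screen, and the crop has a fixed size; `Rect`, `gaze_to_hd_crop`, and `hd_crop_to_screen` are hypothetical names, not part of any real player or server API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    w: int
    h: int


def gaze_to_hd_crop(gaze_x, gaze_y, screen_w, screen_h, hd_w, hd_h, crop_w, crop_h):
    """Map a gaze point (screen pixels) to a crop rectangle in HD-frame pixels."""
    # Scale the gaze point from screen coordinates to HD-frame coordinates.
    cx = gaze_x * hd_w / screen_w
    cy = gaze_y * hd_h / screen_h
    # Center the crop on the gaze point, then clamp it so it stays inside the HD frame.
    x = max(0, min(round(cx - crop_w / 2), hd_w - crop_w))
    y = max(0, min(round(cy - crop_h / 2), hd_h - crop_h))
    return Rect(x, y, crop_w, crop_h)


def hd_crop_to_screen(crop, screen_w, screen_h, hd_w, hd_h):
    """Where the player should draw the HD crop over the full-screen low-res video."""
    sx = screen_w / hd_w
    sy = screen_h / hd_h
    return Rect(round(crop.x * sx), round(crop.y * sy), round(crop.w * sx), round(crop.h * sy))


if __name__ == "__main__":
    # Hypothetical setup: 1920x1080 screen, 3840x2160 HD source, 640x360 crop.
    crop = gaze_to_hd_crop(960, 540, 1920, 1080, 3840, 2160, 640, 360)
    print(crop)                                            # Rect(x=1600, y=900, w=640, h=360)
    print(hd_crop_to_screen(crop, 1920, 1080, 3840, 2160))  # Rect(x=800, y=450, w=320, h=180)
```

Clamping keeps the crop a constant size even when the viewer looks at an edge or corner, so the server never has to produce partial images.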

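Another reason I thought of using a VR headset is that its position relative to the eyes is fixed, both in distance and in the up/down/left/right directions, which makes gaze detection easy; an ordinary phone camera should be enough. So I bought two simple Google Cardboard-style headsets, and the result surprised me a lot (forgive my ignorance about VR at the time). A VR headset creates a stereoscopic effect by showing a different image to each eye, so each eye sees only half of the screen, and the headset's lenses magnify that image, so it looks quite blurry. This was completely different from what I had imagined; had I known even a little about VR beforehand, I would never have come up with such an "unconventional" idea. Still, a VR headset is not actually necessary: as long as the display stays at a relatively fixed position in front of the user, the requirement can be met. After all, eye-tracking technology has been developing for many years, so there should be some mature solutions.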

Next I studied video encoding and decoding and read a very beginner-friendly article, https://www.zhihu.com/question/22567173, which revealed another big problem (please forgive my ignorance once again). Video compression and transmission is not as simple as storing and sending images one after another: codecs also compress across frames (inter-frame compression), and typically a dozen or so frames can be compressed to roughly the size of one or two frames. If we return one HD image of the gaze area for every frame, then even though each cropped image is smaller than the full frame, transmitting so many independent images will not save bandwidth. There is a second problem as well: extracting and cropping specific frames from the HD video in real time puts a heavy computational load on the server, making real-time delivery hard to guarantee; add network latency, and by the time the HD image reaches the player, its corresponding frame has long passed. To anyone who knows video coding algorithms, this approach must look like an "unconventional" and "crazy" idea...
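To see why sending one independent image per frame wastes bandwidth, the toy experiment below uses Python's `zlib` as a stand-in for a codec. It is only an analogy: `zlib` knows nothing about motion, but, like inter-frame compression, it can reuse data it has already seen, so frames that differ only slightly from their predecessors become cheap when compressed as one stream. The frame size, frame count, and number of changed bytes are arbitrary assumptions.

```python
import random
import zlib

FRAME_SIZE = 4096    # bytes per toy "frame"; kept well under zlib's 32 KiB window
NUM_FRAMES = 10
CHANGED_BYTES = 16   # bytes altered between consecutive frames ("small motion")


def make_frames(seed=0):
    """Random first frame; each later frame is a lightly edited copy of the previous one."""
    rng = random.Random(seed)
    frame = bytearray(rng.getrandbits(8) for _ in range(FRAME_SIZE))
    frames = [bytes(frame)]
    for _ in range(NUM_FRAMES - 1):
        for _ in range(CHANGED_BYTES):
            frame[rng.randrange(FRAME_SIZE)] = rng.getrandbits(8)
        frames.append(bytes(frame))
    return frames


def per_frame_size(frames):
    # Like sending one standalone image per frame: no frame can reference another.
    return sum(len(zlib.compress(f, 9)) for f in frames)


def joint_size(frames):
    # Like a video stream: later frames can reference data from earlier ones.
    return len(zlib.compress(b"".join(frames), 9))


if __name__ == "__main__":
    frames = make_frames()
    print("independent:", per_frame_size(frames), "bytes")
    print("joint:      ", joint_size(frames), "bytes")
```

Real codecs go much further (motion compensation, residual coding), but the direction of the effect is the same: per-frame crops give up the savings from inter-frame redundancy, while anything sent as a video stream keeps them.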

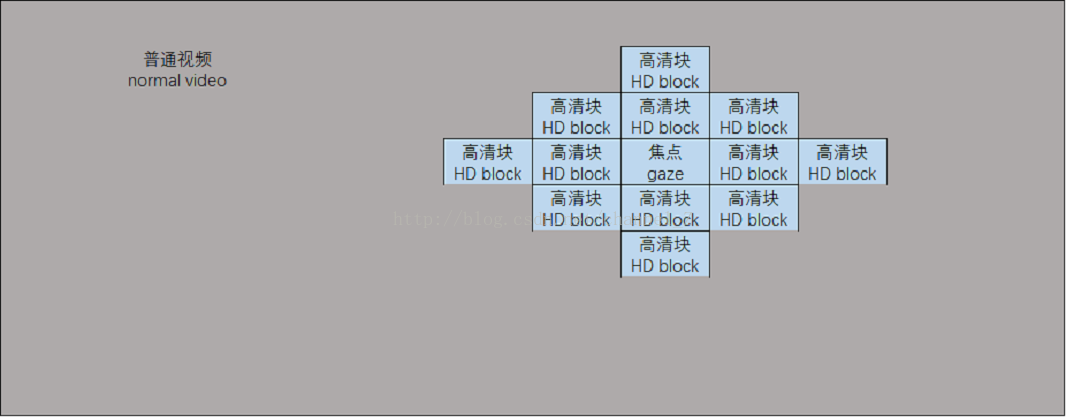

Does that mean there is no way forward? Can we keep using existing video compression algorithms? I came up with another approach. Take an HD video of size 10A × 10B and cut it into small videos of size A × B, 100 in total (a 10 × 10 grid of video blocks). Each block can be played on its own, and if all the blocks are placed in their original positions and played together, they form the complete HD video. We convert the gaze area into the set of blocks it covers (along with their positions) and send that batch of blocks to the player, which places them at the corresponding positions and plays them in sync with the low-resolution video. While the gaze stays still, the player keeps loading the same blocks. When the gaze moves slowly, only the newly covered blocks need to be loaded. Only when the gaze moves quickly does the player need to load a completely different set of blocks. Because small videos replace HD images, we keep the benefit of standard video compression. And since the gaze does not move quickly most of the time, we can also preload some blocks to speed up HD loading. Furthermore, once we have collected enough data, big-data analysis can reveal where people usually look, and those predictions can be used to preload blocks.
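The block bookkeeping described above can be sketched as two small functions: one converts the gaze area (a rectangle in HD-frame pixels) into the set of blocks it overlaps, and one splits that set into blocks already loaded and blocks that still need fetching. The 10 × 10 grid follows the text; the function names, the rectangle representation, and the 3840 × 2160 example are assumptions for illustration, and synchronized playback of the blocks is not shown.

```python
def tiles_for_region(x, y, w, h, tile_w, tile_h, cols=10, rows=10):
    """Set of (col, row) blocks overlapped by the rectangle (x, y, w, h) in HD pixels."""
    first_col = max(0, x // tile_w)
    last_col = min(cols - 1, (x + w - 1) // tile_w)
    first_row = max(0, y // tile_h)
    last_row = min(rows - 1, (y + h - 1) // tile_h)
    return {
        (c, r)
        for c in range(first_col, last_col + 1)
        for r in range(first_row, last_row + 1)
    }


def plan_update(loaded, needed):
    """Split blocks into (keep, fetch, drop) when the gaze area changes."""
    keep = loaded & needed    # already on the player, keep playing them
    fetch = needed - loaded   # newly covered by the gaze, must be requested
    drop = loaded - needed    # no longer looked at, can be released
    return keep, fetch, drop


if __name__ == "__main__":
    # Hypothetical 3840x2160 HD video split into a 10x10 grid of 384x216 blocks.
    TILE_W, TILE_H = 3840 // 10, 2160 // 10
    before = tiles_for_region(1600, 900, 640, 360, TILE_W, TILE_H)
    slow = tiles_for_region(1700, 900, 640, 360, TILE_W, TILE_H)
    fast = tiles_for_region(100, 100, 640, 360, TILE_W, TILE_H)
    print(sorted(before))                        # 4 blocks: columns 4-5, rows 4-5
    print(sorted(plan_update(before, slow)[1]))  # slow move: only column 6 is fetched
    print(len(plan_update(before, fast)[1]))     # fast move: all 6 blocks are new
```

In this sketch a slow gaze movement costs only the newly covered column, while a fast jump replaces the whole set; preloading or prediction could simply add extra blocks to `needed` ahead of time.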
