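The Kubernetes RPM packages in this article are built from the .spec files shipped inside kubernetes-server-linux-amd64.tar.gz. That tarball also bundles a Docker image for every component, so compared with the .spec files cloned from GitHub, the images here can be used directly and no Fedora image is needed for the build.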

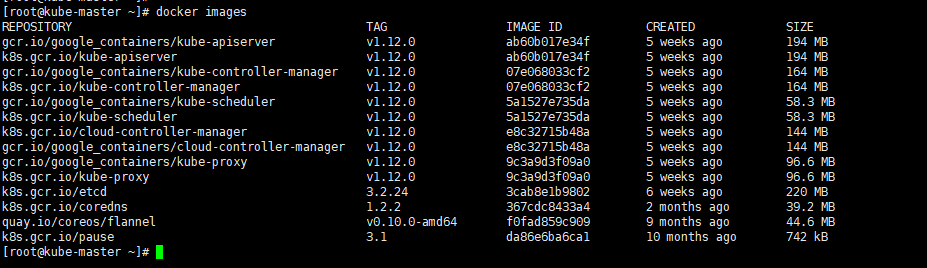

All the images used in this article:

Downloading kubernetes-server-linux-amd64.tar.gz

1. Download the latest Kubernetes package from Google:

https://storage.googleapis.com/kubernetes-release/release/${KUBE_VERSION}/kubernetes-server-linux-amd64.tar.gz

Replace ${KUBE_VERSION} with the version you want to download.
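The substitution can be scripted. The sketch below only assembles and prints the URL; the function name kube_server_url is an illustrative helper, not part of any Kubernetes tooling, and the wget line is left commented out so nothing is downloaded by accident.

```shell
# Build the download URL for a given Kubernetes release tag.
# kube_server_url is a hypothetical helper name chosen for this example.
kube_server_url() {
  printf 'https://storage.googleapis.com/kubernetes-release/release/%s/kubernetes-server-linux-amd64.tar.gz\n' "$1"
}

KUBE_VERSION="v1.12.0"   # the version used as the example in this article
url="$(kube_server_url "$KUBE_VERSION")"
echo "Download URL: $url"

# Uncomment to download for real (requires network access):
# wget "$url"
```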

2. Alternatively, pick the version you want on GitHub and download it:

https://github.com/kubernetes/kubernetes/releases

This article uses Kubernetes 1.12.0 as the example.

RPM build directory

[root@kube-master ~]# tar xzf kubernetes-server-linux-amd64.tar.gz

[root@kube-master ~]# cd kubernetes

[root@kube-master kubernetes]# mkdir src

[root@kube-master kubernetes]# tar xzf kubernetes-src.tar.gz -C src/

[root@kube-master kubernetes]# cd src/build/rpms/

[root@kube-master rpms]# ls

10-kubeadm.conf cri-tools.spec kubectl.spec kubelet.service kubernetes-cni.spec

BUILD kubeadm.spec kubelet.env kubelet.spec OWNERS

Directory holding the images and binaries (the binaries can also be used for a binary installation):

[root@kube-master bin]# pwd

/root/kubernetes/server/bin

[root@kube-master bin]# ls

apiextensions-apiserver kubeadm kube-controller-manager.docker_tag kube-proxy.docker_tag mounter

cloud-controller-manager kube-apiserver kube-controller-manager.tar kube-proxy.tar

cloud-controller-manager.docker_tag kube-apiserver.docker_tag kubectl kube-scheduler

cloud-controller-manager.tar kube-apiserver.tar kubelet kube-scheduler.docker_tag

hyperkube kube-controller-manager kube-proxy kube-scheduler.tar

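Building RPM packages requires rpmbuild, so install it first. On CentOS the package that provides the rpmbuild command is named rpm-build:

yum install rpm-build rpmdevtools -y

rpmdev-setuptree

For detailed rpmbuild usage, see its man page (man rpmbuild).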

Building the RPM packages

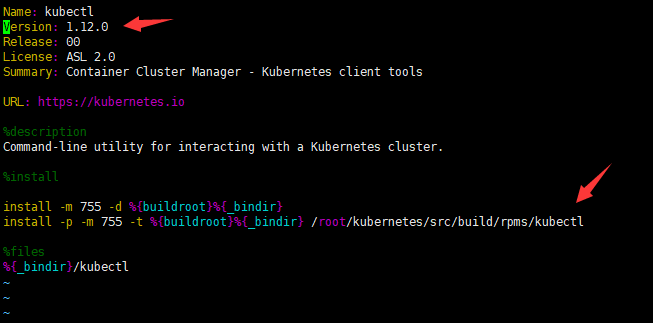

kubectl:

cd kubernetes/src/build/rpms/

cp -a /root/kubernetes/server/bin/kubectl .

vim kubectl.spec

Set the version and the absolute path of the kubectl binary here.
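The edit shown in the screenshot can also be made without an editor. The following is a minimal sketch, assuming the spec file has a single line starting with "Version:"; set_spec_version is a hypothetical helper, and the demo file is a made-up stand-in rather than the real kubectl.spec.

```shell
# Replace the "Version:" line of a spec file with the given version.
# Writes through a temporary file so it works with any POSIX sed.
set_spec_version() {
  spec="$1"; version="$2"
  sed "s/^Version:.*/Version: ${version}/" "$spec" > "$spec.tmp" && mv "$spec.tmp" "$spec"
}

# Demo on a throwaway file; these three lines are illustrative only,
# not a copy of the real kubectl.spec.
demo_spec="$(mktemp)"
printf 'Name: kubectl\nVersion: OVERRIDE_THIS\nRelease: 00\n' > "$demo_spec"
set_spec_version "$demo_spec" "1.12.0"
grep '^Version:' "$demo_spec"
```

Against the real file this would be called as set_spec_version kubectl.spec 1.12.0 from the build/rpms directory; the path of the binary still has to be adjusted as described above.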

Run rpmbuild:

rpmbuild -ba kubectl.spec

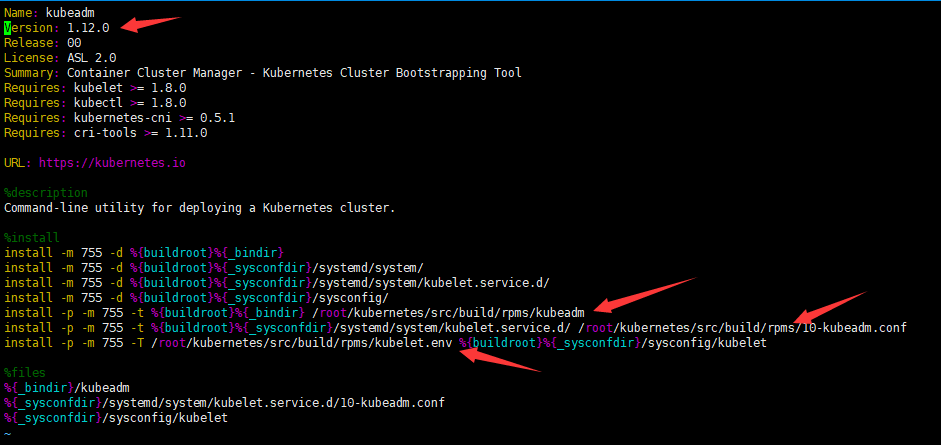

kubeadm:

cd kubernetes/src/build/rpms/

cp -a /root/kubernetes/server/bin/kubeadm .

vim kubeadm.spec

Set the version and the absolute paths of the kubeadm binary and its configuration file here.

Run rpmbuild:

rpmbuild -ba kubeadm.spec

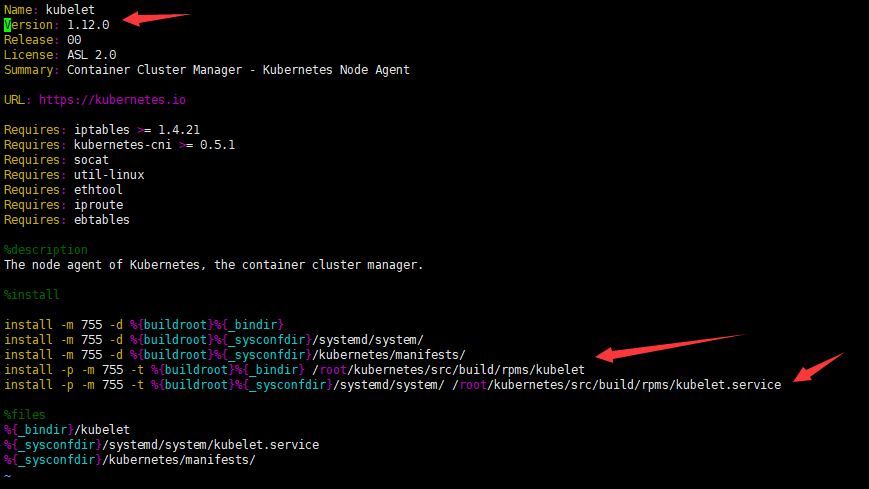

kubelet:

cd kubernetes/src/build/rpms/

cp -a /root/kubernetes/server/bin/kubelet .

vim kubelet.spec

The same pattern applies here: change the version and the absolute path of the binary.

Run rpmbuild:

rpmbuild -ba kubelet.spec

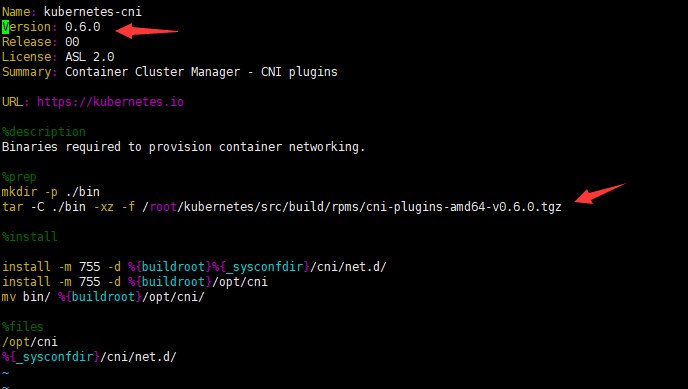

kubernetes-cni:

cd kubernetes/src/build/rpms/

vim kubernetes-cni.spec

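Change the version number and the location of the source tarball. Why this version? Because the package version in the .spec file is v0.6.0, so v0.6.0 is what goes here.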

wget https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-amd64-v0.6.0.tgz

After the tarball has been downloaded, run rpmbuild:

rpmbuild -ba kubernetes-cni.spec

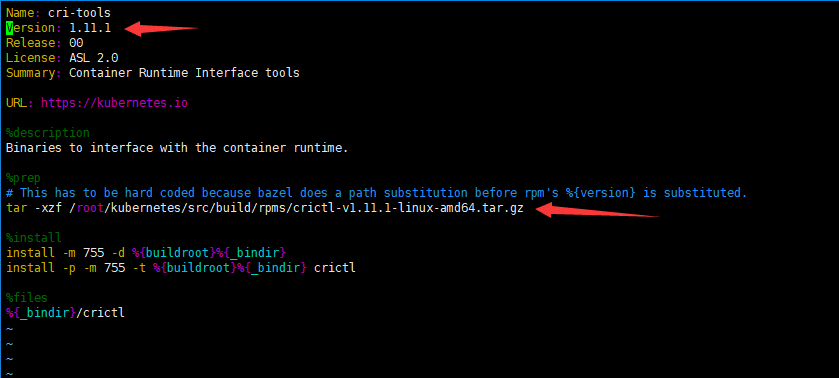

cri-tools:

cd kubernetes/src/build/rpms/

vim cri-tools.spec

Change the version number to match the downloaded tarball, then change the tarball path to an absolute path.

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.11.1/crictl-v1.11.1-linux-amd64.tar.gz

After the tarball has been downloaded, run rpmbuild:

rpmbuild -ba cri-tools.spec

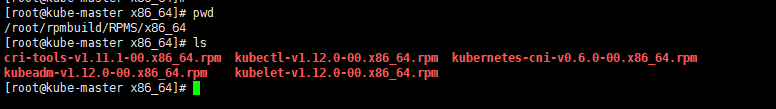

All the RPMs are now built. Check the directory that holds them:
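One way to do that check is sketched below. It assumes the default tree created by rpmdev-setuptree, where built packages land under ~/rpmbuild/RPMS; list_rpms is a hypothetical helper name.

```shell
# Print the file name of every RPM found under the given directory.
list_rpms() {
  find "$1" -type f -name '*.rpm' | sed 's|.*/||' | sort
}

# rpmdev-setuptree creates ~/rpmbuild; built packages land under RPMS/<arch>.
rpm_dir="${HOME}/rpmbuild/RPMS"
if [ -d "$rpm_dir" ]; then
  list_rpms "$rpm_dir"
else
  echo "No $rpm_dir directory yet; run rpmbuild first."
fi
```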

Installation:

Create a new virtual machine with the following system information:

[root@kube-master ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

[root@kube-master ~]# uname -a

Linux kube-master 3.10.0-862.el7.x86_64 #1 SMP Fri Apr 20 16:44:24 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

Install the required software and reboot the virtual machine:

yum install bash* vim lrzsz tree wget docker net-tools -y

reboot

Add the following entries to /etc/hosts:

192.168.42.135 kube-master

192.168.42.136 kube-node1
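Because the same entries have to be added on every node, it can help to make the step repeatable. This is a sketch under the assumption that one name per line is enough; add_host_entry is a hypothetical helper, and the demo writes to a scratch file instead of /etc/hosts.

```shell
# Append "ip name" to a hosts file unless an entry for that name already exists.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Demo against a scratch file; point it at /etc/hosts (as root) for real use.
demo_hosts="$(mktemp)"
add_host_entry "$demo_hosts" 192.168.42.135 kube-master
add_host_entry "$demo_hosts" 192.168.42.136 kube-node1
add_host_entry "$demo_hosts" 192.168.42.135 kube-master   # second call is a no-op
cat "$demo_hosts"
```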

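Start Docker, disable the firewall and SELinux, turn off swap, and apply the network settings. (These are the standard steps for any Kubernetes installation.)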

systemctl enable --now docker

systemctl disable --now firewalld

setenforce 0

sed -ri '/^[^#]*SELINUX=/s#=.+$#=disabled#' /etc/selinux/config

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

sysctl -w net.ipv4.ip_forward=1
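The sed expression that edits /etc/fstab is the least obvious command in this list, so here is a small worked example of what it does. It runs on made-up fstab lines passed through a pipe, not on the real file, and comment_swap_lines is a hypothetical wrapper name.

```shell
# Comment out every active line that mentions swap. This is the same expression
# used on /etc/fstab above, with -E instead of -r (GNU sed accepts both).
comment_swap_lines() {
  sed -E '/^[^#]*swap/s@^@#@'
}

# Made-up fstab lines for the demo: only the swap line gets a leading '#'.
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' \
  | comment_swap_lines
```

The address /^[^#]*swap/ only matches lines where "swap" appears before any "#", so a line that is already commented out is left alone and running the command twice does not stack up extra "#" characters.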

Install the RPM packages:

[root@kube-master x86_64]# cd /root/rpmbuild/RPMS/x86_64

[root@kube-master x86_64]# yum install *.rpm

Start kubelet and enable it at boot:

systemctl enable --now kubelet

Import the Docker images:

cd /root/kubernetes/server/bin

for image in *.tar; do docker load < "$image"; done
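To know what to look for in the docker images output afterwards, the tag files next to each tarball can be read. This sketch assumes that each NAME.docker_tag file holds the tag of the image stored in NAME.tar and that the images use the k8s.gcr.io prefix, as the image names elsewhere in this article suggest; expected_images is a hypothetical helper.

```shell
# For each <name>.tar with a matching <name>.docker_tag, print the image
# reference that is expected after loading (assumes the k8s.gcr.io prefix).
expected_images() {
  dir="$1"
  for tarball in "$dir"/*.tar; do
    if [ ! -e "$tarball" ]; then continue; fi   # no tarballs: the glob stays literal
    name="$(basename "$tarball" .tar)"
    tag_file="$dir/$name.docker_tag"
    if [ -f "$tag_file" ]; then
      printf 'k8s.gcr.io/%s:%s\n' "$name" "$(cat "$tag_file")"
    fi
  done
}

bin_dir="/root/kubernetes/server/bin"
if [ -d "$bin_dir" ]; then
  expected_images "$bin_dir"
fi
echo "Compare the list above with the output of: docker images"
```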

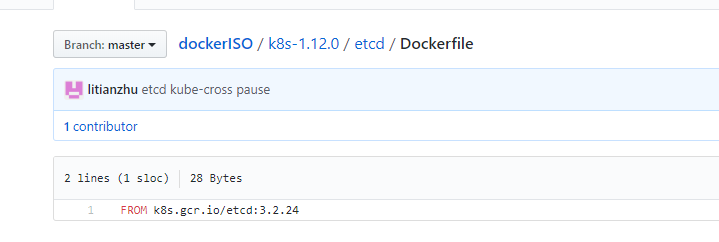

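Besides the images shipped in the package, four more are needed: etcd (the etcd database), coredns (DNS), pause (the Pod infrastructure image), and flannel (the network plugin).

The network image depends on the network plugin you choose and can be fetched directly with docker pull; flannel is used as the example here.

The other three are harder to get, because they have to be pulled from Google's registry, which is not reachable without a proxy.

Here is a way to get those images without a proxy:

The idea: Docker's official registry, https://hub.docker.com/ , is reachable without a proxy, and Docker Hub itself can pull images from Google. So once Docker Hub has built an image based on the Google one, that image can be fetched from Docker Hub with docker pull.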

-

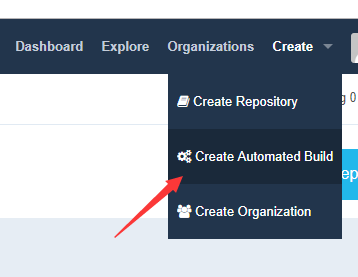

注册GitHub、Docker Hub账号。

-

在GitHub上创建一个项目,然后clone到本地。创建Dockerfile上传到GitHub。效果如下:

-

GitHub和Docker Hub做关联。

-

Docker Hub使用GitHub的Dockerfile拉取谷歌镜像。

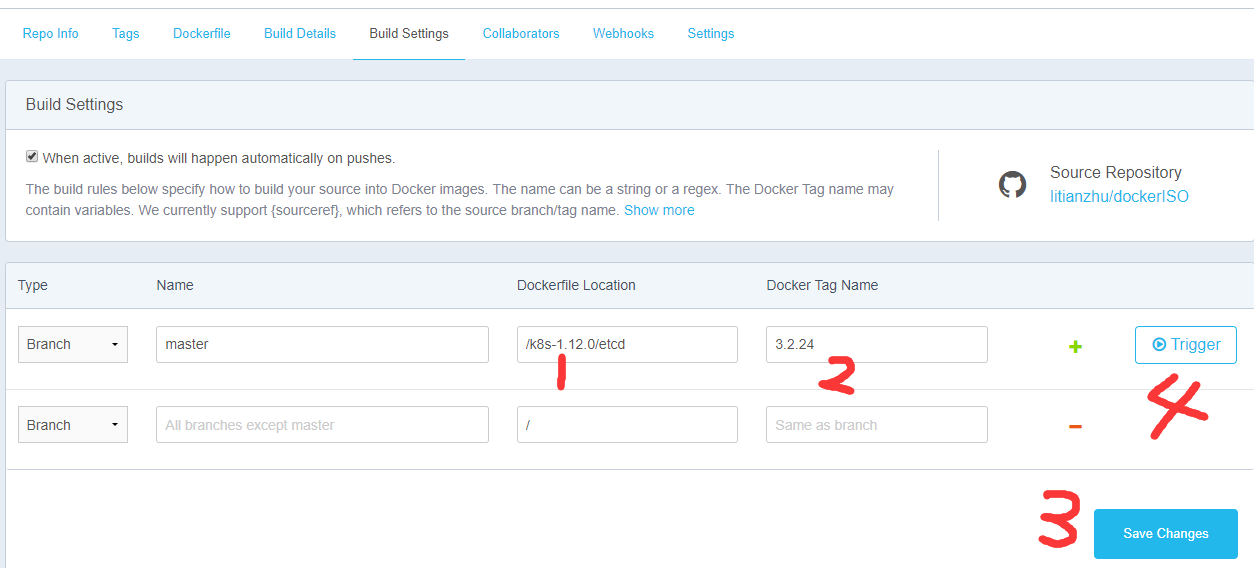

Dockerfile路径以自己创建的项目为/,后面加上镜像的版本,然后保存。

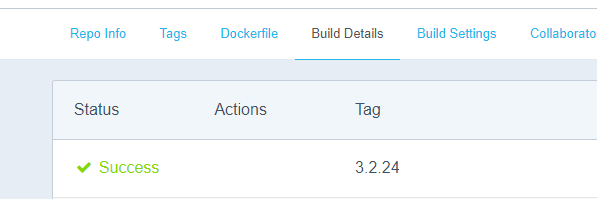

成功的样子

-

本地docker pull拉取Docker Hub的镜像。

[root@kube-master ~]# docker pull litianzhu/etcd:3.2.24

Trying to pull repository docker.io/litianzhu/etcd ...

3.2.24: Pulling from docker.io/litianzhu/etcd

8c5a7da1afbc: Pull complete

0d363128e48e: Pull complete

1ba5e77f0f6e: Pull complete

Digest: sha256:7b073bdab8c52dc23dfb3e2101597d30304437869ad8c0b425301e96a066c408

Status: Downloaded newer image for docker.io/litianzhu/etcd:3.2.24

- Retag the image pulled from Docker Hub with the Google image name.

docker tag litianzhu/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24

The other missing images can be obtained the same way.
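The pull-and-retag pair can be generated for every missing image. In the sketch below only etcd:3.2.24 is taken from this article; the pause and coredns tags, and the existence of matching repositories under the litianzhu account, are assumptions, so check the required names with kubeadm config images list for your version. The loop prints the docker commands instead of running them, and gcr_name is a hypothetical helper.

```shell
# Map a mirror reference such as "litianzhu/etcd:3.2.24" to "k8s.gcr.io/etcd:3.2.24".
gcr_name() {
  printf 'k8s.gcr.io/%s\n' "${1##*/}"
}

# etcd:3.2.24 comes from the article; the other two entries are assumptions,
# so verify them with `kubeadm config images list` before use.
MIRROR="litianzhu"
for image in etcd:3.2.24 pause:3.1 coredns:1.2.2; do
  echo "docker pull ${MIRROR}/${image}"
  echo "docker tag ${MIRROR}/${image} $(gcr_name "${MIRROR}/${image}")"
done
```

Printing the commands first makes it easy to review the image names before piping the output to a shell.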

For details on using git, see: https://blog.csdn.net/lovewingss/article/details/50943843

For details on having Docker Hub build images from GitHub, see: https://www.kubernetes.org.cn/878.html
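The Dockerfile pushed to GitHub for this trick is only visible in a screenshot, so the following is a guess at its simplest form: a single FROM line that re-exports the upstream image unchanged. The directory layout and the helper name write_mirror_dockerfile are illustrative.

```shell
# Write a one-line Dockerfile that re-exports an upstream image unchanged.
write_mirror_dockerfile() {
  target_dir="$1"; upstream="$2"
  mkdir -p "$target_dir"
  printf 'FROM %s\n' "$upstream" > "$target_dir/Dockerfile"
}

# Demo in a scratch directory; in practice this would be the cloned GitHub project.
demo_repo="$(mktemp -d)"
write_mirror_dockerfile "$demo_repo/etcd" "k8s.gcr.io/etcd:3.2.24"
cat "$demo_repo/etcd/Dockerfile"
```

Keeping one directory per image makes it straightforward to point each Docker Hub build at its own Dockerfile path.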

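The RPMs are installed and the images are in place, so Kubernetes can now be installed with kubeadm:

Specify the Kubernetes version and the Pod network CIDR for the cluster. (Why this CIDR is used is explained later.)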

[root@kube-master ~]# kubeadm init --kubernetes-version v1.12.0 --pod-network-cidr=10.244.0.0/16

[init] using Kubernetes version: v1.12.0

[preflight] running pre-flight checks

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [kube-master localhost] and IPs [192.168.42.135 127.0.0.1 ::1]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [kube-master localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [kube-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.42.135]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[certificates] Generated sa key and public key.

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 32.501922 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node kube-master as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node kube-master as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "kube-master" as an annotation

[bootstraptoken] using token: 8o7v95.5kt4gbn15jsg00pi

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.42.135:6443 --token 8o7v95.5kt4gbn15jsg00pi --discovery-token-ca-cert-hash sha256:5b4aa5b06bde377fe3d24d0696105d7b83f5a77c21ab76b25195e4e62c40a2d7

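Give the master admin access; otherwise kubectl cannot be used to query the cluster:

Note: these commands appear in the output of the initialization above. To give a worker node the same access, copy the file into the .kube directory on that node.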

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
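For the node case mentioned in the note, the same three steps can be wrapped in a small function. This is a sketch: install_kubeconfig is a hypothetical helper, the chmod 600 is an extra precaution that is not in the kubeadm output, and the scp target path is only an example.

```shell
# Copy a kubeconfig into <home>/.kube/config with owner-only permissions.
install_kubeconfig() {
  src="$1"; home_dir="$2"
  mkdir -p "$home_dir/.kube"
  cp "$src" "$home_dir/.kube/config"
  chmod 600 "$home_dir/.kube/config"
}

# On the master this would be:
#   install_kubeconfig /etc/kubernetes/admin.conf "$HOME"
# To give a node access, copy the file over first, for example:
#   scp /etc/kubernetes/admin.conf root@kube-node1:/root/admin.conf
# and then on the node:
#   install_kubeconfig /root/admin.conf "$HOME"
```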

Install the cluster network:

The Kubernetes website describes how to install each network add-on:

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

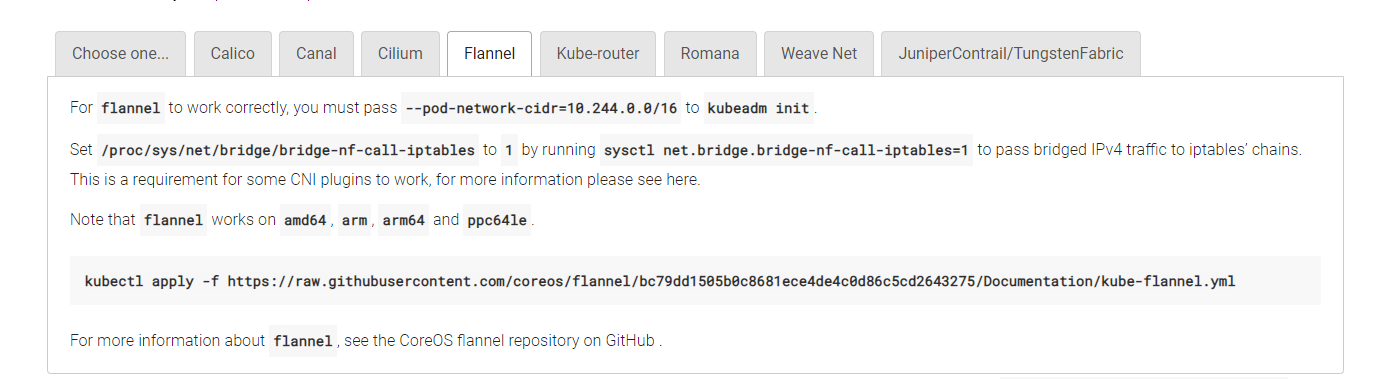

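For example, to install flannel, the Pod network CIDR passed at initialization must be 10.244.0.0/16, and /proc/sys/net/bridge/bridge-nf-call-iptables must be set to 1, among other requirements.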
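The second requirement is easy to verify before applying the manifest. The check below is a minimal sketch; flag_is_enabled is a hypothetical helper. Note that the file only exists when the br_netfilter kernel module is loaded, which is why a missing file is reported the same way as a value other than 1.

```shell
# Succeed only if the given file is readable and contains exactly "1".
flag_is_enabled() {
  [ -r "$1" ] && [ "$(cat "$1")" = "1" ]
}

bridge_flag="/proc/sys/net/bridge/bridge-nf-call-iptables"
if flag_is_enabled "$bridge_flag"; then
  echo "bridge-nf-call-iptables is enabled"
else
  echo "bridge-nf-call-iptables is not 1 (or the br_netfilter module is not loaded)"
fi
```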

Once these conditions are met, run the install command given on the website:

[root@kube-master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/bc79dd1505b0c8681ece4de4c0d86c5cd2643275/Documentation/kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

Check the cluster status:

[root@kube-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-576cbf47c7-sf2nc 0/1 Pending 0 13m

coredns-576cbf47c7-zrjjs 0/1 Pending 0 13m

etcd-kube-master 0/1 Pending 0 1s

kube-controller-manager-kube-master 0/1 Pending 0 3s

kube-flannel-ds-amd64-sqtmv 0/1 Init:0/1 0 103s

kube-proxy-dd95n 1/1 Running 0 13m

kube-scheduler-kube-master 0/1 Pending 0 1s

[root@kube-master ~]# kubectl describe pod kube-flannel-ds-amd64-sqtmv -n kube-system

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m11s default-scheduler Successfully assigned kube-system/kube-flannel-ds-amd64-sqtmv to kube-master

Normal Pulling 2m10s kubelet, kube-master pulling image "quay.io/coreos/flannel:v0.10.0-amd64"

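Note: downloading the flannel image does not require a proxy, but it is slow. If the download fails, just retry a few times. For me it usually succeeds on the third attempt : )

Check the cluster status again: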

[root@kube-master iso]# kubectl get node

NAME STATUS ROLES AGE VERSION

kube-master Ready master 19m v1.12.0

[root@kube-master iso]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-576cbf47c7-sf2nc 1/1 Running 0 19m

coredns-576cbf47c7-zrjjs 1/1 Running 0 19m

etcd-kube-master 1/1 Running 0 33s

kube-apiserver-kube-master 1/1 Running 0 28s

kube-controller-manager-kube-master 1/1 Running 0 46s

kube-flannel-ds-amd64-sqtmv 1/1 Running 0 7m29s

kube-proxy-dd95n 1/1 Running 0 19m

kube-scheduler-kube-master 1/1 Running 0 43s

Note: if the Pods are slow to start, try restarting kubelet.
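While waiting, it can be handy to see only the Pods that are not yet up. The filter below is a sketch that relies on STATUS being the third column of the default kubectl get pod output; pods_not_running is a hypothetical helper, and the demo rows are taken from the output earlier in this article.

```shell
# Read `kubectl get pod` output on stdin and print the name and status
# of every pod whose STATUS column is not "Running".
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Typical use on the master:
#   kubectl get pod -n kube-system | pods_not_running
# Demo with rows taken from the output earlier in this article:
printf '%s\n' \
  'NAME READY STATUS RESTARTS AGE' \
  'kube-flannel-ds-amd64-sqtmv 0/1 Init:0/1 0 103s' \
  'kube-proxy-dd95n 1/1 Running 0 13m' \
  | pods_not_running
```

An empty result means every listed Pod has reached the Running state.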

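On the other nodes, repeat the steps above: install everything and sync the images. Then run the join command, with the token produced by the master initialization, to join the cluster: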

kubeadm join 192.168.42.135:6443 --token 8o7v95.5kt4gbn15jsg00pi --discovery-token-ca-cert-hash sha256:5b4aa5b06bde377fe3d24d0696105d7b83f5a77c21ab76b25195e4e62c40a2d7

[root@kube-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

kube-master Ready master 30m v1.12.0

kube-node1 Ready <none> 3m6s v1.12.0

[root@kube-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-576cbf47c7-sf2nc 1/1 Running 0 29m

coredns-576cbf47c7-zrjjs 1/1 Running 0 29m

etcd-kube-master 1/1 Running 0 10m

kube-apiserver-kube-master 1/1 Running 0 10m

kube-controller-manager-kube-master 1/1 Running 0 11m

kube-flannel-ds-amd64-s485x 1/1 Running 0 3m11s

kube-flannel-ds-amd64-sqtmv 1/1 Running 0 17m

kube-proxy-9x7nr 1/1 Running 0 3m11s

kube-proxy-dd95n 1/1 Running 0 29m

kube-scheduler-kube-master 1/1 Running 0 11m

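Summary: the advantage of this installation method is that you can build your own Kubernetes cluster without a proxy, and the same approach works for other Kubernetes versions. The drawback is that the result is not suitable for production, only for learning: the master is critical, and if it fails the whole cluster goes down, so the master needs to be made highly available. A later article will cover how to do that. As for troubleshooting, if a cluster installed with kubeadm reports errors, check the messages log (/var/log/messages on CentOS); the highlighted errors are usually enough to resolve the problem.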

Finally, all the 1.12.0 RPM packages and images are attached:

Link: https://pan.baidu.com/s/1tDW2NSFhV35AkYIfITe_hQ Extraction code: hmyj

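This article describes in detail how to install and configure a Kubernetes cluster by building the Kubernetes RPM packages, without relying on a proxy. It covers the whole process: downloading kubernetes-server-linux-amd64.tar.gz, building the RPM for each component, installing and configuring the cluster and its network, and resolving common problems.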
