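1. Introduction to MongoDB

- MongoDB is a database built on distributed file storage and written in C++. It aims to provide a scalable, high-performance data storage solution for web applications.

- MongoDB stores data as documents whose structure consists of key/value (key=>value) pairs. The supported data structures are very loose: a MongoDB document resembles a JSON object, and a field value can contain other documents, arrays, and arrays of documents.

What is JSON?

- JSON stands for JavaScript Object Notation.

- JSON is a lightweight, text-based data interchange format.

- JSON is language independent.

- JSON is self-describing and easy to understand.

JSON uses JavaScript syntax to describe data objects, but it remains independent of language and platform. JSON parsers and JSON libraries exist for many different programming languages.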
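To make the document model concrete, here is a small sketch in plain JavaScript (the language of the mongo shell). Every field name and value below is made up for illustration; the only point is that a field value can itself be a document, an array, or an array of documents, and that such a structure survives a round trip through JSON text.

```javascript
// Hypothetical document: all field names and values are illustrative only.
const account = {
  name: "alice",                                 // plain key/value pair
  address: { city: "Beijing", zip: "100000" },   // embedded document
  tags: ["admin", "dev"],                        // array
  logins: [                                      // array of documents
    { ip: "192.168.126.128", ok: true },
    { ip: "127.0.0.1", ok: false },
  ],
};

// JSON is text: serialize the object, then parse it back.
const text = JSON.stringify(account);
const copy = JSON.parse(text);

console.log(copy.address.city);   // access a field of the embedded document
console.log(copy.logins.length);  // count the documents inside the array
```
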

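- MongoDB's most notable feature is its powerful query language. The syntax somewhat resembles an object-oriented query language, it can do almost everything a single-table query in a relational database can, and it also supports indexes on the data.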

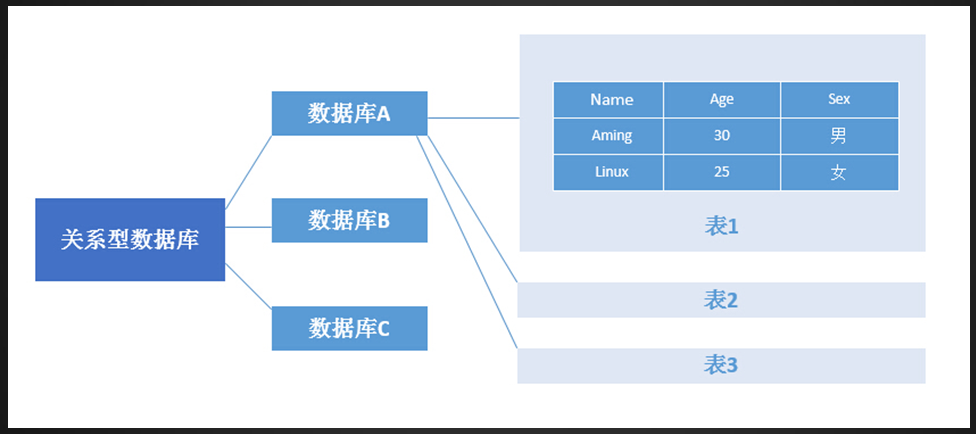

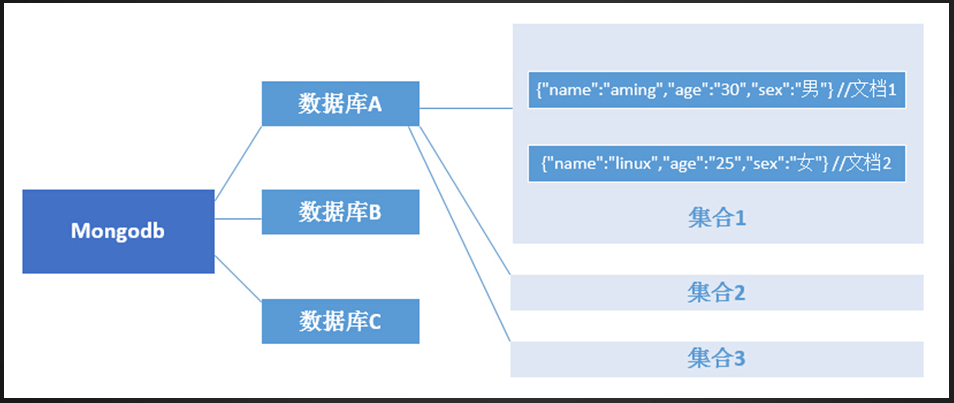

MongoDB compared with relational databases

Data structure of a relational database

Data structure of MongoDB

2. Installing MongoDB

Following the official documentation at https://docs.mongodb.com/manual/tutorial/install-mongodb-on-red-hat/, create the repo file

[root@localhost ~]# cd /etc/yum.repos.d/

[root@localhost yum.repos.d]# vim mongodb-org-4.0.repo

[mongodb-org-4.0]

name = MongoDB Repository

baseurl = https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck = 1

enabled = 1

gpgkey = https://www.mongodb.org/static/pgp/server-4.0.asc

Check that the mongodb-org packages now appear in yum list, then install all of them with yum

[root@localhost yum.repos.d]# yum list|grep mongodb-org

mongodb-org.x86_64 4.0.1-1.el7 @mongodb-org-4.0

mongodb-org-mongos.x86_64 4.0.1-1.el7 @mongodb-org-4.0

mongodb-org-server.x86_64 4.0.1-1.el7 @mongodb-org-4.0

mongodb-org-shell.x86_64 4.0.1-1.el7 @mongodb-org-4.0

mongodb-org-tools.x86_64 4.0.1-1.el7 @mongodb-org-4.0

[root@localhost yum.repos.d]# yum install -y mongodb-org

3. Connecting to MongoDB

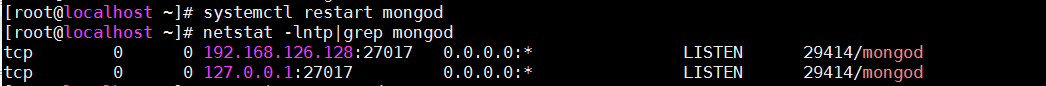

In mongod.conf, add this machine's IP address to bindIp, separated by a comma

[root@localhost ~]# vim /etc/mongod.conf

bindIp: 127.0.0.1,192.168.126.128 # add the host IP

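Connecting to MongoDB

Connect directly

[root@localhost ~]# mongo

Specify the port (27017 is the default if the configuration file does not set one)

[root@localhost ~]# mongo --port 27017

Connect remotely, which requires both the IP and the port

[root@localhost ~]# mongo --host 192.168.126.128 --port 27017

4. MongoDB user management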

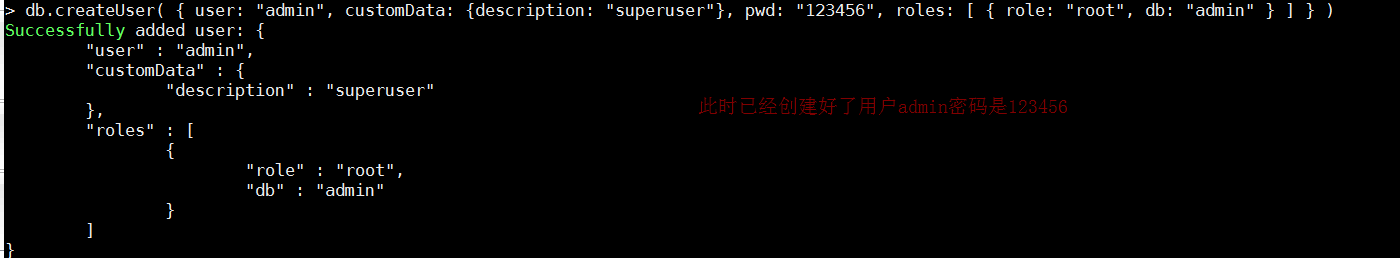

Enter the mongo shell and create a user. First switch to the admin database (use admin)

db.createUser( { user: "admin", customData: {description: "superuser"}, pwd: "123456", roles: [ { role: "root", db: "admin" } ] } ) //create the admin user

What the statement means:

db.createUser( { user: "admin", customData: {description: "superuser"}, pwd: "123456", roles: [ { role: "root", db: "admin" } ] } )

- db.createUser: the command that creates a user

- user: "admin": the user name

- customData: {description: "superuser"}: optional free-form information about the user

- pwd: "123456": the user's password

- roles: [ { role: "root", db: "admin" } ]: the roles granted; here the role root on the database admin
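The fields explained above can be assembled programmatically. The following is a minimal sketch in plain JavaScript; `buildUserSpec` is a hypothetical helper written for this article, not part of MongoDB. It only builds the object that would be handed to `db.createUser()`.

```javascript
// buildUserSpec is a hypothetical helper, not a MongoDB API.
// It assembles the argument object for db.createUser().
function buildUserSpec(user, pwd, roles, description) {
  if (!user || !pwd) {
    throw new Error("user and pwd are required");
  }
  if (!Array.isArray(roles)) {
    throw new Error("roles must be an array");
  }
  const spec = {
    user: user,
    pwd: pwd,
    // Each role is bound to the database it applies to.
    roles: roles.map(function (r) {
      return { role: r.role, db: r.db };
    }),
  };
  // customData is optional, so it is only added when a description is given.
  if (description !== undefined) {
    spec.customData = { description: description };
  }
  return spec;
}

const adminSpec = buildUserSpec(
  "admin", "123456", [{ role: "root", db: "admin" }], "superuser"
);
console.log(JSON.stringify(adminSpec));
```

In the mongo shell, after `use admin`, the resulting object could then be passed as `db.createUser(adminSpec)`.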

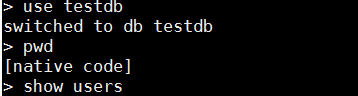

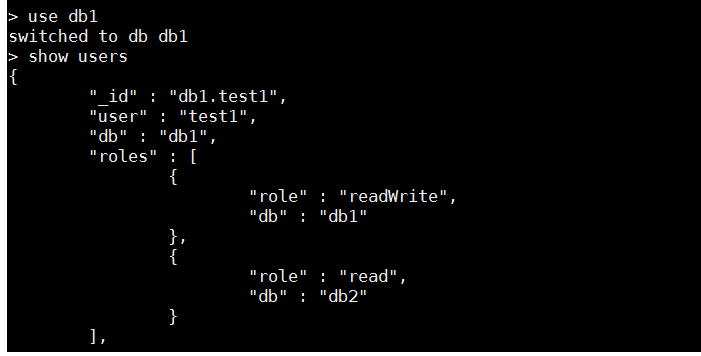

List all users; this requires switching to the admin database

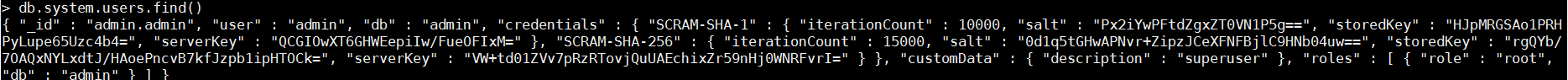

> db.system.users.find()

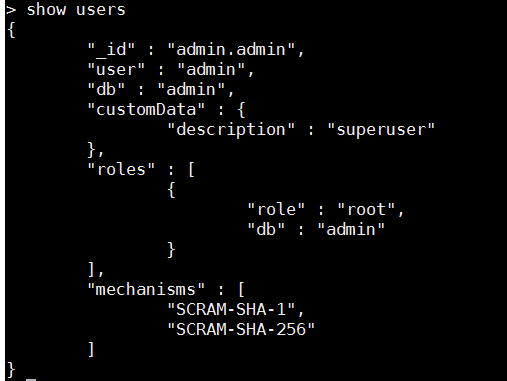

List all users of the current database

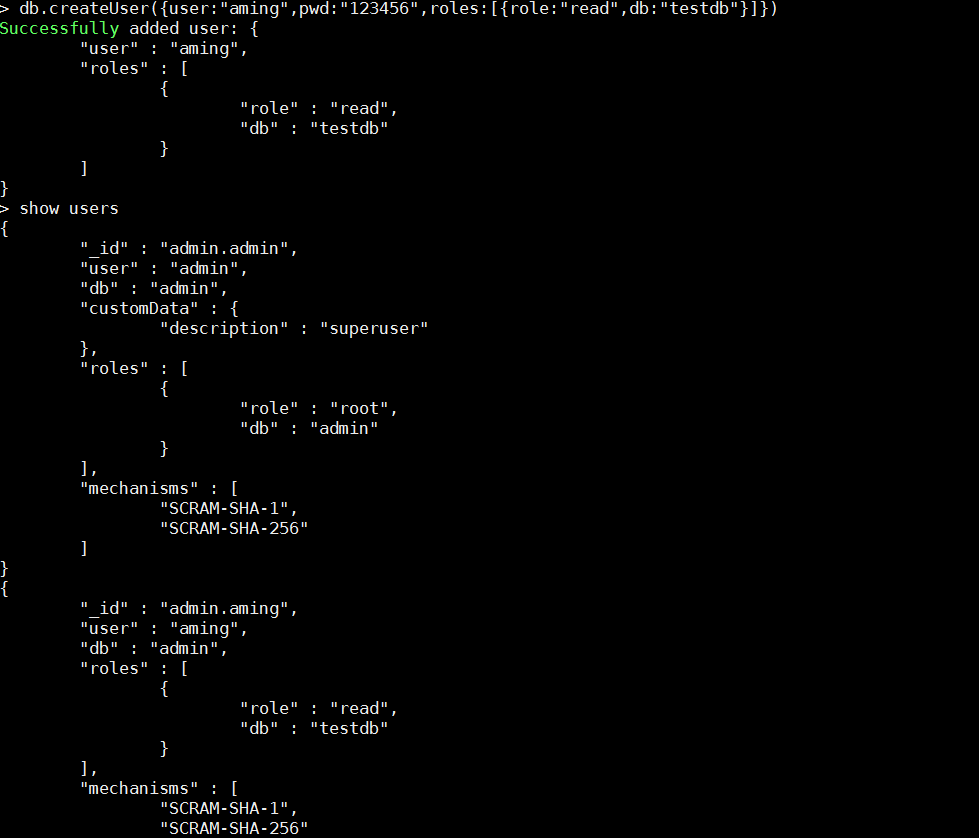

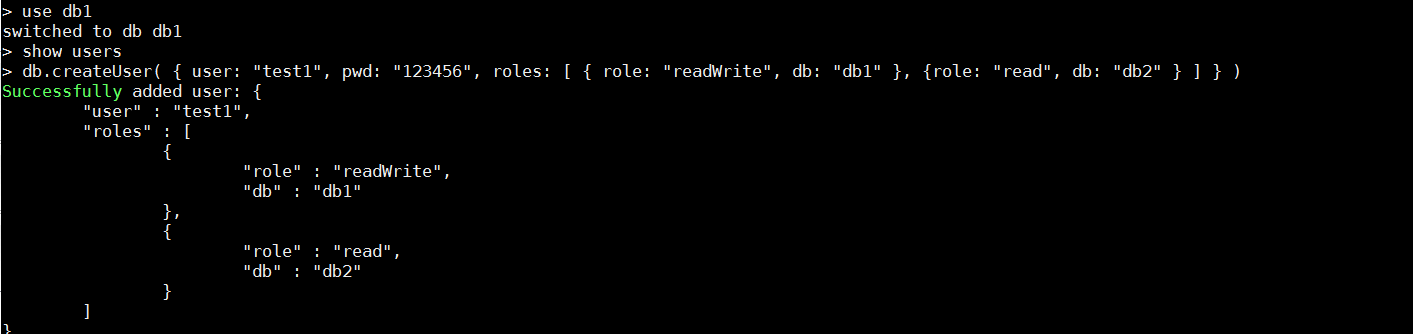

创建新用户

db.createUser({user:"aming",pwd:"123456",roles:[{role:"read",db:"testdb"}]})

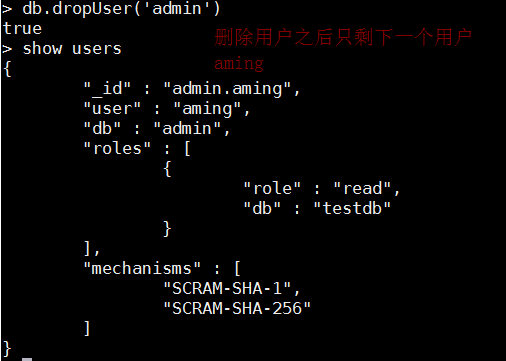

删除用户

db.dropUser('admin')

切换到testdb库,若此库不存在,会自动创建

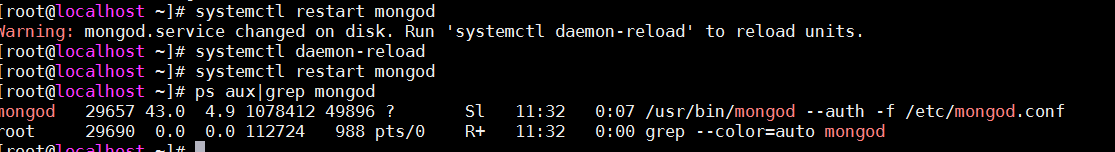

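For the users to take effect, edit the service unit file and add --auth. From then on, logging in requires authentication

vim /usr/lib/systemd/system/mongod.service

Change Environment="OPTIONS=-f /etc/mongod.conf" to Environment="OPTIONS=--auth -f /etc/mongod.conf"

Then restart the mongod service (after a unit file is edited, systemd has to reload it first with systemctl daemon-reload)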

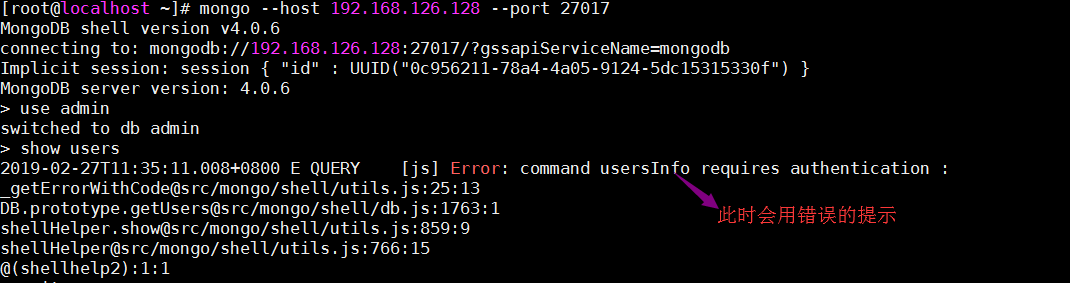

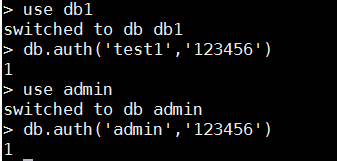

下面进行测试在不使用用户名和密码的情况下看一下能不能再MongoDB里面进行一些相关的操作

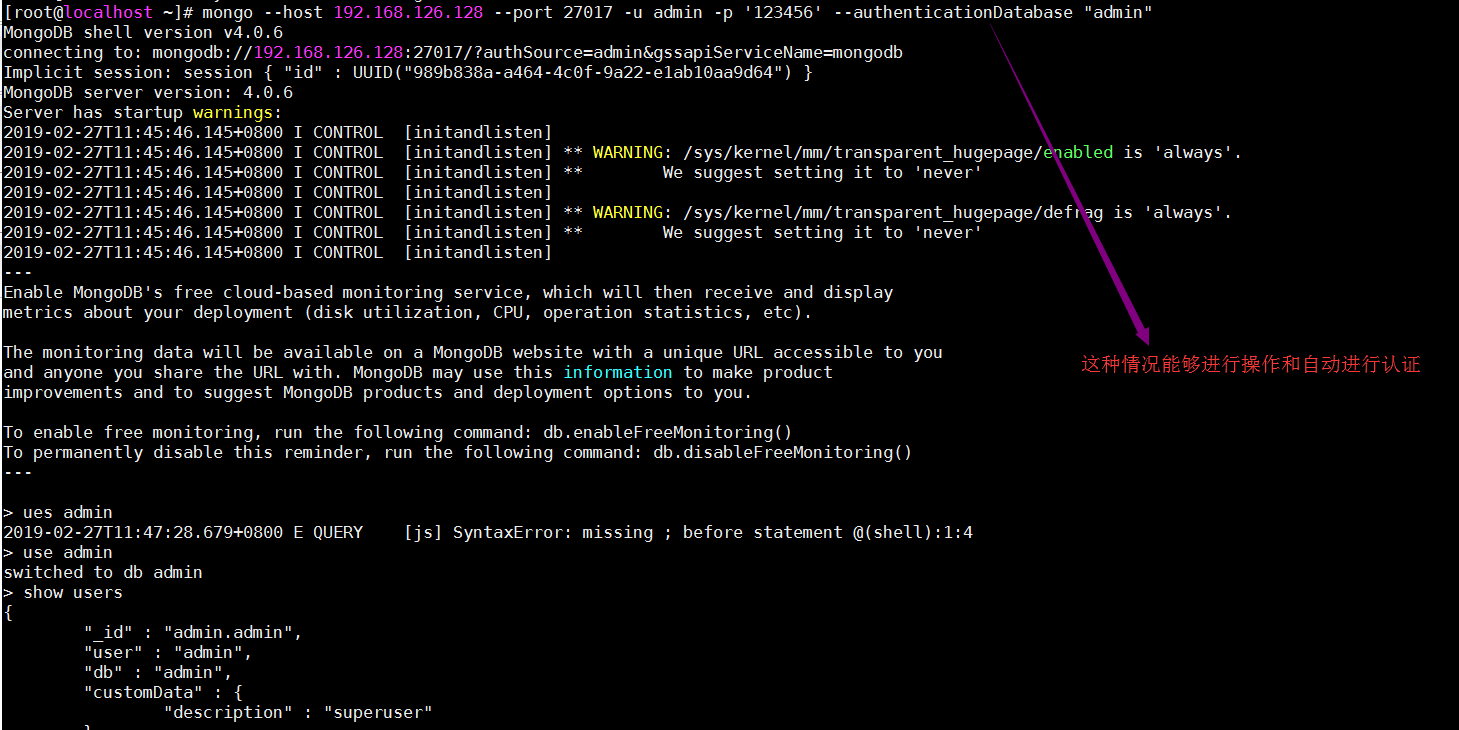

在使用用户名密码登录的情况下进行查看能不能进行相关操作

切换到db1下,创建新用户

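MongoDB user roles

- read: allows the user to read the specified database

- readWrite: allows the user to read and write the specified database

- dbAdmin: allows the user to run administrative functions in the specified database, such as creating and dropping indexes, viewing statistics, or accessing system.profile

- userAdmin: allows the user to write to the system.users collection, that is, to create, delete and manage users in the specified database

- clusterAdmin: available only in the admin database; grants administrative rights over all sharding and replica set functions

- readAnyDatabase: available only in the admin database; grants read access to all databases

- readWriteAnyDatabase: available only in the admin database; grants read and write access to all databases

- userAdminAnyDatabase: available only in the admin database; grants userAdmin rights on all databases

- dbAdminAnyDatabase: available only in the admin database; grants dbAdmin rights on all databases

- root: available only in the admin database; the superuser account with full privileges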
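The split between per-database roles and admin-only roles in the list above can be written down as a small lookup. This is an illustrative sketch in plain JavaScript; `checkRole` is a hypothetical helper that only encodes the list above and does not talk to a server.

```javascript
// Roles from the list above that are available only in the admin database.
const ADMIN_ONLY_ROLES = [
  "clusterAdmin", "readAnyDatabase", "readWriteAnyDatabase",
  "userAdminAnyDatabase", "dbAdminAnyDatabase", "root",
];
// Roles from the list above that are granted on one specific database.
const PER_DB_ROLES = ["read", "readWrite", "dbAdmin", "userAdmin"];

// checkRole is a hypothetical helper. It returns null when the role/database
// pair is consistent with the list, otherwise a short explanation.
function checkRole(role, db) {
  if (ADMIN_ONLY_ROLES.indexOf(role) !== -1) {
    return db === "admin"
      ? null
      : role + " is only available in the admin database";
  }
  if (PER_DB_ROLES.indexOf(role) !== -1) {
    return null;
  }
  return "unknown role: " + role;
}

console.log(checkRole("read", "testdb"));
console.log(checkRole("root", "testdb"));
```
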

5. Creating collections and managing data in MongoDB

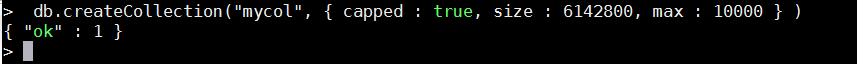

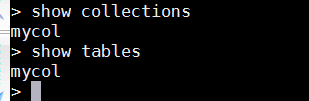

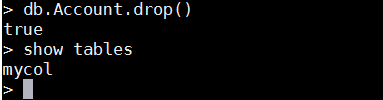

In db1, create a collection named mycol

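The command that creates a collection

db.createCollection("mycol", { capped : true, autoIndexID : true, size : 6142800, max : 10000 } )

Syntax: db.createCollection(name, options)

- name is the name of the collection

- options is optional and configures the collection; the parameters are listed below

- capped true/false (optional): if true, a capped collection is created. A capped collection has a fixed size; once it reaches its maximum size, the oldest entries are overwritten automatically. If true, the size parameter must also be given.

- autoIndexId true/false (optional): if true, an index on the _id field is created automatically. The option name is spelled autoIndexId (the command above writes autoIndexID); it defaults to true and is deprecated in recent versions.

- size (optional): the maximum size of a capped collection, in bytes. Required when capped is true.

- max (optional): the maximum number of documents allowed in a capped collection.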
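The rule that a capped collection needs a size can be captured in a few lines. This is a sketch in plain JavaScript under the assumptions stated in the comments; `cappedOptions` is a hypothetical helper that only builds the options object for `db.createCollection(name, options)` and deliberately leaves out the deprecated autoIndexId option.

```javascript
// cappedOptions is a hypothetical helper, not a MongoDB API.
// It builds the options object for a capped collection.
function cappedOptions(sizeBytes, maxDocs) {
  // size is mandatory for a capped collection and is given in bytes.
  if (!Number.isInteger(sizeBytes) || sizeBytes <= 0) {
    throw new Error("size (in bytes) is required for a capped collection");
  }
  const options = { capped: true, size: sizeBytes };
  // max (document count) is optional and only added when supplied.
  if (maxDocs !== undefined) {
    options.max = maxDocs;
  }
  return options;
}

const opts = cappedOptions(6142800, 10000);
console.log(JSON.stringify(opts));
```

In the mongo shell the result could be used as `db.createCollection("mycol", opts)`.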

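The command above may report an error while creating the collection (most likely because autoIndexID is not a recognized option name); the command below does not produce the error

List the collections: show collections, or show tables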

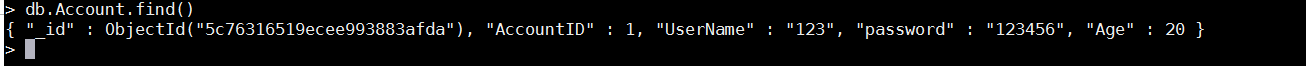

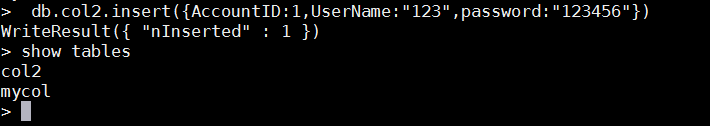

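Insert data directly into the collection Account. If the collection does not exist, MongoDB creates it automatically

db.Account.insert({AccountID:1,UserName:"123",password:"123456"})

Update data in the collection

db.Account.update({AccountID:1},{"$set":{"Age":20}}) //add a field to the first document in the collection Account

View all documents: db.Account.find(). The first document now contains the update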

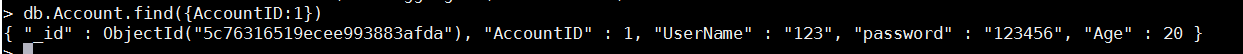

查看指定的文档, db.Account.find({AccountID:1})

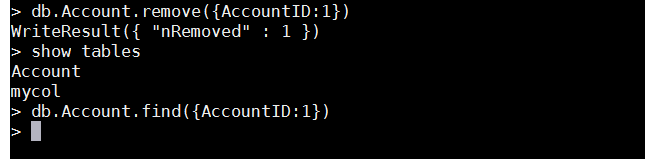

移除指定的文档:db.Account.remove({AccountID:1})

删除集合:db.Account.drop()

重新创建集合col2

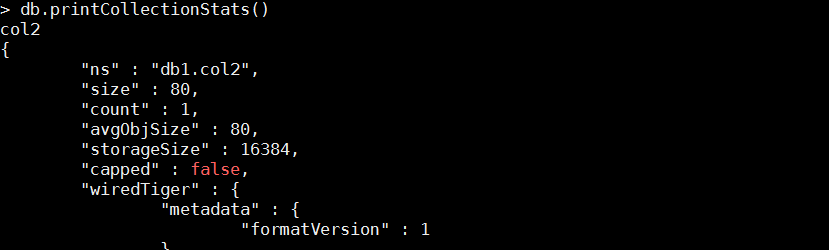

View the status of all collections: db.printCollectionStats()

6. The PHP mongodb extension

Download the source package and unpack it

[root@localhost ~]# cd /usr/local/src/

[root@localhost src]# wget https://pecl.php.net/get/mongodb-1.3.0.tgz

[root@localhost src]# ls mongodb-1.3.0.tgz

mongodb-1.3.0.tgz

[root@localhost src]# tar zxf mongodb-1.3.0.tgz

Generate the configure file with phpize

[root@localhost src]# cd mongodb-1.3.0

[root@localhost mongodb-1.3.0]# ls

config.m4 CREDITS Makefile.frag phongo_compat.h php_phongo.c php_phongo.h README.md src Vagrantfile

config.w32 LICENSE phongo_compat.c php_bson.h php_phongo_classes.h php_phongo_structs.h scripts tests

[root@localhost mongodb-1.3.0]# /usr/local/php-fpm/bin/phpize # generate the configure file

Configuring for:

PHP Api Version: 20131106

Zend Module Api No: 20131226

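Zend Extension Api No: 220131226

In the unpacked directory, run configure to generate the Makefile, then compile and install

./configure --with-php-config=/usr/local/php-fpm/bin/php-config

make

make install

Add extension = mongodb.so to the PHP configuration file, then check whether PHP loads the mongodb module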

[root@localhost mongodb-1.3.0]# vim /usr/local/php-fpm/etc/php.ini

extension=memcache.so

extension=redis.so ; added earlier

extension = mongodb.so ; add this line

[root@ying01 mongodb-1.3.0]# /usr/local/php-fpm/bin/php -m|grep mongodb

mongodb

[root@ying01 mongodb-1.3.0]# /etc/init.d/php-fpm restart # restart the php-fpm service

Gracefully shutting down php-fpm . done

Starting php-fpm done

7. The PHP mongo extension

Download the mongo source package, unpack it, and generate the configure file with phpize

[root@localhost ~]# cd /usr/local/src/

[root@localhost src]# wget https://pecl.php.net/get/mongo-1.6.16.tgz

[root@localhost src]# ls mongo-1.6.16.tgz

mongo-1.6.16.tgz

[root@localhost src]# tar zxf mongo-1.6.16.tgz

[root@localhost src]# cd mongo-1.6.16/

[root@localhost mongo-1.6.16]# /usr/local/php-fpm/bin/phpize

Configuring for:

PHP Api Version: 20131106

Zend Module Api No: 20131226

Zend Extension Api No: 220131226

Run configure to generate the Makefile, compile with the Makefile's preset parameters, then install

[root@localhost mongo-1.6.16]# ./configure --with-php-config=/usr/local/php-fpm/bin/php-config

[root@localhost mongo-1.6.16]# make

[root@localhost mongo-1.6.16]# make install

Add extension = mongo.so to the PHP configuration file, then check whether PHP loads the mongo module

[root@localhost mongo-1.6.16]# vim /usr/local/php-fpm/etc/php.ini

extension=memcache.so

extension=redis.so

extension = mongodb.so

extension = mongo.so ; newly added

[root@localhost mongo-1.6.16]# /usr/local/php-fpm/bin/php -m|grep mongo

mongo

mongodb

Write a PHP script to test whether the mongo extension works

[root@localhost mongo-1.6.16]# vim /data/wwwroot/default/1.php

<?php

$m = new MongoClient(); // connect

$db = $m->test; // get the database named "test"

$collection = $db->createCollection("runoob");

echo "Collection created successfully";

?>

[root@localhost mongo-1.6.16]# curl localhost/1.php

Collection created successfully

[root@localhost mongo-1.6.16]# mongo --host 192.168.126.128 --port 27017

> use test

switched to db test

> show tables

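runoob

>

8. MongoDB replica set architecture

8.1 Introduction to MongoDB replica sets

- Early versions used master-slave replication, one master and one slave, similar to MySQL. The slave in that architecture was read-only, and when the master went down the slave could not take over automatically.

- Master-slave has since been retired in favor of replica sets. A replica set has one primary and several read-only secondaries. Members can be given weights (priorities); when the primary goes down, the secondary with the highest priority is normally promoted to primary.

- The architecture can also include an arbiter, which only votes in elections and stores no data.

- In this architecture both reads and writes go to the primary; to balance the read load, the client has to specify the target server for reads explicitly.

8.2 Setting up a MongoDB replica set

Machine assignment: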

- ying01 192.168.112.136 PRIMARY

- ying02 192.168.112.138 SECONDARY

- ying03 192.168.112.139 SECONDARY

Install MongoDB on ying02 and on ying03

[root@ying02 ~]# cd /etc/yum.repos.d/

[root@ying02 yum.repos.d]# vim mongodb-org-4.0.repo

[mongodb-org-4.0]

name = MongoDB Repository

baseurl = https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck = 1

enabled = 1

gpgkey = https://www.mongodb.org/static/pgp/server-4.0.asc

[root@ying02 yum.repos.d]# yum install -y mongodb-org

On ying03

[root@ying03 ~]# cd /etc/yum.repos.d/

[root@ying03 yum.repos.d]# vim mongodb-org-4.0.repo

[mongodb-org-4.0]

name = MongoDB Repository

baseurl = https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck = 1

enabled = 1

gpgkey = https://www.mongodb.org/static/pgp/server-4.0.asc

[root@ying03 yum.repos.d]# yum install -y mongodb-org

Edit the configuration file on ying01

[root@ying01 yum.repos.d]# vim /etc/mongod.conf

bindIp: 127.0.0.1,192.168.112.136

replication: # uncomment this line

  oplogSizeMB: 20 # add

  replSetName: yinglinux # add

Restart the mongod service

[root@ying01 yum.repos.d]# systemctl restart mongod.service

[root@ying01 yum.repos.d]# ps aux|grep mongod

mongod 64534 9.7 3.1 1102168 58380 ? Sl 20:42 0:03 /usr/bin/mongod -f /etc/mongod.conf

root 64569 0.0 0.0 112720 984 pts/0 S+ 20:43 0:00 grep --color=auto mongod

On ying02, edit the mongod configuration file in the same way as on ying01

[root@ying02 ~]# vim /etc/mongod.conf

bindIp: 127.0.0.1,192.168.112.138

replication:

  oplogSizeMB: 20

  replSetName: yinglinux

On ying03, edit the mongod configuration file

[root@ying03 ~]# vim /etc/mongod.conf

bindIp: 127.0.0.1,192.168.112.139 # add the internal IP

replication: # add this section

  oplogSizeMB: 20

  replSetName: yinglinux

Start the mongod service on both ying02 and ying03

[root@ying02 ~]# ps aux|grep mongod

mongod 16421 6.5 2.7 1102140 52160 ? Sl 20:50 0:00 /usr/bin/mongod -f /etc/mongod.conf

root 16452 0.0 0.0 112720 984 pts/0 R+ 20:50 0:00 grep --color=auto mongod

[root@ying02 ~]# netstat -lntp |grep mongod

tcp 0 0 192.168.112.138:27017 0.0.0.0:* LISTEN 16421/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 16421/mongod

[root@ying02 ~]#

[root@ying03 ~]# systemctl start mongod

[root@ying03 ~]# ps aux|grep mongod

mongod 3773 5.6 2.7 1102148 52088 ? Sl 20:50 0:00 /usr/bin/mongod -f /etc/mongod.conf

root 3804 0.0 0.0 112720 984 pts/0 S+ 20:50 0:00 grep --color=auto mongod

[root@ying03 ~]# netstat -lntp |grep mongod

tcp 0 0 192.168.112.139:27017 0.0.0.0:* LISTEN 3773/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 3773/mongod

Now configure the replica set

> config={_id:"yinglinux",members:[{_id:0,host:"192.168.112.136:27017"},{_id:1,host:"192.168.112.138:27017"},{_id:2,host:"192.168.112.139:27017"}]} //define the replica set configuration

{

"_id" : "yinglinux",

"members" : [

{

"_id" : 0,

"host" : "192.168.112.136:27017"

},

{

"_id" : 1,

"host" : "192.168.112.138:27017"

},

{

"_id" : 2,

"host" : "192.168.112.139:27017"

}

]

}

> rs.initiate(config) //initialize the replica set

{

"ok" : 1,

"operationTime" : Timestamp(1535374960, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535374960, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

yinglinux:OTHER> rs.status() //check the replica set status

For brevity, only the important fields are shown

{

"_id" : 0,

"name" : "192.168.112.136:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", //PRIMARY: the primary

"uptime" : 1274,

"optime" : {

"ts" : Timestamp(1535375032, 1),

"t" : NumberLong(1)

{

"_id" : 1,

"name" : "192.168.112.138:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY", //SECONDARY: a secondary

"uptime" : 73,

"optime" : {

"ts" : Timestamp(1535375032, 1),

"t" : NumberLong(1)

{

"_id" : 2,

"name" : "192.168.112.139:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY", //SECONDARY: a secondary

"uptime" : 73,

"optime" : {

"ts" : Timestamp(1535375032, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535375032, 1),

"t" : NumberLong(1)

yinglinux:PRIMARY>
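The status output above is long, so it can help to reduce it to the few fields that matter. The following is a sketch in plain JavaScript; `summarizeStatus` is a hypothetical helper, and the sample object is trimmed by hand from the output above to the `name`, `health` and `stateStr` fields.

```javascript
// summarizeStatus is a hypothetical helper, not a MongoDB API.
// It reduces an rs.status()-shaped object to primary / secondaries / unhealthy.
function summarizeStatus(status) {
  const summary = { primary: null, secondaries: [], unhealthy: [] };
  status.members.forEach(function (m) {
    if (m.health !== 1) {
      summary.unhealthy.push(m.name);
    } else if (m.stateStr === "PRIMARY") {
      summary.primary = m.name;
    } else if (m.stateStr === "SECONDARY") {
      summary.secondaries.push(m.name);
    }
  });
  return summary;
}

// Sample trimmed from the rs.status() output shown above.
const sampleStatus = {
  members: [
    { name: "192.168.112.136:27017", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.112.138:27017", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.112.139:27017", health: 1, stateStr: "SECONDARY" },
  ],
};

const statusSummary = summarizeStatus(sampleStatus);
console.log(JSON.stringify(statusSummary));
```

In the mongo shell the same function could be applied to live data as `summarizeStatus(rs.status())`.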

8.3 Testing the MongoDB replica set

On ying01:

In the mydb database, add a collection named acc

yinglinux:PRIMARY> use admin

switched to db admin

yinglinux:PRIMARY> use mydb

switched to db mydb

yinglinux:PRIMARY> db.acc.insert({AccountID:1,UserName:"123",password:"123456"})

WriteResult({ "nInserted" : 1 })

yinglinux:PRIMARY> show dbs //list all databases

admin 0.000GB

config 0.000GB

db1 0.000GB

local 0.000GB

mydb 0.000GB

test 0.000GB

yinglinux:PRIMARY> use mydb //switch to the mydb database

switched to db mydb

yinglinux:PRIMARY> show tables //list the collections in mydb; acc has been created

acc

On ying02:

To list the databases on a secondary, run rs.slaveOk() first

yinglinux:SECONDARY> rs.slaveOk()

yinglinux:SECONDARY> show dbs

admin 0.000GB

config 0.000GB

db1 0.000GB

local 0.000GB

mydb 0.000GB

test 0.000GB

yinglinux:SECONDARY> use mydb //switch to the mydb database

switched to db mydb

yinglinux:SECONDARY> show tables //the acc collection is now visible on the secondary as well

acc

On ying03:

To list the databases on a secondary, run rs.slaveOk() first

yinglinux:SECONDARY> rs.slaveOk()

yinglinux:SECONDARY> show dbs

admin 0.000GB

config 0.000GB

db1 0.000GB

local 0.000GB

mydb 0.000GB

test 0.000GB

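yinglinux:SECONDARY> use mydb //switch to the mydb database

switched to db mydb

yinglinux:SECONDARY> show tables //the acc collection is now visible on the secondary as well

acc

Now simulate ying01 going down and check that one of the other two machines becomes PRIMARY

Add an iptables rule on ying01

[root@ying01 ~]# iptables -I INPUT -p tcp --dport 27017 -j DROP

Check the status on ying02: ying01 is shown as down and ying02 has become PRIMARY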

yinglinux:PRIMARY> rs.status()

"_id" : 0,

"name" : "192.168.112.136:27017",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)", //ying01 is unreachable

"_id" : 1,

"name" : "192.168.112.138:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", //ying02 has become PRIMARY

Because all three machines had a priority of 1, neither ying02 nor ying03 had precedence, so each had roughly a 50% chance of becoming PRIMARY.

Now set the priorities:

On ying01, delete the iptables rule added earlier

[root@ying01 ~]# iptables -D INPUT -p tcp --dport 27017 -j DROP

ying01 is now reachable again, but it comes back as SECONDARY; it does not automatically become PRIMARY again

"_id" : 0,

"name" : "192.168.112.136:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

ying02 is now PRIMARY, so the following is done on ying02

yinglinux:PRIMARY> cfg = rs.conf() //check the priorities

"_id" : 0,

"host" : "192.168.112.136:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1, //priority is 1

"_id" : 1,

"host" : "192.168.112.138:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1, //priority is also 1

"_id" : 2,

"host" : "192.168.112.139:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1, //priority is 1

Still on the PRIMARY, assign the priorities

yinglinux:PRIMARY> cfg.members[0].priority = 3 //set ying01 to 3

3

yinglinux:PRIMARY> cfg.members[1].priority = 2 //set ying02 to 2

yinglinux:PRIMARY> cfg.members[2].priority = 1 //set ying03 to 1

1

yinglinux:PRIMARY> rs.reconfig(cfg) //apply the new configuration

{

"ok" : 1,

"operationTime" : Timestamp(1535381404, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1535381404, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

ying01 now becomes PRIMARY because it has the highest priority. Note that the reconfiguration step can only be run on the PRIMARY

yinglinux:PRIMARY> cfg = rs.conf() //check the priorities

"_id" : 0,

"host" : "192.168.112.136:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 3,

"_id" : 1,

"host" : "192.168.112.138:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"_id" : 2,

"host" : "192.168.112.139:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

As the test shows, ying01 became PRIMARY again because it was given the highest priority
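The priority assignment used in this test can be sketched as a small function over a config-shaped object. This is plain JavaScript for illustration; `setPriorities` and `highestPriorityHost` are hypothetical helpers, the sample object keeps only the `host` and `priority` fields, and a real election also takes factors such as replication state into account, so the second helper only shows which member the article expects to win.

```javascript
// setPriorities is a hypothetical helper: it sets member priorities by host,
// modifying the config object in place (as the article does with cfg.members[i]).
function setPriorities(cfg, priorityByHost) {
  cfg.members.forEach(function (m) {
    if (Object.prototype.hasOwnProperty.call(priorityByHost, m.host)) {
      m.priority = priorityByHost[m.host];
    }
  });
  return cfg;
}

// highestPriorityHost is a hypothetical helper: it returns the host with the
// largest priority (the first one wins on a tie).
function highestPriorityHost(cfg) {
  let best = null;
  cfg.members.forEach(function (m) {
    if (best === null || m.priority > best.priority) {
      best = m;
    }
  });
  return best === null ? null : best.host;
}

// Sample shaped like the rs.conf() output above, reduced to host and priority.
const sampleCfg = {
  members: [
    { _id: 0, host: "192.168.112.136:27017", priority: 1 },
    { _id: 1, host: "192.168.112.138:27017", priority: 1 },
    { _id: 2, host: "192.168.112.139:27017", priority: 1 },
  ],
};

setPriorities(sampleCfg, {
  "192.168.112.136:27017": 3,
  "192.168.112.138:27017": 2,
  "192.168.112.139:27017": 1,
});
console.log(highestPriorityHost(sampleCfg));
```
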

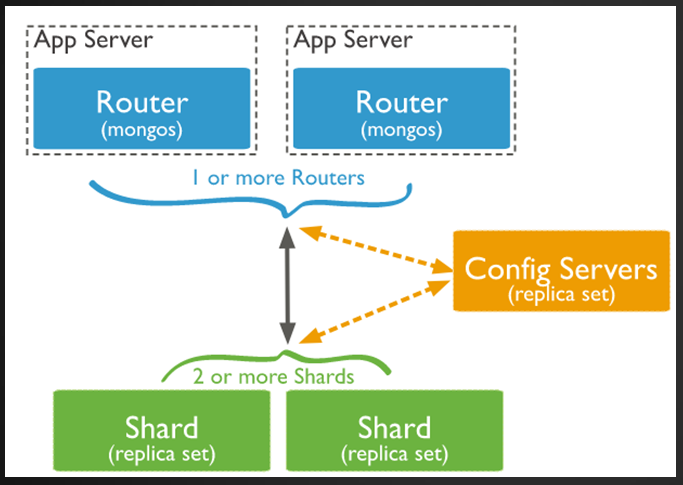

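9. MongoDB sharding

9.1 Introduction to MongoDB sharding

- Sharding splits a database by distributing large collections across different servers. For example, 100 GB of data can be split into 10 parts stored on 10 servers, so that each machine holds only 10 GB.

- A mongos process (the router) handles storage and access of the sharded data, which makes mongos the core of the sharding architecture. Clients do not know whether the data is sharded; they simply send their reads and writes to mongos.

- Although sharding spreads the data over many servers, every node still needs a standby so that the data stays highly available.

- When the system needs more space or resources, sharding makes it easy to scale on demand: it is enough to add machines running MongoDB to the sharded cluster.

The three roles in MongoDB sharding:

mongos: the entry point for requests to the cluster. All requests are coordinated through mongos, so the application needs no routing layer of its own; mongos is the request dispatcher and forwards each data request to the appropriate shard server. Production environments usually run several mongos instances so that one failing does not leave all MongoDB requests unserved.

config server: the configuration server, which stores all the cluster metadata (routing and shard configuration). mongos does not persist shard and routing information itself; it only caches it in memory, while the config servers actually store it. When mongos starts for the first time or restarts, it loads the configuration from the config servers; when the configuration changes later, every mongos is notified to update its state so that it keeps routing accurately. Production environments usually run several config servers, because they hold the shard routing metadata and that data must not be lost.

shard: a MongoDB instance that stores part of a collection's data. Each shard is a standalone mongod or a replica set; in production, every shard should be a replica set.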
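The routing role described above can be illustrated with a toy model. The sketch below is plain JavaScript and is not how mongos is implemented: the chunk table, its key ranges and the function name `routeToShard` are all assumptions made up for illustration. It only shows the idea that metadata maps ranges of a shard key to shards and that the router looks a key up in that metadata.

```javascript
// Toy chunk table (made-up ranges): each entry covers shard-key values in
// [min, max) and names the shard that stores them. In a real cluster this
// kind of metadata lives on the config servers and is cached by mongos.
const chunkTable = [
  { min: 0, max: 1000, shard: "shard1" },
  { min: 1000, max: 2000, shard: "shard2" },
  { min: 2000, max: Infinity, shard: "shard3" },
];

// routeToShard is a hypothetical function: it returns the shard whose range
// contains the key, or null when no range matches.
function routeToShard(table, key) {
  for (const chunk of table) {
    if (key >= chunk.min && key < chunk.max) {
      return chunk.shard;
    }
  }
  return null;
}

console.log(routeToShard(chunkTable, 1500));
```

The client only calls the router; which shard answers is decided by the lookup, which is the sense in which the client does not need to know that the data is sharded.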

9.2 MongoDB分片搭建

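Note: several unexplained errors appeared in this part. Hours of searching and repeated experiments did not resolve them. The steps should be correct in theory; because time was short, the problems are recorded here first and will be solved later.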

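Sharding setup: server plan

Three machines: ying01, ying02, ying03

ying01 runs: mongos, config server, replica set 1 primary, replica set 2 arbiter, replica set 3 secondary

ying02 runs: mongos, config server, replica set 1 secondary, replica set 2 primary, replica set 3 arbiter

ying03 runs: mongos, config server, replica set 1 arbiter, replica set 2 secondary, replica set 3 primary

Port assignment: mongos 20000, config 21000, replica set 1 27001, replica set 2 27002, replica set 3 27003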
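The plan above rotates the arbiter across the three machines, which is easy to get wrong by hand. The following sketch in plain JavaScript generates the three replica set configuration objects from the plan; `shardSetConfig` is a hypothetical helper, and the IP addresses are the ones used for ying01, ying02 and ying03 elsewhere in this article.

```javascript
// shardSetConfig is a hypothetical helper, not a MongoDB API.
// It builds the object passed to rs.initiate() for one shard replica set.
function shardSetConfig(setName, port, ips, arbiterIp) {
  return {
    _id: setName,
    members: ips.map(function (ip, index) {
      const member = { _id: index, host: ip + ":" + port };
      // Only the arbiter gets the arbiterOnly flag.
      if (ip === arbiterIp) {
        member.arbiterOnly = true;
      }
      return member;
    }),
  };
}

// ying01, ying02, ying03
const ips = ["192.168.112.136", "192.168.112.138", "192.168.112.139"];

// Arbiter placement follows the plan:
// shard1 -> ying03, shard2 -> ying01, shard3 -> ying02.
const shard1 = shardSetConfig("shard1", 27001, ips, "192.168.112.139");
const shard2 = shardSetConfig("shard2", 27002, ips, "192.168.112.136");
const shard3 = shardSetConfig("shard3", 27003, ips, "192.168.112.138");

console.log(JSON.stringify(shard2));
```

Each object would be passed explicitly to `rs.initiate()` on a data-bearing member of the corresponding set.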

On all three machines, stop the firewalld service and disable SELinux, or add rules for the corresponding ports

[root@ying01 ~]# mkdir -p /data/mongodb/mongos/log

[root@ying01 ~]# mkdir -p /data/mongodb/config/{data,log}

[root@ying01 ~]# mkdir -p /data/mongodb/shard1/{data,log}

[root@ying01 ~]# mkdir -p /data/mongodb/shard2/{data,log}

[root@ying01 ~]# mkdir -p /data/mongodb/shard3/{data,log}

[root@ying01 ~]# mkdir /etc/mongod/

config server configuration

[root@ying01 ~]# vim /etc/mongod/config.conf

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/congigsrv.log

logappend = true

bind_ip = 192.168.112.136

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs #replica set name

maxConns=20000 #maximum number of connections

Start the config server

[root@ying01 ~]# mongod -f /etc/mongod/config.conf

[root@ying01 ~]# ps aux|grep mongod

mongod 64534 0.9 5.4 1509136 101344 ? Sl 20:42 1:35 /usr/bin/mongod -f /etc/mongod.conf

root 65446 6.5 3.2 1147180 60648 ? Sl 23:22 0:01 mongod -f /etc/mongod/config.conf

root 65482 0.0 0.0 112720 980 pts/0 S+ 23:23 0:00 grep --color=auto mongod

[root@ying01 ~]# netstat -lntp |grep mongod

tcp 0 0 192.168.112.136:21000 0.0.0.0:* LISTEN 65446/mongod

tcp 0 0 192.168.112.136:27017 0.0.0.0:* LISTEN 64534/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 64534/mongod

Log in to MongoDB on port 21000 and initialize the replica set

[root@ying01 ~]# mongo --host 192.168.112.136 --port 21000

config = { _id: "configs", members: [ {_id : 0, host : "192.168.112.136:21000"},{_id : 1, host : "192.168.112.138:21000"},{_id : 2, host : "192.168.112.139:21000"}] }

{

"_id" : "configs",

"members" : [

{

"_id" : 0,

"host" : "192.168.112.136:21000"

},

{

"_id" : 1,

"host" : "192.168.112.138:21000"

},

{

"_id" : 2,

"host" : "192.168.112.139:21000"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1535383937, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1535383937, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(1535383937, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

configs:SECONDARY> rs.status()

"_id" : 0,

"name" : "192.168.112.136:21000",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", //now the primary

Shard configuration

shard1 configuration

[root@ying01 ~]# vim /etc/mongod/shard1.conf

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

logpath = /data/mongodb/shard1/log/shard1.log

logappend = true

bind_ip = 192.168.112.136

port = 27001

fork = true

#httpinterface=true #enable web monitoring

#rest=true

replSet=shard1 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections

shard2 configuration

[root@ying01 ~]# vim /etc/mongod/shard2.conf

pidfilepath = /var/run/mongodb/shard2.pid

dbpath = /data/mongodb/shard2/data

logpath = /data/mongodb/shard2/log/shard2.log

logappend = true

bind_ip = 192.168.112.136

port = 27002

fork = true

#httpinterface=true #enable web monitoring

#rest=true

replSet=shard2 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections

shard3 configuration

[root@ying01 ~]# vim /etc/mongod/shard3.conf

pidfilepath = /var/run/mongodb/shard3.pid

dbpath = /data/mongodb/shard3/data

logpath = /data/mongodb/shard3/log/shard3.log

logappend = true

bind_ip = 192.168.112.136

port = 27003

fork = true

#httpinterface=true #enable web monitoring

#rest=true

replSet=shard3 #replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 #maximum number of connections

Start shard1, shard2 and shard3 on all three machines

[root@ying01 ~]# mongod -f /etc/mongod/shard1.conf

2018-08-28T00:08:34.971+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 676

child process started successfully, parent exiting

Log in to MongoDB on port 27001

[root@ying01 ~]# mongo --host 192.168.112.136 --port 27001

> use admin

switched to db admin

>

> config = { _id: "shard1", members: [ {_id : 0, host : "192.168.112.136:27001"}, {_id: 1,host : "192.168.112.138:27001"},{_id : 2, host : "192.168.112.139:27001",arbiterOnly:true}] } //note: do not log in on ying03 for this step, because 139 is set as the arbiter here

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.112.136:27001"

},

{

"_id" : 1,

"host" : "192.168.112.138:27001"

},

{

"_id" : 2,

"host" : "192.168.112.139:27001",

"arbiterOnly" : true

}

]

}

> rs.initiate(config)

{

"ok" : 1, //"ok" : 1 means the configuration was accepted

"operationTime" : Timestamp(1535386695, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535386695, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard1:SECONDARY> rs.status()

"_id" : 0,

"name" : "192.168.112.136:27001",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", //ying01 is now PRIMARY

"uptime" : 438,

"optime" : {

"ts" : Timestamp(1535386758, 1),

"t" : NumberLong(1)

Create the shard2 replica set

On ying02, log in to MongoDB on port 27002

[root@ying02 ~]# mongo --host 192.168.112.138 --port 27002

> use admin

switched to db admin

> config = { _id: "shard2", members: [ {_id : 0, host : "192.168.112.136:27002" ,arbiterOnly:true},{_id : 1, host : "192.168.112.138:27002"},{_id : 2, host : "192.168.112.139:27002"}] } //do not log in on 136 for this step, because 136 is set as the arbiter here

{

"_id" : "shard2",

"members" : [

{

"_id" : 0,

"host" : "192.168.112.136:27002",

"arbiterOnly" : true

},

{

"_id" : 1,

"host" : "192.168.112.138:27002"

},

{

"_id" : 2,

"host" : "192.168.112.139:27002"

}

]

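}

> rs.initiate() //note: config is not passed here, so the set is initiated with a default single-member configuration; rs.initiate(config) was intended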

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.112.138:27002",

"ok" : 1,

"operationTime" : Timestamp(1535387519, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535387519, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard2:SECONDARY> rs.status()

{

"set" : "shard2",

"date" : ISODate("2018-08-27T16:32:32.274Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535387551, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535387551, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1535387551, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1535387551, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535387521, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.112.138:27002",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 318,

"optime" : {

"ts" : Timestamp(1535387551, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-27T16:32:31Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1535387519, 2),

"electionDate" : ISODate("2018-08-27T16:31:59Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1,

"operationTime" : Timestamp(1535387551, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535387551, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard2:PRIMARY>

[root@ying03 ~]# mongo --host 192.168.112.139 --port 27003

> use admin

switched to db admin

> config = { _id: "shard3", members: [ {_id : 0, host : "192.168.112.136:27003"}, {_id : 1, host : "192.168.112.138:27003", arbiterOnly:true}, {_id : 2, host : "192.168.112.139:27003"}] }

{

"_id" : "shard3",

"members" : [

{

"_id" : 0,

"host" : "192.168.112.136:27003"

},

{

"_id" : 1,

"host" : "192.168.112.138:27003",

"arbiterOnly" : true

},

{

"_id" : 2,

"host" : "192.168.112.139:27003"

}

]

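}

> rs.initiate() //note: config is not passed here, so the set is initiated with a default single-member configuration; rs.initiate(config) was intended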

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.112.139:27003",

"ok" : 1,

"operationTime" : Timestamp(1535387799, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535387799, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard3:SECONDARY> rs.status()

{

"set" : "shard3",

"date" : ISODate("2018-08-27T16:36:54.608Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535387812, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535387812, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1535387812, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1535387812, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535387802, 2),

"members" : [

{

"_id" : 0,

"name" : "192.168.112.139:27003",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 165,

"optime" : {

"ts" : Timestamp(1535387812, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-27T16:36:52Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1535387800, 1),

"electionDate" : ISODate("2018-08-27T16:36:40Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1,

"operationTime" : Timestamp(1535387812, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535387812, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

shard3:PRIMARY>

Now connect to mongos on port 20000 and add the shards

[root@ying01 ~]# mongo --host 192.168.112.136 --port 20000

MongoDB shell version v4.0.1

connecting to: mongodb://192.168.112.136:20000/

MongoDB server version: 4.0.1

Server has startup warnings:

2018-08-28T00:58:01.517+0800 I CONTROL [main]

2018-08-28T00:58:01.517+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2018-08-28T00:58:01.517+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2018-08-28T00:58:01.517+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2018-08-28T00:58:01.517+0800 I CONTROL [main]

mongos> sh.addShard("shard1/192.168.112.136:27001,192.168.112.138:27001,192.168.112.139:27001")

{

"shardAdded" : "shard1",

"ok" : 1,

"operationTime" : Timestamp(1535389509, 4),

"$clusterTime" : {

"clusterTime" : Timestamp(1535389509, 4),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard2/192.168.112.136:27002,192.168.112.138:27002,192.168.112.139:27002")

{

"ok" : 0,

"errmsg" : "in seed list shard2/192.168.112.136:27002,192.168.112.138:27002,192.168.112.139:27002, host 192.168.112.136:27002 does not belong to replica set shard2; found { hosts: [ \"192.168.112.138:27002\" ], setName: \"shard2\", setVersion: 1, ismaster: true, secondary: false, primary: \"192.168.112.138:27002\", me: \"192.168.112.138:27002\", electionId: ObjectId('7fffffff0000000000000001'), lastWrite: { opTime: { ts: Timestamp(1535389581, 1), t: 1 }, lastWriteDate: new Date(1535389581000), majorityOpTime: { ts: Timestamp(1535389581, 1), t: 1 }, majorityWriteDate: new Date(1535389581000) }, maxBsonObjectSize: 16777216, maxMessageSizeBytes: 48000000, maxWriteBatchSize: 100000, localTime: new Date(1535389587403), logicalSessionTimeoutMinutes: 30, minWireVersion: 0, maxWireVersion: 7, readOnly: false, compression: [ \"snappy\" ], ok: 1.0, operationTime: Timestamp(1535389581, 1), $clusterTime: { clusterTime: Timestamp(1535389586, 1), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } } }",

"code" : 96,

"codeName" : "OperationFailed",

"operationTime" : Timestamp(1535389586, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535389586, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard2/192.168.112.136:27002,192.168.112.138:27002,192.168.112.139:27002")

{

"ok" : 0,

"errmsg" : "in seed list shard2/192.168.112.136:27002,192.168.112.138:27002,192.168.112.139:27002, host 192.168.112.136:27002 does not belong to replica set shard2; found { hosts: [ \"192.168.112.138:27002\" ], setName: \"shard2\", setVersion: 1, ismaster: true, secondary: false, primary: \"192.168.112.138:27002\", me: \"192.168.112.138:27002\", electionId: ObjectId('7fffffff0000000000000001'), lastWrite: { opTime: { ts: Timestamp(1535389731, 1), t: 1 }, lastWriteDate: new Date(1535389731000), majorityOpTime: { ts: Timestamp(1535389731, 1), t: 1 }, majorityWriteDate: new Date(1535389731000) }, maxBsonObjectSize: 16777216, maxMessageSizeBytes: 48000000, maxWriteBatchSize: 100000, localTime: new Date(1535389736979), logicalSessionTimeoutMinutes: 30, minWireVersion: 0, maxWireVersion: 7, readOnly: false, compression: [ \"snappy\" ], ok: 1.0, operationTime: Timestamp(1535389731, 1), $clusterTime: { clusterTime: Timestamp(1535389735, 1), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } } }",

"code" : 96,

"codeName" : "OperationFailed",

"operationTime" : Timestamp(1535389735, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535389735, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard3/192.168.112.136:27003,192.168.112.138:27003,192.168.112.139:27003")

{

"ok" : 0,

"errmsg" : "in seed list shard3/192.168.112.136:27003,192.168.112.138:27003,192.168.112.139:27003, host 192.168.112.136:27003 does not belong to replica set shard3; found { hosts: [ \"192.168.112.139:27003\" ], setName: \"shard3\", setVersion: 1, ismaster: true, secondary: false, primary: \"192.168.112.139:27003\", me: \"192.168.112.139:27003\", electionId: ObjectId('7fffffff0000000000000001'), lastWrite: { opTime: { ts: Timestamp(1535390003, 1), t: 1 }, lastWriteDate: new Date(1535390003000), majorityOpTime: { ts: Timestamp(1535390003, 1), t: 1 }, majorityWriteDate: new Date(1535390003000) }, maxBsonObjectSize: 16777216, maxMessageSizeBytes: 48000000, maxWriteBatchSize: 100000, localTime: new Date(1535390011340), logicalSessionTimeoutMinutes: 30, minWireVersion: 0, maxWireVersion: 7, readOnly: false, compression: [ \"snappy\" ], ok: 1.0, operationTime: Timestamp(1535390003, 1), $clusterTime: { clusterTime: Timestamp(1535390007, 1), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } } }",

"code" : 96,

"codeName" : "OperationFailed",

"operationTime" : Timestamp(1535390007, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535390007, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard3/192.168.112.136:27003,192.168.112.138:27003,192.168.112.139:27003")

{

"ok" : 0,

"errmsg" : "in seed list shard3/192.168.112.136:27003,192.168.112.138:27003,192.168.112.139:27003, host 192.168.112.136:27003 does not belong to replica set shard3; found { hosts: [ \"192.168.112.139:27003\" ], setName: \"shard3\", setVersion: 1, ismaster: true, secondary: false, primary: \"192.168.112.139:27003\", me: \"192.168.112.139:27003\", electionId: ObjectId('7fffffff0000000000000001'), lastWrite: { opTime: { ts: Timestamp(1535390463, 1), t: 1 }, lastWriteDate: new Date(1535390463000), majorityOpTime: { ts: Timestamp(1535390463, 1), t: 1 }, majorityWriteDate: new Date(1535390463000) }, maxBsonObjectSize: 16777216, maxMessageSizeBytes: 48000000, maxWriteBatchSize: 100000, localTime: new Date(1535390472093), logicalSessionTimeoutMinutes: 30, minWireVersion: 0, maxWireVersion: 7, readOnly: false, compression: [ \"snappy\" ], ok: 1.0, operationTime: Timestamp(1535390463, 1), $clusterTime: { clusterTime: Timestamp(1535390469, 2), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } } }",

"code" : 96,

"codeName" : "OperationFailed",

"operationTime" : Timestamp(1535390469, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1535390469, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos>
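The error messages above say that a host in the seed list "does not belong to replica set", and the `found { hosts: [...] }` part shows that shard2 and shard3 each report only a single member. The sketch below, in plain JavaScript, is a rough model of that comparison and not MongoDB's own code; `seedList` and `hostsNotInSet` are hypothetical helpers, and the reported host list is copied from the shard2 error message.

```javascript
// seedList is a hypothetical helper: it builds the "setName/host1,host2,..."
// string of the form passed to sh.addShard().
function seedList(setName, hosts) {
  return setName + "/" + hosts.join(",");
}

// hostsNotInSet is a hypothetical helper: it returns the seed hosts that the
// replica set itself does not report as members.
function hostsNotInSet(seed, reportedHosts) {
  const hostPart = seed.slice(seed.indexOf("/") + 1);
  return hostPart.split(",").filter(function (h) {
    return reportedHosts.indexOf(h) === -1;
  });
}

const shard2Seed = seedList("shard2", [
  "192.168.112.136:27002",
  "192.168.112.138:27002",
  "192.168.112.139:27002",
]);

// From the error message: shard2 reports only this one host.
const reportedByShard2 = ["192.168.112.138:27002"];

const missingHosts = hostsNotInSet(shard2Seed, reportedByShard2);
console.log(missingHosts);
```

With the values from the transcript, the first unmatched host is 192.168.112.136:27002, the same host named in the error. This is consistent with shard2 and shard3 having been initiated without their config objects, so the other two members were never added to those sets.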
