Creating a k8s cluster with kubeadm

1, Environment preparation

1.1, Network planning

| Node name | IP address | Role | Installed software |
|---|---|---|---|
| k8s-master-01 | 172.16.2.101 | master | kubeadm, kubelet, kubectl, docker |
| k8s-master-02 | 172.16.2.102 | master | kubeadm, kubelet, kubectl, docker, haproxy, keepalived |
| k8s-master-03 | 172.16.2.103 | master | kubeadm, kubelet, kubectl, docker, haproxy, keepalived |
| k8s-node-01 | 172.16.2.111 | node | kubeadm, kubelet, kubectl, docker |
| k8s-node-02 | 172.16.2.112 | node | kubeadm, kubelet, kubectl, docker |
| k8s-node-03 | 172.16.2.113 | node | kubeadm, kubelet, kubectl, docker |
| Load-balancer VIP | 172.16.2.100 | VIP | |
| Pod network (podSubnet) | 10.245.0.0/16 | | |
| Service network (serviceSubnet) | 10.96.0.0/12 | | |

1.2, Environment details

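| Software | Version | Download URL | Notes |
|---|---|---|---|
| OS | CentOS 7 | | Kernel upgraded to the latest long-term (kernel-lt) release from elrepo; this article uses 4.4.180-2.el7.elrepo.x86_64 |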

1.3, System initialization

1, Set the hostname

Set the hostname on each machine separately. For example, on k8s-master-01:

[root@localhost ~]# hostnamectl --static set-hostname k8s-master-01

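2, Install ansible on k8s-master-01 for centralized management

The installation steps are omitted. Remember to turn off the interactive SSH host key prompt in the ansible configuration file (host_key_checking = False).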

[root@k8s-master-01 ~]# cat hosts

[k8s-master]
172.16.2.101
172.16.2.102
172.16.2.103
[k8s-node]
172.16.2.111
172.16.2.112
172.16.2.113
[k8s-all:children]
k8s-master
k8s-node

3, Edit /etc/hosts and sync it to all machines.

[root@k8s-master-01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.16.2.101 k8s-etcd-01 k8s-master-01
172.16.2.102 k8s-etcd-02 k8s-master-02
172.16.2.103 k8s-etcd-03 k8s-master-03
172.16.2.111 k8s-node-01
172.16.2.112 k8s-node-02
172.16.2.113 k8s-node-03
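Before syncing, it can help to confirm that every planned hostname actually has an entry. The bash sketch below is one way to check this. `missing_hosts` is a hypothetical helper: it only parses an /etc/hosts-style file and does not query DNS.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch, not part of any tool).
# missing_hosts FILE NAME...
# Prints every NAME that has no entry in FILE (an /etc/hosts-style file)
# and returns 1 if at least one name is missing, 0 otherwise.
missing_hosts() {
  local file=$1 name rc=0
  shift
  for name in "$@"; do
    # Skip comment lines; a name may appear in any column after the IP.
    if ! awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }' "$file"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `missing_hosts /etc/hosts k8s-master-0{1,2,3} k8s-node-0{1,2,3}` prints nothing when all six names are present. You can then push the file to every node with Ansible's copy module, e.g. `ansible -i hosts k8s-all -m copy -a 'src=/etc/hosts dest=/etc/hosts'`.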

4, Disable the firewall (the first command also disables SELinux in its config file)

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m shell -a "sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config"

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m service -a 'name=firewalld enabled=no state=stopped'

5, Synchronize time

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m yum -a 'state=present name=chrony'

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m shell -a 'echo "server time.aliyun.com iburst" >> /etc/chrony.conf'

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m service -a 'state=started name=chronyd enabled=yes'
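The `echo ... >>` step above adds another `server` line every time it is re-run. Below is a minimal idempotent alternative, assuming bash and GNU grep. The name `ensure_line` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch, assuming GNU grep).
# ensure_line FILE LINE
# Appends LINE to FILE only if an identical line is not already present,
# so re-running the step does not create duplicates.
ensure_line() {
  local file=$1 line=$2
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet.
  # If FILE does not exist yet, grep fails and the line is appended.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

To do the same across all nodes, use Ansible's lineinfile module: `ansible -i hosts k8s-all -m lineinfile -a 'path=/etc/chrony.conf line="server time.aliyun.com iburst"'`. After chronyd restarts, `chronyc sources` lists the configured time servers and their state.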

6, Disable SELinux

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m selinux -a 'state=disabled'

7, Install the elrepo repository (on all machines)

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m shell -a 'rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org'

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m shell -a 'rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm'

8, Upgrade the kernel

[root@k8s-master-01 ~]# yum --enablerepo=elrepo-kernel install kernel-lt kernel-lt-devel -y

[root@k8s-master-01 ~]# grub2-set-default 0

This changes the default boot entry so that the newly installed kernel (entry 0) is booted.

[root@k8s-master-01 ~]# reboot

9, Add yum repositories

[root@k8s-master-01 ~]# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@k8s-master-01 ~]# wget http://mirrors.aliyun.com/repo/Centos-7.repo -O /etc/yum.repos.d/CentOS-Base.repo

[root@k8s-master-01 ~]# wget http://mirrors.aliyun.com/repo/epel-7.repo -O /etc/yum.repos.d/epel.repo

[root@k8s-master-01 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

Sync them to the other nodes:

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m synchronize -a 'src=/etc/yum.repos.d/ dest=/etc/yum.repos.d/ compress=yes'

10, Load the ipvs kernel modules

[root@k8s-master-01 ~]# vi ipvs.modules

#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4

[root@k8s-master-01 ~]# chmod +x ipvs.modules

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m copy -a 'src=ipvs.modules dest=/etc/sysconfig/modules/'

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m shell -a 'bash /etc/sysconfig/modules/ipvs.modules'
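To confirm that the modules actually loaded on a node, compare them against /proc/modules, where the first column of each line is a loaded module's name. Below is a minimal sketch; `missing_modules` is a hypothetical helper. Two caveats apply. A feature compiled directly into the kernel does not show up in /proc/modules. Also, from kernel 4.19 onward nf_conntrack_ipv4 was merged into nf_conntrack, so newer kernels would need the last line of ipvs.modules changed; the 4.4 kernel used here still has the separate module.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch).
# missing_modules MODULES_FILE MODULE...
# MODULES_FILE is normally /proc/modules (one loaded module per line,
# module name in column 1). Prints each MODULE that is not listed and
# returns 1 if any is missing.
missing_modules() {
  local modfile=$1 mod rc=0
  shift
  for mod in "$@"; do
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit (found ? 0 : 1) }' "$modfile"; then
      echo "$mod"
      rc=1
    fi
  done
  return "$rc"
}
```

On a node, `missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4` prints nothing when all five are loaded.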

11, Tune kernel parameters

[root@k8s-master-01 ~]# vi sysctl_k8s.conf

net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_intvl = 30
net.ipv4.tcp_keepalive_probes = 10
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
net.ipv4.neigh.default.gc_stale_time = 120
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.default.arp_announce = 2
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_announce = 2
net.ipv4.ip_forward = 1
net.ipv4.tcp_max_tw_buckets = 5000
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 1024
net.ipv4.tcp_synack_retries = 2
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
net.netfilter.nf_conntrack_max = 2310720
fs.inotify.max_user_watches=89100
fs.may_detach_mounts = 1
fs.file-max = 52706963
fs.nr_open = 52706963

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m copy -a 'src=sysctl_k8s.conf dest=/etc/sysctl.d/'

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m shell -a 'sysctl --system'
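`sysctl --system` only picks up files from its configuration directories, such as /etc/sysctl.d/. In addition, the net.bridge.* keys only exist once the br_netfilter module is loaded. A quick way to catch either problem is to compare the live values with the file. The following is a minimal sketch; `check_sysctl` is a hypothetical helper, and its optional second argument points it at a copy of /proc so it can be tried without root.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (a sketch, not part of procps).
# norm: squeeze all whitespace to single spaces and trim both ends.
norm() { tr -s '[:space:]' ' ' | sed 's/^ //; s/ $//'; }

# check_sysctl CONF [PROC_ROOT]
# For every "key = value" line in CONF, compares the wanted value with the
# live one in PROC_ROOT/sys/<key with dots turned into slashes>.
# Prints one line per mismatch or missing key and returns 1 if any.
check_sysctl() {
  local conf=$1 root=${2:-/proc} key want have path rc=0
  while IFS='=' read -r key want; do
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    # Skip blank lines and comments.
    [[ -z $key || $key == \#* || $key == \;* ]] && continue
    want=$(printf '%s' "$want" | norm)
    path="$root/sys/${key//.//}"
    if [[ ! -r $path ]]; then
      echo "$key: missing"          # e.g. net.bridge.* before br_netfilter
      rc=1
      continue
    fi
    have=$(norm < "$path")
    if [[ $have != "$want" ]]; then
      echo "$key: want $want, have $have"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}
```

On a node, `check_sysctl /etc/sysctl.d/sysctl_k8s.conf` prints nothing and returns 0 when every value in the file is in effect.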

2, Deploy keepalived and haproxy

1, Install keepalived and haproxy

Run on k8s-master-02 and k8s-master-03:

[root@k8s-master-02 ~]# yum install -y keepalived haproxy

2, Edit the configuration

Run on k8s-master-02 and k8s-master-03:

[root@k8s-master-02 ~]# mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.default

[root@k8s-master-02 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    notification_email {
        duxuefeng@exchain.com
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_1
}
vrrp_instance VI_1 {
    state MASTER
    interface ens32                  # change to your NIC name
    lvs_sync_daemon_inteface ens32   # change to your NIC name
    virtual_router_id 88
    advert_int 1
    priority 100                     # 100 on k8s-master-02, 90 on k8s-master-03
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.16.2.100                 # change to the load-balancer VIP
    }
}

[root@k8s-master-02 ~]# mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.default

[root@k8s-master-02 ~]# cat /etc/haproxy/haproxy.cfg

global
    chroot /var/lib/haproxy
    daemon
    group haproxy
    user haproxy
    log 127.0.0.1:514 local0 warning
    pidfile /var/lib/haproxy.pid
    maxconn 20000
    spread-checks 3
    nbproc 8

defaults
    log global
    mode tcp
    retries 3
    option redispatch

listen https-apiserver
    bind 172.16.2.100:8443   # change to the load-balancer VIP; port 8443
    mode tcp
    balance roundrobin
    timeout server 900s
    timeout connect 15s
    # one server line per master address
    server apiserver01 172.16.2.101:6443 check port 6443 inter 5000 fall 5
    server apiserver02 172.16.2.102:6443 check port 6443 inter 5000 fall 5
    server apiserver03 172.16.2.103:6443 check port 6443 inter 5000 fall 5

3, Start the services

Run on k8s-master-02 and k8s-master-03:

[root@k8s-master-02 ~]# systemctl enable keepalived && systemctl start keepalived

[root@k8s-master-02 ~]# systemctl enable haproxy && systemctl start haproxy
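controlPlaneEndpoint in the kubeadm configuration will point at 172.16.2.100:8443, so it is worth confirming that the VIP answers before running kubeadm init. Below is a minimal sketch that uses bash's /dev/tcp redirection together with timeout(1); `check_tcp` is a hypothetical helper name. Be aware that on a node that does not currently hold the VIP, haproxy's `bind 172.16.2.100:8443` normally fails unless non-local binds are allowed (for example `net.ipv4.ip_nonlocal_bind = 1`). `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration syntax.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch; needs bash built with /dev/tcp support).
# check_tcp HOST PORT [SECONDS]
# Returns 0 if a TCP connection to HOST:PORT succeeds within SECONDS
# (default 3), non-zero otherwise (124 means the attempt timed out).
check_tcp() {
  local host=$1 port=$2 secs=${3:-3}
  # Inside bash -c, $0 is the host and $1 the port; opening
  # /dev/tcp/HOST/PORT makes bash perform the TCP connect.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example, `check_tcp 172.16.2.100 8443 && echo "VIP reachable"`. `ip addr show ens32` on k8s-master-02 and k8s-master-03 shows which node currently holds 172.16.2.100.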

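3, Deploy Kubernetes

1, Install the Kubernetes packages

Run on all nodes. kubeadm checks the Docker version, so Docker must be a version this kubeadm release supports. Here the latest versions of both are installed by default: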

[root@k8s-master-01 ~]# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

[root@k8s-master-01 ~]# yum install -y ipvsadm ipset docker-ce

[root@k8s-master-01 ~]# systemctl enable kubelet

[root@k8s-master-01 ~]# ansible -i hosts k8s-all -m service -a 'name=docker enabled=yes state=started'

2, Prepare the init configuration

Generate the default configuration:

[root@k8s-master-01 ~]# kubeadm config print init-defaults > kubeadm-init.yaml

[root@k8s-master-01 ~]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta1
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.2.101
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master-01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.16.2.100:8443"   # set to the load-balancer VIP; make sure the VIP port is reachable
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers   # switched to the Aliyun mirror of the k8s images
kind: ClusterConfiguration
kubernetesVersion: v1.14.0
networking:
  dnsDomain: cluster.local
  podSubnet: "10.245.0.0/16"
  serviceSubnet: 10.96.0.0/12   # the service network
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
# The generated defaults do not include this ipvs section; add it by hand.
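podSubnet (10.245.0.0/16) and serviceSubnet (10.96.0.0/12) must not overlap each other or the node addresses. Two IPv4 CIDR blocks overlap exactly when their network addresses agree on the first min(prefix1, prefix2) bits. A small bash sketch of that test follows; the helper names are hypothetical, and the code handles IPv4 only.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (a sketch, IPv4 only).
# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_overlap NET1/LEN1 NET2/LEN2
# Returns 0 if the two CIDR blocks share any address, 1 otherwise.
cidr_overlap() {
  local n1=${1%/*} l1=${1#*/} n2=${2%/*} l2=${2#*/}
  # Compare only the bits covered by the shorter (larger) prefix.
  local short=$(( l1 < l2 ? l1 : l2 ))
  local mask=$(( (0xFFFFFFFF << (32 - short)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$n1") & mask) == ($(ip_to_int "$n2") & mask) ))
}
```

For example, `cidr_overlap 10.245.0.0/16 10.96.0.0/12 || echo "no overlap"` prints "no overlap" for the values used in this article.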

3, Pre-pull the images (optional)

[root@k8s-master-01 ~]# kubeadm config images pull --config kubeadm-init.yaml

4, Initialize

[root@k8s-master-01 ~]# kubeadm init --config kubeadm-init.yaml

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 172.16.2.100:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:e1a2cb7e9d5187ae9901269db55a56283d12b6f76831d0b95f5cbda2af68f513 \

--experimental-control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.2.100:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:e1a2cb7e9d5187ae9901269db55a56283d12b6f76831d0b95f5cbda2af68f513

[root@k8s-master-01 ~]# mkdir -p $HOME/.kube

[root@k8s-master-01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master-01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config


If initialization fails, reset with the following commands.

Run on all nodes:

[root@k8s-master-01 ~]# kubeadm reset

[root@k8s-master-01 ~]# rm -rf /var/lib/cni/ $HOME/.kube/config

5, Check component status

[root@k8s-master-01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@k8s-master-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master-01 NotReady master 74m v1.14.2

So far there is only one node; its role is master and its status is NotReady.

6, Add the other master nodes to the cluster

On k8s-master-01, copy the certificate files to the other master nodes:

[root@k8s-master-01 ~]# cat copy-cer_master.sh

# Copy the shared CA, service-account and front-proxy keys plus admin.conf to the other masters
USER=root
CONTROL_PLANE_IPS="k8s-master-02 k8s-master-03"
for host in ${CONTROL_PLANE_IPS}; do
    ssh "${USER}"@$host "mkdir -p /etc/kubernetes/pki/etcd"
    scp /etc/kubernetes/pki/ca.* "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.* "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.* "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.* "${USER}"@$host:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/admin.conf "${USER}"@$host:/etc/kubernetes/
done

[root@k8s-master-01 ~]# bash copy-cer_master.sh

Run on k8s-master-02 and k8s-master-03. Note the --experimental-control-plane flag:

[root@k8s-master-02 ~]# kubeadm join 172.16.2.100:8443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:e1a2cb7e9d5187ae9901269db55a56283d12b6f76831d0b95f5cbda2af68f513 --experimental-control-plane

Note: tokens have a limited lifetime (ttl: 24h0m0s in kubeadm-init.yaml). If the old token has expired, generate a new join command with kubeadm token create --print-join-command:

[root@k8s-master-01 ~]# kubeadm token create --print-join-command

kubeadm join 172.16.2.100:8443 --token 1vfiau.fle2qrub60pzrue5 --discovery-token-ca-cert-hash sha256:6626fcb695fcf21d4181e2ab9ab2cebd96500f08ee6b6f7a74ac5c302e199e1a
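The --discovery-token-ca-cert-hash value is the SHA-256 digest of the cluster CA's public key (its DER-encoded SubjectPublicKeyInfo). The kubeadm documentation shows an openssl pipeline that recomputes it; the sketch below wraps that pipeline in a hypothetical helper. It assumes the default RSA CA key.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch; assumes an RSA CA key, as kubeadm uses by default).
# ca_cert_hash CA_CERT
# Prints the hex SHA-256 of the certificate's public key, i.e. the part
# after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On k8s-master-01, `echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"` should match the hash in the join commands printed above.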

7, Join the worker nodes

Run on k8s-node-01, k8s-node-02 and k8s-node-03, this time without the --experimental-control-plane flag:

[root@k8s-node-01 ~]# kubeadm join 172.16.2.100:8443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:e1a2cb7e9d5187ae9901269db55a56283d12b6f76831d0b95f5cbda2af68f513

[root@k8s-master-01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-d5947d4b-bmrm5 0/1 Pending 0 4m54s

coredns-d5947d4b-jrjbw 0/1 Pending 0 4m54s

etcd-k8s-master-01 1/1 Running 0 3m56s

etcd-k8s-master-02 1/1 Running 0 97s

etcd-k8s-master-03 1/1 Running 0 46s

kube-apiserver-k8s-master-01 1/1 Running 0 3m59s

kube-apiserver-k8s-master-02 1/1 Running 0 98s

kube-controller-manager-k8s-master-01 1/1 Running 1 4m7s

kube-controller-manager-k8s-master-02 1/1 Running 0 98s

kube-controller-manager-k8s-master-03 1/1 Running 0 5s

kube-proxy-56bfd 1/1 Running 0 98s

kube-proxy-94tc4 1/1 Running 0 47s

kube-proxy-c57ms 1/1 Running 0 60s

kube-proxy-mprms 1/1 Running 0 4m54s

kube-proxy-pclch 1/1 Running 0 54s

kube-proxy-v8vxp 1/1 Running 0 55s

kube-scheduler-k8s-master-01 1/1 Running 1 4m11s

kube-scheduler-k8s-master-02 1/1 Running 0 98s

kube-scheduler-k8s-master-03 1/1 Running 0 5s

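8, Deploy the flannel network plugin

The master is NotReady because no network plugin has been installed yet, so networking between the nodes and the master is not working. The most popular Kubernetes network plugins are Flannel, Calico, Canal and Weave; this guide uses flannel.

It only needs to be applied from k8s-master-01: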

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

In kube-flannel.yml, change "Network": "10.244.0.0/16" to "Network": "10.245.0.0/16" so that it matches podSubnet.
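Problem 1 below is caused by these two values disagreeing, so a quick check before `kubectl apply` can save a round trip. The sketch below uses a hypothetical helper, `pod_cidrs_match`. It greps podSubnet from kubeadm-init.yaml and the flannel "Network" value from kube-flannel.yml, and it assumes each value appears once, on a single line, as in the files used here.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch; assumes one podSubnet and one "Network"
# entry, each written on a single line).
# pod_cidrs_match KUBEADM_YAML FLANNEL_YML
# Prints both values and returns 0 only if they are equal.
pod_cidrs_match() {
  local kubeadm_cfg=$1 flannel_cfg=$2 pod net
  # podSubnet may be quoted or unquoted in the kubeadm config.
  pod=$(grep -oE 'podSubnet:[[:space:]]*"?[0-9./]+' "$kubeadm_cfg" | grep -oE '[0-9./]+$' | head -n1)
  # flannel keeps its settings as JSON (net-conf.json) inside a ConfigMap.
  net=$(grep -oE '"Network":[[:space:]]*"[0-9./]+' "$flannel_cfg" | grep -oE '[0-9./]+$' | head -n1)
  echo "podSubnet=${pod:-<none>} flannel Network=${net:-<none>}"
  [[ -n $pod && $pod == "$net" ]]
}
```

For example, `sed -i 's#"Network": "10.244.0.0/16"#"Network": "10.245.0.0/16"#' kube-flannel.yml` makes the edit, and `pod_cidrs_match kubeadm-init.yaml kube-flannel.yml` then confirms that the two values agree.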

[root@k8s-master-01 ~]# kubectl apply -f kube-flannel.yml

Wait about 5 minutes...

Check the node status:

[root@k8s-master-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready master 102m v1.14.2

k8s-master-02 Ready master 22m v1.14.2

k8s-master-03 Ready master 23m v1.14.2

k8s-node-01 Ready <none> 24m v1.14.2

k8s-node-02 Ready <none> 22m v1.14.2

k8s-node-03 Ready <none> 17m v1.14.2

All nodes are now in the Ready state.

Problem 1

If the pods look like the following, flannel's network was not changed to match the one set at initialization.

[root@k8s-master-01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-d5947d4b-6tcn9 0/1 ContainerCreating 0 103m

coredns-d5947d4b-8h77z 0/1 ContainerCreating 0 103m

etcd-k8s-master-01 1/1 Running 0 103m

etcd-k8s-master-02 1/1 Running 0 23m

etcd-k8s-master-03 1/1 Running 0 24m

kube-apiserver-k8s-master-01 1/1 Running 0 102m

kube-apiserver-k8s-master-02 1/1 Running 1 22m

kube-apiserver-k8s-master-03 1/1 Running 0 24m

kube-controller-manager-k8s-master-01 1/1 Running 1 102m

kube-controller-manager-k8s-master-02 1/1 Running 0 22m

kube-controller-manager-k8s-master-03 1/1 Running 0 24m

kube-flannel-ds-amd64-4spmm 0/1 CrashLoopBackOff 5 6m54s

kube-flannel-ds-amd64-84cks 0/1 CrashLoopBackOff 5 6m54s

kube-flannel-ds-amd64-k9ql7 0/1 CrashLoopBackOff 5 6m54s

kube-flannel-ds-amd64-kcmt6 0/1 CrashLoopBackOff 5 6m54s

kube-flannel-ds-amd64-r9778 0/1 CrashLoopBackOff 5 6m54s

kube-flannel-ds-amd64-w9xcn 0/1 CrashLoopBackOff 5 6m54s

kube-proxy-kwqvg 1/1 Running 0 25m

kube-proxy-qs6v5 1/1 Running 0 103m

kube-proxy-rdvch 1/1 Running 0 18m

kube-proxy-sdlvx 1/1 Running 1 23m

kube-proxy-vzcpw 1/1 Running 0 24m

kube-proxy-zd6l4 1/1 Running 0 23m

kube-scheduler-k8s-master-01 1/1 Running 1 102m

kube-scheduler-k8s-master-02 1/1 Running 0 22m

kube-scheduler-k8s-master-03 1/1 Running 0 24m

Check the detailed flannel logs:

[root@k8s-master-01 ~]# kubectl --namespace kube-system logs kube-flannel-ds-amd64-4spmm

I0611 08:08:28.532281 1 main.go:514] Determining IP address of default interface

I0611 08:08:28.532651 1 main.go:527] Using interface with name ens32 and address 172.16.2.102

I0611 08:08:28.532689 1 main.go:544] Defaulting external address to interface address (172.16.2.102)

I0611 08:08:28.566661 1 kube.go:126] Waiting 10m0s for node controller to sync

I0611 08:08:28.566729 1 kube.go:309] Starting kube subnet manager

I0611 08:08:29.567475 1 kube.go:133] Node controller sync successful

I0611 08:08:29.567514 1 main.go:244] Created subnet manager: Kubernetes Subnet Manager - k8s-master-02

I0611 08:08:29.567524 1 main.go:247] Installing signal handlers

I0611 08:08:29.567613 1 main.go:386] Found network config - Backend type: vxlan

I0611 08:08:29.567675 1 vxlan.go:120] VXLAN config: VNI=1 Port=0 GBP=false DirectRouting=false

E0611 08:08:29.567951 1 main.go:289] Error registering network: failed to acquire lease: node "k8s-master-02" pod cidr not assigned

I0611 08:08:29.568005 1 main.go:366] Stopping shutdownHandler...

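The pod network (podSubnet) set at initialization is 10.245.0.0/16, while kube-flannel.yml uses 10.244.0.0/16. Correct the value in kube-flannel.yml, delete the flannel resources and apply it again: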

[root@k8s-master-01 ~]# kubectl delete -f ./kube-flannel.yml

podsecuritypolicy.extensions "psp.flannel.unprivileged" deleted

clusterrole.rbac.authorization.k8s.io "flannel" deleted

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.extensions "kube-flannel-ds-amd64" deleted

daemonset.extensions "kube-flannel-ds-arm64" deleted

daemonset.extensions "kube-flannel-ds-arm" deleted

daemonset.extensions "kube-flannel-ds-ppc64le" deleted

daemonset.extensions "kube-flannel-ds-s390x" deleted

Apply it again:

[root@k8s-master-01 ~]# kubectl apply -f ./kube-flannel.yml

Problem 2

[root@k8s-master-01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-d5947d4b-55f6c 0/1 ContainerCreating 0 17m

coredns-d5947d4b-gj4kw 1/1 Running 0 17m

etcd-k8s-master-01 1/1 Running 0 16m

etcd-k8s-master-02 1/1 Running 0 16m

etcd-k8s-master-03 1/1 Running 0 16m

kube-apiserver-k8s-master-01 1/1 Running 0 16m

kube-apiserver-k8s-master-02 1/1 Running 0 16m

kube-apiserver-k8s-master-03 1/1 Running 1 14m

kube-controller-manager-k8s-master-01 1/1 Running 1 16m

kube-controller-manager-k8s-master-02 1/1 Running 0 16m

kube-controller-manager-k8s-master-03 1/1 Running 0 15m

kube-flannel-ds-amd64-65246 1/1 Running 0 13m

kube-flannel-ds-amd64-cvtk6 1/1 Running 0 13m

kube-flannel-ds-amd64-dtmc5 1/1 Running 0 13m

kube-flannel-ds-amd64-kz4sd 1/1 Running 0 13m

kube-flannel-ds-amd64-sl8vl 1/1 Running 0 13m

kube-flannel-ds-amd64-zzxqq 1/1 Running 0 13m

kube-proxy-2fvlc 1/1 Running 0 14m

kube-proxy-5xzvt 1/1 Running 0 16m

kube-proxy-8pdk7 1/1 Running 0 14m

kube-proxy-h4svq 1/1 Running 0 16m

kube-proxy-s7bgw 1/1 Running 0 14m

kube-proxy-zvtn7 1/1 Running 0 17m

kube-scheduler-k8s-master-01 1/1 Running 1 16m

kube-scheduler-k8s-master-02 1/1 Running 0 16m

kube-scheduler-k8s-master-03 1/1 Running 0 15m

Inspect the pod:

[root@k8s-master-01 ~]# kubectl describe pod coredns-d5947d4b-55f6c -n kube-system

Name: coredns-d5947d4b-55f6c

Namespace: kube-system

Priority: 2000000000

PriorityClassName: system-cluster-critical

Node: k8s-node-01/172.16.2.111

Start Time: Wed, 12 Jun 2019 17:26:25 +0800

Labels: k8s-app=kube-dns

pod-template-hash=d5947d4b

Annotations: <none>

Status: Pending

IP:

Controlled By: ReplicaSet/coredns-d5947d4b

...

Warning FailedCreatePodSandBox 21m kubelet, k8s-node-01 Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "19a9431aba770214c2374b92c139b4610bd0a2d91f807ba91e6db238a53c0ef2" network for pod "coredns- d5947d4b-55f6c": NetworkPlugin cni failed to set up pod "coredns-d5947d4b-55f6c_kube-system" network: failed to set bridge addr: "cni0" already has an IP address different from 10.245.3.1/24

Normal SandboxChanged 7m (x413 over 22m) kubelet, k8s-node-01 Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 2m (x543 over 21m) kubelet, k8s-node-01 (combined from similar events): Failed create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "098bea3b0808cc807686c445323fc04f8ae4d5d0877817c16bfc3668f7aa11a9" network for pod "coredns- d5947d4b-55f6c": NetworkPlugin cni failed to set up pod "coredns-d5947d4b-55f6c_kube-system" network: failed to set bridge addr: "cni0" already has an IP address different from 10.245.3.1/24

Run the following on all worker nodes:

[root@k8s-master-01 ~]# rm -rf /var/lib/cni/flannel/* && rm -rf /var/lib/cni/networks/cbr0/* && ip link delete cni0

[root@k8s-master-01 ~]# rm -rf /var/lib/cni/networks/cni0/*

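# Checking with docker shows that coredns on k8s-node-01 is running normally, but the same cleanup still has to be done there. The root cause is that the cluster was rebuilt several times without the previous configuration being fully cleaned up.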
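The error means the cni0 bridge still carries an address left over from an earlier cluster. flannel writes the subnet it leased to each node into /run/flannel/subnet.env as FLANNEL_SUBNET (10.245.3.1/24 in the error above). Comparing that value with cni0's current address shows whether a node needs the cleanup. Below is a minimal sketch; `cni0_matches_flannel` is a hypothetical helper that takes the cni0 address as an argument, so it can be tried without a real bridge.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch).
# cni0_matches_flannel SUBNET_ENV CNI0_ADDR
# SUBNET_ENV is normally /run/flannel/subnet.env; CNI0_ADDR is cni0's
# current IPv4 address in CIDR form (empty if cni0 does not exist yet).
# Returns 1 only when cni0 has an address different from FLANNEL_SUBNET.
cni0_matches_flannel() {
  local env_file=$1 cni0_addr=$2 want
  want=$(sed -n 's/^FLANNEL_SUBNET=//p' "$env_file")
  if [[ -z $cni0_addr ]]; then
    echo "cni0 has no IPv4 address yet; nothing to clean"
    return 0
  fi
  if [[ $cni0_addr == "$want" ]]; then
    echo "cni0 $cni0_addr matches FLANNEL_SUBNET"
    return 0
  fi
  echo "cni0 $cni0_addr != FLANNEL_SUBNET $want: stale bridge"
  return 1
}
```

On a node: `cni0_matches_flannel /run/flannel/subnet.env "$(ip -4 -o addr show dev cni0 2>/dev/null | awk '{print $4}')"`.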

Check the status:

[root@k8s-master-01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-d5947d4b-55f6c 0/1 Running 0 33m

coredns-d5947d4b-gj4kw 1/1 Running 0 33m

etcd-k8s-master-01 1/1 Running 0 32m

etcd-k8s-master-02 1/1 Running 0 32m

etcd-k8s-master-03 1/1 Running 0 31m

kube-apiserver-k8s-master-01 1/1 Running 0 32m

kube-apiserver-k8s-master-02 1/1 Running 0 32m

kube-apiserver-k8s-master-03 1/1 Running 1 30m

kube-controller-manager-k8s-master-01 1/1 Running 1 32m

kube-controller-manager-k8s-master-02 1/1 Running 0 32m

kube-controller-manager-k8s-master-03 1/1 Running 0 31m

kube-flannel-ds-amd64-65246 1/1 Running 0 29m

kube-flannel-ds-amd64-cvtk6 1/1 Running 0 29m

kube-flannel-ds-amd64-dtmc5 1/1 Running 0 29m

kube-flannel-ds-amd64-kz4sd 1/1 Running 0 29m

kube-flannel-ds-amd64-sl8vl 1/1 Running 0 29m

kube-flannel-ds-amd64-zzxqq 1/1 Running 0 29m

kube-proxy-2fvlc 1/1 Running 0 30m

kube-proxy-5xzvt 1/1 Running 0 31m

kube-proxy-8pdk7 1/1 Running 0 30m

kube-proxy-h4svq 1/1 Running 0 32m

kube-proxy-s7bgw 1/1 Running 0 30m

kube-proxy-zvtn7 1/1 Running 0 33m

kube-scheduler-k8s-master-01 1/1 Running 1 32m

kube-scheduler-k8s-master-02 1/1 Running 0 32m

kube-scheduler-k8s-master-03 1/1 Running 0 31m

Check the ipvs state:

[root@k8s-master-01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.16.2.101:6443 Masq 1 0 0

-> 172.16.2.102:6443 Masq 1 0 0

-> 172.16.2.103:6443 Masq 1 1 0

TCP 10.96.0.10:53 rr

-> 10.245.4.2:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.245.4.2:9153 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.245.4.2:53 Masq 1 0 0

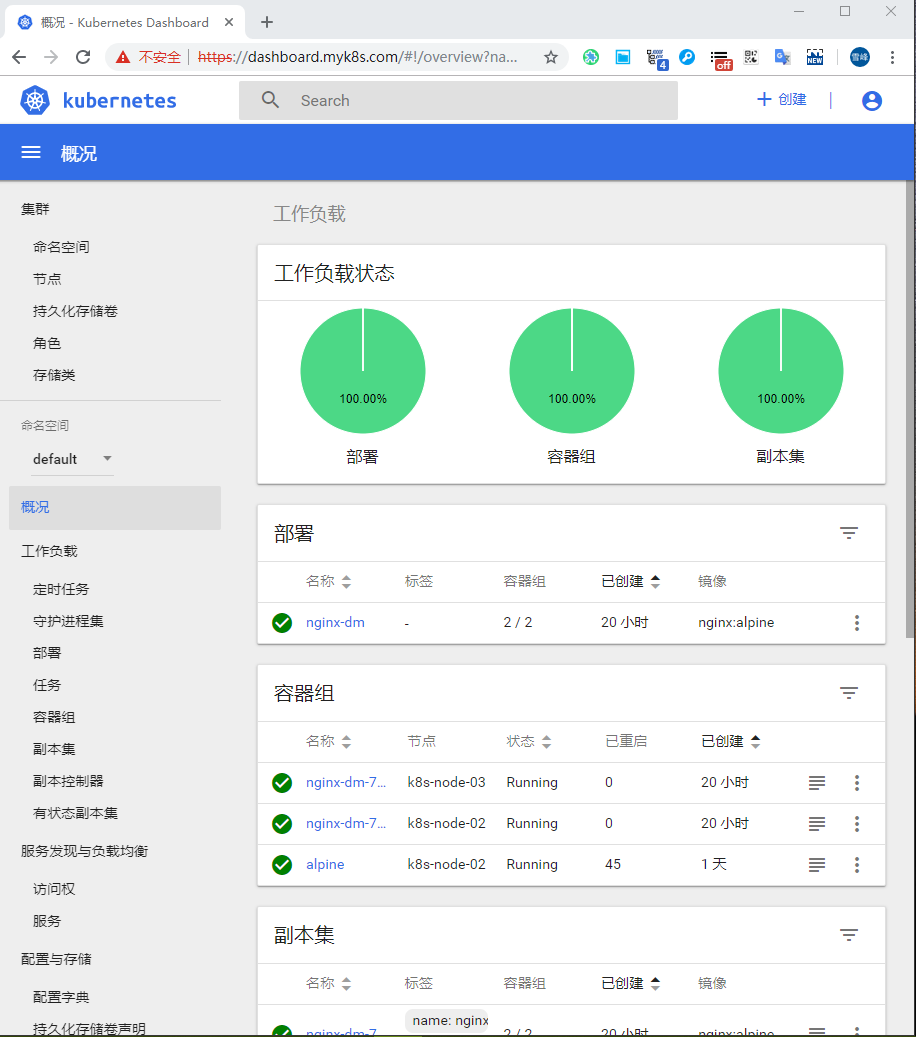

4, Configure k8s

1, Test the cluster by creating an nginx service

[root@k8s-master-01 ~]# cat service-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dm
spec:
  replicas: 3
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:alpine
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: http
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
    - port: 80
      name: http
      targetPort: 80
      protocol: TCP
  selector:
    name: nginx

Apply it:

[root@k8s-master-01 ~]# kubectl apply -f service-nginx.yaml

deployment.apps/nginx-dm created

service/nginx-svc created

Check the status:

[root@k8s-master-01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dm-76cf455886-j2w46 1/1 Running 0 5m25s 10.245.3.3 k8s-node-01 <none> <none>

nginx-dm-76cf455886-jm477 1/1 Running 0 5m25s 10.245.5.2 k8s-node-03 <none> <none>

nginx-dm-76cf455886-sjkp5 1/1 Running 0 5m25s 10.245.4.2 k8s-node-02 <none> <none>

[root@k8s-master-01 ~]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 52m <none>

nginx-svc ClusterIP 10.106.28.5 <none> 80/TCP 5m42s name=nginx

Access the service:

[root@k8s-master-01 ~]# curl 10.106.28.5

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Check the ipvs rules:

[root@k8s-master-01 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.16.2.101:6443 Masq 1 0 0

-> 172.16.2.102:6443 Masq 1 0 0

-> 172.16.2.103:6443 Masq 1 1 0

TCP 10.96.0.10:53 rr

-> 10.245.3.2:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.245.3.2:9153 Masq 1 0 0

TCP 10.106.28.5:80 rr

-> 10.245.3.3:80 Masq 1 0 0

-> 10.245.4.2:80 Masq 1 0 1

-> 10.245.5.2:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.245.3.2:53 Masq 1 0 0
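To check a single virtual service without reading the whole table, a small awk filter over the `ipvsadm -L -n` output can print just its real servers. Below is a minimal sketch. `ipvs_backends` is a hypothetical helper, and it assumes the plain layout shown above: one `TCP`/`UDP` line per virtual service, followed by its `->` lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch; assumes the plain `ipvsadm -L -n` layout).
# ipvs_backends PROTO VIP:PORT   (reads ipvsadm output on stdin)
# Prints the RemoteAddress:Port of every real server behind that
# virtual service, one per line.
ipvs_backends() {
  awk -v proto="$1" -v vs="$2" '
    # A TCP/UDP line starts a new virtual service; remember if it is ours.
    $1 == "TCP" || $1 == "UDP" { cur = ($1 == proto && $2 == vs); next }
    # Real-server lines start with "->"; the header line comes before any
    # service line, so cur is still 0 there and it is skipped.
    cur && $1 == "->" { print $2 }
  '
}
```

For example, `ipvsadm -L -n | ipvs_backends TCP 10.106.28.5:80` is expected to list the three nginx pod addresses shown above.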

2, Verify the DNS service

# Create a pod

[root@k8s-master-01 ~]# cat service-dns.yaml

apiVersion: v1
kind: Pod
metadata:
  name: alpine
spec:
  containers:
  - name: alpine
    image: alpine
    command:
    - sleep
    - "3600"

# Create it

[root@k8s-master-01 ~]# kubectl apply -f service-dns.yaml

# Check the pod status

[root@k8s-master-01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alpine 1/1 Running 0 2m55s 10.245.4.4 k8s-node-02 <none> <none>

nginx-dm-76cf455886-2hhbq 1/1 Running 0 4m17s 10.245.4.3 k8s-node-02 <none> <none>

nginx-dm-76cf455886-d8m6j 1/1 Running 0 4m17s 10.245.3.4 k8s-node-01 <none> <none>

# Test

[root@k8s-master-01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11m

nginx-svc ClusterIP 10.98.113.150 <none> 80/TCP 7m48s

[root@k8s-master-01 ~]# kubectl exec -it alpine -- nslookup kubernetes

nslookup: can't resolve '(null)': Name does not resolve

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

[root@k8s-master-01 ~]# kubectl exec -it alpine -- nslookup nginx-svc

nslookup: can't resolve '(null)': Name does not resolve

Name: nginx-svc

Address 1: 10.98.113.150 nginx-svc.default.svc.cluster.local

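5, Deploy Metrics-Server

Since v1.11, Kubernetes no longer supports collecting monitoring data through Heapster. The new collector, metrics-server, is much lighter than Heapster: it does not persist any data and only serves real-time metrics queries.

Project page: https://github.com/kubernetes-incubator/metrics-server

Prepare the manifests: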

[root@k8s-master-01 ~]# git clone https://github.com/kubernetes-incubator/metrics-server.git

[root@k8s-master-01 ~]# cd metrics-server/deploy/1.8+/

[root@k8s-master-01 1.8+]# cat aggregated-metrics-reader.yaml auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml resource-reader.yaml metrics-server-deployment.yaml metrics-server-service.yaml >> metrics-server.yaml

# Edit the manifest and append the extra flags at the end of the metrics-server container definition

[root@k8s-master-01 1.8+]# vi metrics-server.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: k8s.gcr.io/metrics-server-amd64:v0.3.3
        imagePullPolicy: Always
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
        command:
        - /metrics-server
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP

Apply it:

[root@k8s-master-01 1.8+]# kubectl apply -f metrics-server.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

serviceaccount/metrics-server created

deployment.extensions/metrics-server created

service/metrics-server created

Check the pod:

[root@k8s-master-01 ~]# kubectl get pods -n kube-system |grep metrics

metrics-server-7bddf85f5c-dh5zs 1/1 Running 0 3m12s

Test metrics collection:

[root@k8s-master-01 ~]# kubectl top node

error: metrics not available yet

Wait about 2 minutes...

[root@k8s-master-01 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master-01 153m 7% 1821Mi 47%

k8s-master-02 175m 8% 1518Mi 39%

k8s-master-03 138m 6% 1380Mi 35%

k8s-node-01 47m 2% 791Mi 20%

k8s-node-02 51m 2% 1439Mi 37%
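`kubectl top` fails with "metrics not available yet" until metrics-server has finished its first scrape. Instead of waiting a fixed two minutes, you can poll. Below is a minimal bash sketch of a generic retry loop; `retry` is a hypothetical helper, not part of kubectl.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch).
# retry ATTEMPTS DELAY COMMAND [ARGS...]
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY seconds between failed
# attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `retry 12 10 kubectl top node` tries up to 12 times, 10 seconds apart, and stops as soon as metrics are returned.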

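6, Nginx Ingress

1, Introduction

Kubernetes currently offers three ways to expose a service outside the cluster: LoadBalancer Service, NodePort Service and Ingress.

A LoadBalancer Service is tightly integrated with the underlying cloud platform. Exposing a service this way actually asks the cloud platform to create a load balancer, so it can only be used on cloud platforms that support it.

A NodePort Service, as the name suggests, opens a port on every node in the cluster and maps that port to a specific service. NodePorts are allocated from a limited range (30000-32767 by default). For security and usability reasons (many services quickly become hard to manage, and ports can conflict), NodePort is used less in practice.

Ingress appeared after Kubernetes 1.2. With Ingress, services can be exposed through open-source reverse-proxy load balancers such as nginx; this is the officially recommended approach.

An Ingress setup usually involves three components:

Reverse-proxy load balancer: nginx, apache and the like.

Ingress Controller: essentially a watcher. It keeps talking to the Kubernetes API to track changes to backend services and pods in real time, such as pods or services being added or removed. When it learns of a change, it combines it with the Ingress rules described next to generate a configuration, updates the reverse-proxy load balancer and reloads it, which provides service discovery.

Ingress: simply a rule definition. For example, a rule can map a domain name to a service, so that requests arriving for that domain are forwarded to that service. The Ingress Controller writes these rules dynamically into the load balancer configuration, which provides service discovery and load balancing as a whole.

Official site: https://kubernetes.github.io/ingress-nginx/

Workflow: all requests reach the load balancer (for example nginx). The ingress controller learns from the Ingress objects which domain maps to which service, and learns the service addresses from the Kubernetes API. It then generates a configuration file and writes it into the load balancer in real time, and the load balancer reloads the rules, which achieves service discovery.

Another way to expose services is traefik. It works much like Ingress but needs no separate ingress controller: it talks to the Kubernetes API directly and detects backend changes itself.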
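To make the "domain name to service" rule concrete, here is a minimal sketch that prints an Ingress object routing one host to the nginx-svc service created earlier. The helper name and the host nginx.example.com are hypothetical. The manifest uses the extensions/v1beta1 Ingress API, which the v1.14 cluster built here serves. That API was removed in v1.22, where networking.k8s.io/v1 with a different backend schema is required.

```bash
#!/usr/bin/env bash
# Hypothetical helper (a sketch for a v1.14-era cluster; extensions/v1beta1
# Ingress is not served by v1.22 and later).
# render_ingress NAME HOST SERVICE PORT
# Prints an Ingress manifest that sends every request for HOST to
# SERVICE:PORT in the current namespace.
render_ingress() {
  local name=$1 host=$2 svc=$3 port=$4
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ${name}
spec:
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        backend:
          serviceName: ${svc}
          servicePort: ${port}
EOF
}
```

Usage would be `render_ingress nginx-ing nginx.example.com nginx-svc 80 | kubectl apply -f -`. Once the controller deployed below is running with hostNetwork on the masters, a request such as `curl -H 'Host: nginx.example.com' http://172.16.2.101/` would be expected to reach nginx-svc.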

2, Deploy ingress

Download the yaml file:

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

This URL often returns 404; use this path instead:

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

Or open https://github.com/kubernetes/ingress-nginx/blob/master/deploy/static/mandatory.yaml and copy its contents into a new file named mandatory.yaml.

Edit the configuration:

# If the default image registry cannot be reached, switch to the Aliyun mirror

[root@k8s-master-01 ~]# sed -i 's/quay\.io\/kubernetes-ingress-controller/registry\.cn-hangzhou\.aliyuncs\.com\/google_containers/g' mandatory.yaml

# Set the number of pod replicas

replicas: 3

# To run the ingress controller on the worker nodes, edit the pod spec in the kind: Deployment object definition:

...
spec:
  hostNetwork: true
  serviceAccountName: nginx-ingress-serviceaccount
...

# Alternatively, run the ingress controller on the master nodes so that it is not affected by worker nodes being added or removed. Change the kind: Deployment object definition as follows:

spec:
  hostNetwork: true
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
            - k8s-master-01
            - k8s-master-02
            - k8s-master-03
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app.kubernetes.io/name
            operator: In
            values:
            - ingress-nginx
        topologyKey: "kubernetes.io/hostname"
  tolerations:
  - key: node-role.kubernetes.io/master
    effect: NoSchedule
  serviceAccountName: nginx-ingress-serviceaccount

Another way to write it (untested):

spec:
  serviceAccountName: nginx-ingress-serviceaccount
  hostNetwork: true
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: node-role.kubernetes.io/master
            operator: Exists
  tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule

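Additional notes:

By default, Kubernetes does not schedule ordinary workloads on the masters. It uses the taint mechanism to restrict what a node will accept. You can view the taint on a master: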

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready master 22h v1.14.2

k8s-master-02 Ready master 22h v1.14.2

k8s-master-03 Ready master 22h v1.14.2

k8s-node-01 Ready <none> 22h v1.14.2

k8s-node-02 Ready <none> 22h v1.14.2

k8s-node-03 Ready <none> 3h46m v1.14.2

[root@k8s-master-01 ~]# kubectl describe node k8s-master-01

Name: k8s-master-01

Roles: master

...

Taints: node-role.kubernetes.io/master:NoSchedule

...

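The master node carries a taint with the key node-role.kubernetes.io/master and the NoSchedule effect, which prevents other pods from being scheduled onto it. To deploy a pod there anyway, the pod must specify tolerations, declaring that it can tolerate nodes with this taint. Taints and tolerations are a neat design: used together, they let selected pods run on a node while keeping all other pods off it.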

Apply the file:

[root@k8s-master-01 ~]# kubectl apply -f mandatory.yaml
namespace/ingress-nginx unchanged
configmap/nginx-configuration unchanged
configmap/tcp-services unchanged
configmap/udp-services unchanged
serviceaccount/nginx-ingress-serviceaccount unchanged
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole unchanged
role.rbac.authorization.k8s.io/nginx-ingress-role unchanged
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding unchanged
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding unchanged
deployment.apps/nginx-ingress-controller configured

Check the pod status:

[root@k8s-master-01 ~]# kubectl get pods -n ingress-nginx -o wide
NAME                                       READY   STATUS    RESTARTS   AGE   IP             NODE            NOMINATED NODE   READINESS GATES
nginx-ingress-controller-d6f64c97f-d6bkj   1/1     Running   0          21m   172.16.2.101   k8s-master-01   <none>           <none>
nginx-ingress-controller-d6f64c97f-f4nxl   1/1     Running   0          21m   172.16.2.103   k8s-master-03   <none>           <none>
nginx-ingress-controller-d6f64c97f-nsct2   1/1     Running   0          21m   172.16.2.102   k8s-master-02   <none>           <none>
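There are three masters, and the podAntiAffinity rule is keyed on kubernetes.io/hostname. Each controller replica should therefore end up on a different master, as the NODE column shows. The hypothetical helper below checks this. It reads one node name per line and fails on a duplicate node or on a non-master node. The k8s-master- prefix is simply this cluster's naming scheme.

```shell
# Read one node name per line on stdin. Fail if any node hosts more than one
# pod, or if a pod sits on a node whose name does not start with
# "k8s-master-" (the naming scheme used in this cluster).
check_one_per_node() {
  awk '
    NF == 0 { next }
    {
      total++
      count[$1]++
      if ($1 !~ /^k8s-master-/) { print "not on a master: " $1; bad = 1 }
    }
    END {
      for (n in count) {
        nodes++
        if (count[n] > 1) { print "more than one pod on: " n; bad = 1 }
      }
      printf "%d pod(s) on %d node(s)\n", total, nodes
      exit bad
    }'
}
```

Usage: `kubectl get pods -n ingress-nginx -o custom-columns=NODE:.spec.nodeName --no-headers | check_one_per_node`. Using custom-columns avoids depending on the column position in the `-o wide` output.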

Create the Service:

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/cloud-generic.yaml

[root@k8s-master-01 ~]# cat cloud-generic.yaml
kind: Service
apiVersion: v1
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  externalTrafficPolicy: Local
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  ports:
    - name: http
      port: 80
      targetPort: http
    - name: https
      port: 443
      targetPort: https
---

# Apply
[root@k8s-master-01 ~]# kubectl apply -f cloud-generic.yaml
service/ingress-nginx created

# Check
[root@k8s-master-01 ~]# kubectl get svc -n ingress-nginx
NAME            TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx   LoadBalancer   10.102.26.125   <pending>     80:30753/TCP,443:32129/TCP   2m6s

[root@k8s-master-01 ~]# kubectl get svc -n kube-system
NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
kube-dns         ClusterIP   10.96.0.10     <none>        53/UDP,53/TCP,9153/TCP   23h
metrics-server   ClusterIP   10.96.195.16   <none>        443/TCP                  8h
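EXTERNAL-IP stays `<pending>` because this bare-metal cluster has no cloud load-balancer integration to allocate an address. Kubernetes still assigns NodePorts for a LoadBalancer Service, here 30753 and 32129. The controller pods also use hostNetwork, so they answer directly on ports 80/443 of the master nodes. That is how the curl tests later in this section reach them. The hypothetical helper below splits a PORT(S) value into its parts, which is handy when scripting against NodePorts.

```shell
# Split a kubectl PORT(S) value into "port nodePort protocol" lines.
# Entries without a NodePort (e.g. "53/UDP") print "-" for nodePort.
parse_ports() {
  printf '%s\n' "$1" | tr ',' '\n' | awk -F'[:/]' '
    NF == 3 { print $1, $2, $3 }
    NF == 2 { print $1, "-", $2 }'
}
```

Usage: `parse_ports '80:30753/TCP,443:32129/TCP'` prints one line per port mapping. The same numbers are available without text parsing via `kubectl get svc ingress-nginx -n ingress-nginx -o jsonpath='{.spec.ports[*].nodePort}'`.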

Test the Ingress

List the Service objects created earlier:

[root@k8s-master-01 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   21h
nginx-svc    ClusterIP   10.98.113.150   <none>        80/TCP    21h

# Create an Ingress for nginx-svc

[root@k8s-master-01 ~]# vi nginx-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
  - host: nginx.service.com
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80

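Note: keep nginx-svc out of the kube-system namespace, where kube-dns runs. Create the Ingress in the same namespace as nginx-svc, because an Ingress can only route to Services in its own namespace. Otherwise the backend cannot be resolved, as the failed attempt below shows.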
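A related DNS detail: CoreDNS resolves a short Service name such as nginx-svc relative to the namespace of the pod doing the lookup. From any other namespace you need the longer form `<service>.<namespace>.svc.<cluster-domain>`. The hypothetical helper below only builds that name. It assumes the default cluster domain cluster.local, which matches the `kubernetes.default.svc.cluster.local` answer in the nslookup output below.

```shell
# Build the cluster DNS name CoreDNS serves for a Service.
# Assumes the default cluster domain "cluster.local" unless a third
# argument overrides it.
svc_fqdn() {
  name=$1
  ns=${2:-default}
  domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$name" "$ns" "$domain"
}
```

Usage: `kubectl exec -it alpine -- nslookup "$(svc_fqdn nginx-svc default)"` looks the Service up by its fully qualified name. This works regardless of which namespace the alpine pod is in.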

[root@k8s-master-01 ~]# kubectl exec -it alpine nslookup kubernetes
nslookup: can't resolve '(null)': Name does not resolve
Name:      kubernetes
Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local
[root@k8s-master-01 ~]# kubectl exec -it alpine nslookup nginx-svc
nslookup: can't resolve '(null)': Name does not resolve
nslookup: can't resolve 'nginx-svc': Name does not resolve
command terminated with exit code 1

# The newly created Ingress is not picked up: it was created in kube-system, so its nginx-svc backend shows (<none>)
[root@k8s-master-01 ~]# kubectl describe ingress nginx-ingress -n kube-system
Name:             nginx-ingress
Namespace:        kube-system
Address:
Default backend:  default-http-backend:80 (<none>)
Rules:
  Host               Path  Backends
  ----               ----  --------
  nginx.service.com
                        nginx-svc:80 (<none>)
Annotations:
  kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{},"name":"nginx-ingress","namespace":"kube-system"},"spec":{"rules":[{"host":"nginx.ingress.com","http":{"paths":[{"backend":{"serviceName":"nginx-svc","servicePort":80}}]}}]}}
Events:
  Type    Reason  Age    From                      Message
  ----    ------  ----   ----                      -------
  Normal  CREATE  3m38s  nginx-ingress-controller  Ingress kube-system/nginx-ingress
  Normal  CREATE  3m38s  nginx-ingress-controller  Ingress kube-system/nginx-ingress
  Normal  CREATE  3m38s  nginx-ingress-controller  Ingress kube-system/nginx-ingress

Correct output (Ingress created in the default namespace, the same as nginx-svc):

[root@k8s-master-01 ~]# kubectl describe ingress
Name:             nginx-ingress
Namespace:        default
Address:
Default backend:  default-http-backend:80 (<none>)
Rules:
  Host               Path  Backends
  ----               ----  --------
  nginx.service.com
                        nginx-svc:80 (10.245.4.7:80,10.245.5.4:80)
Annotations:
  kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{},"name":"nginx-ingress","namespace":"default"},"spec":{"rules":[{"host":"nginx.service.com","http":{"paths":[{"backend":{"serviceName":"nginx-svc","servicePort":80}}]}}]}}
Events:
  Type    Reason  Age  From                      Message
  ----    ------  ---- ----                      -------
  Normal  CREATE  45m  nginx-ingress-controller  Ingress default/nginx-ingress
  Normal  CREATE  45m  nginx-ingress-controller  Ingress default/nginx-ingress
  Normal  CREATE  45m  nginx-ingress-controller  Ingress default/nginx-ingress

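Local test (here only on k8s-master-01). No DNS record has been set up, so the domain must be bound in /etc/hosts. With real DNS, the record would point straight at the public address of the nodes running the ingress controller.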

[root@k8s-master-01 ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
172.16.2.101 k8s-etcd-01 k8s-master-01 nginx.service.com
172.16.2.102 k8s-etcd-02 k8s-master-02 nginx.service.com
172.16.2.103 k8s-etcd-03 k8s-master-03 nginx.service.com
172.16.2.111 k8s-node-01
172.16.2.112 k8s-node-02
172.16.2.113 k8s-node-03
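The hosts entries above were added by hand. A small helper can instead append a mapping only when the name is not already listed, which makes it safe to rerun, for example when pushing the same change to several machines. This is a hypothetical convenience function, not part of the original setup. Each call writes one `IP name` line.

```shell
# Append "IP HOST" to a hosts file only if HOST is not already listed there.
# FILE defaults to /etc/hosts (needs root); pass a scratch file to try it.
add_host_entry() {
  ip=$1
  host=$2
  file=${3:-/etc/hosts}
  # awk exits 0 if HOST appears as a hostname on any non-comment line
  if awk -v h="$host" '
      /^[ \t]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' "$file"; then
    echo "$host already present in $file"
  else
    printf '%s %s\n' "$ip" "$host" >> "$file"
  fi
}
```

Usage: `add_host_entry 172.16.2.101 nginx.service.com` adds the binding once. A second call only reports that the name is already present.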

# Access

[root@k8s-master-01 ~]# curl nginx.service.com
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Note: the binding is required. Requesting the node IP directly, without the domain name, returns 404:

[root@k8s-master-01 ~]# curl 172.16.2.101
<html>
<head><title>404 Not Found</title></head>
<body>
<center><h1>404 Not Found</h1></center>
<hr><center>nginx/1.15.10</center>
</body>
</html>
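The 404 comes from name-based virtual hosting. The controller chooses a backend by the request's Host header. A request addressed to a bare IP matches no Ingress rule and falls through to the default server. The function below is only a hypothetical toy model of that decision, not the controller's real logic. In practice, `curl -H 'Host: nginx.service.com' http://172.16.2.101/` should reach nginx-svc without editing /etc/hosts, because it supplies the Host header directly.

```shell
# Toy model (hypothetical, for illustration only): map a Host header to the
# backend the nginx-ingress rule would select; anything else gets a 404.
route_host() {
  # Drop an optional ":port" suffix, since Host headers may carry one
  host=${1%%:*}
  case $host in
    nginx.service.com) echo "nginx-svc:80" ;;
    *)                 echo "404 Not Found" ;;
  esac
}
```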

7, k8s UI

1, Installation: prepare the YAML

# Download

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

# If you are in mainland China, you can switch the image download address to the Alibaba Cloud mirror

[root@k8s-master-01 ~]# sed -i 's/k8s\.gcr\.io/registry\.cn-hangzhou\.aliyuncs\.com\/google_containers/g' kubernetes-dashboard.yaml
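Before running `sed -i`, it can help to preview the image references the substitution will produce. The minimal sketch below uses a hypothetical helper name. It applies the same expression without `-i` and shows only the `image:` lines, so the file itself is left untouched.

```shell
# Print the image: lines as they would read after the mirror substitution,
# leaving the file itself untouched (no -i).
preview_mirror() {
  sed 's/k8s\.gcr\.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/g' "$1" |
    grep 'image:'
}
```

Usage: `preview_mirror kubernetes-dashboard.yaml`. If the printed references look right, run the `sed -i` command above.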

# The apiserver port I configured earlier is 8443, which conflicts with the port used here, so edit the file

[root@k8s-master-01 ~]# vi kubernetes-dashboard.yaml

...
        ports:
        - containerPort: 8443
          protocol: TCP
...
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
...
spec:
  ports:
    - port: 443
      targetPort: 8443

# Change the three occurrences of 8443 above to 9443, and also add a line under args:
        args:
          - --auto-generate-certificates
          - --port=9443
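The manual vi edits above can also be scripted. The minimal sketch below assumes GNU sed (the default on CentOS 7) and the manifest layout shown above; the function name is hypothetical. It rewrites only lines ending in `containerPort`/`port`/`targetPort: 8443` and leaves the Service's `port: 443` alone. If `--port=9443` is already present it skips the file, so it can be rerun safely.

```shell
# Rewrite the three 8443 references to 9443 and add --port=9443 under args.
# Assumes GNU sed (\n in the replacement, in-place -i) and the v1.10.1 layout.
patch_dashboard_port() {
  file=$1
  if grep -q -- '--port=9443' "$file"; then
    echo "already patched: $file"
    return 0
  fi
  sed -i -E \
    -e 's/(containerPort|port|targetPort): 8443$/\1: 9443/' \
    -e 's/^([[:space:]]*)- --auto-generate-certificates$/&\n\1- --port=9443/' \
    "$file"
}
```

Usage: `patch_dashboard_port kubernetes-dashboard.yaml`, then check the result with `grep -n '9443' kubernetes-dashboard.yaml` before applying.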

Apply:

[root@k8s-master-01 ~]# kubectl apply -f kubernetes-dashboard.yaml
secret/kubernetes-dashboard-certs unchanged
serviceaccount/kubernetes-dashboard unchanged
role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal unchanged
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal unchanged
deployment.apps/kubernetes-dashboard created
service/kubernetes-dashboard created

# Check
[root@k8s-master-01 ~]# kubectl get pods -n kube-system |grep dashboard
kubernetes-dashboard-769d4976b5-c6qbn   1/1     Running   0          84s
[root@k8s-master-01 ~]# kubectl get svc -n kube-system |grep dashboard
kubernetes-dashboard   ClusterIP   10.110.88.80   <none>        443/TCP   98s

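2, Access configuration

Expose it externally

Since Nginx-ingress is already deployed, use an Ingress to expose the dashboard.

Configure a certificate

Judging from its Service, the dashboard only exposes port 443, so a TLS certificate is needed. Note: since this is a test setup, openssl is used to generate a temporary self-signed certificate.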

[root@k8s-master-01 ~]# openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout dashboard.myk8s.com-key.key -out dashboard.myk8s.com.pem -subj "/CN=dashboard.myk8s.com"
Generating a 2048 bit RSA private key
....+++
........................................................+++
writing new private key to 'dashboard.myk8s.com-key.key'
-----
[root@k8s-master-01 ~]# kubectl create secret tls dashboard-secret --namespace=kube-system --cert dashboard.myk8s.com.pem --key dashboard.myk8s.com-key.key
secret/dashboard-secret created

# Check
[root@k8s-master-01 ~]# kubectl get secret -n kube-system |grep dashboard
dashboard-secret                   kubernetes.io/tls                     2      52s
kubernetes-dashboard-certs         Opaque                                0      24m
kubernetes-dashboard-key-holder    Opaque                                2      12m
kubernetes-dashboard-token-6cwmp   kubernetes.io/service-account-token   3      24m
[root@k8s-master-01 ~]# kubectl describe secret/dashboard-secret -n kube-system
Name:         dashboard-secret
Namespace:    kube-system
Labels:       <none>
Annotations:  <none>

Type:  kubernetes.io/tls

Data
====
tls.crt:  1123 bytes
tls.key:  1704 bytes

Create the dashboard Ingress

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/docs/examples/auth/oauth-external-auth/dashboard-ingress.yaml

# Alternatively, copy the nginx Ingress above and modify it

[root@k8s-master-01 ~]# cat dashboard-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    ingress.kubernetes.io/ssl-passthrough: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  tls:
  - hosts:
    - dashboard.myk8s.com
    secretName: dashboard-secret
  rules:
  - host: dashboard.myk8s.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

[root@k8s-master-01 ~]# kubectl apply -f dashboard-ingress.yaml
ingress.extensions/kubernetes-dashboard created

[root@k8s-master-01 ~]# kubectl get ingress -n kube-system
NAME                   HOSTS                 ADDRESS   PORTS     AGE
kubernetes-dashboard   dashboard.myk8s.com             80, 443   9s

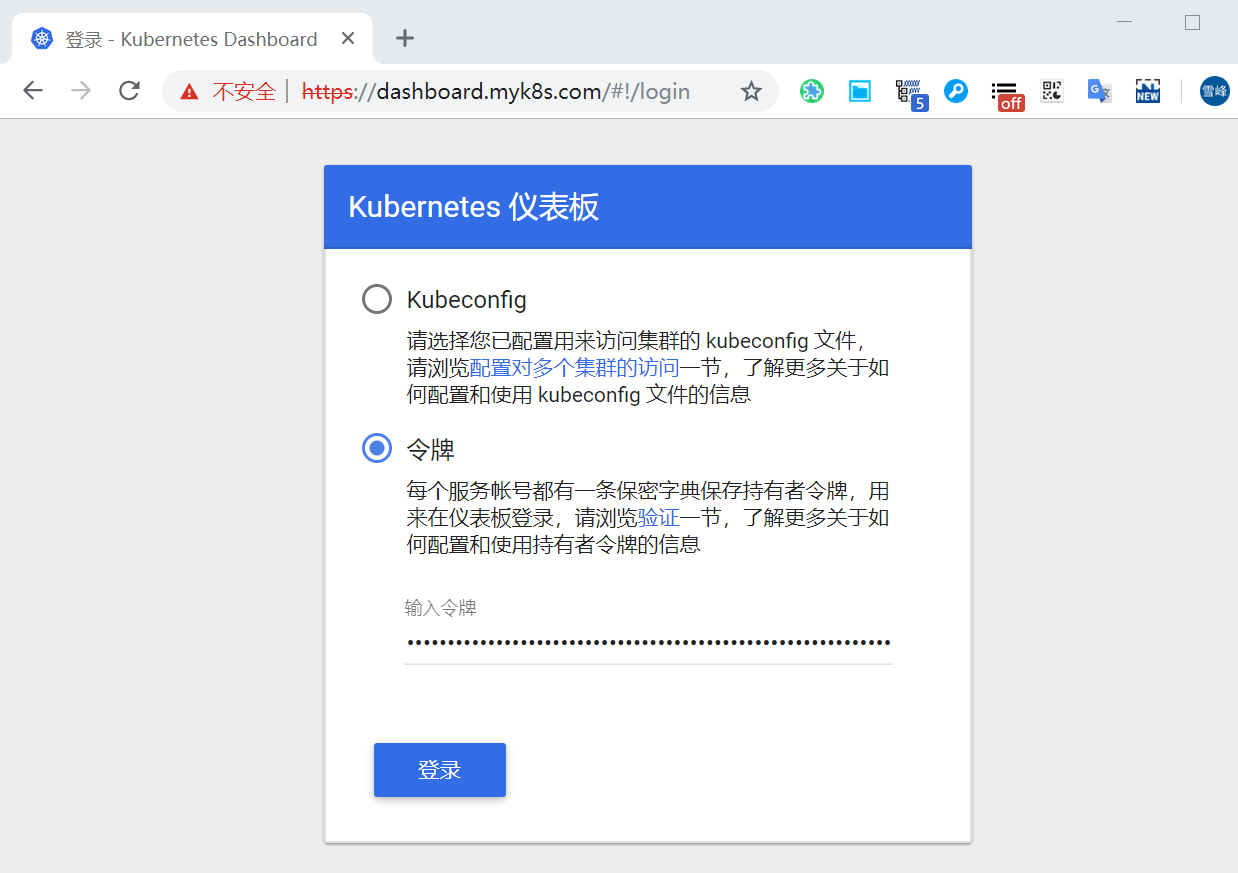

Token login authentication

# Create a dashboard RBAC super user (bound to cluster-admin)
[root@k8s-master-01 ~]# cat dashboard-admin-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

[root@k8s-master-01 ~]# kubectl apply -f dashboard-admin-rbac.yaml
serviceaccount/kubernetes-dashboard-admin created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created
[root@k8s-master-01 ~]# kubectl -n kube-system get secret | grep kubernetes-dashboard-admin
kubernetes-dashboard-admin-token-2n4bb   kubernetes.io/service-account-token   3      17s
[root@k8s-master-01 ~]# kubectl describe -n kube-system secret/kubernetes-dashboard-admin-token-2n4bb
Name:         kubernetes-dashboard-admin-token-2n4bb
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard-admin
              kubernetes.io/service-account.uid: 48409592-8e4e-11e9-b201-000c296ac457

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi0ybjRiYiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ4NDA5NTkyLThlNGUtMTFlOS1iMjAxLTAwMGMyOTZhYzQ1NyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.JxYG3de5ZTf0gA6BwoaGluyjg_9mKG1YYcAn6DVNB7yA-IHZ7JbSYO6hgfwz1zlLXWJEmd8bG6XDfdM4aaEcKeobH8H3jfUmMZ7kA0JhodeZOGtJ33rkTbHVcWiH15dd9LQb5OotKsVp4_XpsDCENf1aejln0qv0TnU5oy6zLxTDbYoB0PKHucNBFK7mVKK5jQHRFoMzV3epBOg_UeXZ5-qoIXuSZgsM28apLzjKg42i-jYtTqhH26m1qCO9bzGGLbVJcP1cbHkCPXmUDw2CT36lay-tf5ByE-S_vLZxRUAre_M9FtYhwcrocOoJaFb34bXUbODZ3HY6n1l76ETO9w
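The token is a JWT whose middle segment is base64url-encoded JSON. Decoding that segment is a quick way to confirm which service account a token belongs to before pasting it into the login page. The minimal sketch below assumes GNU coreutils `base64`; the function name is hypothetical. It only decodes and does not verify the signature.

```shell
# Decode the payload (second dot-separated segment) of a JWT such as a
# service-account token. Only base64url-decodes; does NOT verify anything.
jwt_payload() {
  # base64url uses "-" and "_" where standard base64 uses "+" and "/"
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops "=" padding; restore it so base64 -d accepts the input
  case $(( ${#seg} % 4 )) in
    2) seg="$seg==" ;;
    3) seg="$seg=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Usage: `jwt_payload "$(kubectl -n kube-system get secret kubernetes-dashboard-admin-token-2n4bb -o jsonpath='{.data.token}' | base64 -d)"`. The secret stores the token base64-encoded, hence the inner `base64 -d`. For the token above, the `sub` field should read `system:serviceaccount:kube-system:kubernetes-dashboard-admin`.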

Check the dashboard Ingress status:

[root@k8s-master-01 ~]# kubectl describe ingress -n kube-system
Name:             kubernetes-dashboard
Namespace:        kube-system
Address:
Default backend:  default-http-backend:80 (<none>)
TLS:
  dashboard-secret terminates dashboard.myk8s.com
Rules:
  Host                 Path  Backends
  ----                 ----  --------
  dashboard.myk8s.com
                       /   kubernetes-dashboard:443 (10.245.5.5:9443)
Annotations:
  ingress.kubernetes.io/ssl-passthrough:  true
  kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{"ingress.kubernetes.io/ssl-passthrough":"true","nginx.ingress.kubernetes.io/backend-protocol":"HTTPS"},"name":"kubernetes-dashboard","namespace":"kube-system"},"spec":{"rules":[{"host":"dashboard.myk8s.com","http":{"paths":[{"backend":{"serviceName":"kubernetes-dashboard","servicePort":443},"path":"/"}]}}],"tls":[{"hosts":["dashboard.myk8s.com"],"secretName":"dashboard-secret"}]}}
  nginx.ingress.kubernetes.io/backend-protocol:  HTTPS
Events:
  Type    Reason  Age  From                      Message
  ----    ------  ---- ----                      -------
  Normal  CREATE  26m  nginx-ingress-controller  Ingress kube-system/kubernetes-dashboard
  Normal  CREATE  26m  nginx-ingress-controller  Ingress kube-system/kubernetes-dashboard
  Normal  CREATE  26m  nginx-ingress-controller  Ingress kube-system/kubernetes-dashboard

Test access

On your local machine, add a hosts entry; any of the three master IPs will work:

172.16.2.101 dashboard.myk8s.com

Enter the token generated above and log in.
