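Main configuration: redis.conf

daemonize yes (run Redis in the background as a daemon; this does not make it start at boot)

protected-mode no (turn protected mode off so that clients on other hosts can connect)

#bind 127.0.0.1 (commented out; if left uncommented, only the local machine can connect)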
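The three settings above can also be applied to a copy of the stock redis.conf without opening an editor. A minimal sketch, assuming the stock file has uncommented daemonize, protected-mode and bind lines; the function name apply_base_settings is made up for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite three directives in a copy of redis.conf.
# Assumes each directive appears uncommented at the start of a line.
apply_base_settings() {
  local conf="$1" tmp
  tmp="$(mktemp)" || return 1
  sed -e 's/^daemonize .*/daemonize yes/' \
      -e 's/^protected-mode .*/protected-mode no/' \
      -e 's/^bind /#bind /' \
      "$conf" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through cat so the file keeps its owner and permissions.
  cat "$tmp" > "$conf"
  rm -f "$tmp"
}

# Example (path from this walkthrough):
# apply_base_settings /opt/myredis/redis.conf
```

Lines that do not match any of the three patterns are left untouched.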

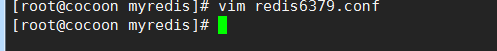

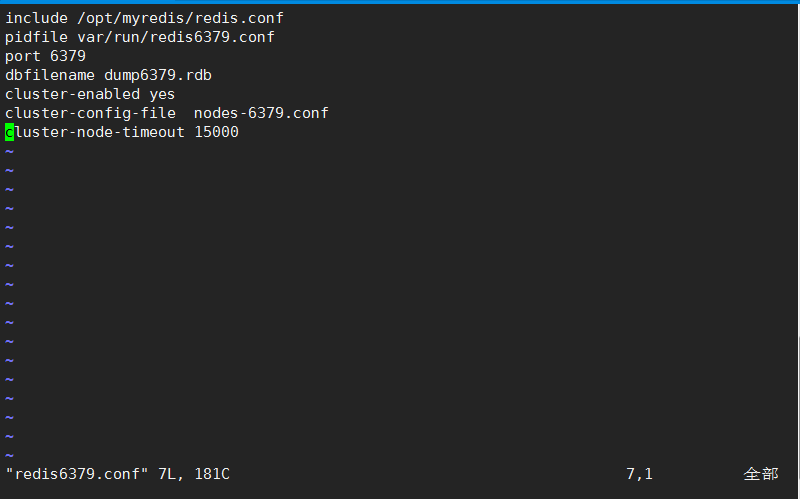

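redis6379.conf

include /opt/myredis/redis.conf

pidfile /var/run/redis6379.pid

port 6379

dbfilename dump6379.rdb (the RDB file generated by this cluster node)

cluster-enabled yes

cluster-config-file nodes-6379.conf (the file in which this node stores the cluster node information it generates)

cluster-node-timeout 15000 (node timeout, in milliseconds)

The contents of redis6379.conf: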

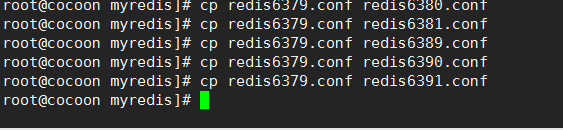

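Make five copies of redis6379.conf and change every 6379 inside each copy to that copy's own port number.

The port edits themselves are skipped here; each one is just vim redis6380.conf (and so on) and changing the port number inside.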
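The copy-and-edit step can be scripted instead of done by hand in vim. A sketch, assuming the template is named redis<port>.conf and the port number appears nowhere else in it with a different meaning; make_node_confs is a made-up name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive per-port configs from one template by
# replacing every occurrence of the template's port number.
make_node_confs() {
  local dir="$1" base_port="$2" port
  shift 2
  for port in "$@"; do
    sed "s/${base_port}/${port}/g" "${dir}/redis${base_port}.conf" \
      > "${dir}/redis${port}.conf" || return 1
  done
}

# Example (ports from this walkthrough):
# make_node_confs /opt/myredis 6379 6380 6381 6389 6390 6391
```

Because the replacement is global, the pidfile, port, dbfilename and cluster-config-file lines all change together, while the include line stays the same.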

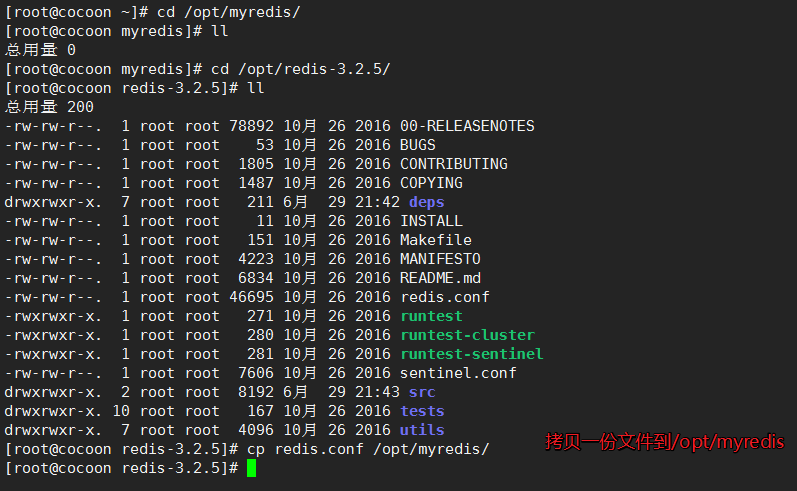

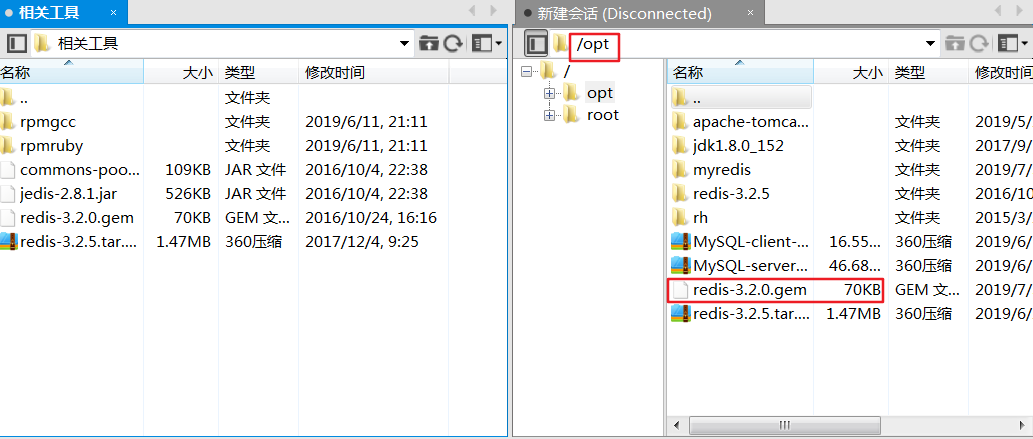

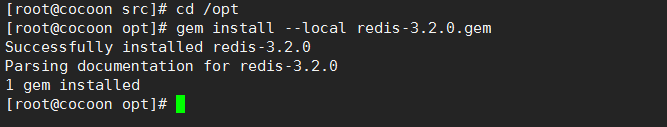

cd /opt (/opt is where the gem file is stored)

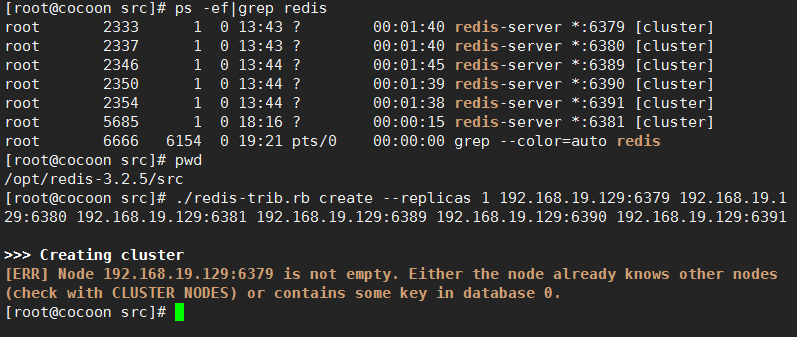

If a node is not empty, connect to it with the client and clear its data with flushall.

Connecting to 192.168.19.129:22...

Connection established.

To escape to local shell, press 'Ctrl+Alt+]'.

Last login: Wed Jul 3 18:36:00 2019 from 192.168.19.1

[root@cocoon ~]# cd /opt/myredis/

[root@cocoon myredis]# ll

total 0

[root@cocoon myredis]# cd /opt/redis-3.2.5/

[root@cocoon redis-3.2.5]# ll

total 200

-rw-rw-r--. 1 root root 78892 Oct 26 2016 00-RELEASENOTES

-rw-rw-r--. 1 root root 53 Oct 26 2016 BUGS

-rw-rw-r--. 1 root root 1805 Oct 26 2016 CONTRIBUTING

-rw-rw-r--. 1 root root 1487 Oct 26 2016 COPYING

drwxrwxr-x. 7 root root 211 Jun 29 21:42 deps

-rw-rw-r--. 1 root root 11 Oct 26 2016 INSTALL

-rw-rw-r--. 1 root root 151 Oct 26 2016 Makefile

-rw-rw-r--. 1 root root 4223 Oct 26 2016 MANIFESTO

-rw-rw-r--. 1 root root 6834 Oct 26 2016 README.md

-rw-rw-r--. 1 root root 46695 Oct 26 2016 redis.conf

-rwxrwxr-x. 1 root root 271 Oct 26 2016 runtest

-rwxrwxr-x. 1 root root 280 Oct 26 2016 runtest-cluster

-rwxrwxr-x. 1 root root 281 Oct 26 2016 runtest-sentinel

-rw-rw-r--. 1 root root 7606 Oct 26 2016 sentinel.conf

drwxrwxr-x. 2 root root 8192 Jun 29 21:43 src

drwxrwxr-x. 10 root root 167 Oct 26 2016 tests

drwxrwxr-x. 7 root root 4096 Oct 26 2016 utils

[root@cocoon redis-3.2.5]# cp redis.conf /opt/myredis/

[root@cocoon redis-3.2.5]# cd /opt/myredis/

[root@cocoon myredis]# vim redis.conf

[root@cocoon myredis]# cd /opt/redis-3.2.5/

[root@cocoon redis-3.2.5]# cd /opt/myredis/

[root@cocoon myredis]# rm *

rm: remove regular file 'redis.conf'? y

[root@cocoon myredis]# cd /opt/redis-3.2.5/

[root@cocoon redis-3.2.5]# cp redis.conf /opt/myredis/

[root@cocoon redis-3.2.5]# cd /opt/myredis/

[root@cocoon myredis]# vim redis.conf

[root@cocoon myredis]# vim redis.conf

[root@cocoon myredis]# vim redis.conf

[root@cocoon myredis]# cp redis.conf redis6379.conf

[root@cocoon myredis]# rm redis6379.conf

rm: remove regular file 'redis6379.conf'? y

[root@cocoon myredis]# vim redis6379.conf

[root@cocoon myredis]# vim redis6379.conf

[root@cocoon myredis]# cp redis6379.conf redis6380.conf

[root@cocoon myredis]# cp redis6379.conf redis6381.conf

[root@cocoon myredis]# cp redis6379.conf redis6389.conf

[root@cocoon myredis]# cp redis6379.conf redis6390.conf

[root@cocoon myredis]# cp redis6379.conf redis6391.conf

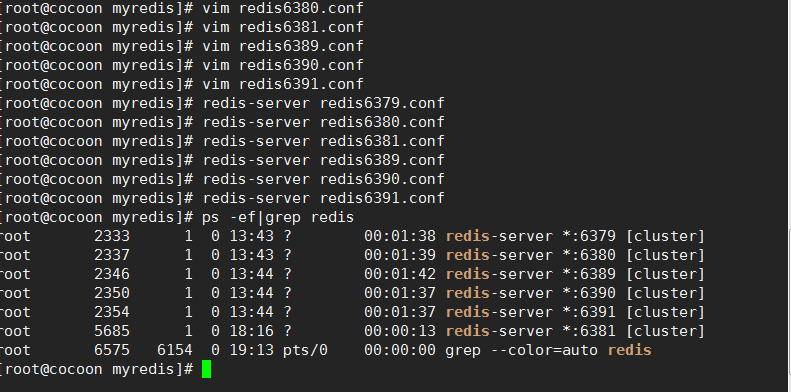

[root@cocoon myredis]# vim redis6380.conf

[root@cocoon myredis]# vim redis6381.conf

[root@cocoon myredis]# vim redis6389.conf

[root@cocoon myredis]# vim redis6390.conf

[root@cocoon myredis]# vim redis6391.conf

[root@cocoon myredis]# redis-server redis6379.conf

[root@cocoon myredis]# redis-server redis6380.conf

[root@cocoon myredis]# redis-server redis6381.conf

[root@cocoon myredis]# redis-server redis6389.conf

[root@cocoon myredis]# redis-server redis6390.conf

[root@cocoon myredis]# redis-server redis6391.conf

[root@cocoon myredis]# ps -ef|grep redis

root 2333 1 0 13:43 ? 00:01:38 redis-server *:6379 [cluster]

root 2337 1 0 13:43 ? 00:01:39 redis-server *:6380 [cluster]

root 2346 1 0 13:44 ? 00:01:42 redis-server *:6389 [cluster]

root 2350 1 0 13:44 ? 00:01:37 redis-server *:6390 [cluster]

root 2354 1 0 13:44 ? 00:01:37 redis-server *:6391 [cluster]

root 5685 1 0 18:16 ? 00:00:13 redis-server *:6381 [cluster]

root 6575 6154 0 19:13 pts/0 00:00:00 grep --color=auto redis

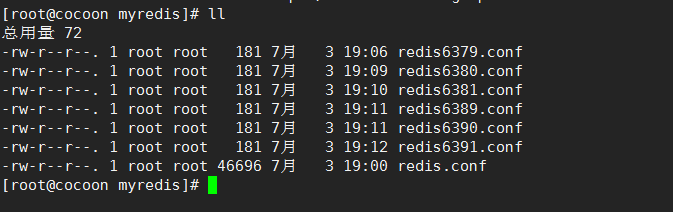

[root@cocoon myredis]# ll

total 72

-rw-r--r--. 1 root root 181 Jul 3 19:06 redis6379.conf

-rw-r--r--. 1 root root 181 Jul 3 19:09 redis6380.conf

-rw-r--r--. 1 root root 181 Jul 3 19:10 redis6381.conf

-rw-r--r--. 1 root root 181 Jul 3 19:11 redis6389.conf

-rw-r--r--. 1 root root 181 Jul 3 19:11 redis6390.conf

-rw-r--r--. 1 root root 181 Jul 3 19:12 redis6391.conf

-rw-r--r--. 1 root root 46696 Jul 3 19:00 redis.conf

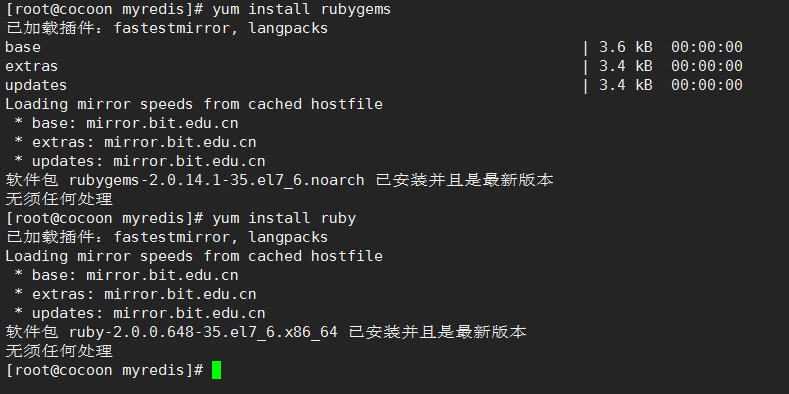

[root@cocoon myredis]# yum install rubygems

Loaded plugins: fastestmirror, langpacks

base | 3.6 kB 00:00:00

extras | 3.4 kB 00:00:00

updates | 3.4 kB 00:00:00

Loading mirror speeds from cached hostfile

* base: mirror.bit.edu.cn

* extras: mirror.bit.edu.cn

* updates: mirror.bit.edu.cn

Package rubygems-2.0.14.1-35.el7_6.noarch already installed and latest version

Nothing to do

[root@cocoon myredis]# yum install ruby

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* base: mirror.bit.edu.cn

* extras: mirror.bit.edu.cn

* updates: mirror.bit.edu.cn

Package ruby-2.0.0.648-35.el7_6.x86_64 already installed and latest version

Nothing to do

[root@cocoon myredis]# cd /opt/redis-3.2.5/src

[root@cocoon src]# gem install --local redis-3.2.0.gem

ERROR: Could not find a valid gem 'redis-3.2.0.gem' (>= 0) in any repository

[root@cocoon src]# cd /opt

[root@cocoon opt]# gem install --local redis-3.2.0.gem

Successfully installed redis-3.2.0

Parsing documentation for redis-3.2.0

1 gem installed

[root@cocoon opt]# cd /opt/redis-3.2.5/src

[root@cocoon src]# ./redis-trib.rb create --replicas 1 192.168.31.211:6379 192.168.31.211:6380 192.168.31.211:6381 192.168.31.211:6389 192.168.31.211:6390 192.168.31.211:6391

>>> Creating cluster

^C/usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:124:in `select': Interrupt

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:124:in `rescue in connect_addrinfo'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:121:in `connect_addrinfo'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:162:in `block in connect'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:160:in `each'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:160:in `each_with_index'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:160:in `connect'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/connection/ruby.rb:211:in `connect'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:316:in `establish_connection'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:91:in `block in connect'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:273:in `with_reconnect'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:90:in `connect'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:337:in `ensure_connected'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:204:in `block in process'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:286:in `logging'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:203:in `process'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis/client.rb:109:in `call'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis.rb:84:in `block in ping'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis.rb:37:in `block in synchronize'

from /usr/share/ruby/monitor.rb:211:in `mon_synchronize'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis.rb:37:in `synchronize'

from /usr/local/share/gems/gems/redis-3.2.0/lib/redis.rb:83:in `ping'

from ./redis-trib.rb:100:in `connect'

from ./redis-trib.rb:1285:in `block in create_cluster_cmd'

from ./redis-trib.rb:1283:in `each'

from ./redis-trib.rb:1283:in `create_cluster_cmd'

from ./redis-trib.rb:1701:in `<main>'

[root@cocoon src]# ps -ef|grep redis

root 2333 1 0 13:43 ? 00:01:40 redis-server *:6379 [cluster]

root 2337 1 0 13:43 ? 00:01:40 redis-server *:6380 [cluster]

root 2346 1 0 13:44 ? 00:01:45 redis-server *:6389 [cluster]

root 2350 1 0 13:44 ? 00:01:39 redis-server *:6390 [cluster]

root 2354 1 0 13:44 ? 00:01:38 redis-server *:6391 [cluster]

root 5685 1 0 18:16 ? 00:00:15 redis-server *:6381 [cluster]

root 6666 6154 0 19:21 pts/0 00:00:00 grep --color=auto redis

[root@cocoon src]# pwd

/opt/redis-3.2.5/src

[root@cocoon src]# ./redis-trib.rb create --replicas 1 192.168.19.129:6379 192.168.19.129:6380 192.168.19.129:6381 192.168.19.129:6389 192.168.19.129:6390 192.168.19.129:6391

>>> Creating cluster

[ERR] Node 192.168.19.129:6379 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

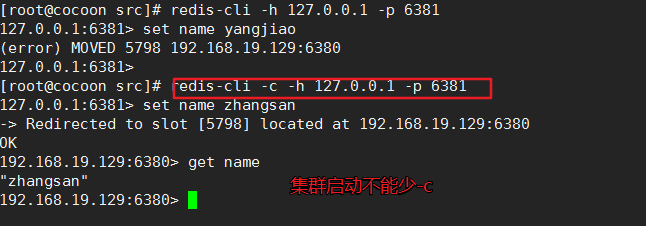

[root@cocoon src]# redis-cli -h 127.0.0.1 6379

(error) ERR unknown command '6379'

[root@cocoon src]# redis-cli -h 127.0.0.1 -p 6379

127.0.0.1:6379> flushall

(error) READONLY You can't write against a read only slave.

127.0.0.1:6379> cluster nodes

fcd0372d95434cd674115ce11ad7d990b98377eb 192.168.19.129:6389 master - 0 1562153224057 8 connected 0-5460

29bc7225cdd8fb5bd04d2adbe3eb24375a99d52e 192.168.19.129:6390 master - 0 1562153225072 7 connected 5461-10922

5e7a66d7f008211e699c3bbe5b37726bde882a71 192.168.19.129:6381 slave b15841b9c09e0b5d98bdb5879b07ed0cf06f5da9 0 1562153221026 9 connected

b15841b9c09e0b5d98bdb5879b07ed0cf06f5da9 192.168.19.129:6391 master - 0 1562153226078 9 connected 10923-16383

3aa719e5c6117466720c8e03559f453a38c06bd3 192.168.19.129:6380 slave 29bc7225cdd8fb5bd04d2adbe3eb24375a99d52e 0 1562153223052 7 connected

0facc53e89ce910bed05cc604e07f9021ca84e1d 192.168.19.129:6379 myself,slave fcd0372d95434cd674115ce11ad7d990b98377eb 0 0 1 connected

127.0.0.1:6379>
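The cluster nodes output above explains both errors: 6379 still belongs to an earlier cluster, and it is a slave there, so flushall is refused. To read such output faster, a small awk filter can reduce each line to address and role. This is only a sketch; summarize_roles is a made-up name, and it assumes the field layout shown above (address in field 2, flags in field 3, master ID in field 4, slot ranges from field 9 on):

```shell
#!/usr/bin/env bash
# Hypothetical helper: condense "cluster nodes" output read from stdin.
# Assumes the field layout shown in the session above.
summarize_roles() {
  awk '
    $3 ~ /master/ {
      slots = ""
      for (i = 9; i <= NF; i++) {
        sep = (i > 9) ? "," : ""
        slots = slots sep $i
      }
      if (slots == "") slots = "none"
      printf "%s master slots=%s\n", $2, slots
      next
    }
    $3 ~ /slave/ { printf "%s slave-of %s\n", $2, $4 }
  '
}

# Example:
# redis-cli -h 127.0.0.1 -p 6379 cluster nodes | summarize_roles
```

A master that prints slots=none holds no data range yet, which is worth checking before and after resharding.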

[root@cocoon src]# cd /opt

[root@cocoon opt]# cd myredis/

[root@cocoon myredis]# cp redis6379.conf redis6382.conf

[root@cocoon myredis]# cp redis6379.conf redis6383.conf

[root@cocoon myredis]# vim redis6382.conf

[root@cocoon myredis]# vim redis6383.conf

[root@cocoon myredis]# cd /opt/myredis/

[root@cocoon myredis]# cd /opt/redis-3.2.5/src

[root@cocoon src]# cd /opt/myredis/

[root@cocoon myredis]# redis-server redis6382.conf

[root@cocoon myredis]# redis-server redis6383.conf

[root@cocoon myredis]# cd /opt/redis-3.2.5/src

[root@cocoon src]# ./redis-trib.rb add-node 127.0.0.1:6382 127.0.0.1:6383

>>> Adding node 127.0.0.1:6382 to cluster 127.0.0.1:6383

>>> Performing Cluster Check (using node 127.0.0.1:6383)

M: 8f4bdb405ba075e43e7d6ba8d43588c60b9ddccf 127.0.0.1:6383

slots: (0 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[ERR] Not all 16384 slots are covered by nodes.

>>> Send CLUSTER MEET to node 127.0.0.1:6382 to make it join the cluster.

[OK] New node added correctly.

[root@cocoon src]# ./redis-trib.rb add-node --slave 127.0.0.1:6382 127.0.0.1:6383

>>> Adding node 127.0.0.1:6382 to cluster 127.0.0.1:6383

>>> Performing Cluster Check (using node 127.0.0.1:6383)

M: 8f4bdb405ba075e43e7d6ba8d43588c60b9ddccf 127.0.0.1:6383

slots: (0 slots) master

0 additional replica(s)

M: 2432ea2b8286ce1b0cdc79f701c7f2a5848c77f1 127.0.0.1:6382

slots: (0 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[ERR] Not all 16384 slots are covered by nodes.

Automatically selected master 127.0.0.1:6383

[ERR] Node 127.0.0.1:6382 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

[root@cocoon src]# ./redis-cli -p 6382

127.0.0.1:6382> flushall -

[root@cocoon src]# ./redis-cli -p 6383

127.0.0.1:6383> cluster nodes

8f4bdb405ba075e43e7d6ba8d43588c60b9ddccf 127.0.0.1:6383 myself,master - 0 0 0 connected

2432ea2b8286ce1b0cdc79f701c7f2a5848c77f1 127.0.0.1:6382 master - 0 1562155176163 1 connected

127.0.0.1:6383>
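In the two add-node runs above, the first address is the node being added and the second is a node of the target cluster. Since 6383 was itself a fresh node, the pair formed a separate two-node cluster without slots, which is why the coverage check failed. A sketch of the argument order, written as a function that only prints the command; the function name is made up, and --master-id is assumed to be accepted by this redis-trib.rb version (only --slave appears in the session):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) a redis-trib.rb add-node command.
# $1 = node to add, $2 = any node already in the target cluster,
# $3 = optional ID of the master the new node should replicate.
# Assumption: the --master-id option exists in this redis-trib.rb.
build_add_node_cmd() {
  if [ -n "${3:-}" ]; then
    echo "./redis-trib.rb add-node --slave --master-id $3 $1 $2"
  else
    echo "./redis-trib.rb add-node $1 $2"
  fi
}

# Example:
# build_add_node_cmd 192.168.19.129:6382 192.168.19.129:6379
```

Pointing the second argument at a node of the real cluster (for example 6379) avoids creating a second, empty cluster by accident.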

[root@cocoon src]# ps -ef |grep redis

root 2333 1 0 13:43 ? 00:01:49 redis-server *:6379 [cluster]

root 2337 1 0 13:43 ? 00:01:50 redis-server *:6380 [cluster]

root 2346 1 0 13:44 ? 00:01:53 redis-server *:6389 [cluster]

root 2350 1 0 13:44 ? 00:01:47 redis-server *:6390 [cluster]

root 2354 1 0 13:44 ? 00:01:47 redis-server *:6391 [cluster]

root 5685 1 0 18:16 ? 00:00:24 redis-server *:6381 [cluster]

root 7082 1 0 19:55 ? 00:00:02 redis-server *:6382 [cluster]

root 7086 1 0 19:55 ? 00:00:03 redis-server *:6383 [cluster]

root 7321 6154 0 20:16 pts/0 00:00:00 grep --color=auto redis

[root@cocoon src]# kill 2333

[root@cocoon src]# kill 2337

[root@cocoon src]# kill 2350

[root@cocoon src]# kill 2354

[root@cocoon src]# kill 5685

[root@cocoon src]# kill 7082

[root@cocoon src]# kill 7086

[root@cocoon src]# kill 7321

-bash: kill: (7321) - No such process

[root@cocoon src]# ps -ef |grep redis

root 2346 1 0 13:44 ? 00:01:54 redis-server *:6389 [cluster]

root 7334 6154 0 20:17 pts/0 00:00:00 grep --color=auto redis

[root@cocoon src]# kill 2346

[root@cocoon src]# cd /opt/myredis/

[root@cocoon myredis]# ll

total 120

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6379.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6380.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6381.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6382.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6383.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:17 dump6389.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6390.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6391.rdb

-rw-r--r--. 1 root root 217 Jul 3 19:55 nodes-6382.conf

-rw-r--r--. 1 root root 217 Jul 3 19:55 nodes-6383.conf

-rw-r--r--. 1 root root 181 Jul 3 19:06 redis6379.conf

-rw-r--r--. 1 root root 181 Jul 3 19:09 redis6380.conf

-rw-r--r--. 1 root root 181 Jul 3 19:10 redis6381.conf

-rw-r--r--. 1 root root 181 Jul 3 19:52 redis6382.conf

-rw-r--r--. 1 root root 181 Jul 3 19:53 redis6383.conf

-rw-r--r--. 1 root root 181 Jul 3 19:11 redis6389.conf

-rw-r--r--. 1 root root 181 Jul 3 19:11 redis6390.conf

-rw-r--r--. 1 root root 181 Jul 3 19:12 redis6391.conf

-rw-r--r--. 1 root root 46696 Jul 3 19:00 redis.conf

[root@cocoon myredis]# rm no*

rm: remove regular file 'nodes-6382.conf'? Y

rm: remove regular file 'nodes-6383.conf'? y

[root@cocoon myredis]# ll

total 112

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6379.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6380.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6381.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6382.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6383.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:17 dump6389.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6390.rdb

-rw-r--r--. 1 root root 76 Jul 3 20:16 dump6391.rdb

-rw-r--r--. 1 root root 181 Jul 3 19:06 redis6379.conf

-rw-r--r--. 1 root root 181 Jul 3 19:09 redis6380.conf

-rw-r--r--. 1 root root 181 Jul 3 19:10 redis6381.conf

-rw-r--r--. 1 root root 181 Jul 3 19:52 redis6382.conf

-rw-r--r--. 1 root root 181 Jul 3 19:53 redis6383.conf

-rw-r--r--. 1 root root 181 Jul 3 19:11 redis6389.conf

-rw-r--r--. 1 root root 181 Jul 3 19:11 redis6390.conf

-rw-r--r--. 1 root root 181 Jul 3 19:12 redis6391.conf

-rw-r--r--. 1 root root 46696 Jul 3 19:00 redis.conf

[root@cocoon myredis]# redis-server redis6379.conf

[root@cocoon myredis]# redis-server redis6380.conf

[root@cocoon myredis]# redis-server redis6381.conf

[root@cocoon myredis]# redis-server redis6382.conf

[root@cocoon myredis]# redis-server redis6383.conf

[root@cocoon myredis]# redis-server redis6389.conf

[root@cocoon myredis]# redis-server redis6390.conf

[root@cocoon myredis]# redis-server redis6391.conf

[root@cocoon myredis]# ps -ef|grep redis

root 7383 1 0 20:18 ? 00:00:00 redis-server *:6379 [cluster]

root 7387 1 0 20:19 ? 00:00:00 redis-server *:6380 [cluster]

root 7391 1 0 20:19 ? 00:00:00 redis-server *:6381 [cluster]

root 7395 1 0 20:19 ? 00:00:00 redis-server *:6382 [cluster]

root 7399 1 0 20:19 ? 00:00:00 redis-server *:6383 [cluster]

root 7403 1 0 20:19 ? 00:00:00 redis-server *:6389 [cluster]

root 7407 1 0 20:19 ? 00:00:00 redis-server *:6390 [cluster]

root 7411 1 0 20:19 ? 00:00:00 redis-server *:6391 [cluster]

root 7430 6154 0 20:20 pts/0 00:00:00 grep --color=auto redis

[root@cocoon myredis]# cd /opt/redis-3.2.5/src/

[root@cocoon src]# ./redis-trib.rb create --replicas 1 192.168.19.129:6379 192.168.19.129:6380 192.168.19.129:6381 192.168.19.129:6389 192.168.19.129:6390 192.168.19.129:6391192.168.19.129.6382 192.168.19.129 6383

>>> Creating cluster

[ERR] Sorry, can't connect to node 192.168.19.129:6391192.168.19.129.6382

[root@cocoon src]# ./redis-trib.rb create --replicas 1 192.168.19.129:6379 192.168.19.129:6380 192.168.19.129:6381 192.168.19.129:6389 192.168.19.129:6390 192.168.19.129:6391 192.168.19.129.6382 192.168.19.129 6383

>>> Creating cluster

Invalid IP or Port (given as 192.168.19.129.6382) - use IP:Port format

[root@cocoon src]# ./redis-trib.rb create --replicas 1 192.168.19.129:6379 192.168.19.129:6380 192.168.19.129:6381 192.168.19.129:6389 192.168.19.129:6390 192.168.19.129:6391 192.168.19.129.6382 192.168.19.129 6383

>>> Creating cluster

Invalid IP or Port (given as 192.168.19.129.6382) - use IP:Port format
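The failed attempts above come down to mistyped host:port arguments: first a missing space, then a dot and a space where the colon should be. One way to avoid that is to build the argument list from a host and a list of ports. A sketch, with a made-up function name, that only prints the command so it can be checked before running:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a redis-trib.rb create command for one host
# and a list of ports, rejecting anything that is not a plain number.
build_create_cmd() {
  local host="$1" port args=""
  shift
  for port in "$@"; do
    case "$port" in
      ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    args="$args ${host}:${port}"
  done
  echo "./redis-trib.rb create --replicas 1${args}"
}

# Example (ports from this walkthrough):
# build_create_cmd 192.168.19.129 6379 6380 6381 6389 6390 6391 6382 6383
```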

[root@cocoon src]# ./redis-trib.rb create --replicas 1 192.168.19.129:6379 192.168.19.129:6380 192.168.19.129:6381 192.168.19.129:6389 192.168.19.129:6390 192.168.19.129:6391 192.168.19.129:6382 192.168.19.129:6383

>>> Creating cluster

>>> Performing hash slots allocation on 8 nodes...

Using 4 masters:

192.168.19.129:6379

192.168.19.129:6380

192.168.19.129:6381

192.168.19.129:6389

Adding replica 192.168.19.129:6390 to 192.168.19.129:6379

Adding replica 192.168.19.129:6391 to 192.168.19.129:6380

Adding replica 192.168.19.129:6382 to 192.168.19.129:6381

Adding replica 192.168.19.129:6383 to 192.168.19.129:6389

M: bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379

slots:0-4095 (4096 slots) master

M: 73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380

slots:4096-8191 (4096 slots) master

M: fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381

slots:8192-12287 (4096 slots) master

M: d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389

slots:12288-16383 (4096 slots) master

S: 18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390

replicates bd1152b276859597c420b8d3903621582dc5f969

S: e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391

replicates 73622270351af85aaaae4015ca8067fa775e61f2

S: e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382

replicates fdbe5f68a4a16fdf739ede4b0973e54c86930213

S: d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383

replicates d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join.....

>>> Performing Cluster Check (using node 192.168.19.129:6379)

M: bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379

slots:0-4095 (4096 slots) master

1 additional replica(s)

S: e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391

slots: (0 slots) slave

replicates 73622270351af85aaaae4015ca8067fa775e61f2

S: 18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390

slots: (0 slots) slave

replicates bd1152b276859597c420b8d3903621582dc5f969

S: d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383

slots: (0 slots) slave

replicates d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df

S: e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382

slots: (0 slots) slave

replicates fdbe5f68a4a16fdf739ede4b0973e54c86930213

M: fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381

slots:8192-12287 (4096 slots) master

1 additional replica(s)

M: d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389

slots:12288-16383 (4096 slots) master

1 additional replica(s)

M: 73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380

slots:4096-8191 (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@cocoon src]#
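The slot ranges in the output above follow from splitting the 16384 hash slots evenly over the 4 masters: 16384 / 4 = 4096 slots each. The arithmetic can be sketched as below; slot_ranges is a made-up name, and the sketch only covers master counts that divide 16384 evenly (it does not reproduce how redis-trib.rb distributes a remainder):

```shell
#!/usr/bin/env bash
# Split the 16384 hash slots evenly across N masters and print each range.
# Assumes 16384 is divisible by N, as it is for the 4 masters used here.
slot_ranges() {
  local masters="$1" total=16384 per i first last
  per=$(( total / masters ))
  i=0
  while [ "$i" -lt "$masters" ]; do
    first=$(( i * per ))
    last=$(( first + per - 1 ))
    echo "master $(( i + 1 )): ${first}-${last} (${per} slots)"
    i=$(( i + 1 ))
  done
}

# Example:
# slot_ranges 4
```

For 4 masters this prints the same ranges as the session: 0-4095, 4096-8191, 8192-12287 and 12288-16383.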

[root@cocoon src]# server-cli -h 127.0.0.1 6389

bash: server-cli: command not found...

[root@cocoon src]# server-cli -h 127.0.0.1 -p 6389

bash: server-cli: command not found...

[root@cocoon src]# redis-cli -h 127.0.0.1 -p 6389

127.0.0.1:6389> cluster nodes

d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389 myself,master - 0 0 4 connected 12288-16383

d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383 slave d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 0 1562157179618 8 connected

73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380 master - 0 1562157180626 2 connected 4096-8191

18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390 slave bd1152b276859597c420b8d3903621582dc5f969 0 1562157181634 5 connected

fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381 master - 0 1562157181533 3 connected 8192-12287

e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391 slave 73622270351af85aaaae4015ca8067fa775e61f2 0 1562157182704 6 connected

e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382 slave fdbe5f68a4a16fdf739ede4b0973e54c86930213 0 1562157182137 7 connected

bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379 master - 0 1562157178613 1 connected 0-4095

127.0.0.1:6389> info replication

# Replication

role:master

connected_slaves:1

slave0:ip=192.168.19.129,port=6383,state=online,offset=267,lag=1

master_repl_offset:267

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:2

repl_backlog_histlen:266

127.0.0.1:6389>

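Pitfalls encountered

1. Ruby version: the system default is 2.0, while the Redis cluster tooling requires at least 2.2 (this applies when a newer redis gem is installed; the local redis-3.2.0.gem used above ran on Ruby 2.0).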
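A quick way to check this before installing anything is to compare version strings in the shell. A sketch, assuming GNU sort with the -V option is available; version_ge is a made-up name, and 2.2 is the threshold stated above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when version $1 is >= version $2.
# Relies on "sort -V" (version sort) from GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Example:
# version_ge "$(ruby -e 'print RUBY_VERSION')" 2.2 || echo "Ruby is too old"
```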

2. ./redis-trib.rb fails with: /usr/local/rvm/gems/ruby-2.4.2/gems/redis-4.0.1/lib/redis/client.rb:119:in `call': ERR Slot 0 is already busy (Redis::CommandError)

The error means that a slot is already in use: old Redis data and cluster configuration were not cleaned up completely before the cluster was built.

The fix is to log in to every node with redis-cli and run flushall and cluster reset.

Then re-run the cluster creation command:

./redis-trib.rb create --replicas 1 192.168.*.*:7001 192.168.*.*:7002 192.168.*.*:7003 192.168.*.*:7004 192.168.*.*:7005 192.168.*.*:7006
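Running flushall and cluster reset on every node by hand is easy to get wrong, so the loop can be scripted. A sketch with a made-up function name; the REDIS_CLI variable is an invention of this example that allows a dry run:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run FLUSHALL and then CLUSTER RESET on each port.
# REDIS_CLI defaults to "redis-cli"; set REDIS_CLI="echo redis-cli" for a
# dry run that only prints the commands (relies on word splitting).
reset_nodes() {
  local host="$1" port
  shift
  for port in "$@"; do
    ${REDIS_CLI:-redis-cli} -h "$host" -p "$port" flushall
    ${REDIS_CLI:-redis-cli} -h "$host" -p "$port" cluster reset
  done
}

# Example:
# REDIS_CLI="echo redis-cli"; reset_nodes 127.0.0.1 7001 7002 7003
```

flushall comes first because a master that still holds keys refuses cluster reset. On a slave, flushall is rejected as read-only (as seen earlier in the session), so the loop simply carries on to cluster reset for that node.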

3. >>> Creating cluster [ERR] Node 192.168.15.102:6000 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

Solution:

1) Stop all Redis processes and delete the local backup files (AOF, RDB and so on) of the node that is being added.

2) Also delete the new node's cluster configuration file, that is, the file named by cluster-config-file in your redis.conf, usually nodes.conf.

3) If adding the new node still fails, log in to it with redis-cli -c -h 127.0.0.1 -p 6379, then use ./redis-cli -h x -p to clear the database:

192.168.15.102:6000> flushdb # clear the current database

Start the Redis processes and re-run the cluster creation command:

./redis-trib.rb create --replicas 1 192.168.15.102:6000 192.168.15.102:6001 192.168.15.102:6002 192.168.15.102:6003 192.168.15.102:6004 192.168.15.102:6005

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 3 masters:

192.168.15.102:6000

192.168.15.102:6001

192.168.15.102:6002

Adding replica 192.168.15.102:6004 to 192.168.15.102:6000

Adding replica 192.168.15.102:6005 to 192.168.15.102:6001

Adding replica 192.168.15.102:6003 to 192.168.15.102:6002

>>> Trying to optimize slaves allocation for anti-affinity

…….

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join...
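The file cleanup in steps 1) and 2) above can be sketched as one function. It assumes the servers are already stopped and that the files follow the naming seen in this walkthrough (dump*.rdb, nodes*.conf) plus any *.aof files; clean_node_files is a made-up name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove RDB, AOF and cluster state files from a
# node directory while leaving the redis*.conf files in place.
# Assumes the Redis processes using this directory are already stopped.
clean_node_files() {
  local dir="$1"
  # -f keeps rm quiet (and successful) when a pattern matches nothing.
  rm -f "$dir"/dump*.rdb "$dir"/*.aof "$dir"/nodes*.conf
}

# Example (directory from this walkthrough):
# clean_node_files /opt/myredis
```

Stopping the servers first matters because a running node would otherwise write its cluster state file again.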

[root@cocoon src]# ./redis-trib.rb reshard 192.168.19.129:6385

>>> Performing Cluster Check (using node 192.168.19.129:6385)

M: 876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385

slots: (0 slots) master

0 additional replica(s)

M: d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389

slots:12954-16383 (3430 slots) master

1 additional replica(s)

M: 86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384

slots: (0 slots) master

0 additional replica(s)

M: bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379

slots:0-4762,8192-8857,12288-12953 (6095 slots) master

1 additional replica(s)

M: fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381

slots:8858-12287 (3430 slots) master

1 additional replica(s)

S: e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382

slots: (0 slots) slave

replicates fdbe5f68a4a16fdf739ede4b0973e54c86930213

S: 18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390

slots: (0 slots) slave

replicates bd1152b276859597c420b8d3903621582dc5f969

M: 73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380

slots:4763-8191 (3429 slots) master

1 additional replica(s)

S: d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383

slots: (0 slots) slave

replicates d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df

S: e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391

slots: (0 slots) slave

replicates 73622270351af85aaaae4015ca8067fa775e61f2

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 300

What is the receiving node ID? bd1152b276859597c420b8d3903621582dc5f969

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:all

Ready to move 300 slots.

Source nodes:

M: 876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385

slots: (0 slots) master

0 additional replica(s)

M: d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389

slots:12954-16383 (3430 slots) master

1 additional replica(s)

M: 86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384

slots: (0 slots) master

0 additional replica(s)

M: fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381

slots:8858-12287 (3430 slots) master

1 additional replica(s)

M: 73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380

slots:4763-8191 (3429 slots) master

1 additional replica(s)

Destination node:

M: bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379

slots:0-4762,8192-8857,12288-12953 (6095 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 8858 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8859 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8860 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8861 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8862 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8863 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8864 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8865 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8866 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8867 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8868 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8869 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8870 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8871 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8872 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8873 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8874 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8875 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8876 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8877 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8878 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8879 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8880 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8881 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8882 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8883 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8884 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8885 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8886 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8887 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8888 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8889 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8890 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8891 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8892 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8893 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8894 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8895 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8896 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8897 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8898 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8899 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8900 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Moving slot 8901 from fdbe5f68a4a16fdf739ede4b0973e54c86930213

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 8858 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8859 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8860 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8861 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8862 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8863 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8864 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8865 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8866 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8867 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8868 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8869 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8870 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8871 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8872 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8873 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8874 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8875 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8876 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8877 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8878 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8879 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8880 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8881 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8882 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8883 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8884 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8885 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8886 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8887 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8888 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8889 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8890 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8891 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8892 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8893 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8894 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8895 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8896 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8897 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8898 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8899 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8900 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8901 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8902 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8903 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8904 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8905 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8906 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8907 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8908 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8909 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8910 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8911 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8912 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8913 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8914 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8915 from 192.168.19.129:6381 to 192.168.19.129:6379:

Moving slot 8916 from 192.168.19.129:6381 to 192.168.19.129:6379:

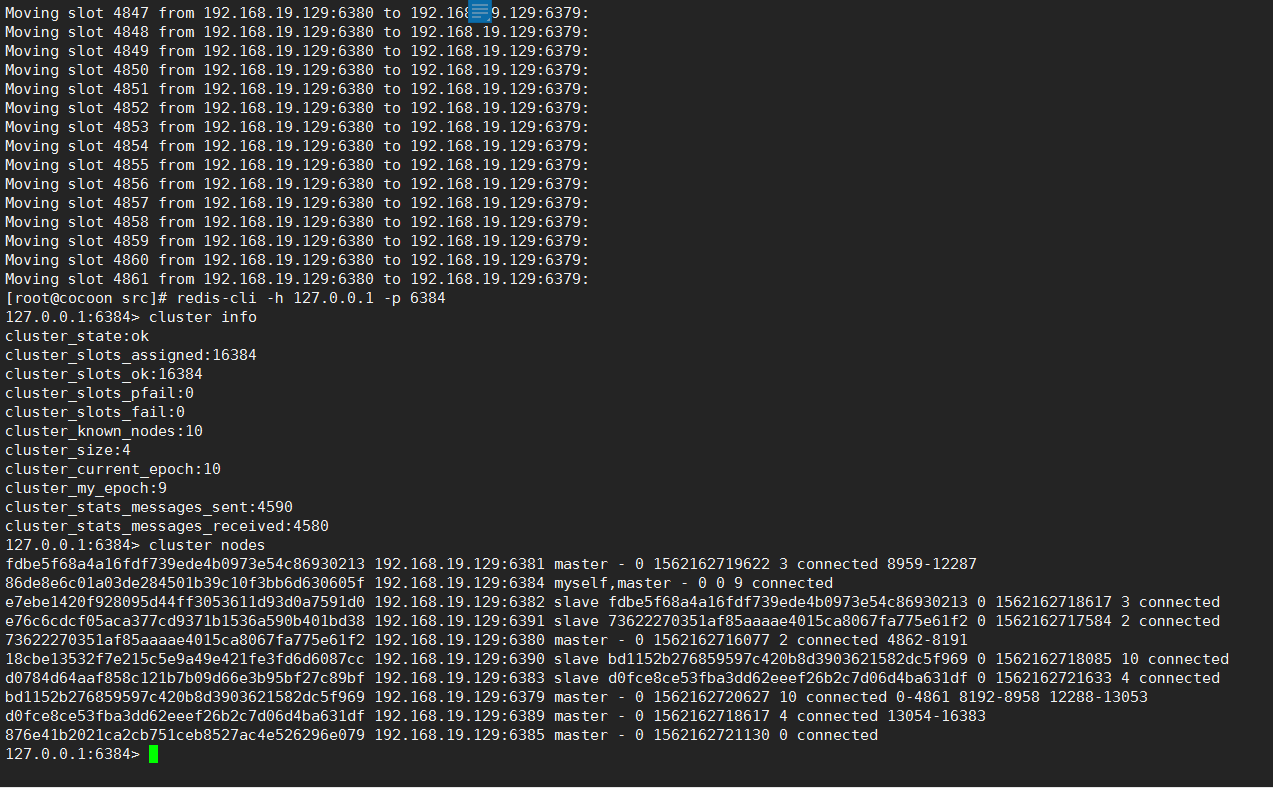

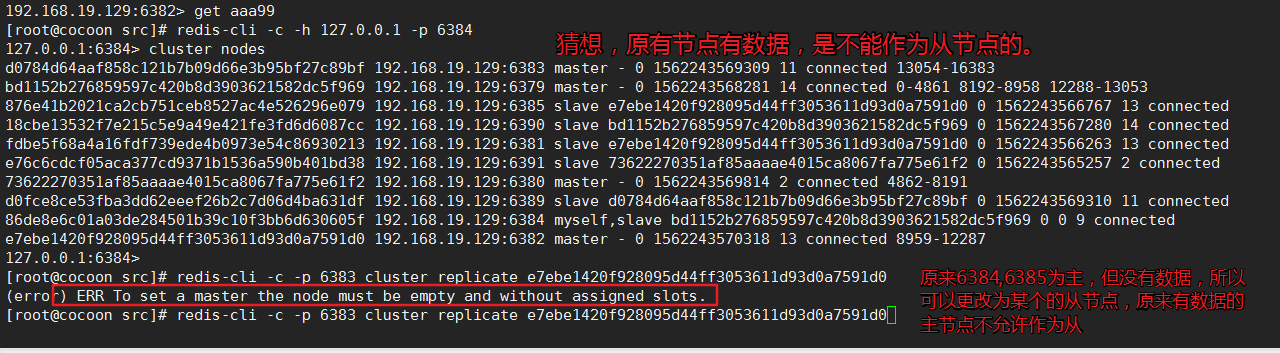

There is still a problem: the cluster now has 6 masters and 4 slaves.

Changing a node's master/slave role in a Redis cluster

127.0.0.1:6384> cluster nodes

d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383 master - 0 1562234702472 11 connected 13054-16383

bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379 master - 0 1562234701968 14 connected 0-4861 8192-8958 12288-13053

876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385 master - 0 1562234698439 0 connected

18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390 slave bd1152b276859597c420b8d3903621582dc5f969 0 1562234703482 14 connected

fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381 slave e7ebe1420f928095d44ff3053611d93d0a7591d0 0 1562234700457 13 connected

e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391 slave 73622270351af85aaaae4015ca8067fa775e61f2 0 1562234703180 2 connected

73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380 master - 0 1562234699447 2 connected 4862-8191

d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389 slave d0784d64aaf858c121b7b09d66e3b95bf27c89bf 0 1562234705506 11 connected

86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384 myself,master - 0 0 9 connected

e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382 master - 0 1562234704493 13 connected 8959-12287

127.0.0.1:6384> slaveof 127.0.0.1 6379

(error) ERR SLAVEOF not allowed in cluster mode.

127.0.0.1:6384> slaveof 192.168.19.129 6379

(error) ERR SLAVEOF not allowed in cluster mode.

127.0.0.1:6384> slaveof 127.0.0.1 6379

(error) ERR SLAVEOF not allowed in cluster mode.

127.0.0.1:6384>
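
SLAVEOF is rejected in cluster mode. A node is instead made a slave by running CLUSTER REPLICATE with the master's node ID on the node that should become the slave. Before doing that, it helps to see which masters hold no slots, since those are the ones that can safely be converted. The following is a minimal sketch, assuming the `cluster nodes` text has been copied into a string and follows the Redis 3.2 layout shown above. The names `Node`, `parse_cluster_nodes` and `empty_masters` are hypothetical helpers, not part of Redis, and the sample is a subset of the listing above.

```python
from typing import List, NamedTuple


class Node(NamedTuple):
    node_id: str
    addr: str
    flags: List[str]
    master_id: str    # "-" when the node is itself a master
    slots: List[str]  # slot ranges such as "0-4861"; empty if the node owns none


def parse_cluster_nodes(text: str) -> List[Node]:
    """Parse text printed by CLUSTER NODES (Redis 3.2 field layout assumed)."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # skip blank or truncated lines
        nodes.append(
            Node(parts[0], parts[1], parts[2].split(","), parts[3], parts[8:])
        )
    return nodes


def empty_masters(nodes: List[Node]) -> List[Node]:
    """Masters that own no slots: candidates to be turned into slaves."""
    return [n for n in nodes if "master" in n.flags and not n.slots]


# Subset of the CLUSTER NODES output above.
SAMPLE = """\
bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379 master - 0 1562234701968 14 connected 0-4861 8192-8958 12288-13053
876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385 master - 0 1562234698439 0 connected
18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390 slave bd1152b276859597c420b8d3903621582dc5f969 0 1562234703482 14 connected
86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384 myself,master - 0 0 9 connected
"""

for node in empty_masters(parse_cluster_nodes(SAMPLE)):
    port = node.addr.split(":")[1]
    # <master-node-id> is a placeholder: pick the ID of a master that owns slots.
    print(f"redis-cli -p {port} cluster replicate <master-node-id>")
```

For this sample the loop reports ports 6385 and 6384, the two masters that hold no slots.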

[root@cocoon myredis]# cd /opt/myredis/

[root@cocoon myredis]# cd /opt/redis-3.2.5/src

[root@cocoon src]# ./redis-trib.rb add-node 192.168.19.129:6384 192.168.19.129:6379

>>> Adding node 192.168.19.129:6384 to cluster 192.168.19.129:6379

>>> Performing Cluster Check (using node 192.168.19.129:6379)

M: bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379

slots:0-4861,8192-8958,12288-13053 (6395 slots) master

1 additional replica(s)

S: e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391

slots: (0 slots) slave

replicates 73622270351af85aaaae4015ca8067fa775e61f2

S: d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389

slots: (0 slots) slave

replicates d0784d64aaf858c121b7b09d66e3b95bf27c89bf

S: fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381

slots: (0 slots) slave

replicates e7ebe1420f928095d44ff3053611d93d0a7591d0

M: d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383

slots:13054-16383 (3330 slots) master

1 additional replica(s)

M: 73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380

slots:4862-8191 (3330 slots) master

1 additional replica(s)

M: 876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385

slots: (0 slots) master

0 additional replica(s)

M: 86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384

slots: (0 slots) master

0 additional replica(s)

M: e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382

slots:8959-12287 (3329 slots) master

1 additional replica(s)

S: 18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390

slots: (0 slots) slave

replicates bd1152b276859597c420b8d3903621582dc5f969

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[ERR] Node 192.168.19.129:6384 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.
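
add-node is refused because 6384 already belongs to the cluster: it appears in the check output as a master with 0 slots, so only its role needs to change. The per-master slot counts printed by the check can be reproduced by hand. A small sketch, assuming the ranges are copied from the output above; `count_slots` and the `MASTERS` table are hypothetical names used only for illustration.

```python
def count_slots(ranges: str) -> int:
    """Count the slots in a comma-separated list such as '0-4861,8192-8958'."""
    total = 0
    for part in ranges.split(","):
        part = part.strip()
        if not part:
            continue  # a master with no slots has an empty list
        if "-" in part:
            start, end = part.split("-")
            total += int(end) - int(start) + 1  # ranges are inclusive
        else:
            total += 1  # a single slot written without a range
    return total


# Slot ranges per master port, copied from the redis-trib.rb check output.
MASTERS = {
    "6379": "0-4861,8192-8958,12288-13053",
    "6383": "13054-16383",
    "6380": "4862-8191",
    "6382": "8959-12287",
    "6385": "",
    "6384": "",
}

counts = {port: count_slots(ranges) for port, ranges in MASTERS.items()}
for port, n in counts.items():
    print(port, n)
print("total", sum(counts.values()))
```

The counts come out to 6395, 3330, 3330 and 3329 for the four slot-holding masters, 16384 in total, which agrees with "All 16384 slots covered".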

[root@cocoon src]# redis-cli -c -p 6384 cluster replicate d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df

(error) ERR I can only replicate a master, not a slave.

[root@cocoon src]# redis-cli -c -p 6379 cluster replicate d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df

(error) ERR I can only replicate a master, not a slave.

[root@cocoon src]# redis-cli -c -p 6379 cluster replicate 18cbe13532f7e215c5e9a49e421fe3fd6d6087cc

(error) ERR I can only replicate a master, not a slave.

[root@cocoon src]# redis-cli -c -p 6384 cluster replicate

(error) ERR Wrong CLUSTER subcommand or number of arguments

[root@cocoon src]# redis-cli -c -p 6384 cluster replicate bd1152b276859597c420b8d3903621582dc5f969

OK

[root@cocoon src]# redis-cli -c -p 6385 cluster replicate e7ebe1420f928095d44ff3053611d93d0a7591d0

OK

[root@cocoon src]# redis-cli -c -h 127.0.0.1 -p 6384

127.0.0.1:6384> cluster nodes

d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383 master - 0 1562243234694 11 connected 13054-16383

bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379 master - 0 1562243232077 14 connected 0-4861 8192-8958 12288-13053

876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385 slave e7ebe1420f928095d44ff3053611d93d0a7591d0 0 1562243233082 13 connected

18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390 slave bd1152b276859597c420b8d3903621582dc5f969 0 1562243230667 14 connected

fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381 slave e7ebe1420f928095d44ff3053611d93d0a7591d0 0 1562243231675 13 connected

e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391 slave 73622270351af85aaaae4015ca8067fa775e61f2 0 1562243233686 2 connected

73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380 master - 0 1562243229661 2 connected 4862-8191

d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389 slave d0784d64aaf858c121b7b09d66e3b95bf27c89bf 0 1562243232680 11 connected

86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384 myself,slave bd1152b276859597c420b8d3903621582dc5f969 0 0 9 connected

e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382 master - 0 1562243227948 13 connected 8959-12287

127.0.0.1:6384>
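
The earlier "I can only replicate a master, not a slave" errors came from passing a slave's node ID; CLUSTER REPLICATE only accepts the ID of a master. After the two successful calls, the final listing can be tallied to confirm the new layout. A minimal sketch, assuming the listing above is pasted in as text; `summarize` is a hypothetical helper, not a Redis command.

```python
from collections import Counter

# Final CLUSTER NODES output, copied from the listing above.
FINAL = """\
d0784d64aaf858c121b7b09d66e3b95bf27c89bf 192.168.19.129:6383 master - 0 1562243234694 11 connected 13054-16383
bd1152b276859597c420b8d3903621582dc5f969 192.168.19.129:6379 master - 0 1562243232077 14 connected 0-4861 8192-8958 12288-13053
876e41b2021ca2cb751ceb8527ac4e526296e079 192.168.19.129:6385 slave e7ebe1420f928095d44ff3053611d93d0a7591d0 0 1562243233082 13 connected
18cbe13532f7e215c5e9a49e421fe3fd6d6087cc 192.168.19.129:6390 slave bd1152b276859597c420b8d3903621582dc5f969 0 1562243230667 14 connected
fdbe5f68a4a16fdf739ede4b0973e54c86930213 192.168.19.129:6381 slave e7ebe1420f928095d44ff3053611d93d0a7591d0 0 1562243231675 13 connected
e76c6cdcf05aca377cd9371b1536a590b401bd38 192.168.19.129:6391 slave 73622270351af85aaaae4015ca8067fa775e61f2 0 1562243233686 2 connected
73622270351af85aaaae4015ca8067fa775e61f2 192.168.19.129:6380 master - 0 1562243229661 2 connected 4862-8191
d0fce8ce53fba3dd62eeef26b2c7d06d4ba631df 192.168.19.129:6389 slave d0784d64aaf858c121b7b09d66e3b95bf27c89bf 0 1562243232680 11 connected
86de8e6c01a03de284501b39c10f3bb6d630605f 192.168.19.129:6384 myself,slave bd1152b276859597c420b8d3903621582dc5f969 0 0 9 connected
e7ebe1420f928095d44ff3053611d93d0a7591d0 192.168.19.129:6382 master - 0 1562243227948 13 connected 8959-12287
"""


def summarize(text: str):
    """Return (master addresses, Counter of slaves keyed by master address)."""
    rows = [line.split() for line in text.splitlines() if len(line.split()) >= 8]
    addr_by_id = {r[0]: r[1] for r in rows}  # node ID -> ip:port
    masters = []
    slaves_per_master = Counter()
    for r in rows:
        flags = r[2].split(",")
        if "master" in flags:
            masters.append(r[1])
        elif "slave" in flags:
            # Field 4 of a slave line is the node ID of its master.
            slaves_per_master[addr_by_id[r[3]]] += 1
    return masters, slaves_per_master


masters, slaves_per_master = summarize(FINAL)
print(len(masters), "masters,", sum(slaves_per_master.values()), "slaves")
for addr in masters:
    print(addr, "has", slaves_per_master[addr], "slave(s)")
```

On this listing the tally is 4 masters and 6 slaves: 6379 and 6382 each have two slaves, while 6380 and 6383 each have one.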
