A small exercise from the advanced Hadoop video course on imooc (慕课网).

Requirement: multiply two matrices.

Condition for multiplication: the number of columns of the left matrix must equal the number of rows of the right matrix.

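Each result element: multiply a row of the left matrix element by element with a column of the right matrix, then sum the products.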

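Result coordinates: the row is the row index of the left matrix, the column is the column index of the right matrix.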
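Written as a formula, assuming the left matrix $A$ is $m \times n$ and the right matrix $B$ is $n \times p$ (these symbols are introduced here only for illustration and do not appear in the original exercise), each element of the product $C$ is:

$$
C_{ij} = \sum_{k=1}^{n} A_{ik}\, B_{kj}, \qquad 1 \le i \le m,\quad 1 \le j \le p
$$

so $C$ has $m$ rows and $p$ columns.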

Matrix format: rowNumber tab column_value,column_value,column_value,column_value

1 1_1,2_2,3_-2,4_0

2 1_3,2_3,3_4,4_-3

3 1_-2,2_0,3_2,4_3

4 1_5,2_3,3_-1,4_2

5 1_4,2_2,3_0,4_2
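To make the text format concrete, here is a minimal sketch of how one such line could be parsed in plain Java. The class `MatrixRowParser` and the method `parseColumns` are hypothetical names used only for this illustration; they are not part of the original exercise.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper for illustration only; not part of the original exercise.
final class MatrixRowParser {

    /** Parses "1_1,2_2,3_-2,4_0" into a column -> value map. */
    static Map<Integer, Integer> parseColumns(String columns) {
        Map<Integer, Integer> result = new TreeMap<>();
        for (String pair : columns.split(",")) {
            // Split on the first '_' only: left part is the column, right part the value.
            String[] parts = pair.split("_", 2);
            result.put(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]));
        }
        return result;
    }

    public static void main(String[] args) {
        String line = "1\t1_1,2_2,3_-2,4_0"; // row number, tab, column_value pairs
        String[] rowAndColumns = line.split("\t");
        int row = Integer.parseInt(rowAndColumns[0]);
        System.out.println(row + " -> " + parseColumns(rowAndColumns[1]));
        // prints: 1 -> {1=1, 2=2, 3=-2, 4=0}
    }
}
```

The MapReduce code in this post keeps everything as strings instead; converting to integers is only needed at the point where values are multiplied.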

--------------------------------------------------------------------------------------------------

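Implementation: first transpose the right matrix (rows become columns and columns become rows), then multiply it with the left matrix.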
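The reason for transposing first is that every result element then becomes a product of two rows: row i of the left matrix and row j of the transposed right matrix. The following in-memory sketch shows the idea on small dense arrays. It is only an illustration with hypothetical names (`TransposeMultiply`, `transpose`, `multiply`), not the MapReduce implementation itself.

```java
// Hypothetical in-memory illustration; not part of the original MapReduce code.
final class TransposeMultiply {

    static int[][] transpose(int[][] m) {
        int[][] t = new int[m[0].length][m.length];
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[0].length; j++) {
                t[j][i] = m[i][j];
            }
        }
        return t;
    }

    /** Multiplies left (m x n) by right (n x p) by pairing rows of left with rows of right transposed. */
    static int[][] multiply(int[][] left, int[][] right) {
        int[][] rightT = transpose(right); // p x n
        int[][] product = new int[left.length][rightT.length];
        for (int i = 0; i < left.length; i++) {
            for (int j = 0; j < rightT.length; j++) {
                int sum = 0;
                for (int k = 0; k < left[i].length; k++) {
                    sum += left[i][k] * rightT[j][k]; // row i of left times row j of rightT
                }
                product[i][j] = sum;
            }
        }
        return product;
    }

    public static void main(String[] args) {
        int[][] c = multiply(new int[][]{{1, 2}, {3, 4}}, new int[][]{{5, 6}, {7, 8}});
        System.out.println(java.util.Arrays.deepToString(c)); // prints [[19, 22], [43, 50]]
    }
}
```

In the MapReduce version, each mapper sees one row of the left matrix at a time, so having the right matrix available row by row (after transposition) lets it compute a full output row without any further grouping.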

1. Transpose the right matrix

Mapper1.java

package step1;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class Mapper1 extends Mapper<LongWritable, Text, Text, Text> {

    private Text outKey = new Text();
    private Text outValue = new Text();

    /**
     * key: byte offset of the line in the input file (unused)
     * value: one matrix row as text, e.g. 1 tab 1_0,2_3,3_-1,4_2,5_-3
     *
     * Turns the row number into a column number and each column number into a row number.
     */
    @Override
    protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        String[] rowAndLine = value.toString().split("\t");
        // Row number of the matrix
        String row = rowAndLine[0];
        // e.g. ["1_0","2_3","3_-1","4_2","5_-3"]
        String[] lines = rowAndLine[1].split(",");
        for (String line : lines) {
            String[] columnAndValue = line.split("_");
            String column = columnAndValue[0];
            String valueStr = columnAndValue[1];
            // key: column number, value: rowNumber_value
            outKey.set(column);
            outValue.set(row + "_" + valueStr);
            context.write(outKey, outValue);
        }
    }
}

Reducer1.java

package step1;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class Reducer1 extends Reducer<Text, Text, Text, Text> {

    private Text outKey = new Text();
    private Text outValue = new Text();

    // key: column number, values: [rowNumber_value, rowNumber_value, rowNumber_value, ...]
    @Override
    protected void reduce(Text key, Iterable<Text> values, Reducer<Text, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        StringBuilder sb = new StringBuilder();
        for (Text text : values) {
            // text: rowNumber_value; join the entries with commas, without a trailing comma
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(text.toString());
        }
        outKey.set(key);
        outValue.set(sb.toString());
        context.write(outKey, outValue);
    }
}
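To check what step 1 should produce without starting a cluster, the map and reduce logic can be simulated locally. This is a rough sketch with hypothetical names (`LocalTranspose`, `transpose`) that uses no Hadoop classes. One difference to keep in mind: this simulation preserves the input order of the values, whereas Hadoop does not guarantee the order in which a reducer receives the values of a key.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local simulation of step 1; no Hadoop classes are involved.
final class LocalTranspose {

    static List<String> transpose(List<String> rows) {
        // Plays the role of the shuffle: groups entries by column number.
        // Keys stay strings, so the TreeMap sorts them lexicographically.
        Map<String, StringBuilder> grouped = new TreeMap<>();
        for (String line : rows) {
            String[] rowAndColumns = line.split("\t");
            for (String pair : rowAndColumns[1].split(",")) {
                String[] columnAndValue = pair.split("_", 2);
                // "Map" side: emit (column, row_value)
                StringBuilder sb = grouped.get(columnAndValue[0]);
                if (sb == null) {
                    sb = new StringBuilder();
                    grouped.put(columnAndValue[0], sb);
                }
                // "Reduce" side: join the values of one key with commas
                if (sb.length() > 0) {
                    sb.append(',');
                }
                sb.append(rowAndColumns[0]).append('_').append(columnAndValue[1]);
            }
        }
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, StringBuilder> e : grouped.entrySet()) {
            out.add(e.getKey() + "\t" + e.getValue());
        }
        return out;
    }

    public static void main(String[] args) {
        // The sample matrix from the beginning of the post, used purely as example input.
        List<String> rows = Arrays.asList(
                "1\t1_1,2_2,3_-2,4_0",
                "2\t1_3,2_3,3_4,4_-3",
                "3\t1_-2,2_0,3_2,4_3",
                "4\t1_5,2_3,3_-1,4_2",
                "5\t1_4,2_2,3_0,4_2");
        for (String line : transpose(rows)) {
            System.out.println(line);
        }
    }
}
```

For the sample input, the first output line is `1`, a tab, then `1_1,2_3,3_-2,4_5,5_4`: the first column of the input has become the first row.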

MR1.java (contains the main function; note that the project must be packaged as a jar and placed at D:\jar\test2.jar)

package step1;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class MR1 {

    // Input file path, relative to the HDFS address
    private static String inPath = "/matrix/step1_input/matrix2.txt";
    // Output directory, relative to the HDFS address
    private static String outPath = "/matrix/step1_output";
    // HDFS address
    private static String hdfs = "hdfs://192.168.77.128:9000";

    public int run() {
        try {
            // Create the job configuration
            Configuration conf = new Configuration();
            // Set the HDFS address
            //conf.set("fs.defaultFS", hdfs);
            conf.set("mapred.jar", "D:\\jar\\test2.jar");
            // Create a job instance
            Job job = Job.getInstance(conf, "step1");
            // Set the job's main class
            job.setJarByClass(MR1.class);
            // Set the job's Mapper and Reducer classes
            job.setMapperClass(Mapper1.class);
            job.setReducerClass(Reducer1.class);
            // Set the output key/value types (they also apply to the Mapper output,
            // because no separate map output types are configured)
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);
            // Set the input and output paths
            Path inputPath = new Path(hdfs + inPath);
            // Resolve the file system from the hdfs:// path itself, since fs.defaultFS is not set above
            FileSystem fs = inputPath.getFileSystem(conf);
            if (fs.exists(inputPath)) {
                FileInputFormat.addInputPath(job, inputPath);
            }
            Path outputPath = new Path(hdfs + outPath);
            // Delete output left over from a previous run; the job fails if the directory exists
            fs.delete(outputPath, true);
            FileOutputFormat.setOutputPath(job, outputPath);
            return job.waitForCompletion(true) ? 1 : -1;
        } catch (IOException e) {
            e.printStackTrace();
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        return -1;
    }

    public static void main(String[] args) {
        int result = new MR1().run();
        if (result == 1) {
            System.out.println("step1 succeeded...");
        } else if (result == -1) {
            System.out.println("step1 failed...");
        }
    }
}

2. Multiply the matrices

Mapper2.java

package step2;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.filecache.DistributedCache;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class Mapper2 extends Mapper<LongWritable, Text, Text, Text> {

    private Text outKey = new Text();
    private Text outValue = new Text();
    private List<String> cacheList = new ArrayList<String>();

    @Override
    protected void setup(Context context)
            throws IOException, InterruptedException {
        URI[] uris = DistributedCache.getCacheFiles(context.getConfiguration());
        // The cache URI already carries the hdfs:// address, so it selects the file system
        FileSystem fs = FileSystem.get(uris[0], context.getConfiguration());
        FSDataInputStream in = fs.open(new Path(uris[0].getPath()));
        // Read the right matrix (already transposed) from the distributed cache into cacheList.
        // Each line has the format: row tab column_value,column_value,column_value,column_value
        BufferedReader br = new BufferedReader(new InputStreamReader(in));
        try {
            String line;
            while ((line = br.readLine()) != null) {
                cacheList.add(line);
            }
        } finally {
            // Closing the reader also closes the underlying stream
            br.close();
        }
    }

    /**
     * key: byte offset of the line in the input file (unused)
     * value: row tab column_value,column_value,column_value,column_value
     */
    @Override
    protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        String[] rowAndColumns1 = value.toString().split("\t");
        // Row number of the left matrix
        String row_matrix1 = rowAndColumns1[0];
        // column_value entries of that row
        String[] column_value_array_matrix1 = rowAndColumns1[1].split(",");

        for (String line : cacheList) {
            // line is one row of the transposed right matrix
            // Format: row tab column_value,column_value,column_value,column_value
            String[] rowAndColumns2 = line.split("\t");
            String row_matrix2 = rowAndColumns2[0];
            String[] column_value_array_matrix2 = rowAndColumns2[1].split(",");

            // Product of the two rows
            int result = 0;
            // Iterate over every column of the current left-matrix row
            for (String column_value_matrix1 : column_value_array_matrix1) {
                String column_matrix1 = column_value_matrix1.split("_")[0];
                String value_matrix1 = column_value_matrix1.split("_")[1];
                // Find the entry with the same column number in the right-matrix row
                for (String column_value_matrix2 : column_value_array_matrix2) {
                    if (column_value_matrix2.startsWith(column_matrix1 + "_")) {
                        String value_matrix2 = column_value_matrix2.split("_")[1];
                        // Multiply the two values and accumulate
                        result += Integer.parseInt(value_matrix1) * Integer.parseInt(value_matrix2);
                    }
                }
            }
            // result is one element of the product matrix.
            // Row: row_matrix1, column: row_matrix2 (because the right matrix is already transposed)
            outKey.set(row_matrix1);
            outValue.set(row_matrix2 + "_" + result);
            // Output format: key = row, value = column_value
            context.write(outKey, outValue);
        }
    }
}
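The two nested loops in `map` compare every entry of the left row with every entry of the right row. One possible variation, sketched below outside of Hadoop, first loads the right row into a `HashMap` so that each left entry needs only one lookup. The class `SparseRowDot` and its methods are hypothetical names for this illustration and are not part of the original code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper illustrating the row-times-row product; not part of the original code.
final class SparseRowDot {

    /** Parses "1_3,2_3" into a column -> value map. */
    static Map<String, Integer> toMap(String columns) {
        Map<String, Integer> m = new HashMap<>();
        for (String pair : columns.split(",")) {
            String[] columnAndValue = pair.split("_", 2);
            m.put(columnAndValue[0], Integer.parseInt(columnAndValue[1]));
        }
        return m;
    }

    /** Sums left[k] * right[k] over every column k that appears in both rows. */
    static int dot(String leftColumns, String rightColumns) {
        Map<String, Integer> right = toMap(rightColumns);
        int sum = 0;
        for (String pair : leftColumns.split(",")) {
            String[] columnAndValue = pair.split("_", 2);
            Integer rightValue = right.get(columnAndValue[0]);
            if (rightValue != null) {
                sum += Integer.parseInt(columnAndValue[1]) * rightValue;
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        // 1*3 + 2*3 + (-2)*4 + 0*(-3) = 1
        System.out.println(dot("1_1,2_2,3_-2,4_0", "1_3,2_3,3_4,4_-3")); // prints 1
    }
}
```

For the small matrices in this exercise the difference is negligible; the lookup version mainly pays off when rows are long.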

Reducer2.java

package step2;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class Reducer2 extends Reducer<Text, Text, Text, Text> {

    private Text outKey = new Text();
    private Text outValue = new Text();

    // key: row number, values: [column_value, column_value, ...]
    @Override
    protected void reduce(Text key, Iterable<Text> values, Reducer<Text, Text, Text, Text>.Context context)
            throws IOException, InterruptedException {
        StringBuilder sb = new StringBuilder();
        for (Text value : values) {
            // Join the entries with commas, without a trailing comma
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(value.toString());
        }
        // outKey: row number, outValue: column_value,column_value,column_value,column_value
        outKey.set(key);
        outValue.set(sb.toString());
        context.write(outKey, outValue);
    }
}
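Because Hadoop does not guarantee the order in which a reducer receives the values of one key, the column_value entries of an output row may not be in ascending column order. If ordered rows are wanted, the joined string could be sorted by column number before it is written. The sketch below shows one way to do that in plain Java; `ColumnSorter` and `sortByColumn` are hypothetical names and this step is not part of the original exercise.

```java
import java.util.Arrays;
import java.util.Comparator;

// Hypothetical post-processing helper; not part of the original code.
final class ColumnSorter {

    /** Extracts the column number from an entry such as "10_2". */
    private static int columnOf(String pair) {
        return Integer.parseInt(pair.substring(0, pair.indexOf('_')));
    }

    /** Sorts "3_7,1_-4,10_2" numerically by column number. */
    static String sortByColumn(String columns) {
        String[] pairs = columns.split(",");
        Arrays.sort(pairs, Comparator.comparingInt(ColumnSorter::columnOf));
        return String.join(",", pairs);
    }

    public static void main(String[] args) {
        System.out.println(sortByColumn("3_7,1_-4,10_2,2_0")); // prints 1_-4,2_0,3_7,10_2
    }
}
```

The sort is numeric on purpose: a plain string sort would place column 10 before column 2.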

MR2.java

package step2;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.filecache.DistributedCache;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class MR2 {

    // Input file path, relative to the HDFS address
    private static String inPath = "/matrix/step2_input/matrix1.txt";
    // Output directory, relative to the HDFS address
    private static String outPath = "/matrix/output";
    // The transposed matrix produced by step 1, used as the distributed cache file
    private static String cache = "/matrix/step1_output/part-r-00000";
    // HDFS address
    private static String hdfs = "hdfs://192.168.77.128:9000";

    public int run() {
        try {
            // Create the job configuration
            Configuration conf = new Configuration();
            // Set the HDFS address
            //conf.set("fs.defaultFS", hdfs);
            conf.set("mapred.jar", "D:\\jar\\test2.jar");
            // Add the distributed cache file. This must happen before the Job is created,
            // because the Job works on its own copy of the configuration.
            DistributedCache.addCacheFile(new URI(hdfs + cache), conf);
            // Create a job instance
            Job job = Job.getInstance(conf, "step2");
            // Set the job's main class
            job.setJarByClass(MR2.class);
            // Set the job's Mapper and Reducer classes
            job.setMapperClass(Mapper2.class);
            job.setReducerClass(Reducer2.class);
            // Set the output key/value types (they also apply to the Mapper output,
            // because no separate map output types are configured)
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);
            // Set the input and output paths
            Path inputPath = new Path(hdfs + inPath);
            // Resolve the file system from the hdfs:// path itself, since fs.defaultFS is not set above
            FileSystem fs = inputPath.getFileSystem(conf);
            if (fs.exists(inputPath)) {
                FileInputFormat.addInputPath(job, inputPath);
            }
            Path outputPath = new Path(hdfs + outPath);
            // Delete output left over from a previous run; the job fails if the directory exists
            fs.delete(outputPath, true);
            FileOutputFormat.setOutputPath(job, outputPath);
            return job.waitForCompletion(true) ? 1 : -1;
        } catch (IOException e) {
            e.printStackTrace();
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
        } catch (InterruptedException e) {
            e.printStackTrace();
        } catch (URISyntaxException e) {
            e.printStackTrace();
        }
        return -1;
    }

    public static void main(String[] args) {
        int result = new MR2().run();
        if (result == 1) {
            System.out.println("step2 succeeded...");
        } else if (result == -1) {
            System.out.println("step2 failed...");
        }
    }
}

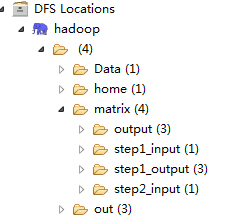

Screenshot of the HDFS directory

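Troubleshooting:

1. Running Hadoop 1.x locally on Windows runs into a file-permission problem and reports the following error:

Exception in thread "main" java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Administrator\mapred\staging\Administrator-519341271\.staging to 0700

The fix is to modify org.apache.hadoop.fs.FileUtil and recompile it. The steps, in brief:

1) Create a new Java project in Eclipse.

2) Import all Hadoop-related jars into the project.

3) Copy src/core/org/apache/hadoop/fs/FileUtil.java from the Hadoop source code and paste it into the src directory of the Eclipse project.

4) Find the following part and comment out the code inside the checkReturnValue method: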

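private static void checkReturnValue(boolean rv, File p,
                                     FsPermission permission
                                     ) throws IOException {
    /*
    //win7 connect to linux hadoop
    if (!rv) {
      throw new IOException("Failed to set permissions of path: " + p +
                            " to " +
                            String.format("%04o", permission.toShort()));
    }
    */
}

5) In the project's output directory, find the compiled class files. There are two of them, because FileUtil.java contains an inner class.

6) Add these class files to the corresponding directory inside hadoop-core-1.2.1.jar, overwriting the original files.

7) Copy the updated hadoop-core-1.2.1.jar to the Hadoop cluster, overwriting the existing file, and restart the cluster.

8) Add the updated hadoop-core-1.2.1.jar to the project's dependencies. If you use Maven, you may need to overwrite the corresponding file in the Maven repository.

9) Run the program. Everything should now work.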

===============================================

If you cannot compile it yourself, you can download a prebuilt one:

http://download.csdn.net/detail/echoqun/6198467#comment
