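---------------------------------DRBD Installation, Configuration, and Testing--------------------------------

I. Preparation

1. Edit /etc/hosts on node1 and node2 so that it contains:

192.168.10.22 node1.wsh.com node1

192.168.10.23 node2.wsh.com node2

2. Set the hostname on each node so that it survives a reboot

node1 # sed -i 's@\(HOSTNAME=\).*@\1node1.wsh.com@g' /etc/sysconfig/network

node2 # sed -i 's@\(HOSTNAME=\).*@\1node2.wsh.com@g' /etc/sysconfig/network

3. Set up mutual SSH trust (key-based login) between the two nodes

4. Synchronize the clocks so that both nodes keep the same time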
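
The name resolution set up in step 1 can be sanity-checked before moving on. The following is a minimal sketch and not part of the original procedure: `lookup_host` is a hypothetical helper that reads a hosts-format file and prints the address mapped to a name. It runs against a temporary copy of the two entries above so that it works anywhere; on the real nodes it would be pointed at /etc/hosts instead.

```shell
#!/bin/sh
# lookup_host FILE NAME: print the first address whose line lists NAME
# as hostname or alias (hypothetical helper, for illustration only).
lookup_host() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }            # skip comment lines
        { for (i = 2; i <= NF; i++)
              if ($i == name) { print $1; exit } }
    ' "$1"
}

# Demo data: the two entries from step 1, written to a temporary file.
hosts_file=$(mktemp)
cat > "$hosts_file" <<'EOF'
192.168.10.22 node1.wsh.com node1
192.168.10.23 node2.wsh.com node2
EOF

node1_ip=$(lookup_host "$hosts_file" node1)
node2_ip=$(lookup_host "$hosts_file" node2.wsh.com)
echo "node1 -> $node1_ip"
echo "node2.wsh.com -> $node2_ip"
rm -f "$hosts_file"
```

Both the short alias and the fully qualified name should resolve, because the DRBD resource file uses the full names while the scp commands use the short ones.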

II. Install DRBD

1. Install the software

node1, node2 # tar xf drbd-8.4.3.tar.gz

node1, node2 # cd drbd-8.4.3

node1, node2 # ./configure --prefix=/usr/local/drbd --sysconfdir=/etc --libdir=/usr/lib --with-km --with-pacemaker

node1, node2 # make && make install

2. Load the drbd kernel module

node1, node2 # modprobe drbd

node1, node2 # lsmod | grep drbd

drbd 325626 0

libcrc32c 1246 1 drbd

Make sure the module is loaded automatically at boot:

node1, node2 # echo "modprobe drbd" > /etc/sysconfig/modules/drbd.modules

node1, node2 # chmod 755 /etc/sysconfig/modules/drbd.modules

3. Symlink /usr/local/drbd/lib/drbd to /usr/lib/drbd

node1, node2 # ln -sv /usr/local/drbd/lib/drbd /usr/lib/drbd

4. Add /usr/local/drbd/sbin to PATH (single quotes keep $PATH from being expanded when the file is written)

node1, node2 # echo 'export PATH=/usr/local/drbd/sbin:$PATH' > /etc/profile.d/drbd.sh

III. Configure DRBD

On node1:

1. Edit the global_common.conf configuration file as follows:

node1 # vim /etc/drbd.d/global_common.conf

global {

usage-count no;

}

common {

handlers {

pri-on-incon-degr

pri-lost-after-sb

local-io-error

}

disk {

on-io-error detach;

}

net {

protocol C;

cram-hmac-alg "sha1";

shared-secret "e1680f714c4e6d71";

}

syncer {

rate 1000M;

}

}

2. Define the DRBD resource in mystore.res as follows:

node1 # vim /etc/drbd.d/mystore.res

resource mystore {

on node1.wsh.com {

device /dev/drbd0;

disk /dev/sdb1;

address 192.168.10.22:7789;

meta-disk internal;

}

on node2.wsh.com {

device /dev/drbd0;

disk /dev/sdb1;

address 192.168.10.23:7789;

meta-disk internal;

}

}

Copy global_common.conf and mystore.res to /etc/drbd.d on node2:

node1 # scp /etc/drbd.d/* node2:/etc/drbd.d/

IV. Initialize the DRBD Resource and Start the Service

1. Initialize the resource; run this on both node1 and node2:

node1, node2 # drbdadm create-md mystore

Writing meta data...

initializing activity log

NOT initializing bitmap

New drbd meta data block successfully created.

2. Start the drbd service on node1 and node2:

node1, node2 # service drbd start

3. Check the startup status

# drbd-overview

0:mystore/0 Connected Secondary/Secondary Inconsistent/Inconsistent C r-----

# cat /proc/drbd

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@node1.wsh.com, 2015-08-01 14:04:51

0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----

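ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:4200796

4. Promote node1 to Primary

The status above shows both nodes as Secondary. Next, promote node1 to Primary; the command must be run on the node that is to become Primary. The first promotion requires --force, because both sides are still Inconsistent:

node1 # drbdadm primary --force mystore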

node1 # drbd-overview

0:mystore/0 SyncSource Primary/Secondary UpToDate/Inconsistent C r---n-

[===>................] sync'ed: 22.5% (3184/4100)M

Data is now being synchronized. Wait for the synchronization to finish, then check the status again:

node1 # drbd-overview

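0:mystore/0 Connected Primary/Secondary UpToDate/UpToDate C r-----

V. Create the File System

1. Format the DRBD device

A DRBD device can only be mounted on the Primary node, so the file system can be created only after a Primary has been designated:

node1 # mke2fs -t ext4 /dev/drbd0

2. Create /mydata and mount /dev/drbd0 on it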

node1, node2 # mkdir -v /mydata

node1 # mount /dev/drbd0 /mydata

3. Test

node1 # cp /etc/inittab /mydata

node1 # sed -i 's@\(id:\)3\(:initdefault:\)@\15\2@g' /mydata/inittab

node1 # drbd-overview

0:mystore/0 Connected Primary/Secondary UpToDate/UpToDate C r----- /mydata ext4 4.0G 137M 3.7G 4%

VI. Switch the Primary and Secondary Nodes

In DRBD's Primary/Secondary model, only one node can be Primary at any given time. To swap the roles of the two nodes, the current Primary must first be demoted to Secondary; only then can the other node be promoted to Primary:

Demote node1:

node1 # umount /mydata

node1 # drbdadm secondary mystore

node1 # drbd-overview

0:mystore/0 Connected Secondary/Secondary UpToDate/UpToDate C r-----
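
Before promoting the other node, a script can confirm from the status line that the pair is connected, that neither side is still Primary, and that both disks are UpToDate. The following is a minimal sketch under the assumption that the field order matches the drbd-overview output shown in this article; `overview_field` is a hypothetical helper, and the sample line is hard-coded so that the block runs without DRBD installed.

```shell
#!/bin/sh
# overview_field LINE N: print the N-th whitespace-separated field of a
# drbd-overview status line (hypothetical helper, for illustration only).
overview_field() {
    printf '%s\n' "$1" | awk -v n="$2" '{ print $n }'
}

# Sample line copied from the output above. On a real node this could be
# obtained with something like: line=$(drbd-overview | grep mystore)
line='0:mystore/0 Connected Secondary/Secondary UpToDate/UpToDate C r-----'

cs=$(overview_field "$line" 2)   # connection state
ro=$(overview_field "$line" 3)   # roles, local/peer
ds=$(overview_field "$line" 4)   # disk states, local/peer

if [ "$cs" = "Connected" ] &&
   [ "$ro" = "Secondary/Secondary" ] &&
   [ "$ds" = "UpToDate/UpToDate" ]; then
    verdict="safe to promote the peer"
else
    verdict="do not promote yet"
fi
echo "$verdict"
```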

Promote node2:

node2 # drbdadm primary mystore

node2 # mount /dev/drbd0 /mydata

node2 # tail -n1 /mydata/inittab

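id:5:initdefault:

Both the file and its contents are correct.

# Delete the test file

node2 # rm -f /mydata/inittab

# Create the data directory to serve as the MySQL data directory

node2 # mkdir /mydata/data

-----------------------------------DRBD Complete-----------------------------------------

---------------------------------MySQL Installation and Configuration-----------------------------------

I. Compile and Install MySQL

1. Create the user and group that run MySQL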

node1, node2 # groupadd -r -g 306 mysql

node1, node2 # useradd -r -u 306 -g mysql mysql

2. Compile and install

node1, node2 # yum -y install cmake

node1, node2 # tar xf mysql-5.6.26.tar.gz

node1, node2 # cd mysql-5.6.26

node1, node2 # cmake . -DCMAKE_INSTALL_PREFIX=/usr/local/mysql \

> -DMYSQL_DATADIR=/mydata/data \

> -DSYSCONFDIR=/etc \

> -DWITH_INNOBASE_STORAGE_ENGINE=1 \

> -DWITH_ARCHIVE_STORAGE_ENGINE=1 \

> -DWITH_BLACKHOLE_STORAGE_ENGINE=1 \

> -DWITH_READLINE=1 \

> -DWITH_SSL=system \

> -DWITH_ZLIB=system \

> -DWITH_LIBWRAP=0 \

> -DMYSQL_UNIX_ADDR=/tmp/mysql.sock \

> -DDEFAULT_CHARSET=utf8 \

> -DDEFAULT_COLLATION=utf8_general_ci

node1, node2 # make && make install

II. Configure and Start MySQL

1. Configure MySQL

node1, node2 # cd /usr/local/mysql/

node1, node2 # chown -R mysql.mysql .

node1, node2 # cp support-files/my-default.cnf /etc/my.cnf

node1, node2 # sed -i '/^\[mysqld\]/a \datadir=\/mydata\/data' /etc/my.cnf

node1, node2 # sed -i '/^\[mysqld\]/a \socket=\/tmp\/mysql.sock' /etc/my.cnf

node1, node2 # echo -e "[mysql]\nsocket=/tmp/mysql.sock" >> /etc/my.cnf

node1, node2 # cp support-files/mysql.server /etc/init.d/mysqld

node1, node2 # chmod +x /etc/init.d/mysqld

node1, node2 # echo 'export PATH=/usr/local/mysql/bin:$PATH' > /etc/profile.d/mysql.sh

node1, node2 # ln -sv /usr/local/mysql/include /usr/include/mysql

node1, node2 # echo '/usr/local/mysql/lib' > /etc/ld.so.conf.d/mysql.conf

2. Initialize MySQL

The DRBD Primary is currently node2, where /dev/drbd0 is mounted at /mydata, so initialize MySQL on node2:

node2 # chown -R mysql.mysql /mydata/data

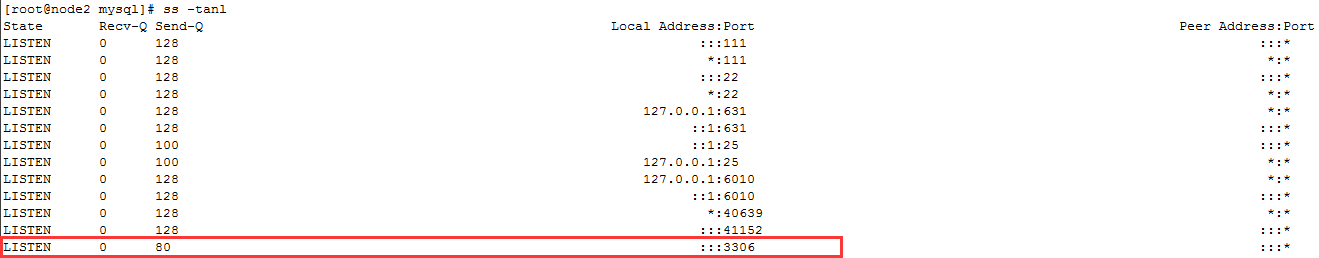

node2 # scripts/mysql_install_db --user=mysql --datadir=/mydata/data/

Try starting MySQL on node2:

node2 # service mysqld start

Starting MySQL.................... [ OK ]

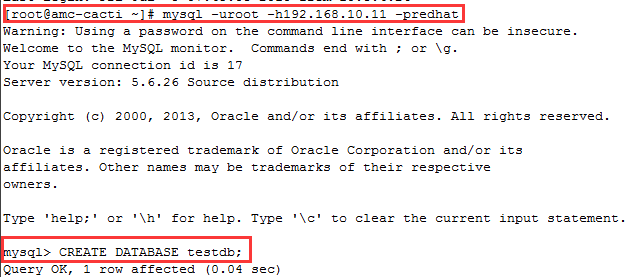

用户登录授权:

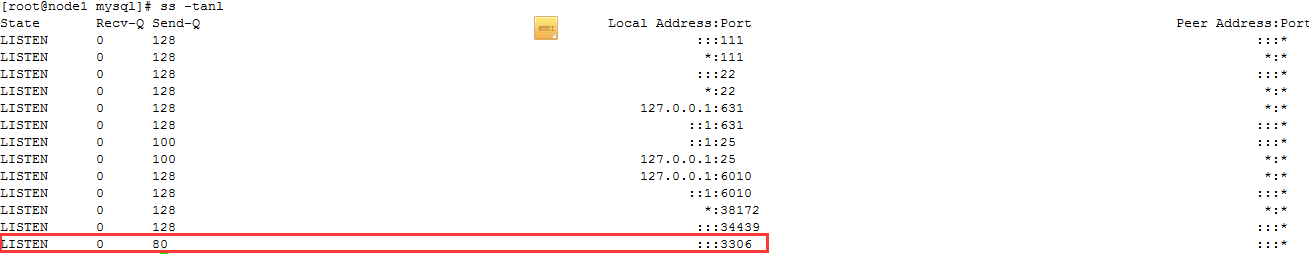

mysql>GRANT ALL ON *.* TO 'root'@'%' IDENTIFIED BY 'redhat';3.切换node1为Primary,并启动mysql

node2 # service mysqld stop

Shutting down MySQL.. [ OK ]

node2 # umount /mydata

node2 # drbdadm secondary mystore

node1 # drbdadm primary mystore

node1 # mount /dev/drbd0 /mydata/

node1 # service mysqld start

Starting MySQL.......... [ OK ]
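
The manual switchover above depends on the order of the commands: MySQL has to release the file system before it can be unmounted, and the old Primary has to be demoted before the new one is promoted. The following is a dry-run sketch of that sequence, not a tool from the original setup. `run_on` and `switchover` are hypothetical helpers; with `DRY_RUN=1` (the default here) they only print what would be executed, and otherwise they assume the SSH trust from the preparation section.

```shell
#!/bin/sh
# DRY_RUN=1 prints the commands instead of executing them (assumed default).
DRY_RUN=${DRY_RUN:-1}

# run_on HOST CMD...: print or execute CMD on HOST (hypothetical helper).
run_on() {
    host=$1; shift
    if [ "$DRY_RUN" = "1" ]; then
        echo "$host # $*"
    else
        ssh "$host" "$@" || exit 1    # stop at the first failing step
    fi
}

# switchover OLD NEW: move the mystore resource and MySQL from OLD to NEW.
switchover() {
    old=$1
    new=$2
    run_on "$old" service mysqld stop        # release the files on /mydata
    run_on "$old" umount /mydata             # unmount before demoting
    run_on "$old" drbdadm secondary mystore  # demote the old Primary
    run_on "$new" drbdadm primary mystore    # only now promote the peer
    run_on "$new" mount /dev/drbd0 /mydata
    run_on "$new" service mysqld start
}

plan=$(switchover node2 node1)
echo "$plan"
```

Printing the plan first makes it easy to compare it with the manual steps before running anything for real.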

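III. Stop the Services and Disable Them at Boot

Starting and stopping drbd and mysqld must be left to the cluster, so both services have to be stopped and disabled at boot by hand: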

node1, node2 # chkconfig --add drbd

node1, node2 # chkconfig drbd off

node1, node2 # service drbd stop

node1, node2 # chkconfig --add mysqld

node1, node2 # chkconfig mysqld off

node1, node2 # service mysqld stop

------------------------------------MySQL Complete--------------------------------------

-------------------------------------Cluster Configuration-----------------------------------------

I. Install corosync, pacemaker, and crmsh

1. Install corosync and pacemaker

node1, node2 # yum -y install corosync pacemaker

2. Install crmsh

crmsh is only needed on the management node; here it is installed on both nodes:

node1, node2 # yum install python-pssh-2.3.1-4.2.x86_64.rpm pssh-2.3.1-4.2.x86_64.rpm crmsh-2.1-1.6.x86_64.rpm

II. Configure corosync

1. Edit the corosync configuration file

node1 # cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf

node1 # vim /etc/corosync/corosync.conf

compatibility: whitetank

totem {

version: 2

secauth: on

threads: 0

interface {

ringnumber: 0

bindnetaddr: 192.168.10.0

mcastaddr: 239.2.3.4

mcastport: 5405

ttl: 1

}

}

logging {

fileline: off

to_stderr: no

to_logfile: yes

logfile: /var/log/cluster/corosync.log

to_syslog: no

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

}

}

# Start pacemaker as a corosync plugin (this mode will no longer be supported in later releases)

service {

ver: 0

name: pacemaker

}

aisexec {

user: root

group: root

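}

2. Generate the authentication key used for communication between the nodes

node1 # corosync-keygen

This creates the authkey file automatically, with permissions 400.

3. Copy corosync.conf and authkey to /etc/corosync on node2, preserving the file permissions

node1 # scp -p /etc/corosync/{corosync.conf,authkey} node2:/etc/corosync

4. Start corosync on node1 and node2

node1, node2 # service corosync start

5. Check the status with crmsh

node1 # crm status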

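III. Configure Cluster Properties

1. Disable STONITH

node1 # crm configure property stonith-enabled=false

2. Ignore loss of quorum (a two-node cluster cannot keep quorum once one node fails)

node1 # crm configure property no-quorum-policy=ignore

3. Set the default resource stickiness to 100

node1 # crm configure rsc_defaults resource-stickiness=100

IV. Define Resources

1. Define the primitive resource: drbd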

node1 # crm configure

crm(live)configure# primitive mysqlstore ocf:linbit:drbd params drbd_resource=mystore op monitor role=Master interval=10s timeout=20s op monitor role=Slave interval=20s timeout=20s op start timeout=240s op stop timeout=100s on-fail=restart

2. Define drbd as a master/slave resource

node1 # crm configure

crm(live)configure# master ms_mysqlstore mysqlstore meta clone-max="2" clone-node-max="1" master-max="1" master-node-max="1" notify="true"

3. Define the primitive resource: VIP

node1 # crm configure

crm(live)configure# primitive VIP ocf:heartbeat:IPaddr params ip=192.168.10.11 op monitor interval=10s timeout=20s op start timeout=20s op stop timeout=20s on-fail=restart

4. Define the primitive resource: Filesystem

node1 # crm configure

crm(live)configure# primitive MYDATA ocf:heartbeat:Filesystem params device=/dev/drbd0 directory=/mydata fstype=ext4 op monitor interval=20s timeout=40s op start timeout=60s op stop timeout=60s on-fail=restart

5. Define the primitive resource: mysqld

node1 # crm configure

crm(live)configure# primitive MYSQLD lsb:mysqld op monitor interval=30s timeout=60s op start timeout=60s op stop timeout=60s on-fail=restart

V. Define Constraints

The VIP, MYDATA, and MYSQLD resources must run on the DRBD Primary node. Define a colocation constraint:

node1 # crm configure

All resources must be placed with the master instance of ms_mysqlstore (that is, the node on which DRBD has been promoted to Primary):

crm(live)configure# collocation ALL_with_ms_mysqlstore_master inf: MYSQLD MYDATA VIP ms_mysqlstore:Master

Resource start order:

MYDATA can be mounted only after ms_mysqlstore has been promoted to Master (DRBD promoted to Primary). Define an order constraint:

crm(live)configure# order ms_mysqlstore_master_before_VIP_before_MYDATA_before_MYSQLD inf: ms_mysqlstore:promote VIP:start MYDATA:start MYSQLD:start

Verify:

crm(live)configure# verify

If verification reports no problems, commit:

crm(live)configure# commit

VI. Check Status, Switch Roles, and Test

Put node1.wsh.com into standby:

node1 # crm node standby node1.wsh.com
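
After a node is put into standby, the resources need some time to move to the other node, so a failover test has to wait for the service to come back rather than check it immediately. The following is a minimal polling sketch and an assumption about how such a test could be scripted. `wait_for` is a hypothetical helper, and the demo uses a marker file in place of the real service so that the block runs without a cluster.

```shell
#!/bin/sh
# wait_for TIMEOUT CMD...: run CMD once per second until it succeeds or
# TIMEOUT seconds have passed (hypothetical helper, for illustration only).
wait_for() {
    timeout=$1; shift
    elapsed=0
    until "$@"; do
        [ "$elapsed" -ge "$timeout" ] && return 1
        sleep 1
        elapsed=$((elapsed + 1))
    done
    return 0
}

# Self-contained demo: the "service" is a marker file that appears after 2 s.
demo_dir=$(mktemp -d)
marker=$demo_dir/up
( sleep 2; : > "$marker" ) &

if wait_for 10 test -e "$marker"; then
    result="available"
else
    result="timed out"
fi
echo "$result"

wait                 # reap the background job
rm -rf "$demo_dir"
```

On the cluster, the polled command could be something like `wait_for 60 mysqladmin -h 192.168.10.11 -uroot -predhat ping`, using the VIP and the account granted earlier. Once the test is done, `crm node online node1.wsh.com` brings the node back into the cluster.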

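------------------------------------Cluster Configuration Complete--------------------------------------

This completes the entire configuration.

This article is only a set of study notes. If anything is missing or wrong, corrections are welcome. Thank you!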
