YARN Deployment and Usage

Pseudo-distributed YARN deployment (master/slave architecture)

Switch to the hadoop user

    [root@JD ~]# su - hadoop
    Last login: Sun Dec 1 15:09:50 CST 2019 on pts/0

Configure mapred-site.xml

Go to the Hadoop configuration directory:

    [hadoop@JD ~]$ cd app/hadoop/etc/hadoop

Create mapred-site.xml by copying the template:

    [hadoop@JD hadoop]$ cp mapred-site.xml.template mapred-site.xml

Edit the file so that MapReduce runs on YARN:

    [hadoop@JD hadoop]$ vi mapred-site.xml
    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
    </configuration>

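Configure yarn-site.xml

The web UIs need ports 38088 and 50070 opened in the firewall. The YARN web port is changed from its default (8088) to 38088 to make the node a less obvious target for cryptomining attacks.

    <configuration>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
        <property>
            <name>yarn.resourcemanager.webapp.address</name>
            <value>JD:38088</value>
        </property>
    </configuration>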

Start YARN

Start command:

    [hadoop@JD hadoop]$ start-yarn.sh
    starting yarn daemons
    starting resourcemanager, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.16.2/logs/yarn-hadoop-resourcemanager-JD.out
    JD: starting nodemanager, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.16.2/logs/yarn-hadoop-nodemanager-JD.out

Check the processes:

    [hadoop@JD hadoop]$ jps
    27616 NodeManager
    28004 Jps
    27512 ResourceManager

Check the listening port of the ResourceManager (pid 27512):

    [hadoop@JD hadoop]$ netstat -nlp |grep 27512
    (Not all processes could be identified, non-owned process info
     will not be shown, you would have to be root to see it all.)
    tcp6 0 0 192.168.0.3:38088 :::* LISTEN 27512/java
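Reading the jps output by eye works on a single node, but the same check can be scripted. The following is a minimal sketch, not part of Hadoop: `missing_daemons` is a hypothetical helper name, and it assumes jps prints one `<pid> <name>` pair per line as in the output above.

```shell
#!/bin/sh
# Hypothetical helper (not part of Hadoop): reads `jps` output on stdin and
# prints every expected daemon name that does not appear in it.
missing_daemons() {
    jps_out=$(cat)
    for name in "$@"; do
        # jps prints "<pid> <name>"; match the name column exactly.
        printf '%s\n' "$jps_out" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' ||
            printf '%s\n' "$name"
    done
}

# Usage on the node (prints nothing when both daemons are up):
#   jps | missing_daemons ResourceManager NodeManager
```

An empty result means every expected daemon was found; any name printed is a daemon that did not start, and its log under the logs directory is the next place to look.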

Access URL

JD:38088

Running the MapReduce example program on YARN

Locate the MapReduce examples jar

    [hadoop@JD hadoop]$ cd ../../
    [hadoop@JD hadoop]$ find ./ -name '*example*.jar'
    ./share/hadoop/mapreduce1/hadoop-examples-2.6.0-mr1-cdh5.16.2.jar
    ./share/hadoop/mapreduce2/sources/hadoop-mapreduce-examples-2.6.0-cdh5.16.2-test-sources.jar
    ./share/hadoop/mapreduce2/sources/hadoop-mapreduce-examples-2.6.0-cdh5.16.2-sources.jar
    ./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.16.2.jar

Run the wordcount program

A first trial run shows that wordcount needs an input path and an output path:

    [hadoop@JD hadoop]$ hadoop jar ./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.16.2.jar wordcount
    Usage: wordcount <in> [<in>...] <out>

Create the input file:

    [hadoop@JD hadoop]$ vi 1.log
    aaa bbb ccc aaa ccc ddd eee

Upload it to HDFS. Start HDFS first:

    [hadoop@JD hadoop]$ start-dfs.sh
    19/12/01 17:13:35 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Starting namenodes on [JD]
    JD: starting namenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.16.2/logs/hadoop-hadoop-namenode-JD.out
    JD: starting datanode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.16.2/logs/hadoop-hadoop-datanode-JD.out
    Starting secondary namenodes [JD]
    JD: starting secondarynamenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.16.2/logs/hadoop-hadoop-secondarynamenode-JD.out
    19/12/01 17:13:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Create the input directory, including its parents:

    [hadoop@JD hadoop]$ hadoop fs -mkdir -p /wordcount/input
    19/12/01 17:14:50 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Upload the local file and confirm it arrived:

    [hadoop@JD hadoop]$ hadoop fs -put 1.log /wordcount/input
    19/12/01 17:14:58 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    [hadoop@JD hadoop]$ hadoop fs -ls /wordcount/input
    19/12/01 17:15:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Found 1 items
    -rw-r--r-- 1 hadoop supergroup 29 2019-12-01 17:15 /wordcount/input/1.log

Run the job. The output directory must not exist beforehand, otherwise the job fails:

    [hadoop@JD hadoop]$ hadoop jar ./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.16.2.jar wordcount /wordcount/input /wordcount/output

List the result directory:

    [hadoop@JD hadoop]$ hadoop fs -ls /wordcount/output
    19/12/01 17:33:28 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Found 2 items
    -rw-r--r-- 1 hadoop supergroup 0 2019-12-01 17:24 /wordcount/output/_SUCCESS      # marker file, empty
    -rw-r--r-- 1 hadoop supergroup 30 2019-12-01 17:24 /wordcount/output/part-r-00000 # result file

View the result file:

    [hadoop@JD hadoop]$ hadoop fs -cat /wordcount/output/part-r-00000
    19/12/01 17:35:06 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    aaa 2
    bbb 1
    ccc 2
    ddd 1
    eee 1
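Two things in this step are easy to script: cross-checking the counts locally, and clearing a leftover output directory before a rerun. The sketch below is only an illustration. `local_wordcount` and `run_wordcount` are hypothetical helper names; the only Hadoop commands used are `hadoop fs -test -d`, `hadoop fs -rm -r` and `hadoop jar`. The local check assumes words are separated by whitespace, which is how the wordcount example tokenizes its input.

```shell
#!/bin/sh
# Local cross-check: count whitespace-separated words from stdin and print
# "word<TAB>count" lines sorted by word, the same layout as part-r-00000.
local_wordcount() {
    tr -s '[:space:]' '\n' | awk 'NF' | sort | uniq -c |
        awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Hypothetical wrapper: remove a leftover output directory, then run the job.
# Usage: run_wordcount <examples-jar> <hdfs-input> <hdfs-output>
run_wordcount() {
    jar=$1
    input=$2
    output=$3
    # `hadoop fs -test -d` exits 0 only when the path is an existing directory.
    if hadoop fs -test -d "$output"; then
        # This deletes the previous results; make sure they are not needed.
        hadoop fs -rm -r "$output" || return 1
    fi
    hadoop jar "$jar" wordcount "$input" "$output"
}
```

For example, `local_wordcount < 1.log` should print the same pairs as the result file, and `run_wordcount ./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.16.2.jar /wordcount/input /wordcount/output` can be repeated without the "output directory already exists" failure.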

HDFS: storage; the computation results are written back to HDFS

MR: the jar, which carries the computation logic

YARN: resource and job scheduling

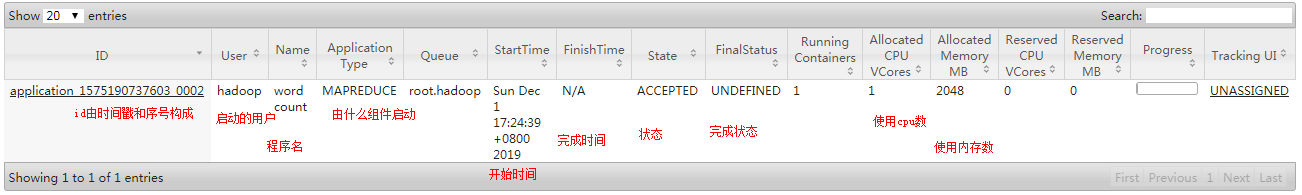

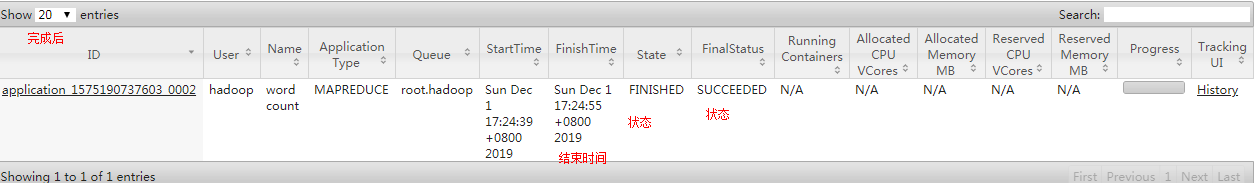

YARN web UI overview

Status while the job is running

Status after the job has finished

Big data components

Storage: HDFS (distributed file system), Hive, HBase, Kudu, Cassandra

Compute: MR, Hive SQL, Spark, Flink

Resource and job scheduling: YARN (the only one)

Plus Flume, Kafka, and others

Changing the machine's hostname

CentOS 7.x

Show the current hostname:

    [root@JD ~]# hostnamectl
       Static hostname: JD
             Icon name: computer-vm
               Chassis: vm
            Machine ID: 983e7d6ed0624a2499003862230af382
               Boot ID: cb9adb2e30cb470b96b891712496807a
        Virtualization: kvm
      Operating System: CentOS Linux 7 (Core)
           CPE OS Name: cpe:/o:centos:centos:7
                Kernel: Linux 3.10.0-327.el7.x86_64
          Architecture: x86-64

Show the command's help:

    [root@JD ~]# hostnamectl --help
    hostnamectl [OPTIONS...] COMMAND ...

    Query or change system hostname.

      -h --help              Show this help
         --version           Show package version
         --no-ask-password   Do not prompt for password
      -H --host=[USER@]HOST  Operate on remote host
      -M --machine=CONTAINER Operate on local container
         --transient         Only set transient hostname
         --static            Only set static hostname
         --pretty            Only set pretty hostname

    Commands:
      status                 Show current hostname settings
      set-hostname NAME      Set system hostname
      set-icon-name NAME     Set icon name for host
      set-chassis NAME       Set chassis type for host
      set-deployment NAME    Set deployment environment for host
      set-location NAME      Set location for host

Change the hostname:

    [root@JD ~]# hostnamectl set-hostname xxx

After the change succeeds, add the new name to the hosts file:

    [root@JD ~]# vi /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.0.3 xxx
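Hadoop's configuration refers to the machine by name (for example `JD:38088`), so a hostname without a matching hosts entry is a common source of startup failures. A minimal sketch of a check follows; `hosts_has_entry` is a hypothetical helper name, and it only inspects the file it is given, not DNS.

```shell
#!/bin/sh
# Hypothetical helper: succeed if <hosts-file> maps some address to <name>.
# Usage: hosts_has_entry /etc/hosts xxx
hosts_has_entry() {
    awk -v h="$2" '
        /^[ \t]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }
    ' "$1"
}

# Example: warn when the current hostname has no entry yet.
#   hosts_has_entry /etc/hosts "$(hostname)" || echo "no hosts entry for $(hostname)"
```

Only fields after the address column are compared, so an IP address that happens to equal the name is not counted as a match.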

Using jps properly

Where is jps located?

Location

    [hadoop@JD hadoop]$ which jps
    /usr/java/jdk1.8.0_45/bin/jps
    [hadoop@JD hadoop]$ jps --help
    illegal argument: --help
    usage: jps [-help]
           jps [-q] [-mlvV] [<hostid>]

    Definitions:
        <hostid>: <hostname>[:<port>]

jps -l (prints the fully qualified main class name, including the package)

    [hadoop@JD hadoop]$ jps -l
    27616 org.apache.hadoop.yarn.server.nodemanager.NodeManager
    30048 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode
    29733 org.apache.hadoop.hdfs.server.namenode.NameNode
    27512 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager
    2555 sun.tools.jps.Jps
    29884 org.apache.hadoop.hdfs.server.datanode.DataNode

Where are the corresponding process identifier files?

Switch to root and go to /tmp:

    [root@JD ~]# cd /tmp

The directories are named hsperfdata_xxx, where xxx is the user name:

    [root@JD tmp]# ll
    total 20
    drwxr-xr-x 2 hadoop hadoop 66 Dec 1 17:47 hsperfdata_hadoop
    drwxr-xr-x 2 root root 17 Dec 1 17:47 hsperfdata_root

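Purpose

jps looks up the pid and name of each Java process.

process information unavailable

A user who runs jps sees only the processes they own. root sees every Java process, but the ones owned by other users are shown as unavailable (process information unavailable).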

jps as root

    [root@JD tmp]# jps
    27616 -- process information unavailable
    30048 -- process information unavailable
    3842 jar
    29733 -- process information unavailable
    27512 -- process information unavailable
    29884 -- process information unavailable
    3117 Jps

jps as the hadoop user

    [hadoop@JD hadoop]$ jps
    27616 NodeManager
    30048 SecondaryNameNode
    29733 NameNode
    27512 ResourceManager
    3179 Jps
    29884 DataNode

Telling real processes from stale entries

jps may list a process that ps -ef | grep xxx cannot find. When the two disagree, trust ps.

Check:

    [root@JD hsperfdata_hadoop]# ps -ef|grep 31488
    root 5291 3912 0 21:32 pts/1 00:00:00 grep --color=auto 31488
    [root@JD hsperfdata_hadoop]#

The process does not exist (only the grep itself matched, so the filtered count is 0):

    [root@JD hsperfdata_hadoop]# ps -ef|grep 31488 | grep -v grep | wc -l
    0

The process exists:

    [root@JD hsperfdata_hadoop]# ps -ef|grep 2594 | grep -v grep | wc -l
    1
    [root@JD hsperfdata_hadoop]#

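Conclusion

The files that jps relies on do not affect starting or stopping a process, but they do affect what jps reports. In production, check processes with ps -ef | grep <process>.

Do the process files under /tmp/hsperfdata_xxx affect process startup?

Deleting a process file under /tmp/hsperfdata_xxx does not affect starting or stopping that process, but it does affect jps: once the file is deleted or moved, jps no longer lists the process.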
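The mismatch between jps and ps can also be detected directly, because each file in an hsperfdata directory is named after the pid it belongs to. The sketch below lists files that no longer have a live process behind them. `pid_alive` and `stale_hsperf_pids` are hypothetical helper names, and `kill -0` is used here in place of the `ps -ef | grep` check because it tests one exact pid instead of matching text anywhere in the ps output.

```shell
#!/bin/sh
# Succeeds when <pid> exists and the current user is allowed to signal it.
# `kill -0` sends no signal; run it as root or as the owner of the process.
pid_alive() {
    kill -0 "$1" 2>/dev/null
}

# Hypothetical helper: print pids that still have a file in an hsperfdata
# directory (for example /tmp/hsperfdata_hadoop) but no live process.
stale_hsperf_pids() {
    for f in "$1"/*; do
        [ -f "$f" ] || continue
        pid=${f##*/}
        case $pid in
            '' | *[!0-9]*) continue ;;   # skip anything that is not a pid
        esac
        pid_alive "$pid" || printf '%s\n' "$pid"
    done
}

# Usage:
#   stale_hsperf_pids /tmp/hsperfdata_hadoop
```

Any pid printed is an entry that jps may still show even though the process is gone.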

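Linux mechanism: the OOM killer

When a process uses too much memory, the kernel protects the machine from hanging by killing a process, typically the one using the most memory. Use top to check memory usage. When Linux kills a process this way, the process's own log file records nothing; look in the Linux system log instead.

So when a process dies, go to its log first and look for errors. If there is an error, analyze it. If there is none, suspect the OOM killer and check the system log: cat /var/log/messages | grep oom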
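A slightly broader search than `grep oom` is sketched below, since the kernel usually writes several related lines ("invoked oom-killer", "Out of memory", "Killed process"). `find_oom_kills` is a hypothetical helper name, and the default path assumes a CentOS-style /var/log/messages, which normally requires root to read.

```shell
#!/bin/sh
# Hypothetical helper: print kernel OOM-killer lines from a log file.
# Defaults to /var/log/messages; pass another file as the first argument.
find_oom_kills() {
    grep -iE 'out of memory|oom-killer|killed process' "${1:-/var/log/messages}"
}

# Usage:
#   find_oom_kills                      # search the default system log
#   find_oom_kills /var/log/messages | grep java
```

The function exits non-zero when nothing matches, so it can be used in a condition such as `find_oom_kills >/dev/null && echo "OOM killer was active"`.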

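Linux mechanism: /tmp has a default retention period (about 1 month here; the exact value depends on the distribution's cleanup configuration), after which files not covered by an exclusion rule are removed automatically

When HDFS or YARN is started without a pid directory configured, the pid files are written to /tmp. If Linux then cleans them up automatically, the stop scripts can no longer find the running daemons and the services misbehave. The pid directory should therefore be set in the HDFS and YARN configuration so that the pid files are not created under /tmp.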

HDFS configuration

In hadoop-env.sh:

    export HADOOP_PID_DIR=/home/hadoop/tmp

YARN configuration

In yarn-env.sh:

    export YARN_PID_DIR=/home/hadoop/tmp

Restart and check where the pid files are written

    [hadoop@JD tmp]$ pwd
    /home/hadoop/tmp

The pid files are now created under the configured path:

    [hadoop@JD tmp]$ ll
    total 20
    -rw-rw-r-- 1 hadoop hadoop 5 Dec 1 18:28 hadoop-hadoop-datanode.pid
    -rw-rw-r-- 1 hadoop hadoop 5 Dec 1 18:28 hadoop-hadoop-namenode.pid
    -rw-rw-r-- 1 hadoop hadoop 5 Dec 1 18:29 hadoop-hadoop-secondarynamenode.pid
    -rw-rw-r-- 1 hadoop hadoop 5 Dec 1 18:29 yarn-hadoop-nodemanager.pid
    -rw-rw-r-- 1 hadoop hadoop 5 Dec 1 18:29 yarn-hadoop-resourcemanager.pid
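Once the pid files live in a known directory, it is straightforward to confirm that each one still points at a running daemon. The following is a sketch under the assumption that every `*.pid` file contains a single pid; `check_pid_files` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: for every *.pid file in <dir>, report whether the pid
# stored in it belongs to a live process. `kill -0` sends no signal and only
# works for processes the caller may signal, so run it as the hadoop user or root.
check_pid_files() {
    for f in "$1"/*.pid; do
        [ -f "$f" ] || continue
        pid=$(cat "$f")
        if kill -0 "$pid" 2>/dev/null; then
            printf '%s alive (pid %s)\n' "${f##*/}" "$pid"
        else
            printf '%s STALE (pid %s)\n' "${f##*/}" "$pid"
        fi
    done
}

# Usage:
#   check_pid_files /home/hadoop/tmp
```

A STALE line means the daemon died without removing its pid file, or the file was left over from an earlier run.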
