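Official documentation

DEPLOYING A NEW CEPH CLUSTER (latest version as of this article's publication)

DEPLOYING A NEW CEPH CLUSTER (Octopus release)

References

- Bilibili: Deploying a Ceph cluster with cephadm (Octopus)

- Setting up a single node Ceph storage cluster

- 如何在单节点 Ceph 中配置多数据副本 (How to configure multiple data replicas on a single-node Ceph cluster)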

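Installing the virtual machine

- Give the VM generous resources: at least 4 cores and 6 GB of RAM. With 2 cores and 4 GB, the add-storage stage fails for no obvious reason.

- Use 5 disks: the first holds the operating system and the others are for Ceph, 6 GB each, because Ceph requires a disk to be larger than 5 GB.

- Leave the non-system disks completely untouched. A storage device is considered available if all of the following conditions are met:

- The device must have no partitions.

- The device must not have any LVM state.

- The device must not be mounted.

- The device must not contain a file system.

- The device must not contain a Ceph BlueStore OSD.

- The device must be larger than 5 GB.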
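The size condition is easy to pre-check before handing disks to Ceph. The sketch below is only an illustration: the helper names `larger_than_min` and `size_candidates` are made up for this example, and it assumes that "5 GB" means 5 GiB (5 × 1024³ bytes), which the quoted documentation does not spell out. It looks only at device type and size; partitions, LVM state, mounts and file systems still have to be checked separately.

```shell
# Assumption: treat the documented "5 GB" minimum as 5 GiB.
MIN_BYTES=$((5 * 1024 * 1024 * 1024))

# Succeeds when the given size in bytes is strictly larger than the minimum.
larger_than_min() {
  [ "$1" -gt "$MIN_BYTES" ]
}

# Reads "NAME SIZE_IN_BYTES TYPE" lines on stdin and prints the names of
# whole disks that pass the size check.
size_candidates() {
  while read -r name size type; do
    if [ "$type" = "disk" ] && larger_than_min "$size"; then
      echo "$name"
    fi
  done
}

# On a real host the input could come from lsblk, for example:
#   lsblk -b -d -n -o NAME,SIZE,TYPE | size_candidates
```

The output will normally include the system disk as well, since the check knows nothing about what is installed on each device.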

Operating system

root@ceph:~# lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 18.04.2 LTS

Release: 18.04

Codename: bionic

Installing ntp and lvm2

apt update -y && apt install ntp -y && apt install lvm2 -y

- Configure ntp

- Disable the system's built-in time synchronization:

timedatectl set-ntp off

Configuring the time zone

- tzselect

- cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

- date -R, to check the result

Installing Docker

- Install docker-ce on Ubuntu 18 from the Alibaba Cloud (Aliyun) mirror

- Verify

root@ceph:/dev# docker -v

Docker version 20.10.7, build f0df350

Installing cephadm

- Official documentation: GET PACKAGES

- Run the following commands in order; otherwise the third command fails with:

E: Unable to locate package cephadm

- wget -q -O- 'https://download.ceph.com/keys/release.asc' | sudo apt-key add -

- sudo apt-add-repository 'deb https://download.ceph.com/debian-pacific/ bionic main'

- apt install -y cephadm
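The three steps above can be collected into one small script so the order is never mixed up. This is a sketch under assumptions: the function names `ceph_repo_line` and `install_cephadm` are hypothetical, the release and codename default to the `pacific` and `bionic` values used above, and `install_cephadm` has to be run as root on the target host.

```shell
# Builds the APT source line for a given Ceph release and Ubuntu codename.
ceph_repo_line() {
  release=${1:-pacific}
  codename=${2:-bionic}
  printf 'deb https://download.ceph.com/debian-%s/ %s main\n' "$release" "$codename"
}

# Runs the three steps in order and stops at the first failure.
# Usage: install_cephadm [RELEASE] [CODENAME]
install_cephadm() {
  wget -q -O- 'https://download.ceph.com/keys/release.asc' | apt-key add - &&
  apt-add-repository "$(ceph_repo_line "$@")" &&
  apt install -y cephadm
}
```

Chaining with `&&` means the package install is never attempted if adding the key or the repository failed.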

Verify

root@ceph:~# cephadm version

ceph version 16.2.4 (3cbe25cde3cfa028984618ad32de9edc4c1eaed0) pacific (stable)

Installing the cluster

root@ceph:~# cephadm bootstrap --mon-ip 192.168.142.103

Creating directory /etc/ceph for ceph.conf

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit systemd-timesyncd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit systemd-timesyncd.service is enabled and running

Host looks OK

Cluster fsid: ce131a9c-c5d7-11eb-b52a-000c29e37ba8

Verifying IP 192.168.142.103 port 3300 ...

Verifying IP 192.168.142.103 port 6789 ...

Mon IP 192.168.142.103 is in CIDR network 192.168.142.0/24

- internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Pulling container image docker.io/ceph/ceph:v16...

Ceph version: ceph version 16.2.4 (3cbe25cde3cfa028984618ad32de9edc4c1eaed0) pacific (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 192.168.142.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host ceph...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Enabling mgr prometheus module...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 13...

mgr epoch 13 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://ceph:8443/

User: admin

Password: y7f8s2n77a

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid fde2c7f4-c5dc-11eb-8740-000c296e3818 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/pacific/mgr/telemetry/

Bootstrap complete.

Changing the dashboard password

- In the output above, replace https://ceph:8443 with https://192.168.142.103:8443

URL: https://ceph:8443/

User: admin

Password: y7f8s2n77a

- The password must be changed at first login
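The hostname has to be swapped for the IP whenever `ceph` does not resolve from the machine running the browser. As a small illustration, the hypothetical helper `url_with_ip` below replaces the host part of a URL with an IP address while keeping the scheme, port and path.

```shell
# Replaces the host in a URL with the given IP address.
# Usage: url_with_ip URL IP
url_with_ip() {
  printf '%s\n' "$1" | sed -E "s#^(https?://)[^:/]+#\\1$2#"
}

# Example:
#   url_with_ip https://ceph:8443/ 192.168.142.103
```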

Installing ceph-common

- Install

- cephadm add-repo --release pacific

- cephadm install ceph-common

- Verify

root@ceph:~# ceph -v

ceph version 16.2.4 (3cbe25cde3cfa028984618ad32de9edc4c1eaed0) pacific (stable)

root@ceph:~# ceph status

cluster:

id: ce131a9c-c5d7-11eb-b52a-000c29e37ba8

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph (age 6m)

mgr: ceph.jvbrws(active, since 6m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

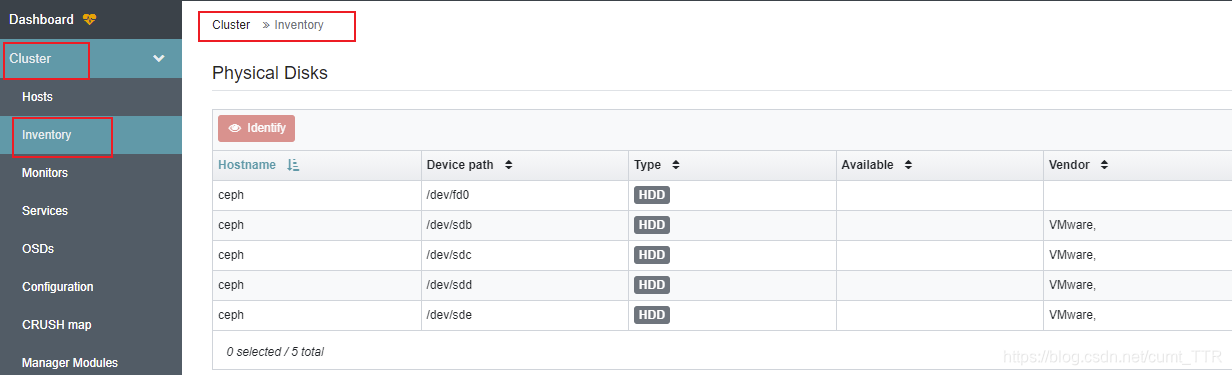

Checking available storage

If no available storage devices are listed, you cannot proceed.

- Storage requirements from the official documentation

A storage device is considered available if all of the following conditions are met: The device must have no partitions. The device must not have any LVM state. The device must not be mounted. The device must not contain a file system. The device must not contain a Ceph BlueStore OSD. The device must be larger than 5 GB.

- List the disks on this machine:

fdisk -l

- Command:

ceph orch device ls

- dashboard:

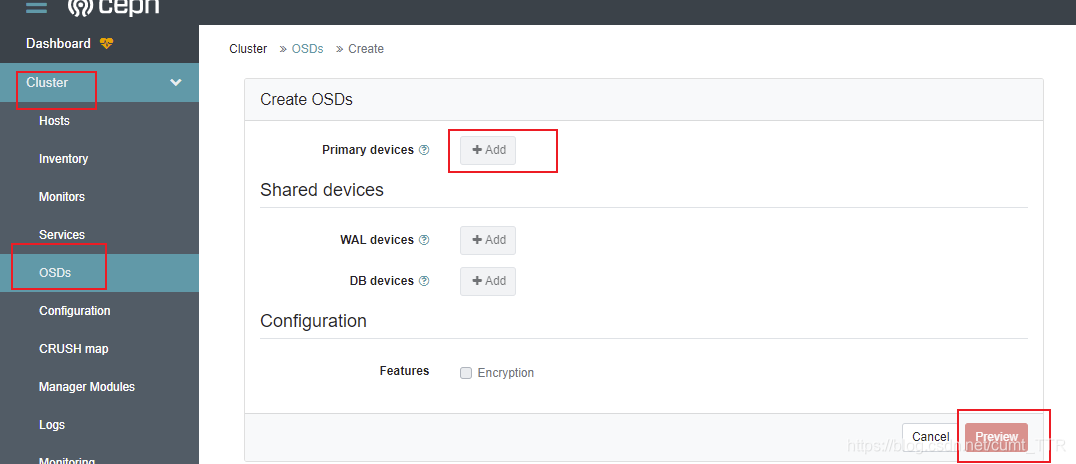

Adding storage

This adds all available storage devices.

- Command:

ceph orch apply osd --all-available-devices

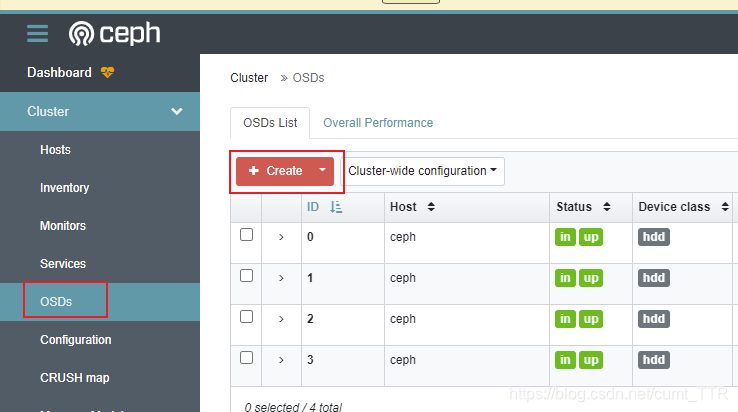

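- dashboard: in the first screenshot the button is greyed out because the devices had already been added; the second screenshot shows what it looks like after the devices were added successfully

Verify

Usage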

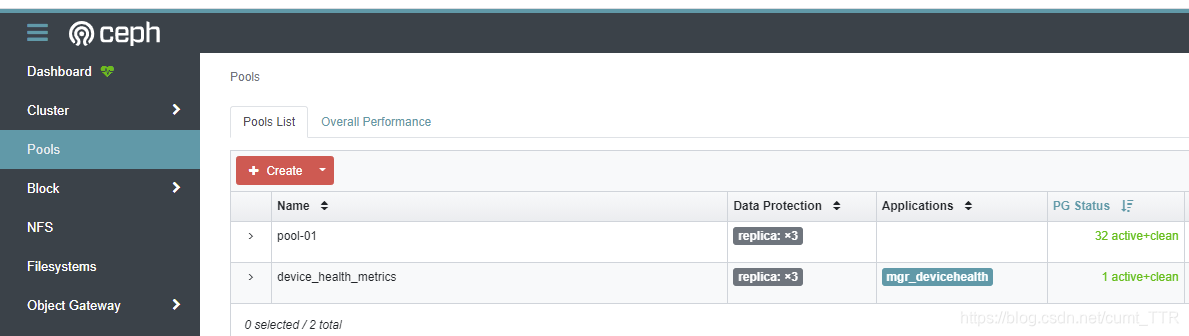

pool

Creating a pool

- Modify the CRUSH map

- Reason: otherwise pool creation runs into problems and the cluster stays in an unhealthy state. See: Setting up a single node Ceph storage cluster

- Steps:

- ceph status # Shows the status of the cluster

- ceph osd crush rule dump # Shows you the current crush maps

- ceph osd getcrushmap -o comp_crush_map.cm # Get crush map

- crushtool -d comp_crush_map.cm -o crush_map.cm # Decompile map

- vim crush_map.cm # Make any changes you need (host -> osd)

Change step chooseleaf firstn 0 type host to step chooseleaf firstn 0 type osd; see 如何在单节点 Ceph 中配置多数据副本 (How to configure multiple data replicas on a single-node Ceph cluster)

- crushtool -c crush_map.cm -o new_crush_map.cm # Compile map

- ceph osd setcrushmap -i new_crush_map.cm # Load the new map

- Command:

ceph osd pool create pool-01

- dashboard:
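The manual edit in the CRUSH map steps above can also be scripted. The following is a minimal sketch, not the method from the referenced articles: `patch_chooseleaf` and `apply_single_node_crush` are hypothetical names, `crush_map.patched` is an extra intermediate file introduced here, and the `sed` pattern assumes the decompiled map contains the rule line exactly as `step chooseleaf firstn 0 type host`.

```shell
# Reads a decompiled CRUSH map on stdin and switches the failure domain
# of "chooseleaf firstn 0" steps from host to osd.
patch_chooseleaf() {
  sed -E 's/^([[:space:]]*step chooseleaf firstn 0 type) host[[:space:]]*$/\1 osd/'
}

# Full round trip: export, decompile, patch, recompile, load.
# Must run on a host with admin access to the cluster.
apply_single_node_crush() {
  ceph osd getcrushmap -o comp_crush_map.cm &&
  crushtool -d comp_crush_map.cm -o crush_map.cm &&
  patch_chooseleaf < crush_map.cm > crush_map.patched &&
  crushtool -c crush_map.patched -o new_crush_map.cm &&
  ceph osd setcrushmap -i new_crush_map.cm
}
```

It is worth comparing `crush_map.cm` and `crush_map.patched` with `diff` before loading the new map, since a map without the expected line passes through unchanged.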

Deleting a pool

- If the pool never becomes healthy, it can only be deleted from the backend shell

- Commands:

ceph tell mon.* injectargs --mon_allow_pool_delete true

ceph osd pool delete pool-01 pool-01 --yes-i-really-really-mean-it

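Block storage (still has some problems for now)

Documentation

Steps

- Associate the pool with an application; the dashboard has no corresponding screen for this

- Command:

ceph osd pool application enable pool-01 rbd

- Create an image

- Map and use it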

File storage

- Official documentation: CREATE A CEPH FILE SYSTEM

- Creation commands:

ceph osd pool create cephfs_data 64 64

ceph osd pool create cephfs_metadata 64 64

ceph fs new cephfs cephfs_metadata cephfs_data

ceph orch apply mds cephfs --placement="1"

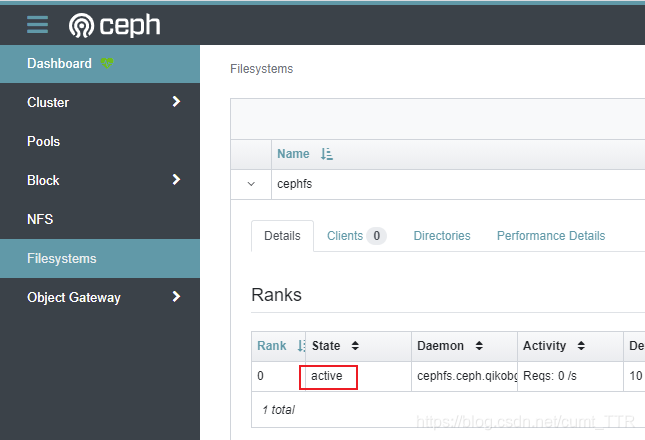

Checking status

- ceph fs ls

root@ceph:~# ceph fs ls

name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

- ceph mds stat # the state must be up:active

root@ceph:~# ceph mds stat

cephfs:1 {0=cephfs.ceph.qikobg=up:active}
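Because the MDS can take a moment to reach `up:active` after it is deployed, a small wait loop can save repeated manual checks. This is a sketch under assumptions: `mds_stat_is_active` and `wait_for_mds` are hypothetical helper names, the default of 30 attempts with a 2 second delay is arbitrary, and the check simply looks for `=up:active` in the text printed by `ceph mds stat`, in the form shown above.

```shell
# Succeeds when the text of `ceph mds stat` reports an MDS in up:active.
mds_stat_is_active() {
  case "$1" in
    *"=up:active"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Polls `ceph mds stat` until the MDS is active or the attempts run out.
# Usage: wait_for_mds [ATTEMPTS] [DELAY_SECONDS]
wait_for_mds() {
  attempts=${1:-30}
  delay=${2:-2}
  while [ "$attempts" -gt 0 ]; do
    if mds_stat_is_active "$(ceph mds stat 2>/dev/null)"; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  return 1
}
```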

- Check the dashboard

- Mounting

- You may need to install nfs-common

- Get the admin account's key

root@ceph:~# ceph auth get client.admin

[client.admin]

key = AQAXjbtgdHW1JxAAFYtIK/azRcROi4WbZtckSw==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

exported keyring for client.admin

- Commands:

- Remote mount (on a machine where Ceph is not installed)

mount -t ceph 192.168.142.103:6789:/ /mnt/cephfs -o name=admin,secret=AQAXjbtgdHW1JxAAFYtIK/azRcROi4WbZtckSw==

- Local mount (on the machine where Ceph is installed)

mount -t ceph :/ /mnt/cephfs -o name=admin,secret=AQAXjbtgdHW1JxAAFYtIK/azRcROi4WbZtckSw==
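Passing the key with `secret=` leaves it in the shell history and the process list. The kernel mount helper also accepts `secretfile=`, so one hedged alternative is to store the key in a root-only file. In the sketch below, `keyring_key` and `mount_cephfs_with_secretfile` are hypothetical helper names and `/etc/ceph/admin.secret` is an example path, not something the steps above create.

```shell
# Prints the value of the "key = ..." line from keyring text on stdin.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3; exit }'
}

# Mounts CephFS using a secret file instead of an inline secret.
# Usage: mount_cephfs_with_secretfile MON_ADDR MOUNT_POINT SECRET_FILE
mount_cephfs_with_secretfile() {
  mkdir -p "$2" &&
  mount -t ceph "$1:/" "$2" -o "name=admin,secretfile=$3"
}

# Example (run as root; the secret file path is only an illustration):
#   ceph auth get client.admin | keyring_key > /etc/ceph/admin.secret
#   chmod 600 /etc/ceph/admin.secret
#   mount_cephfs_with_secretfile 192.168.142.103:6789 /mnt/cephfs /etc/ceph/admin.secret
```

For a remote client, the secret file would be created on the Ceph host and copied over, since the `ceph` CLI is not available there.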

- Verify

- On the remote machine where the file system is mounted:

echo "hello,world" > /mnt/cephfs/1.txt

- On the local machine, view the file that was written:

cat /mnt/cephfs/1.txt
