C# CNTK model copy

CNTK - Classification Model

This chapter explains how to measure the performance of a classification model in CNTK. We begin with the confusion matrix.

Confusion matrix

A confusion matrix is a table of the predicted output versus the expected output. It is the easiest way to measure the performance of a classification model whose output can be one of two or more classes.

To understand how it works, we will create a confusion matrix for a binary classification model that predicts whether a credit card transaction was normal or fraudulent. It is shown as follows:

| | Actual fraud | Actual normal |
|---|---|---|
| Predicted fraud | True positive | False positive |
| Predicted normal | False negative | True negative |

As we can see, the sample confusion matrix above contains two columns, one for the actual class fraud and one for the actual class normal. In the same way, it has two rows, one for the predicted class fraud and one for the predicted class normal. The terms associated with the confusion matrix are explained below, where 1 denotes the positive class (fraud) and 0 the negative class (normal):

True Positives − When both the actual class and the predicted class of a data point are 1.

True Negatives − When both the actual class and the predicted class of a data point are 0.

False Positives − When the actual class of a data point is 0 and its predicted class is 1.

False Negatives − When the actual class of a data point is 1 and its predicted class is 0.
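The four definitions above can be turned into a small counting routine. The following is a minimal sketch in plain Python; the function name `confusion_counts` and the sample labels are made up for illustration, with 1 standing for fraud and 0 for normal.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP and FN for binary labels (1 = positive, 0 = negative)."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1 and predicted == 1:
            tp += 1
        elif actual == 0 and predicted == 0:
            tn += 1
        elif actual == 0 and predicted == 1:
            fp += 1
        else:  # actual == 1 and predicted == 0
            fn += 1
    return {"tp": tp, "tn": tn, "fp": fp, "fn": fn}


# Hypothetical labels for eight transactions (not taken from any real dataset)
y_true = [1, 0, 0, 1, 1, 0, 0, 0]
y_pred = [1, 0, 1, 0, 1, 0, 0, 0]
counts = confusion_counts(y_true, y_pred)
print(counts)
```

Each data point lands in exactly one of the four cells, so the counts always sum to the number of samples.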

Let's see how we can calculate several metrics from the confusion matrix:

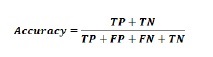

Accuracy − The fraction of all predictions made by our ML classification model that are correct. It can be calculated with the following formula:

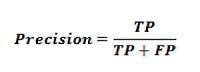

Precision − It tells us how many of the samples we predicted as positive are actually positive. It can be calculated with the following formula:

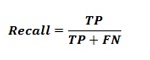

Recall or Sensitivity − Recall is the fraction of actual positives that our ML classification model identifies as positive. In other words, it tells us how many of the fraud cases in the dataset were actually detected by the model. It can be calculated with the following formula:

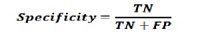

Specificity − The counterpart of recall for the negative class: it is the fraction of actual negatives that our ML classification model identifies as negative. It is calculated as the number of true negatives divided by the sum of true negatives and false positives.
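In terms of the counts TP, TN, FP and FN defined earlier, the standard textbook definitions of these four metrics can be written as:

$$
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN} \\
\text{Specificity} &= \frac{TN}{TN + FP}
\end{aligned}
$$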

F-measure

We can use the F-measure as a single-number alternative to the confusion matrix. The main reason is that we cannot maximize recall and precision at the same time. There is a strong trade-off between these two metrics, which the following example illustrates:

Suppose we want to use a DL model to classify cell samples as cancerous or normal. To reach maximum precision, we could reduce the number of positive predictions to 1. This can give us close to 100 percent precision, but recall will become really low.

On the other hand, to reach maximum recall, we need to make as many positive predictions as possible. This can give us close to 100 percent recall, but precision will become really low.

In practice, we need to find a balance between precision and recall. The F-measure metric allows us to do so, as it expresses a harmonic mean of precision and recall.

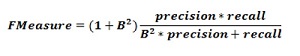

This formula is called the F1-measure, where the extra factor called B is set to 1 so that precision and recall are weighted equally. To emphasize recall, we can set the factor B to 2. To emphasize precision, we can set the factor B to 0.5.
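The role of the factor B can be made concrete with the general F-measure formula, $F_B = (1 + B^2) \cdot \frac{P \cdot R}{B^2 \cdot P + R}$, where P is precision and R is recall. The sketch below is plain Python; `f_beta` is an illustrative helper, and the precision and recall values are made-up numbers rather than results from any model.

```python
def f_beta(precision, recall, beta=1.0):
    """General F-measure: weighted harmonic mean of precision and recall."""
    if precision == 0 and recall == 0:
        return 0.0  # avoid division by zero when both inputs are zero
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical model: finds every positive, but half of its alarms are wrong
precision, recall = 0.5, 1.0
f1 = f_beta(precision, recall)             # B = 1, equal weight
f2 = f_beta(precision, recall, beta=2)     # B = 2, emphasizes recall
f05 = f_beta(precision, recall, beta=0.5)  # B = 0.5, emphasizes precision
print(round(f1, 4), round(f2, 4), round(f05, 4))
```

Because recall is higher than precision in this made-up case, the recall-weighted score (B = 2) comes out highest and the precision-weighted score (B = 0.5) lowest.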

Using CNTK to measure classification performance

In the previous section we created a classification model using the Iris flower dataset. Here, we will measure its performance using the confusion matrix and the F-measure metric.

Creating Confusion matrix

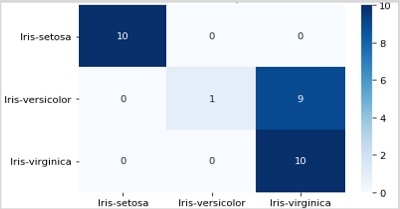

We already created the model, so we can start validating it, beginning with the confusion matrix. First, we create the confusion matrix with the confusion_matrix function from scikit-learn. For this, we need the real labels for our test samples and the predicted labels for the same test samples.

Let's calculate the confusion matrix using the following Python code:

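```python
import numpy as np
from sklearn.metrics import confusion_matrix

# y_test is one-hot encoded; argmax recovers the class index of each sample
y_true = np.argmax(y_test, axis=1)
# z is the trained model; argmax picks the class with the highest score
y_pred = np.argmax(z(X_test), axis=1)

matrix = confusion_matrix(y_true=y_true, y_pred=y_pred)
print(matrix)
```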

Output

```
[[10  0  0]
 [ 0  1  9]
 [ 0  0 10]]
```
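The matrix above can also be read per class: in scikit-learn's layout, rows are the true classes and columns are the predicted classes. As a rough sketch in plain Python, the snippet below hardcodes the printed matrix and derives the overall accuracy plus precision and recall for each class; the helper name `per_class_metrics` is illustrative.

```python
# The confusion matrix printed above: rows = true class, columns = predicted class
matrix = [
    [10, 0, 0],
    [0, 1, 9],
    [0, 0, 10],
]


def per_class_metrics(matrix):
    """Return a (precision, recall) pair for each class index."""
    n = len(matrix)
    results = []
    for k in range(n):
        tp = matrix[k][k]
        predicted_k = sum(matrix[i][k] for i in range(n))  # column sum
        actual_k = sum(matrix[k])                          # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        results.append((precision, recall))
    return results


total = sum(sum(row) for row in matrix)
correct = sum(matrix[k][k] for k in range(len(matrix)))
accuracy = correct / total
metrics = per_class_metrics(matrix)

print("accuracy:", accuracy)
for k, (p, r) in enumerate(metrics):
    print("class", k, "precision", round(p, 3), "recall", round(r, 3))
```

Nine of the ten samples of the second class are predicted as the third class, so the second class has low recall even though its single prediction is correct.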

We can also use the heatmap function from seaborn to visualize the confusion matrix as follows:

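```python
import seaborn as sns
import matplotlib.pyplot as plt

# label_encoder: assumed to be the fitted label encoder from the data preparation step
g = sns.heatmap(matrix,
                annot=True,
                xticklabels=label_encoder.classes_.tolist(),
                yticklabels=label_encoder.classes_.tolist(),
                cmap='Blues')

# Keep the row labels horizontal so they are easy to read
g.set_yticklabels(g.get_yticklabels(), rotation=0)
plt.show()
```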

We should also have a single performance number that we can use to compare models. For this, we calculate the classification error using the classification_error function from the metrics package in CNTK, as we did when creating the classification model.

Now, to calculate the classification error, execute the test method on the loss function with a dataset. CNTK takes the samples we provide as input for this function and makes a prediction based on the input features X_test.

```python
loss.test([X_test, y_test])
```

Output

```
{'metric': 0.36666666666, 'samples': 30}
```
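The classification error is the fraction of samples whose predicted class differs from the true class. A minimal plain-Python sketch of that calculation follows; `error_rate` is an illustrative helper rather than a CNTK function, and the labels are made up.

```python
def error_rate(y_true, y_pred):
    """Fraction of samples whose predicted class differs from the true class."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have the same length")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)


# Hypothetical class indices for ten samples
y_true = [0, 0, 0, 1, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 0, 1, 2, 2, 1, 2, 2, 1]
error = error_rate(y_true, y_pred)
print(error)
```

A lower value is better for this metric, and accuracy is simply one minus the error.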

Implementing F-Measures

To implement F-measures, CNTK includes a function called fmeasure. We can use it while training the NN by replacing the call to cntk.metrics.classification_error with a call to cntk.losses.fmeasure when defining the criterion factory function, as follows:

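```python
import cntk

@cntk.Function
def criterion_factory(output, target):
    # Cross-entropy loss drives the optimization
    loss = cntk.losses.cross_entropy_with_softmax(output, target)
    # F-measure replaces classification_error as the reported metric
    metric = cntk.losses.fmeasure(output, target)
    return loss, metric
```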

After switching to the cntk.losses.fmeasure function, the loss.test method call produces a different output, as follows:

```python
loss.test([X_test, y_test])
```

Output

```
{'metric': 0.83101488749, 'samples': 30}
```
