theano

Theano - Quick Guide

Theano - Introduction

Have you developed Machine Learning models in Python? If so, you know how intricate developing these models can be. Development is typically slow, with computations that can take hours or even days.

Developing a Machine Learning model requires a lot of mathematical computation, mostly arithmetic on large multi-dimensional matrices. These days we use Neural Networks rather than traditional statistical techniques for developing Machine Learning applications, and these networks must be trained on huge amounts of data. Training is done in batches of reasonable size, so the learning process is iterative. If the computations are not done efficiently, training a network can take several hours or even days, which makes optimizing the executable code highly desirable. That is exactly what Theano provides.

Theano is a Python library that lets you define the mathematical expressions used in Machine Learning, optimize them, and evaluate them very efficiently, using GPUs where they help most. In most cases, its performance can rival that of typical full C implementations.

Theano was written at the LISA lab to support the rapid development of efficient machine learning algorithms. It is released under a BSD license.

In this tutorial, you will learn to use the Theano library.

Theano - Installation

Theano can be installed on Windows, macOS, and Linux, and in all cases the installation is straightforward. Before you install Theano, you must install its dependencies, which are listed below −

- Python
- NumPy − Required
- SciPy − Required only for sparse matrices and special functions
- BLAS − Provides standard building blocks for performing basic vector and matrix operations

The optional packages that you may choose to install depending on your needs are −

- nose − To run Theano’s test suite
- Sphinx − For building documentation
- Graphviz and pydot − To handle graphics and images
- NVIDIA CUDA drivers − Required for GPU code generation/execution
- libgpuarray − Required for GPU/CPU code generation on CUDA and OpenCL devices

We shall now discuss the steps to install Theano on macOS.

MacOS Installation

To install Theano and its dependencies, use pip from the command line as follows. These are the minimal dependencies that we need in this tutorial.

```shell
$ pip install Theano
$ pip install numpy
$ pip install scipy
$ pip install pydot
```

You also need to install the macOS command-line developer tools using the following command −

```shell
$ xcode-select --install
```

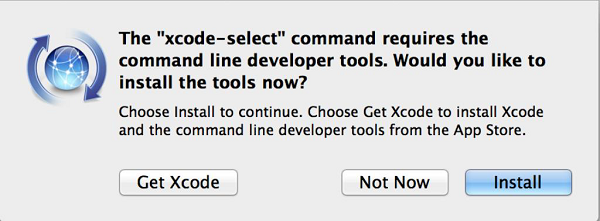

You will see the following screen. Click on the Install button to install the tool.

On successful installation, you will see the success message on the console.

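
Before testing Theano itself, you may want to confirm that the packages can be found by your Python interpreter. The following is a minimal standard-library sketch; `missing_modules` is a hypothetical helper, not part of Theano or pip, and it only checks that a module is importable, not which version is installed.

```python
import importlib.util


def missing_modules(names):
    """Return the module names from `names` that Python cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names (the pip package "Theano" is imported as "theano").
    required = ["numpy", "scipy", "theano", "pydot"]
    missing = missing_modules(required)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

An empty result means every listed module can be imported; anything reported as missing can be installed with the pip commands shown earlier.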

Testing the Installation

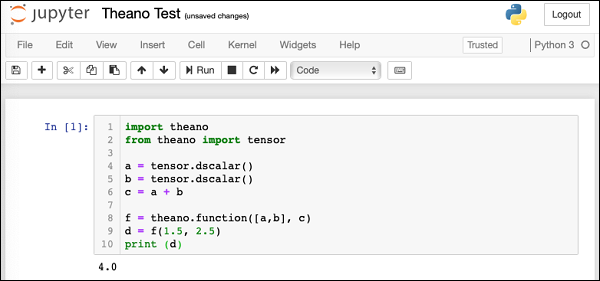

After the installation completes successfully, open a new notebook in Jupyter (for example, the Jupyter that ships with Anaconda). In a code cell, enter the following Python script −

Example

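```python
import theano
from theano import tensor

a = tensor.dscalar()            # symbolic 64-bit float scalar
b = tensor.dscalar()
c = a + b                       # symbolic expression; nothing is computed yet
f = theano.function([a, b], c)  # compile the expression into a callable
d = f(1.5, 2.5)                 # run the compiled code
print(d)
```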

Output

Execute the script and you should see the following output −

```
4.0
```

The screenshot of the execution is shown below for your quick reference −

If you get the above output, your Theano installation is successful. If not, follow the troubleshooting instructions on the Theano download page to fix the issues.

What is Theano?

Now that you have successfully installed Theano, let us first try to understand what Theano is. Theano is a Python library that lets you define, optimize, and evaluate mathematical expressions, especially the ones used in Machine Learning model development. Theano itself does not contain any predefined ML models; it only facilitates their development. It is especially useful when dealing with multi-dimensional arrays, and it integrates seamlessly with NumPy, the fundamental and widely used package for scientific computing in Python.

Theano facilitates defining mathematical expressions used in ML development. Such expressions generally involve Matrix Arithmetic, Differentiation, Gradient Computation, and so on.

Theano first builds the entire computational graph for your model. It then compiles the graph into highly efficient code by applying several optimization techniques to it. The compiled code is made available through a special construct in Theano called function. We call this function repeatedly to train a neural network. Compared with pure Python code, the training time is substantially reduced, and performance can be competitive with a full C implementation.

We shall now understand the process of Theano development. Let us begin with how to define a mathematical expression in Theano.

Theano - A Trivial Theano Expression

Let us begin our journey with Theano by defining and evaluating a trivial expression. Consider the following expression, which adds two scalars −

```python
c = a + b
```

Here a and b are variables and c is the expression output. In Theano, even this trivial expression takes several explicit steps to define and evaluate.

Let us understand the steps to evaluate the above expression.

Importing Theano

First, we need to import the Theano library into our program, which we do using the following statements −

```python
import theano
from theano import tensor
```

The first statement makes `theano.function` available; the second gives direct access to the `tensor` module, where the variable types used below are defined.

Declaring Variables

Next, we will declare a variable called a using the following statement −

```python
a = tensor.dscalar()
```

The dscalar constructor declares a scalar variable of type double (a 64-bit float). Executing the above statement creates a symbolic variable called a in your program. Likewise, we create variable b using the following statement −

```python
b = tensor.dscalar()
```

Defining Expression

Next, we will define our expression that operates on these two variables a and b.

```python
c = a + b
```

In Theano, executing the above statement does not perform the scalar addition of a and b. It only builds a symbolic expression c that records how to compute the sum once values are supplied.
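
To make deferred evaluation concrete, here is a toy pure-Python analogue. It only illustrates the idea and is not how Theano is implemented; `Var` and `Add` are hypothetical classes. Building `c` records the operation, and a number appears only when the graph is evaluated with concrete inputs, which is the role `theano.function` plays in Theano.

```python
class Var:
    """A symbolic placeholder: it has a name but no value of its own."""

    def __init__(self, name):
        self.name = name

    def __add__(self, other):
        return Add(self, other)          # build a graph node, compute nothing

    def evaluate(self, env):
        return env[self]                 # look up the value bound to this variable


class Add(Var):
    """A graph node recording that its two inputs are to be added."""

    def __init__(self, left, right):
        super().__init__("(%s + %s)" % (left.name, right.name))
        self.left, self.right = left, right

    def evaluate(self, env):
        return self.left.evaluate(env) + self.right.evaluate(env)


a = Var("a")
b = Var("b")
c = a + b                                # only a description of the sum so far
print(c.name)                            # (a + b)
print(c.evaluate({a: 3.5, b: 5.5}))      # 9.0
```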

Defining Theano Function

To evaluate the above expression, we need to define a function in Theano as follows −

```python
f = theano.function([a, b], c)
```

The function theano.function takes two arguments: the first is the list of input variables and the second is the output expression. The above declaration states that the compiled function takes two inputs, a and b, and returns the value of the scalar expression c. In the rest of our code, the compiled function is referenced by the variable name f.

Invoking Theano Function

The call to the function f is made using the following statement −

```python
d = f(3.5, 5.5)
```

We pass two scalar values, 3.5 and 5.5, one for each input variable. The result of the execution is assigned to the variable d. To print the contents of d, we use the print statement −

```python
print(d)
```

The execution would cause the value of d to be printed on the console, which is 9.0 in this case.

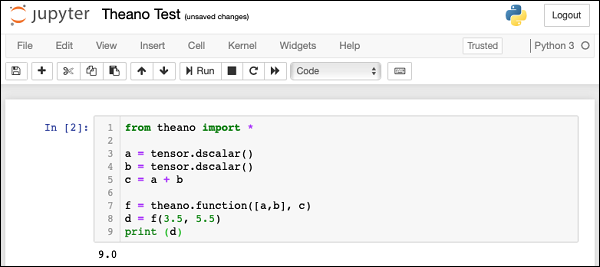

Full Program Listing

The complete program listing is given here for your quick reference −

```python
import theano
from theano import tensor

a = tensor.dscalar()
b = tensor.dscalar()
c = a + b
f = theano.function([a, b], c)
d = f(3.5, 5.5)
print(d)
```

Execute the above code and you will see the output as 9.0. The screenshot is shown here −

Now, let us discuss a slightly more complex example that computes the multiplication of two matrices.

Theano - Expression for Matrix Multiplication

We will compute the product of two matrices. The first matrix has dimensions 2 x 3 and the second 3 x 2, so their product is a 2 x 2 matrix. The input matrices and their product are shown here −

$$\begin{bmatrix}0 & -1 & 2\\4 & 11 & 2\end{bmatrix} \begin{bmatrix}3 & -1 \\1 & 2 \\6 & 1 \end{bmatrix}=\begin{bmatrix}11 & 0 \\35 & 20 \end{bmatrix}$$

Declaring Variables

To write a Theano expression for the above, we first declare two variables to represent our matrices as follows −

```python
a = tensor.dmatrix()
b = tensor.dmatrix()
```

dmatrix is the matrix type for doubles (64-bit floats). Note that we do not specify the matrix size anywhere, so these variables can hold 2-D matrices of any shape.

Defining Expression

To compute the matrix product, we use Theano's built-in tensor.dot function as follows −

```python
c = tensor.dot(a, b)
```

The output of multiplication is assigned to a matrix variable called c.

Defining Theano Function

Next, we define a function as in the earlier example to evaluate the expression.

```python
f = theano.function([a, b], c)
```

Note that the inputs to the function are the two matrix variables a and b. The output is the matrix expression c, so calling the function returns a matrix.

Invoking Theano Function

We now invoke the function using the following statement −

```python
d = f([[0, -1, 2], [4, 11, 2]], [[3, -1], [1, 2], [6, 1]])
```

The two arguments in the above statement are nested Python lists, which Theano converts to NumPy arrays. You may also pass NumPy arrays explicitly, as shown here −

```python
import numpy

d = f(numpy.array([[0, -1, 2], [4, 11, 2]]),
      numpy.array([[3, -1], [1, 2], [6, 1]]))
```

After d is computed we print its value −

```python
print(d)
```

You will see the following output −

```
[[11.  0.]
 [35. 20.]]
```

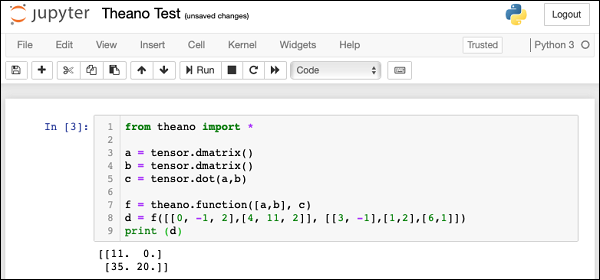
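
Each entry of the product is the dot product of a row of the first matrix with a column of the second; for example, the bottom-left entry is 4·3 + 11·1 + 2·6 = 35. The following pure-Python check computes the whole product; the `matmul` helper is written for this illustration only and is unrelated to Theano's API.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix, both given as nested lists."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)]
            for row in a]


a = [[0, -1, 2], [4, 11, 2]]   # 2 x 3
b = [[3, -1], [1, 2], [6, 1]]  # 3 x 2
print(matmul(a, b))            # [[11, 0], [35, 20]]
```

The shape check mirrors the rule stated above: a 2 x 3 matrix can only be multiplied by a matrix with 3 rows.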

Full Program Listing

The complete program listing is given here:

```python
import theano
from theano import tensor

a = tensor.dmatrix()
b = tensor.dmatrix()
c = tensor.dot(a, b)
f = theano.function([a, b], c)
d = f([[0, -1, 2], [4, 11, 2]], [[3, -1], [1, 2], [6, 1]])
print(d)
```

The screenshot of the program execution is shown here −

Theano - Computational Graph

From the above two examples, you may have noticed that in Theano we first create an expression and then evaluate it using a Theano function. Theano applies advanced optimization techniques to the expression's graph before executing it. To visualize the computation graph, Theano provides the theano.printing module.

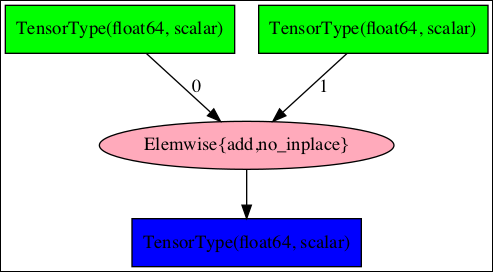

Symbolic Graph for Scalar Addition

To see the computation graph for our scalar addition program, use the printing library as follows −

```python
theano.printing.pydotprint(f, outfile="scalar_addition.png", var_with_name_simple=True)
```

When you execute this statement, a file called scalar_addition.png will be created on your machine. The saved computation graph is displayed here for your quick reference −

The complete program listing to generate the above image is given below −

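```python
import theano
from theano import tensor

a = tensor.dscalar()
b = tensor.dscalar()
c = a + b
f = theano.function([a, b], c)
# Requires pydot and Graphviz; writes the graph of f to scalar_addition.png
theano.printing.pydotprint(f, outfile="scalar_addition.png", var_with_name_simple=True)
```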

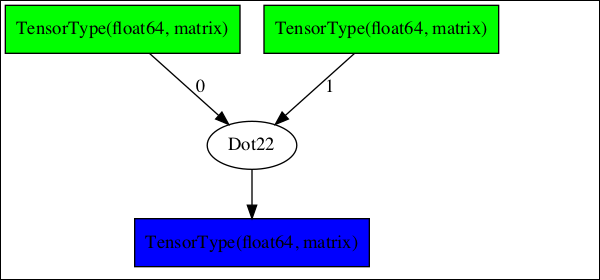
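
pydotprint needs pydot and Graphviz. To see what such a drawing encodes without those tools, here is a plain-Python sketch that renders a small expression graph as indented text, one node per line with its inputs indented below it. The tuple representation and the `render` helper are hypothetical and are not Theano's format; Theano has its own text printer, `theano.printing.debugprint`, whose output looks different.

```python
def render(node, depth=0):
    """Return an expression tree as text lines, one node per line."""
    indent = "  " * depth
    if isinstance(node, tuple):              # (operation, input, input, ...)
        op, *inputs = node
        lines = [indent + op]
        for child in inputs:
            lines.extend(render(child, depth + 1))
        return lines
    return [indent + str(node)]              # leaf: a variable name or constant


# c = a + b and c = dot(a, b) as tiny hand-built trees
print("\n".join(render(("add", "a", "b"))))
print("\n".join(render(("dot", "a", "b"))))
```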

Symbolic Graph for Matrix Multiplier

Now, try creating the computation graph for our matrix multiplier. The complete listing for generating this graph is given below −

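```python
import theano
from theano import tensor

a = tensor.dmatrix()
b = tensor.dmatrix()
c = tensor.dot(a, b)
f = theano.function([a, b], c)
# Requires pydot and Graphviz; writes the graph of f to matrix_dot_product.png
theano.printing.pydotprint(f, outfile="matrix_dot_product.png", var_with_name_simple=True)
```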

The generated graph is shown here −

Complex Graphs

In larger expressions, the computational graphs could be very complex. One such graph taken from Theano documentation is shown here −

To understand how Theano works, it is important to first understand the significance of these computational graphs; doing so also explains why Theano is useful.

Why Theano?

Looking at the complexity of these computational graphs, you can now understand the purpose behind developing Theano. A typical compiler mostly applies local optimizations to a program, because it never sees the entire mathematical computation as a single unit.

Theano applies advanced optimization techniques to the full computational graph, combining algebraic simplification with the techniques of an optimizing compiler. Parts of the graph may be compiled into C code. For repeated calculations evaluation speed is critical, and Theano meets this need by generating very efficient code.
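
To give a feel for what a whole-graph rewrite looks like, here is a toy pure-Python optimizer over expression trees written as tuples. It is a hypothetical illustration, not Theano's optimizer: it knows only two operations and applies constant folding plus the identities x + 0 = x and x * 1 = x, whereas Theano applies many more rewrites to its own graph objects.

```python
NUMBERS = (int, float)


def simplify(node):
    """Rewrite a tuple tree ('add' | 'mul', left, right) bottom-up."""
    if not isinstance(node, tuple):
        return node                              # leaf: name or constant
    op, left, right = node[0], simplify(node[1]), simplify(node[2])
    if isinstance(left, NUMBERS) and isinstance(right, NUMBERS):
        return left + right if op == "add" else left * right  # constant folding
    if op == "add" and left == 0:
        return right                             # 0 + x -> x
    if op == "add" and right == 0:
        return left                              # x + 0 -> x
    if op == "mul" and left == 1:
        return right                             # 1 * x -> x
    if op == "mul" and right == 1:
        return left                              # x * 1 -> x
    return (op, left, right)


# x * (0.5 + 0.5) + 0 * 3 simplifies all the way down to x
expr = ("add", ("mul", "x", ("add", 0.5, 0.5)), ("mul", 0, 3))
print(simplify(expr))
```

Because the rewrite sees the whole tree, it can notice that the second term folds to 0 and then remove the addition entirely, which no single local step could decide on its own.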

Theano - Data Types

Now that you have understood the basics of Theano, let us look at the different data types available for creating your expressions. The following table gives a partial list of the data types defined in Theano.

| Data type | Theano types |
|---|---|
| 8-bit integers (byte) | bscalar, bvector, bmatrix, brow, bcol, btensor3, btensor4, btensor5, btensor6, btensor7 |
| 16-bit integers | wscalar, wvector, wmatrix, wrow, wcol, wtensor3, wtensor4, wtensor5, wtensor6, wtensor7 |
| 32-bit integers | iscalar, ivector, imatrix, irow, icol, itensor3, itensor4, itensor5, itensor6, itensor7 |
| 64-bit integers | lscalar, lvector, lmatrix, lrow, lcol, ltensor3, ltensor4, ltensor5, ltensor6, ltensor7 |
| 32-bit floats (float) | fscalar, fvector, fmatrix, frow, fcol, ftensor3, ftensor4, ftensor5, ftensor6, ftensor7 |
| 64-bit floats (double) | dscalar, dvector, dmatrix, drow, dcol, dtensor3, dtensor4, dtensor5, dtensor6, dtensor7 |
| Complex (complex64) | cscalar, cvector, cmatrix, crow, ccol, ctensor3, ctensor4, ctensor5, ctensor6, ctensor7 |

The above list is not exhaustive and the reader is referred to the tensor creation document for a complete list.

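
The names in the table follow a regular scheme: the first letter selects the element type and the rest selects the number of dimensions. The following pure-Python sketch decodes a constructor name under that scheme. `describe` and its lookup tables are hypothetical helpers written for this guide, not part of Theano; the dtypes follow the table above.

```python
# Prefix letter -> element dtype, following the table above.
PREFIX_DTYPES = {
    "b": "int8", "w": "int16", "i": "int32", "l": "int64",
    "f": "float32", "d": "float64", "c": "complex64",
}
# Shape word -> number of dimensions ("row" and "col" are 2-D, with one
# dimension of size 1).
SHAPE_NDIM = {"scalar": 0, "vector": 1, "row": 2, "col": 2, "matrix": 2}


def describe(constructor):
    """Decode a name such as 'ftensor3' into (dtype, number of dimensions)."""
    dtype = PREFIX_DTYPES[constructor[0]]
    kind = constructor[1:]
    if kind.startswith("tensor"):
        return dtype, int(kind[len("tensor"):])
    return dtype, SHAPE_NDIM[kind]


for name in ["bscalar", "ivector", "fmatrix", "drow", "ctensor5"]:
    print(name, describe(name))
```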

The following examples show how to create variables of various data types in Theano and evaluate them with a Theano function.

Scalar

To construct a scalar variable and evaluate it, use the following syntax −

Syntax

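```python
import theano

x = theano.tensor.scalar('x')   # symbolic scalar (dtype floatX, float64 by default)
f = theano.function([x], x)     # identity function, used only to evaluate x
print(f(5.0))
```

Output

```
5.0
```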

One-dimensional Array

To create a one dimensional array, use the following declaration −

Example

```python
import theano
from theano import tensor

v = tensor.vector('v')          # symbolic 1-D array (dtype floatX)
get = theano.function([v], v)   # identity function, used only to evaluate v
f = get([2.0, 5.0, 3.0])        # f is a NumPy array holding the values
print(f)
print(f[0])
print(f[2])
```

Output

```
[2. 5. 3.]
2.0
3.0
```

If you try to access f[3], you get an index-out-of-range error, because f holds only three elements (indices 0 to 2) −

```python
print(f[3])
```

Output

```
Traceback (most recent call last):
  ...
IndexError: index 3 is out of bounds for axis 0 with size 3
```

Two-dimensional Array

To declare a two-dimensional array you would use the following code snippet −

Example

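```python
import theano
from theano import tensor

M = tensor.matrix('M')          # symbolic 2-D array (dtype floatX)
get = theano.function([M], M)   # identity function, used only to evaluate M
m = get([[2, 3], [4, 5], [2, 4]])
print(m[0])
print(m[1][0])
```

Output

```
[2. 3.]
4.0
```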

5-dimensional Array

To declare a 5-dimensional array, use the following syntax −

Example

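```python
import numpy
import theano
from theano import tensor

T5 = tensor.tensor5('T5')       # symbolic 5-D array (dtype floatX)
get = theano.function([T5], T5)
m5 = get(numpy.arange(15, dtype=theano.config.floatX).reshape(1, 1, 1, 3, 5))
print(m5[0, 0, 0, 1])
print(m5[0, 0, 0, 2, 3])
```

Output

```
[5. 6. 7. 8. 9.]
13.0
```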

You may declare a 3-dimensional array by using the data type tensor3 in place of tensor5, a 4-dimensional array using the data type tensor4, and so on up to tensor7.

Plural Constructors

Sometimes, you may want to create several variables of the same type in a single declaration. You can do so using the following syntax −

Syntax

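```python
import theano
from theano.tensor import dmatrices

x, y, z = dmatrices('x', 'y', 'z')   # three float64 matrix variables in one call
get = theano.function([x, y, z], [x, y, z])
xv, yv, zv = get([[1, 2], [3, 4], [5, 6]],
                 [[7, 8], [9, 10], [11, 12]],
                 [[13, 14], [15, 16], [17, 18]])
print(xv[2])
print(yv[1])
print(zv[0])
```

Output

```
[5. 6.]
[ 9. 10.]
[13. 14.]
```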

Theano - Variables

In the previous chapter, while discussing the data types, we created and used Theano variables. To reiterate, we would use the following syntax to create a variable in Theano −

```python
x = theano.tensor.fvector('x')
```

In this statement, we have created a variable x of type vector containing 32-bit floats, and we have also named it x. Names are generally useful for debugging.
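
The f and d prefixes matter for precision: a 32-bit float keeps roughly 7 significant decimal digits, while a 64-bit double keeps roughly 15 to 16. As a standard-library illustration, independent of Theano, the following rounds a value through 32-bit storage:

```python
import struct


def as_float32(x):
    """Round a Python float (a 64-bit double) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


print(0.1)               # 0.1 as a 64-bit double
print(as_float32(0.1))   # 0.10000000149011612 after rounding to 32 bits
```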

To declare a vector of 32-bit integers, you would use the following syntax −

```python
i32 = theano.tensor.ivector()
```

Here, we do not specify a name for the variable.

To declare a three-dimensional tensor of 64-bit floats, you would use the following declaration −

```python
f64 = theano.tensor.dtensor3()
```

The various types of constructors along with their data types are listed in the table below −

| Constructor | Data type | Dimensions |
|---|---|---|
| fvector | float32 | 1 |
| ivector | int32 | 1 |
| fscalar | float32 | 0 |
| fmatrix | float32 | 2 |
| ftensor3 | float32 | 3 |
| dtensor3 | float64 | 3 |

You may use a generic vector constructor and specify the data type explicitly as follows −

```python
x = theano.tensor.vector('x', dtype='int32')
```

In the next chapter, we will learn how to create shared variables.

Theano - Shared Variables

Often you need variables that are shared between different functions and across multiple calls to the same function. For example, while training a neural network you create a weights vector that assigns a weight to each feature under consideration. This vector is modified on every iteration of training, so it has to remain accessible across the multiple calls to the same function. We create a shared variable for this purpose. When a GPU is available and Theano is configured to use it, Theano can keep such shared variables in GPU memory, which speeds up the computation.

Syntax

To create a shared variable, use the following syntax −

```python
import numpy
import theano

W = theano.shared(numpy.asarray([0.1, 0.25, 0.15, 0.3]), 'W')
```

Example

Here, the shared variable W is created from a NumPy array of four floating-point numbers. To get and set the value of W, use the get_value and set_value methods, as in the following code snippet −

```python
import numpy
import theano

W = theano.shared(numpy.asarray([0.1, 0.25, 0.15, 0.3]), 'W')
print("Original: ", W.get_value())
print("Setting new values (0.5, 0.2, 0.4, 0.2)")
W.set_value(numpy.asarray([0.5, 0.2, 0.4, 0.2]))
print("After modifications:", W.get_value())
```

Output

```
Original: [0.1 0.25 0.15 0.3 ]
Setting new values (0.5, 0.2, 0.4, 0.2)
After modifications: [0.5 0.2 0.4 0.2]
```
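
What makes shared variables useful for training is that their value persists between calls and can be replaced by an update after each call. The following pure-Python sketch mimics that behaviour; `Shared` and `make_step` are hypothetical names, not Theano's API (in Theano you use theano.shared together with the updates argument of theano.function, as the training example in this guide does). As in Theano, each call's output is computed from the value held before that call's update.

```python
class Shared:
    """Toy stand-in for a shared variable: a value that outlives each call."""

    def __init__(self, value):
        self._value = value

    def get_value(self):
        return self._value

    def set_value(self, value):
        self._value = value


def make_step(state, increment):
    """Return a callable that outputs the current value, then applies an update."""
    def step():
        output = state.get_value()           # computed from the old value
        state.set_value(output + increment)  # update applied after the output
        return output
    return step


counter = Shared(0)
step = make_step(counter, 1)
print([step() for _ in range(3)], counter.get_value())  # [0, 1, 2] 3
```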

Theano - Functions

A Theano function acts like a hook for interacting with the symbolic graph. When the function is created, the symbolic graph is compiled into highly efficient executable code: Theano restructures the mathematical expressions to make them faster, compiles some parts of the graph into C code, moves some tensors to the GPU, and so on.

When you call the Theano function, it runs this compiled code and returns the results of the computation for the outputs we specified. The kind of optimization is selected by a mode such as FAST_COMPILE or FAST_RUN, which can be set in the environment variable THEANO_FLAGS.
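
THEANO_FLAGS holds a comma-separated list of key=value settings; in a shell you might run, for example, `THEANO_FLAGS=mode=FAST_COMPILE,floatX=float32 python train.py`, where train.py is a hypothetical script. Theano reads this variable itself when it is imported, so you never need to parse it; the sketch below, built around the hypothetical helper `parse_flags`, only makes the format explicit. A mode can also be chosen per function through the `mode` argument of `theano.function`.

```python
import os


def parse_flags(text):
    """Split a comma-separated 'key=value' string into a dict."""
    flags = {}
    for item in text.split(","):
        item = item.strip()
        if item:
            key, _, value = item.partition("=")
            flags[key.strip()] = value.strip()
    return flags


# Falls back to an example string when THEANO_FLAGS is not set.
current = parse_flags(os.environ.get("THEANO_FLAGS", "mode=FAST_RUN,floatX=float32"))
print("mode:", current.get("mode", "(default)"))
```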

A Theano function is declared using the following syntax −

```python
f = theano.function([x], y)
```

The first parameter [x] is the list of input variables, and the second parameter y is the output: either a single variable or a list of variables.

Now that you understand the basics of Theano, let us begin Theano coding with a trivial example.

Theano - Trivial Training Example

Theano is quite useful for training neural networks, where we must repeatedly calculate the cost and its gradients to reach an optimum. On large datasets this becomes computationally intensive, and Theano handles it efficiently thanks to the graph optimizations we have seen earlier.

Problem Statement

We shall now learn how to use the Theano library to train a network. We take a simple case: a dataset with four features. We compute the sum of these features after applying a certain weight (importance) to each feature.

The goal of the training is to modify the weights assigned to each feature so that the sum reaches a target value of 100.

sum = f1 * w1 + f2 * w2 + f3 * w3 + f4 * w4

Here f1, f2, ... are the feature values and w1, w2, ... are the weights.

Let us make the example concrete for a better understanding of the problem statement. We assume an initial value of 1.0 for each feature, and we take w1 = 0.1, w2 = 0.25, w3 = 0.15, and w4 = 0.3. There is no particular logic behind these weight values; they are just our intuition. Thus, the initial sum is as follows −

sum = 1.0 * 0.1 + 1.0 * 0.25 + 1.0 * 0.15 + 1.0 * 0.3

This sums to 0.8. We will keep modifying the weights so that this sum approaches 100; the current result of 0.8 is far from our target value of 100. In Machine Learning terms, we define the cost as the difference between the target value and the current output, typically squared so that it is never negative and larger errors are penalized more heavily. In each iteration we reduce this cost by calculating the gradients and updating our weights vector.
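
For the first training step the arithmetic can be written out explicitly. Writing t for the target value (100) and using the learning rate of 0.1 chosen later in this example, the output, cost, gradient, and weight update are:

$$y = \sum_{i=1}^{4} f_i w_i = 0.1 + 0.25 + 0.15 + 0.3 = 0.8$$

$$\text{cost} = (t - y)^2 = (100 - 0.8)^2 = 9840.64$$

$$\frac{\partial\,\text{cost}}{\partial w_i} = -2\,(t - y)\,f_i = -2 \times 99.2 \times 1.0 = -198.4$$

$$w_i \leftarrow w_i - 0.1 \times \frac{\partial\,\text{cost}}{\partial w_i} = w_i + 19.84$$

So w1 becomes 0.1 + 19.84 = 19.94, which matches the first line of modified weights printed by the full program in this chapter.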

Let us see how this entire logic is implemented in Theano.

Declaring Variables

We first declare our input vector x as follows −

```python
x = tensor.fvector('x')
```

Here x is a one-dimensional array of float values.

We define a scalar target variable as given below −

```python
target = tensor.fscalar('target')
```

Next, we create a weights tensor W with the initial values as discussed above −

```python
W = theano.shared(numpy.asarray([0.1, 0.25, 0.15, 0.3]), 'W')
```

Defining Theano Expression

We now calculate the output using the following expression −

```python
y = (x * W).sum()
```

Note that in the above statement x and W are vectors, not simple scalar variables: x * W multiplies them element by element, and sum() adds up the products. We now calculate the error (cost) with the following expression −

```python
cost = tensor.sqr(target - y)
```

The cost is the squared difference between the target value and the current output.

To calculate the gradient of the cost with respect to the weights, which tells us in which direction and how strongly to adjust each weight, we use the built-in grad function as follows −

```python
gradients = tensor.grad(cost, [W])
```

We now compute the new weights vector using a learning rate of 0.1 as follows −

```python
W_updated = W - (0.1 * gradients[0])
```

Next, we need to tell Theano to replace the value of W with these new values. We do this by listing (shared variable, new value) pairs in the following statement −

```python
updates = [(W, W_updated)]
```

Defining/Invoking Theano Function

Lastly, we define a Theano function that computes the sum y and applies the weight update each time it is called.

```python
f = theano.function([x, target], y, updates=updates)
```

To invoke the above function a certain number of times, we create a for loop as follows −

```python
for i in range(10):
    output = f([1.0, 1.0, 1.0, 1.0], 100.0)
```

The first argument to the function is the vector of feature values; we assign the value 1.0 to each feature without any specific reason. You may assign different values of your choice and check whether the training ultimately converges. Inside the loop, we also print the weight vector and the corresponding output in each iteration, as shown in the code below −

```python
for i in range(10):
    output = f([1.0, 1.0, 1.0, 1.0], 100.0)
    print("iteration: ", i)
    print("Modified Weights: ", W.get_value())
    print("Output: ", output)
```
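
Whether the loop converges for other feature values can be predicted with a short derivation, under the assumption (true in this example) that the same feature vector x and target t are used in every call. Let e = t - y be the error before an update. With learning rate η = 0.1, the gradient is -2ex, so the update adds 2ηe x to W, and therefore:

$$y' = (W + 2\eta e\,x)\cdot x = y + 2\eta e\,\lVert x\rVert^2$$

$$e' = t - y' = e\,\bigl(1 - 2\eta\,\lVert x\rVert^2\bigr)$$

With all four features equal to 1.0, $\lVert x\rVert^2 = 4$ and the factor is $1 - 0.2 \times 4 = 0.2$, so the error shrinks from 99.2 to 19.84 to 3.968, and so on. The error shrinks only when $\lvert 1 - 0.2\,\lVert x\rVert^2\rvert < 1$, that is $0 < \lVert x\rVert^2 < 10$; with every feature set to 2.0 ($\lVert x\rVert^2 = 16$), this fixed learning rate makes training diverge.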

Full Program Listing

The complete program listing is reproduced here for your quick reference −

```python
import numpy
import theano
from theano import tensor

x = tensor.fvector('x')
target = tensor.fscalar('target')
W = theano.shared(numpy.asarray([0.1, 0.25, 0.15, 0.3]), 'W')
print("Weights: ", W.get_value())

y = (x * W).sum()                     # weighted sum of the features
cost = tensor.sqr(target - y)         # squared error
gradients = tensor.grad(cost, [W])
W_updated = W - (0.1 * gradients[0])  # learning rate 0.1
updates = [(W, W_updated)]

f = theano.function([x, target], y, updates=updates)

for i in range(10):
    output = f([1.0, 1.0, 1.0, 1.0], 100.0)
    print("iteration: ", i)
    print("Modified Weights: ", W.get_value())
    print("Output: ", output)
```

When you run the program you will see the following output −

```
Weights: [0.1 0.25 0.15 0.3 ]
iteration: 0
Modified Weights: [19.94 20.09 19.99 20.14]
Output: 0.8
iteration: 1
Modified Weights: [23.908 24.058 23.958 24.108]
Output: 80.16000000000001
iteration: 2
Modified Weights: [24.7016 24.8516 24.7516 24.9016]
Output: 96.03200000000001
iteration: 3
Modified Weights: [24.86032 25.01032 24.91032 25.06032]
Output: 99.2064
iteration: 4
Modified Weights: [24.892064 25.042064 24.942064 25.092064]
Output: 99.84128
iteration: 5
Modified Weights: [24.8984128 25.0484128 24.9484128 25.0984128]
Output: 99.968256
iteration: 6
Modified Weights: [24.89968256 25.04968256 24.94968256 25.09968256]
Output: 99.9936512
iteration: 7
Modified Weights: [24.89993651 25.04993651 24.94993651 25.09993651]
Output: 99.99873024
iteration: 8
Modified Weights: [24.8999873 25.0499873 24.9499873 25.0999873]
Output: 99.99974604799999
iteration: 9
Modified Weights: [24.89999746 25.04999746 24.94999746 25.09999746]
Output: 99.99994920960002
```

Observe that the output reaches about 99.97 at iteration 5 and about 99.99 at iteration 6, which is close to our desired target of 100.0. Each printed output is computed with the weights from before that iteration's update, which is why iteration 0 still shows 0.8.

Depending on the desired accuracy, you may conclude that the network is trained after about 5 to 6 iterations. After the training completes, look up the weights vector; after iteration 5 it holds the following values −

```
iteration: 5
Modified Weights: [24.8984128 25.0484128 24.9484128 25.0984128]
```

You may now use these values in your network for deploying the model.

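
To check the iteration counts discussed above without Theano, the same update rule can be replayed in plain Python. `train` is a hypothetical helper written for this check: it uses the hand-derived gradient -2(t - y)f_i instead of tensor.grad, and plain 64-bit floats throughout, so its values should agree with the program's printed output up to floating-point rounding.

```python
def train(features, target, weights, rate=0.1, steps=10):
    """Gradient descent on cost = (target - sum(f * w))**2.

    Like the Theano function, each step records the output computed with the
    weights from before that step's update, followed by the updated weights.
    """
    w = list(weights)
    history = []
    for _ in range(steps):
        y = sum(f * wi for f, wi in zip(features, w))
        grad = [-2.0 * (target - y) * f for f in features]  # d(cost)/d(w_i)
        w = [wi - rate * g for wi, g in zip(w, grad)]
        history.append((y, w))
    return history


history = train([1.0, 1.0, 1.0, 1.0], 100.0, [0.1, 0.25, 0.15, 0.3])
for i, (output, w) in enumerate(history):
    print("iteration:", i, "output:", round(output, 6), "weights:", [round(v, 6) for v in w])
```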

Theano - Conclusion

Building Machine Learning models involves intensive and repetitive computations on tensors, which require substantial computing resources. Because a regular compiler optimizes only at a local level, it generally does not produce the fastest possible code for such computations.

Theano first builds a computational graph for the entire computation. Because the whole computation is available as a single graph during compilation, several optimization techniques can be applied before execution, and that is exactly what Theano does. It restructures the computational graph, converts parts of it into C, moves shared variables to the GPU, and so on, to generate very fast executable code. The compiled code is then executed through a Theano function, which acts as a hook for injecting the compiled code into the runtime. Theano has proved its worth and has been widely used in both academia and industry.

Translated from: https://www.tutorialspoint.com/theano/theano_quick_guide.htm
