1. Add the JAR files

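Environment preparation:

Hive relies on ZooKeeper for distributed coordination, on Hadoop's HDFS for file storage, and on MapReduce for data processing. The integration also needs a running HBase, so the test machine must have a working Hadoop + ZooKeeper + HBase + Hive environment.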

Copy the jar files from HBase's lib directory into Hive's lib directory:

cp /opt/soft/hbase235/lib/* /opt/soft/hive312/lib
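Because the jars now sit in Hive's lib directory, every entry of the hive.aux.jars.path property configured in the next section follows the same `file:///opt/soft/hive312/lib/<jar>` pattern. A minimal sketch of generating that comma-separated value instead of typing it by hand, for example after upgrading HBase. The class name `AuxJarsPath` is hypothetical; the directory and jar names are the ones used in this article.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: builds the comma-separated list of file:// URIs
// used as the value of hive.aux.jars.path.
final class AuxJarsPath {

    // Prefixes each jar name with "file://" plus the lib directory, then joins the entries with commas.
    static String build(String libDir, List<String> jarNames) {
        String dir = libDir.endsWith("/") ? libDir : libDir + "/";
        return jarNames.stream()
                .map(name -> "file://" + dir + name)
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Jar names taken from the hive-site.xml in this article; adjust them to your versions.
        List<String> jars = List.of(
                "hive-hbase-handler-3.1.2.jar", "zookeeper-3.4.6.jar",
                "hbase-client-2.3.5.jar", "hbase-common-2.3.5-tests.jar",
                "hbase-server-2.3.5.jar", "hbase-common-2.3.5.jar",
                "hbase-protocol-2.3.5.jar", "htrace-core-3.2.0-incubating.jar");
        System.out.println(build("/opt/soft/hive312/lib", jars));
    }
}
```

The printed string can be pasted into the `<value>` element. Generating the value this way keeps the directory prefix in one place.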

2. Modify the Hive configuration file

Add the following properties to hive-site.xml:

<property>
  <name>hive.zookeeper.quorum</name>
  <value>192.168.95.150</value>
</property>
<property>
  <name>hbase.zookeeper.quorum</name>
  <value>192.168.95.150</value>
</property>
<property>
  <name>hive.aux.jars.path</name>
  <value>file:///opt/soft/hive312/lib/hive-hbase-handler-3.1.2.jar,file:///opt/soft/hive312/lib/zookeeper-3.4.6.jar,file:///opt/soft/hive312/lib/hbase-client-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-common-2.3.5-tests.jar,file:///opt/soft/hive312/lib/hbase-server-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-common-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-protocol-2.3.5.jar,file:///opt/soft/hive312/lib/htrace-core-3.2.0-incubating.jar</value>
</property>

3. Create the mapped table

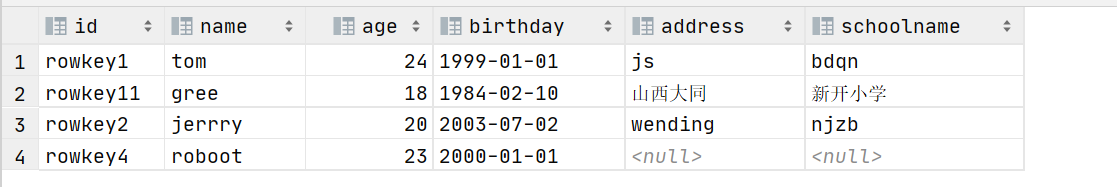

create external table student(
  id string,
  name string,
  age int,
  birthday string,
  address string,
  schoolname string
)
stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
with serdeproperties ("hbase.columns.mapping"=
  ":key,baseinfo:name,baseinfo:age,baseinfo:birthday,schoolinfo:address,schoolinfo:name")
tblproperties ("hbase.table.name"="bigdata:student");
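In the student mapping above, each Hive column is paired by position with one hbase.columns.mapping entry: `:key` is the HBase row key, and every other entry is `family:qualifier`. The Hive HBase integration documentation requires one entry per Hive column and exactly one `:key`, and a mismatch is a common cause of failed DDL. The following is a simplified, JDK-only sketch of those two checks. The class `ColumnMappingCheck` is hypothetical and does not reproduce Hive's own parser.

```java
import java.util.List;

// Simplified check of two rules documented for hbase.columns.mapping:
// one entry per Hive column (matched by position) and exactly one ":key" entry.
final class ColumnMappingCheck {

    // Returns a description of the first problem found, or null if the mapping passes.
    static String validate(List<String> hiveColumns, String mapping) {
        String[] entries = mapping.split(",");
        if (entries.length != hiveColumns.size()) {
            return hiveColumns.size() + " Hive columns but " + entries.length + " mapping entries";
        }
        int keyCount = 0;
        for (int i = 0; i < entries.length; i++) {
            // Drop an optional storage suffix such as "#b" before inspecting the entry.
            String entry = entries[i];
            int hash = entry.indexOf('#');
            if (hash >= 0) {
                entry = entry.substring(0, hash);
            }
            if (entry.equals(":key")) {
                keyCount++;
            } else if (!entry.contains(":")) {
                // Ordinary entries must be family:qualifier (or family: for a whole-family map column).
                return "column " + hiveColumns.get(i) + " has no column family in '" + entries[i] + "'";
            }
            // Other entries starting with ':' are special columns and are not checked here.
        }
        return keyCount == 1 ? null : "expected exactly one :key entry, found " + keyCount;
    }

    public static void main(String[] args) {
        List<String> columns = List.of("id", "name", "age", "birthday", "address", "schoolname");
        String mapping = ":key,baseinfo:name,baseinfo:age,baseinfo:birthday,schoolinfo:address,schoolinfo:name";
        String problem = validate(columns, mapping);
        System.out.println(problem == null ? "mapping OK" : problem); // prints "mapping OK"
    }
}
```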

4. Example

4.1 Start the services

# Start Hadoop
[root@hadoop ~]# start-all.sh
# Start ZooKeeper
[root@hadoop ~]# zkServer.sh start
# Start HBase
[root@hadoop ~]# start-hbase.sh
# Start Hive: the metastore service, then HiveServer2
[root@hadoop ~]# nohup hive --service metastore &
[root@hadoop ~]# nohup hive --service hiveserver2 &

4.2 Add the Maven dependencies

<dependency>
  <groupId>org.apache.hbase</groupId>
  <artifactId>hbase-client</artifactId>
  <version>2.3.5</version>
</dependency>
<dependency>
  <groupId>org.apache.hbase</groupId>
  <artifactId>hbase-server</artifactId>
  <version>2.3.5</version>
</dependency>

4.3 Initialization method

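// Imports used by the test methods in this section (JUnit 4 annotations)
import java.io.IOException;
import java.util.List;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HConstants;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.NamespaceDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

// Fields shared by the test methods
private Configuration config;
private Connection conn;
private Admin admin;

@Before
public void init() throws IOException {
    config = HBaseConfiguration.create();
    config.set(HConstants.HBASE_DIR, "hdfs://192.168.153.139:9000/hbase"); // hbase.rootdir
    config.set(HConstants.ZOOKEEPER_QUORUM, "192.168.153.139");            // hbase.zookeeper.quorum
    config.set(HConstants.CLIENT_PORT_STR, "2181");                        // hbase.zookeeper.property.clientPort
    conn = ConnectionFactory.createConnection(config);
    admin = conn.getAdmin();
}

4.4 Close method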

@After
public void close() throws IOException {
    if (admin != null) {
        admin.close();
    }
    if (conn != null) {
        conn.close();
    }
}

4.5 Create a namespace

@Test
public void createNameSpace() throws IOException {
    NamespaceDescriptor bigdata = NamespaceDescriptor.create("bigdata").build();
    admin.createNamespace(bigdata);
}

4.6 Create a table

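@Test
public void createTable() throws IOException {
    TableName tableName = TableName.valueOf("bigdata:car");
    // HTableDescriptor/HColumnDescriptor still work in HBase 2.x but are deprecated
    // in favor of TableDescriptorBuilder and ColumnFamilyDescriptorBuilder
    HTableDescriptor desc = new HTableDescriptor(tableName);
    // Three column families: car attributes, brand information, sales information
    HColumnDescriptor family1 = new HColumnDescriptor("carinfo");
    HColumnDescriptor family2 = new HColumnDescriptor("brandinfo");
    HColumnDescriptor family3 = new HColumnDescriptor("saleinfo");
    desc.addFamily(family1);
    desc.addFamily(family2);
    desc.addFamily(family3);
    admin.createTable(desc);
}

4.7 View the table structure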

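@Test
public void getAllNamespace() throws IOException {
    // Prints the descriptor (column families and their settings) of every table in the bigdata namespace
    List<TableDescriptor> tableDesc = admin.listTableDescriptorsByNamespace(Bytes.toBytes("bigdata"));
    System.out.println(tableDesc.toString());
}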
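The namespace created in 4.5, the name passed to `TableName.valueOf("bigdata:car")` in 4.6, the namespace listed here, and the `hbase.table.name` property in the Hive DDL all follow the same `namespace:qualifier` convention. A name without a colon lives in HBase's `default` namespace. A JDK-only sketch of how such a name splits. The class `QualifiedTableName` is hypothetical and, unlike `TableName.valueOf`, it does not validate the characters in either part.

```java
// Hypothetical value class showing how a "namespace:qualifier" HBase table name splits.
final class QualifiedTableName {
    final String namespace;
    final String qualifier;

    private QualifiedTableName(String namespace, String qualifier) {
        this.namespace = namespace;
        this.qualifier = qualifier;
    }

    // "bigdata:car" -> namespace "bigdata", qualifier "car"; "car" -> namespace "default".
    static QualifiedTableName parse(String name) {
        int colon = name.indexOf(':');
        if (colon < 0) {
            return new QualifiedTableName("default", name);
        }
        return new QualifiedTableName(name.substring(0, colon), name.substring(colon + 1));
    }

    public static void main(String[] args) {
        QualifiedTableName t = parse("bigdata:car");
        System.out.println(t.namespace + " / " + t.qualifier); // prints "bigdata / car"
    }
}
```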

4.8 Insert data

@Test
public void insertData() throws IOException {
    // Table is Closeable; try-with-resources releases it when the method ends
    try (Table table = conn.getTable(TableName.valueOf("bigdata:car"))) {
        // Bytes.toBytes(String) always encodes as UTF-8, whereas String.getBytes() uses the
        // platform default charset and may not match Bytes.toString() for values such as "宝马"
        Put car1 = new Put(Bytes.toBytes("car1"));
        car1.addColumn(Bytes.toBytes("carinfo"), Bytes.toBytes("weight"), Bytes.toBytes("700kg"));
        car1.addColumn(Bytes.toBytes("carinfo"), Bytes.toBytes("height"), Bytes.toBytes("2.4m"));
        car1.addColumn(Bytes.toBytes("carinfo"), Bytes.toBytes("broad"), Bytes.toBytes("1.2m"));
        car1.addColumn(Bytes.toBytes("brandinfo"), Bytes.toBytes("name"), Bytes.toBytes("宝马"));
        car1.addColumn(Bytes.toBytes("brandinfo"), Bytes.toBytes("series"), Bytes.toBytes("x5"));
        car1.addColumn(Bytes.toBytes("saleinfo"), Bytes.toBytes("money"), Bytes.toBytes("520000"));
        car1.addColumn(Bytes.toBytes("saleinfo"), Bytes.toBytes("time"), Bytes.toBytes("2023-03-08"));

        Put car2 = new Put(Bytes.toBytes("car2"));
        car2.addColumn(Bytes.toBytes("carinfo"), Bytes.toBytes("weight"), Bytes.toBytes("900kg"));
        car2.addColumn(Bytes.toBytes("carinfo"), Bytes.toBytes("height"), Bytes.toBytes("2.3m"));
        car2.addColumn(Bytes.toBytes("carinfo"), Bytes.toBytes("broad"), Bytes.toBytes("1.4m"));
        car2.addColumn(Bytes.toBytes("brandinfo"), Bytes.toBytes("name"), Bytes.toBytes("宝马"));
        car2.addColumn(Bytes.toBytes("brandinfo"), Bytes.toBytes("series"), Bytes.toBytes("华晨"));
        car2.addColumn(Bytes.toBytes("saleinfo"), Bytes.toBytes("money"), Bytes.toBytes("250000"));
        car2.addColumn(Bytes.toBytes("saleinfo"), Bytes.toBytes("time"), Bytes.toBytes("2023-03-06"));

        table.put(car1);
        table.put(car2);
    }
}

4.9 Query data

@Test
public void queryData() throws IOException {
    try (Table table = conn.getTable(TableName.valueOf("bigdata:car"))) {
        Get car1 = new Get(Bytes.toBytes("car1"));
        Result result = table.get(car1);
        // getValue returns null if the row has no cell for that family:qualifier
        byte[] value11 = result.getValue(Bytes.toBytes("carinfo"), Bytes.toBytes("weight"));
        System.out.println("Weight: " + Bytes.toString(value11));
        byte[] value12 = result.getValue(Bytes.toBytes("carinfo"), Bytes.toBytes("height"));
        System.out.println("Height: " + Bytes.toString(value12));
        byte[] value13 = result.getValue(Bytes.toBytes("carinfo"), Bytes.toBytes("broad"));
        System.out.println("Width: " + Bytes.toString(value13));
        // The brand is stored under brandinfo:name
        byte[] value21 = result.getValue(Bytes.toBytes("brandinfo"), Bytes.toBytes("name"));
        System.out.println("Brand: " + Bytes.toString(value21));
        byte[] value22 = result.getValue(Bytes.toBytes("brandinfo"), Bytes.toBytes("series"));
        System.out.println("Series: " + Bytes.toString(value22));
        // The price is stored under saleinfo:money
        byte[] value31 = result.getValue(Bytes.toBytes("saleinfo"), Bytes.toBytes("money"));
        System.out.println("Price: " + Bytes.toString(value31));
        byte[] value32 = result.getValue(Bytes.toBytes("saleinfo"), Bytes.toBytes("time"));
        System.out.println("Sale date: " + Bytes.toString(value32));
    }
}

4.10 Scan the whole table

@Test
public void scanData() throws IOException {
    // An empty Scan reads every row of the table; both the Table and the ResultScanner are closed afterwards
    try (Table table = conn.getTable(TableName.valueOf("bigdata:car"));
         ResultScanner scanner = table.getScanner(new Scan())) {
        for (Result rs : scanner) {
            byte[] value = rs.getValue(Bytes.toBytes("carinfo"), Bytes.toBytes("weight"));
            System.out.println("Weight: " + Bytes.toString(value));
        }
    }
}

4.11 Delete the table

@Test

public void deleteTable() throws IOException {

// 先禁用

admin.disableTable(TableName.valueOf("bigdata:car"));

// 再删除

admin.deleteTable(TableName.valueOf("bigdata:car"));

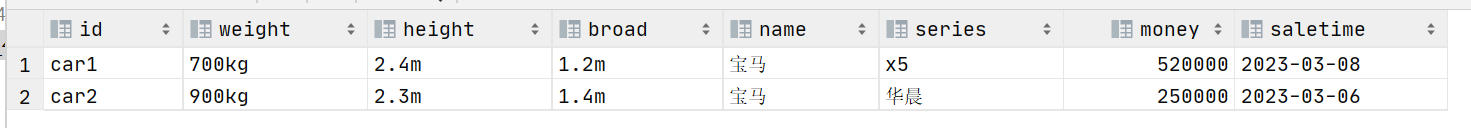

}4.12 使用dataGrip连接hive查看数据

-- Create the external table
create external table car(
  id string,
  weight string,
  height string,
  broad string,
  name string,
  series string,
  money int,
  saletime string
)
stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
with serdeproperties ("hbase.columns.mapping"=":key,carinfo:weight,carinfo:height,carinfo:broad,brandinfo:name,brandinfo:series,saleinfo:money,saleinfo:time")
tblproperties ("hbase.table.name"="bigdata:car");
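The `money int` column above works because 4.8 stores the price as the text "520000". With the storage handler's default string storage, Hive should parse that text into the int column, and a cell that does not parse shows up as NULL. If a program instead wrote the number in binary with `Bytes.toBytes(520000)`, which produces 4 big-endian bytes, the mapping entry would need the `#b` suffix (`saleinfo:money#b`). The JDK-only sketch below compares the two byte layouts. `ByteBuffer` stands in for HBase's `Bytes`, the class name `MoneyEncoding` is hypothetical, and the two `readAs...` methods are simplified models of the two mapping modes, not Hive code.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Compares the two ways an int price can be stored in an HBase cell.
final class MoneyEncoding {

    // Text form, like the "520000" cell written in 4.8: the digits encoded as UTF-8.
    static byte[] asText(int value) {
        return Integer.toString(value).getBytes(StandardCharsets.UTF_8);
    }

    // Binary form: 4 bytes, big-endian (ByteBuffer's default byte order, same layout as Bytes.toBytes(int)).
    static byte[] asBinary(int value) {
        return ByteBuffer.allocate(Integer.BYTES).putInt(value).array();
    }

    // Simplified model of a string-storage mapping: parse the text, or null if it is not a number.
    static Integer readAsText(byte[] cell) {
        try {
            return Integer.valueOf(new String(cell, StandardCharsets.UTF_8));
        } catch (NumberFormatException e) {
            return null;
        }
    }

    // Simplified model of a "#b" mapping: read the first 4 bytes as a big-endian int.
    static int readAsBinary(byte[] cell) {
        return ByteBuffer.wrap(cell).getInt();
    }

    public static void main(String[] args) {
        System.out.println(readAsText(asText(520000)));     // prints 520000
        System.out.println(readAsText(asBinary(520000)));   // prints null: binary bytes are not digits
        System.out.println(readAsBinary(asBinary(520000))); // prints 520000
    }
}
```

The second line of output shows why a binary value read with the default string mapping comes back empty. The storage format chosen when writing has to match the mapping used in Hive.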

-- Query

select * from car;
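The full-table scan in 4.10 returns rows in row-key order, and this query typically does as well, because HBase keeps rows sorted by the unsigned, byte-by-byte order of the row key. That order is lexicographic, not numeric. A hypothetical key car10 would therefore sort between car1 and car2, while fixed-width keys such as car01, car02, car10 keep their numeric order. A JDK-only sketch of that comparison follows. It uses `Arrays.compareUnsigned` (Java 9+) in place of HBase's own byte comparator, and the class `RowKeyOrder` is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sorts row keys the way HBase orders rows: by their bytes, compared unsigned and
// lexicographically, with a shorter key sorting before any longer key it prefixes.
final class RowKeyOrder {

    static List<String> sortLikeHBase(List<String> rowKeys) {
        List<String> sorted = new ArrayList<>(rowKeys);
        sorted.sort((a, b) -> Arrays.compareUnsigned(
                a.getBytes(StandardCharsets.UTF_8), b.getBytes(StandardCharsets.UTF_8)));
        return sorted;
    }

    public static void main(String[] args) {
        // "car10" is a hypothetical extra key used only to show the ordering.
        System.out.println(sortLikeHBase(List.of("car2", "car10", "car1"))); // prints [car1, car10, car2]
    }
}
```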
