1. Problem

    int avcodec_encode_video2(AVCodecContext *avctx,
                              AVPacket *avpkt,
                              const AVFrame *frame,
                              int *got_packet_ptr);

    int avcodec_decode_video2(AVCodecContext *avctx,
                              AVFrame *picture,
                              int *got_picture_ptr,
                              const AVPacket *avpkt);

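From the FFmpeg documentation of avcodec_decode_video2: "Decode the video frame of size avpkt->size from avpkt->data into picture. Some decoders may support multiple frames in a single AVPacket, such decoders would then just decode the first frame."

From the documentation of avcodec_encode_video2: "Takes input raw video data from frame and writes the next output packet, if available, to avpkt. The output packet does not necessarily contain data for the most recent frame, as encoders can delay and reorder input frames internally as needed."

The consequence is that if each frame is passed through these functions exactly once and the program then stops, the last few frames never reach the output: they are still buffered inside the codec.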
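The reordering mentioned in that last sentence is easiest to see with B-frames. The toy sketch below is plain C, not FFmpeg or x264 code, and `coding_order` is a hypothetical helper. It converts a display-order pattern of frame types into coding order for a simple GOP without B-pyramids. Each B-frame refers to the next I- or P-frame, so the encoder has to hold the B-frames back until that anchor has arrived and been coded first.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy model, not FFmpeg/x264 code: convert display-order frame types
 * ('I', 'P', 'B') into coding order for a simple GOP without B-pyramids.
 * A B-frame references the next I/P frame, so that anchor is coded first
 * and the pending B-frames are held back until it arrives.
 * Writes display indices into out[] and returns how many were written. */
size_t coding_order(const char *types, size_t *out)
{
    assert(types != NULL && out != NULL);
    size_t n = strlen(types);
    size_t written = 0;
    size_t pending_start = 0; /* display index of first held B-frame */
    size_t pending = 0;       /* number of B-frames held back */

    for (size_t i = 0; i < n; i++) {
        if (types[i] == 'B') {
            if (pending == 0)
                pending_start = i;
            pending++;
            continue;
        }
        /* I or P anchor: code it first, then release the held B-frames. */
        out[written++] = i;
        for (size_t k = 0; k < pending; k++)
            out[written++] = pending_start + k;
        pending = 0;
    }
    /* B-frames left without a following anchor are simply emitted at the
     * end here; this stands in for the end-of-stream flush. */
    for (size_t k = 0; k < pending; k++)
        out[written++] = pending_start + k;
    return written;
}
```

For "IBBPBBP" the result is 0, 3, 1, 2, 6, 4, 5: when frame 1 is submitted nothing can be produced for it yet, which is one reason got_packet_ptr can stay 0 after a call.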

2. Cause

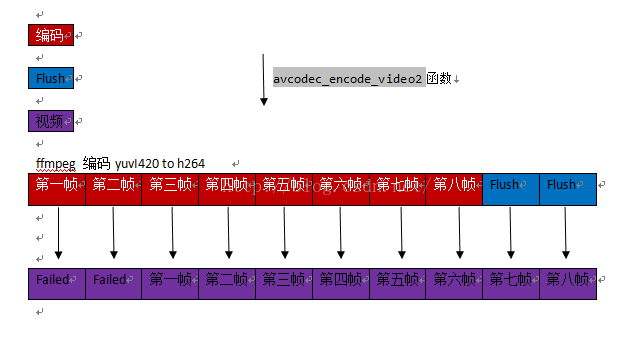

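Taking encoding as an example, the cause of the dropped frames is shown in the figure above.

This is called encoding delay. It has two sources: computational delay and buffering delay. Because of it, avcodec_encode_video2 sets *got_packet_ptr to 0 for the first few input frames, and those frames stay inside the encoder until later calls, or a final flush, push them out.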
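The loss itself can be modelled with another toy sketch. It assumes a fixed delay of `delay` frames, which is a simplification of what a real encoder does; `packets_received` is a hypothetical helper, and its `flush` flag stands in for calling avcodec_encode_video2 with a NULL frame at the end.

```c
#include <assert.h>

/* Toy model of encoder delay (not FFmpeg code): the encoder behaves like
 * a FIFO that holds `delay` frames before it starts producing packets.
 * Returns how many packets the caller receives for `n_frames` input
 * frames, with or without a final flush. */
int packets_received(int n_frames, int delay, int flush)
{
    assert(n_frames >= 0 && delay >= 0);
    int buffered = 0; /* frames currently held inside the encoder */
    int received = 0; /* packets handed back to the caller */

    /* Normal encoding: one call per input frame. */
    for (int i = 0; i < n_frames; i++) {
        buffered++;
        if (buffered > delay) { /* got_packet == 1 */
            buffered--;
            received++;
        }
        /* otherwise got_packet == 0: nothing comes out yet */
    }
    /* Flush: keep calling with a NULL frame until no packet comes out. */
    if (flush) {
        while (buffered > 0) {
            buffered--;
            received++;
        }
    }
    return received;
}
```

With delay = 3, ten input frames produce only seven packets unless the encoder is flushed; with the flush, all ten come out.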

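So, in x264, the encoder delay in frames is computed roughly as follows (excerpt; the original expression continues with further terms):

    h->frames.i_delay =
        param->i_sync_lookahead +      // frames of lookahead buffering
        max(param->i_bframe,           // number of B-frames
            param->rc.i_lookahead) +   // rate-control lookahead frames
        ...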

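This gap between submitting a frame and receiving its packet can be reduced to 0 through the encoder parameters, by selecting the "zerolatency" tune. Among other things, that tune sets i_bframe, rc.i_lookahead and i_sync_lookahead to 0, which removes the terms above.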

3. Solution

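Because of this encoding delay, the encoder must be flushed after all YUV data has been encoded: call avcodec_encode_video2 with a NULL frame repeatedly until it stops returning packets, as the function below does for encoders that declare CODEC_CAP_DELAY.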

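    #include <stdio.h>
    #include <libavcodec/avcodec.h>
    #include <libavformat/avformat.h>

    /* Drain the frames buffered inside a delayed encoder (legacy
     * avcodec_encode_video2 API) and write them to the output file.
     * Call once after the last real frame, before av_write_trailer(). */
    int vflush_encoder(AVFormatContext *fmt_ctx, unsigned int stream_index) {
        AVCodecContext *enc_ctx = fmt_ctx->streams[stream_index]->codec;
        int ret = 0;
        int got_packet;
        AVPacket enc_pkt;

        /* Encoders without delay have nothing buffered to flush. */
        if (!(enc_ctx->codec->capabilities & CODEC_CAP_DELAY))
            return 0;

        while (1) {
            /* Reset the packet each pass; data = NULL and size = 0 let
             * the encoder allocate the output buffer itself. */
            av_init_packet(&enc_pkt);
            enc_pkt.data = NULL;
            enc_pkt.size = 0;

            /* A NULL frame asks the encoder for its buffered packets. */
            ret = avcodec_encode_video2(enc_ctx, &enc_pkt, NULL, &got_packet);
            if (ret < 0)
                break;
            if (!got_packet) {   /* encoder fully drained */
                ret = 0;
                break;
            }

            /* Tag the packet with its stream. Its timestamps must be in the
             * same units the main encoding loop writes; rescale with
             * av_packet_rescale_ts() here if that loop does. */
            enc_pkt.stream_index = stream_index;

            printf("Flush Encoder: Succeed to encode 1 frame!\tsize:%5d\n",
                   enc_pkt.size);
            ret = av_write_frame(fmt_ctx, &enc_pkt);
            av_free_packet(&enc_pkt);   /* av_write_frame does not take ownership */
            if (ret < 0)
                break;
        }
        return ret;
    }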

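This article examines the key functions avcodec_encode_video2 and avcodec_decode_video2 used in audio/video encoding and decoding, explains why frames can be lost during encoding and decoding, and gives concrete ways to reduce the delay.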

