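Introduction

Learning big data starts with deployment. In the earlier posts of this series (大数据私房菜) we deployed with CDH, a Hadoop distribution whose web-based installer simplifies component setup: Cloudera Manager (CM) alone is enough to install a complete big data platform. This time we do a fully distributed installation from the native Apache releases.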

1. Deployment plan

As usual, we start by planning the cluster. The virtual machines run CentOS 7 on VirtualBox. The cluster has three nodes: one master and two slaves.

| Hostname | IP address | Role | OS |
|---|---|---|---|
| master | 192.168.0.240 | master | CentOS 7 |
| slave1 | 192.168.0.241 | slave | CentOS 7 |
| slave2 | 192.168.0.242 | slave | CentOS 7 |

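In VirtualBox, create one VM of type Other Linux (64-bit) to serve as master; the data nodes are obtained later by cloning it.

The component versions are listed below. All packages can be downloaded from an Apache mirror such as:

Beijing Institute of Technology mirror: http://mirror.bit.edu.cn/apache/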

| Component | Package version | Path | Install mode |
|---|---|---|---|
| Java | jdk-8u212 | /opt/java/jdk1.8 | all nodes |
| Scala | scala-2.11.12 | /opt/scala/scala2.11 | all nodes |
| Hadoop | hadoop-2.7.7 | /opt/hadoop/hadoop2.7 | all nodes |
| HBase | hbase-1.1.5 | /opt/hbase/hbase1.1.5 | all nodes |
| Spark | spark-2.4.5-bin-hadoop2.7 | /opt/spark/spark2.4.5 | all nodes |
| ZooKeeper | zookeeper-3.4.10 | /opt/zookeeper/zookeeper3.4 | all nodes |
| Hive | hive-1.2.2 | /opt/hive/hive1.2.2 | single node |
| MySQL | mysql-5.7.26 | /opt/mysql/mysql-5.7.26 | single node |

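2. Component requirements

- JDK: runtime dependency of Hadoop and Spark; following the official documentation we use JDK 1.8.

- Scala: required by Spark. Spark 1.6 is built against Scala 2.10, while Spark 2.x needs Scala 2.11 or later.

- Hadoop: fully distributed setup with HDFS and MapReduce, with YARN for resource scheduling.

- Spark: engine for processing big data held in distributed storage.

- ZooKeeper: coordination service for distributed applications; the HBase cluster depends on it.

- HBase: distributed column-oriented database.

- Hive: data warehouse built on top of Hadoop.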

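3. Environment preparation

3.1 Install the virtual machine

See the CentOS 7 installation guide for details: https://blog.csdn.net/depa2018/article/details/106962948

A minimal install lacks vim and net-tools, so install them once the VM is up: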

yum -y install vim*

yum -y install net-tools

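This gives you vim and the ifconfig command; MySQL also depends on net-tools.

3.2 Environment variables

Configure all environment variables up front by appending the following to /etc/profile: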

#Java Config

export JAVA_HOME=/opt/java/jdk1.8

export JRE_HOME=/opt/java/jdk1.8/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

# Scala Config

export SCALA_HOME=/opt/scala/scala2.11

# Spark Config

export SPARK_HOME=/opt/spark/spark2.4.5

# Zookeeper Config

export ZK_HOME=/opt/zookeeper/zookeeper3.4

# HBase Config

export HBASE_HOME=/opt/hbase/hbase1.1.5

# Hadoop Config

export HADOOP_HOME=/opt/hadoop/hadoop2.7

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"

# Hive Config

export HIVE_HOME=/opt/hive/hive1.2.2

export HIVE_CONF_DIR=${HIVE_HOME}/conf

export PATH=.:${JAVA_HOME}/bin:${SCALA_HOME}/bin:${SPARK_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${ZK_HOME}/bin:${HBASE_HOME}/bin:${HIVE_HOME}/bin:$PATH

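After saving, run source /etc/profile to apply the changes.

3.3 JDK setup

# List any JDK packages that are already installed

rpm -qa | grep java

# Remove the preinstalled JDK packages

yum remove java*

# Create the target directory and unpack the JDK archive

mkdir -p /opt/java

tar -xzvf jdk-8u212-linux-x64.tar.gz -C /opt/java/

cd /opt/java/

mv jdk1.8.0_212 jdk1.8

# Verify that Java is installed

java -version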

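3.4 Scala setup

# Create the target directory and unpack the Scala archive

mkdir -p /opt/scala

tar -xzvf scala-2.11.12.tgz -C /opt/scala/

cd /opt/scala/

mv scala-2.11.12 scala2.11

# Verify that Scala is installed

scala -version

3.5 Configure hosts

Append the following to /etc/hosts: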

192.168.0.240 master

192.168.0.241 slave1

192.168.0.242 slave2
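If you rerun the setup, appending by hand can leave duplicate entries. The following is a minimal sketch, not part of the original steps, of an idempotent append; the function name add_host_entry is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file
# only when the hostname is not mapped there yet.
# Usage: add_host_entry <ip> <hostname> [hosts_file]
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for the hostname as a whole word on a non-comment line.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        echo "skip: ${name} already present in ${file}"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: ${ip} ${name}"
    fi
}

# Example calls for this cluster (run as root on every node):
# add_host_entry 192.168.0.240 master
# add_host_entry 192.168.0.241 slave1
# add_host_entry 192.168.0.242 slave2
```

Running the three calls twice leaves /etc/hosts unchanged the second time.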

3.6 Disable the firewall

# Stop the firewall

systemctl stop firewalld

# Keep the firewall from starting at boot

systemctl disable firewalld

3.7 Disable SELinux

vim /etc/selinux/config

# Change SELINUX=enforcing to SELINUX=disabled; the new setting applies after a reboot
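The same change can be made without opening an editor. This is a small sketch using sed; the function name disable_selinux_in is an assumption introduced here, and it only touches the SELINUX= line, not SELINUXTYPE=.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Usage: disable_selinux_in [config_file]
disable_selinux_in() {
    local file="${1:-/etc/selinux/config}"
    # "^SELINUX=" does not match "SELINUXTYPE=", so that line is left alone.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Example (as root):
# disable_selinux_in
# setenforce 0    # switch to permissive mode right away, until the next reboot
```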

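3.8 Clone the virtual machine

Clone the prepared master VM into two data-node VMs. Using VirtualBox as the example:

Right-click the prepared master VM and choose Clone.

Change the name, and set the MAC address policy to generate new MAC addresses for all network adapters.

Choose Full clone.

VirtualBox finishes the copy after a short wait. Repeat to create slave1 and slave2.

On each cloned VM, change the static IP and the hostname:

# Change the hostname by editing /etc/hostname

# e.g. change master to slave1

vim /etc/hostname

# Change the static IP by editing the interface configuration file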

vim /etc/sysconfig/network-scripts/ifcfg-enp0s3
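The post does not show the contents of the interface file. As a rough sketch, a static configuration for slave1 might look like the following; the netmask, gateway, and DNS values are assumptions for a typical 192.168.0.0/24 home network, so replace them with your own.

```shell
# /etc/sysconfig/network-scripts/ifcfg-enp0s3 on slave1 (illustrative values)
TYPE=Ethernet
# Use a fixed address instead of DHCP
BOOTPROTO=static
NAME=enp0s3
DEVICE=enp0s3
# Bring the interface up at boot
ONBOOT=yes
# 192.168.0.242 on slave2
IPADDR=192.168.0.241
# Assumed /24 network
NETMASK=255.255.255.0
# Assumed gateway and DNS server; use the values of your network
GATEWAY=192.168.0.1
DNS1=192.168.0.1
```

After saving, systemctl restart network (or a reboot) applies the new address on CentOS 7.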

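3.9 Passwordless SSH login

Generate a key pair on every node, pressing Enter at each prompt:

# Run on every node; creates the public key file id_rsa.pub

ssh-keygen -t rsa

On the master node:

# Append master's own public key to authorized_keys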

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

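# Restrict the permissions; key-based login does not work without this

chmod 600 ~/.ssh/authorized_keys

# Append the slaves' public keys to authorized_keys on master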

ssh slave1 cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

ssh slave2 cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

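# Check that all trusted hosts were added

cat /root/.ssh/authorized_keys

/root/.ssh/authorized_keys should now contain the public keys of all three hosts.

Distribute it to slave1 and slave2 with scp:

# Copy authorized_keys to the other nodes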

scp /root/.ssh/authorized_keys root@slave1:/root/.ssh/authorized_keys

scp /root/.ssh/authorized_keys root@slave2:/root/.ssh/authorized_keys

Finally, ssh between the nodes in every direction to confirm that no password is requested.
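The manual check can also be scripted. Below is a minimal sketch (the function name check_ssh is made up here) that relies on the standard OpenSSH options BatchMode and ConnectTimeout, so a host that still wants a password is reported as failed instead of prompting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether each host accepts key-based login.
# Usage: check_ssh host1 [host2 ...]
check_ssh() {
    local host
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
        fi
    done
}

# Example: run on each of the three nodes
# check_ssh master slave1 slave2
```

A host that has never been contacted still needs its host key accepted once, so log in to it by hand first.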

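4. Hadoop installation and configuration

4.1 Unpack Hadoop

# Create the target directory and unpack Hadoop

mkdir -p /opt/hadoop

tar -xzvf hadoop-2.7.7.tar.gz -C /opt/hadoop/

cd /opt/hadoop/

mv hadoop-2.7.7 hadoop2.7

Hadoop's main configuration files are listed below; the descriptions follow Hadoop: The Definitive Guide.

The official documentation also describes them: http://hadoop.apache.org/docs/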

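| File | Format | Description |
|---|---|---|
| hadoop-env.sh | Bash script | Environment variables used by the scripts that run Hadoop |
| core-site.xml | Hadoop configuration XML | Settings for Hadoop Core |
| hdfs-site.xml | Hadoop configuration XML | Settings for the HDFS daemons |
| mapred-site.xml | Hadoop configuration XML | Settings for the MapReduce daemons |
| masters | Plain text | Machines that run a secondary namenode |
| slaves | Plain text | Machines that run a datanode |
| hadoop-metrics.properties | Java properties | Controls how metrics are published on Hadoop |
| log4j.properties | Java properties | Properties for the system log files and the namenode audit log |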

4.2 hadoop-env.sh

Find export JAVA_HOME=${JAVA_HOME} and replace the variable reference with the absolute path.

export JAVA_HOME=${JAVA_HOME} # change this line to

export JAVA_HOME=/opt/java/jdk1.8

4.3 core-site.xml

Edit Hadoop's core configuration file:

cd /opt/hadoop/hadoop2.7/etc/hadoop

vim core-site.xml

Add the following inside the <configuration></configuration> element:

<property>

<name>hadoop.tmp.dir</name>

<value>file:/root/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

4.4 hdfs-site.xml

Edit hdfs-site.xml to set the replication factor and the HDFS storage paths:

cd /opt/hadoop/hadoop2.7/etc/hadoop

vim hdfs-site.xml

Add the following inside the <configuration></configuration> element:

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/root/hadoop/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/root/hadoop/data</value>

</property>

4.5 mapred-site.xml

Copy mapred-site.xml.template to mapred-site.xml:

cp mapred-site.xml.template mapred-site.xml

Edit mapred-site.xml.

Add the following inside the <configuration></configuration> element so that MapReduce runs on YARN:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

4.6 yarn-site.xml

Edit yarn-site.xml to configure YARN resource scheduling for the cluster:

cd /opt/hadoop/hadoop2.7/etc/hadoop

vim yarn-site.xml

Add the following inside the <configuration></configuration> element:

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<description>The address of the scheduler interface.</description>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<description>The http address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<description>The https address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<description>The address of the RM admin interface.</description>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>8182</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

4.7 slaves

Edit the slaves file to list the slave nodes:

cd /opt/hadoop/hadoop2.7/etc/hadoop

vim slaves

## Add the slave nodes to the file:

slave1

slave2

4.8 Distribute Hadoop

Copy the configured Hadoop directory to the slave nodes:

scp -r /opt/hadoop root@slave1:/opt

scp -r /opt/hadoop root@slave2:/opt

4.9 Start Hadoop

4.9.1 Format the namenode

hdfs namenode -format ## format the namenode

The following INFO line indicates that the namenode was initialized successfully:

INFO common.Storage: Storage directory /root/hadoop/name has been successfully formatted.

4.9.2 Start Hadoop

# Run on the namenode (master)

start-all.sh

On the first start, ssh asks "Are you sure you want to continue connecting (yes/no)?"; answer yes.

Run jps on each node to list the running processes:

## master

[root@master name]# jps

4705 NameNode

5043 ResourceManager

5303 Jps

4892 SecondaryNameNode

## slave1

[root@slave1 hadoop]# jps

2162 DataNode

2387 Jps

2265 NodeManager

## slave2

[root@slave2 hadoop]# jps

2150 DataNode

2376 Jps

2253 NodeManager

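On master we see the NameNode and SecondaryNameNode daemons and YARN's ResourceManager, while the slave nodes run the DataNode and NodeManager processes.

Hadoop ships with a web UI that shows the basic state of the cluster, and YARN has its own web UI for inspecting jobs. Both are reachable in a browser at the following addresses: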

# Yarn

http://192.168.0.240:8088/

# Dfs

http://192.168.0.240:50070/

Open either page to inspect the cluster.
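Besides the web pages, a quick command-line check confirms that HDFS and YARN actually work. The following sketch wraps three standard commands in a function (the name hadoop_smoke_test is made up here); the example jar path assumes the hadoop-2.7.7 layout used in this post.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test for the Hadoop cluster; run on master
# after start-all.sh has finished.
hadoop_smoke_test() {
    # DataNodes the NameNode currently sees (2 are expected in this layout).
    hdfs dfsadmin -report | grep 'Live datanodes'
    # NodeManagers registered with the ResourceManager.
    yarn node -list
    # Bundled pi estimator: 2 map tasks, 10 samples per map.
    hadoop jar /opt/hadoop/hadoop2.7/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar pi 2 10
}

# Example:
# hadoop_smoke_test
```

If the pi job completes, HDFS, YARN, and MapReduce are wired together correctly.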

5. Spark installation and configuration

5.1 Unpack Spark

# Create the target directory and unpack Spark

mkdir -p /opt/spark

tar -xzvf spark-2.4.5-bin-hadoop2.7.tgz -C /opt/spark/

cd /opt/spark/

mv spark-2.4.5-bin-hadoop2.7 spark2.4.5

5.2 Edit spark-env.sh

# Go to Spark's configuration directory

cd $SPARK_HOME/conf

# Copy the environment template to spark-env.sh

cp spark-env.sh.template spark-env.sh

# Edit spark-env.sh

vim spark-env.sh

Add the following to spark-env.sh: the Scala, JDK, and Hadoop paths, the master host (master), and 4G of executor memory. Then save and exit.

export SCALA_HOME=/opt/scala/scala2.11

export JAVA_HOME=/opt/java/jdk1.8

export HADOOP_HOME=/opt/hadoop/hadoop2.7

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export SPARK_HOME=/opt/spark/spark2.4.5

export SPARK_MASTER_HOST=master

export SPARK_EXECUTOR_MEMORY=4G

5.3 Edit slaves

As with Hadoop, list the worker nodes in the slaves file:

# Copy slaves.template in the conf directory to slaves

cp slaves.template slaves

Comment out localhost and append the slave nodes at the end of the file:

# localhost

slave1

slave2

5.4 Distribute Spark

Copy the configured Spark directory to each slave node:

scp -r /opt/spark root@slave1:/opt

scp -r /opt/spark root@slave2:/opt

5.5 Start Spark

Hadoop must be running first; it was configured above and started with start-all.sh. Before starting Spark, look at the scripts in Spark's sbin directory:

[root@master conf]# ll /opt/spark/spark2.4.5/sbin/

total 92

-rwxr-xr-x 1 1000 1000 2803 Feb 3 03:47 slaves.sh

-rwxr-xr-x 1 1000 1000 1429 Feb 3 03:47 spark-config.sh

-rwxr-xr-x 1 1000 1000 5689 Feb 3 03:47 spark-daemon.sh

-rwxr-xr-x 1 1000 1000 1262 Feb 3 03:47 spark-daemons.sh

-rwxr-xr-x 1 1000 1000 1190 Feb 3 03:47 start-all.sh

-rwxr-xr-x 1 1000 1000 1274 Feb 3 03:47 start-history-server.sh

-rwxr-xr-x 1 1000 1000 2050 Feb 3 03:47 start-master.sh

-rwxr-xr-x 1 1000 1000 1877 Feb 3 03:47 start-mesos-dispatcher.sh

-rwxr-xr-x 1 1000 1000 1423 Feb 3 03:47 start-mesos-shuffle-service.sh

-rwxr-xr-x 1 1000 1000 1279 Feb 3 03:47 start-shuffle-service.sh

-rwxr-xr-x 1 1000 1000 3151 Feb 3 03:47 start-slave.sh

-rwxr-xr-x 1 1000 1000 1527 Feb 3 03:47 start-slaves.sh

-rwxr-xr-x 1 1000 1000 1857 Feb 3 03:47 start-thriftserver.sh

-rwxr-xr-x 1 1000 1000 1478 Feb 3 03:47 stop-all.sh

-rwxr-xr-x 1 1000 1000 1056 Feb 3 03:47 stop-history-server.sh

-rwxr-xr-x 1 1000 1000 1080 Feb 3 03:47 stop-master.sh

-rwxr-xr-x 1 1000 1000 1227 Feb 3 03:47 stop-mesos-dispatcher.sh

-rwxr-xr-x 1 1000 1000 1084 Feb 3 03:47 stop-mesos-shuffle-service.sh

-rwxr-xr-x 1 1000 1000 1067 Feb 3 03:47 stop-shuffle-service.sh

-rwxr-xr-x 1 1000 1000 1557 Feb 3 03:47 stop-slave.sh

-rwxr-xr-x 1 1000 1000 1064 Feb 3 03:47 stop-slaves.sh

-rwxr-xr-x 1 1000 1000 1066 Feb 3 03:47 stop-thriftserver.sh

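Spark's start-all.sh and stop-all.sh have the same names as Hadoop's scripts, so to avoid confusion we define aliases for the common cluster commands.

vim ~/.bashrc # add the aliases below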

alias starthadoop='/opt/hadoop/hadoop2.7/sbin/start-all.sh'

alias stophadoop='/opt/hadoop/hadoop2.7/sbin/stop-all.sh'

alias formathadoop='/opt/hadoop/hadoop2.7/bin/hdfs namenode -format'

alias startdfs='/opt/hadoop/hadoop2.7/sbin/start-dfs.sh'

alias stopdfs='/opt/hadoop/hadoop2.7/sbin/stop-dfs.sh'

alias startyarn='/opt/hadoop/hadoop2.7/sbin/start-yarn.sh'

alias stopyarn='/opt/hadoop/hadoop2.7/sbin/stop-yarn.sh'

alias starthbase='/opt/hbase/hbase1.1.5/bin/start-hbase.sh'

alias stophbase='/opt/hbase/hbase1.1.5/bin/stop-hbase.sh'

alias startzk='/opt/zookeeper/zookeeper3.4/bin/zkServer.sh start'

alias stopzk='/opt/zookeeper/zookeeper3.4/bin/zkServer.sh stop'

alias statuszk='/opt/zookeeper/zookeeper3.4/bin/zkServer.sh status'

alias startsp='/opt/spark/spark2.4.5/sbin/start-all.sh'

alias stopsp='/opt/spark/spark2.4.5/sbin/stop-all.sh'

Once the aliases are loaded, startsp starts Spark. Without the aliases, call the script by its full path:

/opt/spark/spark2.4.5/sbin/start-all.sh

If no errors are printed, Spark has started:

[root@master spark2.4.5]# /opt/spark/spark2.4.5/sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/spark/spark2.4.5/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out

slave2: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/spark2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-slave2.out

slave1: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/spark2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-slave1.out

The Spark status page is available in a browser at the master's IP address on port 8080.
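To confirm that the workers accept jobs, you can submit the SparkPi example that ships with the binary distribution. This is a sketch under the assumption that the standalone master listens on its default port 7077; the function name run_spark_pi is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: submit the bundled SparkPi example to the standalone master.
# Usage: run_spark_pi [slices]
run_spark_pi() {
    local home="${SPARK_HOME:-/opt/spark/spark2.4.5}"
    # The glob picks up the examples jar shipped under examples/jars.
    "$home"/bin/spark-submit \
        --master spark://master:7077 \
        --class org.apache.spark.examples.SparkPi \
        "$home"/examples/jars/spark-examples_*.jar "${1:-10}"
}

# Example:
# run_spark_pi 10
```

The finished application should then show up under Completed Applications on the status page.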

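6. ZooKeeper installation and configuration

ZooKeeper is an open-source coordination service for distributed applications, an open-source implementation of Google's Chubby, and an important component for Hadoop and HBase. It provides consistency services for distributed applications, including configuration maintenance, naming, distributed synchronization, and group services.

6.1 Unpack ZooKeeper

# Create the target directory and unpack ZooKeeper

mkdir -p /opt/zookeeper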

tar -xzvf zookeeper-3.4.10.tar.gz -C /opt/zookeeper/

cd /opt/zookeeper/

mv zookeeper-3.4.10 zookeeper3.4

6.2 Create the ZooKeeper data directories

# Create the ZooKeeper data and log directories on every node

mkdir -p /root/zookeeper/data

mkdir -p /root/zookeeper/logs

6.3 Configure myid

# Create a myid file in /root/zookeeper/data on each node

# master is 1, slave1 is 2, slave2 is 3

cd /root/zookeeper/data

# master

echo 1 > myid

# slave1

echo 2 > myid

# slave2

echo 3 > myid
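Since the id depends only on the hostname, the same snippet can be run unchanged on every node. A minimal sketch, with the made-up function name myid_for and the hostname-to-id mapping from this post:

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a hostname of this cluster to its ZooKeeper myid.
# Usage: myid_for <hostname>
myid_for() {
    case "$1" in
        master) echo 1 ;;
        slave1) echo 2 ;;
        slave2) echo 3 ;;
        *) echo "unknown host: $1" >&2; return 1 ;;
    esac
}

# Example (on each node):
# myid_for "$(hostname)" > /root/zookeeper/data/myid
```

The ids must match the server.N lines in zoo.cfg.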

6.4 Edit zoo.cfg

ZooKeeper's main configuration file is zoo.cfg; create it by copying zoo_sample.cfg:

cd /opt/zookeeper/zookeeper3.4/conf

cp zoo_sample.cfg zoo.cfg

Add the following. server.1 is the node whose myid is 1, i.e. master; the remaining settings can keep their defaults.

dataDir=/root/zookeeper/data

dataLogDir=/root/zookeeper/logs

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888

6.5 Distribute ZooKeeper

Copy ZooKeeper to the slave nodes with scp:

scp -r /opt/zookeeper root@slave1:/opt

scp -r /opt/zookeeper root@slave2:/opt

6.6 Start ZooKeeper

Before starting ZooKeeper, stop Spark and then Hadoop:

/opt/spark/spark2.4.5/sbin/stop-all.sh

/opt/hadoop/hadoop2.7/sbin/stop-all.sh

Then start ZooKeeper on every node:

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh start

# Or use the alias defined earlier

startzk

Check the state with /opt/zookeeper/zookeeper3.4/bin/zkServer.sh status or the statuszk alias. In the output below, master and slave2 are followers and slave1 is the leader:

[root@master conf]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Mode: follower

[root@slave1 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Mode: leader

[root@slave2 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Mode: follower

Then start Hadoop and Spark again.
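Checking the mode node by node gets tedious. As a sketch, the status call can be run over ssh from master; the function name zk_modes is made up here, and /etc/profile is sourced first because a non-interactive ssh session does not load it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ZooKeeper mode reported by each host.
# Assumes passwordless ssh as root and the install path used in this post.
# Usage: zk_modes host1 [host2 ...]
zk_modes() {
    local host mode
    for host in "$@"; do
        mode=$(ssh "root@${host}" \
            "source /etc/profile; /opt/zookeeper/zookeeper3.4/bin/zkServer.sh status" \
            2>/dev/null | awk '/^Mode:/ {print $2}')
        # An empty result means no "Mode:" line was returned.
        echo "${host}: ${mode:-not running}"
    done
}

# Example:
# zk_modes master slave1 slave2
```

A healthy three-node ensemble reports exactly one leader and two followers.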

7. HBase installation and configuration

7.1 Unpack HBase

# Create the target directory and unpack HBase

mkdir -p /opt/hbase

tar -xzvf hbase-1.1.5-bin.tar.gz -C /opt/hbase/

cd /opt/hbase/

mv hbase-1.1.5 hbase1.1.5

7.2 Edit hbase-env.sh

HBase keeps its configuration files in /opt/hbase/hbase1.1.5/conf. hbase-env.sh holds HBase's environment variables; add the following:

export JAVA_HOME=/opt/java/jdk1.8

export HADOOP_HOME=/opt/hadoop/hadoop2.7

export HBASE_HOME=/opt/hbase/hbase1.1.5

export HBASE_CLASSPATH=/opt/hadoop/hadoop2.7/etc/hadoop

export HBASE_PID_DIR=/root/hbase/pids

export HBASE_MANAGES_ZK=false

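Besides the JDK and Hadoop paths, HBASE_MANAGES_ZK=false tells HBase not to start its bundled ZooKeeper and to use the cluster's ZooKeeper ensemble instead.

7.3 Edit hbase-site.xml

Edit HBase's main configuration file, hbase-site.xml.

Add the following inside the <configuration></configuration> element: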

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<!-- ZooKeeper client port -->

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<!-- ZooKeeper session timeout in milliseconds -->

<property>

<name>zookeeper.session.timeout</name>

<value>120000</value>

</property>

<!-- Tolerate clock skew between servers (milliseconds) -->

<property>

<name>hbase.master.maxclockskew</name>

<value>150000</value>

</property>

<!-- Hosts of the ZooKeeper quorum -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,slave1,slave2</value>

</property>

<!-- Temporary directory -->

<property>

<name>hbase.tmp.dir</name>

<value>/root/hbase/tmp</value>

</property>

<!-- true means fully distributed mode -->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- Address of the HBase master -->

<property>

<name>hbase.master</name>

<value>master:60000</value>

</property>

7.4 Edit regionservers

List the region server nodes. Here slave1 and slave2 act as region servers and master runs the HMaster.

In the regionservers file, delete localhost and add:

slave1

slave2

7.5 Distribute HBase

Copy the configured HBase directory to each slave node with scp:

scp -r /opt/hbase root@slave1:/opt

scp -r /opt/hbase root@slave2:/opt

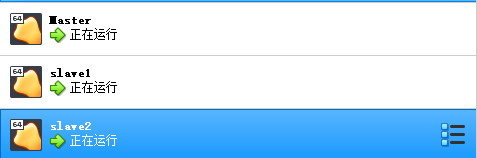

7.6 Start HBase

Start the HBase cluster with /opt/hbase/hbase1.1.5/bin/start-hbase.sh or the starthbase alias. Make sure ZooKeeper and Hadoop are already running.

[root@master conf]# starthbase

starting master, logging to /opt/hbase/hbase1.1.5/logs/hbase-root-master-master.out

Java HotSpot™ 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot™ 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

slave1: starting regionserver, logging to /opt/hbase/hbase1.1.5/logs/hbase-root-regionserver-slave1.out

slave2: starting regionserver, logging to /opt/hbase/hbase1.1.5/logs/hbase-root-regionserver-slave2.out

slave2: Java HotSpot™ 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

slave2: Java HotSpot™ 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

slave1: Java HotSpot™ 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

slave1: Java HotSpot™ 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Run jps on each node to check that the HBase daemons are up:

[root@master conf]# jps

5490 NameNode

5318 QuorumPeerMain

6662 Jps

5832 ResourceManager

6412 HMaster

6109 Master

5679 SecondaryNameNode

[root@slave1 ~]# jps

5104 Jps

4881 HRegionServer

4786 Worker

4438 QuorumPeerMain

4632 NodeManager

4525 DataNode

[root@slave2 ~]# jps

4624 NodeManager

5089 Jps

4436 QuorumPeerMain

4517 DataNode

4778 Worker

4877 HRegionServer

The jps output shows that master has started HMaster and that the slave nodes have started HRegionServer.

The HBase status page is available in a browser at the master's IP address on port 16010.
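A short round trip through the HBase shell verifies that the master and region servers can create, write, and read a table. The following is a sketch; the function name hbase_smoke_test and the table name smoke_test are made up, and the table is dropped at the end.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test: feed a few commands to the HBase shell on stdin.
hbase_smoke_test() {
    hbase shell <<'EOF'
create 'smoke_test', 'cf'
put 'smoke_test', 'row1', 'cf:a', 'value1'
scan 'smoke_test'
disable 'smoke_test'
drop 'smoke_test'
EOF
}

# Example (on master, with HBase running):
# hbase_smoke_test
```

The scan step should print one row, row1, with the column cf:a.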

8. MySQL installation

8.1 Remove the bundled MariaDB

# List the installed MariaDB packages

rpm -qa|grep mariadb

# Remove MariaDB

rpm -e --nodeps mariadb-libs-5.5.64-1.el7.x86_64

8.2 Install MySQL from RPMs

# Unpack the MySQL bundle

tar -xvf mysql-5.7.26-1.el7.x86_64.rpm-bundle.tar

Install the extracted packages with rpm in the following order:

rpm -ivh mysql-community-common-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-compat-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-devel-5.7.26-1.el7.x86_64.rpm

rpm -ivh mysql-community-server-5.7.26-1.el7.x86_64.rpm

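8.3 MySQL configuration

# Initialize MySQL; the data directory is owned by the mysql user

mysqld --initialize --user=mysql

# Look up the random password generated during initialization

cat /var/log/mysqld.log

The log looks like this: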

[root@master mysql-5.7]# cat /var/log/mysqld.log

2020-06-26T06:28:36.768304Z 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use --explicit_defaults_for_timestamp server option (see documentation for more details).

2020-06-26T06:28:38.086643Z 0 [Warning] InnoDB: New log files created, LSN=45790

2020-06-26T06:28:38.208614Z 0 [Warning] InnoDB: Creating foreign key constraint system tables.

2020-06-26T06:28:38.265686Z 0 [Warning] No existing UUID has been found, so we assume that this is the first time that this server has been started. Generating a new UUID: 452a78ff-b776-11ea-8c58-08002788f808.

2020-06-26T06:28:38.266618Z 0 [Warning] Gtid table is not ready to be used. Table ‘mysql.gtid_executed’ cannot be opened.

2020-06-26T06:28:38.267012Z 1 [Note] A temporary password is generated for root@localhost: rRCV27G7MI:u

Change the MySQL root password:

# Start the MySQL service

systemctl start mysqld.service

# Restart mysqld

systemctl restart mysqld

# Log in with the temporary password and change the root password

mysql -u root -p

ALTER USER 'root'@'localhost' IDENTIFIED BY 'toor';

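8.4 Other settings

Check MySQL's character sets; if the server character set is not UTF-8, change it:

SHOW VARIABLES like 'character%';

To change the character set:

# Edit the /etc/my.cnf configuration file

vim /etc/my.cnf

# Add this setting under [mysqld]

character-set-server=utf8

# Restart MySQL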

service mysqld restart

8.5 Metastore database

| Service | Database | User | Password |
|---|---|---|---|
| Hive Metastore Server | metastore | hive | hive |

Create the database and grant access with:

create database metastore default character set utf8 default collate utf8_general_ci;

grant all on metastore.* to 'hive'@'%' identified by 'hive';

Verify the database with show databases;

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| metastore |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

This completes the MySQL configuration.

9. Hive installation and configuration

9.1 Unpack Hive

# Unpack Hive

tar -zxvf apache-hive-1.2.2-bin.tar.gz -C /opt/hive/

cd /opt/hive/

mv apache-hive-1.2.2-bin hive1.2.2

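9.2 Install the MySQL JDBC driver

# Unpack the JDBC driver archive

tar -xzvf mysql-connector-java-5.1.47.tar.gz

# Copy the driver jar from the extracted directory into Hive's lib directory

cp mysql-connector-java-5.1.47/mysql-connector-java-5.1.47-bin.jar /opt/hive/hive1.2.2/lib/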

9.3 Create the directories Hive needs

Create the local directories:

mkdir -p /root/hive/warehouse

mkdir /opt/hive/tmp

chmod 777 /opt/hive/tmp

Create the directories in HDFS:

hadoop fs -mkdir -p /root/hive/warehouse

hadoop fs -mkdir -p /root/hive/

# Grant permissions

hadoop fs -chmod 777 /root/hive/

hadoop fs -chmod 777 /root/hive/warehouse

9.4 Edit hive-env.sh

Go to Hive's conf directory and copy hive-env.sh.template to hive-env.sh:

cd /opt/hive/hive1.2.2/conf/

cp hive-env.sh.template hive-env.sh

vim hive-env.sh

Add the following:

export HADOOP_HOME=/opt/hadoop/hadoop2.7

export HIVE_CONF_DIR=/opt/hive/hive1.2.2/conf

export HIVE_AUX_JARS_PATH=/opt/hive/hive1.2.2/lib

9.5 Edit hive-site.xml

Copy the configuration template:

cd /opt/hive/hive1.2.2/conf/

cp hive-default.xml.template hive-site.xml

Edit hive-site.xml and change the properties shown below inside the <configuration></configuration> element.

Also replace the following placeholders throughout hive-site.xml:

${system:java.io.tmpdir} -> /opt/hive/tmp

${system:user.name} -> root
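The template contains these placeholders in many places, so a scripted replacement is less error-prone than editing by hand. A minimal sketch with sed follows; the function name fix_hive_site is made up, and the default path is the one used in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the two ${system:...} placeholders in hive-site.xml.
# Usage: fix_hive_site [path/to/hive-site.xml]
fix_hive_site() {
    local file="${1:-/opt/hive/hive1.2.2/conf/hive-site.xml}"
    # "\$" matches a literal dollar sign; "#" is used as the sed delimiter
    # because the replacement contains slashes.
    sed -i \
        -e 's#\${system:java\.io\.tmpdir}#/opt/hive/tmp#g' \
        -e 's#\${system:user\.name}#root#g' \
        "$file"
}

# Example:
# fix_hive_site
# grep -c 'system:' /opt/hive/hive1.2.2/conf/hive-site.xml   # count lines that still contain "system:"
```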

<!-- Hive warehouse location in HDFS -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/root/hive/warehouse</value>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/root/hive</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value></value>

</property>

<!-- MySQL connection URL; the ampersand must be escaped as &amp; inside XML -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://master:3306/metastore?createDatabaseIfNotExist=true&amp;useSSL=false</value>

</property>

<!-- JDBC driver class -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<!-- Metastore database user -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<!-- Metastore database password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

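9.6 Start Hive

Type hive on the command line to start it.

The first start reports a Spark-related error:

ls: cannot access /opt/spark/spark2.4.5/lib/spark-assembly-*.jar: No such file or directory

The cause is that Spark 2.x no longer ships lib/spark-assembly-*.jar; its jars now live in the jars directory.

To fix it:

cd /opt/hive/hive1.2.2/bin

vim hive

# Find this line

sparkAssemblyPath=`ls ${SPARK_HOME}/lib/spark-assembly-*.jar`

# and change it to

sparkAssemblyPath=`ls ${SPARK_HOME}/jars/*.jar`

After this change Hive starts normally: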

[root@master conf]# hive

Logging initialized using configuration in jar:file:/opt/hive/hive1.2.2/lib/hive-common-1.2.2.jar!/hive-log4j.properties

hive> show databases;

OK

default

Time taken: 1.188 seconds, Fetched: 1 row(s)

hive> show tables;

OK

Time taken: 0.055 seconds

hive>
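For a check that goes one step beyond show tables, you can create and drop a throwaway table non-interactively with hive -e, which exercises the MySQL metastore connection. This is only a sketch; the function name hive_smoke_test and the table name smoke_test are made up.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test: create, list, and drop a table through the Hive CLI.
hive_smoke_test() {
    hive -e "CREATE TABLE IF NOT EXISTS smoke_test (id INT, name STRING); SHOW TABLES; DROP TABLE smoke_test;"
}

# Example (on master, with Hadoop and MySQL running):
# hive_smoke_test
```

If the table appears in the SHOW TABLES output, Hive can write to both the metastore and the warehouse directory.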

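10. One-command cluster start and stop

The cluster includes ZooKeeper, Hadoop, HBase, Spark, and Hive, and typing every start and stop command by hand is tedious, so here are two shell scripts that start and stop everything at once: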

10.1 start-cluster.sh

#!/bin/sh

echo -e "========Start The Cluster========"

# start zookeeper on master

echo -e "Starting zookeeper on master Now !!!"

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh start

echo -e "\n"

sleep 5s

# start zookeeper on slave1

echo -e "Starting zookeeper on slave1 Now !!!"

ssh root@slave1 << remotessh

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh start

echo -e "\n"

sleep 5s

exit

remotessh

# start zookeeper on slave2

echo -e "Starting zookeeper on slave2 Now !!! "

ssh root@slave2 << remotessh

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh start

echo -e "\n"

sleep 5s

exit

remotessh

# start hadoop cluster

echo -e "Starting Hadoop Now !!!"

/opt/hadoop/hadoop2.7/sbin/start-all.sh

sleep 10s

# start hbase cluster

echo -e "Starting hbase cluster Now !!! "

/opt/hbase/hbase1.1.5/bin/start-hbase.sh

echo -e "\n"

sleep 10s

# start spark cluster

echo -e "Starting spark cluster Now !!! "

/opt/spark/spark2.4.5/sbin/start-all.sh

echo -e "\n"

sleep 10s

echo -e "The Result Of The Command \"jps\" :"

for node in master slave1 slave2

do

echo "----------------$node------------------------"

ssh $node "source /etc/profile; jps;"

done

Starting the cluster with one command looks like this:

[root@master ~]# startcluster

========Start The Cluster========

Starting zookeeper on master Now !!!

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Starting zookeeper on slave1 Now !!!

Pseudo-terminal will not be allocated because stdin is not a terminal.

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Starting zookeeper on slave2 Now !!!

Pseudo-terminal will not be allocated because stdin is not a terminal.

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Starting Hadoop Now !!!

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /opt/hadoop/hadoop2.7/logs/hadoop-root-namenode-master.out

slave1: starting datanode, logging to /opt/hadoop/hadoop2.7/logs/hadoop-root-datanode-slave1.out

slave2: starting datanode, logging to /opt/hadoop/hadoop2.7/logs/hadoop-root-datanode-slave2.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /opt/hadoop/hadoop2.7/logs/hadoop-root-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop2.7/logs/yarn-root-resourcemanager-master.out

slave2: starting nodemanager, logging to /opt/hadoop/hadoop2.7/logs/yarn-root-nodemanager-slave2.out

slave1: starting nodemanager, logging to /opt/hadoop/hadoop2.7/logs/yarn-root-nodemanager-slave1.out

Starting hbase cluster Now !!!

starting master, logging to /opt/hbase/hbase1.1.5/logs/hbase-root-master-master.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

slave1: starting regionserver, logging to /opt/hbase/hbase1.1.5/logs/hbase-root-regionserver-slave1.out

slave2: starting regionserver, logging to /opt/hbase/hbase1.1.5/logs/hbase-root-regionserver-slave2.out

Starting spark cluster Now !!!

starting org.apache.spark.deploy.master.Master, logging to /opt/spark/spark2.4.5/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out

slave1: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/spark2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-slave1.out

slave2: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/spark2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-slave2.out

The Result Of The Command "jps" :

----------------master------------------------

7440 NameNode

7635 SecondaryNameNode

4484 RunJar

8168 HMaster

4346 RunJar

8364 Master

7293 QuorumPeerMain

7789 ResourceManager

8573 Jps

5998 Jps

----------------slave1------------------------

3605 NodeManager

3766 HRegionServer

3497 DataNode

4059 Jps

3436 QuorumPeerMain

3935 Worker

----------------slave2------------------------

3488 QuorumPeerMain

3651 NodeManager

3812 HRegionServer

3543 DataNode

4122 Jps

3982 Worker

10.2 stop-cluster.sh

#!/bin/sh

echo -e "========Stop The Cluster========"

# stop hadoop cluster

echo -e "Stoping Hadoop Now !!!"

/opt/hadoop/hadoop2.7/sbin/stop-all.sh

sleep 10s

# stop hbase cluster

echo -e "Stoping hbase cluster Now !!! "

/opt/hbase/hbase1.1.5/bin/stop-hbase.sh

echo -e "\n"

sleep 15s

# stop spark cluster

echo -e "Stoping spark cluster Now !!! "

/opt/spark/spark2.4.5/sbin/stop-all.sh

echo -e "\n"

sleep 10s

# stop zookeeper on master

echo -e "Stoping zookeeper on master Now !!!"

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh stop

echo -e "\n"

sleep 5s

# stop zookeeper on slave1

echo -e "Stoping zookeeper on slave1 Now !!!"

ssh root@slave1 << remotessh

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh stop

echo -e "\n"

sleep 5s

exit

remotessh

# stop zookeeper on slave2

echo -e "Stoping zookeeper on slave2 Now !!! "

ssh root@slave2 << remotessh

/opt/zookeeper/zookeeper3.4/bin/zkServer.sh stop

echo -e "\n"

sleep 5s

exit

remotessh

echo -e "The Result Of The Command \"jps\" :"

for node in master slave1 slave2

do

echo "----------------$node------------------------"

ssh $node "source /etc/profile; jps;"

done

Stopping the cluster with one command looks like this:

[root@master ~]# stopcluster

========Stop The Cluster========

Stoping Hadoop Now !!!

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [master]

master: stopping namenode

slave2: stopping datanode

slave1: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

slave1: stopping nodemanager

slave2: stopping nodemanager

no proxyserver to stop

Stoping hbase cluster Now !!!

stopping hbase....................

Stoping spark cluster Now !!!

slave2: stopping org.apache.spark.deploy.worker.Worker

slave1: stopping org.apache.spark.deploy.worker.Worker

stopping org.apache.spark.deploy.master.Master

Stoping zookeeper on master Now !!!

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

Stoping zookeeper on slave1 Now !!!

Pseudo-terminal will not be allocated because stdin is not a terminal.

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

Stoping zookeeper on slave2 Now !!!

Pseudo-terminal will not be allocated because stdin is not a terminal.

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/zookeeper3.4/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

The Result Of The Command "jps" :

----------------master------------------------

4484 RunJar

4346 RunJar

9274 Jps

5998 Jps

----------------slave1------------------------

4264 Jps

----------------slave2------------------------

4340 Jps

[root@master ~]#

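10.3 Aliases for starting and stopping the cluster

After saving the two scripts (and making them executable with chmod +x), append the following to ~/.bashrc: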

alias startcluster='/root/start-cluster.sh'

alias stopcluster='/root/stop-cluster.sh'

After saving, run source ~/.bashrc; from then on startcluster starts the cluster and stopcluster shuts it down.
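Both scripts repeat nearly the same ssh block once per node. As an optional variation, not the scripts used above, the ZooKeeper part can be collapsed into a loop. In this sketch the function name zk_on_all and the DRY_RUN and ZK_PAUSE variables are made up; setting DRY_RUN prints the ssh commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run "zkServer.sh <action>" on every node over ssh.
# Usage: zk_on_all start|stop|status
zk_on_all() {
    local action="$1" host
    for host in master slave1 slave2; do
        echo "zookeeper ${action} on ${host}"
        # With DRY_RUN set, "echo" is prepended and the command is only printed.
        ${DRY_RUN:+echo} ssh "root@${host}" \
            "source /etc/profile; /opt/zookeeper/zookeeper3.4/bin/zkServer.sh ${action}"
        # Same pause as in the scripts above; override with ZK_PAUSE.
        sleep "${ZK_PAUSE:-5}"
    done
}

# Example:
# DRY_RUN=1 ZK_PAUSE=0 zk_on_all start   # preview the commands
# zk_on_all start                        # run them
```

Going through ssh for master as well keeps the loop uniform; it works because master's own key was added to authorized_keys in section 3.9.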
