1. Install the JDK

- Configure the environment variables:
  - `JAVA_HOME`: `D:\java\jdk1.8.0_31`
  - `PATH` (append): `%JAVA_HOME%\bin;%JAVA_HOME%\jre\bin`
  - `CLASSPATH`: `.;%JAVA_HOME%\lib;%JAVA_HOME%\lib\tools.jar`

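Note: if possible, install the JDK into a custom directory. The default location, Program Files, contains a space in its path, which can cause errors when certain commands are run later.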
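The note about spaces can be checked before moving on. Below is a minimal Scala sketch, assuming only that `JAVA_HOME` is read from the process environment; `CheckJavaHome` and `checkHome` are hypothetical names written for this illustration, not part of any tool in this guide.

```scala
// Minimal sketch: check that an install-path environment variable is set and
// that its value contains no space (the Program Files problem noted above).
// `checkHome` is a hypothetical helper written for this illustration.
object CheckJavaHome {
  def checkHome(name: String, value: Option[String]): Either[String, String] =
    value match {
      case None                       => Left(s"$name is not set")
      case Some(v) if v.contains(" ") => Left(s"$name contains a space: $v")
      case Some(v)                    => Right(v)
    }

  def main(args: Array[String]): Unit =
    checkHome("JAVA_HOME", sys.env.get("JAVA_HOME")) match {
      case Left(problem) => println(s"WARNING: $problem")
      case Right(path)   => println(s"JAVA_HOME looks fine: $path")
    }
}
```

The same helper can be pointed at `HADOOP_HOME`, `SCALA_HOME` or `SPARK_HOME` once those variables are configured.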

2. Install Hadoop

- Download: http://archive.apache.org/dist/hadoop/core/
- Configure the environment variables:
  - `HADOOP_HOME`: `D:\java\hadoop-2.8.4`
  - `PATH` (append): `%HADOOP_HOME%\bin`

For details, see: https://blog.csdn.net/luanpeng825485697/article/details/79420532
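Why `HADOOP_HOME` matters on Windows: as far as I understand Hadoop 2.x's `org.apache.hadoop.util.Shell`, it looks for `winutils.exe` under `<hadoop home>\bin`, taking the home directory from the JVM system property `hadoop.home.dir` first and falling back to the `HADOOP_HOME` environment variable. The sketch below mimics that lookup order under that assumption; `resolveHadoopHome` and `winutilsPath` are hypothetical helpers, not Hadoop APIs.

```scala
import java.nio.file.{Path, Paths}

// Sketch of the assumed lookup order: the system property "hadoop.home.dir"
// wins, otherwise the HADOOP_HOME environment variable is used.
// `resolveHadoopHome` and `winutilsPath` are hypothetical helpers.
object HadoopHomeLookup {
  def resolveHadoopHome(props: Map[String, String],
                        env: Map[String, String]): Option[String] =
    props.get("hadoop.home.dir").orElse(env.get("HADOOP_HOME"))

  // Location where winutils.exe is expected: <home>\bin\winutils.exe
  def winutilsPath(home: String): Path = Paths.get(home, "bin", "winutils.exe")

  def main(args: Array[String]): Unit =
    resolveHadoopHome(sys.props.toMap, sys.env) match {
      case Some(home) =>
        val p = winutilsPath(home)
        println(s"Hadoop home: $home; winutils present: ${p.toFile.exists}")
      case None =>
        println("Neither hadoop.home.dir nor HADOOP_HOME is set")
    }
}
```

Because the system property takes precedence in this model, calling `System.setProperty("hadoop.home.dir", "D:\\java\\hadoop-2.8.4")` at the start of a program is a common workaround when the environment variable cannot be changed.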

3. Install Scala

- Download: http://www.scala-lang.org/download/all.html

  Version 2.11.8 is used here.
- Configure the environment variables:

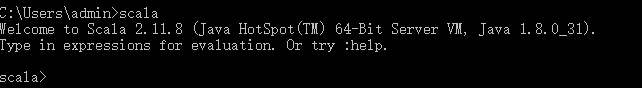

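  - `SCALA_HOME`: `D:\java\scala-2.11.8`
  - `PATH` (append): `%SCALA_HOME%\bin`
- Verify the installation:

  In a cmd window, type `scala` and press Enter. If the installation succeeded, the Scala REPL starts, as shown in the figure below.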
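The version can also be confirmed from code, which helps later when matching it against the Scala version Spark was built with. `scala.util.Properties.versionNumberString` is part of the Scala standard library; the object name and the `majorMinor` helper are illustrative only.

```scala
// Print the Scala version of the running library, e.g. "2.11.8".
object ScalaVersionCheck {
  // Hypothetical helper: keep only "major.minor" (e.g. "2.11"), the binary
  // version that libraries such as Spark's jars are compiled against.
  def majorMinor(version: String): String =
    version.split('.').take(2).mkString(".")

  def main(args: Array[String]): Unit = {
    val v = scala.util.Properties.versionNumberString
    println(s"Scala $v (binary version ${majorMinor(v)})")
  }
}
```

Inside the REPL itself, evaluating `util.Properties.versionNumberString` gives the same information.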

4. Install Spark

- Download: http://spark.apache.org/downloads.html
- Configure the environment variables:
  - `SPARK_HOME`: `D:\java\spark-2.1.0-bin-hadoop2.7`
  - `PATH` (append): `%SPARK_HOME%\bin`
- Run `spark-shell` in cmd.

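- A `/tmp/hive` folder is created automatically at the root of the drive from which the command is run, for example:
  - run from `C:\Users\admin>`, it is created as `C:/tmp/hive`
  - run from `D:\java\spark-2.1.0-bin-hadoop2.7\bin>`, it is created as `D:/tmp/hive`
- A `metastore_db` folder is created automatically in the current directory from which the command is run, for example:
  - run from `C:\Users\admin>`, it is created as `C:\Users\admin\metastore_db`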


- Exception:

      Exception in thread "main" java.lang.reflect.InvocationTargetException
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:258)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:359)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:263)
        at org.apache.spark.sql.hive.HiveSharedState.metadataHive$lzycompute(HiveSharedState.scala:39)
        at org.apache.spark.sql.hive.HiveSharedState.metadataHive(HiveSharedState.scala:38)
        at org.apache.spark.sql.hive.HiveSharedState.externalCatalog$lzycompute(HiveSharedState.scala:46)
        at org.apache.spark.sql.hive.HiveSharedState.externalCatalog(HiveSharedState.scala:45)
        at org.apache.spark.sql.hive.HiveSessionState.catalog$lzycompute(HiveSessionState.scala:50)
        at org.apache.spark.sql.hive.HiveSessionState.catalog(HiveSessionState.scala:48)
        at org.apache.spark.sql.hive.HiveSessionState$$anon$1.<init>(HiveSessionState.scala:63)
        at org.apache.spark.sql.hive.HiveSessionState.analyzer$lzycompute(HiveSessionState.scala:63)
        at org.apache.spark.sql.hive.HiveSessionState.analyzer(HiveSessionState.scala:62)
        at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:49)
        at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:64)
        at org.apache.spark.sql.SparkSession.createDataFrame(SparkSession.scala:542)
        at org.apache.spark.sql.SparkSession.createDataFrame(SparkSession.scala:302)
        at com.dtxy.xbdp.widget.HiveOutputWidget$.main(HiveOutputWidget.scala:101)
        at com.dtxy.xbdp.widget.HiveOutputWidget.main(HiveOutputWidget.scala)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at com.intellij.rt.execution.application.AppMain.main(AppMain.java:147)
      Caused by: java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)
        at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:171)
        ... 27 more
      Caused by: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
        at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:612)
        at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:554)
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:508)
        ... 28 more

- Fix 1: download the winutils build that matches your Hadoop version and copy it into the hadoop/bin directory.

- Fix 2: `The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rw-rw-rw-` (on Windows)

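  This is a permission problem on `/tmp/hive`. Run the following commands (`\tmp\hive` is resolved against the current drive, so run them from the drive where the folder was created):
  - List current permissions: `%HADOOP_HOME%\bin\winutils.exe ls \tmp\hive`
  - Set permissions: `%HADOOP_HOME%\bin\winutils.exe chmod 777 \tmp\hive`
  - List updated permissions: `%HADOOP_HOME%\bin\winutils.exe ls \tmp\hive`
- Reference: https://blog.csdn.net/zgjdzwhy/article/details/71056801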
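Why `chmod 777` clears the error: my reading of Hive's `SessionState.createRootHDFSDir` (used by Spark 2.1's Hive support) is that it requires the scratch dir to have at least the bits of `0733` (`rwx-wx-wx`). Treat that mask as an assumption. The sketch converts a permission string like the one in the error message into mode bits and applies that check; `modeBits` and `isWritableScratchDir` are hypothetical helpers, not Hive code.

```scala
object ScratchDirPermission {
  // Assumed minimum permission for Hive's root scratch dir: 0733 (rwx-wx-wx).
  val RequiredMask: Int = Integer.parseInt("733", 8)

  // Convert a 9-character permission string such as "rwx------" into mode
  // bits. Any character other than '-' sets the bit for its position, so the
  // first character is 0400 (owner read) and the last is 0001 (other execute).
  def modeBits(perm: String): Int = {
    require(perm.length == 9, s"expected 9 characters, got: $perm")
    perm.zipWithIndex.foldLeft(0) { case (acc, (c, i)) =>
      if (c == '-') acc else acc | (1 << (8 - i))
    }
  }

  // True when every bit of the required mask is present.
  def isWritableScratchDir(perm: String): Boolean =
    (modeBits(perm) & RequiredMask) == RequiredMask

  def main(args: Array[String]): Unit =
    for (p <- Seq("rwx------", "rw-rw-rw-", "rwxrwxrwx"))
      println(f"$p%s = ${modeBits(p)}%o, writable scratch dir: ${isWritableScratchDir(p)}")
}
```

Running it reports 700 and 666 as failing and 777 as passing, which is consistent with both error messages above and with the `chmod 777` fix.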

5. Integrate Scala with IDEA

5.1 Install the Scala plugin in IDEA

- File->Settings->Plugins

  Search for Scala and install it online, or click Install plugin from disk to install it from a local file.

5.2 Install the Scala SDK

- Download: https://www.scala-lang.org/download/2.11.8.html

  Its version should match the Scala version of the jars in spark/jars/ from the Spark installation above; Spark 2.1.0 uses Scala 2.11.8.
- Add the Scala SDK under File->Project Structure->Global Libraries.

- Running code in local mode: go to Run => Edit Configurations => Application, select your application, and add `-Dspark.master=local` to VM options.
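Why the VM option works: `-Dkey=value` becomes a JVM system property, and `SparkConf` (with its default `loadDefaults = true`) copies every system property whose key starts with `spark.`. The sketch below mimics that filtering without depending on Spark; `LocalMasterDemo` and `sparkPropsFrom` are illustrative names, not Spark's own code.

```scala
// Sketch of how a VM option such as -Dspark.master=local reaches Spark:
// keep only JVM system properties whose key starts with "spark.".
// `sparkPropsFrom` mimics SparkConf's default loading; it is not Spark code.
object LocalMasterDemo {
  def sparkPropsFrom(props: Map[String, String]): Map[String, String] =
    props.filter { case (key, _) => key.startsWith("spark.") }

  def main(args: Array[String]): Unit =
    sparkPropsFrom(sys.props.toMap).get("spark.master") match {
      case Some(master) => println(s"spark.master = $master")
      case None         => println("spark.master not set; add -Dspark.master=local to VM options")
    }
}
```

With Spark on the classpath, the in-code alternative is `SparkSession.builder().master("local").getOrCreate()`, which hard-codes the master instead of reading it from the run configuration.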
