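Donatello's gripe: why am I reposting this article? The official Caffe tutorial mentions vision_layers.hpp, but I could not find that file anywhere. I went through many posts and many GitHub repositories and came to suspect the file had been removed in a version update. This article is where I finally found a definite answer...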

Original article: http://blog.csdn.net/mounty_fsc/article/details/51085654

Blob, Layer, Net, and Solver are the core classes in Caffe. This article covers Blob, Layer, and Net; Solver is covered in the next part.

1 Blob

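1.1 Introduction

A Blob is:

- A wrapper around the data being processed, used to pass data around inside Caffe.

- A means of synchronizing data between the CPU and the GPU.

- Mathematically, an N-dimensional array stored in a C-contiguous fashion.

In short, Caffe uses Blobs to communicate data. A Blob is Caffe's standard array and its unified memory interface. It is general-purpose and means different things in different contexts: batches of images, model parameters, or derivatives for optimization.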

1.2 Source code

```cpp
/**
 * @brief A wrapper around SyncedMemory holders serving as the basic
 *        computational unit through which Layer%s, Net%s, and Solver%s
 *        interact.
 *
 * TODO(dox): more thorough description.
 */
template <typename Dtype>
class Blob {
 public:
  Blob()
       : data_(), diff_(), count_(0), capacity_(0) {}

  /// @brief Deprecated; use <code>Blob(const vector<int>& shape)</code>.
  explicit Blob(const int num, const int channels, const int height,
      const int width);
  explicit Blob(const vector<int>& shape);

  // ...

 protected:
  shared_ptr<SyncedMemory> data_;
  shared_ptr<SyncedMemory> diff_;
  shared_ptr<SyncedMemory> shape_data_;
  vector<int> shape_;
  int count_;
  int capacity_;

  DISABLE_COPY_AND_ASSIGN(Blob);
};  // class Blob
```

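Note: only the constructors and member variables are kept here.

Notes:

- In implementation, Blob is a wrapper around SyncedMemory (see section 1.5).

- shape_ holds the blob's dimensions (see section 1.3).

- data_ holds the raw data.

- diff_ holds the gradients.

- count_ is the total number of elements in the blob (the size of the data). The function count(x, y) returns the number of elements in the slice of axes [x, y), that is, shape[x] * shape[x+1] * ... * shape[y-1]; count(x) covers axis x through the last axis.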
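The slice count described in the last item can be sketched in a few lines. This is only an illustration: slice_count and total_count are hypothetical names, not Caffe functions, and the sketch assumes the half-open axis range [start, end) that Caffe documents for count(start_axis, end_axis).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not Caffe's API): number of elements spanned by
// axes [start, end), i.e. shape[start] * ... * shape[end - 1].
long slice_count(const std::vector<int>& shape, std::size_t start,
                 std::size_t end) {
  assert(start <= end && end <= shape.size());
  long n = 1;  // an empty range yields 1, the empty product
  for (std::size_t i = start; i < end; ++i) {
    n *= shape[i];
  }
  return n;
}

// Total number of elements: the slice that covers every axis.
long total_count(const std::vector<int>& shape) {
  return slice_count(shape, 0, shape.size());
}
```

For a shape of (2, 3, 4, 5), total_count gives 120 and slice_count over axes [1, 4) gives 60, the number of elements belonging to one item of the batch.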

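1.3 Blob shape

The source code shows that Blob has a member variable vector<int> shape_.

Its role:

- For image data, the shape can be a 4D array (Num, Channels, Height, Width), written (N, K, H, W), so the blob holds N * K * H * W elements. Blobs are stored row-major, so the value at index (n, k, h, w) is physically located at offset ((n * K + k) * H + h) * W + w. Num (N) is the batch size of the data: for ImageNet training with 256 images per batch, N = 256. Channels (K) is the feature dimension: for RGB images, K = 3.

- For fully connected networks, use 2D blobs (shape (N, D)) and then call InnerProductLayer.

- For parameters, the dimensions depend on the layer's type and configuration. A convolution layer with 3 inputs, 96 outputs, and 11 x 11 filters has a 96 x 3 x 11 x 11 blob. A fully connected layer with 1000 outputs and 1024 inputs has a 1000 x 1024 blob.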
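The row-major offset formula from the first item can be checked with a small sketch. The function name offset4d is made up for this illustration and is not part of Caffe; it only evaluates ((n * K + k) * H + h) * W + w for a given shape.

```cpp
#include <cassert>
#include <vector>

// Illustrative only: row-major offset of index (n, k, h, w) inside a blob
// whose shape is (N, K, H, W).
int offset4d(const std::vector<int>& shape, int n, int k, int h, int w) {
  assert(shape.size() == 4);
  const int K = shape[1];
  const int H = shape[2];
  const int W = shape[3];
  assert(n >= 0 && n < shape[0]);
  assert(k >= 0 && k < K);
  assert(h >= 0 && h < H);
  assert(w >= 0 && w < W);
  return ((n * K + k) * H + h) * W + w;
}
```

With shape (2, 3, 4, 5), stepping w by one moves the offset by 1, stepping h moves it by 5, stepping k by 20, and stepping n by 60, so the last index (1, 2, 3, 4) lands on offset 119.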

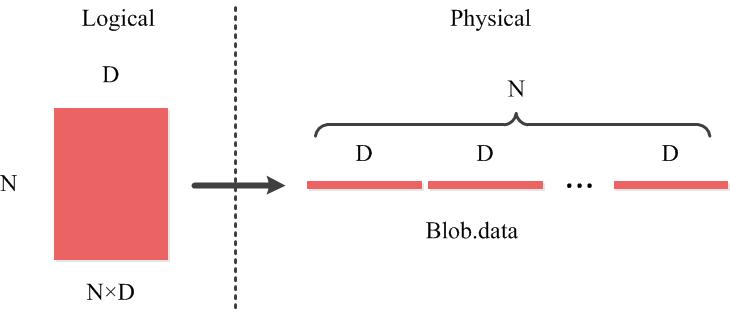

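1.4 Row-major storage of a Blob

Take a 2D matrix in a Blob as an example (such as the shape (N, D) used by a fully connected network), as shown in the figure above. The same layout generalizes to more dimensions.

1.5 SyncedMemory

As section 1.2 shows, Blob is essentially a wrapper around SyncedMemory. Its core code is: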

```cpp
/**
 * @brief Manages memory allocation and synchronization between the host (CPU)
 *        and device (GPU).
 *
 * TODO(dox): more thorough description.
 */
class SyncedMemory {
 public:
  // ...
  const void* cpu_data();
  const void* gpu_data();
  void* mutable_cpu_data();
  void* mutable_gpu_data();
  // ...

 private:
  // ...
  void* cpu_ptr_;
  void* gpu_ptr_;
  // ...
};  // class SyncedMemory
```

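Blob holds both data_ and diff_, each of which is a pointer to a SyncedMemory.

For data_ (and likewise diff_), the actual values live either on the CPU (cpu_ptr_) or on the GPU (gpu_ptr_). There are two ways to access the CPU data (and likewise the GPU data):

- Const access, const void* cpu_data(), which does not change the values in the memory that cpu_ptr_ points to.

- Mutable access, void* mutable_cpu_data(), which may change the values in the memory that cpu_ptr_ points to.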

Taking mutable_cpu_data() as an example:

```cpp
void* SyncedMemory::mutable_cpu_data() {
  to_cpu();
  head_ = HEAD_AT_CPU;
  return cpu_ptr_;
}

inline void SyncedMemory::to_cpu() {
  switch (head_) {
  case UNINITIALIZED:
    CaffeMallocHost(&cpu_ptr_, size_, &cpu_malloc_use_cuda_);
    caffe_memset(size_, 0, cpu_ptr_);
    head_ = HEAD_AT_CPU;
    own_cpu_data_ = true;
    break;
  case HEAD_AT_GPU:
#ifndef CPU_ONLY
    if (cpu_ptr_ == NULL) {
      CaffeMallocHost(&cpu_ptr_, size_, &cpu_malloc_use_cuda_);
      own_cpu_data_ = true;
    }
    caffe_gpu_memcpy(size_, gpu_ptr_, cpu_ptr_);
    head_ = SYNCED;
#else
    NO_GPU;
#endif
    break;
  case HEAD_AT_CPU:
  case SYNCED:
    break;
  }
}
```

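Notes:

- As a rule of thumb, use the const call if you do not need to change the values, and do not store the returned pointers in your own objects. The reason for this design is that it hides the details of CPU/GPU synchronization and minimizes data transfer, which improves efficiency. When you call these functions, SyncedMemory decides when to copy data; usually a copy happens only after the GPU or the CPU has modified the data. The official documentation (reference [1]) has an example showing when copies take place.

- Calling mutable_cpu_data() moves the head to the CPU.

- On the first call to mutable_cpu_data() the state is UNINITIALIZED, so the case UNINITIALIZED branch of to_cpu() runs and allocates host memory for cpu_ptr_.

- If the head moves from the GPU to the CPU, the data is copied from the GPU to the CPU.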
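The head state machine described in these notes can be imitated without a GPU. The sketch below is a toy model only: ToySyncedMemory is a made-up class, two host-side vectors stand in for the CPU and GPU buffers, and the copies() counter is added purely so the number of simulated transfers can be observed.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy model of the head_ state machine; not Caffe's SyncedMemory.
class ToySyncedMemory {
 public:
  enum Head { UNINITIALIZED, HEAD_AT_CPU, HEAD_AT_GPU, SYNCED };

  explicit ToySyncedMemory(std::size_t size)
      : size_(size), head_(UNINITIALIZED), copies_(0) {
    assert(size > 0);
  }

  // Const access: may trigger a copy, never marks the data as modified.
  const float* cpu_data() { to_cpu(); return cpu_.data(); }
  const float* gpu_data() { to_gpu(); return gpu_.data(); }

  // Mutable access: the caller may write, so the other side becomes stale.
  float* mutable_cpu_data() { to_cpu(); head_ = HEAD_AT_CPU; return cpu_.data(); }
  float* mutable_gpu_data() { to_gpu(); head_ = HEAD_AT_GPU; return gpu_.data(); }

  Head head() const { return head_; }
  int copies() const { return copies_; }  // simulated transfers so far

 private:
  void to_cpu() {
    switch (head_) {
      case UNINITIALIZED:
        cpu_.assign(size_, 0.0f);  // allocate and zero-fill on first use
        head_ = HEAD_AT_CPU;
        break;
      case HEAD_AT_GPU:
        cpu_ = gpu_;  // stands in for the device-to-host copy
        ++copies_;
        head_ = SYNCED;
        break;
      case HEAD_AT_CPU:
      case SYNCED:
        break;  // CPU side is already up to date
    }
  }

  void to_gpu() {
    switch (head_) {
      case UNINITIALIZED:
        gpu_.assign(size_, 0.0f);
        head_ = HEAD_AT_GPU;
        break;
      case HEAD_AT_CPU:
        gpu_ = cpu_;  // stands in for the host-to-device copy
        ++copies_;
        head_ = SYNCED;
        break;
      case HEAD_AT_GPU:
      case SYNCED:
        break;  // GPU side is already up to date
    }
  }

  std::size_t size_;
  Head head_;
  int copies_;
  std::vector<float> cpu_;
  std::vector<float> gpu_;
};
```

In this model, writing through mutable_cpu_data() and then reading gpu_data() costs one copy, and further const reads on either side cost nothing until someone asks for a mutable pointer again. That is also why a stored pointer is risky: the class can no longer tell when the data behind it has gone stale.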

2 Layer

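2.1 Introduction

Layer is the foundation of Caffe and its basic unit of computation. Caffe puts great emphasis on the layered structure of networks: most of what a network does is expressed as Layers, such as convolution, pooling, and loss.

A Caffe model is also built from Layers. The network is defined in a prototxt file that follows the network and parameter formats defined in src/caffe/proto/caffe.proto (this requires some knowledge of Google Protocol Buffers).

2.2 Relationship between Layer and Blob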

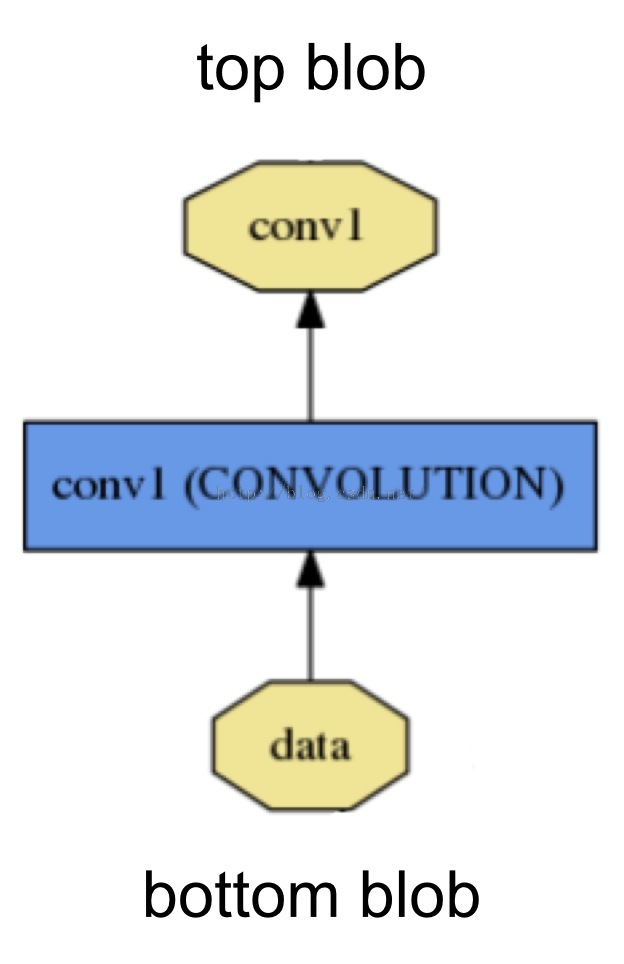

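As the figure shows, the Layer named conv1 takes the bottom blob named data as input and produces the top blob named conv1 as output.

Its protobuf definition is shown below. A layer can have one or more tops and bottoms, each of which corresponds to a blob.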

```
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  ....
}
```

2.3 Source code

```cpp
/**
 * Layer%s must implement a Forward function, in which they take their input
 * (bottom) Blob%s (if any) and compute their output Blob%s (if any).
 * They may also implement a Backward function, in which they compute the error
 * gradients with respect to their input Blob%s, given the error gradients with
 * their output Blob%s.
 */
template <typename Dtype>
class Layer {
 public:
  /**
   * You should not implement your own constructor. Any set up code should go
   * to SetUp(), where the dimensions of the bottom blobs are provided to the
   * layer.
   */
  explicit Layer(const LayerParameter& param)
    : layer_param_(param), is_shared_(false) {
    // ...
  }
  virtual ~Layer() {}

  /**
   * @brief Implements common layer setup functionality.
   * @param bottom the preshaped input blobs
   * @param top
   *     the allocated but unshaped output blobs, to be shaped by Reshape
   */
  void SetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {
    // ...
  }

  // ...

  /**
   * @brief Given the bottom blobs, compute the top blobs and the loss.
   * \return The total loss from the layer.
   *
   * The Forward wrapper calls the relevant device wrapper function
   * (Forward_cpu or Forward_gpu) to compute the top blob values given the
   * bottom blobs. If the layer has any non-zero loss_weights, the wrapper
   * then computes and returns the loss.
   *
   * Your layer should implement Forward_cpu and (optionally) Forward_gpu.
   */
  inline Dtype Forward(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  /**
   * @brief Given the top blob error gradients, compute the bottom blob error
   *        gradients.
   *
   * @param top
   *     the output blobs, whose diff fields store the gradient of the error
   *     with respect to themselves
   * @param propagate_down
   *     a vector with equal length to bottom, with each index indicating
   *     whether to propagate the error gradients down to the bottom blob at
   *     the corresponding index
   * @param bottom
   *     the input blobs, whose diff fields will store the gradient of the error
   *     with respect to themselves after Backward is run
   *
   * The Backward wrapper calls the relevant device wrapper function
   * (Backward_cpu or Backward_gpu) to compute the bottom blob diffs given the
   * top blob diffs.
   *
   * Your layer should implement Backward_cpu and (optionally) Backward_gpu.
   */
  inline void Backward(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down,
      const vector<Blob<Dtype>*>& bottom);

  // ...

 protected:
  /** The protobuf that stores the layer parameters */
  LayerParameter layer_param_;
  /** The phase: TRAIN or TEST */
  Phase phase_;
  /** The vector that stores the learnable parameters as a set of blobs. */
  vector<shared_ptr<Blob<Dtype> > > blobs_;
  /** Vector indicating whether to compute the diff of each param blob. */
  vector<bool> param_propagate_down_;

  /** The vector that indicates whether each top blob has a non-zero weight in
   *  the objective function. */
  vector<Dtype> loss_;

  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) = 0;

  virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {
    // LOG(WARNING) << "Using CPU code as backup.";
    return Forward_cpu(bottom, top);
  }

  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down,
      const vector<Blob<Dtype>*>& bottom) = 0;

  virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down,
      const vector<Blob<Dtype>*>& bottom) {
    // LOG(WARNING) << "Using CPU code as backup.";
    Backward_cpu(top, propagate_down, bottom);
  }

  // ...
};  // class Layer
```

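Notes: each layer defines three operations.

- SetUp: initializes the Layer.

- Forward: the forward pass. It computes top from bottom by calling Forward_cpu (must be implemented) or Forward_gpu (optional; if it is not implemented, the CPU version is called).

- Backward: the backward pass. It computes the gradient with respect to bottom from the gradient with respect to top; otherwise the same as above.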
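The pattern of a public wrapper that dispatches to a required CPU implementation and an optional GPU one can be sketched as follows. Everything here is a simplified stand-in invented for illustration (ToyBlob, ToyMode, ToyLayer, ToyScaleLayer); the real Layer class takes its mode from Caffe's global state and also handles loss weights, which this sketch leaves out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simplified stand-in for Blob: values plus gradients, no SyncedMemory.
struct ToyBlob {
  std::vector<float> data;
  std::vector<float> diff;
};

enum ToyMode { TOY_CPU, TOY_GPU };

class ToyLayer {
 public:
  virtual ~ToyLayer() {}

  // Wrapper: picks the device-specific implementation.
  void Forward(ToyMode mode, const std::vector<ToyBlob*>& bottom,
               const std::vector<ToyBlob*>& top) {
    if (mode == TOY_GPU) {
      Forward_gpu(bottom, top);
    } else {
      Forward_cpu(bottom, top);
    }
  }

  void Backward(ToyMode mode, const std::vector<ToyBlob*>& top,
                const std::vector<bool>& propagate_down,
                const std::vector<ToyBlob*>& bottom) {
    if (mode == TOY_GPU) {
      Backward_gpu(top, propagate_down, bottom);
    } else {
      Backward_cpu(top, propagate_down, bottom);
    }
  }

 protected:
  // The CPU versions are mandatory; the GPU versions fall back to them.
  virtual void Forward_cpu(const std::vector<ToyBlob*>& bottom,
                           const std::vector<ToyBlob*>& top) = 0;
  virtual void Forward_gpu(const std::vector<ToyBlob*>& bottom,
                           const std::vector<ToyBlob*>& top) {
    Forward_cpu(bottom, top);
  }
  virtual void Backward_cpu(const std::vector<ToyBlob*>& top,
                            const std::vector<bool>& propagate_down,
                            const std::vector<ToyBlob*>& bottom) = 0;
  virtual void Backward_gpu(const std::vector<ToyBlob*>& top,
                            const std::vector<bool>& propagate_down,
                            const std::vector<ToyBlob*>& bottom) {
    Backward_cpu(top, propagate_down, bottom);
  }
};

// y = scale * x, so the gradient w.r.t. x is scale times the gradient w.r.t. y.
class ToyScaleLayer : public ToyLayer {
 public:
  explicit ToyScaleLayer(float scale) : scale_(scale) {}

 protected:
  void Forward_cpu(const std::vector<ToyBlob*>& bottom,
                   const std::vector<ToyBlob*>& top) override {
    assert(bottom.size() == 1 && top.size() == 1);
    top[0]->data.resize(bottom[0]->data.size());
    for (std::size_t i = 0; i < bottom[0]->data.size(); ++i) {
      top[0]->data[i] = scale_ * bottom[0]->data[i];
    }
  }

  void Backward_cpu(const std::vector<ToyBlob*>& top,
                    const std::vector<bool>& propagate_down,
                    const std::vector<ToyBlob*>& bottom) override {
    assert(bottom.size() == 1 && top.size() == 1);
    assert(propagate_down.size() == 1);
    if (!propagate_down[0]) return;  // caller does not need this gradient
    bottom[0]->diff.resize(top[0]->diff.size());
    for (std::size_t i = 0; i < top[0]->diff.size(); ++i) {
      bottom[0]->diff[i] = scale_ * top[0]->diff[i];
    }
  }

 private:
  float scale_;
};
```

ToyScaleLayer implements only the CPU functions, so a call such as layer.Forward(TOY_GPU, bottom, top) still works and quietly runs the CPU code, which mirrors the fallback in the Layer excerpt above.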

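2.4 Categories of derived classes

The classes derived from Layer fall mainly into the following categories:

- Vision Layers

Vision layers process visual image data: they take images as input and produce other images as output. Their main characteristic is that they have spatial structure.

They include Convolution (conv_layer.hpp), Pooling (pooling_layer.hpp), Local Response Normalization (LRN) (lrn_layer.hpp), im2col, and others. Note: older versions of Caffe have the header include/caffe/vision_layers.hpp; newer versions replace it with per-layer headers such as include/caffe/layers/conv_layer.hpp.

- Loss Layers

These layers produce a loss, for example Softmax (SoftmaxWithLoss), Sum-of-Squares / Euclidean (EuclideanLoss), Hinge / Margin (HingeLoss), Sigmoid Cross-Entropy (SigmoidCrossEntropyLoss), Infogain (InfogainLoss), Accuracy and Top-k.

- Activation / Neuron Layers

Element-wise operations that can be computed in place (in-place computation: the result overwrites the input instead of occupying new memory). Examples: ReLU / Rectified-Linear and Leaky-ReLU (ReLU), Sigmoid (Sigmoid), TanH / Hyperbolic Tangent (TanH), Absolute Value (AbsVal), Power (Power), BNLL (BNLL).

- Data Layers

The bottom of the network; they mainly convert data formats. Examples: Database (Data), In-Memory (MemoryData), HDF5 Input (HDF5Data), HDF5 Output (HDF5Output), Images (ImageData), Windows (WindowData), Dummy (DummyData).

- Common Layers

Caffe provides layers that connect one layer to many or many to one. Examples: Inner Product (InnerProduct), Splitting (Split), Flattening (Flatten), Reshape (Reshape), Concatenation (Concat), Slicing (Slice), Elementwise (Eltwise), Argmax (ArgMax), Softmax (Softmax), Mean-Variance Normalization (MVN).

Note: the name in parentheses is the Layer Type; entries without parentheses lack this information for now. See reference [2] for details.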
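To make the in-place computation mentioned under Activation / Neuron Layers concrete, here is a minimal sketch of an element-wise ReLU with an optional leak. The function relu_forward and its negative_slope parameter are illustrative assumptions on plain vectors, not Caffe's ReLULayer; the point is only that each element is read before it is written, so the input and output may be the same buffer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Element-wise (leaky) ReLU. bottom and top may refer to the same vector,
// in which case the result overwrites the input and no new memory is used.
void relu_forward(const std::vector<float>& bottom, std::vector<float>& top,
                  float negative_slope = 0.0f) {
  assert(bottom.size() == top.size());
  for (std::size_t i = 0; i < bottom.size(); ++i) {
    const float x = bottom[i];  // read first, so aliasing is safe
    top[i] = std::max(x, 0.0f) + negative_slope * std::min(x, 0.0f);
  }
}
```

Calling relu_forward(v, v) on a vector v rewrites v in place; in a prototxt definition the same effect is requested by giving a layer identical bottom and top names.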

3 Net

3.1 Introduction

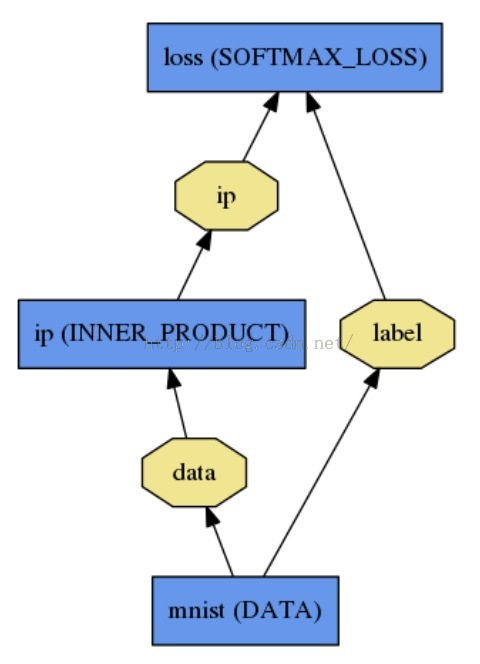

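A Net is made up of multiple Layers. A typical network starts at a data layer (which loads data from disk) and ends at a loss layer. The figure shows a simple logistic regression classifier.

It is defined as follows: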

```
name: "LogReg"
layer {
  name: "mnist"
  type: "Data"
  top: "data"
  top: "label"
  data_param {
    source: "input_leveldb"
    batch_size: 64
  }
}
layer {
  name: "ip"
  type: "InnerProduct"
  bottom: "data"
  top: "ip"
  inner_product_param {
    num_output: 2
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip"
  bottom: "label"
  top: "loss"
}
```

3.2 Source code

```cpp
/**
 * @brief Connects Layer%s together into a directed acyclic graph (DAG)
 *        specified by a NetParameter.
 *
 * TODO(dox): more thorough description.
 */
template <typename Dtype>
class Net {
 public:
  // ...
  /// @brief Initialize a network with a NetParameter.
  void Init(const NetParameter& param);
  // ...
  const vector<Blob<Dtype>*>& Forward(const vector<Blob<Dtype>* > & bottom,
      Dtype* loss = NULL);
  // ...
  /**
   * The network backward should take no input and output, since it solely
   * computes the gradient w.r.t the parameters, and the data has already been
   * provided during the forward pass.
   */
  void Backward();
  // ...
  Dtype ForwardBackward(const vector<Blob<Dtype>* > & bottom) {
    Dtype loss;
    Forward(bottom, &loss);
    Backward();
    return loss;
  }
  // ...

 protected:
  // ...
  /// @brief The network name
  string name_;
  /// @brief The phase: TRAIN or TEST
  Phase phase_;
  /// @brief Individual layers in the net
  vector<shared_ptr<Layer<Dtype> > > layers_;
  /// @brief the blobs storing intermediate results between the layer.
  vector<shared_ptr<Blob<Dtype> > > blobs_;
  vector<vector<Blob<Dtype>*> > bottom_vecs_;
  vector<vector<Blob<Dtype>*> > top_vecs_;
  // ...
  /// The root net that actually holds the shared layers in data parallelism
  const Net* const root_net_;
};

}  // namespace caffe
```

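Notes:

- Init builds the whole network by creating the blobs and layers, and calls each layer's SetUp function.

- blobs_ stores the intermediate results (blobs) produced by each layer, bottom_vecs_ stores each layer's bottom blobs, and top_vecs_ stores each layer's top blobs.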
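How blobs_, bottom_vecs_, and top_vecs_ fit together can be sketched with a toy container. This is a loose illustration under stated assumptions, not Caffe's Net: ToyNet, ToyLayerFn, AddLayer, and the name lookup map are all invented here, a layer is reduced to a plain forward function, and a blob is just a vector of floats.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <vector>

typedef std::vector<float> ToyBlob;
typedef std::function<void(const std::vector<ToyBlob*>& bottom,
                           const std::vector<ToyBlob*>& top)> ToyLayerFn;

class ToyNet {
 public:
  // Wires one layer into the net: bottoms must already exist as tops of
  // earlier layers, tops are created on demand and owned by the net.
  void AddLayer(const std::vector<std::string>& bottoms,
                const std::vector<std::string>& tops, ToyLayerFn forward) {
    std::vector<ToyBlob*> bottom_vec;
    std::vector<ToyBlob*> top_vec;
    for (std::size_t i = 0; i < bottoms.size(); ++i) {
      ToyBlob* b = blob(bottoms[i]);
      assert(b != nullptr);  // unknown bottom name
      bottom_vec.push_back(b);
    }
    for (std::size_t i = 0; i < tops.size(); ++i) {
      ToyBlob* t = blob(tops[i]);
      if (t == nullptr) {  // a reused name means in-place computation
        blobs_.push_back(std::make_shared<ToyBlob>());
        t = blobs_.back().get();
        blob_index_[tops[i]] = t;
      }
      top_vec.push_back(t);
    }
    layers_.push_back(forward);
    bottom_vecs_.push_back(bottom_vec);
    top_vecs_.push_back(top_vec);
  }

  // Runs every layer once, in the order the layers were added.
  void Forward() {
    for (std::size_t i = 0; i < layers_.size(); ++i) {
      layers_[i](bottom_vecs_[i], top_vecs_[i]);
    }
  }

  // Looks a blob up by name; returns nullptr if there is none.
  ToyBlob* blob(const std::string& name) const {
    std::map<std::string, ToyBlob*>::const_iterator it = blob_index_.find(name);
    return it == blob_index_.end() ? nullptr : it->second;
  }

 private:
  std::vector<ToyLayerFn> layers_;
  std::vector<std::shared_ptr<ToyBlob> > blobs_;      // owns all blobs
  std::map<std::string, ToyBlob*> blob_index_;        // name -> blob
  std::vector<std::vector<ToyBlob*> > bottom_vecs_;   // per-layer inputs
  std::vector<std::vector<ToyBlob*> > top_vecs_;      // per-layer outputs
};
```

The design choice worth noticing is ownership: the net owns every blob, while the per-layer vectors hold only raw pointers, so one layer's top and the next layer's bottom are literally the same object and no data is copied between layers.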

References:

[1] http://caffe.berkeleyvision.org/tutorial/net_layer_blob.html

[2] http://caffe.berkeleyvision.org/tutorial/layers.html

[3] https://yufeigan.github.io

[4] https://www.zhihu.com/question/27982282
