spark的yarn集群提交任务,遇到依赖找不到问题

Job aborted due to stage failure: Task 21 in stage 15.0 failed 4 times, most recent failure: Lost task 21.3 in stage 15.0 (TID 1631) (IOT-147 executor 1): org.apache.spark.SparkException: [FAILED_EXECUTE_UDF] Failed to execute user defined function (UDFRegistrationKaTeX parse error: Can't use function '$' in math mode at position 7: Lambda$̲1220/0x00000008…anon 1. h a s N e x t ( W h o l e S t a g e C o d e g e n E x e c . s c a l a : 760 ) a t o r g . a p a c h e . s p a r k . s q l . e x e c u t i o n . d a t a s o u r c e s . v 2. W r i t i n g S p a r k T a s k . 1.hasNext(WholeStageCodegenExec.scala:760) at org.apache.spark.sql.execution.datasources.v2.WritingSparkTask. 1.hasNext(WholeStageCodegenExec.scala:760)atorg.apache.spark.sql.execution.datasources.v2.WritingSparkTask.anonfun$run 1 ( W r i t e T o D a t a S o u r c e V 2 E x e c . s c a l a : 464 ) a t o r g . a p a c h e . s p a r k . u t i l . U t i l s 1(WriteToDataSourceV2Exec.scala:464) at org.apache.spark.util.Utils 1(WriteToDataSourceV2Exec.scala:464)atorg.apache.spark.util.Utils.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1563)

at org.apache.spark.sql.execution.datasources.v2.WritingSparkTask.run(WriteToDataSourceV2Exec.scala:509)

at org.apache.spark.sql.execution.datasources.v2.WritingSparkTask.run ( W r i t e T o D a t a S o u r c e V 2 E x e c . s c a l a : 448 ) a t o r g . a p a c h e . s p a r k . s q l . e x e c u t i o n . d a t a s o u r c e s . v 2. D a t a W r i t i n g S p a r k T a s k (WriteToDataSourceV2Exec.scala:448) at org.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask (WriteToDataSourceV2Exec.scala:448)atorg.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask.run(WriteToDataSourceV2Exec.scala:514)

at org.apache.spark.sql.execution.datasources.v2.V2TableWriteExec. a n o n f u n anonfun anonfunwriteWithV2 2 ( W r i t e T o D a t a S o u r c e V 2 E x e c . s c a l a : 411 ) a t o r g . a p a c h e . s p a r k . s c h e d u l e r . R e s u l t T a s k . r u n T a s k ( R e s u l t T a s k . s c a l a : 92 ) a t o r g . a p a c h e . s p a r k . T a s k C o n t e x t . r u n T a s k W i t h L i s t e n e r s ( T a s k C o n t e x t . s c a l a : 161 ) a t o r g . a p a c h e . s p a r k . s c h e d u l e r . T a s k . r u n ( T a s k . s c a l a : 139 ) a t o r g . a p a c h e . s p a r k . e x e c u t o r . E x e c u t o r 2(WriteToDataSourceV2Exec.scala:411) at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:92) at org.apache.spark.TaskContext.runTaskWithListeners(TaskContext.scala:161) at org.apache.spark.scheduler.Task.run(Task.scala:139) at org.apache.spark.executor.Executor 2(WriteToDataSourceV2Exec.scala:411)atorg.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:92)atorg.apache.spark.TaskContext.runTaskWithListeners(TaskContext.scala:161)atorg.apache.spark.scheduler.Task.run(Task.scala:139)atorg.apache.spark.executor.ExecutorTaskRunner. a n o n f u n anonfun anonfunrun 3 ( E x e c u t o r . s c a l a : 554 ) a t o r g . a p a c h e . s p a r k . u t i l . U t i l s 3(Executor.scala:554) at org.apache.spark.util.Utils 3(Executor.scala:554)atorg.apache.spark.util.Utils.tryWithSafeFinally(Utils.scala:1529)

at org.apache.spark.executor.Executor T a s k R u n n e r . r u n ( E x e c u t o r . s c a l a : 557 ) a t j a v a . b a s e / j a v a . u t i l . c o n c u r r e n t . T h r e a d P o o l E x e c u t o r . r u n W o r k e r ( T h r e a d P o o l E x e c u t o r . j a v a : 1128 ) a t j a v a . b a s e / j a v a . u t i l . c o n c u r r e n t . T h r e a d P o o l E x e c u t o r TaskRunner.run(Executor.scala:557) at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) at java.base/java.util.concurrent.ThreadPoolExecutor TaskRunner.run(Executor.scala:557)atjava.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)atjava.base/java.util.concurrent.ThreadPoolExecutorWorker.run(ThreadPoolExecutor.java:628)

at java.base/java.lang.Thread.run(Thread.java:834)

Caused by: java.lang.NoClassDefFoundError: org/lionsoul/ip2region/xdb/Searcher

at spark.util.IpToCityConverter2.convertToCity(IpToCityConverter2.java:19)

at spark.ZxyDiagrams.lambda m a i n main mainc94c15e5 1 ( Z x y D i a g r a m s . j a v a : 201 ) a t o r g . a p a c h e . s p a r k . s q l . U D F R e g i s t r a t i o n . 1(ZxyDiagrams.java:201) at org.apache.spark.sql.UDFRegistration. 1(ZxyDiagrams.java:201)atorg.apache.spark.sql.UDFRegistration.anonfun$register$352(UDFRegistration.scala:752)

… 19 more

解决办法

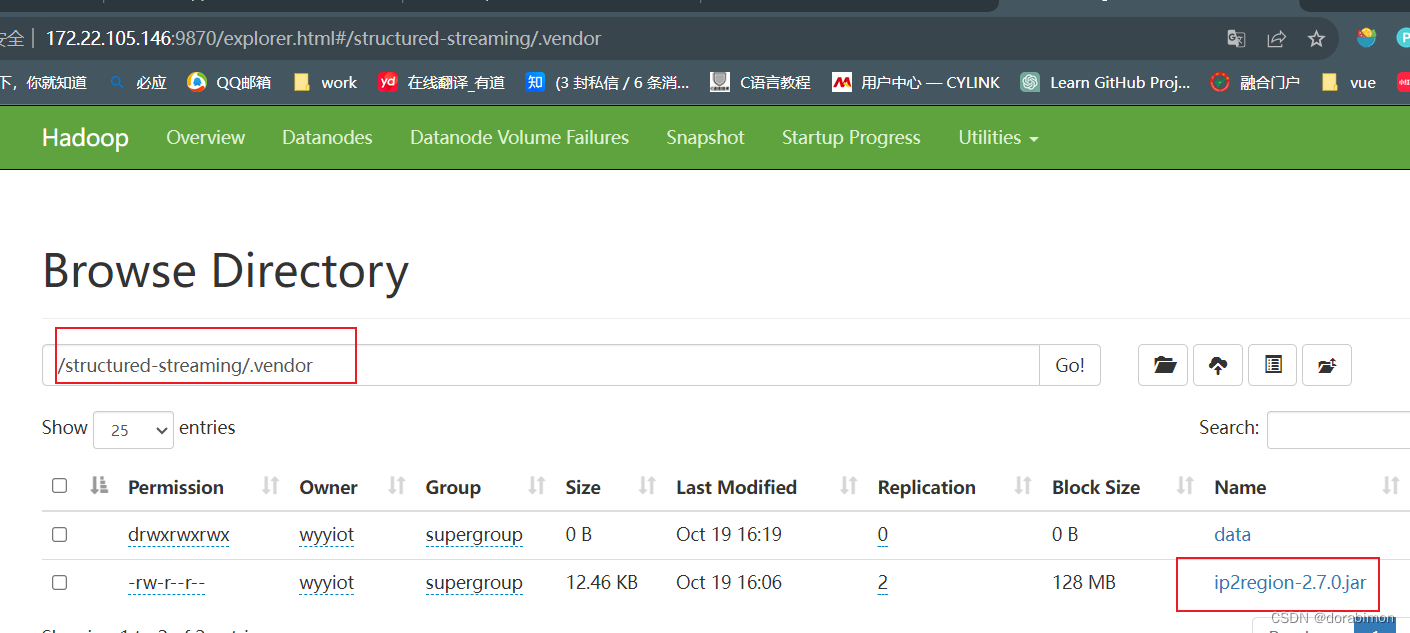

把依赖的jar把发到hdfs的文件系统的某目录下,并所有节点都要保证能查看hadoop的文件系统

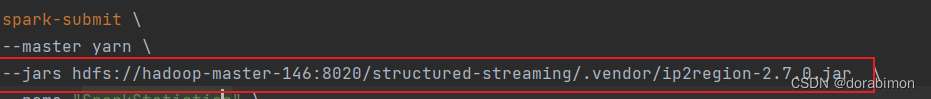

然后在提交时把这个jar包带上:

6万+

6万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?