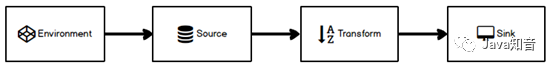

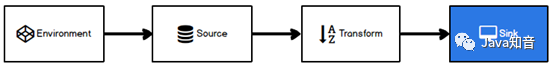

Flink Development Workflow

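Like every other compute framework, Flink has a basic set of development steps and a small set of core APIs. In terms of development steps, a Flink program has four main parts: Environment, Source, Transform, and Sink.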

Environment

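Before a Flink job can be submitted and run, it must first establish a connection to the Flink framework, that is, obtain the current Flink execution environment. Only with the environment in hand can tasks be scheduled onto the different TaskManagers. Obtaining the environment object is straightforward.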

// Batch processing environment

val env = ExecutionEnvironment.getExecutionEnvironment

// Stream processing environment (use this one instead for the streaming examples)

val env = StreamExecutionEnvironment.getExecutionEnvironment

Source

Dependencies required by the examples

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

</dependency>

</dependencies>

Full Maven project configuration (pom.xml)

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>nj.zb.kb09</groupId>

<artifactId>finkstu</artifactId>

<version>1.0-SNAPSHOT</version>

<name>finkstu</name>

<!-- FIXME change it to the project's website -->

<url>http://www.example.com</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>1.7.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

</dependency>

</dependencies>

<build>

<pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->

<plugins>

<!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->

<plugin>

<artifactId>maven-clean-plugin</artifactId>

<version>3.1.0</version>

</plugin>

<!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->

<plugin>

<artifactId>maven-resources-plugin</artifactId>

<version>3.0.2</version>

</plugin>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.0</version>

</plugin>

<plugin>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.22.1</version>

</plugin>

<plugin>

<artifactId>maven-jar-plugin</artifactId>

<version>3.0.2</version>

</plugin>

<plugin>

<artifactId>maven-install-plugin</artifactId>

<version>2.5.2</version>

</plugin>

<plugin>

<artifactId>maven-deploy-plugin</artifactId>

<version>2.8.2</version>

</plugin>

<!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->

<plugin>

<artifactId>maven-site-plugin</artifactId>

<version>3.7.1</version>

</plugin>

<plugin>

<artifactId>maven-project-info-reports-plugin</artifactId>

<version>3.0.0</version>

</plugin>

</plugins>

</pluginManagement>

</build>

</project>

data.txt

ws_001,1609314670,45.0

ws_002,1609314671,33.0

ws_003,1609314672,32.0

ws_002,1609314673,23.0

ws_003,1609314674,31.0

ws_002,1609314675,45.0

ws_003,1609314676,18.0

ws_002,1609314677,34.0

ws_003,1609314678,47.0

ws_001,1609314679,55.0

ws_001,1609314680,25.0

ws_001,1609314681,25.0

ws_001,1609314682,25.0

ws_001,1609314683,26.0

ws_001,1609314684,21.0

ws_001,1609314685,24.0

ws_001,1609314686,15.0

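Reading data from a collection

In many cases data can be held temporarily in memory, shaped into a suitable data structure, and then used as a source. A collection type is the most common choice for that structure.

Code: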

package scala

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object SourceTest {

//env source transform sink

def main(args: Array[String]): Unit = {

// Create the execution environment

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

// Set the parallelism (optional)

//env.setParallelism(2)

// Create a stream by reading data from a collection

val stream1: DataStream[WaterSensor] = env.fromCollection(List(

WaterSensor("ws_001", 1577844001, 45.0),

WaterSensor("ws_002", 1577844015, 43.0),

WaterSensor("ws_003", 1577844020, 42.0)

))

// Print each record

stream1.print()

// Start the job

env.execute("demo")

}

// Case class for a water-level sensor, used to hold clearance-height readings

// id: sensor ID, ts: timestamp, vc: clearance height

case class WaterSensor(id:String,ts:Long,vc:Double)

}

Output:

Reading data from a file

Usually data is read from a storage medium; using log files as the source is a common case.

Reading a local file

Code:

package scala

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

object SourceFile {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

// Read a local file

val fileDS1: DataStream[String] = env.readTextFile("input/data.txt")

fileDS1.print()

env.execute("sensor")

}

}

Output:

Reading an HDFS file

Code:

package scala

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

object SourceFile {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

// Read a file from HDFS

val fileDS2: DataStream[String] = env.readTextFile("hdfs://hadoop100:9000/kb09file/word2.txt")

fileDS2.print()

env.execute("sensor")

}

}

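Output:

Note: reading from HDFS requires two additional dependencies, hadoop-common and hadoop-hdfs.

Reading data from Kafka

Kafka is a distributed, high-throughput, easily scalable publish/subscribe messaging system organized around topics. In enterprise development today, Kafka together with Flink has become a preferred choice for building real-time data processing systems.

Code: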

package scala

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

import org.apache.kafka.clients.consumer.ConsumerConfig

object SourceKafka {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val prop = new Properties()

prop.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.136.100:9092")

prop.setProperty(ConsumerConfig.GROUP_ID_CONFIG,"flink-kafka-demo")

prop.setProperty(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer")

prop.setProperty(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer")

prop.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"latest")

val kafkaDStream: DataStream[String] = env.addSource(

new FlinkKafkaConsumer[String]("sensor", new SimpleStringSchema(), prop)

)

kafkaDStream.print()

env.execute("kafkademo")

}

}

- Create the required topic

[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic sensor --partitions 1 --replication-factor 1

- Start a console producer for the sensor topic

[root@hadoop100 ~]# kafka-console-producer.sh --topic sensor --broker-list 192.168.136.100:9092

- Run the Scala program

- Produce messages to the sensor topic

hello flink

hello spark

hello scala

hello kafka

- The same messages are then printed on the program's console

Custom data source

The sources above cover most needs, but special cases inevitably come up, so Flink also lets you define your own source.

Code:

package scala

import scala.util.Random

import org.apache.flink.streaming.api.functions.source.SourceFunction

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object SourceMy {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val mydefDStream: DataStream[WaterSensor] = env.addSource(new MySensorSource())

mydefDStream.print()

env.execute("mydefsource")

}

}

case class WaterSensor(id: String, ts: Long, vc: Double)

class MySensorSource extends SourceFunction[WaterSensor] {

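// cancel() is called from a different thread than run(), so the flag should be volatile

@volatile var flag = true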

override def run(sourceContext: SourceFunction.SourceContext[WaterSensor]): Unit = {

while (flag){

// Emit one record

sourceContext.collect(

WaterSensor(

"sensor_"+new Random().nextInt(3),

16091404656011L,

new Random().nextInt(5)+40)

)

Thread.sleep(1000)

}

}

override def cancel(): Unit = {

flag=false

}

}

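Output:

Transform

In Spark, operators are divided into transformations and actions; a transformation turns one RDD into another through a method call. Flink has the same kind of operation: one data stream can be transformed into another.

The element type of the stream can change during a transformation. So which data types does Flink support? In practice, all the commonly used ones: Long, String, Integer, tuples, case classes, List, Map, and so on.

Map

- Map: transforms each element of the stream to form a new stream; it consumes one element and produces exactly one element

- Parameter: a Scala anonymous function or a MapFunction

- Returns: DataStream

Code: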

package transform

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_Map {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val sensorDS: DataStream[WaterSensor] = env.fromCollection(

List(

WaterSensor("sensor_0",16091404656011L,44.0),

WaterSensor("sensor_1",16091404656011L,43.0),

WaterSensor("sensor_2",16091404656011L,45.0)

)

)

val mapDStream: DataStream[(String, Long, Double)] = sensorDS.map(x=>(x.id+"_bak",x.ts+1,x.vc+1))

mapDStream.print()

env.execute("sensor")

}

case class WaterSensor(id: String, ts: Long, vc: Double)

}

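Output:

MapFunction

For every operator, Flink accepts at least two kinds of argument: a Scala anonymous function or a function class. When a function class is used, the custom class must extend the designated parent class or implement the designated interface, for example MapFunction.

- Anonymous function

Code: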

package transform

import org.apache.flink.api.common.functions.MapFunction

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

object Transform_mapFunction {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

// map implemented with an anonymous function

val waterDStream: DataStream[WaterSensor] = fileDS.map(x => {

val strings: Array[String] = x.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

})

waterDStream.print("niminglei")

env.execute("sensor")

}

}


case class WaterSensor(id: String, ts: Long, vc: Double)

Output:

- Custom function class

Code:

package transform

import org.apache.flink.api.common.functions.MapFunction

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

object Transform_mapFunction {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

// map implemented with a custom class that implements the MapFunction interface; same behavior as the anonymous function version

val waterDStream2: DataStream[WaterSensor] = fileDS.map(new MyMapFunction)

waterDStream2.print("zidinglei")

env.execute("sensor")

}

}

case class WaterSensor(id: String, ts: Long, vc: Double)

class MyMapFunction extends MapFunction[String,WaterSensor]{

override def map(t: String): WaterSensor = {

val strings: Array[String] = t.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

}

}

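Output:

RichMapFunction

Every Flink function class has a Rich variant. Unlike a regular function, a rich function can access the runtime context and has lifecycle methods, so it can implement more complex logic. RichMapFunction is one example.

Code: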

package transform

import org.apache.flink.api.common.functions.RichMapFunction

import org.apache.flink.configuration.Configuration

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_RichMapFunction {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val sensors: DataStream[String] = env.readTextFile("input/data.txt")

val myMapDS: DataStream[WaterSensor] = sensors.map(new MyRichMapFunction)

myMapDS.print()

env.execute("map")

}

}

class MyRichMapFunction extends RichMapFunction[String,WaterSensor]{

override def map(in: String): WaterSensor = {

val strings: Array[String] = in.split(",")

WaterSensor(strings(0),strings(1).toLong,strings(2).toDouble)

}

// Rich functions provide lifecycle methods

override def open(parameters: Configuration): Unit = {}

override def close(): Unit = {}

}

case class WaterSensor(id: String, ts: Long, vc: Double)

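Output:

Rich functions have a lifecycle. The typical lifecycle methods are:

- open() is the initialization method of a rich function; it is called before the operator's processing method (such as map or filter) is invoked for the first time

- close() is the last method called in the lifecycle and is the place for cleanup work

- getRuntimeContext() gives access to the function's RuntimeContext, for example the parallelism, the task name, and state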
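The call order can be illustrated without a Flink cluster. The following is a minimal plain-Scala sketch, not Flink's actual classes: `MiniRichMap`, `UpperCaseMap`, and `runTask` are hypothetical names that only mimic how one parallel task instance would call open() once, map() once per record, and close() once at the end.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical stand-in for a rich function; this is NOT a Flink class.
trait MiniRichMap[I, O] {
  def open(): Unit = ()   // one-time setup, e.g. opening a connection
  def map(in: I): O       // called once per record
  def close(): Unit = ()  // one-time cleanup
}

object LifecycleSketch {
  // Records the order of calls so it can be inspected afterwards.
  val calls: ListBuffer[String] = ListBuffer.empty[String]

  class UpperCaseMap extends MiniRichMap[String, String] {
    override def open(): Unit = { calls += "open"; () }
    override def map(in: String): String = { calls += s"map($in)"; in.toUpperCase }
    override def close(): Unit = { calls += "close"; () }
  }

  // Mimics what a task does with one instance of the function:
  // open() first, map() for every record, close() last even on failure.
  def runTask[I, O](f: MiniRichMap[I, O], input: Seq[I]): Seq[O] = {
    f.open()
    try input.toList.map(in => f.map(in))
    finally f.close()
  }
}
```

Calling `LifecycleSketch.runTask(new LifecycleSketch.UpperCaseMap, Seq("a", "b"))` and then reading `LifecycleSketch.calls` shows the setup and cleanup hooks wrapped around the per-record calls.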

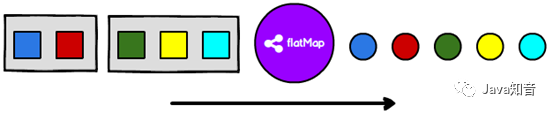

flatMap

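- FlatMap: breaks each element of the stream into individual items; it consumes one element and produces zero or more elements

- Parameter: a Scala anonymous function or a FlatMapFunction

- Returns: DataStream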
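The "one in, zero or more out" contract is the same as `flatMap` on ordinary Scala collections. The sketch below uses plain Scala only (no Flink); `FlatMapSketch` and `splitWords` are illustrative names, and the sample lines are made up.

```scala
object FlatMapSketch {
  // Splits one line into zero or more words; a blank line yields nothing.
  def splitWords(line: String): List[String] =
    line.split("\\s+").filter(_.nonEmpty).toList

  def main(args: Array[String]): Unit = {
    val lines = List("hello flink", "", "hello spark scala")
    // Three input elements become five output elements; the empty line disappears.
    val words = lines.flatMap(splitWords)
    println(words)
  }
}
```

A function of this shape (one element in, a collection out) is the kind of argument the Scala DataStream `flatMap` is expected to accept, but treat that as an assumption to check against the Flink version in use.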

Code:

package transform

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

object Transform_FlatMap {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

val listDStream: DataStream[List[Int]] = env.fromCollection(

List(

List(1, 2, 3, 4, 5),

List(6, 7, 8, 9, 10)

)

)

val resultStream: DataStream[Int] = listDStream.flatMap(list=>list)

resultStream.print()

env.execute("flatMap")

}

}

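Output:

filter

- Filter: keeps the elements for which the given predicate returns true and discards those for which it returns false

- Parameter: a Scala anonymous function or a FilterFunction

- Returns: DataStream

Code: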

package transform

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

object Transform_Filter {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

val listStream: DataStream[List[Int]] = env.fromCollection(

List(

List(1, 2, 3, 4),

List(5, 6, 7, 8, 9, 10),

List(11, 12, 13, 14),

List(15, 16, 17, 18, 19, 20, 21),

List(21, 22),

List(23, 24, 25, 26, 27, 28, 29, 30)

)

)

listStream.filter(list=>list.size>6).print("filter")

env.execute("filter")

}

}

Output:

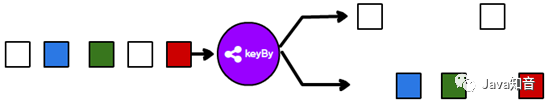

keyBy

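Spark has a groupBy operator that groups data by a given rule. Flink has a similar feature, keyBy, which partitions the stream by a given key.

- Partitioning: elements are sent to different partitions according to the key, and elements with the same key always go to the same partition (a partition here is one of the parallel instances of the downstream operator). keyBy() partitions by hashing the key.

- Parameter: a Scala anonymous function, a POJO field name, or a tuple index; arrays cannot be used as keys

- Returns: KeyedStream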
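To make the "same key, same partition" guarantee concrete, here is a minimal plain-Scala sketch. It assumes the simplest possible scheme, the key's hash code modulo the parallelism; Flink's real assignment goes through key groups and differs in detail. `KeyBySketch`, `partitionFor`, and `route` are hypothetical names.

```scala
object KeyBySketch {
  final case class WaterSensor(id: String, ts: Long, vc: Double)

  // Simplifying assumption: partition = non-negative hash modulo parallelism.
  // This is NOT Flink's exact algorithm, only the same idea.
  def partitionFor(key: String, parallelism: Int): Int =
    Math.floorMod(key.hashCode, parallelism)

  // Groups records by the partition their key maps to.
  // One partition may hold several keys, but one key never spans partitions.
  def route(records: Seq[WaterSensor], parallelism: Int): Map[Int, Seq[WaterSensor]] =
    records.groupBy(r => partitionFor(r.id, parallelism))
}
```

Because the partition depends only on the key, every record of `ws_001` lands in the same place, which is what lets a downstream operator keep per-key state locally.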

Code:

package transform

import org.apache.flink.api.java.tuple.Tuple

import org.apache.flink.streaming.api.scala.{DataStream, KeyedStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_KeyBy {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val sensorDS: DataStream[WaterSensor] = env.fromCollection(

List(

WaterSensor("sensor_0",16091404656011L,44.0),

WaterSensor("sensor_1",16091404656011L,43.0),

WaterSensor("sensor_2",16091404656011L,45.0)

)

)

val mapDStream: DataStream[(String, Long, Double)] = sensorDS.map(x=>(x.id+"_bak",x.ts+1,x.vc+1))

//mapDStream.print()

val keyStream: KeyedStream[(String, Long, Double), String] = mapDStream.keyBy(_._1)

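/*

Grouping with keyBy

TODO: about the type of the returned key

1. With a position index or a field name, the key type cannot be inferred, so a Java Tuple type is used

2. With an anonymous function or a function class, the key type can be inferred; this is the recommended form

What grouping means: it is purely logical; records are tagged with their key, and this is not tied to the parallelism

Records of the same group stay together

One partition can hold several different groups

*/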

/*val value1: KeyedStream[WaterSensor, String] = sensorDS.keyBy(x=>x.id)

val value2: KeyedStream[WaterSensor, Tuple] = sensorDS.keyBy("id")

val value3: KeyedStream[WaterSensor, Tuple] = sensorDS.keyBy(0)*/

keyStream.print()

env.execute("sensor")

}

}

Output:

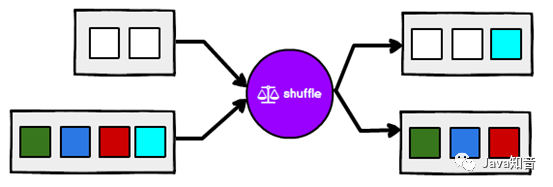

shuffle

- Shuffle: distributes records randomly and uniformly across the downstream instances

- Parameter: none

- Returns: DataStream
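As a rough mental model, shuffle picks a downstream channel at random for every record. The plain-Scala sketch below illustrates that idea only; `ShuffleSketch` and its `shuffle` helper are hypothetical and do not call Flink.

```scala
import scala.util.Random

object ShuffleSketch {
  // Pairs every record with a downstream channel chosen uniformly at random.
  // The records themselves are unchanged; only their destination varies.
  def shuffle[T](records: Seq[T], channels: Int, rnd: Random): Seq[(Int, T)] =
    records.map(r => (rnd.nextInt(channels), r))
}
```

Unlike keyBy, the destination here does not depend on the record's content, so two records with the same id may end up on different instances.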

Code:

package transform

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

object Transform_Shuffle {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(3)

val fileDStream: DataStream[String] = env.readTextFile("input/data.txt")

val shuffleStream: DataStream[String] = fileDStream.shuffle

fileDStream.print("data")

shuffleStream.print("shuffle")

env.execute("shuffledemo")

}

}

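Output:

split

In some cases a stream needs to be divided into two or more streams according to some characteristic of the data. split tags each record so that the tagged records can later be pulled out of the stream.

Code: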

package transform

import org.apache.flink.streaming.api.scala.{DataStream, SplitStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_Split {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

val waterDStream: DataStream[WaterSensor] = fileDS.map(x => {

val strings: Array[String] = x.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

})

val splitStream: SplitStream[WaterSensor] = waterDStream.split(sensor => {

if (sensor.vc <30) {

Seq("normal")

} else if (sensor.vc < 40) {

Seq("warn")

} else {

Seq("alarm")

}

})

env.execute("splitdemo")

}

}

select

Once the stream has been split, how are the records carrying each tag pulled out? That is what the select operator is for.
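The relationship between the two operators can be modeled in a few lines of plain Scala: split assigns each record a list of tag names, and select keeps the records whose tags contain the requested name. This is only a conceptual sketch; `SplitSelectSketch`, `tagsFor`, and `select` are hypothetical helpers, and the thresholds mirror the ones used in the split example.

```scala
object SplitSelectSketch {
  final case class WaterSensor(id: String, ts: Long, vc: Double)

  // Same thresholds as the split example: < 30 normal, < 40 warn, otherwise alarm.
  def tagsFor(sensor: WaterSensor): Seq[String] =
    if (sensor.vc < 30) Seq("normal")
    else if (sensor.vc < 40) Seq("warn")
    else Seq("alarm")

  // select(tag) keeps only the records whose tag list contains that tag.
  def select(records: Seq[WaterSensor], tag: String): Seq[WaterSensor] =
    records.filter(r => tagsFor(r).contains(tag))
}
```

Because the tagging function returns a sequence, a record could in principle carry several tags and be selected into more than one output.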

Code:

package transform

import org.apache.flink.streaming.api.scala.{DataStream, SplitStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_Select {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

val waterDStream: DataStream[WaterSensor] = fileDS.map(x => {

val strings: Array[String] = x.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

})

val splitStream: SplitStream[WaterSensor] = waterDStream.split(sensor => {

if (sensor.vc <30) {

Seq("normal")

} else if (sensor.vc < 40) {

Seq("warn")

} else {

Seq("alarm")

}

})

val normalStream: DataStream[WaterSensor] = splitStream.select("normal")

val warnStream: DataStream[WaterSensor] = splitStream.select("warn")

val alarmStream: DataStream[WaterSensor] = splitStream.select("alarm")

normalStream.print("normal")

warnStream.print("warn")

alarmStream.print("alarm")

env.execute("splitdemo")

}

}

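Output:

connect

In some cases two streams from different sources need to be connected so their records can be matched. A typical example is order payments and third-party transaction records: the two come from different data sources, and only after they are connected and reconciled against each other can a payment be considered truly complete.

Flink's connect operator joins two streams while preserving each stream's type. After connect, the two streams are merely placed inside one stream; internally each keeps its own data and form unchanged, and the two remain independent of each other.

Code: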

package transform

import org.apache.flink.streaming.api.scala.{ConnectedStreams, DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_Connect {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

val waterDStream: DataStream[WaterSensor] = fileDS.map(x => {

val strings: Array[String] = x.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

})

val numStream: DataStream[Int] = env.fromCollection(List(1,2,3,4,5,6))

val connStream: ConnectedStreams[WaterSensor, Int] = waterDStream.connect(numStream)

connStream.map(sensor=>sensor.id,num=>num+1).print("connect")

env.execute("connectdemo")

}

}

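Output:

union

union merges two or more DataStreams into a new DataStream that contains all of their elements.

Differences between connect and union:

1. The streams passed to union must all have the same type; with connect the two types may differ.

2. connect works on exactly two streams; union can merge more than two.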
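Both differences show up directly in the types. The following plain-Scala sketch models them with ordinary sequences: `union` takes any number of same-typed inputs, while `connect` takes exactly two inputs of possibly different types and keeps them apart with `Either`. All names in `ConnectUnionSketch` are hypothetical, and a real union interleaves elements as they arrive rather than concatenating in order.

```scala
object ConnectUnionSketch {
  // union: every input has the same element type T, so the result is one homogeneous stream.
  def union[T](streams: Seq[T]*): Seq[T] = streams.flatten

  // connect: exactly two inputs whose types may differ; Either keeps each side's type.
  def connect[A, B](first: Seq[A], second: Seq[B]): Seq[Either[A, B]] =
    first.map(a => Left(a): Either[A, B]) ++ second.map(b => Right(b): Either[A, B])

  // A co-map applies one function per side and produces a single output type R.
  def coMap[A, B, R](connected: Seq[Either[A, B]])(f1: A => R, f2: B => R): Seq[R] =
    connected.map(_.fold(f1, f2))
}
```

For instance, `coMap(connect(Seq("ws_001"), Seq(1)))(id => id.length, n => n + 1)` handles the String side and the Int side with separate functions, which is the role the two-argument map plays on a connected stream.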

Code:

package transform

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_Union {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val num1Stream: DataStream[Int] = env.fromCollection(List(1,2,3))

val num2Stream: DataStream[Int] = env.fromCollection(List(4,5,6))

val num3Stream: DataStream[Int] = env.fromCollection(List(7,8,9))

val unionStream: DataStream[Int] = num1Stream.union(num2Stream).union(num3Stream)

// Print the merged stream; the job needs a sink to produce any output

unionStream.print()

env.execute("uniondemo")

}

}

Output:

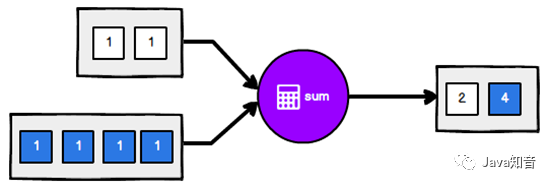

Operator

Flink is a compute framework used mainly for data processing, so once keyBy has partitioned the data, statistics can be computed on each partition.

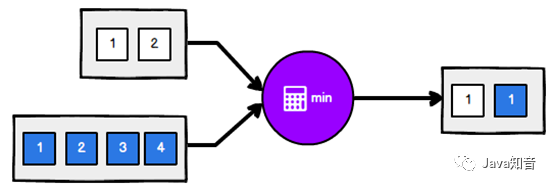

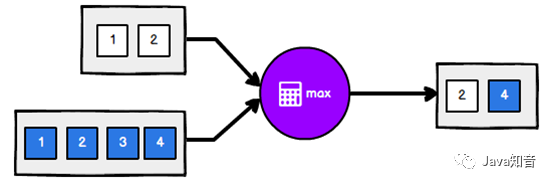

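Rolling aggregation operators (Rolling Aggregation)

These operators aggregate each keyed sub-stream of a KeyedStream. The aggregated results are merged back into a single stream, so the return type is always DataStream.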

- sum()

- min()

- max()
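"Rolling" means that one updated result is emitted for every incoming record, per key, rather than a single total at the end. The plain-Scala sketch below models that behavior with a per-key state map; `RollingAggSketch` and `rolling` are hypothetical names, and the input pairs are taken from the id and reading columns of data.txt.

```scala
import scala.collection.mutable

object RollingAggSketch {
  // Emits one running result per input record, per key, in arrival order.
  // `combine` is the aggregation, e.g. addition for sum or math.max for max.
  def rolling[K, V](records: Seq[(K, V)])(combine: (V, V) => V): Seq[(K, V)] = {
    val state = mutable.Map.empty[K, V] // last aggregate seen for each key
    records.toList.map { case (key, value) =>
      val updated = state.get(key).map(prev => combine(prev, value)).getOrElse(value)
      state(key) = updated
      (key, updated)
    }
  }
}
```

With the input `("ws_001", 45.0), ("ws_002", 33.0), ("ws_001", 55.0)`, a rolling sum yields 45.0 and 33.0 for the first record of each key and then 100.0 when the second `ws_001` record arrives.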

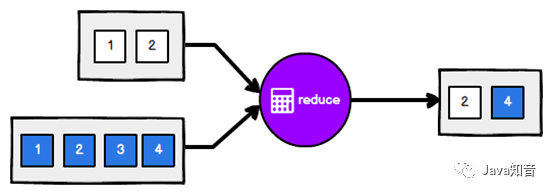

reduce

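An aggregation over a keyed stream: it combines the current element with the previous aggregated result to produce a new value. The returned stream contains the result of every aggregation step, not only the final result.

Code: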

package transform

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.api.scala._

object Transform_Reduce {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

val waterDStream: DataStream[WaterSensor] = fileDS.map(x => {

val strings: Array[String] = x.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

})

val keyStream: KeyedStream[WaterSensor, String] = waterDStream.keyBy(_.id)

val reduceStream: DataStream[WaterSensor] = keyStream.reduce((x, y) => {

println(x.id, x.vc, y.id, y.vc)

WaterSensor(x.id, System.currentTimeMillis(), x.vc + y.vc)

})

reduceStream.print()

env.execute("reducedemo")

}

}

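Output:

process

After a stream has been partitioned with keyBy, if the processing logic needs access to context information, use the process operator with a custom class that extends the abstract class KeyedProcessFunction and specifies the type parameters [KEY, IN, OUT].

Code: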

package transform

import org.apache.flink.streaming.api.functions.KeyedProcessFunction

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.util.Collector

import org.apache.flink.streaming.api.scala._

object Transform_Process {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val fileDS: DataStream[String] = env.readTextFile("input/data.txt")

val waterDStream: DataStream[WaterSensor] = fileDS.map(x => {

val strings: Array[String] = x.split(",")

WaterSensor(strings(0), strings(1).toLong, strings(2).toDouble)

})

val keyStream: KeyedStream[WaterSensor, String] = waterDStream.keyBy(_.id)

val outStream: DataStream[String] = keyStream.process(new MyKeyedProcessFunction)

outStream.print("myprocess")

env.execute("myprocess")

}

}

class MyKeyedProcessFunction extends KeyedProcessFunction[String,WaterSensor,String]{

override def processElement(i: WaterSensor, context: KeyedProcessFunction[String, WaterSensor, String]#Context, collector: Collector[String]): Unit = {

val key: String = context.getCurrentKey

println(key)

collector.collect("process key:"+key+" value:"+i)

}

}

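Output:

Sink

Sink literally means to sink down. In Flink a sink can be understood as storing data; more broadly, it is the output operation that sends processed data to a designated external system.

Code: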

package sink

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer, FlinkKafkaProducer}

import org.apache.kafka.clients.consumer.ConsumerConfig

import org.apache.flink.streaming.api.scala._

object SinkKafka {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

val prop = new Properties()

prop.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.136.100:9092")

prop.setProperty(ConsumerConfig.GROUP_ID_CONFIG,"flink-kafka-demo")

prop.setProperty(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer")

prop.setProperty(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer")

prop.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"latest")

val kafkaDStream: DataStream[String] = env.addSource(

new FlinkKafkaConsumer[String]("sensor", new SimpleStringSchema(), prop)

)

kafkaDStream.addSink(new FlinkKafkaProducer[String]("192.168.136.100:9092","sensorout",new SimpleStringSchema()))

kafkaDStream.print()

env.execute("kafkademo")

}

}

- Create the two topics

[root@hadoop100 ~]# kafka-topics.sh --zookeeper hadoop100:2181 --create --topic sensor --partitions 1 --replication-factor 1

[root@hadoop100 ~]# kafka-topics.sh --zookeeper hadoop100:2181 --create --topic sensorout --partitions 1 --replication-factor 1

- Start a console producer

[root@hadoop100 ~]# kafka-console-producer.sh --topic sensor --broker-list hadoop100:9092

- Start a console consumer

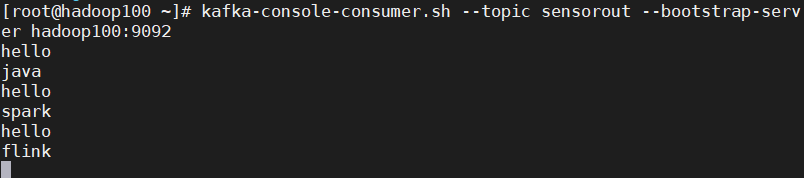

[root@hadoop100 ~]# kafka-console-consumer.sh --topic sensorout --bootstrap-server hadoop100:9092

- Start the Scala program

- Produce messages from the sensor producer

hello

java

hello

spark

hello

flink

- The messages are printed on the program's console, and the sensorout consumer receives them as well
