Signature (TensorFlow 1.x): tf.train.exponential_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False, name=None)

import tensorflow as tf;

import numpy as np;

import matplotlib.pyplot as plt;

learning_rate = 0.1

decay_rate = 0.96

global_steps = 1000

decay_steps = 100

global_ = tf.Variable(tf.constant(0))

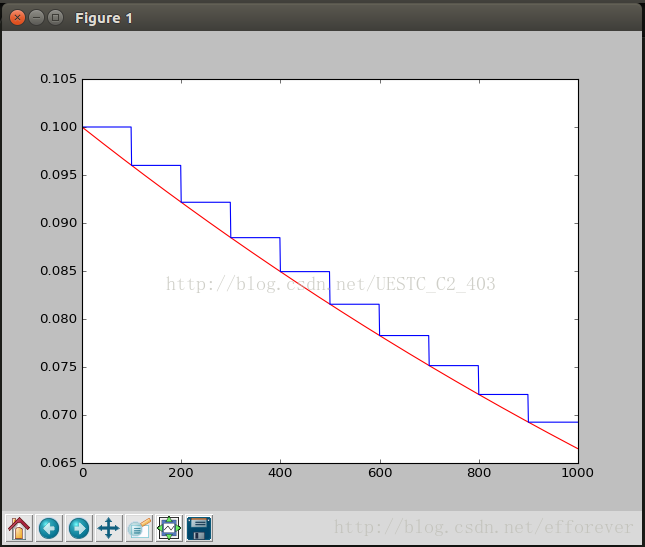

c = tf.train.exponential_decay(learning_rate, global_, decay_steps, decay_rate, staircase=True)

d = tf.train.exponential_decay(learning_rate, global_, decay_steps, decay_rate, staircase=False)

T_C = []

F_D = []

with tf.Session() as sess:

for i in range(global_steps):

T_c = sess.run(c,feed_dict={global_: i})

T_C.append(T_c)

F_d = sess.run(d,feed_dict={global_: i})

F_D.append(F_d)

plt.figure(1)

plt.plot(range(global_steps), F_D, 'r-')

plt.plot(range(global_steps), T_C, 'b-')

plt.show() 分析:

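The initial learning rate is 0.1 and the total number of iterations is 1000. With staircase=True, the learning rate is recomputed only once every decay_steps steps (here every 100 steps), so the curve drops in discrete steps; with staircase=False, it is updated at every step, giving a smooth curve. The red curve shows staircase=False and the blue curve shows staircase=True.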
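To see the two modes without a TensorFlow 1.x session, here is a minimal plain-Python sketch of the same schedule. It assumes the schedule is lr * decay_rate ^ (step / decay_steps), with the exponent rounded down when staircase=True; `decayed_learning_rate` is a hypothetical helper name, not part of TensorFlow.

```python
import math


def decayed_learning_rate(step, learning_rate=0.1, decay_steps=100,
                          decay_rate=0.96, staircase=False):
    """Hypothetical plain-Python sketch of exponential learning-rate decay."""
    exponent = step / decay_steps
    if staircase:
        # Integer (floor) division: the rate only drops every decay_steps steps
        exponent = math.floor(exponent)
    return learning_rate * decay_rate ** exponent


# Same settings as the TensorFlow example: 1000 steps, decay every 100 steps
stair = [decayed_learning_rate(i, staircase=True) for i in range(1000)]
smooth = [decayed_learning_rate(i, staircase=False) for i in range(1000)]

# staircase=True stays constant inside each block of 100 steps, then drops
print(stair[99], stair[100])
# staircase=False decreases a little at every step
print(smooth[99], smooth[100])
```

With staircase=True the 1000 steps produce only 10 distinct values (exponents 0 to 9), which is why the blue curve looks like a staircase.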

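Formula: decayed_lr = lr * decay_rate ^ (global_step / decay_steps). With staircase=True, global_step / decay_steps is an integer division (rounded down), so the exponent only increases once every decay_steps steps.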
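As a worked example with the parameters above (lr = 0.1, decay_rate = 0.96, decay_steps = 100), take global_step = 250; the numbers below are computed by hand from the formula:

$$
\begin{aligned}
\text{staircase=True:}\quad & 0.1 \times 0.96^{\lfloor 250/100 \rfloor} = 0.1 \times 0.96^{2} = 0.09216 \\
\text{staircase=False:}\quad & 0.1 \times 0.96^{250/100} = 0.1 \times 0.96^{2.5} \approx 0.0903
\end{aligned}
$$

At multiples of decay_steps (100, 200, ...) the two curves coincide (for example, both give 0.1 × 0.96 = 0.096 at step 100); in between, the staircase value stays at or above the smooth one, because rounding the exponent down can only make 0.96 raised to it larger.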
