1. Base installation (run on all nodes)----------------------------------------

Time synchronization
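No command is given for this step. A minimal sketch, assuming Ubuntu 22.04 with systemd-timesyncd (hosts that run chrony or ntpd would use those tools instead); the function names `enable_time_sync` and `is_time_synced` are hypothetical helpers, not part of any package:

```shell
# Turn on NTP synchronization through systemd (assumes systemd-timesyncd is present).
enable_time_sync() {
    sudo timedatectl set-ntp true
}

# Succeed only when systemd reports the system clock as synchronized.
is_time_synced() {
    [ "$(timedatectl show -p NTPSynchronized --value 2>/dev/null)" = "yes" ]
}

# Usage on every node:
#   enable_time_sync
#   is_time_synced && echo "clock is synchronized"
```

Keeping the clocks aligned matters because the cluster's TLS certificates and bootstrap tokens are time-sensitive.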

Disable the firewall

sudo ufw disable

sudo ufw status

Disable swap

Disable temporarily

sudo swapoff -a

free -m

Disable permanently

sudo vim /etc/fstab

Comment out the swap entry (the line whose filesystem type is swap)
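Instead of editing the file by hand, the swap entry can be commented out with sed. A sketch, assuming a conventional fstab layout in which the third field is the filesystem type; `comment_out_swap` is a hypothetical helper name:

```shell
# Comment out every active swap entry in an fstab-style file.
# A backup of the original is kept next to it with a .bak suffix.
comment_out_swap() {
    fstab="$1"
    # Match non-comment lines whose third field (filesystem type) is "swap".
    sed -i.bak -E \
        's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/# \1/' \
        "$fstab"
}

# Usage (as root):
#   comment_out_swap /etc/fstab
```

Lines that are already commented, and entries of any other filesystem type, are left untouched.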

Forward IPv4 and let iptables see bridged traffic

Official documentation: https://v1-28.docs.kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system
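To confirm that the settings took effect, the three keys can be read back and checked. A small sketch; `report_not_one` is a hypothetical helper that only parses `key = value` lines from standard input:

```shell
# Print every sysctl key whose value is not 1; exit non-zero if any is found.
# Expects "key = value" lines on stdin, in the format printed by sysctl.
report_not_one() {
    awk -F' *= *' '$2 != 1 { print $1; bad = 1 } END { exit (bad ? 1 : 0) }'
}

# Usage:
#   sysctl net.bridge.bridge-nf-call-iptables \
#          net.bridge.bridge-nf-call-ip6tables \
#          net.ipv4.ip_forward | report_not_one
#   lsmod | grep -E 'overlay|br_netfilter'
```

No output from `report_not_one` means all three parameters are set to 1.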

Install Docker----------------------------------------------------

Download Docker

Download locations

https://download.docker.com/linux/ubuntu/dists/jammy/pool/stable/amd64/

https://github.com/Mirantis/cri-dockerd/releases

Install Docker

dpkg -i ./containerd.io_1.6.20-1_amd64.deb \
  ./docker-ce-cli_24.0.5-1~ubuntu.22.04~jammy_amd64.deb \
  ./docker-ce_24.0.5-1~ubuntu.22.04~jammy_amd64.deb \
  ./docker-buildx-plugin_0.11.2-1~ubuntu.22.04~jammy_amd64.deb \
  ./docker-compose-plugin_2.20.2-1~ubuntu.22.04~jammy_amd64.deb

dpkg -i cri-dockerd_0.3.10.3-0.ubuntu-jammy_amd64.deb

Install kubeadm, kubelet, kubectl

Package repository

sudo apt-get update

# apt-transport-https may be a dummy package; if so, you can skip installing it

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

# Download the public signing key for the Kubernetes package repositories. All repositories use the same signing key, so you can ignore the version in the URL:

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

# This overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list.

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

apt update

Find the available kubeadm versions

apt-cache madison kubeadm

Install Kubernetes:

sudo apt install -y kubeadm=1.28.9-2.1 kubectl=1.28.9-2.1 kubelet=1.28.9-2.1

Pin the versions

This keeps apt upgrade from upgrading the packages automatically and causing version incompatibilities:

sudo apt-mark hold kubelet kubeadm kubectl

Download the images

List the Docker images this version uses

kubeadm config images list

Pull the images

MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers

sudo docker pull ${MY_REGISTRY}/kube-apiserver:v1.28.9

sudo docker pull ${MY_REGISTRY}/kube-controller-manager:v1.28.9

sudo docker pull ${MY_REGISTRY}/kube-scheduler:v1.28.9

sudo docker pull ${MY_REGISTRY}/kube-proxy:v1.28.9

sudo docker pull ${MY_REGISTRY}/pause:3.9

sudo docker pull ${MY_REGISTRY}/etcd:3.5.12-0

sudo docker pull ${MY_REGISTRY}/coredns:v1.10.1

Add tags

kubeadm uses registry.k8s.io by default

sudo docker tag ${MY_REGISTRY}/kube-apiserver:v1.28.9 registry.k8s.io/kube-apiserver:v1.28.9

sudo docker tag ${MY_REGISTRY}/kube-scheduler:v1.28.9 registry.k8s.io/kube-scheduler:v1.28.9

sudo docker tag ${MY_REGISTRY}/kube-controller-manager:v1.28.9 registry.k8s.io/kube-controller-manager:v1.28.9

sudo docker tag ${MY_REGISTRY}/kube-proxy:v1.28.9 registry.k8s.io/kube-proxy:v1.28.9

sudo docker tag ${MY_REGISTRY}/etcd:3.5.12-0 registry.k8s.io/etcd:3.5.12-0

sudo docker tag ${MY_REGISTRY}/pause:3.9 registry.k8s.io/pause:3.9

sudo docker tag ${MY_REGISTRY}/coredns:v1.10.1 registry.k8s.io/coredns/coredns:v1.10.1
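The pull and tag commands above can be folded into one loop. A sketch under two assumptions: the image list is copied from the commands above rather than queried from kubeadm, and the default CoreDNS name under registry.k8s.io is `coredns/coredns`, which is what `kubeadm config images list` prints for the default registry. `target_for` and `pull_and_tag` are hypothetical helper names:

```shell
MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers

# Image names and tags copied from the pull commands above.
IMAGES="kube-apiserver:v1.28.9 kube-controller-manager:v1.28.9 kube-scheduler:v1.28.9 kube-proxy:v1.28.9 pause:3.9 etcd:3.5.12-0 coredns:v1.10.1"

# Map an image "name:tag" to the name expected under registry.k8s.io.
target_for() {
    case "$1" in
        # Upstream publishes CoreDNS under an extra "coredns/" path segment.
        coredns:*) echo "registry.k8s.io/coredns/$1" ;;
        *)         echo "registry.k8s.io/$1" ;;
    esac
}

# Pull each image from the mirror and retag it; stop at the first failure.
pull_and_tag() {
    for img in $IMAGES; do
        sudo docker pull "${MY_REGISTRY}/${img}" || return 1
        sudo docker tag "${MY_REGISTRY}/${img}" "$(target_for "$img")" || return 1
    done
}

# Usage:
#   pull_and_tag
```

Retagging is only needed when kubeadm runs against the default registry; a configuration that sets imageRepository to the mirror pulls from it directly.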

2. Install the control plane (run on control-plane node 1)-----------------------------------------

Method 1: install the master with a configuration file

Print the default configuration

kubeadm config print init-defaults > /etc/kubernetes/kubeadm.conf

Edit the configuration

vim /etc/kubernetes/kubeadm.conf

Contents:

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.122.12
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
  imagePullPolicy: IfNotPresent
  name: 192.168.122.12
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.28.9
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd

Initialize the master node

kubeadm init --config=/etc/kubernetes/kubeadm.conf

Output on success

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.122.12:6443 --token abcdef.0123456789abcdef \
	--discovery-token-ca-cert-hash sha256:a98f46a770843d62c18187638b04b86967611db2a448842c2c826071d641dce2

Copy the kubeconfig file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Undo the installation

If needed

kubeadm reset --cri-socket unix:///run/cri-dockerd.sock

Method 2: use command-line flags (untested)

kubeadm init --node-name=192.168.122.12 --kubernetes-version=v1.28.9 --apiserver-advertise-address=192.168.122.12 --pod-network-cidr=10.244.0.0/16 --cri-socket=unix:///run/cri-dockerd.sock --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

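Install the flannel network plugin

kube-flannel_v0.25.0.yml

Official documentation: https://github.com/flannel-io/flannel/blob/v0.25.0/Documentation/kube-flannel.yml

If the node has multiple network interfaces, add the --iface=xxx argument to select the interface flannel should use.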

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "EnableNFTables": false,
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.4.0-flannel1
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.25.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.25.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=enp1s0
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
---
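The manifest passes --iface=enp1s0 to flanneld, a name specific to the author's host. One way to find the right name on another machine is to look up which interface carries the node's IP address. A small sketch; `iface_for_ip` is a hypothetical helper that parses the output of `ip -o -4 addr show` from standard input:

```shell
# Print the name of the interface that carries the given IPv4 address.
# Expects one-line-per-address output from `ip -o -4 addr show` on stdin,
# where field 2 is the interface and field 4 is "address/prefix".
iface_for_ip() {
    awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Usage on the node:
#   ip -o -4 addr show | iface_for_ip 192.168.122.12
```

The printed name is the value to substitute for enp1s0 in the DaemonSet arguments before applying the manifest.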

Install flannel

kubectl create -f kube-flannel_v0.25.0.yml

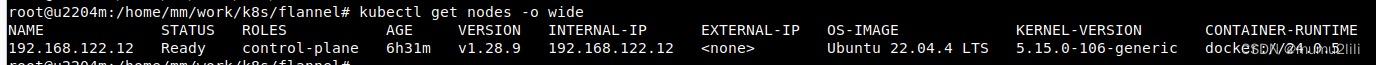

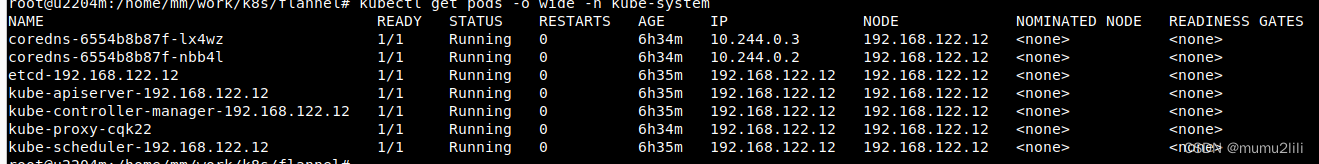

Verify the cluster

List the nodes

List the pods
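No commands are given for these two checks. A sketch using standard kubectl subcommands; `not_ready_nodes` is a hypothetical helper that filters the node listing read from standard input:

```shell
# Print the names of nodes whose STATUS column does not start with "Ready".
# Expects the output of `kubectl get nodes --no-headers` on stdin.
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage on the control-plane node:
#   kubectl get nodes -o wide
#   kubectl get pods --all-namespaces -o wide
#   kubectl get nodes --no-headers | not_ready_nodes
```

A node usually stays NotReady until the network plugin pods on it are running, so an empty result from `not_ready_nodes` is a reasonable sign that flannel is working.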

3. Install the worker nodes----------------------------------------------------

Join the worker node to the cluster

192.168.122.13 is the worker (slave) IP; 192.168.122.12 is the master IP

kubeadm join 192.168.122.12:6443 --node-name 192.168.122.13 --token eipqir.f26q8k19iofaihz0 --discovery-token-ca-cert-hash sha256:a98f46a770843d62c18187638b04b86967611db2a448842c2c826071d641dce2 --cri-socket=unix:///run/cri-dockerd.sock

Output like the following indicates success

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
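The token in the join command differs from the one printed by kubeadm init, presumably because a new token was created later; bootstrap tokens expire after their ttl (24h0m0s in the configuration shown earlier). A sketch of how the join parameters can be regenerated on the control-plane node. `kubeadm token create --print-join-command` is a standard kubeadm subcommand; `ca_cert_hash` is a hypothetical helper that wraps the openssl pipeline from the kubeadm documentation and assumes an RSA CA key, the kubeadm default:

```shell
# Print the value for --discovery-token-ca-cert-hash computed from a CA certificate.
# Defaults to the CA path that kubeadm writes on the control-plane node.
ca_cert_hash() {
    cert="${1:-/etc/kubernetes/pki/ca.crt}"
    hash=$(openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
    echo "sha256:${hash}"
}

# Usage on the control-plane node:
#   kubeadm token create --print-join-command   # new token plus the full join command
#   ca_cert_hash                                # recompute only the CA hash
```

When joining through cri-dockerd, the --cri-socket flag still has to be appended to the printed join command by hand.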

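4. Allow the master node to also act as a worker node (lab environment)-----------------------------------------

# kubeadm 1.28 taints control-plane nodes with node-role.kubernetes.io/control-plane:NoSchedule

kubectl taint nodes --all node-role.kubernetes.io/control-plane-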
