rte_pktmbuf_pool_create

/**

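@param name: name of the mempool

@param n: number of mbufs in the pool

@param cache_size: size of the per-lcore object cache (0 disables the cache)

@param priv_size: size of the application private area that sits between the rte_mbuf structure and the data buffer; must be a multiple of RTE_MBUF_PRIV_ALIGN

@param data_room_size: size of the data buffer in each mbuf, including the headroom; a common choice is RTE_MBUF_DEFAULT_DATAROOM + RTE_PKTMBUF_HEADROOM

@param socket_id: socket to allocate from; on NUMA systems this is the NUMA node id, otherwise it can be set to SOCKET_ID_ANY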

*/

struct rte_mempool *rte_pktmbuf_pool_create(const char *name,

unsigned n,

unsigned cache_size,

uint16_t priv_size,

uint16_t data_room_size,

int socket_id

)

rte_mbuf

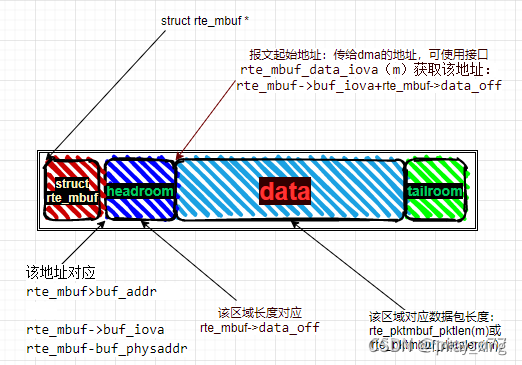

Structure of a single-segment rte_mbuf

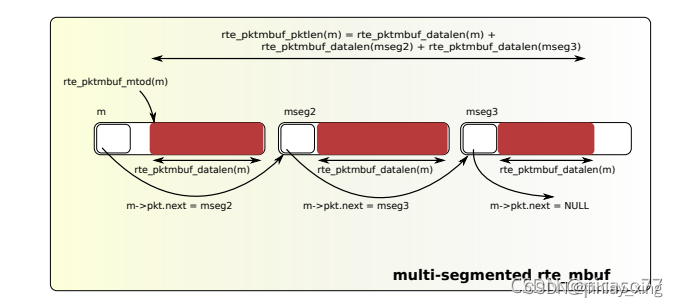

Structure of a multi-segment rte_mbuf

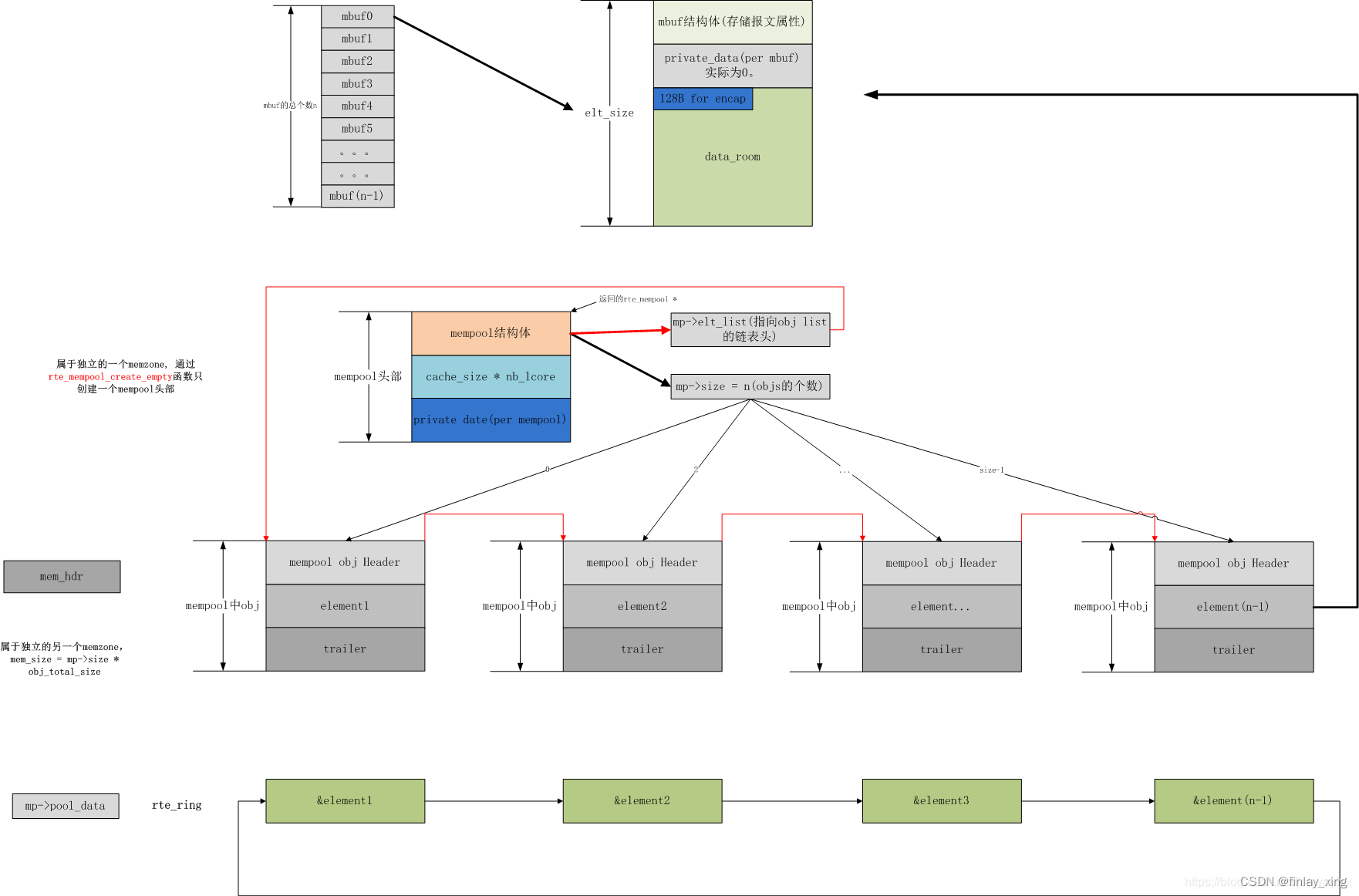

Creating an rte_mempool

Source of the rte_pktmbuf_pool_create function

/* helper to create a mbuf pool */

struct rte_mempool *

rte_pktmbuf_pool_create(const char *name, unsigned int n,

unsigned int cache_size, uint16_t priv_size, uint16_t data_room_size,

int socket_id)

{

return rte_pktmbuf_pool_create_by_ops(name, n, cache_size, priv_size,

data_room_size, socket_id, NULL);

}

The rte_pktmbuf_pool_create_by_ops function does two things:

1: Creates and initializes the mempool.

2: Allocates the objects and fills the mempool with them.

struct rte_mempool *

rte_pktmbuf_pool_create_by_ops(const char *name, unsigned int n,

unsigned int cache_size, uint16_t priv_size, uint16_t data_room_size,

int socket_id, const char *ops_name)

{

struct rte_mempool *mp;

struct rte_pktmbuf_pool_private mbp_priv;

const char *mp_ops_name = ops_name;

unsigned elt_size;

int ret;

if (RTE_ALIGN(priv_size, RTE_MBUF_PRIV_ALIGN) != priv_size) {

RTE_LOG(ERR, MBUF, "mbuf priv_size=%u is not aligned\n",

priv_size);

rte_errno = EINVAL;

return NULL;

}

/**

* Size of each element in the mempool: sizeof(struct rte_mbuf), plus the

* private data size (priv_size), plus the data buffer size (data_room_size).

*/

elt_size = sizeof(struct rte_mbuf) + (unsigned)priv_size +

(unsigned)data_room_size;

memset(&mbp_priv, 0, sizeof(mbp_priv));

mbp_priv.mbuf_data_room_size = data_room_size;

mbp_priv.mbuf_priv_size = priv_size;

// rte_mempool_create_empty() creates the mempool header; it holds no objects yet

mp = rte_mempool_create_empty(name, n, elt_size, cache_size,

sizeof(struct rte_pktmbuf_pool_private), socket_id, 0);

if (mp == NULL)

return NULL;

if (mp_ops_name == NULL)

mp_ops_name = rte_mbuf_best_mempool_ops();

ret = rte_mempool_set_ops_byname(mp, mp_ops_name, NULL);

if (ret != 0) {

RTE_LOG(ERR, MBUF, "error setting mempool handler\n");

rte_mempool_free(mp);

rte_errno = -ret;

return NULL;

}

rte_pktmbuf_pool_init(mp, &mbp_priv);

// Allocate the objects and fill mp with them: rte_mempool_populate_default() reserves the object memory and stores every object in mp

ret = rte_mempool_populate_default(mp);

if (ret < 0) {

rte_mempool_free(mp);

rte_errno = -ret;

return NULL;

}

rte_mempool_obj_iter(mp, rte_pktmbuf_init, NULL);

return mp;

}
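The two size rules at the top of rte_pktmbuf_pool_create_by_ops (priv_size must be aligned, and elt_size is the sum of three parts) can be tried out without DPDK. The following is a minimal standalone sketch, not DPDK code: the `sketch_` names are hypothetical, and the constants 8 (for RTE_MBUF_PRIV_ALIGN) and 128 (for sizeof(struct rte_mbuf)) are assumptions that should be checked against your own build.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed values; verify them against your DPDK headers. */
#define SKETCH_MBUF_PRIV_ALIGN  8u    /* stands in for RTE_MBUF_PRIV_ALIGN */
#define SKETCH_MBUF_STRUCT_SIZE 128u  /* stands in for sizeof(struct rte_mbuf) */

/* Round v up to the next multiple of align (align must be a power of two). */
static uint32_t sketch_align_ceil(uint32_t v, uint32_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (v + align - 1) & ~(align - 1);
}

/*
 * Per-element size as computed for the mempool, or 0 when priv_size is not
 * aligned (the real function sets rte_errno = EINVAL and returns NULL).
 */
static uint32_t sketch_elt_size(uint16_t priv_size, uint16_t data_room_size)
{
    if (sketch_align_ceil(priv_size, SKETCH_MBUF_PRIV_ALIGN) != priv_size)
        return 0;
    return SKETCH_MBUF_STRUCT_SIZE + (uint32_t)priv_size +
           (uint32_t)data_room_size;
}
```

Under these assumptions, a pool with no private area and a 2176-byte data room (2048 bytes of data plus 128 bytes of headroom) has 2304-byte elements, while a priv_size of 12 is rejected because it is not a multiple of 8.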

The rte_mempool_create_empty function: creates an empty mempool and initializes its header.

struct rte_mempool *

rte_mempool_create_empty(const char *name, unsigned n, unsigned elt_size,

unsigned cache_size, unsigned private_data_size,

int socket_id, unsigned flags)

{

char mz_name[RTE_MEMZONE_NAMESIZE];

struct rte_mempool_list *mempool_list;

struct rte_mempool *mp = NULL;

struct rte_tailq_entry *te = NULL;

const struct rte_memzone *mz = NULL;

size_t mempool_size;

unsigned int mz_flags = RTE_MEMZONE_1GB|RTE_MEMZONE_SIZE_HINT_ONLY;

struct rte_mempool_objsz objsz;

unsigned lcore_id;

int ret;

/* compilation-time checks */

RTE_BUILD_BUG_ON((sizeof(struct rte_mempool) &

RTE_CACHE_LINE_MASK) != 0);

RTE_BUILD_BUG_ON((sizeof(struct rte_mempool_cache) &

RTE_CACHE_LINE_MASK) != 0);

#ifdef RTE_LIBRTE_MEMPOOL_DEBUG

RTE_BUILD_BUG_ON((sizeof(struct rte_mempool_debug_stats) &

RTE_CACHE_LINE_MASK) != 0);

RTE_BUILD_BUG_ON((offsetof(struct rte_mempool, stats) &

RTE_CACHE_LINE_MASK) != 0);

#endif

// Get the head of the global rte_mempool_tailq list; once the new mempool structure is filled in, it is linked onto this list.

mempool_list = RTE_TAILQ_CAST(rte_mempool_tailq.head, rte_mempool_list);

/* asked for zero items */

if (n == 0) {

rte_errno = EINVAL;

return NULL;

}

/* asked cache too big */

if (cache_size > RTE_MEMPOOL_CACHE_MAX_SIZE ||

CALC_CACHE_FLUSHTHRESH(cache_size) > n) {

rte_errno = EINVAL;

return NULL;

}

/* "no cache align" imply "no spread" */

if (flags & MEMPOOL_F_NO_CACHE_ALIGN)

flags |= MEMPOOL_F_NO_SPREAD;

/* calculate mempool object sizes. */

// Compute the size of each object. An object has three parts: objhdr (header), elt_size (data area), and objtlr (trailer).

if (!rte_mempool_calc_obj_size(elt_size, flags, &objsz)) {

rte_errno = EINVAL;

return NULL;

}

rte_mcfg_mempool_write_lock();

/*

* reserve a memory zone for this mempool: private data is

* cache-aligned

*/

private_data_size = (private_data_size +

RTE_MEMPOOL_ALIGN_MASK) & (~RTE_MEMPOOL_ALIGN_MASK);

/* try to allocate tailq entry */

te = rte_zmalloc("MEMPOOL_TAILQ_ENTRY", sizeof(*te), 0);

if (te == NULL) {

RTE_LOG(ERR, MEMPOOL, "Cannot allocate tailq entry!\n");

goto exit_unlock;

}

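// The name mempool_size is misleading: it is only the size of the mempool header structure, not of the object memory.

// The cache part of the header covers the caches of all lcores added together.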

mempool_size = MEMPOOL_HEADER_SIZE(mp, cache_size);

mempool_size += private_data_size;

mempool_size = RTE_ALIGN_CEIL(mempool_size, RTE_MEMPOOL_ALIGN);

ret = snprintf(mz_name, sizeof(mz_name), RTE_MEMPOOL_MZ_FORMAT, name);

if (ret < 0 || ret >= (int)sizeof(mz_name)) {

rte_errno = ENAMETOOLONG;

goto exit_unlock;

}

// Reserve a memzone of mempool_size bytes, that is, just enough space for the mempool header structure

mz = rte_memzone_reserve(mz_name, mempool_size, socket_id, mz_flags);

if (mz == NULL)

goto exit_unlock;

/* init the mempool structure */

mp = mz->addr;

memset(mp, 0, MEMPOOL_HEADER_SIZE(mp, cache_size));

ret = strlcpy(mp->name, name, sizeof(mp->name));

if (ret < 0 || ret >= (int)sizeof(mp->name)) {

rte_errno = ENAMETOOLONG;

goto exit_unlock;

}

mp->mz = mz;

mp->size = n;

mp->flags = flags;

mp->socket_id = socket_id;

mp->elt_size = objsz.elt_size;

mp->header_size = objsz.header_size;

mp->trailer_size = objsz.trailer_size;

/* Size of default caches, zero means disabled. */

mp->cache_size = cache_size;

mp->private_data_size = private_data_size;

STAILQ_INIT(&mp->elt_list);

STAILQ_INIT(&mp->mem_list);

/*

* local_cache pointer is set even if cache_size is zero.

* The local_cache points to just past the elt_pa[] array.

*/

mp->local_cache = (struct rte_mempool_cache *)

RTE_PTR_ADD(mp, MEMPOOL_HEADER_SIZE(mp, 0));

/* Init all default caches. */

if (cache_size != 0) {

for (lcore_id = 0; lcore_id < RTE_MAX_LCORE; lcore_id++)

mempool_cache_init(&mp->local_cache[lcore_id],

cache_size);

}

te->data = mp;

rte_mcfg_tailq_write_lock();

// Link the newly created mempool onto mempool_list.

TAILQ_INSERT_TAIL(mempool_list, te, next);

rte_mcfg_tailq_write_unlock();

rte_mcfg_mempool_write_unlock();

return mp;

exit_unlock:

rte_mcfg_mempool_write_unlock();

rte_free(te);

rte_mempool_free(mp);

return NULL;

}
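The argument checks near the top of rte_mempool_create_empty explain why some (n, cache_size) pairs are rejected with EINVAL. Below is a small standalone sketch of those checks. The `sketch_` names are hypothetical, and two values are assumptions rather than facts taken from the listing above: 512 for RTE_MEMPOOL_CACHE_MAX_SIZE, and a flush threshold of 1.5 times cache_size for CALC_CACHE_FLUSHTHRESH.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>

/* Assumed value of RTE_MEMPOOL_CACHE_MAX_SIZE. */
#define SKETCH_CACHE_MAX_SIZE 512u

/* Assumed flush threshold: 1.5 x cache_size, done in integer arithmetic. */
static unsigned sketch_flushthresh(unsigned cache_size)
{
    assert(cache_size <= UINT_MAX / 3u); /* keep the multiply from overflowing */
    return cache_size * 3u / 2u;
}

/* Mirrors the "zero items" and "cache too big" checks of the real function. */
static bool sketch_pool_args_ok(unsigned n, unsigned cache_size)
{
    if (n == 0)
        return false;                       /* asked for zero items */
    if (cache_size > SKETCH_CACHE_MAX_SIZE)
        return false;                       /* cache larger than the maximum */
    if (sketch_flushthresh(cache_size) > n)
        return false;                       /* cache too big for this pool */
    return true;
}
```

With these assumptions, a pool of 8191 objects with a cache of 250 passes, while a pool of 100 objects with a cache of 80 fails because the flush threshold (120) exceeds the pool size.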


The rte_mempool_calc_obj_size function:

/* get the header, trailer and total size of a mempool element. */

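// Computes the header, trailer, and total size of one mempool element; for an mbuf pool this is effectively the memory footprint of one mbuf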

uint32_t

rte_mempool_calc_obj_size(uint32_t elt_size, uint32_t flags,

struct rte_mempool_objsz *sz)

{

struct rte_mempool_objsz lsz;

sz = (sz != NULL) ? sz : &lsz;

// size of the object header

sz->header_size = sizeof(struct rte_mempool_objhdr);

if ((flags & MEMPOOL_F_NO_CACHE_ALIGN) == 0)

sz->header_size = RTE_ALIGN_CEIL(sz->header_size,

RTE_MEMPOOL_ALIGN);

// size of the object trailer

#ifdef RTE_LIBRTE_MEMPOOL_DEBUG

sz->trailer_size = sizeof(struct rte_mempool_objtlr);

#else

sz->trailer_size = 0;

#endif

/* element size is 8 bytes-aligned at least */

// element size of the object after alignment

sz->elt_size = RTE_ALIGN_CEIL(elt_size, sizeof(uint64_t));

/* expand trailer to next cache line */

if ((flags & MEMPOOL_F_NO_CACHE_ALIGN) == 0) {

sz->total_size = sz->header_size + sz->elt_size +

sz->trailer_size;

sz->trailer_size += ((RTE_MEMPOOL_ALIGN -

(sz->total_size & RTE_MEMPOOL_ALIGN_MASK)) &

RTE_MEMPOOL_ALIGN_MASK);

}

/*

* increase trailer to add padding between objects in order to

* spread them across memory channels/ranks

*/

if ((flags & MEMPOOL_F_NO_SPREAD) == 0) {

unsigned new_size;

new_size = optimize_object_size(sz->header_size + sz->elt_size +

sz->trailer_size);

sz->trailer_size = new_size - sz->header_size - sz->elt_size;

}

/* this is the size of an object, including header and trailer */

// total size of the object

sz->total_size = sz->header_size + sz->elt_size + sz->trailer_size;

return sz->total_size;

}

The rte_mempool_populate_default function: reserves the memory for all objects and enqueues the objects into the ring

int

rte_mempool_populate_default(struct rte_mempool *mp)

{

unsigned int mz_flags = RTE_MEMZONE_1GB|RTE_MEMZONE_SIZE_HINT_ONLY;

char mz_name[RTE_MEMZONE_NAMESIZE];

const struct rte_memzone *mz;

ssize_t mem_size;

size_t align, pg_sz, pg_shift = 0;

rte_iova_t iova;

unsigned mz_id, n;

int ret;

bool need_iova_contig_obj;

size_t max_alloc_size = SIZE_MAX;

// With the ring-based handler this ends up calling common_ring_alloc -> ring_alloc

ret = mempool_ops_alloc_once(mp);

if (ret != 0)

return ret;

/* mempool must not be populated */

if (mp->nb_mem_chunks != 0)

return -EEXIST;

need_iova_contig_obj = !(mp->flags & MEMPOOL_F_NO_IOVA_CONTIG);

ret = rte_mempool_get_page_size(mp, &pg_sz);

if (ret < 0)

return ret;

if (pg_sz != 0)

pg_shift = rte_bsf32(pg_sz);

for (mz_id = 0, n = mp->size; n > 0; mz_id++, n -= ret) {

size_t min_chunk_size;

// Total memory needed for the remaining n objects, roughly n * (mp->header_size + mp->elt_size + mp->trailer_size)

mem_size = rte_mempool_ops_calc_mem_size(

mp, n, pg_shift, &min_chunk_size, &align);

if (mem_size < 0) {

ret = mem_size;

goto fail;

}

ret = snprintf(mz_name, sizeof(mz_name),

RTE_MEMPOOL_MZ_FORMAT "_%d", mp->name, mz_id);

if (ret < 0 || ret >= (int)sizeof(mz_name)) {

ret = -ENAMETOOLONG;

goto fail;

}

/* if we're trying to reserve contiguous memory, add appropriate

* memzone flag.

*/

if (min_chunk_size == (size_t)mem_size)

mz_flags |= RTE_MEMZONE_IOVA_CONTIG;

/* Allocate a memzone, retrying with a smaller area on ENOMEM */

// Reserve the memory for the mempool objects, all of it in one memzone if possible

do {

mz = rte_memzone_reserve_aligned(mz_name,

RTE_MIN((size_t)mem_size, max_alloc_size),

mp->socket_id, mz_flags, align);

if (mz != NULL || rte_errno != ENOMEM)

break;

max_alloc_size = RTE_MIN(max_alloc_size,

(size_t)mem_size) / 2;

} while (mz == NULL && max_alloc_size >= min_chunk_size);

if (mz == NULL) {

ret = -rte_errno;

goto fail;

}

if (need_iova_contig_obj)

iova = mz->iova;

else

iova = RTE_BAD_IOVA;

if (pg_sz == 0 || (mz_flags & RTE_MEMZONE_IOVA_CONTIG))

// Fill in the memhdr and every object header, link them into the corresponding lists of mp, and enqueue each object into the ring so that users can get it

ret = rte_mempool_populate_iova(mp, mz->addr,

iova, mz->len,

rte_mempool_memchunk_mz_free,

(void *)(uintptr_t)mz);

else

ret = rte_mempool_populate_virt(mp, mz->addr,

mz->len, pg_sz,

rte_mempool_memchunk_mz_free,

(void *)(uintptr_t)mz);

if (ret < 0) {

rte_memzone_free(mz);

goto fail;

}

}

return mp->size;

fail:

rte_mempool_free_memchunks(mp);

return ret;

}
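The do/while loop in the middle of rte_mempool_populate_default is easier to follow in isolation: it asks for the whole area first and halves the request each time the reservation fails, giving up once the request would drop below min_chunk_size. The sketch below models only that loop. `sketch_reserve` is a hypothetical stand-in for rte_memzone_reserve_aligned() that succeeds whenever the request fits into `largest_free` bytes, and every failure is treated as ENOMEM, whereas the real loop stops immediately on any other error.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical allocator: succeeds only if len fits into largest_free. */
static int sketch_reserve(size_t len, size_t largest_free)
{
    return len <= largest_free;
}

static size_t sketch_min(size_t a, size_t b)
{
    return a < b ? a : b;
}

/* Returns the size of the chunk that was reserved, or 0 on failure. */
static size_t sketch_reserve_chunk(size_t mem_size, size_t min_chunk_size,
                                   size_t largest_free)
{
    size_t max_alloc_size = SIZE_MAX;

    assert(mem_size > 0 && min_chunk_size > 0);
    do {
        size_t want = sketch_min(mem_size, max_alloc_size);

        if (sketch_reserve(want, largest_free))
            return want;
        /* failed: halve the upper bound and try again */
        max_alloc_size = sketch_min(max_alloc_size, mem_size) / 2;
    } while (max_alloc_size >= min_chunk_size);

    return 0;
}
```

In the real function the chunk that was obtained is populated, n is reduced by the number of objects that fit, and the outer for loop reserves another memzone for the rest. Note that max_alloc_size is declared outside that outer loop, so a reduced limit carries over to later chunks.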

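Getting memory from an rte_mempool

Objects are taken from a mempool through the rte_mempool_get_bulk interface. Its source is shown below:

1: rte_mempool_default_cache returns the cache that belongs to the calling core (lcore_id), that is, the cache control block &mp->local_cache[lcore_id].

2: rte_mempool_generic_get then fetches the requested objects.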

static __rte_always_inline int

rte_mempool_get_bulk(struct rte_mempool *mp, void **obj_table, unsigned int n)

{

struct rte_mempool_cache *cache;

// get the cache that belongs to the current lcore_id

cache = rte_mempool_default_cache(mp, rte_lcore_id());

return rte_mempool_generic_get(mp, obj_table, n, cache);

}

static __rte_always_inline int

rte_mempool_generic_get(struct rte_mempool *mp, void **obj_table,

unsigned int n, struct rte_mempool_cache *cache)

{

int ret;

ret = __mempool_generic_get(mp, obj_table, n, cache);

if (ret == 0)

__mempool_check_cookies(mp, obj_table, n, 1);

return ret;

}

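The __mempool_generic_get function (if there is no cache, or n >= cache->size, it skips the cache and dequeues from the ring directly):

1: First check whether cache->len, the number of objects currently in the cache, covers the requested count.

2: If it does not, refill the cache from the ring first, then serve the request from the cache.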

static __rte_always_inline int

__mempool_generic_get(struct rte_mempool *mp, void **obj_table,

unsigned int n, struct rte_mempool_cache *cache)

{

int ret;

uint32_t index, len;

void **cache_objs;

/* No cache provided or cannot be satisfied from cache */

if (unlikely(cache == NULL || n >= cache->size))

goto ring_dequeue;

cache_objs = cache->objs;

/* Can this be satisfied from the cache? */

// check whether the cache currently holds enough objects (cache->len) for the request

if (cache->len < n) {

/* No. Backfill the cache first, and then fill from it */

uint32_t req = n + (cache->size - cache->len);

/* How many do we require i.e. number to fill the cache + the request */

// not enough objects in the cache, so dequeue req objects from the ring into the cache

ret = rte_mempool_ops_dequeue_bulk(mp,

&cache->objs[cache->len], req);

if (unlikely(ret < 0)) {

/*

* In the off chance that we are buffer constrained,

* where we are not able to allocate cache + n, go to

* the ring directly. If that fails, we are truly out of

* buffers.

*/

goto ring_dequeue;

}

cache->len += req;

}

/* Now fill in the response ... */

// take the objects from the cache

for (index = 0, len = cache->len - 1; index < n; ++index, len--, obj_table++)

*obj_table = cache_objs[len];

cache->len -= n;

__MEMPOOL_STAT_ADD(mp, get_success, n);

return 0;

ring_dequeue:

/* get remaining objects from ring */

// take the objects from the ring

ret = rte_mempool_ops_dequeue_bulk(mp, obj_table, n);

if (ret < 0)

__MEMPOOL_STAT_ADD(mp, get_fail, n);

else

__MEMPOOL_STAT_ADD(mp, get_success, n);

return ret;

}
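The cache logic of __mempool_generic_get can be exercised with a toy model that uses plain integers instead of object pointers. This is a simplified sketch and not the DPDK implementation: all `toy_` names and the sizes are hypothetical, the common pool is a simple stack rather than a ring, and statistics are left out. It keeps the three behaviours of the original: bypass the cache when n >= cache size, backfill the cache with n + (size - len) objects when it runs short, and fall back to the common pool if the backfill fails.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_CACHE_SIZE 4u   /* hypothetical per-lcore cache size */
#define TOY_POOL_SIZE  16u  /* hypothetical number of objects in the pool */

struct toy_pool {
    int objs[TOY_POOL_SIZE];
    unsigned count;                 /* objects left in the common pool */
};

struct toy_cache {
    unsigned size;                  /* nominal cache size */
    unsigned len;                   /* objects currently cached */
    int objs[TOY_CACHE_SIZE * 3];   /* room for a backfill on top of size */
};

static void toy_pool_init(struct toy_pool *p)
{
    for (unsigned i = 0; i < TOY_POOL_SIZE; i++)
        p->objs[i] = (int)i;
    p->count = TOY_POOL_SIZE;
}

/* All-or-nothing bulk dequeue, like a bulk dequeue on the ring. */
static int toy_dequeue_bulk(struct toy_pool *p, int *out, unsigned n)
{
    if (p->count < n)
        return -1;
    for (unsigned i = 0; i < n; i++)
        out[i] = p->objs[--p->count];
    return 0;
}

static int toy_get_bulk(struct toy_pool *p, struct toy_cache *c,
                        int *out, unsigned n)
{
    assert(p != NULL && out != NULL);

    /* no cache, or request too large for it: go to the common pool */
    if (c == NULL || n >= c->size)
        return toy_dequeue_bulk(p, out, n);

    if (c->len < n) {
        /* backfill: the request plus enough to refill the cache */
        unsigned req = n + (c->size - c->len);

        if (toy_dequeue_bulk(p, &c->objs[c->len], req) < 0)
            return toy_dequeue_bulk(p, out, n);
        c->len += req;
    }

    /* serve the request from the top of the cache */
    for (unsigned i = 0; i < n; i++)
        out[i] = c->objs[--c->len];
    return 0;
}
```

Starting from a full pool and an empty cache, a request for 2 objects pulls 6 from the pool (2 for the caller and 4 to refill the cache), so the next request for 3 is served without touching the pool at all.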

Diagram of the mbuf and mempool structures
