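A short recap:

The video and the lecture notes explain why linear regression uses mean squared error as its loss function by assuming that the error follows a Gaussian distribution. It is an interesting argument and a neat proof technique: the maximum-likelihood problem turns into an equivalent least-squares problem. It is worth working through carefully.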
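The argument can be sketched as follows under the usual assumptions (the notation is mine, not necessarily the lecture's): each target is a linear function of the input plus independent Gaussian noise with zero mean and variance $\sigma^{2}$, over $m$ training examples.

$$
\begin{aligned}
y^{(i)} &= \theta^{T}x^{(i)} + \epsilon^{(i)}, \qquad \epsilon^{(i)} \sim \mathcal{N}(0,\sigma^{2}) \\
\ell(\theta) &= \sum_{i=1}^{m} \log\left[\frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{\left(y^{(i)}-\theta^{T}x^{(i)}\right)^{2}}{2\sigma^{2}}\right)\right] \\
&= -m\log\left(\sqrt{2\pi}\,\sigma\right) - \frac{1}{2\sigma^{2}}\sum_{i=1}^{m}\left(y^{(i)}-\theta^{T}x^{(i)}\right)^{2}
\end{aligned}
$$

The first term and the factor $1/(2\sigma^{2})$ do not depend on $\theta$, so maximizing $\ell(\theta)$ is the same as minimizing $\sum_{i}\left(y^{(i)}-\theta^{T}x^{(i)}\right)^{2}$, which is the squared-error loss up to a constant factor.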

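For logistic regression, the objective function also comes from a maximum-likelihood model, which makes it easy to derive. The dataset is the MNIST handwritten-digit database, and this experiment classifies only the digits 0 and 1. I have also uploaded the dataset here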
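For reference, here is a sketch of the maximum-likelihood objective and its gradient. The symbols $w$, $x_i$ (a column of `train`), $y_i$ and the learning rate $\alpha$ (`learnRate`) are chosen to mirror the code; the derivation is the standard one, not quoted from the lecture.

$$
\begin{aligned}
h_w(x) &= \frac{1}{1+e^{-w^{T}x}} \\
\ell(w) &= \sum_{i=1}^{n}\left[\,y_i\log h_w(x_i) + (1-y_i)\log\left(1-h_w(x_i)\right)\right] \\
\nabla_w\,\ell(w) &= \sum_{i=1}^{n}\left(y_i - h_w(x_i)\right)x_i
\end{aligned}
$$

Batch gradient ascent on $\ell$ therefore updates $w \leftarrow w + \alpha\sum_{i}\left(y_i - h_w(x_i)\right)x_i$, while the stochastic variant uses one sample at a time, $w \leftarrow w - \alpha\left(h_w(x_i) - y_i\right)x_i$. Both forms appear in the code.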

clc

clear all

X = load('mnist_all');

mytrain0 = X.train0;

mytrain1 = X.train1;

mytest0 = X.test0;

mytest1 = X.test1;

test = [mytest0',mytest1'];

test = ismember(test,0);

test = ismember(test,0);

test =double( [ones(1,size(test,2));test]);

testy = [zeros(size(mytest0,1),1);ones(size(mytest1,1),1)];

num0 = size(mytrain0,1);

num1 = size(mytrain1,1);

train = [mytrain0',mytrain1'];

train = ismember(train,0);

train = ismember(train,0);

numall = size(train,2);

index = randperm(numall);

y = [zeros(num0,1);ones(num1,1)];

train = train(:,index);

y = y(index);

train =double( [ones(1,numall);train]);

learnRate = 0.1;

w = rand(size(train,1),1);

%% stochastic gradient descent

iter = 100;

% for j = 1:iter

% for i = 1:numall

% dis = my_sigmod(w'*train(:,i))-y(i);

% luckyrate = train(:,i)*dis;

% w = w- learnRate*luckyrate;

% end

% w_f = w;

% end

%% batch gradient descent

for k = 1:1

for f = 1:100

temp = repmat(y' - my_sigmod(w'*train),size(train,1),1);

dis = temp.*train;

sum_ = sum(dis,2);

w = w+ learnRate *sum_;

end

w_f = w;

end

num_correct = 0;

num_error = 0;

num_all = size(test,2);

for i = 1:num_all

if my_sigmod(w_f'*test(:,i))>0.5

preValue = 1;

else

preValue = 0;

end

if preValue==testy(i)

num_correct = num_correct+1;

else

num_error = num_error +1;

end

end

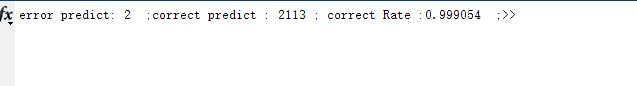

fprintf('error predict: %d ;',num_error);

fprintf('correct predict : %d ; ',num_correct);

fprintf('correct Rate :%f ;', num_correct/num_all);

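The program implements both stochastic gradient descent and batch gradient descent, and both work well. As the figure shows, the accuracy reaches 99.9%.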
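The code calls `my_sigmod`, whose definition is not included in the post. Below is a minimal sketch, assuming it is the elementwise logistic function (this is my guess at the helper, not the author's file). Written as an anonymous function, it can be placed in the script right after `clear all`.

```matlab
% Assumed definition of the sigmoid helper used in the script.
% Works elementwise on scalars, vectors and matrices.
my_sigmod = @(z) 1 ./ (1 + exp(-z));

% Example: a row of scores gives a row of probabilities in (0, 1).
p = my_sigmod([-2 0 2]);
```

Because the division is elementwise (`./`), the same call works for a single sample, `my_sigmod(w'*train(:,i))`, and for the whole batch, `my_sigmod(w'*train)`.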
