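MySQL stores data in a B+ tree, whereas Cassandra uses an LSM tree. As a result, a delete in Cassandra is itself a write operation: what gets written is a tombstone, and the data is only physically removed later, during compaction.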
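To make the "delete is a write" idea concrete, here is a minimal toy sketch of an LSM-style store. It is not Cassandra's storage engine: the class `ToyLSM` and all of its methods are hypothetical names made up for illustration, and the sketch ignores details such as timestamps and gc_grace_seconds (real tombstones are kept for a grace period before compaction may drop them).

```python
# Toy LSM-style store: illustrative only, not Cassandra's actual storage engine.
TOMBSTONE = object()  # marker written in place of a deleted value


class ToyLSM:
    def __init__(self):
        self.memtable = {}   # newest writes, held in memory
        self.sstables = []   # immutable flushed tables, oldest first

    def put(self, key, value):
        self.memtable[key] = value

    def delete(self, key):
        # A delete is just another write: it records a tombstone.
        self.memtable[key] = TOMBSTONE

    def flush(self):
        # Freeze the memtable into a new immutable table.
        if self.memtable:
            self.sstables.append(dict(self.memtable))
            self.memtable = {}

    def get(self, key):
        # Newest data wins: check the memtable, then tables from newest to oldest.
        for table in [self.memtable] + self.sstables[::-1]:
            if key in table:
                value = table[key]
                return None if value is TOMBSTONE else value
        return None

    def compact(self):
        # Merge all tables (newer entries overwrite older ones), then
        # physically drop every key whose latest entry is a tombstone.
        merged = {}
        for table in self.sstables:
            merged.update(table)
        self.sstables = [{k: v for k, v in merged.items() if v is not TOMBSTONE}]

    def stored_entries(self):
        # Number of entries physically held in flushed tables, tombstones included.
        return sum(len(t) for t in self.sstables)


store = ToyLSM()
store.put("k", "v1")
store.flush()
store.delete("k")   # writes a tombstone, the old value is still stored
store.flush()
```

At this point `store.get("k")` already returns `None`, yet both the old value and the tombstone are still stored; only `store.compact()` removes them physically.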

Cassandra TRACING:

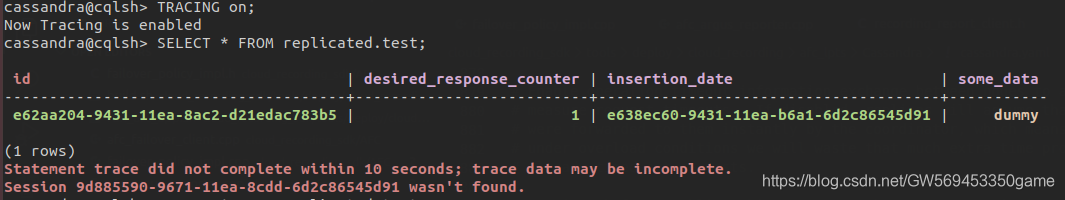

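If a query fails after TRACING ON has been enabled in Cassandra:

the cause is most likely a misconfigured system_traces keyspace that makes cross-DC writes time out (with tracing on, Cassandra has to write to the tables in system_traces). In that case you need to run: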

alter keyspace system_traces xxx

Cassandra Read Repair parameters:

About nodetool repair:

nodetool repair # by default repairs the node's own (primary) token ranges as well as the token ranges it stores as a replica, so several nodes end up comparing data with each other

nodetool repair -pr # repairs only the node's own primary token ranges, so to repair all the data of a keyspace you must run nodetool repair -pr on every node that holds that keyspace
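The difference between the two invocations can be sketched with a toy ring. This is only an illustration under simplifying assumptions: one token range per node, a SimpleStrategy-like placement in which a range is replicated to its primary owner and the next RF - 1 nodes clockwise, and the hypothetical helper names `ranges_held` and `repaired_ranges`.

```python
# Toy ring: each primary range is identified by the index of the node owning it.
# Assumption: a range lives on its primary owner and the next RF - 1 nodes clockwise.

def ranges_held(node, num_nodes, rf):
    """Ranges stored on `node`: its primary range plus the ranges it replicates."""
    return [(node - i) % num_nodes for i in range(rf)]


def repaired_ranges(num_nodes, rf, primary_only):
    """Count how often each range is repaired when every node runs repair once."""
    counts = {r: 0 for r in range(num_nodes)}
    for node in range(num_nodes):
        # -pr: only the node's primary range; otherwise every range it holds.
        targets = [node] if primary_only else ranges_held(node, num_nodes, rf)
        for r in targets:
            counts[r] += 1
    return counts


full = repaired_ranges(num_nodes=6, rf=3, primary_only=False)
pr = repaired_ranges(num_nodes=6, rf=3, primary_only=True)
print("plain repair on every node:", full)
print("repair -pr on every node:  ", pr)
```

In this model, running plain repair on every node repairs each range RF times, while running -pr on every node covers each range exactly once, which is why -pr has to be run on all nodes.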

Besides manually running repair, Cassandra also triggers a repair when the data is queried, i.e. read repair. About Read Repair:

Two types of read repair: foreground and background.

Foreground here means blocking – we complete all operations before returning to the client. Background means non-blocking – we begin the background repair operation and then return to the client before it has completed.

Parameters for configuring background Read Repair:

dclocal_read_repair_chance = 0.1 # the probability of a random read repair within the same DC is 10%

read_repair_chance = 0.0 # the probability of a random read repair across the whole cluster is 0

speculative_retry = xxx

• ALWAYS: The coordinator node always sends extra read requests to all replicas after every read of this table.

• NONE: The coordinator node never sends extra read requests after a read of this table.

• Xpercentile: The coordinator node sends extra read requests if the table’s latency is higher than normal. That is, extra reads are triggered after a percent of the normal read latency of a table elapses. For example, the coordinator sends out extra read requests after waiting for 48ms (80 percent of 60ms) if a table’s latency is 60 milliseconds on average.

• Nms: The coordinator node sends out extra read requests if it doesn’t receive any results from the target node in N milliseconds.

Note: using dclocal_read_repair_chance together with speculative_retry appears to trigger a bug that can cause cross-DC read repair (check this link). One of the two has to be turned off, for example:

alter table test with dclocal_read_repair_chance=0.0;

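About Cassandra metrics:

How to check replication latency:

Replication happens between nodes; the CrossNodeLatency metric shows Cassandra's replication latency:

Note that Cassandra's latency metrics cover the node's entire uptime. Count increases monotonically and is reset only when the node restarts.

Count=117 means the node has received 117 messages since it started, with a mean latency of 520712.29534117645 microseconds (0.52 seconds);

OneMinuteRate = 1.356... is a moving average over the last minute, expressed in RateUnit (events/second), i.e. about 1.36 messages received per second;

99thPercentile : 5960319.812 means that 99% of the messages were received within 5.96 seconds;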

…

curl 'http://127.0.0.1:8778/jolokia/read/org.apache.cassandra.metrics:type=Messaging,name=*' | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2311 0 2311 0 0 2274 0 --:--:-- 0:00:01 --:--:-- 2274

{

"request": {

"mbean": "org.apache.cassandra.metrics:name=*,type=Messaging",

"type": "read"

},

"value": {

"org.apache.cassandra.metrics:name=CrossNodeLatency,type=Messaging": {

"Mean": 520712.29534117645,

"StdDev": 1200436.6643582033,

"75thPercentile": 268650.95,

"98thPercentile": 5960319.812,

"RateUnit": "events/second",

"95thPercentile": 4139110.981,

"99thPercentile": 5960319.812,

"Max": 5960319.812,

"Count": 117,

"FiveMinuteRate": 0.33695134016087785,

"50thPercentile": 186563.16,

"MeanRate": 2.661284598969726,

"Min": 2816.16,

"OneMinuteRate": 1.3564407341962146,

"DurationUnit": "microseconds",

"999thPercentile": 5960319.812,

"FifteenMinuteRate": 0.11664276131878955

}

},

"timestamp": 1586250903,

"status": 200

}
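As a small aid for reading such a response, the following sketch converts the duration fields to seconds. It embeds an excerpt of the response shown above instead of calling the endpoint; the helper `summarize` and the `TO_SECONDS` table are hypothetical names introduced only for this example.

```python
import json

# Excerpt of the Jolokia response shown above (only the fields used here).
response = json.loads("""
{
  "value": {
    "org.apache.cassandra.metrics:name=CrossNodeLatency,type=Messaging": {
      "Mean": 520712.29534117645,
      "99thPercentile": 5960319.812,
      "Count": 117,
      "OneMinuteRate": 1.3564407341962146,
      "RateUnit": "events/second",
      "DurationUnit": "microseconds"
    }
  }
}
""")

# Conversion factors from the reported DurationUnit to seconds.
TO_SECONDS = {"microseconds": 1e-6, "milliseconds": 1e-3, "seconds": 1.0}


def summarize(metric):
    """Convert the duration fields of a timer metric to seconds."""
    factor = TO_SECONDS[metric["DurationUnit"]]
    return {
        "count": metric["Count"],
        "mean_s": metric["Mean"] * factor,
        "p99_s": metric["99thPercentile"] * factor,
        "one_minute_rate": metric["OneMinuteRate"],
        "rate_unit": metric["RateUnit"],
    }


key = "org.apache.cassandra.metrics:name=CrossNodeLatency,type=Messaging"
summary = summarize(response["value"][key])
print(summary)
```

Reading DurationUnit and RateUnit from the response, rather than hard-coding them, avoids mixing up microseconds with milliseconds or per-second with per-minute rates.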

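About Cassandra internode encryption:

By default Cassandra uses port 7000 for internode traffic, but when SSL encryption is configured it uses port 7001.

With SSL encryption enabled, a node with an incorrect certificate cannot join the cluster and fails with a certificate validation error. Also, once SSL is enabled, port 7000 can no longer be used for traffic between nodes. Client connections still work, however (likewise without SSL).

The following script is recommended for generating the keystore and truststore: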

#!/bin/bash

KEY_STORE_PATH="$PWD/resources/opt/cassandra/conf/certs"

mkdir -p "$KEY_STORE_PATH"

KEY_STORE="$KEY_STORE_PATH/cassandra.keystore"

PKS_KEY_STORE="$KEY_STORE_PATH/cassandra.pks12.keystore"

TRUST_STORE="$KEY_STORE_PATH/cassandra.truststore"

PASSWORD=xxxx

CLUSTER_NAME=mycluster

CLUSTER_PUBLIC_CERT="$KEY_STORE_PATH/CLUSTER_${CLUSTER_NAME}_PUBLIC.cer"

CLIENT_PUBLIC_CERT="$KEY_STORE_PATH/CLIENT_${CLUSTER_NAME}_PUBLIC.cer"

### Cluster key setup.

# Create the cluster key for cluster communication.

keytool -genkey -keyalg RSA -alias "${CLUSTER_NAME}_CLUSTER" -keystore "$KEY_STORE" -storepass "$PASSWORD" -keypass "$PASSWORD" \
  -dname "CN=MyCompany Image $CLUSTER_NAME cluster, OU=A, O=MyCompany, L=Shanghai, ST=CA, C=CN, DC=MyCompany, DC=com" \
  -validity 36500

# Create the public key for the cluster which is used to identify nodes.

keytool -export -alias "${CLUSTER_NAME}_CLUSTER" -file "$CLUSTER_PUBLIC_CERT" -keystore "$KEY_STORE" \
  -storepass "$PASSWORD" -keypass "$PASSWORD" -noprompt

# Import the identity of the cluster public cluster key into the trust store so that nodes can identify each other.

keytool -import -v -trustcacerts -alias "${CLUSTER_NAME}_CLUSTER" -file "$CLUSTER_PUBLIC_CERT" -keystore "$TRUST_STORE" \
  -storepass "$PASSWORD" -keypass "$PASSWORD" -noprompt

### Client key setup.

# Create the client key for CQL.

keytool -genkey -keyalg RSA -alias "${CLUSTER_NAME}_CLIENT" -keystore "$KEY_STORE" -storepass "$PASSWORD" -keypass "$PASSWORD" \
  -dname "CN=MyCompany Image $CLUSTER_NAME client, OU=MyCompany, O=MyCompany, L=Shanghai, ST=CA, C=CN, DC=MyCompany, DC=com" \
  -validity 36500

# Create the public key for the client to identify itself.

keytool -export -alias "${CLUSTER_NAME}_CLIENT" -file "$CLIENT_PUBLIC_CERT" -keystore "$KEY_STORE" \
  -storepass "$PASSWORD" -keypass "$PASSWORD" -noprompt

# Import the identity of the client pub key into the trust store so nodes can identify this client.

keytool -importcert -v -trustcacerts -alias "${CLUSTER_NAME}_CLIENT" -file "$CLIENT_PUBLIC_CERT" -keystore "$TRUST_STORE" \
  -storepass "$PASSWORD" -keypass "$PASSWORD" -noprompt

keytool -importkeystore -srckeystore "$KEY_STORE" -destkeystore "$PKS_KEY_STORE" -deststoretype PKCS12 \
  -srcstorepass "$PASSWORD" -deststorepass "$PASSWORD"

openssl pkcs12 -in "$PKS_KEY_STORE" -nokeys -out "${CLUSTER_NAME}_CLIENT.cer.pem" -passin "pass:$PASSWORD"

openssl pkcs12 -in "$PKS_KEY_STORE" -nodes -nocerts -out "${CLUSTER_NAME}_CLIENT.key.pem" -passin "pass:$PASSWORD"

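If you only need encrypted communication between nodes, you can ignore the client certificate. The client certificate is used for two-way SSL authentication, in which the server also verifies that the client is legitimate. For details see: http://cloudurable.com/blog/cassandra-ssl-cluster-setup/index.html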

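Lessons learned from using Cassandra:

1. When Cassandra replicates across regions over a network with heavy packet loss, a high write volume produces a large number of hints, which hurts data synchronization. You can see a large number of files in the /var/lib/cassandra/hints directory. Moderately raising hinted_handoff_throttle_in_kb and max_hints_delivery_threads improves synchronization performance but does not solve the underlying problem. It is better to place replicas so that they are stored locally and to avoid shipping data to replicas over a poor network. The coordinator's log shows a large number of entries like this: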

INFO [HintsDispatcher:73] 2020-05-25 03:26:03,615 HintsDispatchExecutor.java:289 - Finished hinted handoff of file 6766cc74-59a5-455f-90cf-2ef8b91df6ea-1590376417285-1.hints to endpoint /1.2.3.4: 6766cc74-59a5-455f-90cf-2ef8b91df6ea, partially

The metrics also show a high HintsTimedOut count:

curl http://localhost:8778/jolokia/list

curl http://localhost:8778/jolokia/read/org.apache.cassandra.metrics:type=HintsService,name=HintsTimedOut | python -m json.tool

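Running nodetool repair does not help at this point either. You can only wait a long time (minutes to hours, depending on data volume and network conditions) until the coordinator has delivered all the hints to that node; only then is the data back in sync.

2. When writing time-series data, you can set the compaction strategy to TimeWindowCompactionStrategy. Note, however, that setting gc_grace_seconds to 0 prevents hints from being generated (or makes them expire as soon as they are generated). Check this link.

Three parameters: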

- max_hint_window_ms controlling how long to collect hints for.

- gc_grace_seconds indicating hint expiration time. (It is also the time after which tombstones can be removed.)

- Data TTL determining duration of data validity.

Recommended settings:

- When not using data TTL, gc_grace_seconds (default: 10 days) should be (far) longer than max_hint_window_ms (default: 3 hours).

- When using data TTL, gc_grace_seconds should be (reasonably) larger than the smaller of max_hint_window_ms and data TTL. For data with a TTL, gc_grace_seconds can usually be lowered in line with the TTL so that tombstones are removed sooner, but it should not be set below max_hint_window_ms (default: 3 hours).
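The two rules of thumb above can be turned into a quick sanity check. This is only a sketch: `check_gc_grace` is a hypothetical helper rather than anything shipped with Cassandra, it encodes just the two bullet rules plus the gc_grace_seconds = 0 caveat, and it treats "far longer" simply as "longer". The 3-hour default for max_hint_window_ms is the one quoted in the text.

```python
def check_gc_grace(gc_grace_seconds, max_hint_window_ms=3 * 60 * 60 * 1000, ttl_seconds=None):
    """Return a list of warnings for the rules of thumb listed above."""
    hint_window_s = max_hint_window_ms / 1000  # compare everything in seconds
    warnings = []
    if gc_grace_seconds == 0:
        warnings.append("gc_grace_seconds is 0: hints are not generated or expire immediately")
    if ttl_seconds is None:
        # No TTL: gc_grace_seconds must outlast the hint window.
        if gc_grace_seconds <= hint_window_s:
            warnings.append("gc_grace_seconds should be far longer than max_hint_window_ms")
    else:
        # With TTL: compare against the smaller of hint window and TTL.
        if gc_grace_seconds <= min(hint_window_s, ttl_seconds):
            warnings.append("gc_grace_seconds should exceed min(max_hint_window_ms, TTL)")
    return warnings


# Default-like settings: 10 days of grace, 3-hour hint window, no TTL.
print(check_gc_grace(864000))
# gc_grace_seconds = 0 on a table without TTL.
print(check_gc_grace(0))
# 10 minutes of grace with a 30-minute TTL.
print(check_gc_grace(600, ttl_seconds=1800))
```

An empty list means the settings satisfy both rules; each violated rule adds one message.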

If you only have a small amount of data but still want Cassandra's P2P characteristics, consider Hazelcast.

- https://thelastpickle.com/blog/2016/07/27/about-deletes-and-tombstones.html

- https://thelastpickle.com/blog/2016/12/08/TWCS-part1.html

- http://www.benstopford.com/2015/02/14/log-structured-merge-trees/

- https://priyankvex.wordpress.com/2019/04/28/introduction-to-lsm-trees-may-the-logs-be-with-you/

- https://thelastpickle.com/blog/
