1. Introduction

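In the earlier examples, once a Spark job exited, everything about it was destroyed, so there was no way to go back and inspect a finished application when debugging or tuning it. This is what the Spark History Server is for. As long as applications write event logs to a shared directory, the History Server reads those logs and rebuilds each application's web UI. Users can therefore still view an application's jobs, stages, tasks and performance metrics after it has finished, which makes the History Server an important tool for monitoring Spark workloads and for finding ways to improve their efficiency and performance.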
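The History Server can only show applications that wrote event logs to the directory it reads. As a minimal sketch, assuming jobs are submitted through the Spark Operator (the `spark-operator` namespace used below suggests this, but it is an assumption), a `SparkApplication` could turn on event logging through `spec.sparkConf`. The name `example-job` is hypothetical, the other required spec fields (image, main class, driver and executor settings) are omitted, and the directory is the `eventLog.dir` value from the chart's `values.yaml`.

```yaml
# Fragment only (assumes Spark Operator v1beta2): merge into a complete SparkApplication.
apiVersion: sparkoperator.k8s.io/v1beta2
kind: SparkApplication
metadata:
  name: example-job            # hypothetical application name
  namespace: spark-operator
spec:
  sparkConf:
    # Write event logs so the History Server can replay this application later
    "spark.eventLog.enabled": "true"
    # Must be the same directory as spark.history.fs.logDirectory on the server
    "spark.eventLog.dir": "s3a://dlink/event"
    # The job also needs S3A access to this bucket, e.g. the same
    # spark.hadoop.fs.s3a.* settings used in the History Server ConfigMap.
```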

2. Kubernetes deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spark-history-server
  namespace: spark-operator
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: spark-history-server
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  template:
    metadata:
      labels:
        app: spark-history-server
    spec:
      containers:
        - args:
            - /opt/spark/bin/spark-class
            - org.apache.spark.deploy.history.HistoryServer
          env:
            - name: HADOOP_USER_NAME
              value: dlink
          image: deploy.deepexi.com/fastdata/spark:dev_20230705
          imagePullPolicy: IfNotPresent
          name: spark-history-server
          ports:
            - containerPort: 18080
              name: http
              protocol: TCP
          resources:
            limits:
              cpu: '2'
              memory: 4Gi
            requests:
              cpu: '1'
              memory: 2Gi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            # spark-defaults.conf from the ConfigMap lands in /opt/spark/conf
            - mountPath: /opt/spark/conf
              name: spark-conf-configmap
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 420
            # Must match metadata.name of the ConfigMap below
            name: spark-history-configmap
          name: spark-conf-configmap
```

------------------- service -----------------

NodePort mode (the UI is reachable at `http://<node-ip>:35601`):

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: spark-history-server
  name: spark-history-server
  namespace: spark-operator
spec:
  type: NodePort
  externalTrafficPolicy: Cluster
  ports:
    # 35601 is outside the default NodePort range (30000-32767), so the
    # cluster's --service-node-port-range must be configured to include it
    - nodePort: 35601
      port: 18080
      protocol: TCP
      targetPort: 18080
  selector:
    app: spark-history-server
  sessionAffinity: None
```

------------------configmap---------------

```yaml
# The .Values.* expressions are Helm template values: they are filled in when the
# chart in section 3 is rendered, or must be replaced by hand for kubectl apply.
apiVersion: v1
kind: ConfigMap
metadata:
  name: spark-history-configmap
  namespace: spark-operator
data:
  spark-defaults.conf: |
    spark.hadoop.fs.s3a.path.style.access=true
    spark.hadoop.fs.s3a.endpoint={{.Values.global.minio.url}}
    spark.hadoop.fs.s3a.secret.key={{.Values.global.minio.secretkey}}
    spark.hadoop.fs.s3a.access.key={{.Values.global.minio.accesskey}}
    spark.hadoop.fs.s3a.buffer.dir=/tmp
    spark.hadoop.fs.s3a.connection.ssl.enabled=false
    spark.history.fs.cleaner.enabled=true
    spark.eventLog.enabled=true
    spark.eventLog.dir={{.Values.eventLog.dir}}
    spark.history.fs.logDirectory={{.Values.eventLog.dir}}
    proxy-user=dlink
```
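`spark.history.fs.cleaner.enabled=true` turns on periodic deletion of old event logs. How often the cleaner runs and how old a log must be before it is deleted are controlled by `spark.history.fs.cleaner.interval` and `spark.history.fs.cleaner.maxAge`. The sketch below writes them out explicitly with Spark's documented defaults (1d and 7d) as a fragment to merge into the ConfigMap's `spark-defaults.conf`; the values are illustrative, not taken from the original setup.

```yaml
# Fragment only: merge these lines into data.spark-defaults.conf of the ConfigMap.
data:
  spark-defaults.conf: |
    spark.history.fs.cleaner.enabled=true
    # How often the cleaner checks for expired event logs (Spark default: 1d)
    spark.history.fs.cleaner.interval=1d
    # Event logs older than this are deleted (Spark default: 7d)
    spark.history.fs.cleaner.maxAge=7d
```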

-----------------ingress------------------

```yaml
# Pick the Ingress apiVersion that matches the cluster's Kubernetes version
{{- if semverCompare ">=1.19-0" .Capabilities.KubeVersion.GitVersion -}}
---
apiVersion: networking.k8s.io/v1
{{- else if semverCompare ">=1.14-0" .Capabilities.KubeVersion.GitVersion -}}
---
apiVersion: networking.k8s.io/v1beta1
{{- else -}}
---
apiVersion: extensions/v1beta1
{{- end }}
kind: Ingress
metadata:
  name: spark-history-server
  namespace: spark-operator
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/proxy-body-size: 102400m
spec:
  rules:
    - host: spark-history.{{ .Values.envHost }}
      http:
        paths:
          - path: /
            {{- if semverCompare ">=1.18-0" $.Capabilities.KubeVersion.GitVersion }}
            # pathType only exists from Kubernetes 1.18 on
            pathType: ImplementationSpecific
            {{- end }}
            backend:
              {{- if semverCompare ">=1.19-0" $.Capabilities.KubeVersion.GitVersion }}
              service:
                name: spark-history-server
                port:
                  number: 18080
              {{- else }}
              serviceName: spark-history-server
              servicePort: 18080
              {{- end }}
```
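To see what the version switch produces, this is roughly what the template renders to on Kubernetes 1.19 or later, assuming a hypothetical `envHost` of `example.com` (running `helm template` on the chart is one way to check the real output). On older clusters the `apiVersion` falls back to `networking.k8s.io/v1beta1` or `extensions/v1beta1`, and the backend uses `serviceName`/`servicePort` instead of `service.name`/`service.port.number`.

```yaml
# Sketch of the rendered output on Kubernetes >= 1.19 (envHost=example.com is hypothetical)
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: spark-history-server
  namespace: spark-operator
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/proxy-body-size: 102400m
spec:
  rules:
    - host: spark-history.example.com
      http:
        paths:
          - path: /
            pathType: ImplementationSpecific
            backend:
              service:
                name: spark-history-server
                port:
                  number: 18080
```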

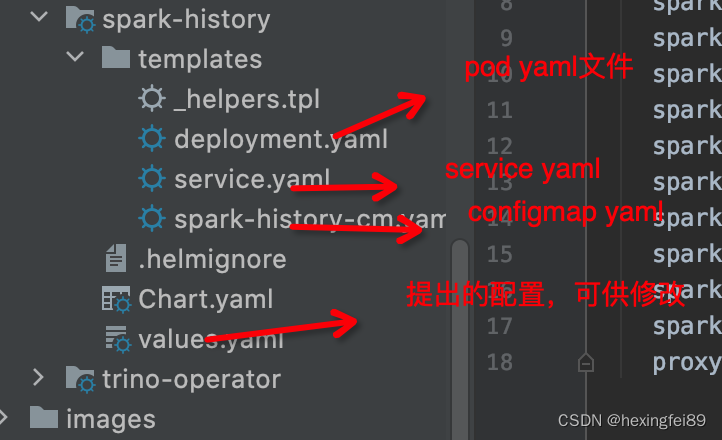

3. Deploying with a Helm chart

Prerequisite: Helm is installed and configured. Create the chart with:

```shell
helm create spark-history-server
```

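Once the chart has been created, fill it with the files below (the manifests go under `templates/`, `values.yaml` stays at the chart root). Also delete the other default templates generated by `helm create`, such as `templates/hpa.yaml`, `templates/serviceaccount.yaml` and `templates/NOTES.txt`: they read values like `.Values.autoscaling`, `.Values.serviceAccount` and `.Values.ingress` that the `values.yaml` below does not define, so rendering would fail.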

---------------deployment.yaml----------------

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spark-history-server
  namespace: spark-operator
spec:
  selector:
    matchLabels:
      app: spark-history-server
  replicas: 1
  template:
    metadata:
      labels:
        app: spark-history-server
    spec:
      containers:
        - image: {{ .Values.images.image }}
          name: spark-history-server
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: "2"
              memory: 4Gi
            requests:
              cpu: "1"
              memory: 2Gi
          args: ["/opt/spark/bin/spark-class", "org.apache.spark.deploy.history.HistoryServer"]
          env:
            - name: HADOOP_USER_NAME
              value: dlink
          ports:
            - containerPort: 18080
              name: http
          volumeMounts:
            - mountPath: /opt/spark/conf
              name: spark-history-configmap
      volumes:
        - configMap:
            defaultMode: 420
            name: spark-history-configmap
          name: spark-history-configmap
```

----------------service---------------------

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: spark-history-server
  name: spark-history-server
  namespace: spark-operator
spec:
  type: ClusterIP
  ports:
    - port: 18080
      protocol: TCP
      targetPort: 18080
      name: http
  selector:
    app: spark-history-server
```

--------------------configmap-----------------

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: spark-history-configmap
  namespace: spark-operator
data:
  spark-defaults.conf: |
    spark.hadoop.fs.s3a.path.style.access=true
    spark.hadoop.fs.s3a.endpoint={{.Values.global.minio.url}}
    spark.hadoop.fs.s3a.secret.key={{.Values.global.minio.secretkey}}
    spark.hadoop.fs.s3a.access.key={{.Values.global.minio.accesskey}}
    spark.hadoop.fs.s3a.buffer.dir=/tmp
    spark.hadoop.fs.s3a.connection.ssl.enabled=false
    spark.history.fs.cleaner.enabled=true
    spark.eventLog.enabled=true
    spark.eventLog.dir={{.Values.eventLog.dir}}
    spark.history.fs.logDirectory={{.Values.eventLog.dir}}
    proxy-user=dlink
```

-------------------values.yaml----------------

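```yaml
replicaCount: 1

images:
  repository:
  pullPolicy: IfNotPresent
  # Full image reference used by the Deployment template
  image: deploy.deepexi.com/dlink/spark:release-3.0.0-20230926

service:
  type: ClusterIP
  port: 80

eventLog:
  # Used for both spark.eventLog.dir and spark.history.fs.logDirectory
  dir: s3a://dlink/event

# Referenced by the ConfigMap and Ingress templates; usually supplied by a parent
# chart or an environment-specific values file. The values are hypothetical placeholders.
global:
  minio:
    url: http://minio.example.com:9000
    accesskey: <access-key>
    secretkey: <secret-key>

envHost: example.com

nodeSelector: {}
tolerations: []
affinity: {}
```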

---------------ingress.yaml--------------

Same as the Ingress template in section 2 above.

Once the chart is complete, install (or upgrade) the release with:

```shell
helm upgrade --install spark-history ./spark-history-server -f ./spark-history-server/values.yaml
```
