Deploying a Kubernetes Cluster with a Single-Instance etcd


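I. Introduction to Kubernetes and Related Components

1. Kubernetes overview

Kubernetes is Google's open-source container cluster management system. Built on Docker, it provides a container scheduling service together with a suite of features such as resource scheduling, load balancing and failover, service registration, and dynamic scaling, forming a container-based cloud platform. Official site: https://kubernetes.io/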

2. Kubernetes architecture diagram

3. Common Kubernetes components

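master: the Kubernetes management node.

apiserver: provides the API through which users manage the whole container cluster. The API Server is the only component that talks to etcd (no other component accesses etcd directly), and every interaction in a Kubernetes cluster goes through the API Server. For example:

All queries and management operations on the cluster go through the API.

Modules never call each other directly; each one does its part of the work by talking to the API Server, and the authentication and authorization provided by the API Server keep the whole cluster secure.

scheduler: the Kubernetes scheduling service.

Replication Controllers: keep the configured number of pod replicas running, which makes pods highly available.

minion: the machine that actually runs the containers. A Kubernetes cluster needs many minions to provide compute capacity.

container: a container, which runs services and programs.

Pod: in Kubernetes the smallest unit of scheduling is not a bare container but an abstraction called a Pod. A Pod is the smallest deployable unit that can be created, destroyed, scheduled, and managed, and it contains one container or a group of containers.

kube-proxy: a proxy that forwards ports, similar to the load balancer (director) in LVS-NAT mode. It resolves port conflicts between services that use the same port on the same host and makes services reachable from outside. kube-proxy balances traffic across backends using random or round-robin algorithms.

etcd: stores the Kubernetes configuration; think of it as the database of k8s. It holds all information about the nodes, Pods, networks, and so on in the container platform.

Services: the outermost unit in Kubernetes. A Service exposes a virtual IP and port through which the Pods we define can be reached. Forwarding is implemented with iptables NAT rules: in the older userspace proxy mode the rules forward to a random port opened by kube-proxy, while in the iptables proxy mode (the default since Kubernetes 1.2) they forward directly to the backend Pods.

Labels: key/value pairs used to distinguish Pods, Services, and Replication Controllers. They only identify the relationships among Pods, Services, and Replication Controllers; to operate on one of these objects itself, you refer to it by its name.

Deployment: a Kubernetes Deployment is the way to update Pods and ReplicaSets (the next generation of the Replication Controller). You describe only the desired state in the Deployment object, and the Deployment controller moves the actual state toward that desired state. Deployments make unattended rollouts possible and greatly reduce the communication overhead and operational risk of a release.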

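4. How the components relate

The Kubernetes architecture consists of one master and multiple minions. The master serves an API, accepts requests from kubectl, and schedules and manages the whole cluster. kubectl is the command-line management tool of the k8s platform.

A Replication Controller defines how many pods or containers should be running. If the cluster is running fewer than the configured number, the Replication Controller schedules containers onto the minions to restore the pod count.

A Service defines what is actually exposed to clients: one Service maps to multiple containers running behind it.

Kubernetes is a management platform; kube-proxy on each minion provides the real IP on which services are offered. Clients that access a service provided by Kubernetes connect directly to kube-proxy.

In Kubernetes the pod is the basic unit. A pod can hold multiple containers that together provide one function, and those containers are always deployed on the same minion. A minion is the machine on which kubelet runs the containers; minions receive instructions from the master to create pods or containers.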

II. Deploying a Kubernetes Cluster with a Single-Instance etcd

1. Cluster planning

Host environment

• 192.168.56.7 (node2): Kubernetes compute node

• 192.168.56.6 (node1): Kubernetes compute node

• 192.168.56.5 (master): etcd service and Kubernetes control plane

2. Preparing the base environment

2.1 Operating system: CentOS 7

2.2 Base operating system setup

2.2.1 Disable the firewall:

# systemctl stop firewalld

# systemctl disable firewalld

2.2.2 Configure the hosts file:

Note: add the following entries to /etc/hosts on all three nodes:

# vim /etc/hosts

192.168.56.5 k8s_master

192.168.56.6 k8s_node1

192.168.56.7 k8s_node2
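If you script this step instead of editing the file in vim, appending only the entries that are missing keeps re-runs from duplicating lines. A minimal sketch, assuming bash and an /etc/hosts-style file; `add_host_entry` is a hypothetical helper, not part of any package:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless an uncommented line already maps IP to NAME.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  if awk -v ip="$ip" -v n="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$hosts_file"; then
    return 0  # mapping already present; nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage on each node (as root):
# add_host_entry /etc/hosts 192.168.56.5 k8s_master
# add_host_entry /etc/hosts 192.168.56.6 k8s_node1
# add_host_entry /etc/hosts 192.168.56.7 k8s_node2
```

Running the three calls twice leaves exactly one line per host.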

2.2.3 Disable SELinux:

Disable it temporarily (the change is lost after a reboot):

# setenforce 0

Check the SELinux status:

# getenforce

Disable SELinux permanently (on CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, so editing the real file is enough):

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
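`getenforce` only reports the running mode, so it can help to read the persisted setting back after the sed edit. A minimal sketch, assuming the standard CentOS 7 file layout; `selinux_config_mode` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: selinux_config_mode [FILE]
# Prints the value of the last uncommented SELINUX= line.
# SELINUXTYPE= is not matched because "=" must follow "SELINUX" directly.
selinux_config_mode() {
  local cfg="${1:-/etc/selinux/config}"
  sed -n 's/^[[:space:]]*SELINUX=\([[:alnum:]]*\).*/\1/p' "$cfg" | tail -n 1
}

# Usage: selinux_config_mode   # expected to print "disabled" after the edit
```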

2.2.4 Disable swap

Disable it temporarily:

# swapoff -a

Disable it permanently:

# sed -i 's/.*swap.*/#&/' /etc/fstab
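The sed command above comments out every line that mentions "swap" anywhere, and each re-run adds another `#`. A sketch of an idempotent alternative, assuming a standard whitespace-separated fstab; `comment_swap_entries` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment_swap_entries [FILE]
# Prefixes "#" only to uncommented lines whose 3rd field (fs type) is "swap",
# so running it twice never produces "##".
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp="$(mktemp)"
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # rewrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Usage (as root): comment_swap_entries /etc/fstab
```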

2.2.5 Configure the yum repository (163 mirror of the CentOS 7 base repository):

# mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

# wget -P /etc/yum.repos.d/ http://mirrors.163.com/.help/CentOS7-Base-163.repo

# yum clean all

# yum makecache

# yum repolist

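3. Deploying etcd and flanneld

3.1 About etcd:

etcd is a distributed, high-performance, highly available key-value store developed and maintained by CoreOS. Inspired by ZooKeeper and Doozer, it is written in Go and uses the Raft consensus algorithm to replicate its log, which guarantees strong consistency.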

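Features:

Simple: a user-facing API reachable with curl (HTTP+JSON)

Secure: optional SSL client certificate authentication

Fast: about 1,000 writes per second on a single instance

Reliable: uses Raft to guarantee consistency

Note: all of the following steps are performed on the master host:

1) Deploy the etcd service (single-instance mode)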

[root@master ~]# yum -y install etcd flannel ntp

2) Configure the main etcd parameters

[root@master ~]# vim /etc/etcd/etcd.conf

Set the parameters as follows:

#[Member]
#ETCD_CORS=""
# Directory where etcd stores its data
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_LISTEN_PEER_URLS="http://localhost:2380"
# Client URLs etcd listens on
ETCD_LISTEN_CLIENT_URLS="http://localhost:2379,http://192.168.56.5:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
# Name of this etcd member: set it per member in a cluster; the default is fine for a single instance
ETCD_NAME="default"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#[Clustering]
#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"
# Client URL etcd advertises to its clients
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.56.5:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"

3) Start the etcd service and check that it is running

[root@master ~]# systemctl start etcd # start the etcd service
[root@master ~]# systemctl enable etcd # start etcd automatically at boot

[root@master ~]# netstat -anpt | grep 2379 # check the etcd client port
tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 1708/etcd
tcp 0 0 192.168.56.5:2379 0.0.0.0:* LISTEN 1708/etcd
tcp 0 0 192.168.56.5:50012 192.168.56.5:2379 ESTABLISHED 3517/kube-apiserver
tcp 0 0 192.168.56.5:2379 192.168.56.5:50412 ESTABLISHED 1708/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:39046 ESTABLISHED 1708/etcd

[root@master ~]# etcdctl member list # list the etcd cluster members; there is only one here
8e9e05c52164694d: name=default peerURLs=http://localhost:2380 clientURLs=http://192.168.56.5:2379 isLeader=true

4) Configure the etcd network settings

[root@master ~]# etcdctl mkdir /k8s/network # create the /k8s/network directory to hold the flannel network information
[root@master ~]# etcdctl set /k8s/network/config '{"Network":"10.255.0.0/16"}' # store the network config as a string value
[root@master ~]# etcdctl get /k8s/network/config # read the network config back
{"Network":"10.255.0.0/16"}
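With `Network` set to 10.255.0.0/16, flannel gives each host a smaller subnet (the /24s 10.255.99.0, 10.255.7.0, and 10.255.40.0 seen later). Per flannel's configuration documentation, `SubnetLen` defaults to 24 and `SubnetMin` defaults to the second subnet of `Network`, so a quick calculation gives an upper bound on the number of hosts. A sketch; `flannel_subnet_count` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: flannel_subnet_count CIDR [SUBNET_LEN]
# Prints how many per-host /SUBNET_LEN subnets fit inside CIDR.
flannel_subnet_count() {
  local cidr="$1" subnet_len="${2:-24}"
  local prefix="${cidr#*/}"   # "10.255.0.0/16" -> "16"
  if (( subnet_len < prefix || subnet_len > 32 )); then
    echo "invalid: /$subnet_len does not fit inside $cidr" >&2
    return 1
  fi
  echo $(( 1 << (subnet_len - prefix) ))
}

# Usage: flannel_subnet_count 10.255.0.0/16
```

For 10.255.0.0/16 this prints 256; if the `SubnetMin` default applies, 255 of those subnets can actually be handed out.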

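3.2 How flanneld works

Note: the following is done on the master.

1. flanneld reads the value of /k8s/network/config from etcd.

2. It allocates a subnet for this host and registers it in etcd.

3. It writes the subnet information to /run/flannel/subnet.env.

1) Install flanneld

[root@master ~]# yum -y install etcd flannel ntp

2) Configure the flanneld parameters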

[root@master pod]# vim /etc/sysconfig/flanneld

# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
# (address of the etcd service)
FLANNEL_ETCD_ENDPOINTS="http://192.168.56.5:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
# (etcd directory that holds the network config)
FLANNEL_ETCD_PREFIX="/k8s/network"
# Any additional options that you want to pass
# (physical interface used for communication; set it to this machine's NIC name)
FLANNEL_OPTIONS="--iface=ens33"

3) Start flanneld and check the flannel network

[root@master ~]# systemctl start flanneld
[root@master ~]# systemctl enable flanneld

[root@master ~]# ifconfig
ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.56.5 netmask 255.255.255.0 broadcast 192.168.56.255
        inet6 fe80::20c:29ff:fe7c:25e0 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:7c:25:e0 txqueuelen 1000 (Ethernet)
        RX packets 363883 bytes 183411929 (174.9 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 272021 bytes 153547711 (146.4 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 10.255.99.0 netmask 255.255.0.0 destination 10.255.99.0
        inet6 fe80::7ff8:6ec:d1db:510d prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 2816014 bytes 1350052898 (1.2 GiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 2816014 bytes 1350052898 (1.2 GiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

4) Check the subnet information

[root@master ~]# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.255.0.0/16
FLANNEL_SUBNET=10.255.99.1/24
FLANNEL_MTU=1472
FLANNEL_IPMASQ=false

Note: what subnet.env is for

• A script then converts subnet.env into a Docker environment file, /run/flannel/docker.

• The address of docker0 is determined by the FLANNEL_SUBNET parameter in /run/flannel/subnet.env.

[root@master ~]# cat /run/flannel/docker
DOCKER_OPT_BIP="--bip=10.255.99.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=true"
DOCKER_OPT_MTU="--mtu=1472"
DOCKER_NETWORK_OPTIONS=" --bip=10.255.99.1/24 --ip-masq=true --mtu=1472"
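flannel ships its own helper script (mk-docker-opts.sh) for this conversion. The sketch below is not that script, only a simplified re-implementation, assuming bash, that reproduces the output shown above: the subnet becomes `--bip`, the MTU becomes `--mtu`, and Docker is told to masquerade exactly when flannel does not (`FLANNEL_IPMASQ=false` gives `--ip-masq=true`). `subnet_env_to_docker_opts` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: subnet_env_to_docker_opts FILE
# Reads a flannel subnet.env file and prints Docker options in the
# format of /run/flannel/docker.
subnet_env_to_docker_opts() {
  (
    # Source in a subshell so FLANNEL_* variables do not leak to the caller.
    . "$1" || exit 1
    bip="--bip=${FLANNEL_SUBNET}"
    mtu="--mtu=${FLANNEL_MTU}"
    # Exactly one of flannel and Docker should masquerade outbound traffic.
    if [ "${FLANNEL_IPMASQ}" = "true" ]; then
      ipmasq="--ip-masq=false"
    else
      ipmasq="--ip-masq=true"
    fi
    printf 'DOCKER_OPT_BIP="%s"\n' "$bip"
    printf 'DOCKER_OPT_IPMASQ="%s"\n' "$ipmasq"
    printf 'DOCKER_OPT_MTU="%s"\n' "$mtu"
    printf 'DOCKER_NETWORK_OPTIONS=" %s %s %s"\n' "$bip" "$ipmasq" "$mtu"
  )
}

# Usage: subnet_env_to_docker_opts /run/flannel/subnet.env
```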

4. Deploying the Kubernetes cluster

4.1 Deploying the Kubernetes control plane

Note: the following is done on the master.

1) Install Kubernetes

[root@master ~]# yum -y install kubernetes

2) Configure the common Kubernetes parameters

[root@master ~]# vim /etc/kubernetes/config

###
# kubernetes system config
# The following values are used to configure various aspects of all
# kubernetes services, including
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
# (log level)
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
# (false: privileged containers are not allowed)
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
# (address and listening port of the Kubernetes control node)
KUBE_MASTER="--master=http://192.168.56.5:8080"

3) Configure the apiserver parameters

[root@master ~]# vim /etc/kubernetes/apiserver

###
# kubernetes system config
# The following values are used to configure the kube-apiserver
# The address on the local server to listen to.
# (listen on all interfaces)
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
# The port on the local server to listen on.
# KUBE_API_PORT="--port=8080"
# Port minions listen on
# KUBELET_PORT="--kubelet-port=10250"
# Comma separated list of nodes in the etcd cluster
# (etcd address and port)
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.56.5:2379"
# Address range to use for services
# (every Service gets its cluster IP from this range; pod IPs come from the flannel network 10.255.0.0/16)
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
# default admission control policies
# (AlwaysAdmit: admit every request without restriction)
KUBE_ADMISSION_CONTROL="--admission-control=AlwaysAdmit"
# Add your own!
KUBE_API_ARGS=""
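The Service range (10.254.0.0/16) should not overlap the flannel pod network (10.255.0.0/16) or the host network (192.168.56.0/24); otherwise a cluster IP and a pod or node IP could collide. This is a general networking requirement rather than something stated above. A sketch of a pure-bash overlap check; `ip_to_int` and `cidrs_overlap` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helper: ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  # 10# forces base 10 so octets such as "08" are not read as octal.
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Hypothetical helper: cidrs_overlap CIDR_A CIDR_B
# Exits 0 if the two IPv4 ranges share at least one address.
cidrs_overlap() {
  local a_ip="${1%/*}" a_len="${1#*/}" b_ip="${2%/*}" b_len="${2#*/}"
  # Two CIDR blocks overlap iff they agree under the shorter (wider) prefix.
  local len=$(( a_len < b_len ? a_len : b_len ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$a_ip") & mask) == ($(ip_to_int "$b_ip") & mask) ))
}

# Usage:
# cidrs_overlap 10.254.0.0/16 10.255.0.0/16 && echo "service and pod ranges overlap"
```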

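4) Configure the kube-scheduler parameters

[root@master ~]# vim /etc/kubernetes/scheduler

###
# kubernetes scheduler config
# default config should be adequate
# Add your own!
# (listen on all interfaces; a bare "0.0.0.0" is not a flag, so pass it through --address)
KUBE_SCHEDULER_ARGS="--address=0.0.0.0"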

5) Start kube-apiserver, kube-controller-manager, kube-scheduler, and flanneld on the master

[root@master ~]# systemctl start kube-apiserver kube-controller-manager kube-scheduler flanneld
[root@master ~]# systemctl enable kube-apiserver kube-controller-manager kube-scheduler flanneld
[root@master ~]# ifconfig
ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.56.5 netmask 255.255.255.0 broadcast 192.168.56.255
        inet6 fe80::20c:29ff:fe7c:25e0 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:7c:25:e0 txqueuelen 1000 (Ethernet)
        RX packets 394993 bytes 188813723 (180.0 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 300857 bytes 170716035 (162.8 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 10.255.99.0 netmask 255.255.0.0 destination 10.255.99.0
        inet6 fe80::7ff8:6ec:d1db:510d prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 3130110 bytes 1500651217 (1.3 GiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3130110 bytes 1500651217 (1.3 GiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

4.2 Deploying the Kubernetes compute nodes

4.2.1 Deploying compute node 1

Note: run the following on node1:

1) Install Kubernetes and flanneld

[root@node1 ~]# yum -y install kubernetes flannel ntp

2) Configure the flanneld parameters

[root@node1 ~]# vim /etc/sysconfig/flanneld

# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
# (address of the etcd server)
FLANNEL_ETCD_ENDPOINTS="http://192.168.56.5:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
# (etcd network directory)
FLANNEL_ETCD_PREFIX="/k8s/network"
# Any additional options that you want to pass
# (physical interface used for communication; set it to the server's actual NIC name)
FLANNEL_OPTIONS="--iface=ens33"

3) Configure the common Kubernetes parameters

[root@node1 ~]# vim /etc/kubernetes/config

###
# kubernetes system config
# The following values are used to configure various aspects of all
# kubernetes services, including
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
# (address and port of the master)
KUBE_MASTER="--master=http://192.168.56.5:8080"

4) Configure the kubelet parameters

[root@node1 ~]# vim /etc/kubernetes/kubelet

###
# kubernetes kubelet (minion) config
# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
# (listen on all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"
# The port for the info server to serve on
# KUBELET_PORT="--port=10250"
# You may leave this blank to use the actual hostname
# (name of this compute node; keep it consistent with the machine's hostname)
KUBELET_HOSTNAME="--hostname-override=node1"
# location of the api-server
# (address and port of the apiserver on the master)
KUBELET_API_SERVER="--api-servers=http://192.168.56.5:8080"
# pod infrastructure container
# (image pulled for each pod's infrastructure container)
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=docker.io/tianyebj/pod-infrastructure:latest"
# Add your own!
KUBELET_ARGS=""
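The node registers under the `--hostname-override` value, which is the name later shown by `kubectl get nodes`. A minimal sketch for reading that value back so it can be compared with the machine's hostname, assuming the sysconfig layout above; `kubelet_hostname_override` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: kubelet_hostname_override [FILE]
# Prints the value passed to --hostname-override in an uncommented
# KUBELET_HOSTNAME= line; prints nothing if there is none.
kubelet_hostname_override() {
  sed -n 's/^[[:space:]]*KUBELET_HOSTNAME="--hostname-override=\([^"]*\)".*/\1/p' \
    "${1:-/etc/kubernetes/kubelet}"
}

# Usage on a node:
# override="$(kubelet_hostname_override)"
# [ "$override" = "$(hostname -s)" ] || echo "kubelet registers as '$override', hostname is '$(hostname -s)'"
```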

5) Start the services on the compute node

[root@node1 ~]# systemctl start flanneld kube-proxy kubelet docker
[root@node1 ~]# systemctl enable flanneld kube-proxy kubelet docker
[root@node1 ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 10.255.7.1 netmask 255.255.255.0 broadcast 0.0.0.0
        ether 02:42:2c:a6:0e:cf txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.56.6 netmask 255.255.255.0 broadcast 192.168.56.255
        inet6 fe80::20c:29ff:fea7:2ec8 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:a7:2e:c8 txqueuelen 1000 (Ethernet)
        RX packets 246762 bytes 224753290 (214.3 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 195589 bytes 37610468 (35.8 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 10.255.7.0 netmask 255.255.0.0 destination 10.255.7.0
        inet6 fe80::1d70:2f33:97fc:ef09 prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 72 bytes 6048 (5.9 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 72 bytes 6048 (5.9 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

6) Check the kube-proxy ports

[root@node1 ~]# netstat -antp | grep proxy

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 1881/kube-proxy
tcp 0 0 192.168.56.6:60328 192.168.56.5:8080 ESTABLISHED 1881/kube-proxy
tcp 0 0 192.168.56.6:60340 192.168.56.5:8080 ESTABLISHED 1881/kube-proxy

4.2.2 Deploying compute node 2

Note: run the following on node2:

1) Install Kubernetes and flanneld

[root@node2 ~]# yum -y install kubernetes flannel ntp

2) Configure the flanneld parameters

[root@node2 ~]# vim /etc/sysconfig/flanneld

# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
# (address of the etcd server)
FLANNEL_ETCD_ENDPOINTS="http://192.168.56.5:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
# (etcd network directory)
FLANNEL_ETCD_PREFIX="/k8s/network"
# Any additional options that you want to pass
# (physical interface used for communication; set it to the server's actual NIC name)
FLANNEL_OPTIONS="--iface=ens33"

3) Configure the common Kubernetes parameters

[root@node2 ~]# vim /etc/kubernetes/config

###
# kubernetes system config
# The following values are used to configure various aspects of all
# kubernetes services, including
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
# (address and port of the master)
KUBE_MASTER="--master=http://192.168.56.5:8080"

4) Configure the kubelet parameters

[root@node2 ~]# vim /etc/kubernetes/kubelet

###
# kubernetes kubelet (minion) config
# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
# (listen on all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"
# The port for the info server to serve on
# KUBELET_PORT="--port=10250"
# You may leave this blank to use the actual hostname
# (name of this compute node; keep it consistent with the machine's hostname)
KUBELET_HOSTNAME="--hostname-override=node2"
# location of the api-server
# (address and port of the apiserver on the master)
KUBELET_API_SERVER="--api-servers=http://192.168.56.5:8080"
# pod infrastructure container
# (image pulled for each pod's infrastructure container)
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=docker.io/tianyebj/pod-infrastructure:latest"
# Add your own!
KUBELET_ARGS=""

5) Start the services on the compute node

[root@node2 ~]# systemctl start flanneld kube-proxy kubelet docker
[root@node2 ~]# systemctl enable flanneld kube-proxy kubelet docker
[root@node2 ~]# ifconfig
docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1472
        inet 10.255.40.1 netmask 255.255.255.0 broadcast 0.0.0.0
        inet6 fe80::42:f1ff:fe0f:2e1 prefixlen 64 scopeid 0x20<link>
        ether 02:42:f1:0f:02:e1 txqueuelen 0 (Ethernet)
        RX packets 3 bytes 216 (216.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 8 bytes 648 (648.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.56.7 netmask 255.255.255.0 broadcast 192.168.56.255
        inet6 fe80::20c:29ff:fe81:bcf9 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:81:bc:f9 txqueuelen 1000 (Ethernet)
        RX packets 256267 bytes 229752864 (219.1 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 177806 bytes 33406861 (31.8 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 10.255.40.0 netmask 255.255.0.0 destination 10.255.40.0
        inet6 fe80::c010:e977:6f9d:9614 prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 72 bytes 6048 (5.9 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 72 bytes 6048 (5.9 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

6) Check the kube-proxy ports

[root@node2 ~]# netstat -antp | grep proxy

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 1832/kube-proxy
tcp 0 0 192.168.56.7:45780 192.168.56.5:8080 ESTABLISHED 1832/kube-proxy
tcp 0 0 192.168.56.7:45778 192.168.56.5:8080 ESTABLISHED 1832/kube-proxy

III. Verifying the Cluster and Creating Docker Containers

1. Check the cluster status on the master

[root@master ~]# kubectl get nodes
NAME STATUS AGE
node1 Ready 21h
node2 Ready 21h

2. Create a Docker container from a YAML file on the control node

1) Create the pod YAML file

[root@master ~]# mkdir -p k8s_yaml/pod
[root@master ~]# cd k8s_yaml/pod/
[root@master pod]# vim nginx_pod.yaml

apiVersion: v1          # Kubernetes API version v1
kind: Pod               # resource type is Pod
metadata:               # metadata
  name: nginx           # the name is nginx
  labels:               # labels
    app: web            # label app: web
spec:                   # pod details
  containers:           # container list
  - name: nginx         # container name
    image: nginx:1.13   # container image
    ports:              # container ports
    - containerPort: 80

2) Create the nginx container and check its status

[root@master pod]# kubectl create -f nginx_pod.yaml
[root@master pod]# kubectl get pod
NAME READY STATUS RESTARTS AGE
nginx 1/1 Running 0 20h
[root@master pod]# kubectl get pod nginx
NAME READY STATUS RESTARTS AGE
nginx 1/1 Running 0 20h
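With many pods it is easier to filter for the ones that have not reached Running (for example, pods stuck in ContainerCreating). A minimal sketch, assuming the column layout shown above; `pods_not_running` is a hypothetical helper that reads `kubectl get pod` output on stdin:

```shell
#!/usr/bin/env bash
# Hypothetical helper: pods_not_running
# Reads `kubectl get pod` output on stdin and prints "NAME STATUS" for every
# pod whose STATUS column is not Running.
pods_not_running() {
  awk 'NR == 1 {
         # Find STATUS in the header so that --all-namespaces output,
         # which adds a leading NAMESPACE column, also works.
         for (i = 1; i <= NF; i++) if ($i == "STATUS") col = i
         next
       }
       col && $col != "Running" { print $(col - 2), $col }'
}

# Usage: kubectl get pod --all-namespaces | pods_not_running
```

NAME sits two columns before STATUS in both the default and the `--all-namespaces` layout, which is why the sketch prints `$(col - 2)`.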

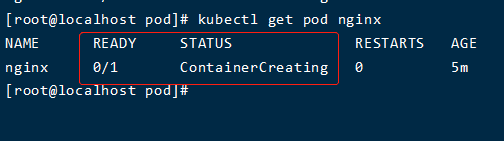

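Note: if the container stays in the ContainerCreating state,

inspect the pod details to find the cause:

[root@master ~]# kubectl describe pod nginx

The events show which node the scheduler (Scheduled) placed the pod on, and that pulling the image on that node failed.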

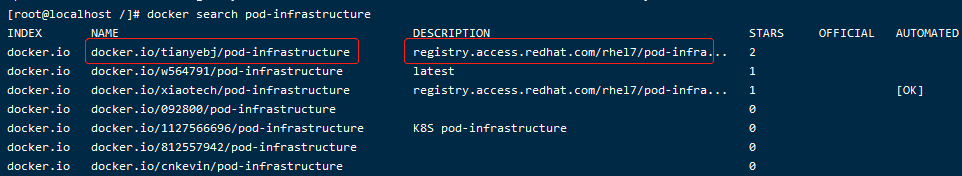

[root@node1 ~]# docker search pod-infrastructure

Change the pod infrastructure image that kubelet pulls on the node:

[root@node1 ~]# vim /etc/kubernetes/kubelet

###
# kubernetes kubelet (minion) config
# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"
# The port for the info server to serve on
# KUBELET_PORT="--port=10250"
# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=node1"
# location of the api-server
KUBELET_API_SERVER="--api-servers=http://192.168.56.5:8080"
# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=docker.io/tianyebj/pod-infrastructure:latest"
# Add your own!
KUBELET_ARGS=""

[root@node1 ~]# systemctl restart kubelet.service

Check the pod details on the master again:

[root@master ~]# kubectl describe pod nginx

Then check the created Docker containers on the corresponding node:

[root@node1 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
7e8eb4c4ba65 nginx:1.13 "nginx -g 'daemon ..." 20 hours ago Up 20 hours k8s_nginx.78d00b5_nginx_default_38506d64-bd3d-11eb-8148-000c297c25e0_61460ab2
b5b9071a536c docker.io/tianyebj/pod-infrastructure:latest "/pod" 20 hours ago Up 20 hours k8s_POD.b8a70607_nginx_default_38506d64-bd3d-11eb-8148-000c297c25e0_371c9ae3
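Each pod shows up as two Docker containers: the application container and the `POD` infrastructure container (the pod-infrastructure image), which holds the pod's network namespace. The names in this output appear to follow `k8s_<container>.<hash>_<pod>_<namespace>_<pod-uid>_<suffix>`; that layout is inferred from this Kubernetes 1.5-era output and may differ in other versions. A sketch that splits such a name; `describe_k8s_container` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: describe_k8s_container NAME
# Splits a kubelet-created Docker container name (layout inferred from the
# docker ps output above) into container, pod, namespace, and pod UID.
describe_k8s_container() {
  local prefix first pod ns uid suffix
  # Pod, namespace, and container names cannot contain "_", so "_" is a safe separator.
  IFS=_ read -r prefix first pod ns uid suffix <<< "$1"
  [ "$prefix" = "k8s" ] || return 1   # not created by kubelet
  printf 'container=%s pod=%s namespace=%s uid=%s\n' "${first%%.*}" "$pod" "$ns" "$uid"
}

# Usage on a node:
# docker ps --format '{{.Names}}' | while read -r n; do describe_k8s_container "$n"; done
```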

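IV. Deploying the Kubernetes Dashboard

Note: Kubernetes Dashboard is a project that adds a general-purpose, web-based monitoring and operations UI to Kubernetes.

Note: the following is done on the master.

1. Create the dashboard-deployment.yaml file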

[root@master ~]# vim /etc/kubernetes/dashboard-deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard-latest
  namespace: kube-system
spec:
  # Deployment spec: replica count and pod template
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        version: latest
        kubernetes.io/cluster-service: "true"
    spec:
      # container settings
      containers:
      - name: kubernetes-dashboard
        # image name (a Chinese-localized dashboard build)
        image: docker.io/bestwu/kubernetes-dashboard-amd64:v1.6.3
        imagePullPolicy: IfNotPresent
        resources:
          # CPU and memory limits for the pod
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        # apiserver address and port (the master)
        - --apiserver-host=http://192.168.56.5:8080
        livenessProbe:
          httpGet:
            path: /
            # container port
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

2. Create the dashboard-service.yaml file

[root@master ~]# vim /etc/kubernetes/dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system   # namespace name
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

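Note: a Service involves three kinds of ports:

port: the port the Service exposes on its cluster IP; it is the entry point for clients inside the cluster.

nodePort: a port opened on every node; it is one way Kubernetes lets clients outside the cluster reach the Service.

targetPort: the port of the container in the pod. Traffic arriving on port or nodePort is forwarded by kube-proxy to the targetPort of a backend pod and into the container.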
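The Service defined above uses only port and targetPort. To reach the dashboard from outside the cluster through a nodePort instead, a NodePort variant could look like the manifest printed below. A sketch, assuming the apiserver's default node port range of 30000-32767; `dashboard_nodeport_service` and the example port 30090 are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: dashboard_nodeport_service NODE_PORT
# Prints a NodePort variant of the dashboard Service. NODE_PORT must fall
# inside the apiserver's node port range (30000-32767 by default).
dashboard_nodeport_service() {
  local node_port="$1"
  if ! [[ "$node_port" =~ ^[0-9]+$ ]] || (( node_port < 30000 || node_port > 32767 )); then
    echo "nodePort $node_port is outside the default range 30000-32767" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80                # cluster IP port, for clients inside the cluster
    targetPort: 9090        # container port inside the dashboard pod
    nodePort: ${node_port}  # opened on every node, for clients outside the cluster
EOF
}

# Usage (instead of the hand-written file):
# dashboard_nodeport_service 30090 > /etc/kubernetes/dashboard-service.yaml
```

With such a Service, the dashboard would be reachable at http://<any node IP>:30090 without going through the apiserver proxy.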

3. Prepare the images on the compute nodes

Note: this must be done on both node1 and node2.

[root@node1 ~]# mkdir /root/k8s
[root@node1 ~]# cd /root/k8s
[root@node1 k8s]# ls # upload these two image archives to this directory first
docker.io-bestwu-kubernetes-dashboard-amd64-zh.tar pod-infrastructure.tar
[root@node1 k8s]# docker load -i pod-infrastructure.tar
[root@node1 k8s]# docker load -i docker.io-bestwu-kubernetes-dashboard-amd64-zh.tar

4. Create the dashboard Deployment and Service

[root@master ~]# kubectl create -f /etc/kubernetes/dashboard-deployment.yaml
[root@master ~]# kubectl create -f /etc/kubernetes/dashboard-service.yaml

5. Check the status and startup logs

[root@master ~]# kubectl get deployment --all-namespaces
NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
kube-system kubernetes-dashboard-latest 1 1 1 1 1h

[root@master ~]# kubectl get svc --all-namespaces
NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
default kubernetes 10.254.0.1 <none> 443/TCP 1d
kube-system kubernetes-dashboard 10.254.22.16 <none> 80/TCP 1h

[root@master ~]# kubectl get pod -o wide --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE
default nginx 1/1 Running 0 1h 10.255.40.2 node2
kube-system kubernetes-dashboard-latest-625244387-nxblb 1/1 Running 0 1h 10.255.7.2 node1

View the logs (use the dashboard pod name reported by the first command):

[root@master ~]# kubectl get pod -n kube-system -o wide

[root@master ~]# kubectl logs kubernetes-dashboard-latest-2915596453-m9wpz -n kube-system

Using HTTP port: 8443
Using apiserver-host location: http://192.168.56.5:8080
Skipping in-cluster config
Using random key for csrf signing
No request provided. Skipping authorization header
Successful initial request to the apiserver, version: v1.5.2
No request provided. Skipping authorization header
Creating in-cluster Heapster client
Could not enable metric client: Health check failed: the server could not find the requested resource (get services heapster). Continuing.

Note: output like the above means the container has started.

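6. Open the address served by the apiserver in Chrome: http://192.168.56.5:8080/ui

Note: if you get this error:

Error: 'dial tcp 10.255.7.2:9090: getsockopt: connection refused'

1. Check that the apiserver address is configured correctly (then restart the apiserver and the Kubernetes services), and that flannel is configured and running.

2. Set up the Kubernetes network: flannel must be installed on the master and on every node. Check that the configuration files on the master and the nodes are consistent.

3. Run iptables -L -n and check the FORWARD chain on the nodes. If its policy is DROP, change it to ACCEPT.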

On the master:

On node1:

On node2:

As shown above, the FORWARD policy on the nodes is DROP, so change it to ACCEPT (do the same on node2):

[root@node1 k8s]# iptables -P FORWARD ACCEPT

[root@node1 k8s]# iptables -L -n
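Docker 1.13 and later set the FORWARD policy to DROP when the daemon starts, which is likely why the nodes show DROP here; a policy set with `iptables -P` is also not persisted, so it may revert after Docker restarts or the node reboots. A small check is handy to re-run after such events. A minimal sketch; `forward_policy` is a hypothetical helper that reads `iptables -S FORWARD` output on stdin:

```shell
#!/usr/bin/env bash
# Hypothetical helper: forward_policy
# Reads `iptables -S FORWARD` output on stdin and prints the chain's
# default policy (for example ACCEPT or DROP).
forward_policy() {
  # The policy line looks like: -P FORWARD DROP
  awk '$1 == "-P" && $2 == "FORWARD" { print $3; exit }'
}

# Usage on each node (as root):
# if [ "$(iptables -S FORWARD | forward_policy)" = "DROP" ]; then
#   iptables -P FORWARD ACCEPT
# fi
```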

Access the container from the master:

[root@master kubernetes]# curl -i http://10.255.7.2:9090

HTTP/1.1 200 OK
Accept-Ranges: bytes
Cache-Control: no-store
Content-Length: 848
Content-Type: text/html; charset=utf-8
Last-Modified: Fri, 28 Jul 2017 12:38:51 GMT
Date: Wed, 26 May 2021 09:46:10 GMT

<!doctype html>
<html ng-app="kubernetesDashboard">
<head>
<meta charset="utf-8">
<title ng-controller="kdTitle as $ctrl" ng-bind="$ctrl.title()"></title>
<link rel="icon" type="image/png" href="assets/images/kubernetes-logo.png">
<meta name="viewport" content="width=device-width">
<link rel="stylesheet" href="static/vendor.9aa0b786.css">
<link rel="stylesheet" href="static/app.8ebf2901.css">
</head>
<body>
<!--[if lt IE 10]>
<p class="browsehappy">You are using an <strong>outdated</strong> browser.
Please <a href="http://browsehappy.com/">upgrade your browser</a> to improve your
experience.</p>
<![endif]-->
<kd-chrome layout="column" layout-fill>
</kd-chrome>
<script src="static/vendor.840e639c.js"></script>
<script src="api/appConfig.json"></script>
<script src="static/app.68d2caa2.js"></script>
</body>
</html>

Note: output like the above means the dashboard is reachable; you can now open it in a browser:

http://192.168.56.5:8080/ui
