Android Runtime is one of the core building blocks of the Android ecosystem. Most of you have probably heard the terms Dalvik, ART, JIT and AOT. If you've ever wondered what exactly those terms mean and how Android Runtime works to make our apps as fast as possible, you'll learn all that in this article 🚀.

So let's get into it!

What is Android Runtime?

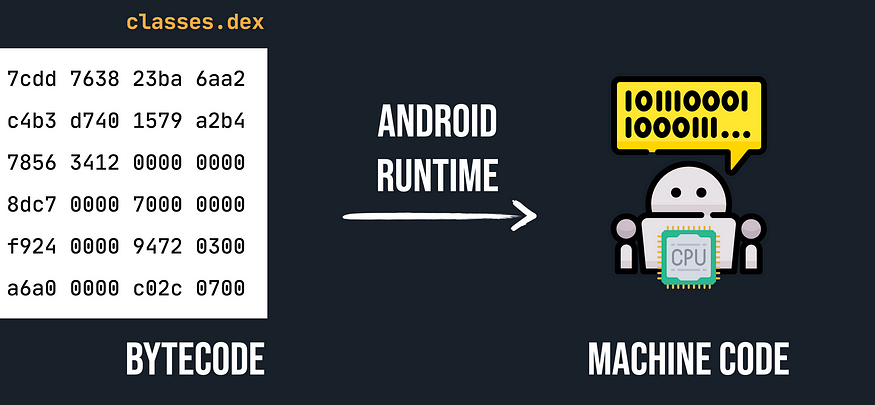

When we build our app and generate an APK, part of that APK are .dex files. Those files contain the code of our app, including all the libraries we used, compiled into bytecode: low-level code designed for a software interpreter.

When a user runs our app, the bytecode stored in the .dex files is translated by Android Runtime into machine code: a set of instructions that the CPU can understand and execute directly.

Android Runtime also manages memory and garbage collection, but to keep this article from getting too long I'll focus only on compilation.

Bytecode can be compiled into machine code using various strategies, and each of them has its tradeoffs. To understand how Android Runtime works today, we have to go back in time a few years and first learn about Dalvik.

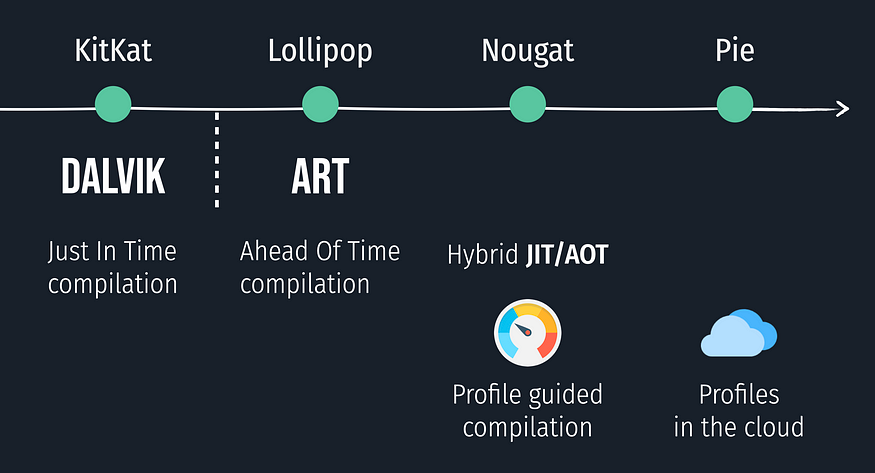

Dalvik (up to Android K)

In the early days of Android, smartphones were not as powerful as they are now. Most phones at the time had very little RAM, some of them as little as 200MB.

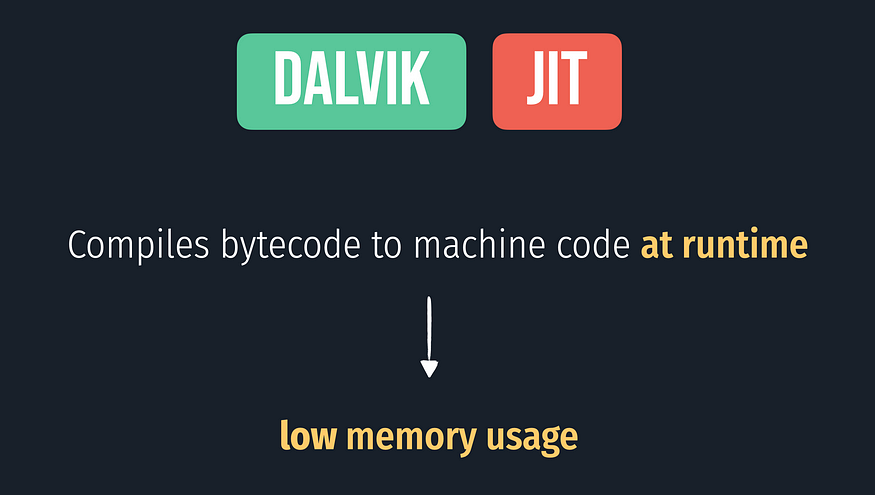

No wonder the first Android Runtime, known as Dalvik, was implemented to optimize exactly this one parameter: RAM usage.

So instead of compiling the whole app to machine code before running it, Dalvik used a strategy called Just in Time compilation, or JIT for short.

In this strategy, the compiler works a little bit like an interpreter: it compiles small chunks of code while the app is executing, at runtime.

And because Dalvik compiles only the code it needs, and does so at runtime, it saves a lot of RAM.

But this strategy has one serious drawback: because all of this happens at runtime, it has a negative impact on runtime performance.

Eventually, some optimizations were introduced to make Dalvik more performant. Some frequently used pieces of compiled code were cached so they did not have to be recompiled. But this caching was very limited because of how scarce RAM was in those early days.
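To make the compile-on-demand idea and the cache of compiled code a bit more concrete, here's a tiny sketch in Java. It is a toy model and not how Dalvik is actually implemented: the class `ToyJit` and its methods are made up for illustration, and "compiling" is simulated by simply moving a lambda into a map that plays the role of the code cache.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntUnaryOperator;

// Toy model of JIT compilation with a code cache.
// All names are hypothetical; this is not Dalvik's real design.
class ToyJit {
    // Stand-in for the bytecode in .dex files: method name -> behaviour.
    private final Map<String, IntUnaryOperator> bytecode = new HashMap<>();
    // Code cache: methods that were already "compiled" to machine code.
    private final Map<String, IntUnaryOperator> codeCache = new HashMap<>();
    // Counts how many times the (simulated) compiler had to run.
    int compilations = 0;

    void define(String name, IntUnaryOperator body) {
        bytecode.put(name, body);
    }

    int call(String name, int arg) {
        IntUnaryOperator compiled = codeCache.get(name);
        if (compiled == null) {
            // Cache miss: compile only this method, on demand, at runtime.
            compiled = compile(name);
            codeCache.put(name, compiled);
        }
        return compiled.applyAsInt(arg);
    }

    private IntUnaryOperator compile(String name) {
        IntUnaryOperator body = bytecode.get(name);
        if (body == null) {
            throw new IllegalArgumentException("Unknown method: " + name);
        }
        compilations++; // a real compiler would do expensive work here
        return body;
    }

    public static void main(String[] args) {
        ToyJit jit = new ToyJit();
        jit.define("square", x -> x * x);
        jit.define("neverCalled", x -> x + 1); // never compiled, costs nothing

        System.out.println(jit.call("square", 4)); // prints 16
        System.out.println(jit.call("square", 5)); // prints 25
        System.out.println(jit.compilations);      // prints 1
    }
}
```

The second call to `square` hits the cache, so the compiler runs only once, and `neverCalled` is never compiled at all. A bigger cache means fewer recompilations but more memory, which is exactly the tradeoff early devices could not afford.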

That worked fine for a couple of years, but meanwhile phones became more and more performant and got more and more RAM. And since apps were also getting bigger, the JIT performance impact was becoming more of a problem.

That’s why a new Android Runtime was introduced in Android L: ART.

ART (Android L)

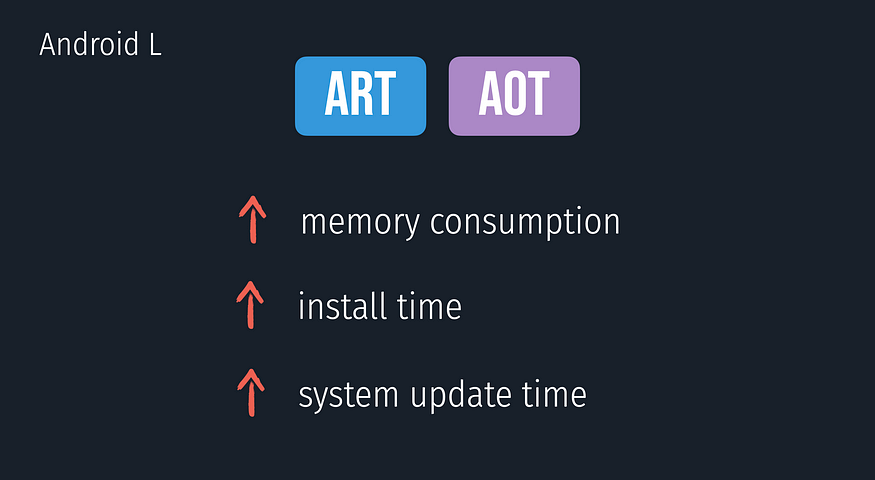

The way ART worked in Android L was a 180-degree change from what we knew from Dalvik. Instead of using Just in Time compilation as Dalvik did, ART used a strategy called Ahead of Time compilation, or AOT.

Instead of compiling code at runtime, ART compiled it before the app was ever run, at install time, so when the app was running the machine code was already prepared.

This approach hugely improved runtime performance, since running native machine code can be up to 20 times faster than Just in Time compilation.

Drawbacks:

ART in Android L used a lot more RAM than Dalvik.

Another drawback is that it took more time to install an app, since after downloading the APK the whole app needed to be transformed into machine code. It also took longer to perform a system update, because all apps needed to be reoptimized.

For frequently run parts of the app it obviously pays off to have them precompiled, but the reality is that most parts of an app are opened by users very rarely, so it almost never pays off to have the whole app precompiled.

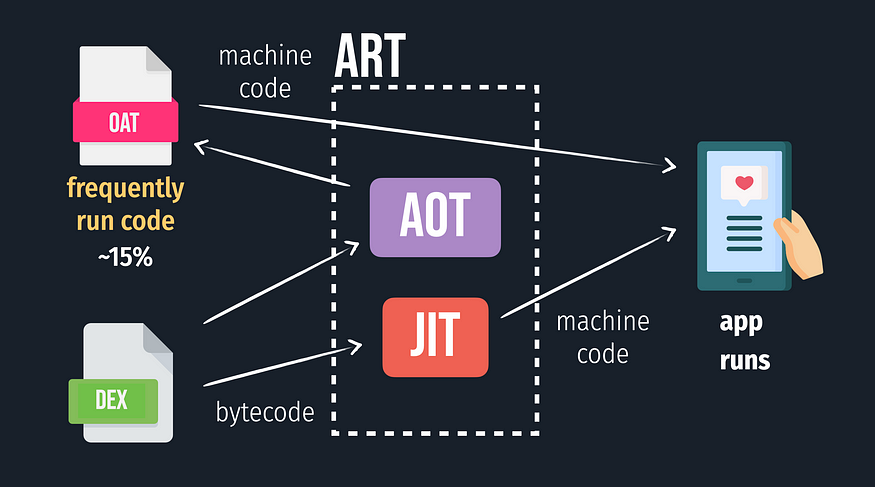

And that’s why in Android N, Just in Time compilation was brought back to Android Runtime, along with something called profile-guided compilation.

Profile-guided compilation (Android N)

Profile-guided compilation is a strategy that lets the performance of Android apps keep improving as they run. By default, the app is compiled using the Just in Time compilation strategy, but when ART detects that some methods are “hot”, which means that they run frequently, it can precompile and cache those methods to achieve the best performance.

This strategy provides the best possible performance for key parts of the app while reducing RAM usage, since it turns out that for most apps only 10% to 20% of the code is frequently used.
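Here's a small Java sketch of the hot-method idea. Again, it is only a toy model under my own assumptions: the class `ToyProfiler`, the `HOT_THRESHOLD` value and the method names are invented for illustration and do not reflect ART's real thresholds or data structures.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Toy model of profile-guided compilation.
// The threshold and all names are hypothetical, not ART internals.
class ToyProfiler {
    static final int HOT_THRESHOLD = 3; // made-up value

    private final Map<String, Integer> callCounts = new HashMap<>();
    // The "profile": methods that were observed to be hot.
    private final Set<String> profile = new LinkedHashSet<>();
    // Methods that have been precompiled based on the profile.
    private final Set<String> precompiled = new LinkedHashSet<>();

    // Called on every method invocation while the app runs.
    void recordCall(String method) {
        int count = callCounts.merge(method, 1, Integer::sum);
        if (count >= HOT_THRESHOLD) {
            profile.add(method); // remember it for later precompilation
        }
    }

    // Stands in for the background step that runs while the device is idle.
    void compileWhenIdle() {
        precompiled.addAll(profile);
    }

    boolean isPrecompiled(String method) {
        return precompiled.contains(method);
    }

    Set<String> precompiledMethods() {
        return precompiled;
    }

    public static void main(String[] args) {
        ToyProfiler profiler = new ToyProfiler();
        for (int i = 0; i < 5; i++) {
            profiler.recordCall("onScroll"); // runs often, becomes hot
        }
        profiler.recordCall("openSettings"); // runs once, stays cold

        profiler.compileWhenIdle();
        System.out.println(profiler.precompiledMethods()); // prints [onScroll]
    }
}
```

Only `onScroll` crosses the threshold, so only that method ends up precompiled. The rarely used `openSettings` keeps going through the Just in Time path and takes up no space as precompiled code.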

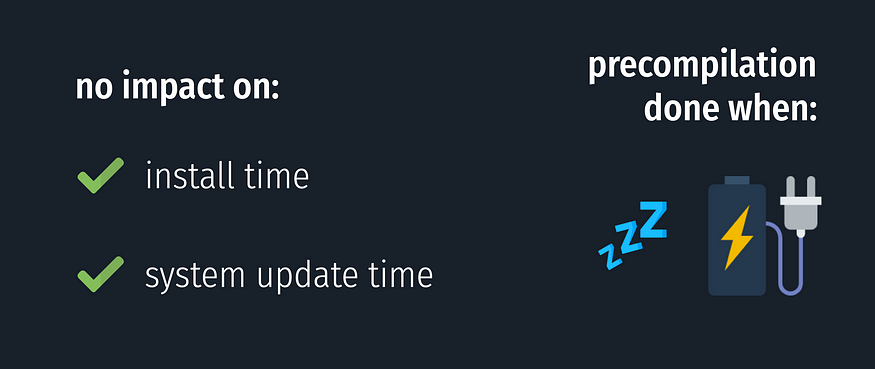

After this change, ART no longer slowed down app installs and system updates. Precompilation of key parts of an app happened only while the device was idle and charging, to minimize the impact on the device battery.

The only drawback of this approach is that in order to get profile data and precompile frequently used methods and classes, the user has to actually use the app. That means the first few runs of the app might be kinda slow, because in that case only Just in Time compilation is going to be used.

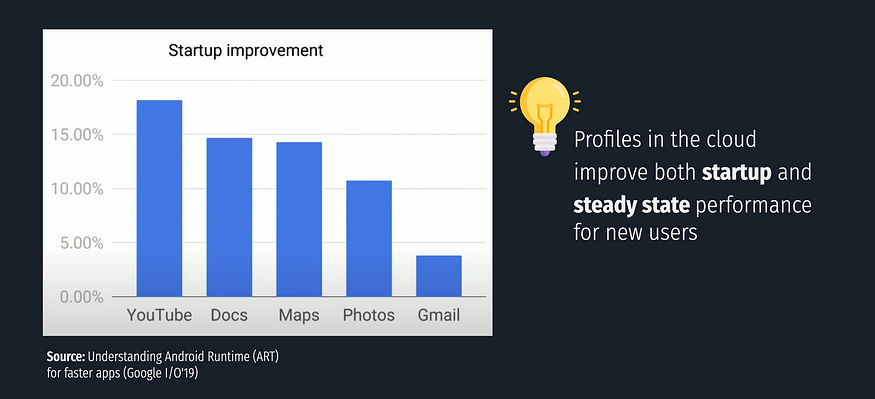

And that’s why, to improve this initial user experience, Google introduced profiles in the cloud in Android P.

Profiles in the cloud (Android P)

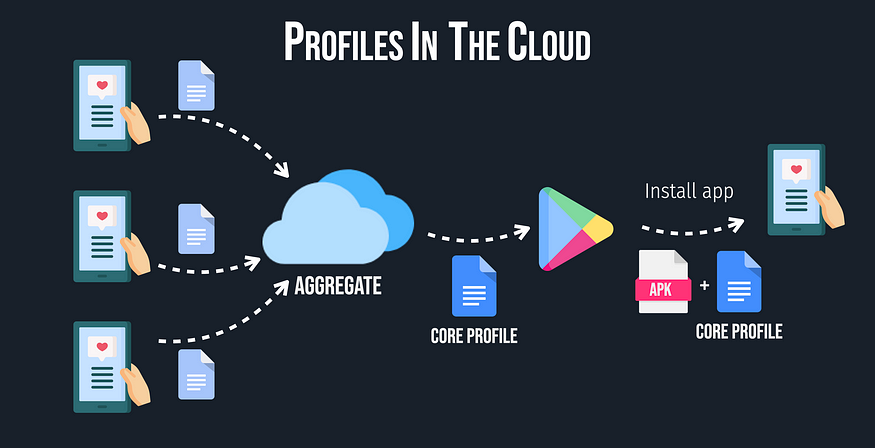

The main idea behind profiles in the cloud is that most people use an app in a pretty similar way. So in order to improve performance right after installation, we can collect profile data from people who have already used the app. This aggregated profile data is used to create a file called a common core profile for the application.

So when a new user installs the app, this file is downloaded alongside the application. ART uses it to precompile classes and methods that are frequently run by most users. That way, new users get better performance right after downloading an app.
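The aggregation step can be sketched in a few lines of Java. This is just my guess at the general shape of the idea, not Google's actual pipeline: the class `CoreProfile`, the `minShare` parameter and the rule "keep a method if at least that share of users ran it frequently" are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Toy model of building a common core profile from many user profiles.
// The selection rule and all names are hypothetical.
class CoreProfile {
    // Keeps the methods that are hot for at least minShare of all users.
    static Set<String> aggregate(List<Set<String>> userProfiles, double minShare) {
        // Count in how many user profiles each method appears.
        Map<String, Integer> counts = new TreeMap<>();
        for (Set<String> profile : userProfiles) {
            for (String method : profile) {
                counts.merge(method, 1, Integer::sum);
            }
        }
        // Sorted set so the result has a stable, readable order.
        Set<String> core = new TreeSet<>();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            if (entry.getValue() >= minShare * userProfiles.size()) {
                core.add(entry.getKey());
            }
        }
        return core;
    }

    public static void main(String[] args) {
        List<Set<String>> profiles = new ArrayList<>();
        profiles.add(new HashSet<>(Arrays.asList("onCreate", "render")));
        profiles.add(new HashSet<>(Arrays.asList("onCreate", "render", "openSettings")));
        profiles.add(new HashSet<>(Arrays.asList("onCreate")));

        // Keep methods that are hot for at least half of the users.
        System.out.println(aggregate(profiles, 0.5)); // prints [onCreate, render]
    }
}
```

Here `openSettings` is hot for only one of three users, so it is left out of the core profile, while `onCreate` and `render` make it in and would be precompiled for every new install.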

That does not mean the old strategy is no longer used. After the user runs an app, ART will gather user-specific profile data and recompile code that is frequently used by this particular user when the device is idle.

And the best part of all this is that we, the developers making the apps, don’t have to do anything to enable this feature. It all happens behind the scenes in Android Runtime.

Summary

Android Runtime is responsible for compiling the bytecode that ships in an APK into device-specific machine code that can be understood directly by the CPU.

The first Android Runtime implementation, Dalvik, used Just in Time compilation to optimize RAM usage, since RAM was very scarce at the time.

To improve performance, ART was introduced in Android L, and it used Ahead of Time compilation. That gave better runtime performance but caused longer installation times and higher RAM usage.

That’s why in Android N, JIT was brought back into ART, and profile-guided compilation made it possible to get better performance for the parts of the code that are frequently run.

To give users the best possible performance right after an app is installed, Google introduced profiles in the cloud in Android P. They complement the previous optimizations by adding the common core profile, a file that is downloaded with the APK and allows ART to precompile the parts of the code that were most frequently run by previous users of the app.

All these optimizations allow Android Runtime to make our apps as performant as possible 🚀.

