1. Upload the Sqoop package, extract it, and rename the directory

Download: https://mirror-hk.koddos.net/apache/sqoop/

On Windows, press Win+R and run cmd to open a command prompt.

C:\Users\Administrator>scp -r C:\Users\Administrator\Downloads\sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz root@10.10.10.128:/root

[root@master ~]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# mv ./sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop
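The extract-and-rename steps above can also be wrapped in a small helper that refuses to overwrite an existing installation. This is only a sketch: the function name `extract_and_rename` is hypothetical, and it assumes the archive contains a single top-level directory, as the Sqoop tarball used here does.

```shell
# Hypothetical helper (not part of Sqoop): extract a .tar.gz into dest_dir
# and rename its single top-level directory to new_name.
extract_and_rename() {
  local tarball="$1" dest_dir="$2" new_name="$3" top
  # Use the first path component of the first archive entry as the top-level directory.
  top="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)"
  [ -n "$top" ] || { echo "cannot read $tarball" >&2; return 1; }
  # Do not clobber an earlier installation.
  if [ -e "$dest_dir/$new_name" ]; then
    echo "$dest_dir/$new_name already exists" >&2
    return 1
  fi
  mkdir -p "$dest_dir"
  tar -xzf "$tarball" -C "$dest_dir" || return 1
  # Rename only when the archive's directory name differs from the target name.
  [ "$top" = "$new_name" ] || mv "$dest_dir/$top" "$dest_dir/$new_name"
}

# Usage on the master node, mirroring the commands above:
# extract_and_rename /root/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz /usr/local/src sqoop
```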

2. Configure Sqoop

Create the Sqoop configuration file sqoop-env.sh

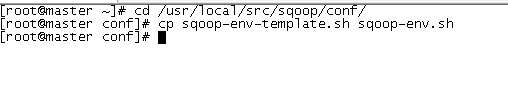

[root@master ~]# cd /usr/local/src/sqoop/conf/

[root@master conf]# cp sqoop-env-template.sh sqoop-env.sh

Edit sqoop-env.sh and add the following lines

[root@master conf]# vi sqoop-env.sh

export HADOOP_COMMON_HOME=/usr/local/src/hadoop

export HADOOP_MAPRED_HOME=/usr/local/src/hadoop

export HBASE_HOME=/usr/local/src/hbase

export HIVE_HOME=/usr/local/src/hive
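The four paths above only help if Hadoop, HBase, and Hive are really installed at those locations. As a rough check, the sketch below lists every exported `*_HOME` entry in an env file whose directory does not exist. The function name `missing_homes` is hypothetical, and the parsing assumes plain `export NAME=/path` lines without quotes.

```shell
# Hypothetical helper: print NAME=path for each exported *_HOME entry
# in the given env file whose path is not an existing directory.
missing_homes() {
  local env_file="$1" name path
  # Only uncommented lines of the form: export SOMETHING_HOME=/some/path
  grep -E '^export [A-Za-z_]+_HOME=' "$env_file" | while IFS='=' read -r name path; do
    name="${name#export }"
    [ -d "$path" ] || echo "$name=$path"
  done
}

# Usage:
# missing_homes /usr/local/src/sqoop/conf/sqoop-env.sh
```

No output means every referenced directory exists.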

Configure the environment variables

[root@master conf]# vi /etc/profile.d/sqoop.sh

export SQOOP_HOME=/usr/local/src/sqoop

export PATH=${SQOOP_HOME}/bin:$PATH
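If editing the file in vi is inconvenient (for example when setting up several nodes), the same two lines can be written non-interactively. A minimal sketch, with a hypothetical function name `write_sqoop_profile`:

```shell
# Hypothetical helper: write the two export lines shown above to a profile script.
write_sqoop_profile() {
  local target="$1" sqoop_home="$2"
  # Single quotes keep ${SQOOP_HOME} and $PATH literal so they expand when the file is sourced.
  printf 'export SQOOP_HOME=%s\nexport PATH=${SQOOP_HOME}/bin:$PATH\n' "$sqoop_home" > "$target"
}

# Usage (as root):
# write_sqoop_profile /etc/profile.d/sqoop.sh /usr/local/src/sqoop
```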

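Apply the environment variables, then copy the MySQL JDBC driver into Sqoop's lib directory so that Sqoop can connect to the database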

[root@master conf]# source /etc/profile.d/sqoop.sh

[root@master conf]# cp /root/software/mysql-connector-java-5.1.46.jar /usr/local/src/sqoop/lib/
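Sqoop picks up JDBC drivers from its lib directory, so it can be worth confirming that the connector jar landed there before testing the connection. A small sketch; the function name `has_mysql_driver` is hypothetical and the file name pattern is an assumption based on the jar used above.

```shell
# Hypothetical helper: succeed if a MySQL Connector/J jar is present in lib_dir.
has_mysql_driver() {
  local lib_dir="$1" jar
  for jar in "$lib_dir"/mysql-connector-java-*.jar; do
    # If nothing matches, the pattern stays literal and the -f test fails.
    [ -f "$jar" ] && return 0
  done
  return 1
}

# Usage:
# has_mysql_driver /usr/local/src/sqoop/lib || echo "MySQL JDBC driver is missing"
```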

3. Start Sqoop

Start all Hadoop processes

[root@master ~]# su - hadoop

Last login: Fri Apr 22 15:05:13 CST 2022 on pts/1

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out

10.10.10.130: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out

10.10.10.129: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out

10.10.10.130: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

10.10.10.129: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out

[hadoop@master ~]$ jps

2305 ResourceManager

2565 Jps

1926 NameNode

2136 SecondaryNameNode
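The jps listing above can be checked by script rather than by eye. The sketch below reports which expected daemons are absent from a captured jps output; the function name `missing_daemons` is hypothetical, and which daemons to expect on which node is an assumption you would adapt to your own cluster layout.

```shell
# Hypothetical helper: given jps output and a list of daemon names,
# print each name that does not appear as a process in that output.
missing_daemons() {
  local jps_output="$1" d
  shift
  for d in "$@"; do
    # jps prints "<pid> <name>"; compare the second field exactly.
    printf '%s\n' "$jps_output" \
      | awk -v n="$d" '$2 == n { found = 1 } END { exit !found }' \
      || echo "$d"
  done
}

# Usage on the master node:
# missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager
```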

Test the Sqoop connection to MySQL

[hadoop@master ~]$ sqoop list-databases --connect jdbc:mysql://127.0.0.1:3306 --username root -P

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

22/04/29 15:16:26 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Enter password:

22/04/29 15:16:34 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Fri Apr 29 15:16:34 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

information_schema

mysql

performance_schema

sys
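The SSL warning in the output above names its own remedies: either disable SSL explicitly with useSSL=false, or enable it and provide a truststore. For a lab setup like this one, a sketch of the first option is to append the property to the JDBC URL. The helper name `mysql_jdbc_url` is hypothetical.

```shell
# Hypothetical helper: build a MySQL JDBC URL with SSL explicitly disabled.
# The database name is optional.
mysql_jdbc_url() {
  local host="$1" port="$2" db="${3:-}"
  printf 'jdbc:mysql://%s:%s/%s?useSSL=false\n' "$host" "$port" "$db"
}

# Usage (quote the URL so the shell does not treat ? as a glob character):
# sqoop list-databases --connect "$(mysql_jdbc_url 127.0.0.1 3306)" --username root -P
```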

Connect to Hive (copy the hive-common jar into Sqoop's lib directory)

[hadoop@master ~]$ cp /usr/local/src/hive/lib/hive-common-2.0.0.jar /usr/local/src/sqoop/lib/

4. Sqoop template commands

Create the MySQL database and table

[hadoop@master ~]$ mysql -uroot -p'Password123!'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 7

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database sample;

Query OK, 1 row affected (0.00 sec)

mysql> use sample

Database changed

mysql> create table student(number char(9) primary key,name varchar(10));

Query OK, 0 rows affected (0.01 sec)

mysql> insert student values('01','zhangsan'),('02','lisi'),('03','wangwu');

Query OK, 3 rows affected (0.00 sec)

Records: 3 Duplicates: 0 Warnings: 0

mysql> select * from student;

+--------+----------+
| number | name     |
+--------+----------+
| 01     | zhangsan |
| 02     | lisi     |
| 03     | wangwu   |
+--------+----------+

3 rows in set (0.00 sec)

mysql> quit

Bye

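Check the sample database and student table in Hive (this output was evidently captured after the import step further down; the table itself is created in the next step)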

[hadoop@master ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/src/hive/lib/hive-common-2.0.0.jar!/hive-log4j2.properties

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> use sample;

OK

Time taken: 0.477 seconds

hive> show tables;

OK

student

Time taken: 0.152 seconds, Fetched: 1 row(s)

hive> select * from student;

OK

01|zhangsan NULL

02|lisi NULL

03|wangwu NULL

Time taken: 0.773 seconds, Fetched: 3 row(s)

hive> quit;

Create the sample database and the student table in Hive

[hadoop@master ~]$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/src/hive/lib/hive-common-2.0.0.jar!/hive-log4j2.properties

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> create database sample;

OK

Time taken: 0.694 seconds

hive> use sample;

OK

Time taken: 0.013 seconds

hive> create table student(number STRING,name STRING);

OK

Time taken: 0.23 seconds

hive> exit;
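In the Hive query output shown earlier, each row has the whole record (for example 01|zhangsan) in the first column and NULL in the second. A likely cause is that the table is created with Hive's default field delimiter while the import writes '|'-delimited files. One way to avoid this, sketched below under that assumption, is to declare the delimiter when creating the table. The function name `write_student_ddl` and the .hql path are hypothetical.

```shell
# Hypothetical helper: write HiveQL that creates sample.student
# with '|' as the field delimiter, matching --fields-terminated-by '|'.
write_student_ddl() {
  local target="$1"
  cat > "$target" <<'EOF'
CREATE DATABASE IF NOT EXISTS sample;
CREATE TABLE IF NOT EXISTS sample.student (number STRING, name STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY '|';
EOF
}

# Usage (assumes the hive CLI is on PATH):
# write_student_ddl /tmp/student.hql && hive -f /tmp/student.hql
```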

Export data from MySQL and import it into Hive

[hadoop@master ~]$ sqoop import --connect jdbc:mysql://master:3306/sample --username root --password Password123! --table student --fields-terminated-by '|' --delete-target-dir --num-mappers 1 --hive-import --hive-database sample --hive-table student
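Passing --password on the command line leaves the password in the shell history and process list, and Sqoop itself warns about it. Besides -P (interactive prompt), Sqoop 1.4.x also accepts --password-file. The sketch below writes such a file without a trailing newline, since Sqoop uses the entire file content as the password, and restricts it to the owner. The function name and file path are hypothetical, and whether a local path needs a file:// prefix depends on your Hadoop configuration.

```shell
# Hypothetical helper: store a password in a file readable only by its owner.
write_password_file() {
  local target="$1" password="$2"
  # umask 077 makes the file owner-only from the moment it is created;
  # printf '%s' avoids the trailing newline that echo would add.
  ( umask 077 && printf '%s' "$password" > "$target" ) || return 1
  chmod 400 "$target"
}

# Usage (path is an assumption; adjust to your environment):
# write_password_file /home/hadoop/.mysql.pwd 'Password123!'
# sqoop import --connect jdbc:mysql://master:3306/sample --username root \
#   --password-file file:///home/hadoop/.mysql.pwd --table student ...
```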

Export data from Hive and import it into MySQL (the MySQL table is emptied first)

[hadoop@master ~]$ mysql -uroot -pPassword123! -e 'delete from sample.student;'

[hadoop@master ~]$ sqoop export --connect "jdbc:mysql://master:3306/sample?useUnicode=true&characterEncoding=utf-8" --username root --password Password123! --table student --input-fields-terminated-by '|' --export-dir /user/hive/warehouse/sample.db/student/*

5. Using the Sqoop component

List all MySQL databases

[hadoop@master ~]$ sqoop list-databases --connect jdbc:mysql://master:3306 --username root --password Password123!

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

22/04/29 16:44:07 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

22/04/29 16:44:07 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

22/04/29 16:44:07 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Fri Apr 29 16:44:07 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

information_schema

hive

mysql

performance_schema

sample

sys

Connect to MySQL and list the tables in the sample database

[hadoop@master ~]$ sqoop list-tables --connect jdbc:mysql://master:3306/sample --username root --password Password123!

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

22/04/29 16:44:44 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

22/04/29 16:44:44 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

22/04/29 16:44:44 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Fri Apr 29 16:44:44 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

student
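The same connection arguments recur in every command in this section. Sqoop can read them from an options file instead (--options-file), with one option or value per line and # comments on their own lines. A minimal sketch that generates such a file; the function name and file path are hypothetical.

```shell
# Hypothetical helper: write shared connection options in Sqoop's
# options-file format (one option or value per line).
write_sqoop_options() {
  local target="$1" jdbc_url="$2" user="$3"
  printf '%s\n' \
    '# Shared connection options' \
    '--connect' "$jdbc_url" \
    '--username' "$user" > "$target"
}

# Usage (-P prompts for the password instead of storing it):
# write_sqoop_options "$HOME/sqoop-conn.txt" jdbc:mysql://master:3306/sample root
# sqoop list-tables --options-file "$HOME/sqoop-conn.txt" -P
```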

Copy the table structure from the relational database into Hive

[hadoop@master ~]$ sqoop create-hive-table --connect jdbc:mysql://localhost:3306/sample --table student --username root --password Password123! --hive-table test

Import data from the relational database into Hive

[hadoop@master ~]$ sqoop import --connect jdbc:mysql://master:3306/sample --username root --password Password123! --table student --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table test

Export the Hive table data into MySQL

[hadoop@master ~]$ sqoop export --connect jdbc:mysql://master:3306/sample --username root --password Password123! --table student --input-fields-terminated-by '\001' --export-dir /user/hive/warehouse/test

Import table data from the database into files on HDFS

[hadoop@master ~]$ sqoop import --connect jdbc:mysql://master:3306/sample --username root --password Password123! --table student --num-mappers 1 --target-dir /user/test

Incrementally import table data from the database into HDFS

[hadoop@master ~]$ mysql -uroot -pPassword123!

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 103

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use sample;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> insert into student values('04','sss');

Query OK, 1 row affected (0.01 sec)

mysql> insert into student values('05','ss2');

Query OK, 1 row affected (0.00 sec)

mysql> insert into student values('06','ss3');

Query OK, 1 row affected (0.01 sec)

mysql> alter table student modify column number int;

Query OK, 3 rows affected (0.04 sec)

Records: 3 Duplicates: 0 Warnings: 0

mysql> exit;

Bye

[hadoop@master ~]$ sqoop import --connect jdbc:mysql://master:3306/sample --username root --password Password123! --table student --num-mappers 1 --target-dir /user/test --check-column number --incremental append --last-value 0

[hadoop@master ~]$ hdfs dfs -cat /user/test/part-m-00001
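In append mode Sqoop imports the rows whose check column is greater than --last-value. With --last-value 0 all six rows qualify, so the three rows already written by the earlier full import would presumably appear again in the new part file. Sqoop prints the value to use for the next run at the end of an incremental import; the sketch below derives the same number from the imported files instead. The function name `next_last_value` is hypothetical, and it assumes Sqoop's default comma field delimiter with the check column first.

```shell
# Hypothetical helper: read comma-delimited records on stdin and print
# the largest integer found in the first column.
next_last_value() {
  awk -F',' '(NR == 1) || ($1 + 0 > max) { max = $1 + 0 } END { if (NR) print max }'
}

# Usage:
# hdfs dfs -cat /user/test/part-m-* | next_last_value
# Then pass the printed number as --last-value on the next incremental run.
```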
