Starting one of the DataNodes failed:

service hadoop-hdfs-datanode start

starting datanode, logging to /var/log/hadoop-hdfs/xxxx.out

Failed to start Hadoop datanode. Return value: 1 [FAILED]

vim /var/log/hadoop-hdfs/xxxx.out

ulimit -a for user hdfs

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 58780

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 65536

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 10240

cpu time (seconds, -t) unlimited

max user processes (-u) 131072

virtual memory (kbytes, -v) unlimited

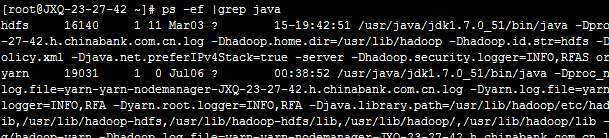

file locks (-x) unlimitedps -ef |grep java

kill -9 16140
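The PID 16140 above was read off the ps listing by hand. As a rough sketch, the lookup and the kill could be scripted as follows. It assumes the leftover process is a DataNode JVM whose command line contains the main class org.apache.hadoop.hdfs.server.datanode.DataNode; the helper names find_datanode_pids and stop_pid are hypothetical. Unlike the one-liner above, it sends a plain kill (SIGTERM) first and falls back to kill -9 only if the process has not exited after a timeout.

```shell
#!/bin/sh
# Hypothetical helper: read `ps -ef` output on stdin and print the PID
# (second column) of every line that mentions the DataNode main class.
find_datanode_pids() {
    awk '/org\.apache\.hadoop\.hdfs\.server\.datanode\.DataNode/ { print $2 }'
}

# Hypothetical helper: stop one process, politely first.
#   $1 = PID, $2 = seconds to wait before SIGKILL (default 10)
stop_pid() {
    pid=$1
    timeout=${2:-10}
    # SIGTERM; if the signal cannot be delivered (for example the
    # process is already gone) there is nothing more to do here.
    kill "$pid" 2>/dev/null || return 0
    while [ "$timeout" -gt 0 ]; do
        # kill -0 only tests whether the process can still be signalled.
        kill -0 "$pid" 2>/dev/null || return 0
        sleep 1
        timeout=$((timeout - 1))
    done
    # Still alive after the timeout: force it, as in the manual step.
    kill -9 "$pid" 2>/dev/null
    return 0
}
```

With both functions loaded, something like `ps -ef | find_datanode_pids | while read -r pid; do stop_pid "$pid"; done` would replace the manual ps and kill steps. Run it as root or as the hdfs user so the signals are permitted.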

Restart the DataNode:

service hadoop-hdfs-datanode start

OK!

su hdfs

hdfs dfsadmin -report

The report shows that the node previously listed as dead has recovered.
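Reading the report by eye works for a small cluster. A minimal sketch for checking the dead-node count in a script is shown below. It assumes the report contains a heading of the form `Dead datanodes (N):`, which newer Hadoop releases print; older releases use a different summary line, so the pattern may need adjusting. The function name count_dead_datanodes is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print the number from a "Dead datanodes (N):" heading, or 0 if the
# report has no such heading.
count_dead_datanodes() {
    awk '/^Dead datanodes \([0-9]+\):/ {
             gsub(/[^0-9]/, "", $3)   # "(1):" -> "1"
             n = $3
         }
         END { print n + 0 }'
}
```

For example, `hdfs dfsadmin -report | count_dead_datanodes` run as the hdfs user should print 0 once every node is back.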
